Unboxing the Black Box of Attention Mechanisms in Remote Sensing Big Data Using XAI

Abstract

1. Introduction

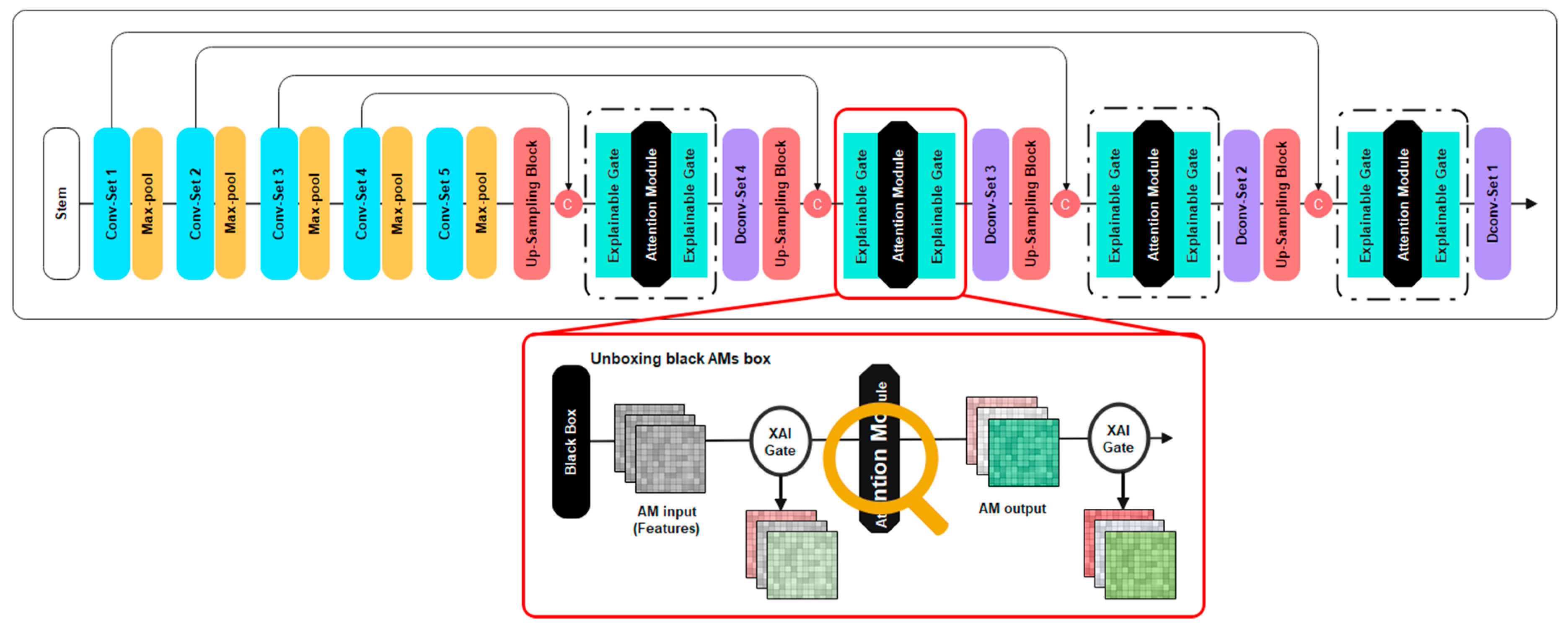

- (1) We propose an encoder-decoder CNN framework for building segmentation;

- (2) We evaluate the effectiveness of the AMs in each RS related task (based on the aforementioned metrics and XAI layer contribution methods);

- (3) We provide interpretations of attention blocks in different layers of the framework;

- (4) We attempt to unbox the black box of the AMs on the model decision by using XAI layer contribution methods.

2. Related Studies

2.1. Attention Mechanisms in RS Domains

2.1.1. Image Classification

2.1.2. Image Segmentation

2.1.3. Object Detection

2.1.4. Change Detection

2.1.5. Building Extraction

2.2. XAI Applications in RS Domains

3. Methodology

3.1. Attention Methods

3.1.1. Squeeze-and-Excitation Networks (SE)

3.1.2. Convolutional Block Attention Module (CBAM)

3.1.3. Efficient Channel Attention (ECA)

3.1.4. Shuffle Attention (SA)

3.1.5. Triplet Attention

3.2. Explainable Artificial Intelligence (XAI)

4. Experiments

4.1. Dataset

4.2. Implementation Details

4.3. Results Analysis

- (i) Precision: the ratio of correctly predicted buildings to the total number of samples predicted as buildings.

- (ii) Recall: the proportion of correctly predicted buildings among the total buildings.

- (iii) F1-score: computed as the harmonic mean between precision and recall.

- (iv) IoU: measures the overlap rate between the detected building pixels and labeled building pixels (ground truth).

- (v) Overall Accuracy (OA): the ratio of the number of correctly labeled pixels to the total number of pixels in the whole image.
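The five definitions above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the evaluation code used in the paper: the function name `segmentation_metrics`, the flat 0/1 label encoding (1 = building), and the choice to return 0.0 for an undefined ratio are all hypothetical.

```python
def segmentation_metrics(pred, truth):
    """Pixel-wise metrics for binary masks given as flat 0/1 sequences (1 = building)."""
    if len(pred) != len(truth) or not truth:
        raise ValueError("pred and truth must be non-empty and of equal length")

    # Confusion counts over all pixels.
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)

    # Assumption: an undefined ratio (zero denominator) is reported as 0.0.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    pr_sum = precision + recall
    f1 = 2 * precision * recall / pr_sum if pr_sum else 0.0
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    oa = (tp + tn) / len(truth)

    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou, "oa": oa}


# Toy example: 8 pixels, 3 true positives, 1 false positive, 1 false negative.
pred = [1, 1, 1, 0, 0, 0, 1, 0]
truth = [1, 1, 0, 0, 0, 1, 1, 0]
print(segmentation_metrics(pred, truth))
```

IoU ignores true negatives while OA counts them, so on imagery dominated by background pixels OA can stay high even when IoU is modest.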

4.4. Attention Analysis by XAI

4.5. Computational Analysis

4.6. Transferability of the Proposed Framework

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Backbone | Encoder Layer | Feature Size | Kernel Size | Decoder Layer | Feature Size | Kernel Size |
|---|---|---|---|---|---|---|
| - | Input Image | 512 × 512 × 3 | - | Up-Sampling Block | - | 3 × 3 |
| SegNet | Conv-Set 1 | 512 × 512 × 64 | 3 × 3 | Deconv-Set 4 | 64 × 64 × 512 | |
| SegNet | Conv-Set 2 | 256 × 256 × 128 | 3 × 3 | Attention Block 4 | 64 × 64 × 512 | 3 × 3 |
| SegNet | Conv-Set 3 | 256 × 256 × 128 | 3 × 3 | Up-Sampling Block | - | 3 × 3 |
| | | | | Deconv-Set 3 | 128 × 128 × 256 | |
| SegNet | Conv-Set 4 | 64 × 64 × 512 | 3 × 3 | Attention Block 3 | 128 × 128 × 256 | 3 × 3 |
| SegNet | Conv-Set 5 | 32 × 32 × 1024 | 3 × 3 | Up-Sampling Block | - | 3 × 3 |
| | | | | Deconv-Set 2 | 256 × 256 × 128 | |
| Unet | Conv-Set 1 | 512 × 512 × 64 | 3 × 3 | Attention Block 2 | 256 × 256 × 128 | 3 × 3 |
| Unet | Conv-Set 2 | 256 × 256 × 128 | 3 × 3 | Up-Sampling Block | - | 3 × 3 |
| | | | | Deconv-Set 1 | 512 × 512 × 64 | |
| Unet | Conv-Set 3 | 256 × 256 × 128 | 3 × 3 | Attention Block 1 | 512 × 512 × 64 | 3 × 3 |
| Unet | Conv-Set 4 | 64 × 64 × 512 | 3 × 3 | Final Conv | 512 × 512 × 2 | 1 × 1 |
| Unet | Conv-Set 5 | 32 × 32 × 512 | 3 × 3 | | | |

| Backbone | Metric | Vanilla | SE | CBAM | ECA | SA | Triplet |
|---|---|---|---|---|---|---|---|
| SegNet | Precision | 87.03 | 89 (1.97) | 89.31 (2.28) | 88.04 (1.01) | 87.77 (0.74) | 88.02 (0.99) |
| SegNet | Recall | 75.32 | 74.98 (−0.34) | 75.59 (0.27) | 77.83 (2.51) | 75.46 (0.14) | 75.4 (0.08) |
| SegNet | OA | 97.87 | 97.96 (0.09) | 98.01 (0.14) | 98.06 (0.19) | 97.92 (0.05) | 97.93 (0.06) |
| SegNet | IoU | 67.72 | 68.63 (0.91) | 69.32 (1.6) | 70.39 (2.67) | 68.29 (0.57) | 68.38 (0.66) |
| SegNet | F1-score | 80.75 | 81.39 (0.64) | 81.88 (1.13) | 82.62 (1.87) | 81.15 (0.4) | 81.22 (0.47) |
| Unet | Precision | 87.07 | 88.76 (1.69) | 88.9 (1.83) | 87.53 (0.46) | 89.67 (2.6) | 88.42 (1.35) |
| Unet | Recall | 81.06 | 85.05 (3.99) | 84.5 (3.44) | 85.69 (4.63) | 82.55 (1.49) | 85.46 (4.4) |
| Unet | OA | 98.16 | 98.47 (0.31) | 98.45 (0.29) | 98.42 (0.26) | 98.4 (0.24) | 98.47 (0.31) |
| Unet | IoU | 72.36 | 76.79 (4.43) | 76.44 (4.08) | 76.37 (4.01) | 75.38 (3.02) | 76.86 (4.5) |
| Unet | F1-score | 83.96 | 86.87 (2.91) | 86.64 (2.68) | 86.6 (2.64) | 85.96 (2) | 86.91 (2.95) |
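As a reading aid for the results table, the short check below recomputes F1 from the reported precision and recall, and IoU from that F1, for the two Vanilla columns. It rests on one assumption that the source does not state: that all four metrics were derived from the same pooled true-positive, false-positive and false-negative counts, in which case IoU = F1 / (2 − F1). The function names are hypothetical.

```python
def f1_from_precision_recall(precision, recall):
    """Harmonic mean of precision and recall (inputs and output in percent)."""
    return 2 * precision * recall / (precision + recall)


def iou_from_f1(f1_percent):
    """IoU implied by F1 when both come from the same TP/FP/FN counts (percent)."""
    f1 = f1_percent / 100.0
    return 100.0 * f1 / (2.0 - f1)


# (precision, recall, reported F1, reported IoU) from the Vanilla column of the table.
vanilla_rows = {
    "SegNet": (87.03, 75.32, 80.75, 67.72),
    "Unet": (87.07, 81.06, 83.96, 72.36),
}

for backbone, (p, r, f1_reported, iou_reported) in vanilla_rows.items():
    f1 = f1_from_precision_recall(p, r)
    iou = iou_from_f1(f1)
    print(f"{backbone}: F1 {f1:.2f} (reported {f1_reported}), "
          f"IoU {iou:.2f} (reported {iou_reported})")
```

Both rows agree with the reported values to within rounding of the two-decimal inputs, which suggests the table is internally consistent under that assumption.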

| Model | Vanilla | SE | CBAM | Triplet | SA | ECA |
|---|---|---|---|---|---|---|
| Number of blocks | - | 4 | 4 | 4 | 4 | 4 |
| Parameters (k) | - | 87 | 44.98 | 1.2 | 0.528 | 0.012 |
| Total (Million) | 20.74 | 20.83 | 20.78 | 20.74 | 20.74 | 20.74 |
| Inference time (ms/img) | 23.60 | 23.81 | 40.32 | 25.94 | 24.40 | 23.79 |
| FLOPs (GMac) | 110.44 | 110.44 | 110.44 | 110.46 | 110.45 | 110.44 |
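The parameter row can be sanity-checked for the two simplest modules with a back-of-the-envelope count. The sketch below is not taken from the paper's implementation. It assumes the four attention blocks see 512, 256, 128 and 64 channels (the decoder widths in the architecture table), that all layers are bias-free, that ECA uses a fixed 1D kernel of size 3, and that SE uses a reduction ratio of 8. The last two values are guesses chosen because they reproduce the reported totals; the source does not state them.

```python
# Channel widths of the four decoder attention blocks (from the architecture table).
ATTENTION_CHANNELS = [512, 256, 128, 64]


def eca_params(kernel_size=3):
    """ECA: one bias-free 1D convolution over the pooled channel descriptor."""
    return kernel_size


def se_params(channels, reduction=8):
    """SE: two bias-free fully connected layers, C -> C/r -> C."""
    hidden = channels // reduction
    return channels * hidden + hidden * channels


eca_total = sum(eca_params() for _ in ATTENTION_CHANNELS)
se_total = sum(se_params(c) for c in ATTENTION_CHANNELS)

print(f"ECA: {eca_total} parameters ({eca_total / 1000:.3f} k)")
print(f"SE:  {se_total} parameters ({se_total / 1000:.2f} k)")
```

Under these assumptions the counts come to 12 and 87,040 parameters, matching the 0.012 k and 87 k entries. ECA's cost does not depend on channel width, whereas SE's grows quadratically with it, which accounts for the gap of several orders of magnitude between the two.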

| Backbone | Model | IoU | Recall | Accuracy | Precision | F1-Score |
|---|---|---|---|---|---|---|
| SegNet | Vanilla | 60.07 | 67.53 | 99.43 | 84.47 | 75.06 |
| SegNet | SE | 65.26 (5.19) | 74.65 (7.12) | 99.50 (0.07) | 83.85 (−0.62) | 78.98 (3.92) |
| SegNet | CBAM | 67.28 (7.21) | 75.18 (7.65) | 99.54 (0.11) | 86.49 (2.02) | 80.44 (5.38) |
| SegNet | ECA | 61.97 (1.9) | 67.23 (−0.3) | 99.48 (0.05) | 88.79 (4.32) | 76.52 (1.46) |
| Unet | Vanilla | 63.15 | 72.89 | 99.46 | 82.53 | 77.41 |
| Unet | SE | 67.15 (4) | 75.32 (2.43) | 99.53 (0.07) | 86.09 (3.56) | 80.35 (2.94) |
| Unet | CBAM | 68.07 (4.92) | 79.48 (6.59) | 99.53 (0.07) | 82.59 (0.06) | 81.00 (3.59) |
| Unet | Triplet | 68.73 (5.58) | 79.31 (6.42) | 99.54 (0.08) | 83.75 (1.22) | 81.47 (4.06) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hasanpour Zaryabi, E.; Moradi, L.; Kalantar, B.; Ueda, N.; Halin, A.A. Unboxing the Black Box of Attention Mechanisms in Remote Sensing Big Data Using XAI. Remote Sens. 2022, 14, 6254. https://doi.org/10.3390/rs14246254
