Remote Sensing Monitoring of Grasslands Based on Adaptive Feature Fusion with Multi-Source Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Dataset

2.2.1. Data Introduction and Pre-Processing

2.2.2. Data Labeling

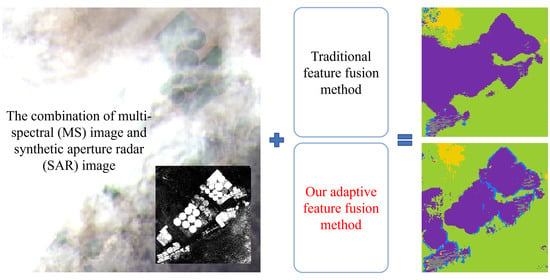

2.3. Our Method

2.3.1. Overview

2.3.2. Extraction of Multi-Source and Multi-Size Patches

2.3.3. CNN-Based Feature Extraction Network

2.3.4. Adaptive Feature Fusion and Classification

2.4. Evaluation Metrics

3. Results

3.1. Model Training

3.1.1. Training Process

3.1.2. Training Strategies

3.2. Test Results

3.2.1. Comparison between Different Data Sources

3.2.2. Comparison between Different Methods

4. Discussion

4.1. Dataset and Methods Selection

4.2. Problem Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Data Source | Accuracy | Average F1-Score |
|---|---|---|
| MS only | 85.51% | 0.80 |
| SAR only | 70.10% | 0.63 |
| MS + SAR | 93.12% | 0.91 |
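Both tables report accuracy and an average F1-score. The following is a minimal sketch of how these two numbers are commonly derived from a confusion matrix; it is not the authors' evaluation code.

It makes three assumptions:
- "Accuracy" means overall accuracy.
- "Average F1-score" means the unweighted (macro) mean of the per-class F1-scores.
- The confusion matrix `cm` (rows: reference class, columns: predicted class) and its counts are hypothetical.

```python
from typing import List, Sequence

# cm[i][j] = number of validation samples whose reference class is i
# and whose predicted class is j (rows: reference, columns: prediction).
ConfusionMatrix = Sequence[Sequence[int]]


def overall_accuracy(cm: ConfusionMatrix) -> float:
    """Share of all samples on the diagonal, i.e. correctly classified."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(len(cm)))
    return correct / total


def per_class_f1(cm: ConfusionMatrix) -> List[float]:
    """F1 = 2PR / (P + R), written as 2TP / (2TP + FP + FN) for each class."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, reference differs
        fn = sum(cm[k]) - tp                        # reference k, predicted differs
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return scores


def average_f1(cm: ConfusionMatrix) -> float:
    """Unweighted (macro) mean of per-class F1-scores (assumed definition)."""
    scores = per_class_f1(cm)
    return sum(scores) / len(scores)


# Hypothetical 3-class confusion matrix with made-up counts.
cm = [
    [50, 5, 0],
    [10, 30, 5],
    [0, 5, 45],
]
print(f"accuracy = {overall_accuracy(cm):.2%}")  # accuracy = 83.33%
print(f"average F1 = {average_f1(cm):.2f}")      # average F1 = 0.83
```

Macro averaging gives every class the same weight regardless of its sample count. This is one reason an average F1-score can sit below overall accuracy when a minority class is harder to separate.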

| Method | Single-Size Patch: Accuracy | Single-Size Patch: Average F1-Score | Multi-Size Patch: Accuracy | Multi-Size Patch: Average F1-Score |
|---|---|---|---|---|
| Concatenation | 92.10% | 0.90 | 92.44% | 0.90 |
| SE-like model | 91.07% | 0.88 | 91.75% | 0.89 |
| SK-like model | 93.12% | 0.91 | 90.03% | 0.87 |
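The table compares three ways of fusing MS and SAR features. "SE-like" and "SK-like" presumably refer to designs modelled on Squeeze-and-Excitation (SE) and Selective Kernel (SK) attention. The sketch below only illustrates how the three strategies differ; it is not the authors' network.

It makes three assumptions:
- Each source has already been reduced by its CNN branch to a pooled feature vector of the same length C, so SK's global-average-pooling step is implicit.
- The layers have no bias terms.
- All weights are random placeholders rather than trained parameters.

All names (`concat_fusion`, `se_like_fusion`, `sk_like_fusion`, sizes `C` and `d`) are hypothetical.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Dense layer without bias: y = W x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


def sigmoid(v: float) -> float:
    # Numerically stable logistic function.
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def concat_fusion(ms: Vector, sar: Vector) -> Vector:
    """Baseline: stack both feature vectors, no learned weighting."""
    return ms + sar


def se_like_fusion(ms: Vector, sar: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """SE-style channel gating applied to the concatenated features."""
    u = ms + sar                                 # 2C channels
    z = relu(matvec(w1, u))                      # squeeze to d channels
    gates = [sigmoid(v) for v in matvec(w2, z)]  # excite: 2C gates in (0, 1)
    return [g * v for g, v in zip(gates, u)]


def sk_like_fusion(ms: Vector, sar: Vector, w_reduce: Matrix,
                   w_ms: Matrix, w_sar: Matrix) -> Tuple[Vector, Vector]:
    """SK-style selection: per-channel softmax weights across the two sources."""
    s = [a + b for a, b in zip(ms, sar)]         # fuse branches by summation
    z = relu(matvec(w_reduce, s))                # compact descriptor of size d
    logits_ms, logits_sar = matvec(w_ms, z), matvec(w_sar, z)
    fused, alpha = [], []
    for lm, ls, m, r in zip(logits_ms, logits_sar, ms, sar):
        a = sigmoid(lm - ls)  # equals softmax([lm, ls])[0] for two branches
        alpha.append(a)
        fused.append(a * m + (1.0 - a) * r)      # convex mix of MS and SAR
    return fused, alpha


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Placeholder weights; a real model would learn these during training."""
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
C, d = 4, 2                   # hypothetical channel and reduction sizes
ms = [0.8, 0.1, 0.5, 0.3]     # made-up pooled MS features
sar = [0.2, 0.9, 0.4, 0.0]    # made-up pooled SAR features

print(len(concat_fusion(ms, sar)))  # 8
se = se_like_fusion(ms, sar, random_matrix(d, 2 * C, rng),
                    random_matrix(2 * C, d, rng))
sk, alpha = sk_like_fusion(ms, sar, random_matrix(d, C, rng),
                           random_matrix(C, d, rng), random_matrix(C, d, rng))
print(len(se), len(sk))  # 8 4
print([round(a, 2) for a in alpha])  # per-channel weight given to MS
```

The three strategies differ as follows:
- **Concatenation** passes both sources on unchanged.
- **SE-style gating** rescales each concatenated channel independently.
- **SK-style selection** makes the two sources compete channel by channel, because the MS weight and the SAR weight sum to one.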

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, W.; Ma, Q.; Huang, J.; Feng, Q.; Zhao, Y.; Guo, H.; Chen, B.; Li, C.; Zhang, Y. Remote Sensing Monitoring of Grasslands Based on Adaptive Feature Fusion with Multi-Source Data. Remote Sens. 2022, 14, 750. https://doi.org/10.3390/rs14030750
