An Interannual Transfer Learning Approach for Crop Classification in the Hetao Irrigation District, China

Abstract

1. Introduction

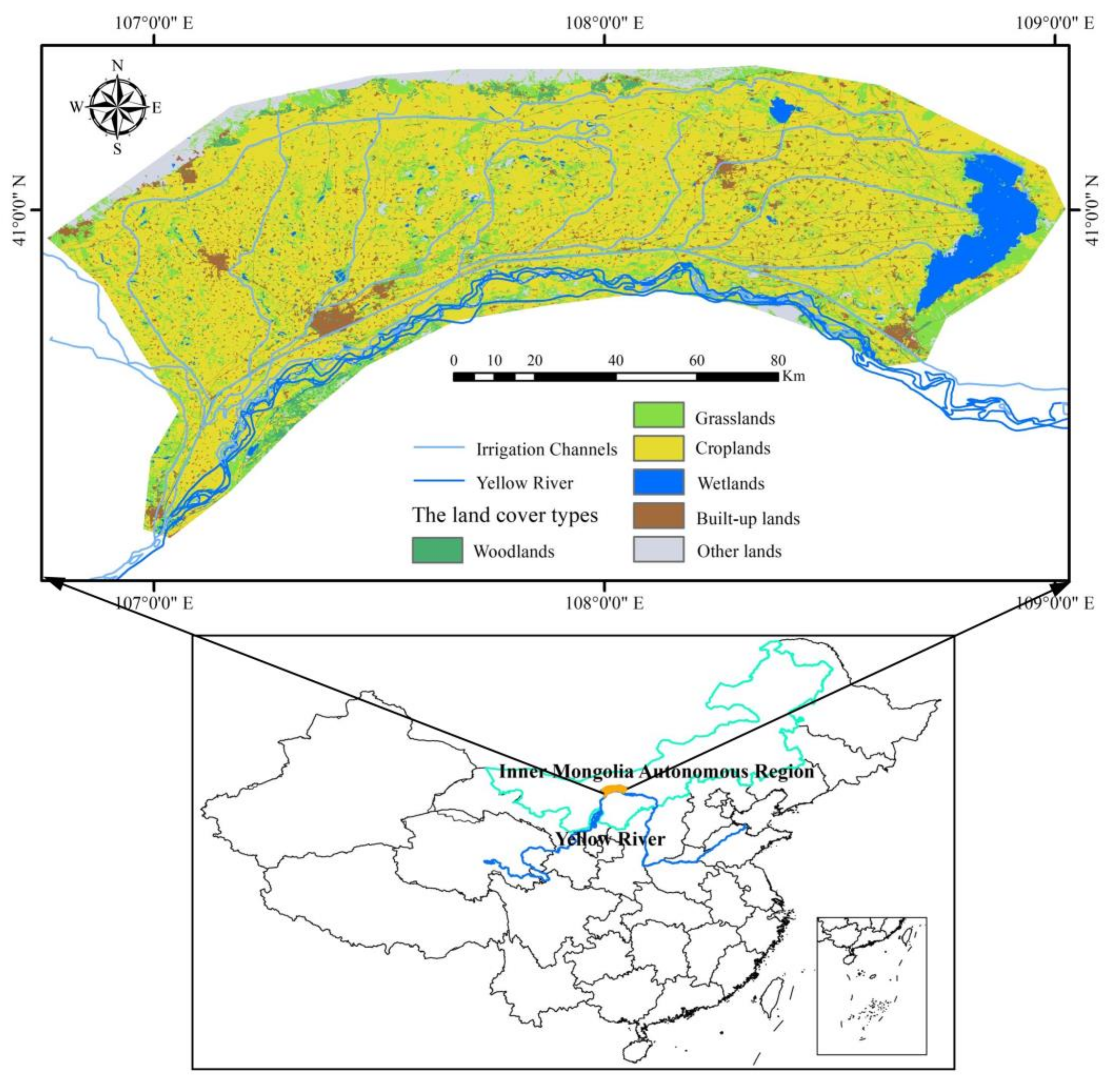

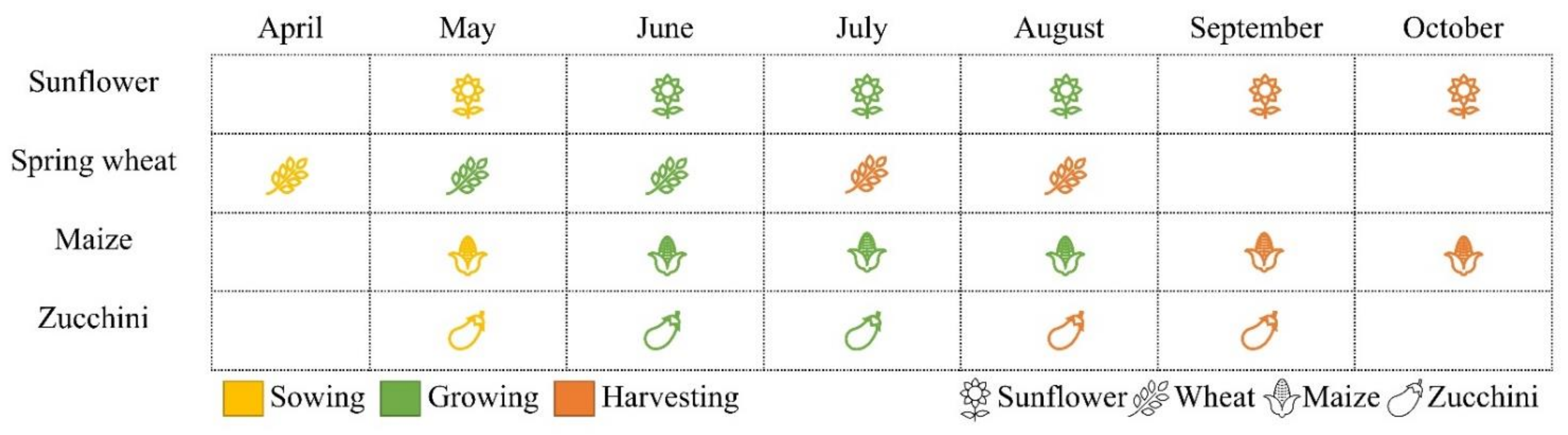

2. Study Area

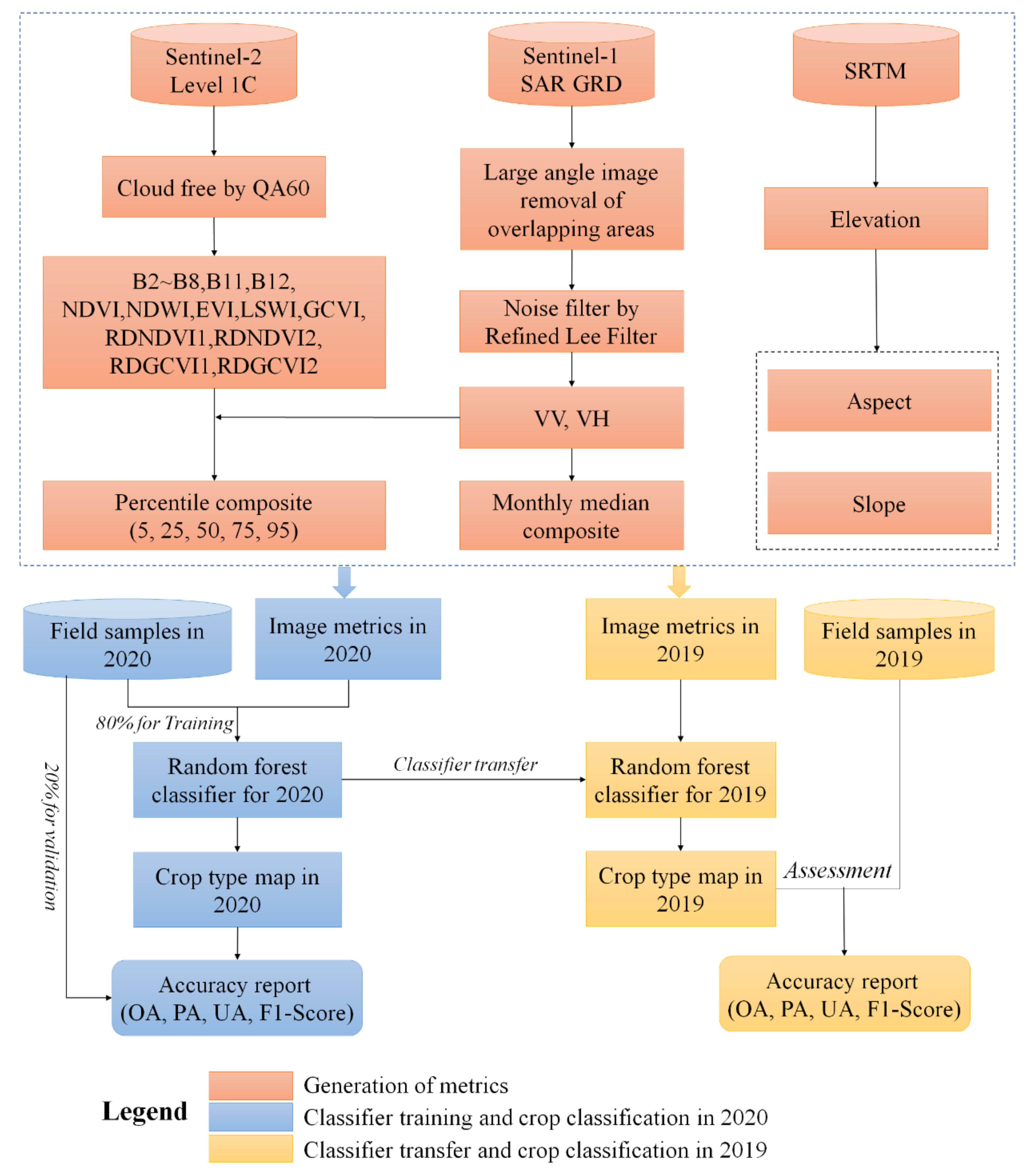

3. Materials and Methods

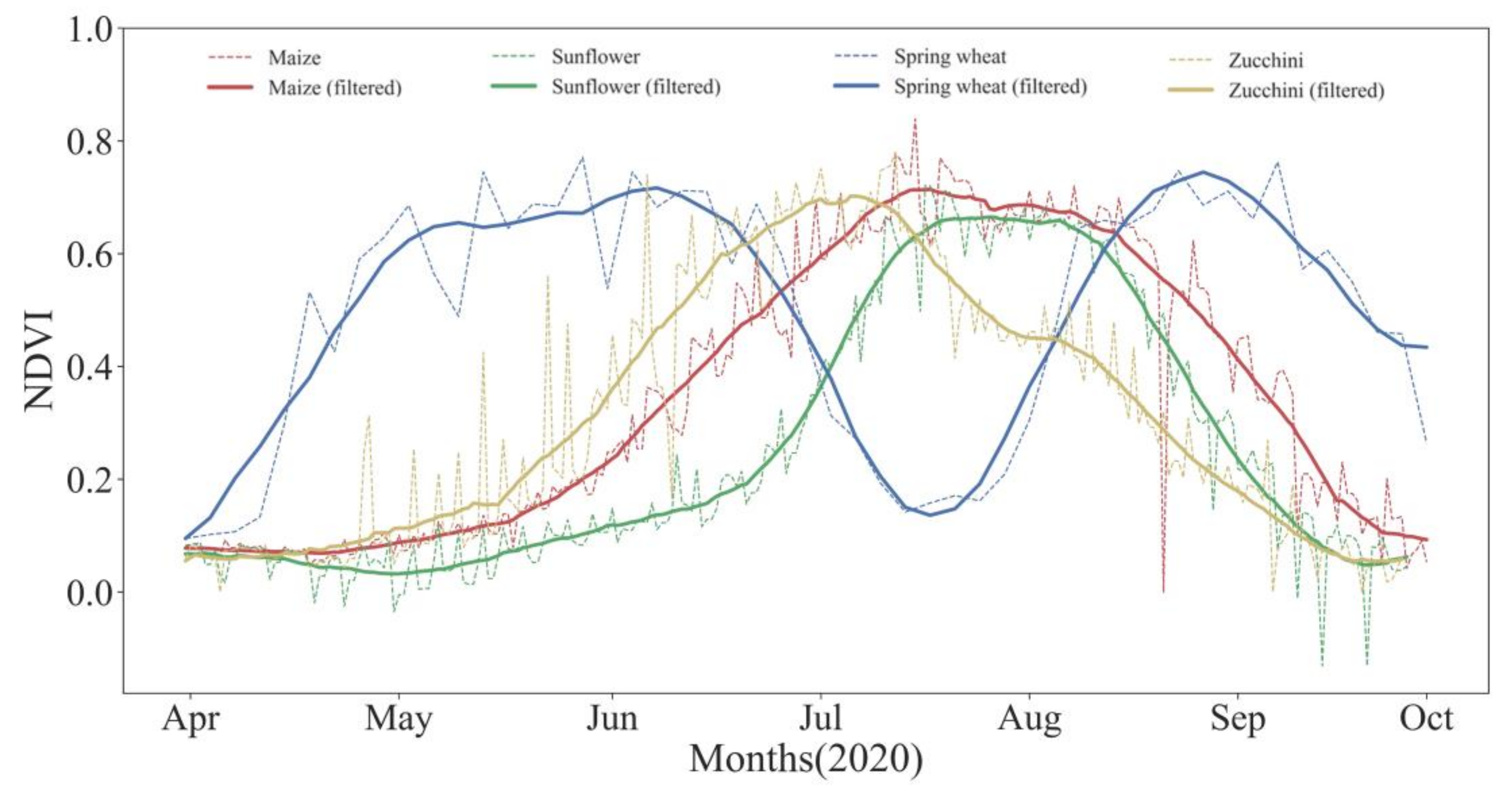

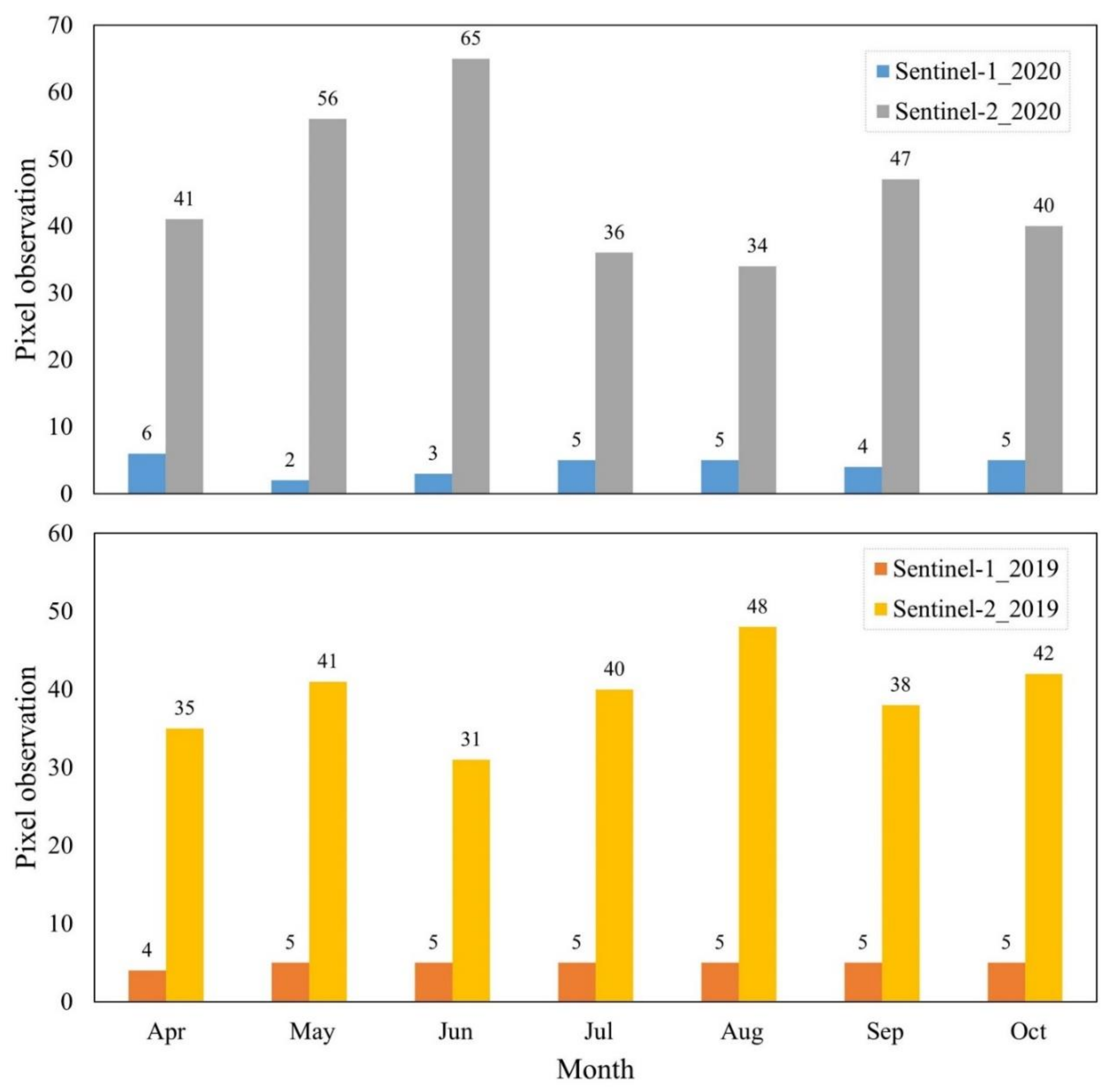

3.1. Sentinel-2 Imagery and Processing

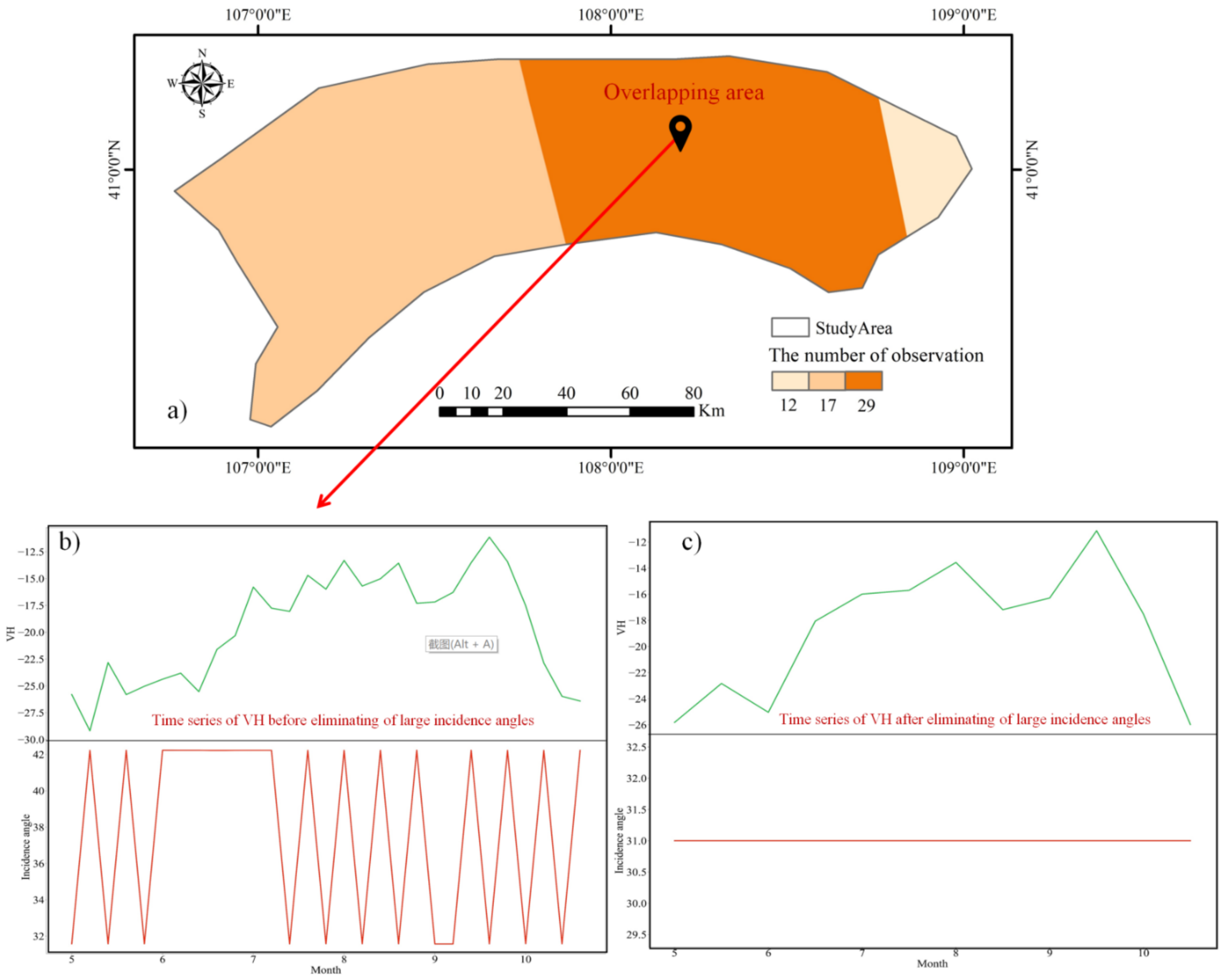

3.2. Sentinel-1 SAR Data and Processing

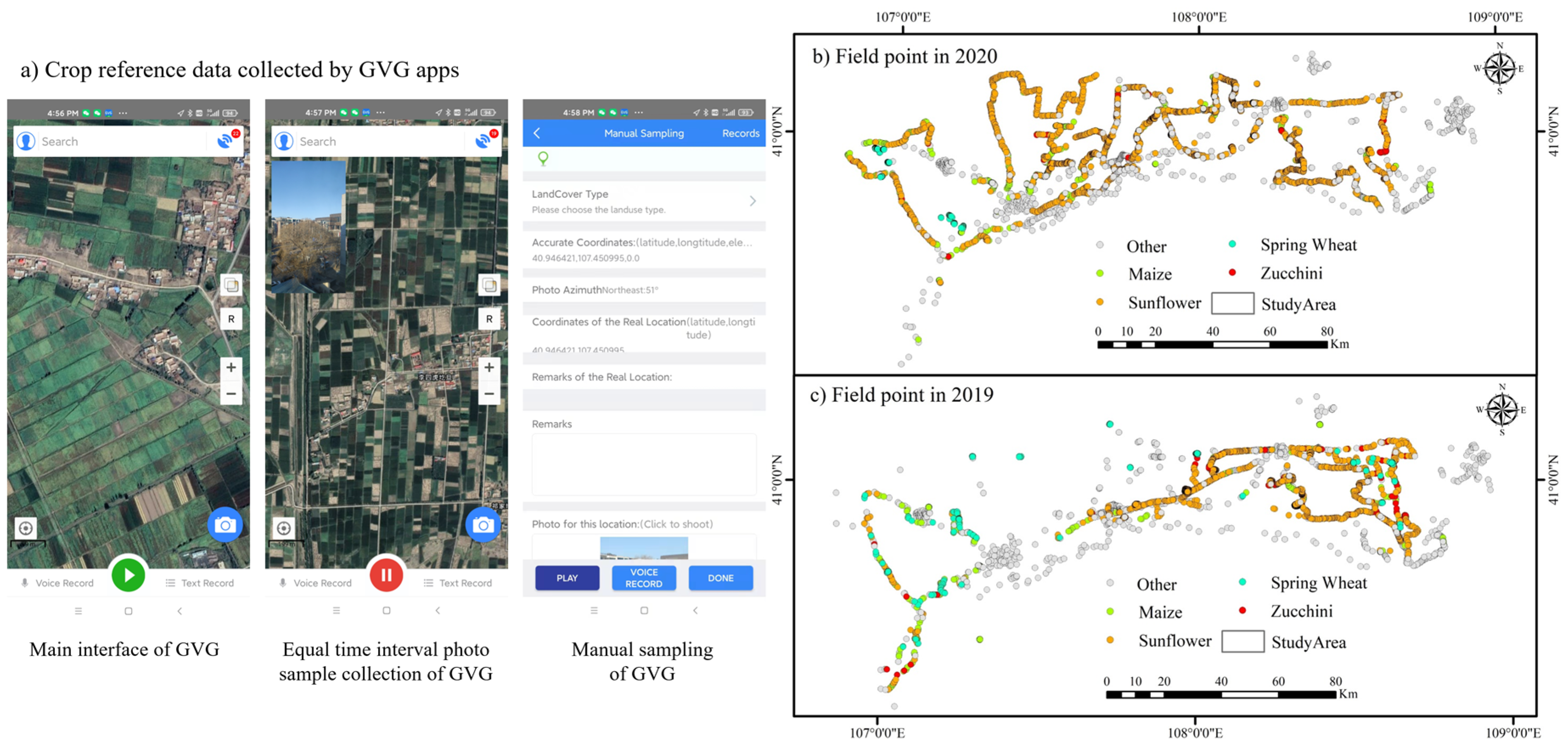

3.3. Topographic Data and Reference Crop Sample Data Collection

3.4. Methodology

3.4.1. Metric Composites

3.4.2. Training and Validation Dataset Preparation

3.4.3. Classifier: Random Forest

3.4.4. Model Transfer Scenario and Performance Assessment

3.4.5. Accuracy Assessment Indicators

4. Results

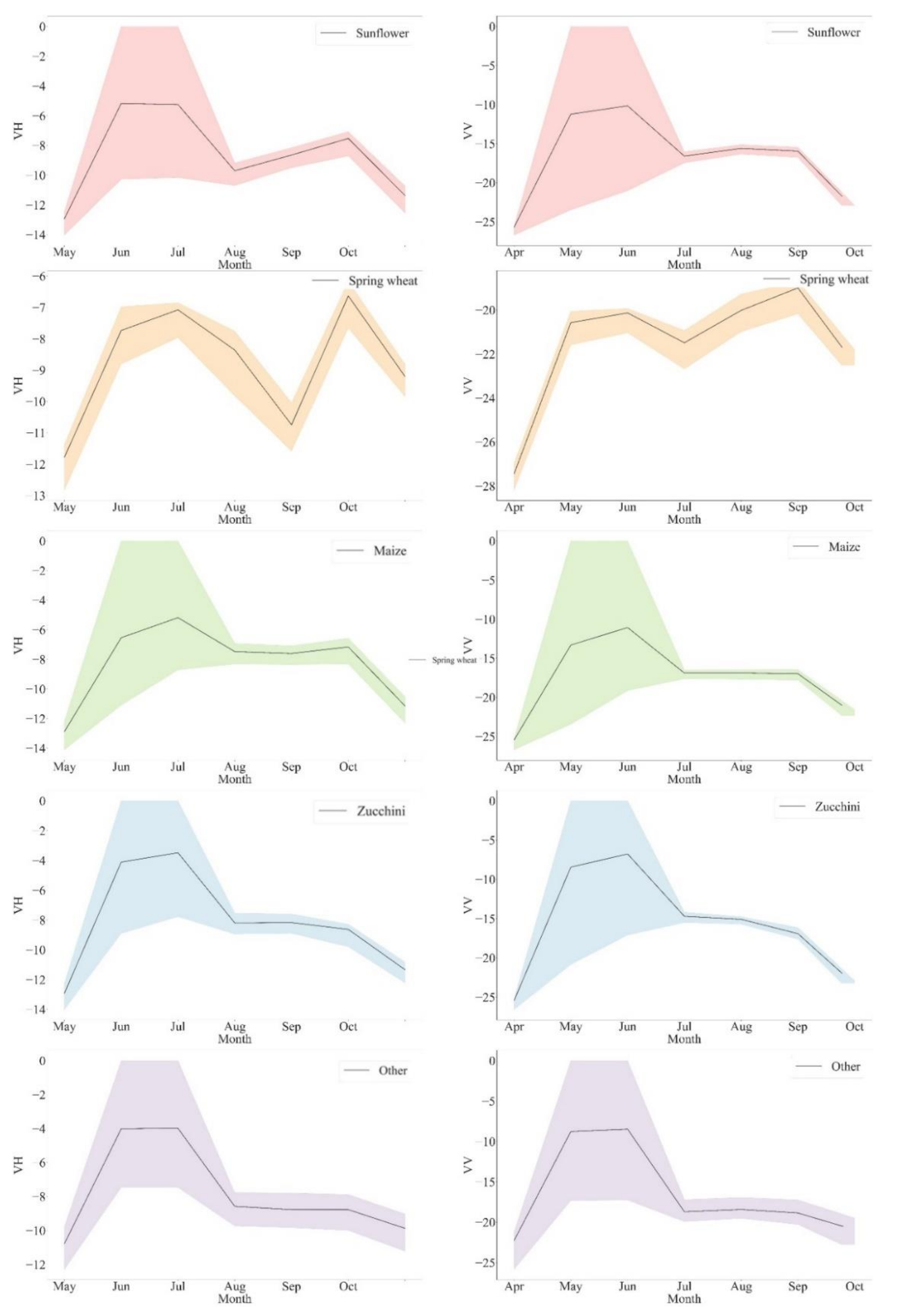

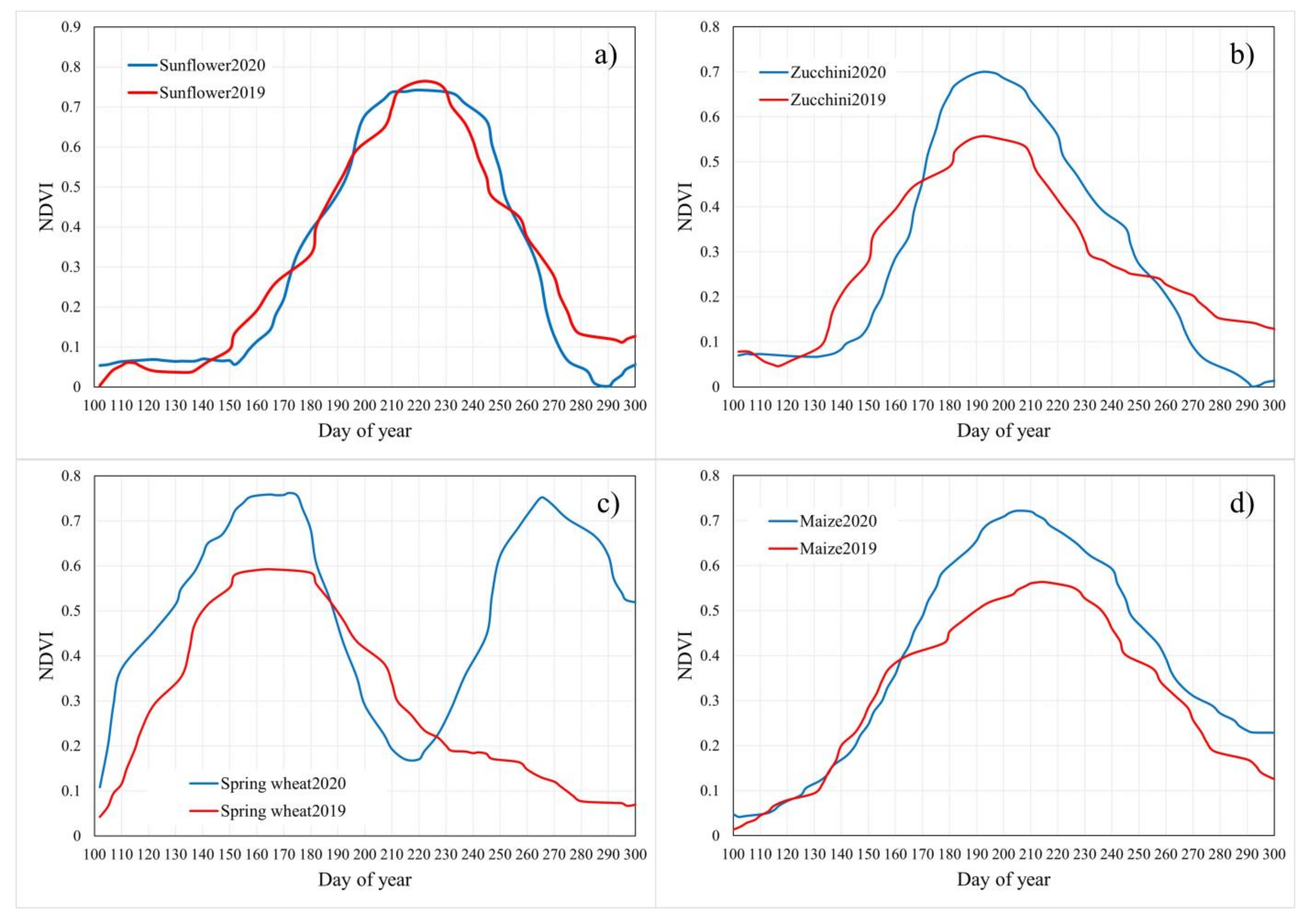

4.1. Metrics Characteristic Changes

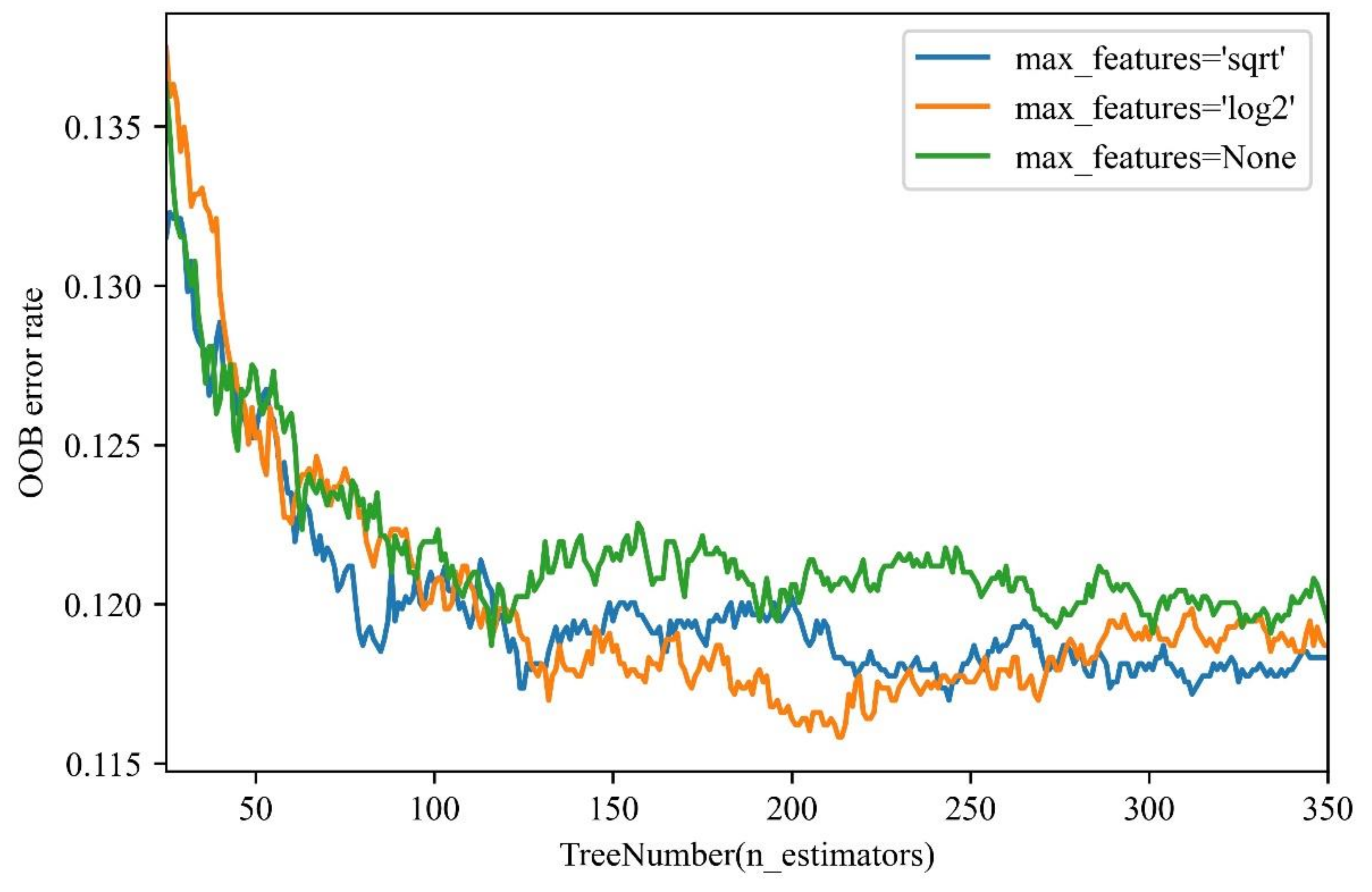

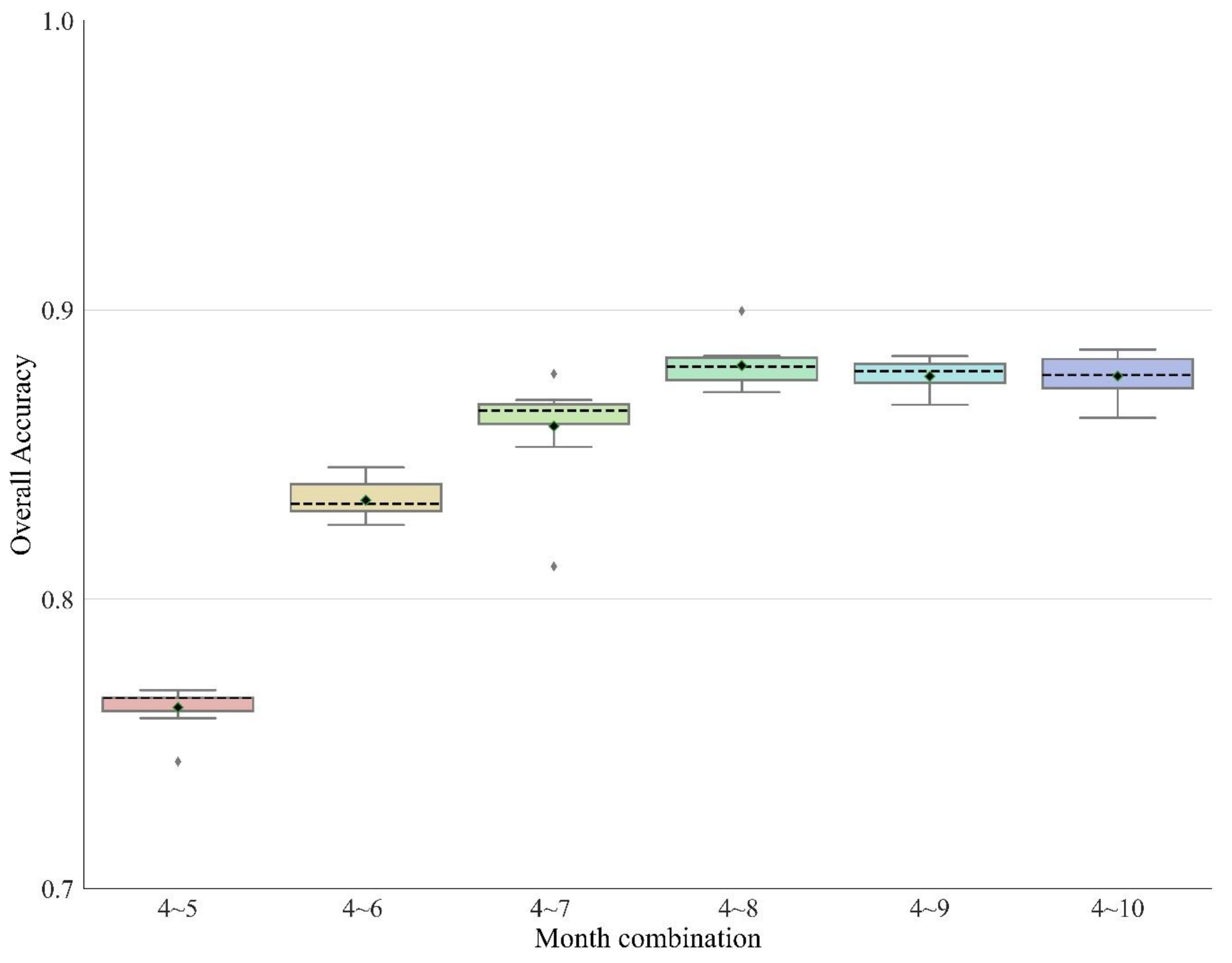

4.2. Optimization of Tree Number and Classification Period

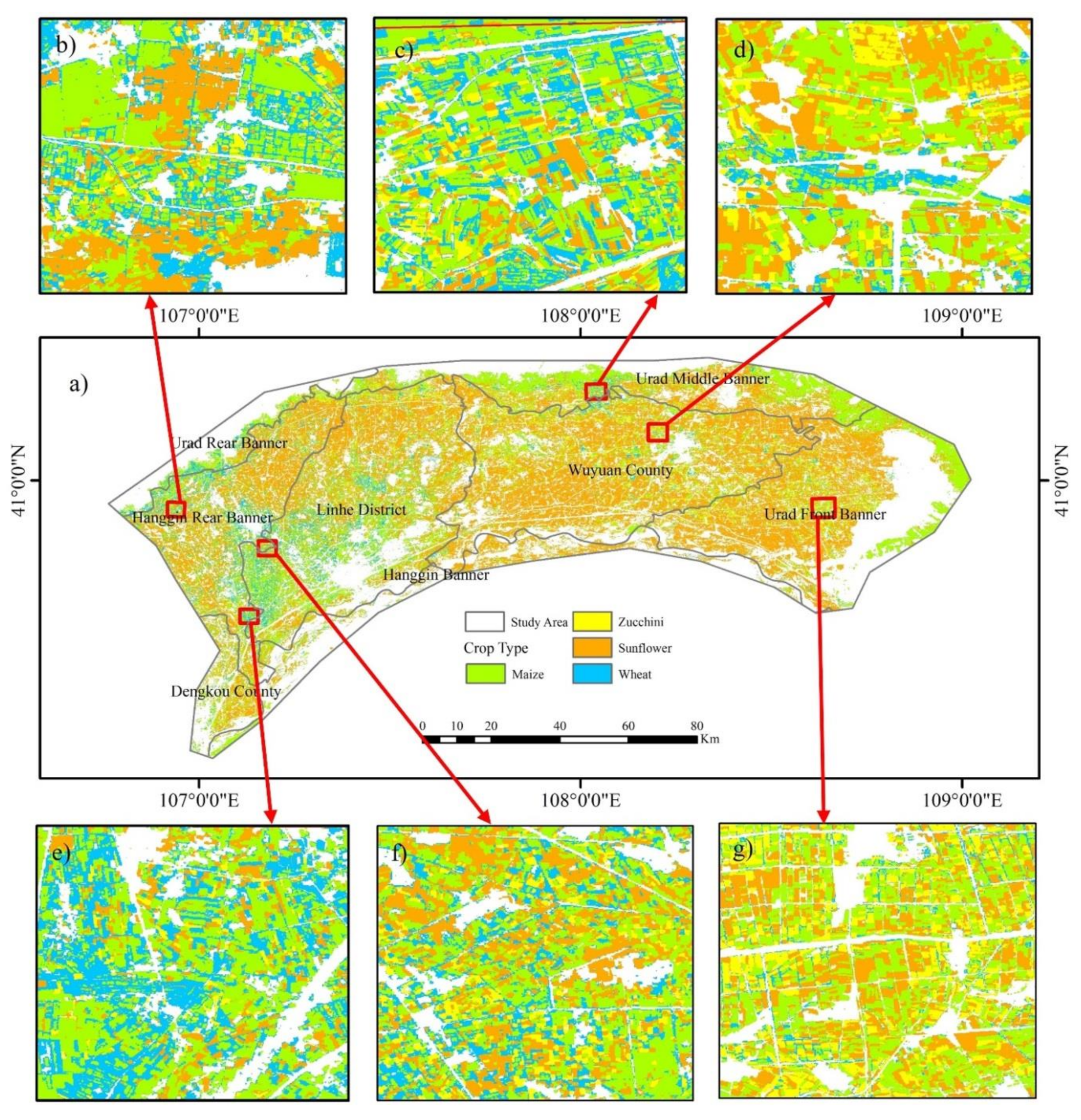

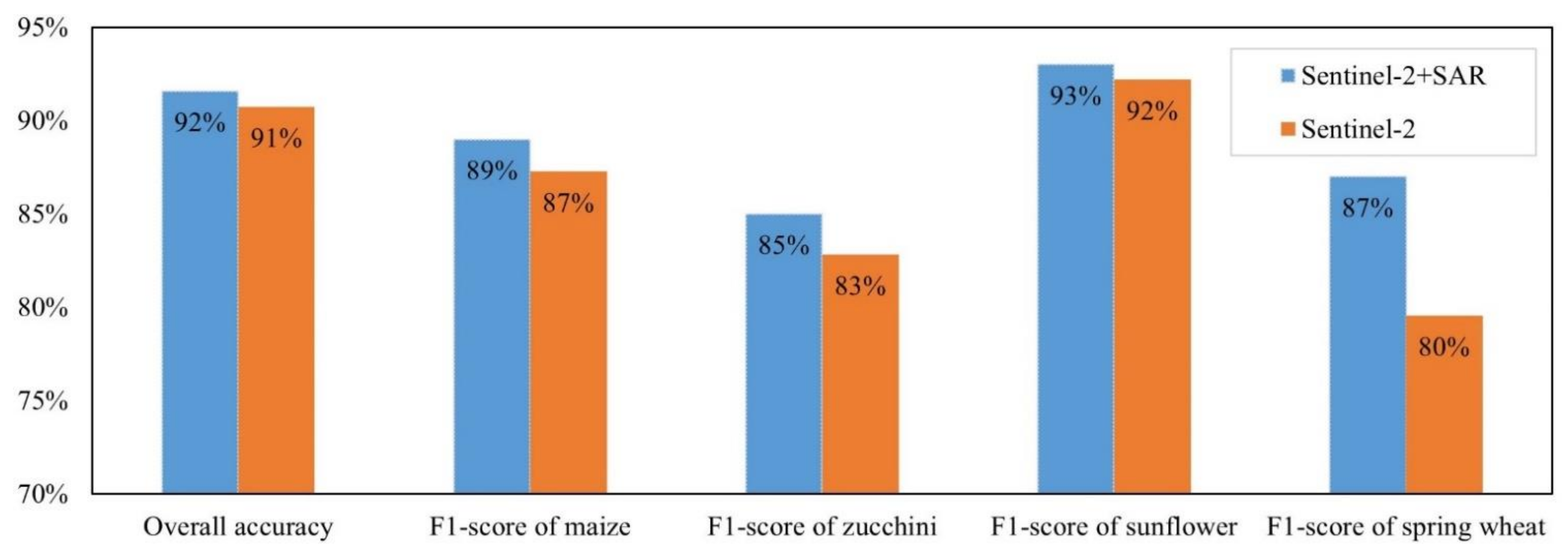

4.3. Crop Type Classification in 2020

4.4. Performance Analysis of Model Transfer in 2019

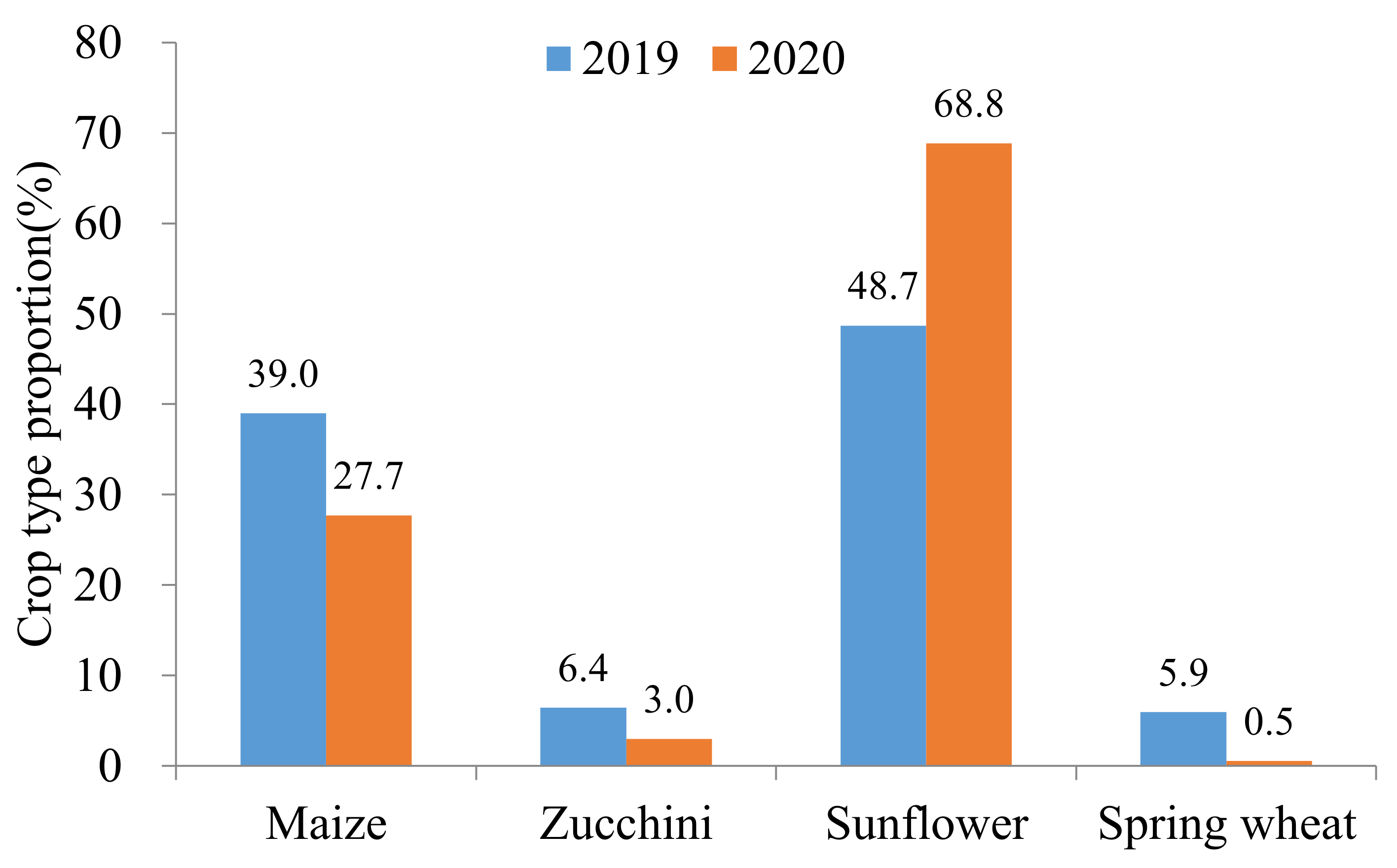

4.5. Analysis of Crop Type Proportions from 2019 to 2020

5. Discussion

5.1. Performance Analysis of Trained Model Transfer for Crop Type Classification

5.2. Performance Analysis of Trained Model Transfer for Crop Type Classification

5.3. Uncertainty Analysis and Outlook

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Band or Index | Central Wavelength/Index Formula | Satellite |
|---|---|---|
| VV | Vertical transmit, vertical receive (co-polarized) backscatter | Sentinel-1 |
| VH | Vertical transmit, horizontal receive (cross-polarized) backscatter | Sentinel-1 |
| Blue | 490 nm | Sentinel-2 |
| Green | 560 nm | Sentinel-2 |
| Red | 665 nm | Sentinel-2 |
| RDED1 | 705 nm | Sentinel-2 |
| RDED2 | 740 nm | Sentinel-2 |
| RDED3 | 783 nm | Sentinel-2 |
| NIR | 842 nm | Sentinel-2 |
| SWIR1 | 1610 nm | Sentinel-2 |
| SWIR2 | 2190 nm | Sentinel-2 |
| NDVI | (NIR − RED)/(NIR + RED) | Sentinel-2 |
| RDNDVI1 | (NIR − RDED1)/(NIR + RDED1) | Sentinel-2 |
| RDNDVI2 | (NIR − RDED2)/(NIR + RDED2) | Sentinel-2 |
| EVI | 2.5×((NIR − RED)/(NIR + 6×RED − 7.5×BLUE + 1)) | Sentinel-2 |
| LSWI | (NIR − SWIR1)/(NIR + SWIR1) | Sentinel-2 |
| NDWI | (GREEN − NIR)/(GREEN + NIR) | Sentinel-2 |
| GCVI | (NIR/GREEN) − 1 | Sentinel-2 |
| RDGCVI1 | (NIR/RDED1) − 1 | Sentinel-2 |
| RDGCVI2 | (NIR/RDED2) − 1 | Sentinel-2 |
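The index formulas in the table above can be sketched in Python as follows. This is an illustrative helper, not the authors' code: the function name `spectral_indices` and its argument names are hypothetical, the inputs are assumed to be Sentinel-2 surface reflectances already scaled to the 0–1 range, and LSWI uses SWIR1 in both numerator and denominator, following the standard definition of the index.

```python
"""Sketch: Table 1 spectral indices from Sentinel-2 surface reflectance.

Assumption: reflectances are already scaled to 0-1. The helper name and
argument names are hypothetical. Plain arithmetic is used, so the same
function also works element-wise on NumPy arrays.
"""


def spectral_indices(blue, green, red, rded1, rded2, nir, swir1):
    """Return the nine vegetation/water indices listed in Table 1."""
    return {
        # Normalized differences between NIR and red / red-edge bands
        "NDVI": (nir - red) / (nir + red),
        "RDNDVI1": (nir - rded1) / (nir + rded1),
        "RDNDVI2": (nir - rded2) / (nir + rded2),
        # Enhanced Vegetation Index with the usual MODIS coefficients
        "EVI": 2.5 * (nir - red) / (nir + 6 * red - 7.5 * blue + 1),
        # Water-sensitive indices
        "LSWI": (nir - swir1) / (nir + swir1),
        "NDWI": (green - nir) / (green + nir),
        # Chlorophyll-sensitive ratio indices
        "GCVI": nir / green - 1,
        "RDGCVI1": nir / rded1 - 1,
        "RDGCVI2": nir / rded2 - 1,
    }


if __name__ == "__main__":
    idx = spectral_indices(blue=0.04, green=0.08, red=0.05, rded1=0.12,
                           rded2=0.30, nir=0.40, swir1=0.20)
    for name, value in idx.items():
        print(f"{name:8s} {value:.3f}")
```

For these example reflectances the sketch gives NDVI ≈ 0.778 and EVI = 0.625; the input values are arbitrary and only illustrate the calculation.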

| Features | Metrics | Composite Method |
|---|---|---|
| Blue (B2) | B2_p5, B2_p25, B2_p50, B2_p75, B2_p95 | Percentile |
| Green (B3) | B3_p5, B3_p25, B3_p50, B3_p75, B3_p95 | Percentile |
| Red (B4) | B4_p5, B4_p25, B4_p50, B4_p75, B4_p95 | Percentile |
| Red Edge1 (B5) | Red Edge1_p5, Red Edge1_p25, Red Edge1_p50, Red Edge1_p75, Red Edge1_p95 | Percentile |
| Red Edge2 (B6) | Red Edge2_p5, Red Edge2_p25, Red Edge2_p50, Red Edge2_p75, Red Edge2_p95 | Percentile |
| Red Edge3 (B7) | Red Edge3_p5, Red Edge3_p25, Red Edge3_p50, Red Edge3_p75, Red Edge3_p95 | Percentile |
| NIR (B8) | B8_p5, B8_p25, B8_p50, B8_p75, B8_p95 | Percentile |
| SWIR1 (B11) | B11_p5, B11_p25, B11_p50, B11_p75, B11_p95 | Percentile |
| SWIR2 (B12) | B12_p5, B12_p25, B12_p50, B12_p75, B12_p95 | Percentile |
| NDVI | NDVI_p5, NDVI_p25, NDVI_p50, NDVI_p75, NDVI_p95 | Percentile |
| NDWI | NDWI_p5, NDWI_p25, NDWI_p50, NDWI_p75, NDWI_p95 | Percentile |
| LSWI | LSWI_p5, LSWI_p25, LSWI_p50, LSWI_p75, LSWI_p95 | Percentile |
| GCVI | GCVI_p5, GCVI_p25, GCVI_p50, GCVI_p75, GCVI_p95 | Percentile |
| RDNDVI1 | RDNDVI1_p5, RDNDVI1_p25, RDNDVI1_p50, RDNDVI1_p75, RDNDVI1_p95 | Percentile |
| RDNDVI2 | RDNDVI2_p5, RDNDVI2_p25, RDNDVI2_p50, RDNDVI2_p75, RDNDVI2_p95 | Percentile |
| RDGCVI1 | RDGCVI1_p5, RDGCVI1_p25, RDGCVI1_p50, RDGCVI1_p75, RDGCVI1_p95 | Percentile |
| RDGCVI2 | RDGCVI2_p5, RDGCVI2_p25, RDGCVI2_p50, RDGCVI2_p75, RDGCVI2_p95 | Percentile |
| EVI | EVI_p5, EVI_p25, EVI_p50, EVI_p75, EVI_p95 | Percentile |
| VV | VVP5, VVP25, VVP50, VVP75, VVP95 | Percentile |
| VH | VHP5, VHP25, VHP50, VHP75, VHP95 | Percentile |
| VV | VVMON4, VVMON5, VVMON6, VVMON7, VVMON8, VVMON9, VVMON10 | Monthly median |
| VH | VHMON4, VHMON5, VHMON6, VHMON7, VHMON8, VHMON9, VHMON10 | Monthly median |
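The two composite methods in the table above (per-pixel percentiles over the season and per-month medians for the SAR bands) can be sketched for a single pixel as shown below. This is a minimal illustration under stated assumptions, not the authors' Google Earth Engine workflow: the helper names are hypothetical, cloud-contaminated observations are assumed to be already removed, percentiles use NumPy's default linear interpolation (the Earth Engine percentile reducer may interpolate differently), and metric names follow the optical-band pattern (`VV_p5` rather than `VVP5`).

```python
"""Sketch: percentile and monthly-median composites for one pixel.

Assumptions: observations are already cloud-masked; helper names are
hypothetical; NumPy's default (linear) percentile interpolation is used.
"""
from collections import defaultdict
from datetime import date
from typing import Dict, List, Tuple

import numpy as np

PERCENTILES = (5, 25, 50, 75, 95)


def percentile_metrics(name: str, values: List[float]) -> Dict[str, float]:
    """Return {'<name>_p5': ..., ..., '<name>_p95': ...} for one band/index."""
    arr = np.asarray(values, dtype=float)
    return {f"{name}_p{p}": float(np.percentile(arr, p)) for p in PERCENTILES}


def monthly_medians(name: str, series: List[Tuple[date, float]],
                    months=range(4, 11)) -> Dict[str, float]:
    """Median of all observations falling in each month (April-October).

    Months without any observation are reported as NaN.
    """
    by_month = defaultdict(list)
    for obs_date, value in series:
        by_month[obs_date.month].append(value)
    return {
        f"{name}MON{m}": (float(np.median(by_month[m])) if by_month[m]
                          else float("nan"))
        for m in months
    }


if __name__ == "__main__":
    print(percentile_metrics("NDVI", [0.1, 0.2, 0.3, 0.4, 0.5]))
    vv = [(date(2020, 4, 3), -15.0), (date(2020, 4, 15), -13.0),
          (date(2020, 5, 9), -12.0)]
    print(monthly_medians("VV", vv))
```

The synthetic NDVI series and VV backscatter values in the usage example are made up for illustration. In a full workflow these per-pixel metrics would be stacked into the feature vector passed to the classifier.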

| | Maize | Zucchini | Sunflower | Spring Wheat | Other | PA |
|---|---|---|---|---|---|---|
| Maize | 195 | 5 | 31 | 0 | 10 | 0.81 |
| Zucchini | 3 | 41 | 19 | 0 | 1 | 0.64 |
| Sunflower | 13 | 1 | 430 | 0 | 12 | 0.94 |
| Spring wheat | 0 | 0 | 0 | 35 | 0 | 1.00 |
| Other | 9 | 1 | 12 | 1 | 219 | 0.90 |
| UA | 0.89 | 0.85 | 0.87 | 0.97 | 0.90 | OA = 0.89 |
| F1-score | 0.85 | 0.73 | 0.91 | 0.99 | 0.90 | |
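Because the PA values in the confusion matrix above are computed along rows and the UA values down columns, the matrix is arranged with reference classes as rows and classified classes as columns. Under that layout, the accuracy indicators can be recomputed from the raw counts with the short sketch below; `accuracy_metrics` is a hypothetical helper name, and F1 is taken as the harmonic mean of PA and UA (undefined for a class with no correct pixels).

```python
"""Sketch: PA, UA, F1-score and OA from a confusion matrix.

Layout assumption: rows = reference classes, columns = classified classes.
"""
import numpy as np


def accuracy_metrics(cm):
    cm = np.asarray(cm, dtype=float)
    diag = np.diag(cm)                  # correctly classified counts
    pa = diag / cm.sum(axis=1)          # producer's accuracy (row-wise recall)
    ua = diag / cm.sum(axis=0)          # user's accuracy (column-wise precision)
    f1 = 2 * pa * ua / (pa + ua)        # harmonic mean of PA and UA
    oa = diag.sum() / cm.sum()          # overall accuracy
    return pa, ua, f1, oa


CLASSES = ["Maize", "Zucchini", "Sunflower", "Spring wheat", "Other"]
# Counts copied from the confusion matrix above
CM = [
    [195, 5, 31, 0, 10],
    [3, 41, 19, 0, 1],
    [13, 1, 430, 0, 12],
    [0, 0, 0, 35, 0],
    [9, 1, 12, 1, 219],
]

if __name__ == "__main__":
    pa, ua, f1, oa = accuracy_metrics(CM)
    for name, p, u, f in zip(CLASSES, pa, ua, f1):
        print(f"{name:13s} PA={p:.2f} UA={u:.2f} F1={f:.2f}")
    print(f"OA={oa:.2f}")
```

Rounded to two decimals, the output matches the PA, UA, F1-score and OA values reported in the table; applying the same function to the counts of the second confusion matrix likewise reproduces its reported values.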

| | Maize | Zucchini | Sunflower | Spring Wheat | Other | PA |
|---|---|---|---|---|---|---|
| Maize | 127 | 2 | 6 | 1 | 3 | 0.91 |
| Zucchini | 8 | 51 | 4 | 0 | 2 | 0.78 |
| Sunflower | 2 | 0 | 200 | 0 | 7 | 0.96 |
| Spring wheat | 6 | 0 | 0 | 44 | 4 | 0.81 |
| Other | 4 | 2 | 9 | 2 | 252 | 0.94 |
| UA | 0.86 | 0.93 | 0.91 | 0.94 | 0.94 | OA = 0.92 |
| F1-score | 0.89 | 0.85 | 0.93 | 0.87 | 0.94 | |

| F1-Score | | | | |
|---|---|---|---|---|
| Maize | 0.85 | 0.89 | 0.87 | 0.86 |
| Zucchini | 0.73 | 0.85 | 0.82 | 0.76 |
| Sunflower | 0.91 | 0.93 | 0.90 | 0.91 |
| Spring wheat | 0.99 | 0.87 | 0.91 | 0.93 |
| OA | 0.89 | 0.92 | 0.90 | 0.89 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hu, Y.; Zeng, H.; Tian, F.; Zhang, M.; Wu, B.; Gilliams, S.; Li, S.; Li, Y.; Lu, Y.; Yang, H. An Interannual Transfer Learning Approach for Crop Classification in the Hetao Irrigation District, China. Remote Sens. 2022, 14, 1208. https://doi.org/10.3390/rs14051208


Chicago/Turabian Style: Hu, Yueran, Hongwei Zeng, Fuyou Tian, Miao Zhang, Bingfang Wu, Sven Gilliams, Sen Li, Yuanchao Li, Yuming Lu, and Honghai Yang. 2022. "An Interannual Transfer Learning Approach for Crop Classification in the Hetao Irrigation District, China" Remote Sensing 14, no. 5: 1208. https://doi.org/10.3390/rs14051208