Banana Fusarium Wilt Disease Detection by Supervised and Unsupervised Methods from UAV-Based Multispectral Imagery

Abstract

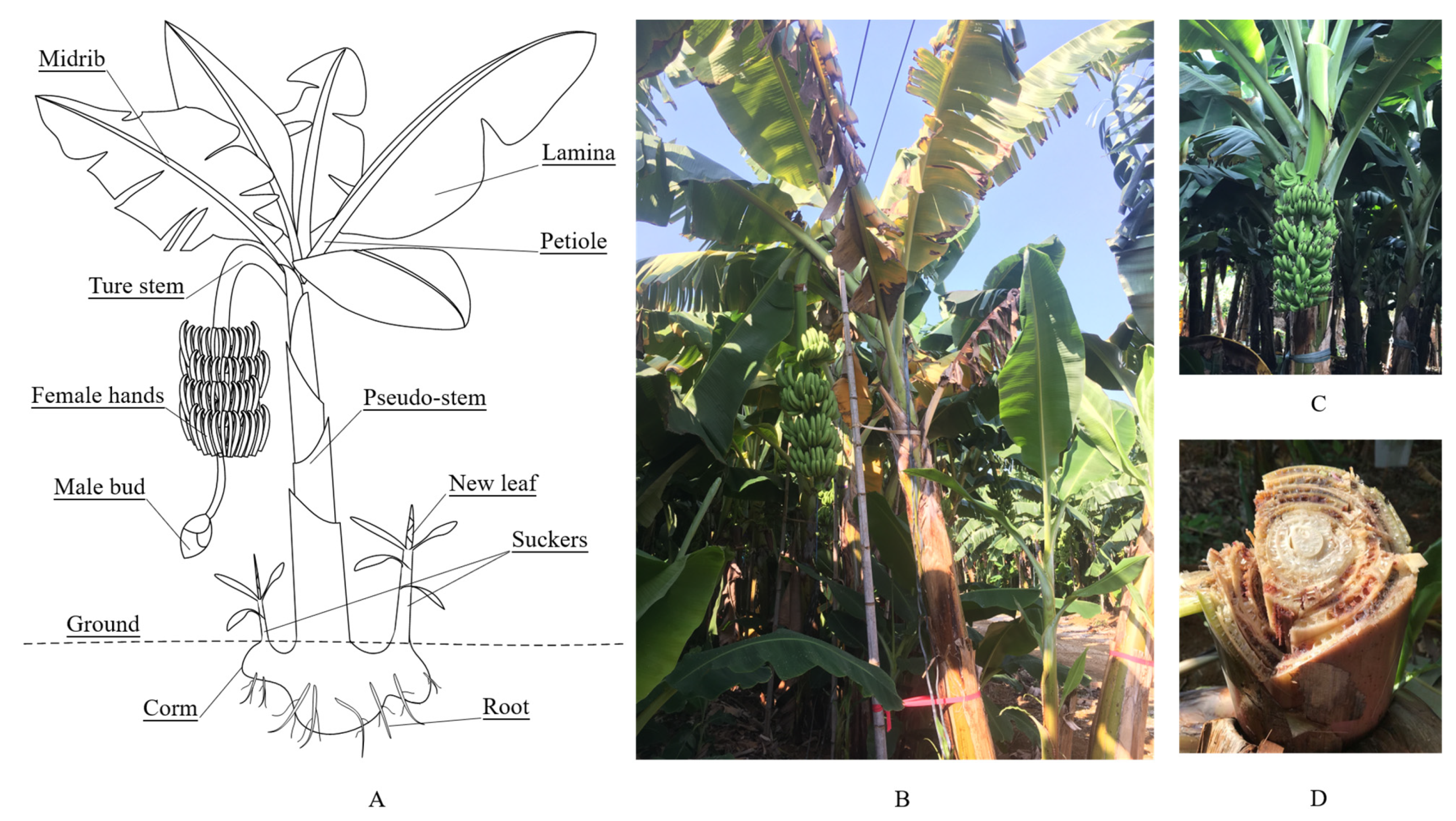

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Overall Workflow

2.3. Data Acquisition

2.4. Data Preprocessing

2.5. VIs

2.6. Classification Methods

2.6.1. SVM

2.6.2. RF

2.6.3. BPNN

2.6.4. LR

2.6.5. ISODATA

2.6.6. HA

2.7. Accuracy Assessment

3. Results

3.1. Spectral Feature Analyzing Results

3.1.1. Reflectance Difference of the Healthy and BFW-Infected Canopies

3.1.2. Feature Analyzing of the Selected VIs

3.2. Classification Results of the Supervised Models Based on Band Reflectance

3.3. Classification Results of the Unsupervised Models Based on Different VIs

3.3.1. Classification Results of the HA Models

3.3.2. Comparison of Results between the HA Models and the ISODATA Models

3.4. Classification Results in Plant Scale

4. Discussion

4.1. Spectral Features of BFW Disease

4.2. Performance Assessment of the Supervised Models

4.3. Performance Assessment of the Unsupervised Models

4.4. Optimal Classification Methods as Recommendations for Different Infection Stages

5. Conclusions

- BFW disease produced an obvious reflectance difference in the red and NIR bands, a moderate difference in the green band, and a small difference in the blue and RE bands; the BFW-infected canopies had higher reflectance in the visible region but lower reflectance in the NIR region. The VIs derived from the red, NIR, and green bands differed significantly between the BFW-infected class and the healthy class.

- At the pixel scale, the supervised methods had OAs of more than 96% for the five-band images and more than 88% for the three-band images. SVM and RF showed the best consistency and stability among the four supervised methods, but the RF model based on the five multispectral bands, with a higher OA of 97.28% and a shorter running time of 22 min, was considered the optimal supervised model.

- Among the unsupervised methods, HA, which uses the statistical difference of the VIs between the two classes as well as local spatial distribution features, reached average OAs of more than 95% with the selected VIs in both July and August, far exceeding ISODATA (51.74% in July and 73.32% in August). VIs derived from the red and NIR bands, such as WDRVI, NDVI, and TDVI, were recommended for building HA models.

- The supervised methods and the unsupervised HA method yielded similar pixel-scale OAs of more than 95% and similar distribution maps. Considering both the classified areas and the plant-scale OAs, the unsupervised HA method was recommended for BFW recognition because it balances accuracy and speed, especially in the late stage of infection; the supervised RF method was recommended in the early stage of infection for its slightly higher accuracy.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Selvaraj, M.G.; Vergara, A.; Montenegro, F.; Alonso Ruiz, H.; Safari, N.; Raymaekers, D.; Ocimati, W.; Ntamwira, J.; Tits, L.; Omondi, A.B.; et al. Detection of banana plants and their major diseases through aerial images and machine learning methods: A case study in DR Congo and Republic of Benin. ISPRS J. Photogramm. Remote Sens. 2020, 169, 110–124.

- Olivares, B.O.; Rey, J.C.; Lobo, D.; Navas-Cortés, J.A.; Gómez, J.A.; Landa, B.B. Fusarium wilt of bananas: A review of agro-environmental factors in the Venezuelan production system affecting its development. Agronomy 2021, 11, 986.

- Ploetz, R.C. Management of Fusarium wilt of banana: A review with special reference to tropical race 4. Crop Prot. 2015, 73, 7–15.

- Pegg, K.G.; Coates, L.M.; O’Neill, W.T.; Turner, D.W. The epidemiology of Fusarium wilt of banana. Front. Plant Sci. 2019, 10, 1395.

- Blomme, G.; Dita, M.; Jacobsen, K.S.; Vicente, L.P.; Molina, A.; Ocimati, W.; Poussier, S.; Prior, P. Bacterial diseases of bananas and enset: Current state of knowledge and integrated approaches toward sustainable management. Front. Plant Sci. 2017, 8, 1290.

- Nakkeeran, S.; Rajamanickam, S.; Saravanan, R.; Vanthana, M.; Soorianathasundaram, K. Bacterial endophytome-mediated resistance in banana for the management of Fusarium wilt. 3 Biotech 2021, 11, 267.

- Mahlein, A.K. Plant disease detection by imaging sensors-parallels and specific demands for precision agriculture and plant phenotyping. Plant Dis. 2016, 100, 241–251.

- Zhong, C.Y.; Hu, Z.L.; Li, M.; Li, H.L.; Yang, X.J.; Liu, F. Real-time semantic segmentation model for crop disease leaves using group attention module. Trans. Chin. Soc. Agric. 2021, 37, 208–215. (In Chinese with English abstract)

- Zhou, J.; Zhou, J.; Ye, H.; Ali, M.L.; Nguyen, H.T.; Chen, P. Classification of soybean leaf wilting due to drought stress using UAV-based imagery. Comput. Electron. Agric. 2020, 175, 105576.

- Deng, X.; Zhu, Z.; Yang, J.; Zheng, Z.; Huang, Z.; Yin, X.; Wei, S.; Lan, Y. Detection of citrus Huanglongbing based on multi-input neural network model of UAV hyperspectral remote sensing. Remote Sens. 2020, 12, 2678.

- Xie, C.; Yang, C. A review on plant high-throughput phenotyping traits using UAV-based sensors. Comput. Electron. Agric. 2020, 178, 105731.

- Kerkech, M.; Hafiane, A.; Canals, R. Deep leaning approach with colorimetric spaces and vegetation indices for vine diseases detection in UAV images. Comput. Electron. Agric. 2018, 155, 237–243.

- Ishengoma, F.S.; Rai, I.A.; Said, R.N. Identification of maize leaves infected by fall armyworms using UAV-based imagery and convolutional neural networks. Comput. Electron. Agric. 2021, 184, 106124.

- Lan, Y.; Huang, Z.; Deng, X.; Zhu, Z.; Huang, H.; Zheng, Z.; Lian, B.; Zeng, G.; Tong, Z. Comparison of machine learning methods for citrus greening detection on UAV multispectral images. Comput. Electron. Agric. 2020, 171, 105234.

- Rodríguez, J.; Lizarazo, I.; Prieto, F.; Angulo-Morales, V. Assessment of potato late blight from UAV-based multispectral imagery. Comput. Electron. Agric. 2021, 184, 106061.

- Su, J.; Liu, C.; Hu, X.; Xu, X.; Guo, L.; Chen, W.H. Spatio-temporal monitoring of wheat yellow rust using UAV multispectral imagery. Comput. Electron. Agric. 2019, 167, 105035.

- Ye, H.; Huang, W.; Huang, S.; Cui, B.; Dong, Y.; Guo, A.; Ren, Y.; Jin, Y. Recognition of banana Fusarium wilt based on UAV remote sensing. Remote Sens. 2020, 12, 938.

- Ye, H.; Huang, W.; Huang, S.; Cui, B.; Dong, Y.; Guo, A.; Ren, Y.; Jin, Y. Identification of banana Fusarium wilt using supervised classification algorithms with UAV-based multi-spectral imagery. Int. J. Agric. Biol. Eng. 2020, 13, 136–142.

- Isip, M.F.; Alberto, R.T.; Biagtan, A.R. Exploring vegetation indices adequate in detecting twister disease of onion using Sentinel-2 imagery. Spat. Inf. Res. 2020, 28, 369–375.

- Lu, D.; Weng, Q. A survey of image classification methods and techniques for improving classification performance. Int. J. Remote Sens. 2007, 28, 823–870.

- Liu, H.J.; Cohen, S.; Tanny, J.; Lemcoff, J.H.; Huang, G. Transpiration estimation of banana (Musa sp.) plants with the thermal dissipation method. Plant Soil 2008, 308, 227–238.

- Drenth, A.; Kema, G.H.J. The vulnerability of bananas to globally emerging disease threats. Phytopathology 2021, 111, 2146–2161.

- Panigrahi, N.; Thompson, A.J.; Zubelzu, S.; Knox, J.W. Identifying opportunities to improve management of water stress in banana production. Sci. Hortic. 2021, 276, 109735.

- Hernandez-Baquero, E. Characterization of the Earth’s Surface and Atmosphere from Multispectral and Hyperspectral Thermal Imagery. Ph.D. Thesis, Rochester Institute of Technology, Chester F. Carlson Center for Imaging Science, Rochester, NY, USA, 2000.

- Dowman, I.; Dolloff, J.T. An evaluation of rational functions for photogrammetric restitution. Int. Arch. Photogramm. Remote Sens. 2000, 33, 252–266.

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

- Kumar, S.; Röder, M.S.; Singh, R.P.; Kumar, S.; Chand, R.; Joshi, A.K.; Kumar, U. Mapping of spot blotch disease resistance using NDVI as a substitute to visual observation in wheat (Triticum aestivum L.). Mol. Breed. 2016, 36, 95.

- Rouse, J.W., Jr.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the great plains with ERTS. In Proceedings of the 3rd Earth Resources Technology Satellite-1 (ERTS) Symposium, Washington, DC, USA, 10–14 December 1973; NASA SP-351. Volume 1, pp. 309–317. Available online: https://ntrs.nasa.gov/citations/19740022614 (accessed on 14 February 2022).

- Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

- Roujean, J.L.; Breon, F.M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384.

- Gitelson, A.A. Wide dynamic range vegetation index for remote quantification of biophysical characteristics of vegetation. J. Plant Physiol. 2004, 161, 165–173.

- Bannari, A.; Asalhi, H.; Teillet, P.M. Transformed difference vegetation indices (TDVI) for vegetation cover mapping. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Toronto, ON, Canada, 24–28 June 2002; pp. 3053–3055.

- Jordan, C.F. Derivation of leaf-area index from quality of light on the forest floor. Ecology 1969, 50, 663–666.

- Chen, J.M. Evaluation of vegetation indices and a modified simple ratio for boreal applications. Can. J. Remote Sens. 1996, 22, 229–242.

- Goel, N.S.; Qin, W. Influences of canopy architecture on relationships between various vegetation indices and LAI and FPAR: A computer simulation. Remote Sens. Rev. 1994, 10, 309–347.

- Yang, Z.; Willis, P.; Mueller, R. Impact of band-ratio enhanced AWIFS image to crop classification accuracy. In Proceedings of the Pecora 17 Remote Sensing Symposium, Denver, CO, USA, 18–20 November 2008; pp. 18–20.

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Gitelson, A.A.; Merzlyak, M.N.; Chivkunova, O.B. Optical properties and nondestructive estimation of anthocyanin content in plant leaves. Photochem. Photobiol. 2001, 74, 38–45.

- Van der Linden, S.; Rabe, A.; Held, M.; Jakimow, B.; Leitão, P.; Okujeni, A.; Schwieder, M.; Suess, S.; Hostert, P. The EnMAP-box-a toolbox and application programming interface for EnMAP data processing. Remote Sens. 2015, 7, 11249–11266.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Richards, J.A.; Jia, X. Remote Sensing Digital Image Analysis; Springer: Berlin/Heidelberg, Germany, 1999; pp. 203–246.

- Cramer, J.S. The Origins of Logistic Regression; Technical Report 119; Tinbergen Institute: Amsterdam, The Netherlands, 2002; pp. 167–178.

- Ball, G.; Hall, D. ISODATA, a Novel Method of Data Analysis and Pattern Classification; Technical Report NTIS AD 699616; Stanford Research Institute: Stanford, CA, USA, 1965.

- Getis, A.; Ord, J.K. The analysis of spatial association by use of distance statistics. Geogr. Anal. 1992, 24, 189–206.

- Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46.

- Silverman, B.W. Density Estimation for Statistics and Data Analysis; Chapman and Hall: New York, NY, USA, 1986.

- Gitelson, A.A.; Merzlyak, M.N. Signature analysis of leaf reflectance spectra: Algorithm development for remote sensing of chlorophyll. J. Plant Physiol. 1996, 148, 494–500.

- Jiang, Z.; Dong, Z.; Jiang, W.; Yang, Y. Recognition of rice leaf diseases and wheat leaf diseases based on multi-task deep transfer learning. Comput. Electron. Agric. 2021, 186, 106184.

- Chen, X.; Zhou, G.; Chen, A.; Yi, J.; Zhang, W.; Hu, Y. Identification of tomato leaf diseases based on combination of ABCK-BWTR and B-ARNet. Comput. Electron. Agric. 2020, 178, 105730.

- Cabrera-Barona, P.F.; Jimenez, G.; Melo, P. Types of crime, poverty, population density and presence of police in the metropolitan district of Quito. ISPRS Int. J. Geo-Inf. 2019, 8, 558.

- Achu, A.L.; Aju, C.D.; Suresh, V.; Manoharan, T.P.; Reghunath, R. Spatio-temporal analysis of road accident incidents and delineation of hotspots using geospatial tools in Thrissur District, Kerala, India. KN J. Cartogr. Geogr. Inf. 2019, 69, 255–265.

- Rousta, I.; Doostkamian, M.; Haghighi, E.; Ghafarian Malamiri, H.R.; Yarahmadi, P. Analysis of spatial autocorrelation patterns of heavy and super-heavy rainfall in Iran. Adv. Atmos. Sci. 2017, 34, 1069–1081.

- Mazumdar, J.; Paul, S.K. A spatially explicit method for identification of vulnerable hotspots of Odisha, India from potential cyclones. Int. J. Disaster Risk Reduct. 2018, 27, 391–405.

- Watters, D.L.; Yoklavich, M.M.; Love, M.S.; Schroeder, D.M. Assessing marine debris in deep seafloor habitats off California. Mar. Pollut. Bull. 2010, 60, 131–138.

- Kumar, D.; Singh, A.; Jha, R.K.; Sahoo, S.K.; Jha, V. Using spatial statistics to identify the uranium hotspot in groundwater in the mid-eastern Gangetic plain, India. Environ. Earth Sci. 2018, 77, 702.

- Pinault, L.L.; Hunter, F.F. New highland distribution records of multiple Anopheles species in the Ecuadorian Andes. Malar. J. 2011, 10, 236.

- Schwartz, G.G.; Rundquist, B.C.; Simon, I.J.; Swartz, S.E. Geographic distributions of motor neuron disease mortality and well water use in U.S. counties. Amyotroph. Lateral Scler. Front. Degener. 2017, 18, 279–283.

- Liu, M.; Liu, M.; Li, Z.; Zhu, Y.; Liu, Y.; Wang, X.; Tao, L.; Guo, X. The spatial clustering analysis of COVID-19 and its associated factors in mainland china at the prefecture level. Sci. Total Environ. 2021, 777, 145992.

| Dataset | Classes | Sample Plants (14 July 2020) | Sample Pixels/Pixel (14 July 2020) | Sample Plants (23 August 2020) | Sample Pixels/Pixel (23 August 2020) |
|---|---|---|---|---|---|
| Training set | BFW-infected | 96 | 21,644 | 98 | 62,507 |
| Training set | Healthy | 84 | 23,901 | 95 | 61,923 |
| Testing set | BFW-infected | 43 | 16,803 | 48 | 32,035 |
| Testing set | Healthy | 55 | 13,966 | 51 | 32,501 |

| VIs | Calculation Formula | References |
|---|---|---|
| Normalized Difference Vegetation Index (NDVI) | | [28] |
| Soil Adjusted Vegetation Index (SAVI) | | [29] |
| Renormalized Difference Vegetation Index (RDVI) | | [30] |
| Wide Dynamic Range Vegetation Index (WDRVI) | | [31] |
| Transformed Difference Vegetation Index (TDVI) | | [32] |
| Simple Ratio Index (SRI) | | [33] |
| Modified Simple Ratio Index (MSRI) | | [34] |
| Non-Linear Index (NLI) | | [35] |
| Modified Non-Linear Index (MNLI) | | [36] |
| Green Difference Vegetation Index (GDVI) | | [37] |
| Anthocyanin Reflectance Index 1 (ARI1) | | [38] |
| Anthocyanin Reflectance Index 2 (ARI2) | | [38] |
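The formula column of the VI table did not survive extraction. As a minimal sketch, the snippet below computes three of the listed indices from band reflectance using their widely used textbook definitions (NDVI, SRI, and GDVI). These definitions, the function names, and the reflectance values are assumptions for illustration; they are not taken from the lost column.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - R) / (NIR + R)."""
    return (nir - red) / (nir + red)


def sri(nir, red):
    """Simple Ratio Index: NIR / R."""
    return nir / red


def gdvi(nir, green):
    """Green Difference Vegetation Index: NIR - G."""
    return nir - green


# Hypothetical canopy reflectance values (fractions of 1), chosen only to
# mimic "higher visible, lower NIR" reflectance for an infected canopy.
healthy = {"green": 0.08, "red": 0.04, "nir": 0.50}
infected = {"green": 0.10, "red": 0.08, "nir": 0.35}

for label, px in (("healthy", healthy), ("infected", infected)):
    print(
        label,
        round(ndvi(px["nir"], px["red"]), 3),
        round(sri(px["nir"], px["red"]), 3),
        round(gdvi(px["nir"], px["green"]), 3),
    )
```

With these made-up values, all three indices are lower for the infected pixel, which is the direction of change the conclusions describe for indices built from the red, NIR, and green bands.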

| Flight Dates | Inputs | Classes | SVM Precision/% | SVM Recall/% | SVM F-Score | RF Precision/% | RF Recall/% | RF F-Score | BPNN Precision/% | BPNN Recall/% | BPNN F-Score | LR Precision/% | LR Recall/% | LR F-Score |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 14 July 2020 | Three-visible-band images | BFW-infected | 99.07 | 90.61 | 0.95 | 96.80 | 92.73 | 0.95 | 95.51 | 95.30 | 0.95 | 99.96 | 79.27 | 0.88 |
| 14 July 2020 | Three-visible-band images | Healthy | 89.55 | 98.95 | 0.94 | 91.50 | 96.23 | 0.94 | 94.37 | 94.61 | 0.94 | 79.70 | 99.96 | 0.89 |
| 14 July 2020 | Five-multispectral-band images | BFW-infected | 98.39 | 96.30 | 0.97 | 98.10 | 96.95 | 0.98 | 97.89 | 97.07 | 0.97 | 99.29 | 93.93 | 0.97 |
| 14 July 2020 | Five-multispectral-band images | Healthy | 95.57 | 98.06 | 0.97 | 96.30 | 97.69 | 0.97 | 96.51 | 97.48 | 0.97 | 93.14 | 99.19 | 0.96 |
| 23 August 2020 | Three-visible-band images | BFW-infected | 93.66 | 96.15 | 0.95 | 87.83 | 96.42 | 0.92 | 93.50 | 95.68 | 0.95 | 99.70 | 78.30 | 0.88 |
| 23 August 2020 | Three-visible-band images | Healthy | 96.12 | 93.61 | 0.95 | 96.47 | 86.83 | 0.91 | 95.99 | 93.44 | 0.95 | 82.40 | 99.77 | 0.90 |
| 23 August 2020 | Five-multispectral-band images | BFW-infected | 95.95 | 98.12 | 0.97 | 95.95 | 97.37 | 0.97 | 95.64 | 97.71 | 0.97 | 98.08 | 95.04 | 0.97 |
| 23 August 2020 | Five-multispectral-band images | Healthy | 98.11 | 95.94 | 0.97 | 97.72 | 95.95 | 0.97 | 98.04 | 95.61 | 0.97 | 95.27 | 98.17 | 0.97 |

| Flight Dates | Inputs | Metric | SVM | RF | BPNN | LR |
|---|---|---|---|---|---|---|
| 14 July 2020 | Three-visible-band images | OA/% | 94.35 | 94.30 | 94.99 | 88.56 |
| 14 July 2020 | Three-visible-band images | Kappa coefficient | 0.89 | 0.89 | 0.90 | 0.77 |
| 14 July 2020 | Five-multispectral-band images | OA/% | 97.09 | 97.28 | 97.25 | 96.32 |
| 14 July 2020 | Five-multispectral-band images | Kappa coefficient | 0.94 | 0.95 | 0.95 | 0.93 |
| 14 July 2020 | | Contribution of the RE and NIR bands in OA/% | 2.74 | 2.98 | 2.26 | 7.76 |
| 23 August 2020 | Three-visible-band images | OA/% | 94.87 | 91.59 | 94.55 | 89.13 |
| 23 August 2020 | Three-visible-band images | Kappa coefficient | 0.90 | 0.83 | 0.89 | 0.78 |
| 23 August 2020 | Five-multispectral-band images | OA/% | 97.02 | 96.66 | 96.65 | 96.62 |
| 23 August 2020 | Five-multispectral-band images | Kappa coefficient | 0.94 | 0.93 | 0.93 | 0.93 |
| 23 August 2020 | | Contribution of the RE and NIR bands in OA/% | 2.15 | 5.07 | 2.10 | 7.49 |
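The "Contribution of the RE and NIR bands in OA" rows match the difference between the five-band OA and the three-band OA for each classifier. The short sketch below reproduces those rows from the tabulated OAs; the dictionary layout and the function name `band_contribution` are illustrative assumptions, not part of the original workflow.

```python
# Overall accuracies (%) copied from the supervised classification table.
OA_THREE_BAND = {
    "14 July 2020": {"SVM": 94.35, "RF": 94.30, "BPNN": 94.99, "LR": 88.56},
    "23 August 2020": {"SVM": 94.87, "RF": 91.59, "BPNN": 94.55, "LR": 89.13},
}
OA_FIVE_BAND = {
    "14 July 2020": {"SVM": 97.09, "RF": 97.28, "BPNN": 97.25, "LR": 96.32},
    "23 August 2020": {"SVM": 97.02, "RF": 96.66, "BPNN": 96.65, "LR": 96.62},
}


def band_contribution(oa_five, oa_three):
    """OA gain (percentage points) from adding the RE and NIR bands."""
    return {clf: round(oa_five[clf] - oa_three[clf], 2) for clf in oa_five}


for date in OA_FIVE_BAND:
    print(date, band_contribution(OA_FIVE_BAND[date], OA_THREE_BAND[date]))
```

LR gains the most from the two extra bands on both dates (more than 7 percentage points), while SVM and BPNN gain less than 3.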

| Classifier | Training Time Based on the Five-Band Images/min |
|---|---|
| SVM | 245 |
| RF | 22 |
| BPNN | 31 |
| LR | 2 |

| Classifier | Classes | Sample Pixels/Pixel (14 July 2020) | Area/ha (14 July 2020) | Percentage of the Studied Area/% (14 July 2020) | Sample Pixels/Pixel (23 August 2020) | Area/ha (23 August 2020) | Percentage of the Studied Area/% (23 August 2020) |
|---|---|---|---|---|---|---|---|
| SVM | BFW-infected | 2,780,478 | 0.53 | 33.13 | 2,724,278 | 0.52 | 32.50 |
| SVM | Healthy | 4,336,422 | 0.83 | 51.88 | 4,810,415 | 0.91 | 56.88 |
| RF | BFW-infected | 2,798,059 | 0.53 | 33.13 | 2,685,407 | 0.51 | 31.88 |
| RF | Healthy | 4,374,251 | 0.83 | 51.88 | 4,849,286 | 0.92 | 57.50 |
| BPNN | BFW-infected | 3,114,152 | 0.59 | 36.88 | 2,800,873 | 0.53 | 33.13 |
| BPNN | Healthy | 4,058,158 | 0.77 | 48.13 | 4,733,820 | 0.90 | 56.25 |
| LR | BFW-infected | 2,359,306 | 0.45 | 28.13 | 2,282,519 | 0.43 | 26.88 |
| LR | Healthy | 4,757,502 | 0.91 | 56.88 | 5,252,174 | 1.00 | 62.50 |

| Inputs | Classes | Precision/% (14 July 2020) | Recall/% (14 July 2020) | F-Score (14 July 2020) | OA/% (14 July 2020) | Kappa Coefficient (14 July 2020) | Precision/% (23 August 2020) | Recall/% (23 August 2020) | F-Score (23 August 2020) | OA/% (23 August 2020) | Kappa Coefficient (23 August 2020) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MSRI | BFW-infected | 99.04 | 96.18 | 0.98 | 97.58 | 0.95 | 94.23 | 94.49 | 0.94 | 94.28 | 0.89 |
| MSRI | Healthy | 96.15 | 99.03 | 0.98 | 97.58 | 0.95 | 94.63 | 94.07 | 0.94 | 94.28 | 0.89 |
| SRI | BFW-infected | 98.48 | 96.66 | 0.98 | 97.54 | 0.95 | 96.86 | 94.72 | 0.96 | 95.81 | 0.92 |
| SRI | Healthy | 96.60 | 98.45 | 0.98 | 97.54 | 0.95 | 94.80 | 96.91 | 0.96 | 95.81 | 0.92 |
| WDRVI | BFW-infected | 99.84 | 94.24 | 0.97 | 96.99 | 0.94 | 95.47 | 94.84 | 0.95 | 95.14 | 0.90 |
| WDRVI | Healthy | 94.36 | 99.84 | 0.97 | 96.99 | 0.94 | 95.03 | 95.45 | 0.95 | 95.14 | 0.90 |
| NDVI | BFW-infected | 99.96 | 92.86 | 0.96 | 96.34 | 0.93 | 98.40 | 92.67 | 0.95 | 95.55 | 0.91 |
| NDVI | Healthy | 93.10 | 99.96 | 0.96 | 96.34 | 0.93 | 93.20 | 98.45 | 0.96 | 95.55 | 0.91 |
| TDVI | BFW-infected | 99.96 | 92.68 | 0.96 | 96.25 | 0.93 | 98.75 | 91.94 | 0.95 | 95.36 | 0.91 |
| TDVI | Healthy | 92.94 | 99.96 | 0.96 | 96.25 | 0.93 | 92.59 | 98.80 | 0.96 | 95.36 | 0.91 |
| GDVI | BFW-infected | 98.95 | 83.30 | 0.90 | 91.05 | 0.82 | 98.48 | 96.01 | 0.97 | 97.24 | 0.95 |
| GDVI | Healthy | 85.12 | 99.09 | 0.92 | 91.05 | 0.82 | 96.27 | 98.49 | 0.97 | 97.24 | 0.95 |
| RDVI | BFW-infected | 99.22 | 94.04 | 0.97 | 91.54 | 0.83 | 99.65 | 92.72 | 0.96 | 96.17 | 0.92 |
| RDVI | Healthy | 85.72 | 99.32 | 0.92 | 91.54 | 0.83 | 93.33 | 99.65 | 0.96 | 96.17 | 0.92 |
| SAVI | BFW-infected | 99.31 | 83.47 | 0.91 | 91.29 | 0.83 | 99.68 | 92.76 | 0.96 | 96.21 | 0.92 |
| SAVI | Healthy | 85.29 | 99.39 | 0.92 | 91.29 | 0.83 | 93.36 | 99.68 | 0.96 | 96.21 | 0.92 |
| NLI | BFW-infected | 100.00 | 86.86 | 0.93 | 93.31 | 0.87 | 99.70 | 91.80 | 0.96 | 95.73 | 0.92 |
| NLI | Healthy | 88.01 | 100.00 | 0.94 | 93.31 | 0.87 | 92.53 | 99.69 | 0.96 | 95.73 | 0.92 |
| MNLI | BFW-infected | 99.03 | 82.66 | 0.90 | 90.76 | 0.82 | 99.23 | 91.96 | 0.95 | 95.60 | 0.91 |
| MNLI | Healthy | 84.65 | 99.16 | 0.91 | 90.76 | 0.82 | 92.64 | 99.26 | 0.96 | 95.60 | 0.91 |
| ARI2 | BFW-infected | 91.49 | 95.46 | 0.93 | 93.17 | 0.86 | 80.41 | 93.84 | 0.87 | 85.43 | 0.71 |
| ARI2 | Healthy | 95.07 | 90.80 | 0.93 | 93.17 | 0.86 | 92.77 | 76.96 | 0.84 | 85.43 | 0.71 |
| ARI1 | BFW-infected | 62.97 | 82.91 | 0.72 | 66.48 | 0.33 | 63.91 | 85.53 | 0.73 | 68.50 | 0.37 |
| ARI1 | Healthy | 65.67 | 38.18 | 0.48 | 66.48 | 0.33 | 84.03 | 74.26 | 0.79 | 68.50 | 0.37 |
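The F-score column can be related to the precision and recall columns with a short calculation. The sketch below assumes the F-score is the usual harmonic mean of precision and recall (F1); the accuracy assessment text that would define it is not reproduced in this section, so treat that definition and the helper name `f_score` as assumptions.

```python
def f_score(precision_pct, recall_pct):
    """Harmonic mean of precision and recall, returned as a fraction of 1.

    Both inputs are percentages, as listed in the table.
    """
    p = precision_pct / 100.0
    r = recall_pct / 100.0
    return 2.0 * p * r / (p + r)


# Rows copied from the table (14 July 2020 columns).
rows = {
    "MSRI, BFW-infected": (99.04, 96.18),
    "GDVI, BFW-infected": (98.95, 83.30),
    "ARI1, Healthy": (65.67, 38.18),
}
for name, (precision, recall) in rows.items():
    print(name, round(f_score(precision, recall), 2))
```

Because the harmonic mean is pulled toward the smaller of the two inputs, a class with high precision but low recall, such as the healthy class under ARI1, ends up with a low F-score.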

| Inputs | Classes | Sample Pixels/Pixel (14 July 2020) | Area/ha (14 July 2020) | Percentage of Study Area/% (14 July 2020) | Sample Pixels/Pixel (23 August 2020) | Area/ha (23 August 2020) | Percentage of Study Area/% (23 August 2020) |
|---|---|---|---|---|---|---|---|
| MSRI | BFW-infected | 2,284,183 | 0.43 | 26.88 | 2,563,659 | 0.49 | 30.63 |
| MSRI | Healthy | 4,888,127 | 0.93 | 58.13 | 4,965,306 | 0.94 | 58.75 |
| WDRVI | BFW-infected | 1,797,986 | 0.34 | 21.25 | 2,525,353 | 0.48 | 30.00 |
| WDRVI | Healthy | 5,374,324 | 1.02 | 63.75 | 5,009,340 | 0.95 | 59.38 |
| NDVI | BFW-infected | 1,612,276 | 0.31 | 19.38 | 2,080,738 | 0.39 | 24.38 |
| NDVI | Healthy | 5,560,034 | 1.05 | 65.63 | 5,453,955 | 1.04 | 65.00 |
| GDVI | BFW-infected | 2,549,798 | 0.48 | 30.00 | 2,853,141 | 0.54 | 33.75 |
| GDVI | Healthy | 4,622,512 | 0.88 | 55.00 | 4,681,552 | 0.89 | 55.63 |

| Methods | VI | Classes | Precision/% (14 July 2020) | Recall/% (14 July 2020) | OA/% (14 July 2020) | Kappa Coefficient (14 July 2020) | Precision/% (23 August 2020) | Recall/% (23 August 2020) | OA/% (23 August 2020) | Kappa Coefficient (23 August 2020) |
|---|---|---|---|---|---|---|---|---|---|---|
| HA | MSRI | BFW-infected | 99.02 | 96.72 | 97.86 | 0.96 | 94.23 | 94.49 | 94.28 | 0.89 |
| HA | MSRI | Healthy | 96.74 | 99.02 | 97.86 | 0.96 | 94.63 | 94.07 | 94.28 | 0.89 |
| HA | WDRVI | BFW-infected | 99.84 | 94.24 | 96.99 | 0.94 | 95.47 | 94.84 | 95.14 | 0.90 |
| HA | WDRVI | Healthy | 94.36 | 99.84 | 96.99 | 0.94 | 95.03 | 95.45 | 95.14 | 0.90 |
| HA | NDVI | BFW-infected | 99.96 | 93.33 | 96.61 | 0.93 | 98.40 | 92.67 | 95.55 | 0.91 |
| HA | NDVI | Healthy | 93.64 | 99.96 | 96.61 | 0.93 | 93.20 | 98.45 | 95.55 | 0.91 |
| HA | GDVI | BFW-infected | 99.12 | 82.91 | 91.01 | 0.82 | 98.48 | 96.01 | 97.24 | 0.95 |
| HA | GDVI | Healthy | 85.08 | 99.25 | 91.01 | 0.82 | 96.27 | 98.49 | 97.24 | 0.95 |
| HA | Average OAs | | | | 95.62 | | | | 95.55 | |
| ISODATA | MSRI | BFW-infected | 99.73 | 3.13 | 53.21 | 0.03 | 80.04 | 67.18 | 75.13 | 0.50 |
| ISODATA | MSRI | Healthy | 52.49 | 99.99 | 53.21 | 0.03 | 71.56 | 83.14 | 75.13 | 0.50 |
| ISODATA | WDRVI | BFW-infected | 100.00 | 0.02 | 49.13 | 0.0002 | 99.93 | 37.98 | 67.33 | 0.37 |
| ISODATA | WDRVI | Healthy | 49.12 | 100.00 | 49.13 | 0.0002 | 59.18 | 99.97 | 67.33 | 0.37 |
| ISODATA | NDVI | BFW-infected | 100.00 | 0.20 | 51.84 | 0.003 | 99.60 | 63.67 | 81.65 | 0.63 |
| ISODATA | NDVI | Healthy | 51.84 | 99.99 | 51.84 | 0.003 | 74.17 | 99.74 | 81.65 | 0.63 |
| ISODATA | GDVI | BFW-infected | 99.62 | 2.25 | 52.78 | 0.02 | 61.79 | 99.97 | 69.18 | 0.39 |
| ISODATA | GDVI | Healthy | 52.27 | 99.99 | 52.78 | 0.02 | 99.92 | 38.60 | 69.18 | 0.39 |
| ISODATA | Average OAs | | | | 51.74 | | | | 73.32 | |
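The "Average OAs" rows are consistent with the arithmetic mean of the four per-VI OAs for each method and date. The following sketch recomputes them from the tabulated values; it uses `decimal` with half-up rounding to avoid binary floating-point artifacts at the second decimal place. The function name `average_oa` and the data layout are illustrative assumptions.

```python
from decimal import Decimal, ROUND_HALF_UP

# Per-VI overall accuracies (%) in the order MSRI, WDRVI, NDVI, GDVI,
# copied from the HA versus ISODATA comparison table.
OAS = {
    ("HA", "14 July 2020"): ["97.86", "96.99", "96.61", "91.01"],
    ("HA", "23 August 2020"): ["94.28", "95.14", "95.55", "97.24"],
    ("ISODATA", "14 July 2020"): ["53.21", "49.13", "51.84", "52.78"],
    ("ISODATA", "23 August 2020"): ["75.13", "67.33", "81.65", "69.18"],
}


def average_oa(values):
    """Arithmetic mean of OA strings, rounded half-up to two decimals."""
    total = sum(Decimal(v) for v in values)
    mean = total / Decimal(len(values))
    return mean.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


for (method, date), values in OAS.items():
    print(method, date, average_oa(values))
```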

| Models | Classifier/VI | Classes | Ground Truth: BFW Infected (14 July 2020) | Ground Truth: Healthy (14 July 2020) | Commission Error/% (14 July 2020) | Omission Error/% (14 July 2020) | OA/% (14 July 2020) | Ground Truth: BFW Infected (23 August 2020) | Ground Truth: Healthy (23 August 2020) | Commission Error/% (23 August 2020) | Omission Error/% (23 August 2020) | OA/% (23 August 2020) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Supervised methods | SVM | BFW-infected | 124 | 0 | 0.00 | 10.79 | 94.60 | 123 | 0 | 0.00 | 15.75 | 92.12 |
| Supervised methods | SVM | Healthy | 15 | 139 | 9.74 | 0.00 | 94.60 | 23 | 146 | 13.61 | 0.00 | 92.12 |
| Supervised methods | RF | BFW-infected | 120 | 0 | 0.00 | 13.67 | 93.17 | 123 | 0 | 0.00 | 15.75 | 92.12 |
| Supervised methods | RF | Healthy | 19 | 139 | 12.03 | 0.00 | 93.17 | 23 | 146 | 13.61 | 0.00 | 92.12 |
| Supervised methods | BPNN | BFW-infected | 123 | 0 | 0.00 | 11.51 | 94.24 | 123 | 0 | 0.00 | 15.75 | 92.12 |
| Supervised methods | BPNN | Healthy | 16 | 139 | 10.32 | 0.00 | 94.24 | 23 | 146 | 13.61 | 0.00 | 92.12 |
| Supervised methods | Average OAs | | | | | | 94.00 | | | | | 92.12 |
| HA | MSRI | BFW-infected | 118 | 0 | 0.00 | 15.11 | 92.45 | 132 | 0 | 0.00 | 9.59 | 95.21 |
| HA | MSRI | Healthy | 21 | 139 | 13.13 | 0.00 | 92.45 | 14 | 146 | 8.75 | 0.00 | 95.21 |
| HA | WDRVI | BFW-infected | 108 | 0 | 0.00 | 22.30 | 88.85 | 131 | 0 | 0.00 | 10.27 | 94.86 |
| HA | WDRVI | Healthy | 31 | 139 | 18.24 | 0.00 | 88.85 | 15 | 146 | 9.32 | 0.00 | 94.86 |
| HA | NDVI | BFW-infected | 104 | 0 | 0.00 | 25.18 | 87.41 | 120 | 0 | 0.00 | 17.81 | 91.10 |
| HA | NDVI | Healthy | 35 | 139 | 20.11 | 0.00 | 87.41 | 26 | 146 | 15.12 | 0.00 | 91.10 |
| HA | GDVI | BFW-infected | 119 | 0 | 0.00 | 14.39 | 92.81 | 131 | 0 | 0.00 | 10.27 | 94.86 |
| HA | GDVI | Healthy | 20 | 139 | 12.58 | 0.00 | 92.81 | 15 | 146 | 9.32 | 0.00 | 94.86 |
| HA | Average OAs | | | | | | 90.38 | | | | | 94.01 |
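The plant-scale commission error, omission error, and OA values can be recomputed from the plant counts in the table. The sketch below assumes that rows are the classified classes and columns are the ground-truth classes, which is the reading that reproduces the tabulated percentages; the function name `error_rates` and the matrix layout are illustrative.

```python
def error_rates(matrix):
    """Commission error, omission error, and OA (all in %) from a confusion matrix.

    matrix[i][j] is the number of plants classified as class i whose
    ground truth is class j. Every row and column must be non-empty
    (a zero total would cause a division by zero).
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n))
    commission, omission = [], []
    for i in range(n):
        row_total = sum(matrix[i])                       # plants classified as i
        col_total = sum(matrix[r][i] for r in range(n))  # plants truly in class i
        commission.append(100.0 * (row_total - matrix[i][i]) / row_total)
        omission.append(100.0 * (col_total - matrix[i][i]) / col_total)
    return {
        "commission": commission,
        "omission": omission,
        "OA": 100.0 * correct / total,
    }


# SVM, 14 July 2020: class order is (BFW-infected, Healthy).
svm_july = [
    [124, 0],   # classified as BFW-infected
    [15, 139],  # classified as Healthy
]
result = error_rates(svm_july)
print([round(v, 2) for v in result["commission"]])
print([round(v, 2) for v in result["omission"]])
print(round(result["OA"], 2))
```

In every row of the table, no healthy plant is classified as infected, so all errors are infected plants missed as healthy. This is why the commission error of the infected class and the omission error of the healthy class are always 0.00.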

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, S.; Li, X.; Ba, Y.; Lyu, X.; Zhang, M.; Li, M. Banana Fusarium Wilt Disease Detection by Supervised and Unsupervised Methods from UAV-Based Multispectral Imagery. Remote Sens. 2022, 14, 1231. https://doi.org/10.3390/rs14051231
