Fusion and Classification of SAR and Optical Data Using Multi-Image Color Components with Differential Gradients

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area and Satellite Data

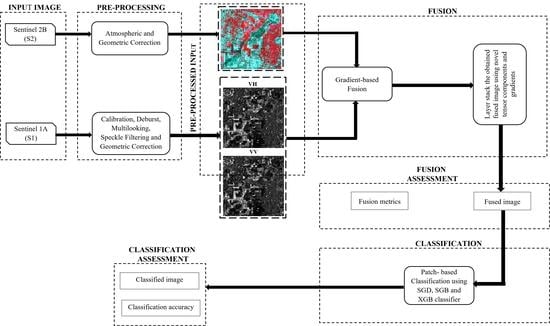

2.2. Proposed Methodology

2.2.1. Preprocessing

2.2.2. Fusion

1. Absolute difference
2. Sobel-based gradients of the S1 and S2 images
3. Color components of the gradients in the x, y, and z directions
4. Application of the fusion rule to the SAR and optical images
5. Computation of the final gradient image from the gradient color components
6. Computation of the final fused image
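The steps above are listed without formulas. The NumPy sketch below illustrates only the general idea under explicit assumptions: Sobel derivatives are computed per band (step 2), and a multi-image gradient magnitude in the sense of Di Zenzo (1986), the square root of the largest eigenvalue of the per-pixel structure tensor summed over bands, stands in for steps 3 and 5. Step 1 (absolute difference) is not modelled. `fuse_gradient_weighted` is a hypothetical gradient-weighted blending rule, not the authors' fusion rule, and S1 is assumed to be a single co-registered VV or VH band of shape `(1, rows, cols)`. All function names are illustrative.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T


def sobel_xy(band):
    """Sobel derivatives of a 2-D band along x (columns) and y (rows)."""
    rows, cols = band.shape
    padded = np.pad(band.astype(float), 1, mode="edge")  # replicate border pixels
    gx = np.zeros((rows, cols))
    gy = np.zeros((rows, cols))
    for i in range(3):
        for j in range(3):
            window = padded[i:i + rows, j:j + cols]  # neighbour at offset (i-1, j-1)
            gx += SOBEL_X[i, j] * window
            gy += SOBEL_Y[i, j] * window
    return gx, gy


def multi_image_gradient(stack):
    """Di Zenzo-style gradient magnitude of a (bands, rows, cols) stack."""
    gxx = np.zeros(stack.shape[1:])
    gyy = np.zeros(stack.shape[1:])
    gxy = np.zeros(stack.shape[1:])
    for band in stack:  # accumulate the 2x2 structure tensor over all bands
        gx, gy = sobel_xy(band)
        gxx += gx * gx
        gyy += gy * gy
        gxy += gx * gy
    # Largest eigenvalue of [[gxx, gxy], [gxy, gyy]] at every pixel.
    lam = 0.5 * (gxx + gyy + np.sqrt((gxx - gyy) ** 2 + 4.0 * gxy ** 2))
    return np.sqrt(lam)


def fuse_gradient_weighted(s1, s2, eps=1e-12):
    """HYPOTHETICAL rule: blend S1 and S2 per pixel by relative gradient strength.

    s1: (1, rows, cols) SAR band; s2: (bands, rows, cols) optical bands.
    """
    g1 = multi_image_gradient(s1)
    g2 = multi_image_gradient(s2)
    w1 = g1 / (g1 + g2 + eps)  # share of edge energy contributed by SAR
    return w1 * s1 + (1.0 - w1) * s2  # S1 is broadcast over every S2 band
```

With this weighting, flat SAR regions leave the optical bands unchanged, while pixels where the SAR band has strong edges pull the result toward the SAR values.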

2.2.3. Classification

1. Stochastic Gradient Descent (SGD) Classifier
2. Stochastic Gradient Boosting (SGB) Classifier
3. Extreme Gradient Boosting (XGB) Classifier
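Common off-the-shelf implementations of these three classifiers are scikit-learn's `SGDClassifier`, scikit-learn's `GradientBoostingClassifier` with `subsample < 1.0` (which yields Friedman's stochastic gradient boosting), and `XGBClassifier` from the `xgboost` package; the settings the authors used are not stated in this section. To show what an SGD classifier actually optimizes, here is a dependency-light NumPy sketch of a one-vs-rest linear SVM trained by stochastic gradient descent on the L2-regularised hinge loss. The learning rate, regularization strength, and epoch count are illustrative assumptions, and the function names are hypothetical.

```python
import numpy as np


def train_sgd_ovr(X, y, n_classes, lr=0.05, alpha=1e-4, epochs=30, seed=0):
    """One-vs-rest linear SVM fitted by SGD on the L2-regularised hinge loss."""
    rng = np.random.default_rng(seed)
    W = np.zeros((n_classes, X.shape[1]))
    b = np.zeros(n_classes)
    for _ in range(epochs):
        for idx in rng.permutation(len(X)):  # one randomly ordered sample per update
            x = X[idx]
            # +1 for the sample's own class, -1 for every other class (one-vs-rest).
            t = np.where(np.arange(n_classes) == y[idx], 1.0, -1.0)
            active = t * (W @ x + b) < 1.0  # classifiers whose hinge loss is non-zero
            W *= 1.0 - lr * alpha  # shrinkage step from the L2 penalty
            W[active] += lr * t[active][:, None] * x
            b[active] += lr * t[active]
    return W, b


def predict(W, b, X):
    """Assign each row of X to the class with the highest decision score."""
    return np.argmax(X @ W.T + b, axis=1)
```

Because each update uses a single sample, SGD is sensitive to feature scale, so standardizing the features (zero mean, unit variance) before training is advisable.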

3. Results

3.1. Fusion

1. Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS) measures fusion quality as a normalized average error over the bands of the fused image. Higher ERGAS values indicate greater distortion in the fused image, whereas lower values indicate greater similarity between the reference and fused images.
2. Spectral Angle Mapper (SAM) computes the angle between corresponding pixel vectors of the reference and fused images. A SAM value close or equal to zero indicates the absence of spectral distortion.
3. Relative Average Spectral Error (RASE) characterizes the average fusion performance across the spectral bands; lower RASE values indicate higher spectral quality of the fused image.
4. Universal Image Quality Index (UIQI) measures how the reference image is transformed into the fused image by combining loss of correlation, luminance distortion, and contrast distortion. Its range is −1 to 1, and values close to 1 indicate similarity of the reference and fused images.
5. Structural Similarity Index (SSIM) compares local patterns of pixel intensities in the reference and fused images. Its range is −1 to 1, and values closer to 1 indicate similarity of the reference and fused images.
6. Peak Signal-to-Noise Ratio (PSNR) is the ratio, in decibels, of the squared peak pixel value to the mean squared error between the fused and reference images. Higher values indicate better fusion, that is, greater similarity of the reference and fused images.
7. Correlation Coefficient (CC) measures the similarity of spectral features between the reference and fused images. Values closer to 1 indicate similarity of the reference and fused images.
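The metric definitions above are qualitative. The NumPy sketch below uses the common textbook forms of six of the seven metrics (SSIM is omitted; `skimage.metrics.structural_similarity` in scikit-image is one ready-made implementation). It assumes co-registered reference and fused images stored as `(bands, rows, cols)` arrays. The ERGAS resolution ratio `ratio` (h/l, default 1), SAM in degrees, the PSNR `peak` default, and computing UIQI once over the whole image rather than over sliding windows are all assumptions, since the exact settings behind the reported values are not given in this section.

```python
import numpy as np


def _bands(a):
    """View an image as a (bands, pixels) float array."""
    a = np.asarray(a, dtype=float)
    return a.reshape(a.shape[0], -1)


def rmse_per_band(ref, fus):
    return np.sqrt(np.mean((_bands(ref) - _bands(fus)) ** 2, axis=1))


def ergas(ref, fus, ratio=1.0):
    """100 * ratio * sqrt(mean_k (RMSE_k / mean_k)^2); ASSUMPTION: ratio = h/l = 1."""
    mu = _bands(ref).mean(axis=1)
    return 100.0 * ratio * np.sqrt(np.mean((rmse_per_band(ref, fus) / mu) ** 2))


def rase(ref, fus):
    """100 / mean(reference) * sqrt(mean_k RMSE_k^2)."""
    return 100.0 / _bands(ref).mean() * np.sqrt(np.mean(rmse_per_band(ref, fus) ** 2))


def sam_degrees(ref, fus, eps=1e-12):
    """Mean angle between per-pixel band vectors, in degrees (ASSUMPTION: degrees)."""
    r, f = _bands(ref), _bands(fus)
    cos = (r * f).sum(axis=0) / (np.linalg.norm(r, axis=0) * np.linalg.norm(f, axis=0) + eps)
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))).mean()


def psnr(ref, fus, peak=None):
    """10 log10(peak^2 / MSE); ASSUMPTION: peak defaults to the reference maximum."""
    r, f = _bands(ref), _bands(fus)
    peak = r.max() if peak is None else peak
    return 10.0 * np.log10(peak ** 2 / np.mean((r - f) ** 2))


def cc(ref, fus):
    """Pearson correlation over all pixels and bands."""
    return np.corrcoef(_bands(ref).ravel(), _bands(fus).ravel())[0, 1]


def uiqi_global(ref, fus):
    """Wang-Bovik Q computed once over the whole image (no sliding window)."""
    x, y = _bands(ref).ravel(), _bands(fus).ravel()
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    return 4.0 * cov * mx * my / ((x.var() + y.var()) * (mx ** 2 + my ** 2))
```

Identical inputs give ERGAS = RASE = SAM = 0 and CC = UIQI = 1, which matches the "ideal value" descriptions in the list above; PSNR diverges for identical inputs because the mean squared error is zero.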

3.2. Classification

4. Discussion

4.1. Fusion

4.2. Classification

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Class Number | Class Name | Training Samples | Testing Samples |
|---|---|---|---|
| 1 | Fallow land | 663 | 221 |
| 2 | Built-up-area | 1376 | 459 |
| 3 | Dense Vegetation | 217 | 72 |
| 4 | Fenugreek | 126 | 42 |
| 5 | Fodder | 85 | 28 |
| 6 | Gram | 533 | 178 |
| 7 | Sparse Vegetation | 2058 | 686 |
| 8 | Wheat | 773 | 258 |
| 9 | Mustard | 310 | 103 |
| 10 | Oat | 337 | 112 |
| 11 | Pea | 371 | 124 |
| 12 | Spinach | 179 | 60 |
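The sample counts in this table are consistent with a roughly 75/25 per-class split between training and testing pixels. This is an observation from the numbers rather than a procedure stated in this section; the short Python check below recomputes the totals and per-class training shares.

```python
# Training/testing counts copied from the class table above.
counts = {
    "Fallow land": (663, 221),
    "Built-up-area": (1376, 459),
    "Dense Vegetation": (217, 72),
    "Fenugreek": (126, 42),
    "Fodder": (85, 28),
    "Gram": (533, 178),
    "Sparse Vegetation": (2058, 686),
    "Wheat": (773, 258),
    "Mustard": (310, 103),
    "Oat": (337, 112),
    "Pea": (371, 124),
    "Spinach": (179, 60),
}

total_train = sum(tr for tr, _ in counts.values())
total_test = sum(te for _, te in counts.values())
# Fraction of each class's labelled pixels assigned to training.
train_share = {name: tr / (tr + te) for name, (tr, te) in counts.items()}

print(total_train, total_test)  # 7028 2343
print(round(total_train / (total_train + total_test), 3))  # 0.75
print(min(train_share.values()), max(train_share.values()))
```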

| Fusion Approach | Polarization | ERGAS | SAM | UIQI | SSIM | CC | RASE | PSNR |
|---|---|---|---|---|---|---|---|---|
| KLT | VH | 54.17 | 126.28 | −1.63 | 4.49 | 0.01 | −175.9 | −49.02 |
| KLT | VV | 56.32 | 131.6 | 3.09 | 4.48 | 0.05 | −165.91 | −53.96 |
| GIV | VH | 6.04 | 4.94 | 0.79 | 0.47 | 0.96 | 24.17 | 36.47 |
| GIV | VV | 5.75 | 4.16 | 0.83 | 0.79 | 0.97 | 22.98 | 38.56 |
| Proposed | VH | 6.62 | 2.94 | 0.86 | 0.78 | 0.98 | 26.47 | 38.27 |
| Proposed | VV | 6.01 | 3.11 | 0.88 | 0.85 | 0.98 | 24.05 | 39.80 |

| Classifier (Patch Size = 3) | Polarization | Overall Accuracy (%) | Kappa Value |
|---|---|---|---|
| SGD | VH | 71.16 | 0.65 |
| SGD | VV | 71.39 | 0.66 |
| SGB | VH | 91.42 | 0.89 |
| SGB | VV | 91.89 | 0.90 |
| XGB | VH | 93.72 | 0.92 |
| XGB | VV | 94.75 | 0.93 |
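The accuracy tables report overall accuracy and Cohen's kappa. As a reminder of how the two relate, the sketch below computes both from a confusion matrix whose rows are reference classes and whose columns are predicted classes. The 2 × 2 matrix is a toy example, not data from this study, and the function name is illustrative.

```python
import numpy as np


def overall_accuracy_and_kappa(confusion):
    """Overall accuracy and Cohen's kappa from a square confusion matrix.

    Rows are reference classes, columns are predicted classes.
    """
    cm = np.asarray(confusion, dtype=float)
    n = cm.sum()
    p_observed = np.trace(cm) / n  # overall accuracy: share of correctly labelled pixels
    # Agreement expected by chance, from the row and column marginals.
    p_chance = (cm.sum(axis=0) @ cm.sum(axis=1)) / n ** 2
    kappa = (p_observed - p_chance) / (1.0 - p_chance)
    return p_observed, kappa


oa, kappa = overall_accuracy_and_kappa([[45, 5], [5, 45]])
print(round(100 * oa, 2), round(kappa, 2))  # 90.0 0.8
```

Kappa discounts the agreement a random labelling with the same class proportions would achieve, which is why it is lower than overall accuracy whenever chance agreement is non-zero.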

| Input Data (XGB Classifier, Patch Size = 3) | Overall Accuracy (%) | Kappa Value (×100) |
|---|---|---|
| S2 | 88.49 | 86.25 |
| S2 layer stacked with S1 (VV) | 90.01 | 88.08 |
| S2 layer stacked with S1 (VH) | 89.43 | 87.38 |
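The "S2 layer stacked with S1" inputs and the "patch size = 3" setting can be read as: concatenate the optical and SAR bands into one array, then use each labelled pixel's 3 × 3 neighbourhood across all bands as its feature vector. That reading is an assumption, as is the reflect padding used at image borders; the NumPy sketch below, with hypothetical function names, shows one way to build such features.

```python
import numpy as np


def layer_stack(s2, s1):
    """Concatenate S2 and S1 bands along the band axis: (b2 + b1, rows, cols)."""
    return np.concatenate([s2, s1], axis=0)


def patch_features(stack, rows, cols, patch=3):
    """Flatten the patch x patch neighbourhood (all bands) around each given pixel."""
    half = patch // 2
    # ASSUMPTION: reflect padding so border pixels also get full patches.
    padded = np.pad(stack, ((0, 0), (half, half), (half, half)), mode="reflect")
    # Pixel (r, c) sits at (r + half, c + half) in the padded array.
    feats = [padded[:, r:r + patch, c:c + patch].ravel() for r, c in zip(rows, cols)]
    return np.stack(feats)
```

Each row of the returned matrix has `bands × patch × patch` entries and can be passed to any of the three classifiers.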

| Class Name | S2 (%) | S2 + VH (%) | S2 + VV (%) | Proposed Approach, VH (%) | Proposed Approach, VV (%) |
|---|---|---|---|---|---|
| Fallow land | 93.00 | 93.00 | 95.00 | 94.00 | 96.00 |
| Built-up-area | 99.00 | 99.00 | 99.00 | 100.00 | 100.00 |
| Dense Vegetation | 86.00 | 93.00 | 89.00 | 88.00 | 97.00 |
| Fenugreek | 38.00 | 38.00 | 61.00 | 56.00 | 62.00 |
| Fodder | 59.00 | 73.00 | 62.00 | 91.00 | 91.00 |
| Gram | 81.00 | 82.00 | 83.00 | 93.00 | 92.00 |
| Sparse Vegetation | 99.00 | 99.00 | 99.00 | 100.00 | 99.00 |
| Wheat | 77.00 | 82.00 | 78.00 | 87.00 | 87.00 |
| Mustard | 70.00 | 75.00 | 60.00 | 84.00 | 89.00 |
| Oat | 71.00 | 80.00 | 84.00 | 79.00 | 87.00 |
| Pea | 83.00 | 87.00 | 81.00 | 95.00 | 91.00 |
| Spinach | 71.00 | 57.00 | 68.00 | 92.00 | 93.00 |
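To summarize this table, one can compute the per-class change from S2 alone to the proposed approach with VV, together with the unweighted mean of the class-wise accuracies. These figures are derived directly from the table; the unweighted class mean is not the same quantity as the overall accuracy reported in the other tables, because it ignores class sizes.

```python
# Class-wise accuracies (%) copied from the table above: S2 only vs. proposed (VV).
s2_only = {"Fallow land": 93, "Built-up-area": 99, "Dense Vegetation": 86,
           "Fenugreek": 38, "Fodder": 59, "Gram": 81, "Sparse Vegetation": 99,
           "Wheat": 77, "Mustard": 70, "Oat": 71, "Pea": 83, "Spinach": 71}
proposed_vv = {"Fallow land": 96, "Built-up-area": 100, "Dense Vegetation": 97,
               "Fenugreek": 62, "Fodder": 91, "Gram": 92, "Sparse Vegetation": 99,
               "Wheat": 87, "Mustard": 89, "Oat": 87, "Pea": 91, "Spinach": 93}

# Percentage-point change per class when moving from S2 alone to the fused input.
gain = {name: proposed_vv[name] - s2_only[name] for name in s2_only}
largest = max(gain, key=gain.get)
mean_s2 = sum(s2_only.values()) / len(s2_only)
mean_vv = sum(proposed_vv.values()) / len(proposed_vv)

print(largest, gain[largest])  # Fodder 32
print(round(mean_s2, 2), round(mean_vv, 2))  # 77.25 90.33
```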

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shakya, A.; Biswas, M.; Pal, M. Fusion and Classification of SAR and Optical Data Using Multi-Image Color Components with Differential Gradients. Remote Sens. 2023, 15, 274. https://doi.org/10.3390/rs15010274


Chicago/Turabian Style: Shakya, Achala, Mantosh Biswas, and Mahesh Pal. 2023. "Fusion and Classification of SAR and Optical Data Using Multi-Image Color Components with Differential Gradients" Remote Sensing 15, no. 1: 274. https://doi.org/10.3390/rs15010274