An Overview of Key SLAM Technologies for Underwater Scenes

Abstract

:1. Introduction

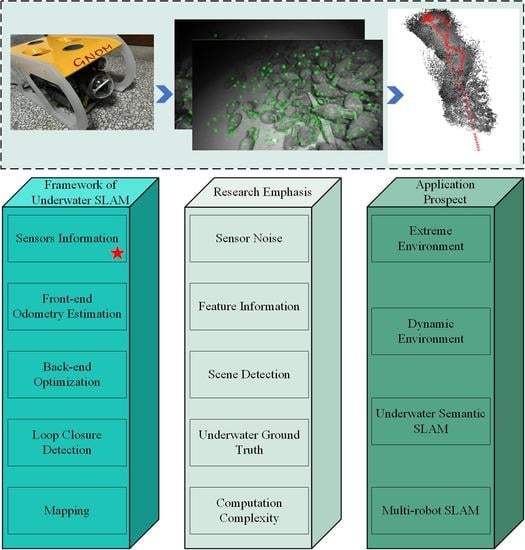

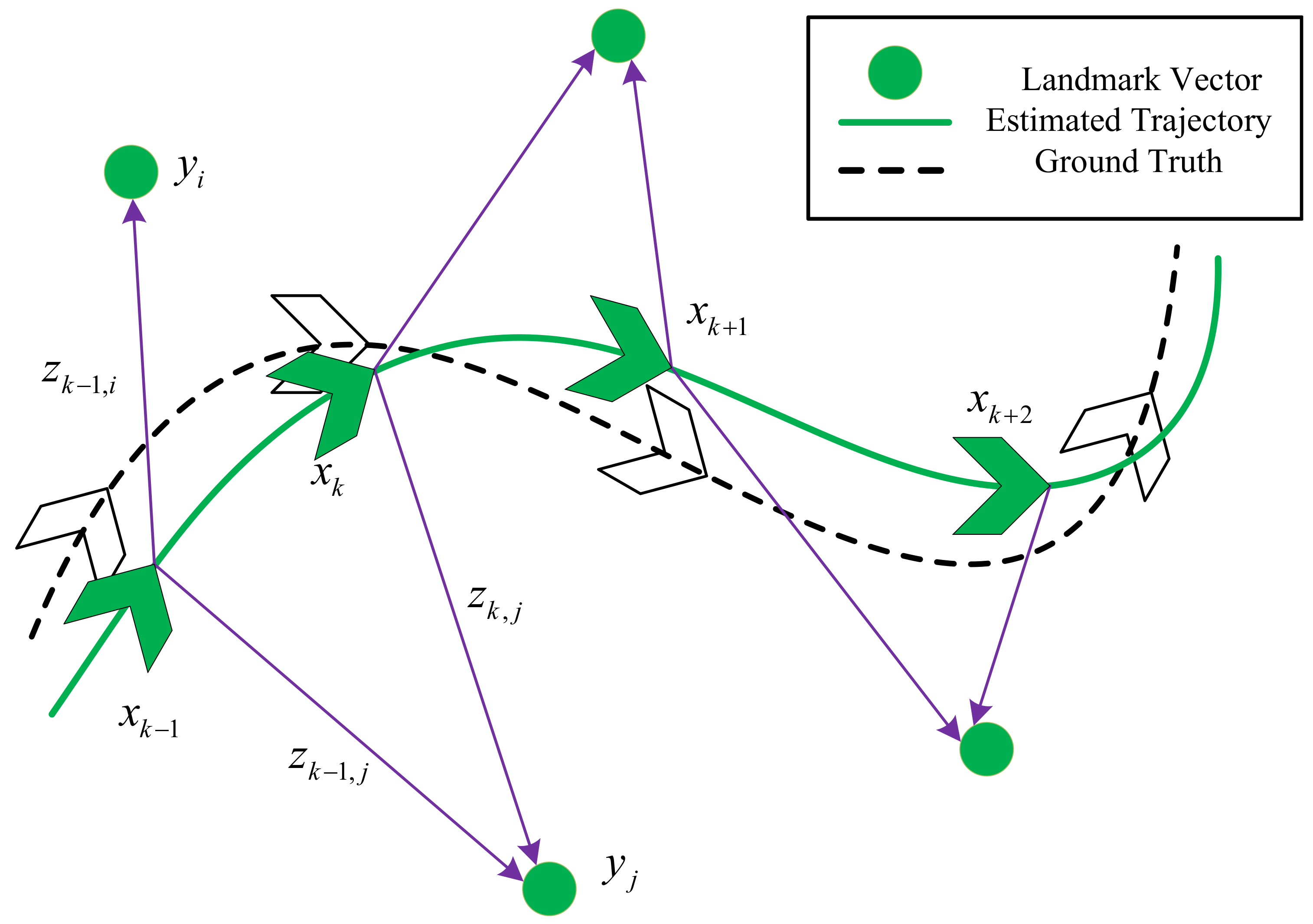

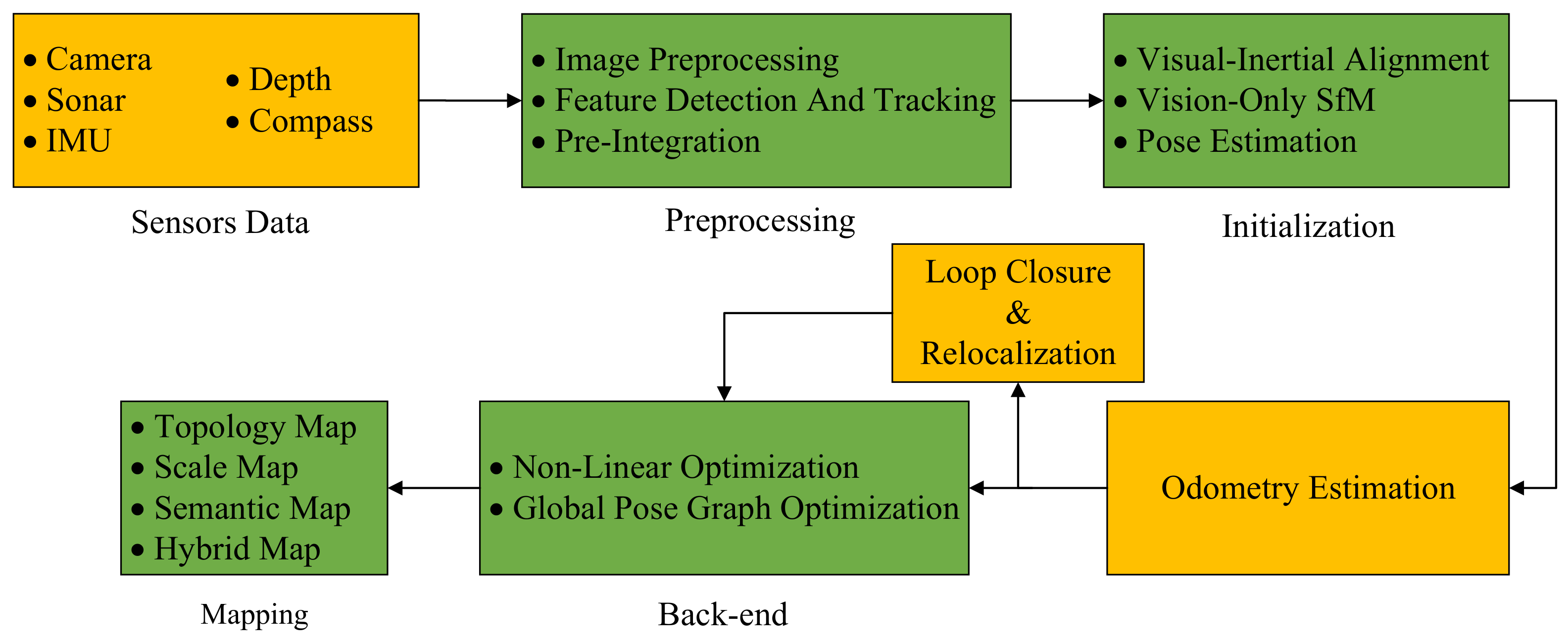

2. Framework of Underwater SLAM

2.1. Sensor Information

2.1.1. Proprioceptive Sensors

2.1.2. Exteroceptive Sensors

- (1)

- Vision SensorsSLAM based on vision sensors is an important class of SLAM algorithms, which can be classified into monocular, stereo, and RGB-D SLAM depending on the type of camera used. Additionally, algorithms such as ORB-SLAM3 can also be employed for pinhole and fisheye cameras.

- Monocular CameraMonocular cameras use a single lens to generate images, offering advantages such as a low cost, a simple structure, and usability. Mono SLAM was the first implementation of real-time monocular vision SLAM. In underwater scenarios, Hidalgo et al. conducted controlled experiments using ORB-SLAM under different setup conditions, as reported in their paper [43]. The results demonstrated that ORB-SLAM could be used effectively under the conditions of a sufficient illumination, low flicker, and rich scene features. On the other hand, they also indicated that monocular cameras were susceptible to light variations, object motion, and texture blurring when used in underwater scenarios.Ferrera et al. [44] presented a new monocular visual odometry method that was robust to turbid and dynamic underwater environments. Results showed that the optical flow method had better tracking performance than the classical descriptor-based methods. The optical flow tracking was further enhanced by adding a retracking mechanism, making it robust to short occlusions caused by environmental dynamics. The algorithm was evaluated on both simulated and real underwater datasets and could be used in applications such as underwater archaeology. Roznere et al. [45] proposed a real-time depth estimation method for underwater monocular camera images by fusing measurements from a single-beam echosounder. The proposed method matched the echosounder measurements with the detected feature points of the monocular SLAM system and then integrated them into the monocular SLAM system to adjust the visible map points and scale. They implemented the proposed method in ORB-SLAM2 and evaluated its performance in a swimming pool and the ocean to verify the improved effect of image depth estimation, which proved that the method had a certain application value in underwater exploration and mapping.

- Stereo CameraIn monocular camera SLAM, the scale problem cannot be determined due to the lack of depth information. In contrast, a stereo camera can acquire the distance between the camera and the object using the parallax principle. Mei et al. [46] presented a relative SLAM for the constant-time estimation of structure and motion with a stereo camera system as the only sensor. This approach employed a topological metric representation of relative position sequences based on a heuristic quadtree approach, which allowed for real-time processing while not strictly limiting the size of the maps that could be constructed. Moreover, Pi et al. [47] proposed a visual SLAM method based on a stereo camera as a sensor, leveraging the SURF algorithm for feature detection and matching and the EKF to fuse the feature coordinates and AUV pose to enable motion estimation in real time and feature map construction. Furthermore, Zhang et al. [48] suggested an underwater stereo visual–inertial localization method (FBUS-EKF) based on an open-source benchmark in the EKF framework. This method fused inertial and visual information and eliminated severe noise in order to implement a SLAM system. Experimental results indicated that the typical localization error of the FBUS-EKF method was less than 3%. Thus, stereo cameras hold great promise for the accurate proximity operation and localization of underwater robots.

- RGB-D CameraRGB-D cameras can obtain RGB maps and depth maps directly by physical ranging. According to their principles, they can be divided into structured light methods (e.g., Kinect v1) and time-of-flight methods (e.g., Kinect v2). However, existing RGB-D cameras typically use infrared light, which is severely attenuated in underwater environments and has high measurement limitations. As a result, it is difficult to use RGB-D cameras as vision sensors for underwater vision SLAM. Therefore, monocular and stereo cameras remain the most popularly used underwater vision sensors.

- (2)

- Sonar SensorsThe lack of illumination in the underwater environment can significantly impact the quality of the final images. To overcome this issue, sonar can be used to detect and locate objects in the absence of light by exploiting their property of reflecting sound waves. Compared to vision, sound waves demonstrate a smaller attenuation rate and longer propagation distance than light in marine scenes and are not affected by light and geomagnetic interference. Sonar sensors can be categorized into forward-looking sonar (FLS), side-scan sonar (SSS), and acoustic lens sonar (ALS) according to the scanning mode. FLS can be further divided into single-beam sonar and multibeam sonar. The basic principles of sonar SLAM are shown in Figure 6.

- Single-beam SonarSonar is an essential external detection sensor for simultaneous localization and mapping in underwater vehicles. To this end, a variational Bayesian-based simultaneous localization and mapping method for autonomous underwater vehicle navigation (VB-AUFastSLAM) was proposed based on the Unscented-FastSLAM (UFastSLAM) and the variational Bayesian (VB) approaches [49]. The proposed algorithm was validated in an open-source simulation environment, and its effectiveness in the marine environment was subsequently verified by constructing an underwater vehicle SLAM system based on an inertial navigation system, a Doppler velocity log (DVL), and a single-beam mechanical scanning imaging sonar (MSIS).

- Multibeam SonarMultibeam sonar (MBS), as sonar for underwater sounding, has become one of the most dominant survey instruments employed in marine activities. The multibeam echosounder (MBES) typically consists of a projector and a hydrophone, which are responsible for transmitting and receiving echo soundings to measure topography. An MBE can have several hundred beams, making it the most suitable sonar sensor for deep water-terrain applications [50]. In [51], a filter-based multibeam forward-looking sonar (MFLS) algorithm for underwater SLAM was presented. Environmental features were extracted using an MFLS and the acquired sonar images were converted to a sparse point-cloud format by threshold segmentation and distance-constrained filtering to avoid a computational explosion problem. Furthermore, the method also fused DVL, IMU, and sonar data of the underwater vehicle to estimate the position of the vehicle and generate an occupancy grid map using a SLAM method based on a Rao–Blackwellized particle filter (RBPF) [52].

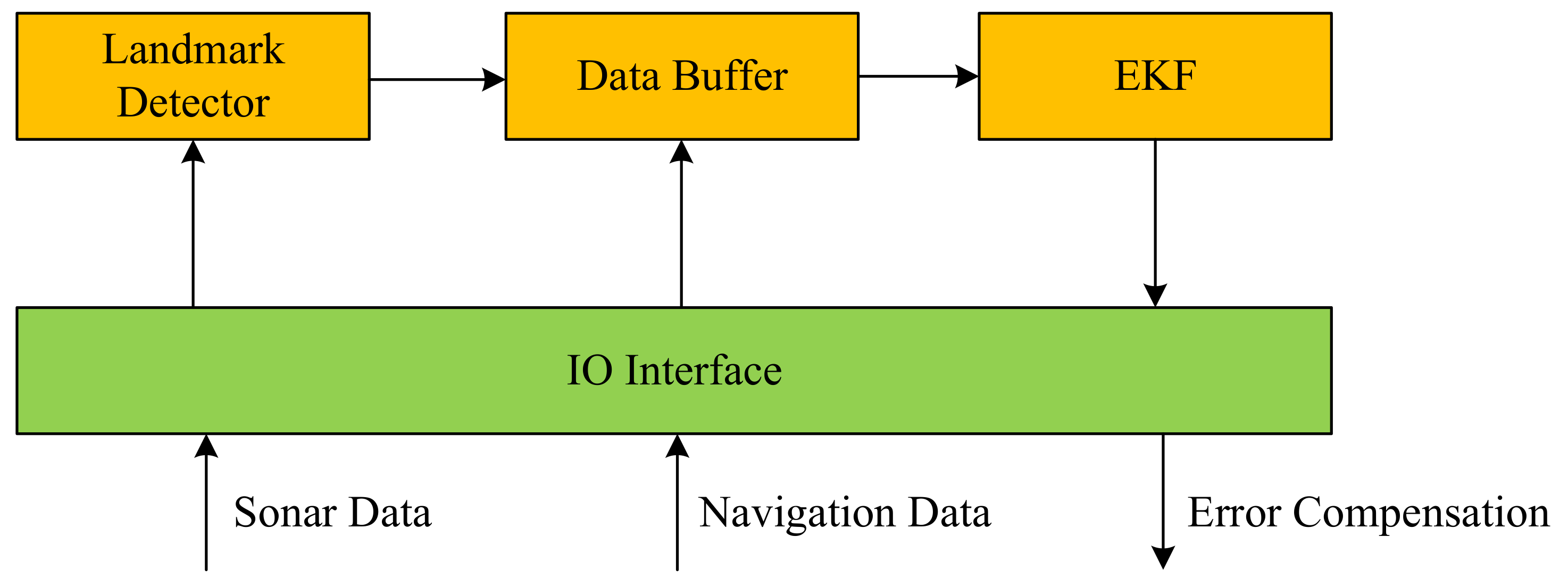

- Side-scan Sonar.Although MBS has a high resolution, it is a bathymetric tool rather than an imaging system. Side-scan sonar (SSS), with a wider range of applications, is now a commonly used tool for detecting submarine targets such as wrecks, mines, and pipelines. SSS can visually provide acoustic imaging of the seafloor morphology with a relatively high resolution. MBS and SSS have good complementarity in detecting seafloor targets and can improve the accuracy of underwater SLAM. Side et al. [53] described a side-scan sonar SLAM system for online drift compensation for underwater robots. The processing chain consisted of an automatic landmark detector, an automatic data association module, and the SLAM filter. In order to improve the robustness of the whole system while satisfying real-time performance, a batch processing method based on joint compatibility branch and bound (JCBB) was used for data association [54]. The effectiveness of the system was verified in sea trials. Furthermore, there are other sonar systems such as synthetic aperture sonar (SAS) [55] and dual-frequency identification sonar (DIDSON) [56]. A comprehensive survey of sonar SLAM can be found in [57,58,59].

- (3)

- LiDAR SensorsLiDAR sensors are capable of providing high-frequency range measurements that can operate consistently in complex lighting conditions and optically featureless scenarios [60]. Compared to camera or sonar imaging, laser-scanning imaging can provide higher-resolution 3D measurements of the seafloor in scenes lacking texture underwater. These point cloud data, generated by LiDAR, can provide easy access to the SLAM system. Moreover, the data generated by LiDAR can be used to accurately map the seafloor and create detailed 3D models. Additionally, LiDAR sensors can be used to detect objects in the environment, allowing for the creation of more accurate navigational maps. Therefore, LiDAR has become a popular choice for seafloor mapping and navigation.Collings et al. [61] deployed an underwater LiDAR system in parallel with an MBES to survey Kingston Reef of Rottnest Island, Western Australia. In that paper, the relative accuracy and characteristics of underwater LiDAR and multibeam sonar were compared and summarized to map the habitat. Massot et al. [62] proposed a bathymetric SLAM solution for underwater vehicles. The alignment problem of point clouds collected from a single-line-laser structured-light system was solved. In that work, the relative uncertainty in the vehicle localization was reduced by using time-constrained subgraphs. Three translational degrees of freedom and one localization degree of freedom were also used for positional estimation. However, the system could not utilize traditional SLAM image features. Palomer et al. [63] used a 3D underwater laser-scanning system to achieve underwater pipeline structure mapping on a Girona 500 AUV, which can be used for SLAM framework construction.However, the data quality of LiDAR measurements is susceptible to extreme environments and the point cloud alignment errors caused by the smoothness of the motion. Therefore, the use of single-laser sensors in underwater SLAM environments is more restrictive. Debeunne et al. [64] provides a comprehensive survey on visual–LiDAR SLAM. Solutions using vision, LiDAR, and sensor fusion of both modalities are highlighted.

2.1.3. Multiple Sensors

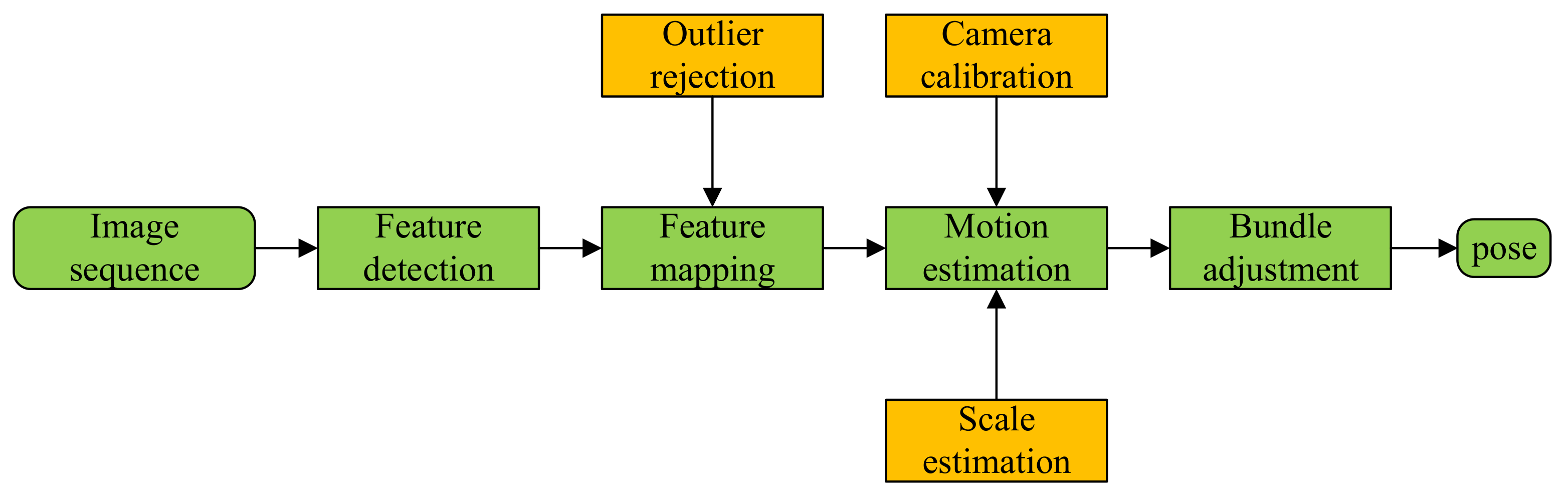

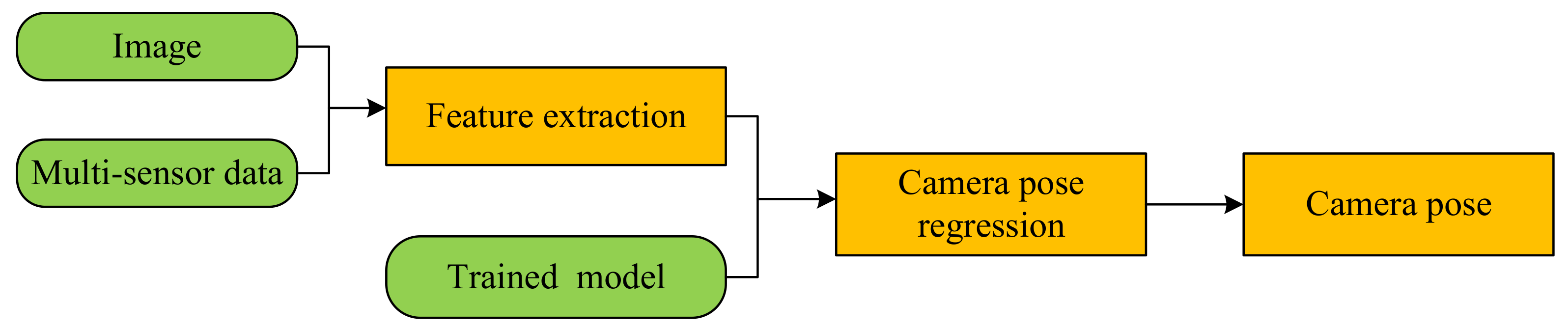

2.2. Front-End Odometry Estimation

2.3. Back-End Optimization

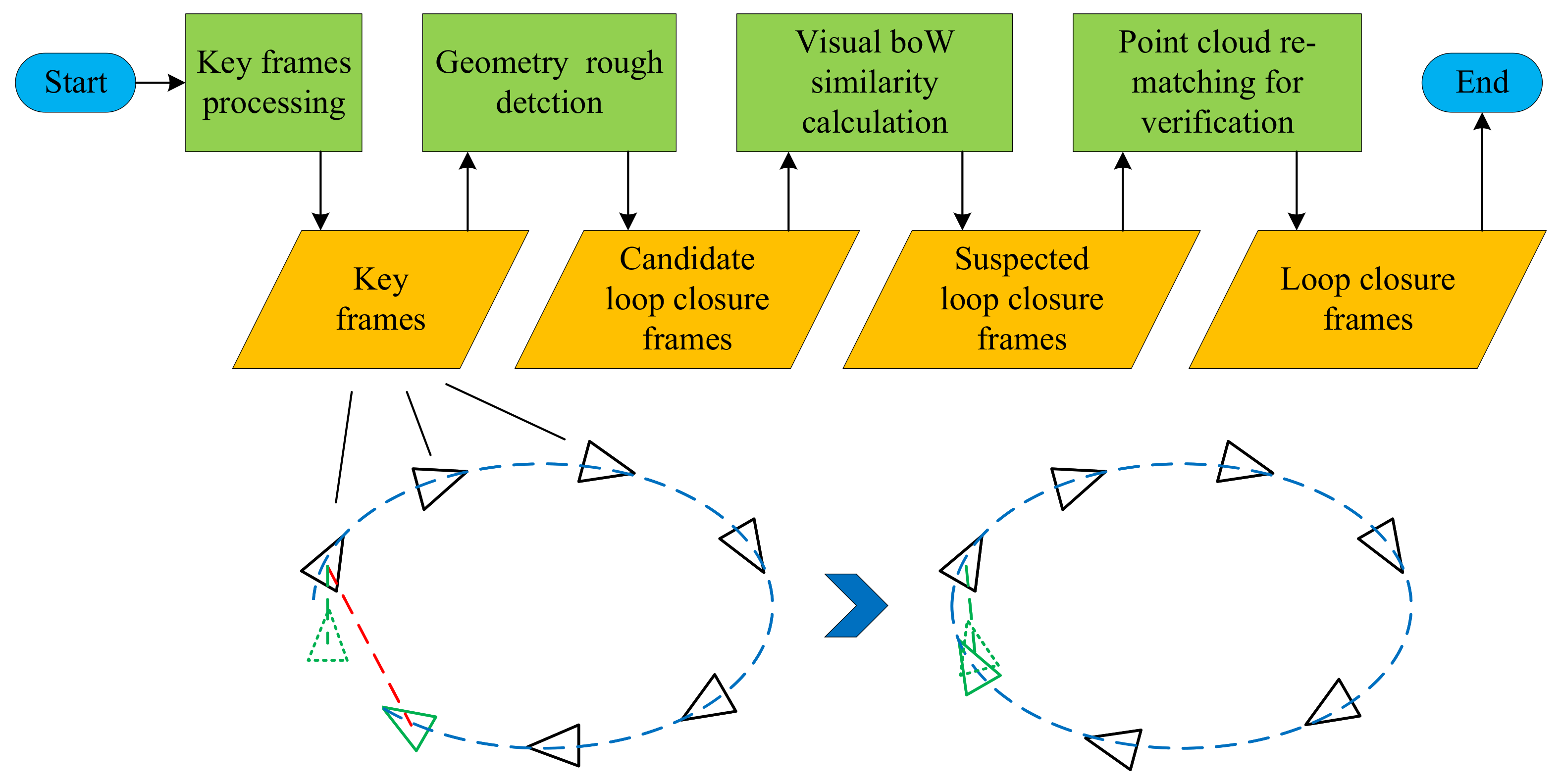

2.4. Loop-Closure Detection

2.5. Mapping

- (1)

- Topology MapTopological maps possess a high degree of abstraction and are well-suited to environments with large areas and simple structures. This approach represents the environment as a graph in a topological sense, with nodes in the graph corresponding to a feature state or location in the environment. Key frames are utilized as nodes of the map, and common data associations between them are used as edges of the map. By abstracting the map into nodes and edges in line with graph theory, the maps’ compatibility with human thinking is improved [100]. Choset et al. proposed a novel approach to simultaneous localization and mapping (SLAM) that utilized the topology of free space to localize the robot on a partially constructed map [101].Topological maps can be used for path planning, due to their relatively small storage and search space, making them computationally efficient. Furthermore, they enable the utilization of numerous sophisticated and efficient search and inference algorithms [102]. However, topological maps typically lack metric information and are therefore unsuitable for navigation. The use of such maps relies on the identification and matching of topological nodes. If the environment is too similar, topological map methods may have difficulty distinguishing between two points.

- (2)

- Scale Map

- Raster MapThe raster map divides the 3D environment space into cubes of equal size, each representing an area of 3D space in the real environment. The value of each cube reflects the probability of an obstacle existing in the corresponding 3D space. The raster map preserves as much information as possible about the entire environment, enabling self-positioning, path planning, localization, navigation, and obstacle avoidance. Furthermore, it has great advantages for fusing multisensor information, such as weighted average methods and D-S evidence inference methods. However, as the size of the environment increases, more computation and storage space are required. When the number of rasters increases, for instance in large-scale environments or when the environment is divided in greater detail, the maintenance behavior of the map becomes more difficult. The search space in the localization process is large and, without a better simplification algorithm, real-time performance is poor [103].

- Landmark MapGeometric features of the environment are represented using parametric features (e.g., points, lines, and planes). Based on the feature point density, these can be further classified into sparse, semidense, and dense maps. Notably, sparse road maps can only be used for localization, whereas dense maps can be used for navigation and obstacle avoidance functions [104].

- Point Cloud MapThe environment is described by a large number of three-dimensional spatial points, discretizing all objects in the environment into a dense point cloud [105]. Such point cloud maps are suitable for localization, navigation, obstacle avoidance, and 3D reconstruction. Meanwhile, large-scale environments necessitate a greater amount of computation and more storage space.

- (3)

- Semantic MapSemantic maps are composed of several distinguishable semantic elements, which can be either scene types or object types. The emphasis is placed on associating semantic concepts with objects in the map, giving them a more abstract meaning. Furthermore, these maps enable mobile robots to act more intelligently and perform more complex interaction tasks [106]. However, the types of objects in different environments often differ. On the one hand, it is not possible to assign semantic concepts to all objects when constructing semantic classes. On the other hand, objects of the same type may differ significantly, while objects of different classes may be more similar, making it difficult to create cognitive maps for complex environments. Furthermore, the complex cognitive map creation algorithm also necessitates a greater computational effort.

- (4)

- Hybrid MapCurrently, no single map representation is capable of adequately meeting all task requirements (localization, navigation, obstacle avoidance, path planning, 3D reconstruction, interaction, etc.) and performance criteria (high accuracy, speed, low computational effort, small storage space, etc.). Consequently, it would be more advantageous to describe the underwater environment using multiple different map representations, thereby harnessing the advantages of each and ultimately achieving different objectives.

3. Research Emphasis and Difficulties

3.1. Sensor Noise

3.2. Feature Limitation

3.3. Scene Detection

3.4. Underwater Ground Truth

3.5. Computational Complexity

4. Discussion

4.1. Underwater SLAM in Extreme Environments

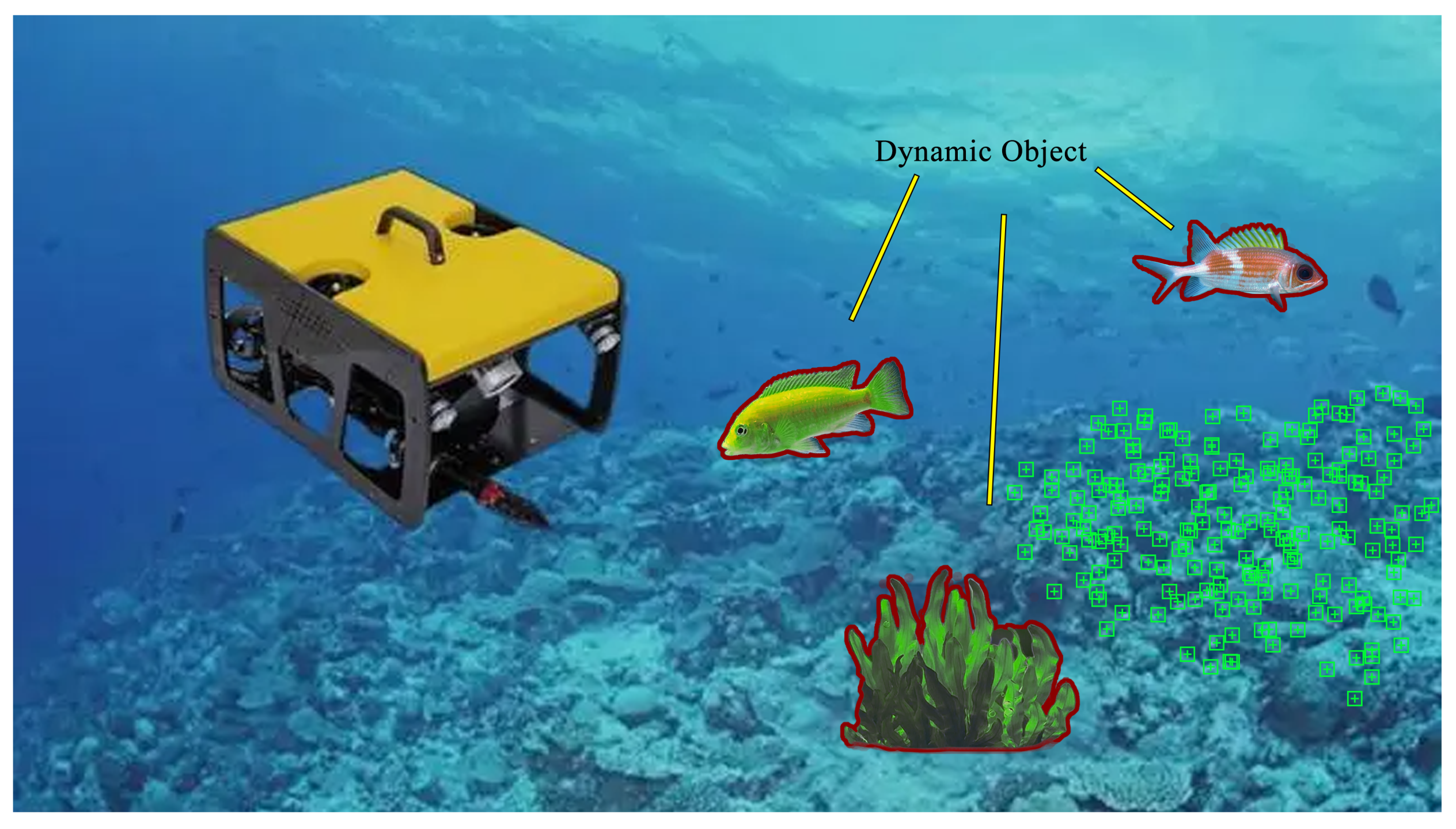

4.2. Underwater SLAM in Dynamic Environments

4.3. Underwater Semantic SLAM

4.4. Multirobot Underwater SLAM

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| SLAM | Simultaneous localization and mapping | SSS | Side-scan sonar |

| GNSS | Global navigation satellite system | ALS | Acoustic lens sonar |

| GPS | Global Positioning System | VB | Variational Bayesian |

| UUV | Unmanned underwater vehicles | UFast | Unscented-fast |

| ROV | Remotely operated vehicles | MSIS | Mechanical scanning imaging sonar |

| AUV | Autonomous underwater vehicles | MBS | Multibeam sonar |

| SBL | Short baseline | MBES | Multibeam echosounder |

| USBL | Ultrashort baseline | MFLS | Multibeam forward looking sonar |

| EKF | Extended Kalman filter | RBPF | Rao–Blackwellized particle filter |

| PF | Particle filter | JCBB | Joint compatibility branch and bound |

| MLE | Maximum likelihood estimation | SAS | Synthetic aperture sonar |

| ORB | Oriented FAST and Rotation BRIEF | DIDSON | Dual-frequency identification sonar |

| LSD | Large-scale direct | MSCKF | Multistate constraint Kalman filter |

| SVO | Semidirect visual odometry | FBUS | Fiducial-based, underwater stereo |

| PTAM | Parallel tracking and mapping | BoW | Bag-of-words |

| DT | Deferred triangulation | AHE | Adaptive histogram equalization |

| IMU | Inertial measurement units | MF | Median filtering |

| DVL | Doppler velocity log | DCP | Dark channel prior |

| 6-DOF | Six-degree-of-freedom | SIFT | Scale-invariant feature transform |

| LBL | Lone baseline | SURF | Speed up robust feature |

| ROVIO | Robust visual inertial odometry | SVO | Semidirect visual odometry |

| VIO | Visual inertial odometry | DSO | Direct sparse odometry |

| SVIn | Sonar, visual, inertial | MAP | Maximum a posteriori |

| VINS | Visual–inertial state | BA | Bundle adjustment |

| CNN | Convolutional neural networks | NetHALOC | Network hash-based loop closure |

| SfM | Structure from motion | AHRS | Attitude and heading reference system |

Appendix A

| Simulated datasets produced using UWSim (2016) [122] | This paper provides an open collection of seven different simulated datasets produced using an underwater simulation. Those datasets present three trajectories and two simulated seafloor visual data based on real coral reef mosaics. |

| Underwater caves sonar and vision dataset (2017) [123] | The dataset was collected with an autonomous underwater vehicle test bed in the unstructured environment of an underwater cave. The vehicle was equipped with two mechanically scanned imaging sonar sensors to simultaneously map the cave’s horizontal and vertical surfaces, a Doppler velocity log, two inertial measurement units, a depth sensor, and a vertically mounted camera imaging the sea floor for ground-truth validation at specific points. |

| Datasets collected by an underwater sensor suite (2018) [124] | The proposed sensor suite was used to collect sonar, visual, inertial, and depth data in a variety of environments. More specifically, shipwreck and coral reef data were collected during field trials in Barbados. More data were collected at Fantasy Lake, NC, and at different locales near High Springs, FL. |

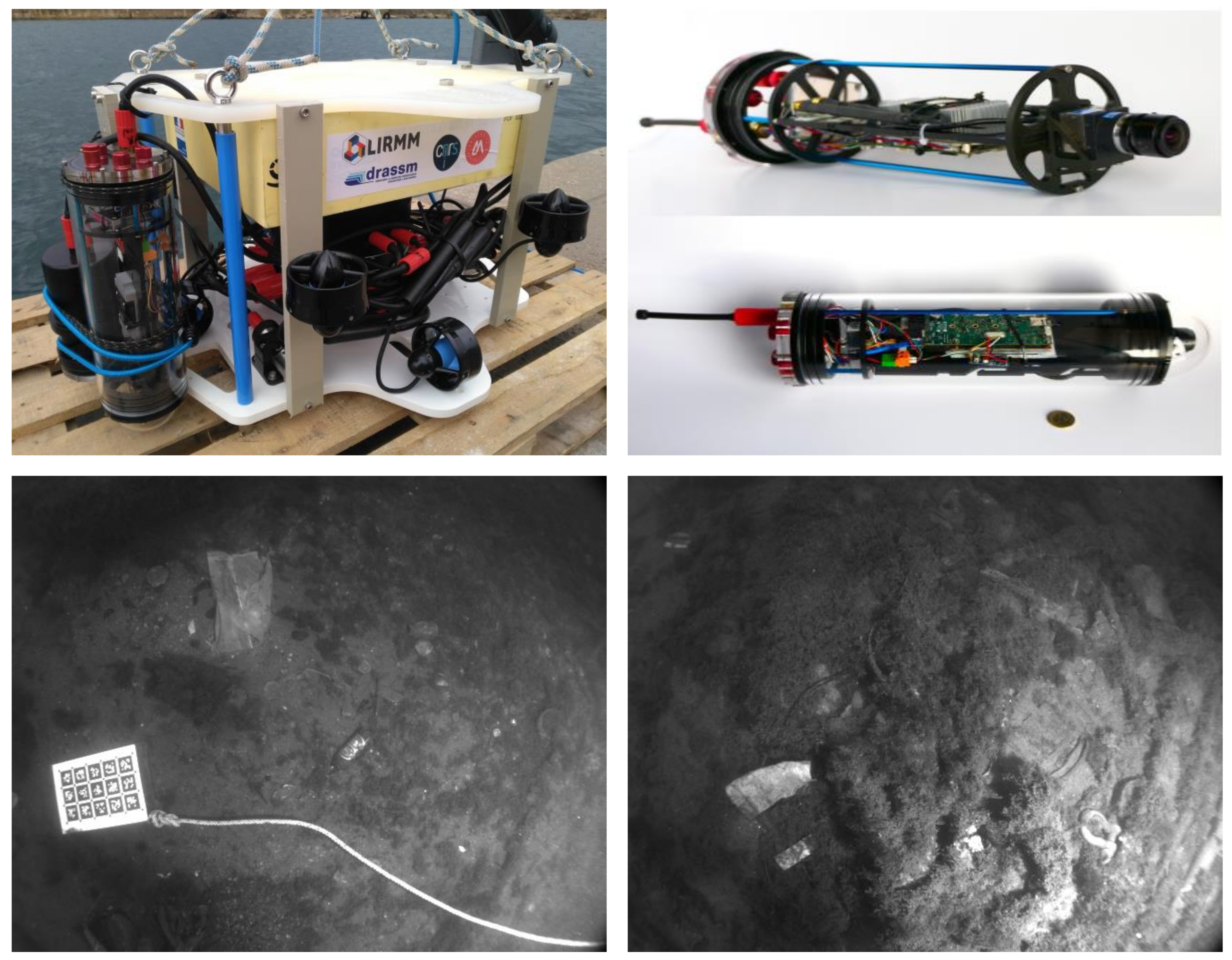

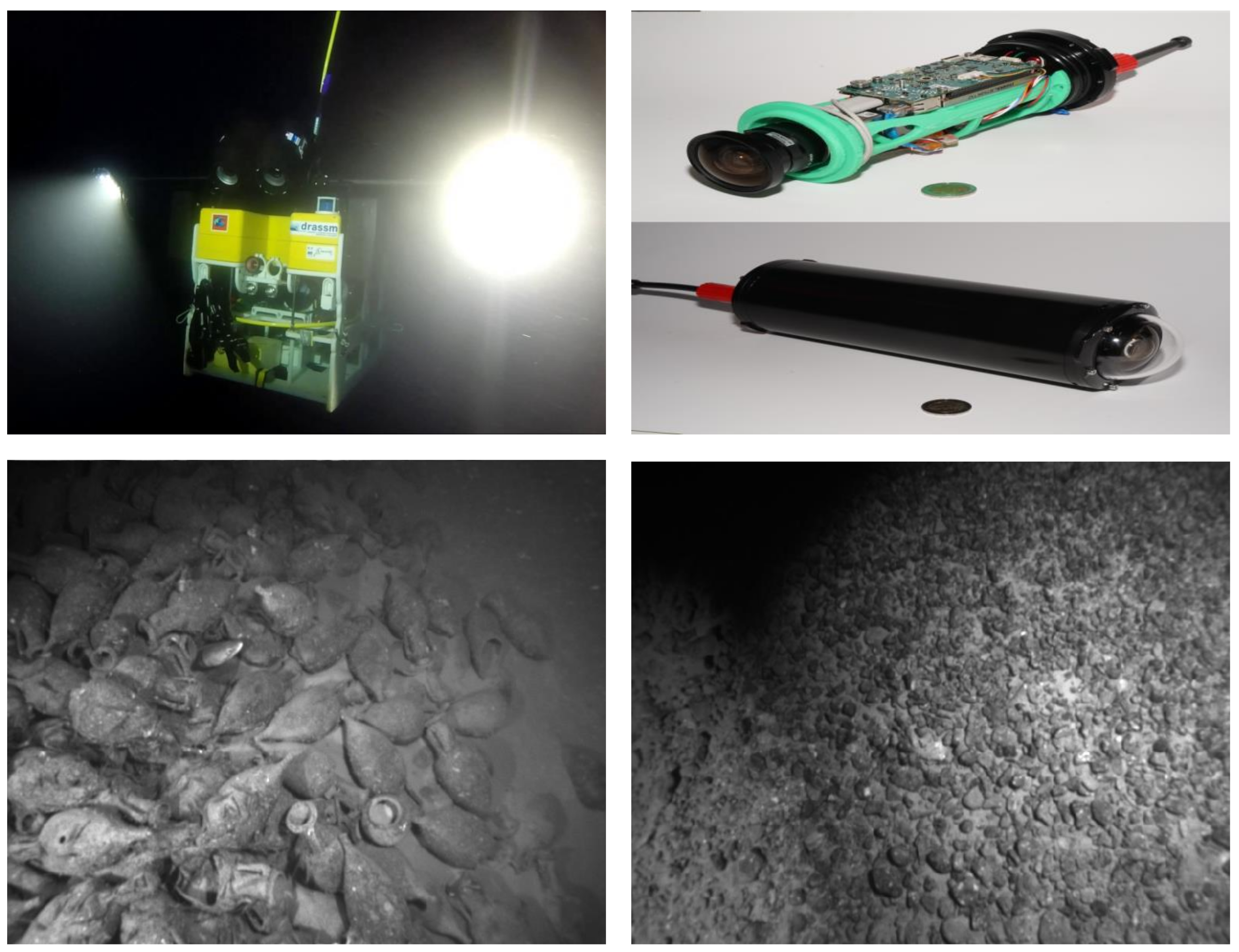

| Aqualoc (2019) [125] | The data sequences composing this dataset were recorded at three different depths: a few meters, 270 m, and 380 m. Seventeen sequences were made available in the form of ROS bags and as raw data. For each sequence, a trajectory was also computed offline using a structure-from-motion library in order to allow the comparison with real-time localization methods. |

| VAROS synthetic underwater dataset (2021) [126] | Pose sequences were created by first defining waypoints for the simulated underwater vehicle. The scenes were rendered using the ray-tracing method, which generates realistic images by integrating direct light and indirect volumetric scattering. The VAROS dataset version 1 provides images, inertial measurement unit (IMU), and depth gauge data, as well as ground-truth poses, depth images, and surface normal images. |

| A bathymetric mapping and SLAM dataset with high-precision ground truth for marine robotics (2022) [127] | This paper presents a dataset with four separate surveys designed to test bathymetric SLAM algorithms using two modern sonar sensors, typical underwater vehicle navigation sensors, and a high-precision (2 cm horizontal, 10 cm vertical) real-time kinematic (RTK) GPS ground truth. |

References

- Zhu, N.; Marais, J.; Bétaille, D.; Berbineau, M. GNSS position integrity in urban environments: A review of literature. IEEE Trans. Intell. Transp. Syst. 2018, 19, 2762–2778. [Google Scholar] [CrossRef]

- Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110. [Google Scholar] [CrossRef]

- Bailey, T.; Durrant-Whyte, H. Simultaneous localization and mapping (SLAM): Part II. IEEE Robot. Autom. Mag. 2006, 13, 108–117. [Google Scholar] [CrossRef]

- Van Nam, D.; Gon-Woo, K. Solid-state LiDAR based-SLAM: A concise review and application. In Proceedings of the 2021 IEEE International Conference on Big Data and Smart Computing (BigComp), Jeju Island, Republic of Korea, 17–20 January 2021; pp. 302–305. [Google Scholar]

- Cho, H.; Jeong, S.K.; Ji, D.H.; Tran, N.H.; Vu, M.T.; Choi, H.S. Study on control system of integrated unmanned surface vehicle and underwater vehicle. Sensors 2020, 20, 2633. [Google Scholar] [CrossRef]

- Petillot, Y.R.; Antonelli, G.; Casalino, G.; Ferreira, F. Underwater robots: From remotely operated vehicles to intervention-autonomous underwater vehicles. IEEE Robot. Autom. Mag. 2019, 26, 94–101. [Google Scholar] [CrossRef]

- Blidberg, D.R. The development of autonomous underwater vehicles (AUV); a brief summary. Proc. IEEE Icra 2001, 4, 1–12. [Google Scholar]

- Zhao, W.; He, T.; Sani, A.Y.M.; Yao, T. Review of slam techniques for autonomous underwater vehicles. In Proceedings of the 2019 International Conference on Robotics, Intelligent Control and Artificial Intelligence, New York, NY, USA, 20 September 2019; pp. 384–389. [Google Scholar]

- Paull, L.; Saeedi, S.; Seto, M.; Li, H. AUV navigation and localization: A review. IEEE J. Ocean. Eng. 2013, 39, 131–149. [Google Scholar] [CrossRef]

- Burguera, A.; Bonin-Font, F.; Font, E.G.; Torres, A.M. Combining Deep Learning and Robust Estimation for Outlier-Resilient Underwater Visual Graph SLAM. J. Mar. Sci. Eng. 2022, 10, 511. [Google Scholar] [CrossRef]

- Burguera, A.; Bonin-Font, F. Localization, Mapping and SLAM in Marine and Underwater Environments; MDPI: Basel, Switzerland, 2022. [Google Scholar]

- Smith, R.; Self, M.; Cheeseman, P. Estimating uncertain spatial relationships in robotics. In Autonomous Robot Vehicles; Springer: Berlin/Heidelberg, Germany, 1990; pp. 167–193. [Google Scholar] [CrossRef]

- Bailey, T.; Nieto, J.; Guivant, J.; Stevens, M.; Nebot, E. Consistency of the EKF-SLAM algorithm. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 3562–3568. [Google Scholar]

- Van Der Merwe, R.; Doucet, A.; De Freitas, N.; Wan, E. The unscented particle filter. In Proceedings of the Advances in Neural Information Processing Systems, Cambridge, MA, USA, 1 January 2000; Volume 13. [Google Scholar]

- Dutilleul, P. The MLE algorithm for the matrix normal distribution. J. Stat. Comput. Simul. 1999, 64, 105–123. [Google Scholar] [CrossRef]

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332. [Google Scholar] [CrossRef]

- Chen, W.; Shang, G.; Ji, A.; Zhou, C.; Wang, X.; Xu, C.; Li, Z.; Hu, K. An overview on visual slam: From tradition to semantic. Remote Sens. 2022, 14, 3010. [Google Scholar] [CrossRef]

- Lai, T. A Review on Visual-SLAM: Advancements from Geometric Modelling to Learning-Based Semantic Scene Understanding Using Multi-Modal Sensor Fusion. Sensors 2022, 22, 7265. [Google Scholar] [CrossRef]

- Teed, Z.; Deng, J. Droid-slam: Deep visual slam for monocular, stereo, and rgb-d cameras. Adv. Neural Inf. Process. Syst. 2021, 34, 16558–16569. [Google Scholar]

- Macario Barros, A.; Michel, M.; Moline, Y.; Corre, G.; Carrel, F. A comprehensive survey of visual slam algorithms. Robotics 2022, 11, 24. [Google Scholar] [CrossRef]

- Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-time single camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067. [Google Scholar] [CrossRef]

- Tateno, K.; Tombari, F.; Laina, I.; Navab, N. Cnn-slam: Real-time dense monocular slam with learned depth prediction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6243–6252. [Google Scholar]

- Gomez-Ojeda, R.; Moreno, F.A.; Zuniga-Noël, D.; Scaramuzza, D.; Gonzalez-Jimenez, J. PL-SLAM: A stereo SLAM system through the combination of points and line segments. IEEE Trans. Robot. 2019, 35, 734–746. [Google Scholar] [CrossRef]

- Engel, J.; Stückler, J.; Cremers, D. Large-scale direct SLAM with stereo cameras. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 1935–1942. [Google Scholar]

- Kerl, C.; Sturm, J.; Cremers, D. Dense visual SLAM for RGB-D cameras. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 2100–2106. [Google Scholar]

- Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A benchmark for the evaluation of RGB-D SLAM systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 573–580. [Google Scholar]

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163. [Google Scholar] [CrossRef]

- Mur-Artal, R.; Tardós, J.D. Orb-slam2: An open-source slam system for monocular, stereo, and rgb-d cameras. IEEE Trans. Robot. 2017, 33, 1255–1262. [Google Scholar] [CrossRef]

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. Orb-slam3: An accurate open-source library for visual, visual–inertial, and multimap slam. IEEE Trans. Robot. 2021, 37, 1874–1890. [Google Scholar] [CrossRef]

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part II 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 834–849. [Google Scholar]

- Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22. [Google Scholar]

- Forster, C.; Zhang, Z.; Gassner, M.; Werlberger, M.; Scaramuzza, D. SVO: Semidirect visual odometry for monocular and multicamera systems. IEEE Trans. Robot. 2016, 33, 249–265. [Google Scholar] [CrossRef]

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571. [Google Scholar]

- Klein, G.; Murray, D. Parallel tracking and mapping for small AR workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 225–234. [Google Scholar]

- Herrera, D.C.; Kim, K.; Kannala, J.; Pulli, K.; Heikkilä, J. Dt-slam: Deferred triangulation for robust slam. In Proceedings of the 2014 2nd International Conference on 3D Vision, Tokyo, Japan, 8–11 December 2014; Volume 1, pp. 609–616. [Google Scholar]

- Williams, S.B.; Newman, P.; Dissanayake, G.; Durrant-Whyte, H. Autonomous underwater simultaneous localisation and map building. In Proceedings of the Proceedings 2000 ICRA. Millennium Conference. IEEE International Conference on Robotics and Automation. Symposia Proceedings (Cat. No. 00CH37065), San Francisco, CA, USA, 24–28 April 2000; Volume 2, pp. 1793–1798. [Google Scholar]

- Hashemi, M.; Karmozdi, A.; Naderi, A.; Salarieh, H. Development of an integrated navigation algorithm based on IMU, depth, DVL sensors and earth magnetic field map. Modares Mech. Eng. 2017, 16, 235–243. [Google Scholar]

- Menaka, D.; Gauni, S.; Manimegalai, C.T.; Kalimuthu, K. Challenges and vision of wireless optical and acoustic communication in underwater environment. Int. J. Commun. Syst. 2022, 35, e5227. [Google Scholar] [CrossRef]

- Vargas, E.; Scona, R.; Willners, J.S.; Luczynski, T.; Cao, Y.; Wang, S.; Petillot, Y.R. Robust underwater visual SLAM fusing acoustic sensing. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 2140–2146. [Google Scholar]

- Fuentes-Pacheco, J.; Ruiz-Ascencio, J.; Rendón-Mancha, J.M. Visual simultaneous localization and mapping: A survey. Artif. Intell. Rev. 2015, 43, 55–81. [Google Scholar] [CrossRef]

- Hodne, L.M.; Leikvoll, E.; Yip, M.; Teigen, A.L.; Stahl, A.; Mester, R. Detecting and suppressing marine snow for underwater visual slam. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), New Orleans, LA, USA, 19–20 June 2022; pp. 5101–5109. [Google Scholar]

- Zhang, S.; Zhao, S.; An, D.; Liu, J.; Wang, H.; Feng, Y.; Li, D.; Zhao, R. Visual SLAM for underwater vehicles: A survey. Comput. Sci. Rev. 2022, 46, 100510. [Google Scholar] [CrossRef]

- Hidalgo, F.; Kahlefendt, C.; Bräunl, T. Monocular ORB-SLAM application in underwater scenarios. In Proceedings of the 2018 OCEANS-MTS/IEEE Kobe Techno-Oceans (OTO), Kobe, Japan, 28–31 May 2018; pp. 1–4. [Google Scholar]

- Ferrera, M.; Moras, J.; Trouvé-Peloux, P.; Creuze, V. Real-time monocular visual odometry for turbid and dynamic underwater environments. Sensors 2019, 19, 687. [Google Scholar] [CrossRef]

- Roznere, M.; Li, A.Q. Underwater monocular image depth estimation using single-beam echosounder. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 1785–1790. [Google Scholar]

- Mei, C.; Sibley, G.; Cummins, M.; Newman, P.; Reid, I. RSLAM: A system for large-scale mapping in constant-time using stereo. Int. J. Comput. Vis. 2011, 94, 198–214. [Google Scholar] [CrossRef]

- Pi, S.; He, B.; Zhang, S.; Nian, R.; Shen, Y.; Yan, T. Stereo visual SLAM system in underwater environment. In Proceedings of the OCEANS 2014-TAIPEI, Taipei, Taiwan, 7–10 April 2014; pp. 1–5. [Google Scholar]

- Zhang, P.; Wu, Z.; Wang, J.; Kong, S.; Tan, M.; Yu, J. An Open-Source, Fiducial-Based, Underwater Stereo Visual-Inertial Localization Method with Refraction Correction. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 4331–4336. [Google Scholar]

- Mu, P.; Zhang, X.; Qin, P.; He, B. A Variational Bayesian-Based Simultaneous Localization and Mapping Method for Autonomous Underwater Vehicle Navigation. J. Mar. Sci. Eng. 2022, 10, 1563. [Google Scholar] [CrossRef]

- Melo, J.; Matos, A. Survey on advances on terrain based navigation for autonomous underwater vehicles. Ocean. Eng. 2017, 139, 250–264. [Google Scholar] [CrossRef]

- Cheng, C.; Wang, C.; Yang, D.; Liu, W.; Zhang, F. Underwater localization and mapping based on multi-beam forward looking sonar. Front. Neurorobot. 2022, 15, 189. [Google Scholar] [CrossRef]

- Grisetti, G.; Stachniss, C.; Burgard, W. Improved techniques for grid mapping with rao-blackwellized particle filters. IEEE Trans. Robot. 2007, 23, 34–46. [Google Scholar] [CrossRef]

- Siantidis, K. Side scan sonar based onboard SLAM system for autonomous underwater vehicles. In Proceedings of the 2016 IEEE/OES Autonomous Underwater Vehicles (AUV), Tokyo, Japan, 6–9 November 2016; pp. 195–200. [Google Scholar]

- Neira, J.; Tardós, J.D. Data association in stochastic mapping using the joint compatibility test. IEEE Trans. Robot. Autom. 2001, 17, 890–897. [Google Scholar] [CrossRef]

- Gerg, I.D.; Monga, V. Deep Multi-Look Sequence Processing for Synthetic Aperture Sonar Image Segmentation. IEEE Trans. Geosci. Remote. Sens. 2023, 61, 4200915. [Google Scholar] [CrossRef]

- Belcher, E.; Hanot, W.; Burch, J. Dual-frequency identification sonar (DIDSON). In Proceedings of the 2002 Interntional Symposium on Underwater Technology (Cat. No. 02EX556), Tokyo, Japan, 19 April 2002; pp. 187–192. [Google Scholar]

- Jiang, M.; Song, S.; Li, Y.; Jin, W.; Liu, J.; Feng, X. A survey of underwater acoustic SLAM system. In Proceedings of the Intelligent Robotics and Applications: 12th International Conference, ICIRA 2019, Shenyang, China, 8–11 August 2019; Proceedings, Part II 12. Springer: Berlin/Heidelberg, Germany, 2019; pp. 159–170. [Google Scholar]

- Fallon, M.F.; Folkesson, J.; McClelland, H.; Leonard, J.J. Relocating underwater features autonomously using sonar-based SLAM. IEEE J. Ocean. Eng. 2013, 38, 500–513. [Google Scholar] [CrossRef]

- Evers, C.; Naylor, P.A. Acoustic slam. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 1484–1498. [Google Scholar] [CrossRef]

- Maksymova, I.; Steger, C.; Druml, N. Review of LiDAR sensor data acquisition and compression for automotive applications. Proceedings 2018, 2, 852. [Google Scholar]

- Collings, S.; Martin, T.J.; Hernandez, E.; Edwards, S.; Filisetti, A.; Catt, G.; Marouchos, A.; Boyd, M.; Embry, C. Findings from a combined subsea LiDAR and multibeam survey at Kingston reef, Western Australia. Remote Sens. 2020, 12, 2443. [Google Scholar] [CrossRef]

- Massot-Campos, M.; Oliver, G.; Bodenmann, A.; Thornton, B. Submap bathymetric SLAM using structured light in underwater environments. In Proceedings of the 2016 IEEE/OES Autonomous Underwater Vehicles (AUV), Tokyo, Japan, 6–9 November 2016; pp. 181–188. [Google Scholar]

- Palomer, A.; Ridao, P.; Ribas, D. Inspection of an underwater structure using point-cloud SLAM with an AUV and a laser scanner. J. Field Robot. 2019, 36, 1333–1344. [Google Scholar] [CrossRef]

- Debeunne, C.; Vivet, D. A review of visual-LiDAR fusion based simultaneous localization and mapping. Sensors 2020, 20, 2068. [Google Scholar] [CrossRef]

- Mourikis, A.I.; Roumeliotis, S.I. A multi-state constraint Kalman filter for vision-aided inertial navigation. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007; pp. 3565–3572. [Google Scholar]

- Bloesch, M.; Omari, S.; Hutter, M.; Siegwart, R. Robust visual inertial odometry using a direct EKF-based approach. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 298–304. [Google Scholar]

- Leutenegger, S.; Furgale, P.; Rabaud, V.; Chli, M.; Konolige, K.; Siegwart, R. Keyframe-based visual-inertial slam using nonlinear optimization. In Proceedings of the Robotis Science and Systems (RSS), Berlin, Germany, 24–28 June 2013. [Google Scholar]

- Qin, T.; Li, P.; Shen, S. Vins-mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020. [Google Scholar] [CrossRef]

- Leutenegger, S. Okvis2: Realtime scalable visual-inertial slam with loop closure. arXiv 2022, arXiv:2202.09199. [Google Scholar]

- Rahman, S.; Li, A.Q.; Rekleitis, I. Svin2: An underwater slam system using sonar, visual, inertial, and depth sensor. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 1861–1868. [Google Scholar]

- Hitam, M.S.; Awalludin, E.A.; Yussof, W.N.J.H.W.; Bachok, Z. Mixture contrast limited adaptive histogram equalization for underwater image enhancement. In Proceedings of the 2013 International Conference on Computer Applications Technology (ICCAT), Sousse, Tunisia, 20–22 January 2013; pp. 1–5. [Google Scholar]

- Lu, H.; Serikawa, S. Underwater scene enhancement using weighted guided median filter. In Proceedings of the 2014 IEEE International Conference on Multimedia and Expo (ICME), Chengdu, China, 14–18 July 2014; pp. 1–6. [Google Scholar]

- Yang, H.Y.; Chen, P.Y.; Huang, C.C.; Zhuang, Y.Z.; Shiau, Y.H. Low complexity underwater image enhancement based on dark channel prior. In Proceedings of the 2011 Second International Conference on Innovations in Bio-Inspired Computing and Applications, Shenzhen, China, 16–18 December 2011; pp. 17–20. [Google Scholar]

- Hou, G.; Li, J.; Wang, G.; Yang, H.; Huang, B.; Pan, Z. A novel dark channel prior guided variational framework for underwater image restoration. J. Vis. Commun. Image Represent. 2020, 66, 102732. [Google Scholar] [CrossRef]

- Sahu, P.; Gupta, N.; Sharma, N. A survey on underwater image enhancement techniques. Int. J. Comput. Appl. 2014, 87, 19–23. [Google Scholar] [CrossRef]

- Zhou, J.; Yang, T.; Zhang, W. Underwater vision enhancement technologies: A comprehensive review, challenges, and recent trends. Appl. Intell. 2023, 53, 3594–3621. [Google Scholar] [CrossRef]

- Newcombe, R.A.; Lovegrove, S.J.; Davison, A.J. DTAM: Dense tracking and mapping in real-time. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2320–2327. [Google Scholar]

- Engel, J.; Koltun, V.; Cremers, D. Direct sparse odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 611–625. [Google Scholar] [CrossRef]

- Bruno, H.M.S.; Colombini, E.L. LIFT-SLAM: A deep-learning feature-based monocular visual SLAM method. Neurocomputing 2021, 455, 97–110. [Google Scholar] [CrossRef]

- Zhou, Z.; Fan, X.; Shi, P.; Xin, Y. R-MSFM: Recurrent multi-scale feature modulation for monocular depth estimating. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 12777–12786. [Google Scholar]

- Fan, X.; Zhou, Z.; Shi, P.; Xin, Y.; Zhou, X. RAFM: Recurrent atrous feature modulation for accurate monocular depth estimating. IEEE Signal Process. Lett. 2022, 29, 1609–1613. [Google Scholar] [CrossRef]

- Wang, R.; Pizer, S.M.; Frahm, J.M. Recurrent neural network for (un-) supervised learning of monocular video visual odometry and depth. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15 April 2019; pp. 5555–5564. [Google Scholar]

- Wagstaff, B.; Peretroukhin, V.; Kelly, J. Self-supervised deep pose corrections for robust visual odometry. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 2331–2337. [Google Scholar]

- Teixeira, B.; Silva, H.; Matos, A.; Silva, E. Deep learning for underwater visual odometry estimation. IEEE Access 2020, 8, 44687–44701. [Google Scholar] [CrossRef]

- Wang, K.; Ma, S.; Chen, J.; Ren, F.; Lu, J. Approaches, challenges, and applications for deep visual odometry: Toward complicated and emerging areas. IEEE Trans. Cogn. Dev. Syst. 2020, 14, 35–49. [Google Scholar] [CrossRef]

- Wirth, S.; Carrasco, P.L.N.; Codina, G.O. Visual odometry for autonomous underwater vehicles. In Proceedings of the 2013 MTS/IEEE OCEANS-Bergen, Bergen, Norway, 10–14 June 2013; pp. 1–6. [Google Scholar]

- Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment—A modern synthesis. In Proceedings of the Vision Algorithms: Theory and Practice: International Workshop on Vision Algorithms, Corfu, Greece, 21–22 September 1999; Springer: Berlin/Heidelberg, Germany, 2000; pp. 298–372. [Google Scholar]

- Qader, W.A.; Ameen, M.M.; Ahmed, B.I. An overview of bag of words; importance, implementation, applications, and challenges. In Proceedings of the 2019 International Engineering Conference (IEC), Erbil, Iraq, 23–25 June 2019; pp. 200–204. [Google Scholar]

- Gálvez-López, D.; Tardos, J.D. Bags of binary words for fast place recognition in image sequences. IEEE Trans. Robot. 2012, 28, 1188–1197. [Google Scholar] [CrossRef]

- Uijlings, J.R.; Smeulders, A.W.; Scha, R.J. Real-time bag of words, approximately. In Proceedings of the ACM International Conference on Image and Video Retrieval, New York, NY, USA, 8 July 2009; pp. 1–8. [Google Scholar]

- Garcia-Fidalgo, E.; Ortiz, A. ibow-lcd: An appearance-based loop-closure detection approach using incremental bags of binary words. IEEE Robot. Autom. Lett. 2018, 3, 3051–3057. [Google Scholar] [CrossRef]

- Bonin-Font, F.; Burguera Burguera, A. NetHALOC: A learned global image descriptor for loop closing in underwater visual SLAM. Expert Syst. 2021, 38, e12635. [Google Scholar] [CrossRef]

- Memon, A.R.; Wang, H.; Hussain, A. Loop closure detection using supervised and unsupervised deep neural networks for monocular SLAM systems. Robot. Auton. Syst. 2020, 126, 103470. [Google Scholar] [CrossRef]

- Hong, S.; Kim, J.; Pyo, J.; Yu, S.C. A robust loop-closure method for visual SLAM in unstructured seafloor environments. Auton. Robot. 2016, 40, 1095–1109. [Google Scholar] [CrossRef]

- Cattaneo, D.; Vaghi, M.; Valada, A. Lcdnet: Deep loop closure detection and point cloud registration for lidar slam. IEEE Trans. Robot. 2022, 38, 2074–2093. [Google Scholar] [CrossRef]

- Gao, X.; Zhang, T. Unsupervised learning to detect loops using deep neural networks for visual SLAM system. Auton. Robot. 2017, 41, 1–18. [Google Scholar] [CrossRef]

- Merrill, N.; Huang, G. Lightweight unsupervised deep loop closure. arXiv 2018, arXiv:1805.07703. [Google Scholar]

- Williams, B.; Klein, G.; Reid, I. Automatic relocalization and loop closing for real-time monocular SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 1699–1712. [Google Scholar] [CrossRef]

- Qin, T.; Li, P.; Shen, S. Relocalization, global optimization and map merging for monocular visual-inertial SLAM. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 1197–1204. [Google Scholar]

- Blochliger, F.; Fehr, M.; Dymczyk, M.; Schneider, T.; Siegwart, R. Topomap: Topological mapping and navigation based on visual slam maps. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 3818–3825. [Google Scholar]

- Choset, H.; Nagatani, K. Topological simultaneous localization and mapping (SLAM): Toward exact localization without explicit localization. IEEE Trans. Robot. Autom. 2001, 17, 125–137. [Google Scholar] [CrossRef]

- Chen, Q.; Lu, Y.; Wang, Y.; Zhu, B. From topological map to local cognitive map: A new opportunity of local path planning. Intell. Serv. Robot. 2021, 14, 285–301. [Google Scholar] [CrossRef]

- Thoma, J.; Paudel, D.P.; Chhatkuli, A.; Probst, T.; Gool, L.V. Mapping, localization and path planning for image-based navigation using visual features and map. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7383–7391. [Google Scholar]

- Cheng, W.; Yang, S.; Zhou, M.; Liu, Z.; Chen, Y.; Li, M. Road mapping and localization using sparse semantic visual features. IEEE Robot. Autom. Lett. 2021, 6, 8118–8125. [Google Scholar] [CrossRef]

- Li, J.; Zhan, J.; Zhou, T.; Bento, V.A.; Wang, Q. Point cloud registration and localization based on voxel plane features. ISPRS J. Photogramm. Remote. Sens. 2022, 188, 363–379. [Google Scholar] [CrossRef]

- Nüchter, A.; Hertzberg, J. Towards semantic maps for mobile robots. Robot. Auton. Syst. 2008, 56, 915–926. [Google Scholar] [CrossRef]

- Han, L.; Lin, Y.; Du, G.; Lian, S. Deepvio: Self-supervised deep learning of monocular visual inertial odometry using 3d geometric constraints. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 6906–6913. [Google Scholar]

- Almalioglu, Y.; Turan, M.; Saputra, M.R.U.; de Gusmão, P.P.; Markham, A.; Trigoni, N. SelfVIO: Self-supervised deep monocular Visual–Inertial Odometry and depth estimation. Neural Netw. 2022, 150, 119–136. [Google Scholar] [CrossRef]

- Silveira, L.; Guth, F.; Drews, P., Jr.; Ballester, P.; Machado, M.; Codevilla, F.; Duarte-Filho, N.; Botelho, S. An open-source bio-inspired solution to underwater SLAM. IFAC-PapersOnLine 2015, 48, 212–217. [Google Scholar] [CrossRef]

- Yuan, X.; Martínez-Ortega, J.F.; Fernández, J.A.S.; Eckert, M. AEKF-SLAM: A new algorithm for robotic underwater navigation. Sensors 2017, 17, 1174. [Google Scholar] [CrossRef]

- Kim, A.; Eustice, R.M. Real-time visual SLAM for autonomous underwater hull inspection using visual saliency. IEEE Trans. Robot. 2013, 29, 719–733. [Google Scholar] [CrossRef]

- Felemban, E.; Shaikh, F.K.; Qureshi, U.M.; Sheikh, A.A.; Qaisar, S.B. Underwater sensor network applications: A comprehensive survey. Int. J. Distrib. Sens. Netw. 2015, 11, 896832. [Google Scholar] [CrossRef]

- Köser, K.; Frese, U. Challenges in underwater visual navigation and SLAM. AI Technol. Underw. Robot. 2020, 96, 125–135. [Google Scholar]

- Amarasinghe, C.; Ratnaweera, A.; Maitripala, S. Monocular visual slam for underwater navigation in turbid and dynamic environments. Am. J. Mech. Eng. 2020, 8, 76–87. [Google Scholar] [CrossRef]

- Guth, F.; Silveira, L.; Botelho, S.; Drews, P.; Ballester, P. Underwater SLAM: Challenges, state of the art, algorithms and a new biologically-inspired approach. In Proceedings of the 5th IEEE RAS/EMBS International Conference on Biomedical Robotics and Biomechatronics, Sao Paulo, Brazil, 12–15 August 2014; pp. 981–986. [Google Scholar]

- Muhammad, N.; Strokina, N.; Toming, G.; Tuhtan, J.; Kämäräinen, J.K.; Kruusmaa, M. Flow feature extraction for underwater robot localization: Preliminary results. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 1125–1130. [Google Scholar]

- Cong, Y.; Gu, C.; Zhang, T.; Gao, Y. Underwater robot sensing technology: A survey. Fundam. Res. 2021, 1, 337–345. [Google Scholar] [CrossRef]

- Negre, P.L.; Bonin-Font, F.; Oliver, G. Cluster-based loop closing detection for underwater slam in feature-poor regions. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 2589–2595. [Google Scholar]

- Hu, M.; Li, S.; Wu, J.; Guo, J.; Li, H.; Kang, X. Loop closure detection for visual SLAM fusing semantic information. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019; pp. 4136–4141. [Google Scholar]

- Mukherjee, A.; Chakraborty, S.; Saha, S.K. Detection of loop closure in SLAM: A DeconvNet based approach. Appl. Soft Comput. 2019, 80, 650–656. [Google Scholar] [CrossRef]

- Techy, L.; Morganseny, K.A.; Woolseyz, C.A. Long-baseline acoustic localization of the Seaglider underwater glider. In Proceedings of the 2011 American Control Conference, San Francisco, CA, USA, 29 June–1 July 2011; pp. 3990–3995. [Google Scholar]

- Duarte, A.C.; Zaffari, G.B.; da Rosa, R.T.S.; Longaray, L.M.; Drews, P.; Botelho, S.S. Towards comparison of underwater SLAM methods: An open dataset collection. In Proceedings of the OCEANS 2016 MTS/IEEE Monterey, Monterey, CA, USA, 19–23 September 2016; pp. 1–5. [Google Scholar]

- Mallios, A.; Vidal, E.; Campos, R.; Carreras, M. Underwater caves sonar data set. Int. J. Robot. Res. 2017, 36, 1247–1251. [Google Scholar] [CrossRef]

- Rahman, S.; Karapetyan, N.; Li, A.Q.; Rekleitis, I. A modular sensor suite for underwater reconstruction. In Proceedings of the OCEANS 2018 MTS/IEEE Charleston, Charleston, SC, USA, 22–25 October 2018; pp. 1–6. [Google Scholar]

- Ferrera, M.; Creuze, V.; Moras, J.; Trouvé-Peloux, P. AQUALOC: An underwater dataset for visual–inertial–pressure localization. Int. J. Robot. Res. 2019, 38, 1549–1559. [Google Scholar] [CrossRef]

- Zwilgmeyer, P.G.O.; Yip, M.; Teigen, A.L.; Mester, R.; Stahl, A. The varos synthetic underwater data set: Towards realistic multi-sensor underwater data with ground truth. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 3722–3730. [Google Scholar]

- Krasnosky, K.; Roman, C.; Casagrande, D. A bathymetric mapping and SLAM dataset with high-precision ground truth for marine robotics. Int. J. Robot. Res. 2022, 41, 12–19. [Google Scholar] [CrossRef]

- Song, C.; Zeng, B.; Su, T.; Zhang, K.; Cheng, J. Data association and loop closure in semantic dynamic SLAM using the table retrieval method. Appl. Intell. 2022, 52, 11472–11488. [Google Scholar] [CrossRef]

- Cieslewski, T.; Choudhary, S.; Scaramuzza, D. Data-efficient decentralized visual SLAM. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 2466–2473. [Google Scholar]

- Carreno, Y.; Willners, J.S.; Petillot, Y.; Petrick, R.P. Situation-Aware Task Planning for Robust AUV Exploration in Extreme Environments. In Proceedings of the IJCAI Workshop on Robust and Reliable Autonomy in the Wild, Montreal, QC, Canada, 19 August 2021. [Google Scholar]

- Watson, S.; Duecker, D.A.; Groves, K. Localisation of unmanned underwater vehicles (UUVs) in complex and confined environments: A review. Sensors 2020, 20, 6203. [Google Scholar] [CrossRef]

- Aitken, J.M.; Evans, M.H.; Worley, R.; Edwards, S.; Zhang, R.; Dodd, T.; Mihaylova, L.; Anderson, S.R. Simultaneous localization and mapping for inspection robots in water and sewer pipe networks: A review. IEEE Access 2021, 9, 140173–140198. [Google Scholar] [CrossRef]

- Xiao, L.; Wang, J.; Qiu, X.; Rong, Z.; Zou, X. Dynamic-SLAM: Semantic monocular visual localization and mapping based on deep learning in dynamic environment. Robot. Auton. Syst. 2019, 117, 1–16. [Google Scholar] [CrossRef]

- Sun, Y.; Liu, M.; Meng, M.Q.H. Improving RGB-D SLAM in dynamic environments: A motion removal approach. Robot. Auton. Syst. 2017, 89, 110–122. [Google Scholar] [CrossRef]

- Ni, J.; Wang, X.; Gong, T.; Xie, Y. An improved adaptive ORB-SLAM method for monocular vision robot under dynamic environments. Int. J. Mach. Learn. Cybern. 2022, 13, 3821–3836. [Google Scholar] [CrossRef]

- Chen, C.; Wang, B.; Lu, C.X.; Trigoni, N.; Markham, A. A survey on deep learning for localization and mapping: Towards the age of spatial machine intelligence. arXiv 2020, arXiv:2006.12567. [Google Scholar]

- Yu, C.; Liu, Z.; Liu, X.J.; Xie, F.; Yang, Y.; Wei, Q.; Fei, Q. DS-SLAM: A semantic visual SLAM towards dynamic environments. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1168–1174. [Google Scholar]

- Himri, K.; Ridao, P.; Gracias, N.; Palomer, A.; Palomeras, N.; Pi, R. Semantic SLAM for an AUV using object recognition from point clouds. IFAC-PapersOnLine 2018, 51, 360–365. [Google Scholar] [CrossRef]

- Wen, S.; Li, P.; Zhao, Y.; Zhang, H.; Sun, F.; Wang, Z. Semantic visual SLAM in dynamic environment. Auton. Robot. 2021, 45, 493–504. [Google Scholar] [CrossRef]

- Chang, Y.; Ebadi, K.; Denniston, C.E.; Ginting, M.F.; Rosinol, A.; Reinke, A.; Palieri, M.; Shi, J.; Chatterjee, A.; Morrell, B.; et al. LAMP 2.0: A robust multi-robot SLAM system for operation in challenging large-scale underground environments. IEEE Robot. Autom. Lett. 2022, 7, 9175–9182. [Google Scholar] [CrossRef]

- Pire, T.; Baravalle, R.; D’alessandro, A.; Civera, J. Real-time dense map fusion for stereo SLAM. Robotica 2018, 36, 1510–1526. [Google Scholar] [CrossRef]

- Paull, L.; Huang, G.; Seto, M.; Leonard, J.J. Communication-constrained multi-AUV cooperative SLAM. In Proceedings of the 2015 IEEE international conference on robotics and automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 509–516. [Google Scholar]

- Ji, P.; Li, X.; Gao, W.; Li, M. A Vision Based Multi-robot Cooperative Semantic SLAM Algorithm. In Proceedings of the 2022 34th Chinese Control and Decision Conference (CCDC), Hefei, China, 15–17 August 2022; pp. 5663–5668. [Google Scholar]

| Classification | Feature-Based Method | Direct Method |

|---|---|---|

| Concept | Based on feature point matching; minimize the reprojection error | Based on gray invariant; minimize the luminosity error |

| Advantage | Strong robustness and high precision | Can be used in scenes with repetitive textures and missing corners |

| Disadvantage | Both quantity and quality of feature points are required | Sensitive to illumination changes; difficult to realize loop-closure detection and relocation |

| Characteristic | Data association and pose estimation are decoupled; builds sparse maps; loop-closure detection and relocation are required | Data association and pose estimation are coupled; builds semidense or dense maps; suitable for multisensor fusion |

| Sensors | Method | Optimization | Loop Closure | Scenario | |

|---|---|---|---|---|---|

| LSD-SLAM [30] | Mono | Direct | Pose graph | Yes | Large-scale, consistent maps |

| DSO [78] | Camera | Direct and sparse | Nonlinear joint | No | - |

| SVO [31] | Mono | Semidirect | Minimize reprojection error | No | - |

| ORB-SLAM2 [28] | Mono, stereo, RGB-D | Indirect | BA | Yes | Textured environment |

| ORB-SLAM3 [29] | Mono, stereo, RGB-D, pinhole, fisheye, IMU | Indirect | BA | Yes | Textured environment |

| ROVIO [66] | IMU, camera | Direct | EKF | No | Employed in UAV |

| OKVIS [67] | Camera, IMU | Indirect | Marginalization of key frames | No | Hand-held indoor motion, bicycle riding |

| OKVIS2 [69] | Stereo, IMU | Indirect | Marginalization of common observations | Yes | - |

| SVIn2 [70] | Stereo, IMU, Depth, Sonar | Indirect | Tightly coupled | Yes | Underwater environments |

| VINS-Mono [68] | Mono, IMU | Indirect | Tightly coupled and pose graph | Yes | Employed in UAV |

| MSCKF [65] | Mono, stereo, IMU | Indirect | EKF | No | Real-World environment trajectory |

| DeepVIO [107] | Stereo, IMU | Self-supervised learning method | - | No | - |

| SelfVIO [108] | Mono, IMU | Self-supervised learning method | - | No | - |

| Dolphin SLAM [109] | Sonar, camera, DVL, IMU | Indirect | Bioinspired | Yes | Underwater environments |

| AEKF-SLAM [110] | Sonar (mainly) | Indirect | AEKF | Yes | Underwater environments |

| [63] | Laser, AHRS, DVL, pressure sensor | Indirect | EKF | No | Underwater pipe structure |

| [111] | Mono | Indirect | BA | Yes | Autonomous underwater ship hull inspection |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, X.; Fan, X.; Shi, P.; Ni, J.; Zhou, Z. An Overview of Key SLAM Technologies for Underwater Scenes. Remote Sens. 2023, 15, 2496. https://doi.org/10.3390/rs15102496

Wang X, Fan X, Shi P, Ni J, Zhou Z. An Overview of Key SLAM Technologies for Underwater Scenes. Remote Sensing. 2023; 15(10):2496. https://doi.org/10.3390/rs15102496

Chicago/Turabian StyleWang, Xiaotian, Xinnan Fan, Pengfei Shi, Jianjun Ni, and Zhongkai Zhou. 2023. "An Overview of Key SLAM Technologies for Underwater Scenes" Remote Sensing 15, no. 10: 2496. https://doi.org/10.3390/rs15102496

APA StyleWang, X., Fan, X., Shi, P., Ni, J., & Zhou, Z. (2023). An Overview of Key SLAM Technologies for Underwater Scenes. Remote Sensing, 15(10), 2496. https://doi.org/10.3390/rs15102496