Detection of Smoke from Straw Burning Using Sentinel-2 Satellite Data and an Improved YOLOv5s Algorithm

Abstract

1. Introduction

2. Materials and Methods

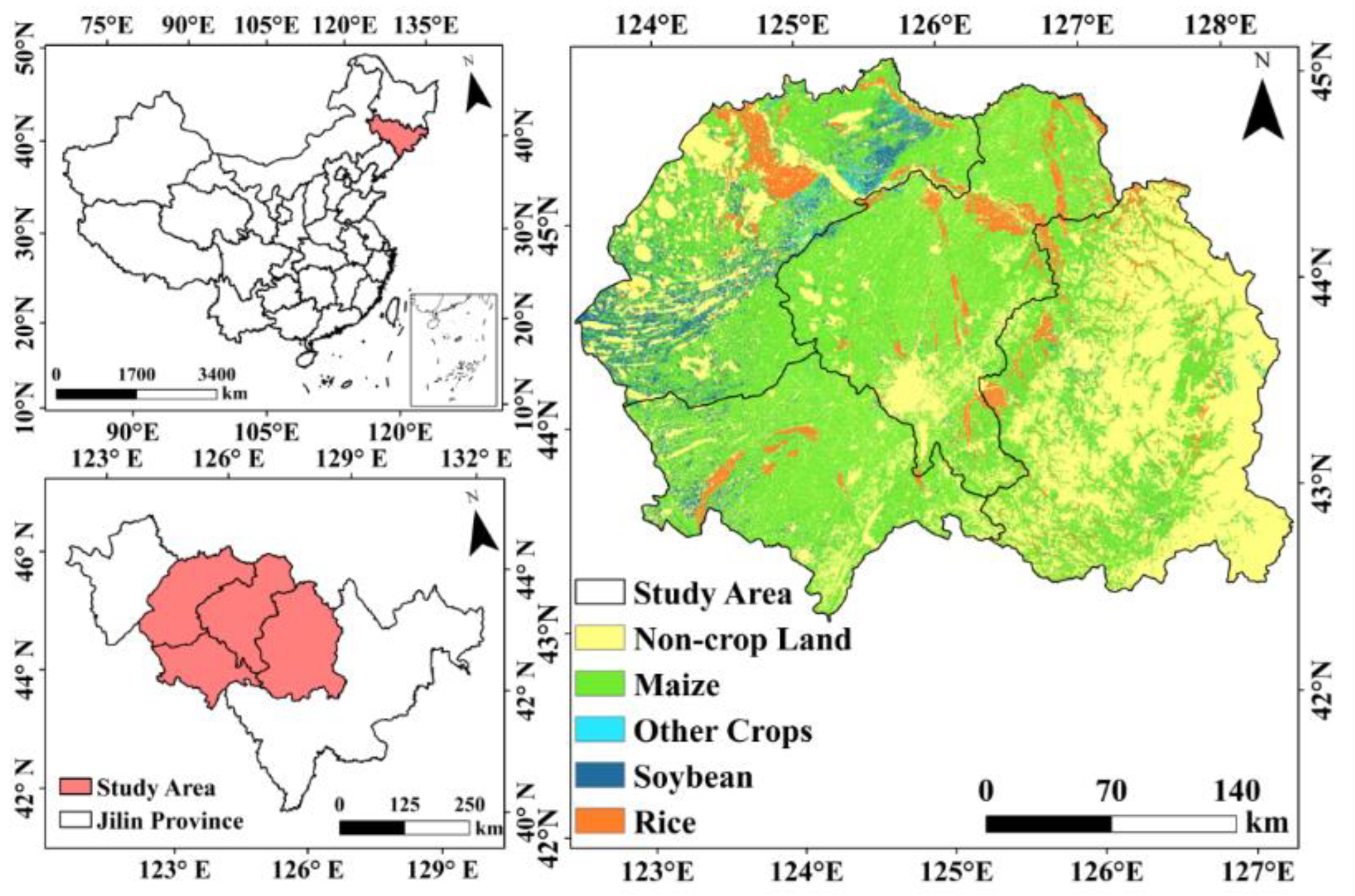

2.1. Overview of the Study Area

2.2. Data Sources

2.3. Data Preprocessing

2.4. Dataset Construction

2.5. Improved YOLOv5s Model

2.6. Test Environment and Parameter Settings

2.7. Evaluation Indicators

3. Results

3.1. Separation Methods

3.2. Comparison of Attention Models

3.3. Ablation Experiments

3.4. Comparison of Different Channel Combinations as Inputs

4. Discussion

4.1. Comparison of Different Spatial Resolutions

4.2. The Challenge of Insufficient Data

4.3. Impact of Other Types of Smoke

4.4. Real-Time Monitoring Issues

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Channel Combination | Training Set | Test Set | Validation Set | Total Number |
|---|---|---|---|---|
| RGB (Red-Green-Blue, 10 m) | 2431 | 810 | 819 | 4060 |
| RGB_Band5 (10 m) | 2431 | 810 | 819 | 4060 |
| RGB_Band5_Band6 (10 m) | 2431 | 810 | 819 | 4060 |
| RGB_Band5_Band6_Band7 (10 m) | 2431 | 810 | 819 | 4060 |
| RGB_Band5_Band6_Band7_Band8 (10 m) | 2431 | 810 | 819 | 4060 |
| RGB_Band6 (10 m) | 2431 | 810 | 819 | 4060 |
| RGB_Band6_Band7 (10 m) | 2431 | 810 | 819 | 4060 |
| RGB_Band6_Band7_Band8 (10 m) | 2431 | 810 | 819 | 4060 |
| RGB_Band7 (10 m) | 2431 | 810 | 819 | 4060 |
| RGB_Band7_Band8 (10 m) | 2431 | 810 | 819 | 4060 |
| RGB_Band8 (10 m) | 2431 | 810 | 819 | 4060 |
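
All eleven channel combinations above are labelled 10 m, yet Sentinel-2 records only B2, B3, B4 and B8 at 10 m; the red-edge bands B5, B6 and B7 are 20 m bands. Assembling an input such as RGB_Band5 therefore involves resampling the 20 m band onto the 10 m grid and stacking it with the visible bands. The resampling method is not given in this section, so the sketch below simply assumes nearest-neighbour upsampling on toy pure-Python grids; `upsample_nearest` and `stack_bands` are hypothetical helpers, not part of any Sentinel-2 toolbox.

```python
# Sketch under stated assumptions: bands are 2-D lists on a common footprint,
# and 20 m bands are brought to 10 m by nearest-neighbour upsampling (factor 2).


def upsample_nearest(band, factor):
    """Nearest-neighbour upsampling: copy each pixel into a factor x factor block."""
    out = []
    for row in band:
        wide = [value for value in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def stack_bands(bands):
    """Stack equally sized 2-D bands into an H x W grid of per-pixel channel tuples."""
    height, width = len(bands[0]), len(bands[0][0])
    for band in bands:
        if len(band) != height or len(band[0]) != width:
            raise ValueError("all bands must share the same grid")
    return [[tuple(band[i][j] for band in bands) for j in range(width)]
            for i in range(height)]


# Toy 4 x 4 tiles at 10 m (B4 = red, B3 = green, B2 = blue) and a 2 x 2 B5 tile at 20 m.
b4 = [[4] * 4 for _ in range(4)]
b3 = [[3] * 4 for _ in range(4)]
b2 = [[2] * 4 for _ in range(4)]
b5_20m = [[50, 51], [52, 53]]

b5_10m = upsample_nearest(b5_20m, factor=2)      # now 4 x 4, aligned with RGB
rgb_band5 = stack_bands([b4, b3, b2, b5_10m])    # 4 x 4 pixels, 4 channels each
print(len(rgb_band5), len(rgb_band5[0]), len(rgb_band5[0][0]))  # 4 4 4
print(rgb_band5[0][0], rgb_band5[3][3])  # (4, 3, 2, 50) (4, 3, 2, 53)
```

The length of each per-pixel tuple corresponds to the Number of Channels column of the channel-combination results table. A YOLOv5s network fed such tiles would need its first convolution to accept that many input channels instead of three; bilinear or cubic resampling would be reasonable alternatives to the nearest-neighbour choice assumed here.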

| Spatial Resolution | Training Set | Test Set | Validation Set | Total Number |
|---|---|---|---|---|
| 60 m | 2001 | 667 | 653 | 3321 |
| 20 m | 2015 | 663 | 661 | 3339 |
| 10 m | 2431 | 810 | 819 | 4060 |
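
The subset sizes in the two tables above can be checked with a few lines of arithmetic: each row sums to its stated total, and every resolution uses roughly a 60/20/20 split into training, test and validation sets. Only the counts are taken from the tables; the `split_fractions` helper is illustrative.

```python
# (train, test, validation, total) copied from the dataset tables above.
splits = {
    "10 m (every channel combination)": (2431, 810, 819, 4060),
    "20 m": (2015, 663, 661, 3339),
    "60 m": (2001, 667, 653, 3321),
}


def split_fractions(train, test, val, total):
    """Check that the three subsets add up to the total and return their shares."""
    if train + test + val != total:
        raise ValueError(f"subsets sum to {train + test + val}, not {total}")
    return tuple(round(n / total, 3) for n in (train, test, val))


for name, counts in splits.items():
    print(name, split_fractions(*counts))
```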

| Band | Variable | Smoke | Cloud | Background | Water |
|---|---|---|---|---|---|
| B1 | Mean | 15.37 | 15.38 | 2.86 | 4.17 |
| | Std | 9.39 | 8.75 | 2.63 | 3.63 |
| B2 | Mean | 18.36 | 18.22 | 5.53 | 6.06 |
| | Std | 8.77 | 7.59 | 4.32 | 3.55 |
| B3 | Mean | 18.85 | 20.13 | 7.88 | 8.69 |
| | Std | 8.23 | 7.09 | 5.98 | 3.90 |
| B4 | Mean | 20.68 | 23.98 | 11.65 | 8.89 |
| | Std | 7.38 | 7.30 | 8.78 | 4.42 |
| B5 | Mean | 21.70 | 25.43 | 13.05 | 9.90 |
| | Std | 7.17 | 7.38 | 9.58 | 4.77 |
| B6 | Mean | 22.52 | 26.66 | 14.21 | 9.71 |
| | Std | 7.06 | 7.40 | 10.30 | 6.56 |
| B7 | Mean | 23.60 | 28.17 | 15.49 | 10.42 |
| | Std | 7.07 | 7.52 | 11.14 | 7.15 |
| B8 | Mean | 27.03 | 32.39 | 18.09 | 11.36 |
| | Std | 8.23 | 8.38 | 13.13 | 8.61 |
| B8A | Mean | 25.47 | 30.70 | 17.66 | 11.18 |
| | Std | 7.37 | 7.86 | 12.61 | 8.19 |
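
The band statistics above suggest why cloud is the hardest class to separate from smoke: their means are nearly identical in B1 to B3 and diverge only gradually towards the red-edge and near-infrared bands. One common way to put a number on this, used here purely as an illustration and not necessarily the separation method of Section 3.1, is the normalized-distance statistic M = |μ₁ − μ₂| / (σ₁ + σ₂), where values above 1 are usually read as good separability. The sketch computes M for smoke against cloud and against background (water is omitted for brevity) from the table's means and standard deviations.

```python
# (mean, std) per band, copied from the band-statistics table above.
SMOKE = {"B1": (15.37, 9.39), "B2": (18.36, 8.77), "B3": (18.85, 8.23),
         "B4": (20.68, 7.38), "B5": (21.70, 7.17), "B6": (22.52, 7.06),
         "B7": (23.60, 7.07), "B8": (27.03, 8.23), "B8A": (25.47, 7.37)}
CLOUD = {"B1": (15.38, 8.75), "B2": (18.22, 7.59), "B3": (20.13, 7.09),
         "B4": (23.98, 7.30), "B5": (25.43, 7.38), "B6": (26.66, 7.40),
         "B7": (28.17, 7.52), "B8": (32.39, 8.38), "B8A": (30.70, 7.86)}
BACKGROUND = {"B1": (2.86, 2.63), "B2": (5.53, 4.32), "B3": (7.88, 5.98),
              "B4": (11.65, 8.78), "B5": (13.05, 9.58), "B6": (14.21, 10.30),
              "B7": (15.49, 11.14), "B8": (18.09, 13.13), "B8A": (17.66, 12.61)}


def m_statistic(a, b):
    """Normalized distance |mean_a - mean_b| / (std_a + std_b) between two classes."""
    (mu_a, sd_a), (mu_b, sd_b) = a, b
    return abs(mu_a - mu_b) / (sd_a + sd_b)


cloud_scores = {band: m_statistic(SMOKE[band], CLOUD[band]) for band in SMOKE}
background_scores = {band: m_statistic(SMOKE[band], BACKGROUND[band]) for band in SMOKE}

for band in SMOKE:
    print(f"{band:>3}  smoke/cloud M = {cloud_scores[band]:.3f}  "
          f"smoke/background M = {background_scores[band]:.3f}")
```

With these statistics every band gives a smoke/cloud M below 0.35 (highest for B8A and B8), whereas smoke/background reaches about 1.04 in B1 and 0.98 in B2. A single-band threshold is therefore unlikely to split smoke from cloud on its own, which is one reason a detector that can also exploit shape and texture is attractive.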

| Attention Mechanism | Reduction Ratio | mAP50/% |
|---|---|---|
| None | – | 77.27 |
| SE | 8 | 80.71 |
| | 16 | 76.66 |
| | 32 | 78.55 |
| CBAM | 8 | 69.36 |
| | 16 | 68.63 |
| | 32 | 65.72 |
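
The attention comparison above varies the ratio r by which the squeeze-and-excitation (SE) and CBAM blocks shrink the channel dimension inside their bottleneck (called the reduction ratio in the original SE paper by Hu et al.). In an SE block the C channels of a feature map are first averaged over space (squeeze), passed through a fully connected layer of C/r units with ReLU and expanded back to C units with a sigmoid (excitation), and the resulting gates rescale the channels. The pure-Python sketch below follows that recipe with random weights, no biases and a toy 64-channel map; it illustrates the mechanism only and is not the authors' implementation, which would normally live in the PyTorch code of YOLOv5. `se_block` and `se_weight_count` are hypothetical names.

```python
import math
import random


def se_block(feature_map, r, rng):
    """Squeeze-and-excitation on a C x H x W feature map given as nested lists.

    Weights are random and biases are omitted; a trained network learns them.
    Returns the rescaled feature map and the per-channel gates.
    """
    c = len(feature_map)
    hidden = max(1, c // r)  # bottleneck width C / r
    w1 = [[rng.gauss(0, 0.1) for _ in range(c)] for _ in range(hidden)]
    w2 = [[rng.gauss(0, 0.1) for _ in range(hidden)] for _ in range(c)]

    # Squeeze: global average pooling of every channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]
    # Excitation: FC (C -> C/r) + ReLU, then FC (C/r -> C) + sigmoid.
    h = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(w * hi for w, hi in zip(row, h)))) for row in w2]
    # Scale: multiply every value of channel k by its gate in (0, 1).
    scaled = [[[v * gates[k] for v in row] for row in feature_map[k]] for k in range(c)]
    return scaled, gates


def se_weight_count(c, r):
    """Fully connected weights in one SE block, biases ignored: 2 * C * (C / r)."""
    return 2 * c * max(1, c // r)


rng = random.Random(0)
C, H, W = 64, 4, 4
fmap = [[[rng.random() for _ in range(W)] for _ in range(H)] for _ in range(C)]
out, gates = se_block(fmap, r=8, rng=rng)
print(len(out), len(out[0]), len(out[0][0]))  # 64 4 4
for r in (8, 16, 32):
    print(f"r = {r}: {se_weight_count(C, r)} FC weights per SE block")
```

Halving r doubles the bottleneck width and the 2C²/r fully connected weights (1024, 512 and 256 for C = 64 at r = 8, 16 and 32). In the table the best SE result came at r = 8, but mAP50 does not change monotonically with r, so the ratio is best treated as a hyperparameter to tune rather than something to push in one direction.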

| Model Used for Object Detection | P/% | R/% | mAP50/% |
|---|---|---|---|
| YOLOv5s | 73.82 | 81.58 | 78.44 |
| YOLOv5s + Mish | 78.15 | 77.67 | 78.76 |
| YOLOv5s + SE8 | 75.05 | 79.33 | 79.31 |
| Improved YOLOv5s | 75.63 | 81.00 | 82.49 |
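
The ablation above separates two changes to YOLOv5s: the Mish activation and an SE block with ratio 8 (SE8). Mish, introduced by Misra (2019), is mish(x) = x · tanh(softplus(x)) with softplus(x) = ln(1 + eˣ). Recent YOLOv5 releases use SiLU, x · sigmoid(x), as their default activation; which activation the authors replaced is not stated in this section, so the code below is only a side-by-side sketch of the two functions in plain Python. The `softplus` helper uses the standard overflow-safe rewrite max(x, 0) + ln(1 + e^(−|x|)).

```python
import math


def softplus(x):
    """Overflow-safe ln(1 + e^x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x):
    """Mish activation: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


def silu(x):
    """SiLU (also called Swish): x * sigmoid(x), written to avoid overflow."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)
    return x * e / (1.0 + e)


for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(f"x = {x:+.1f}   mish = {mish(x):+.4f}   silu = {silu(x):+.4f}")
```

The two curves are close for positive inputs, while Mish keeps a small, non-monotonic negative lobe: mish(−1) ≈ −0.303 but mish(−3) ≈ −0.146, so small negative activations are not zeroed out as they would be with ReLU.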

| Dataset | Number of Channels | P/% | R/% | mAP50/% |
|---|---|---|---|---|
| RGB (10 m) | 3 | 76.84 | 44.76 | 49.17 |
| RGB_Band5 (10 m) | 4 | 80.54 | 49.78 | 56.20 |
| RGB_Band5_Band6 (10 m) | 5 | 67.35 | 40.94 | 42.80 |
| RGB_Band5_Band6_Band7 (10 m) | 6 | 70.60 | 38.89 | 43.03 |
| RGB_Band5_Band6_Band7_Band8 (10 m) | 7 | 75.95 | 45.22 | 50.04 |
| RGB_Band6 (10 m) | 4 | 82.90 | 50.54 | 57.39 |
| RGB_Band6_Band7 (10 m) | 5 | 69.10 | 42.48 | 45.89 |
| RGB_Band6_Band7_Band8 (10 m) | 6 | 73.10 | 45.40 | 49.29 |
| RGB_Band7 (10 m) | 4 | 72.30 | 42.91 | 45.98 |
| RGB_Band7_Band8 (10 m) | 5 | 52.15 | 28.45 | 26.84 |
| RGB_Band8 (10 m) | 4 | 74.98 | 47.60 | 52.30 |
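
P, R and mAP50 in these tables follow the usual object-detection definitions: a predicted box counts as a true positive when its intersection over union (IoU) with a not-yet-matched ground-truth smoke box is at least 0.5, precision is TP/(TP + FP) and recall is TP/(TP + FN). The sketch below applies those definitions with a simple greedy, confidence-ordered matching. Boxes are assumed to be (x1, y1, x2, y2) pixel corners, the function names are hypothetical, and the exact matching rules of the YOLOv5 evaluation code may differ in detail.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(preds, gts, thr=0.5):
    """Greedily match (box, score) predictions to ground truths, highest score first.

    Each ground truth can be matched once. Returns (score, is_true_positive)
    pairs and the number of ground-truth boxes.
    """
    used, results = set(), []
    for box, score in sorted(preds, key=lambda p: p[1], reverse=True):
        best, best_iou = None, thr
        for j, gt in enumerate(gts):
            if j not in used and iou(box, gt) >= best_iou:
                best, best_iou = j, iou(box, gt)
        if best is not None:
            used.add(best)
        results.append((score, best is not None))
    return results, len(gts)


def precision_recall(results, n_gt):
    """Precision = TP / (TP + FP), recall = TP / (TP + FN)."""
    tp = sum(1 for _, ok in results if ok)
    precision = tp / len(results) if results else 0.0
    recall = tp / n_gt if n_gt else 0.0
    return precision, recall


ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
predictions = [((1, 1, 10, 10), 0.9), ((20, 20, 30, 30), 0.8), ((50, 50, 60, 60), 0.4)]
results, n_gt = match(predictions, ground_truth)
p, r = precision_recall(results, n_gt)
print(f"P = {p:.2f}, R = {r:.2f}")
```

Here the first two predictions overlap a smoke box (IoU 0.81 and 1.0) and the third matches nothing, so it is a false positive; precision drops while recall stays at 1.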

| Spatial Resolution | P/% | R/% | mAP50/% |
|---|---|---|---|
| 60 m | 84.18 | 90.87 | 90.87 |
| 20 m | 73.13 | 82.00 | 80.71 |
| 10 m | 45.05 | 63.61 | 49.79 |
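
mAP50 is the area under the precision-recall curve traced out by sweeping the confidence threshold, with detections matched at IoU 0.5 and the result averaged over classes; if smoke is the only class, as the task suggests, mAP50 reduces to the AP of that class. A minimal sketch of all-point interpolated AP from confidence-ranked (score, is_true_positive) pairs follows. It is one standard variant, not necessarily the one behind these tables: the YOLOv5 evaluation code, for instance, interpolates the curve at 101 recall points, so its numbers can differ slightly.

```python
def average_precision(ranked, n_gt):
    """All-point interpolated average precision.

    `ranked` holds (score, is_true_positive) pairs; `n_gt` is the number of
    ground-truth boxes.
    """
    if n_gt == 0:
        return 0.0
    ranked = sorted(ranked, key=lambda t: t[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, ok in ranked:  # lower the confidence threshold one detection at a time
        tp, fp = tp + ok, fp + (not ok)
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # Add sentinels, then make precision non-increasing from right to left.
    mrec = [0.0] + recalls + [1.0]
    mpre = [1.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum the rectangles wherever recall increases.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


# Toy ranking: 4 detections, 3 ground-truth smoke boxes.
print(average_precision([(0.9, True), (0.8, False), (0.7, True), (0.6, False)], n_gt=3))
```

In the toy ranking, recall reaches 1/3 at precision 1 and 2/3 at precision 2/3, and the third box is never found, so AP = 1/3 · 1 + 1/3 · 2/3 = 5/9 ≈ 0.556.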

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Liu, H.; Du, J.; Cao, B.; Zhang, Y.; Yu, W.; Zhang, W.; Zheng, Z.; Wang, Y.; Sun, Y.; et al. Detection of Smoke from Straw Burning Using Sentinel-2 Satellite Data and an Improved YOLOv5s Algorithm. Remote Sens. 2023, 15, 2641. https://doi.org/10.3390/rs15102641


Chicago/Turabian Style: Li, Jian, Hua Liu, Jia Du, Bin Cao, Yiwei Zhang, Weilin Yu, Weijian Zhang, Zhi Zheng, Yan Wang, Yue Sun, et al. 2023. "Detection of Smoke from Straw Burning Using Sentinel-2 Satellite Data and an Improved YOLOv5s Algorithm." Remote Sensing 15, no. 10: 2641. https://doi.org/10.3390/rs15102641