Spatial Validation of Spectral Unmixing Results: A Systematic Review

Abstract

1. Introduction

1.1. Background

1.2. Reviews on the Spectral Unmixing Procedure

1.3. Objectives

2. Materials and Methods

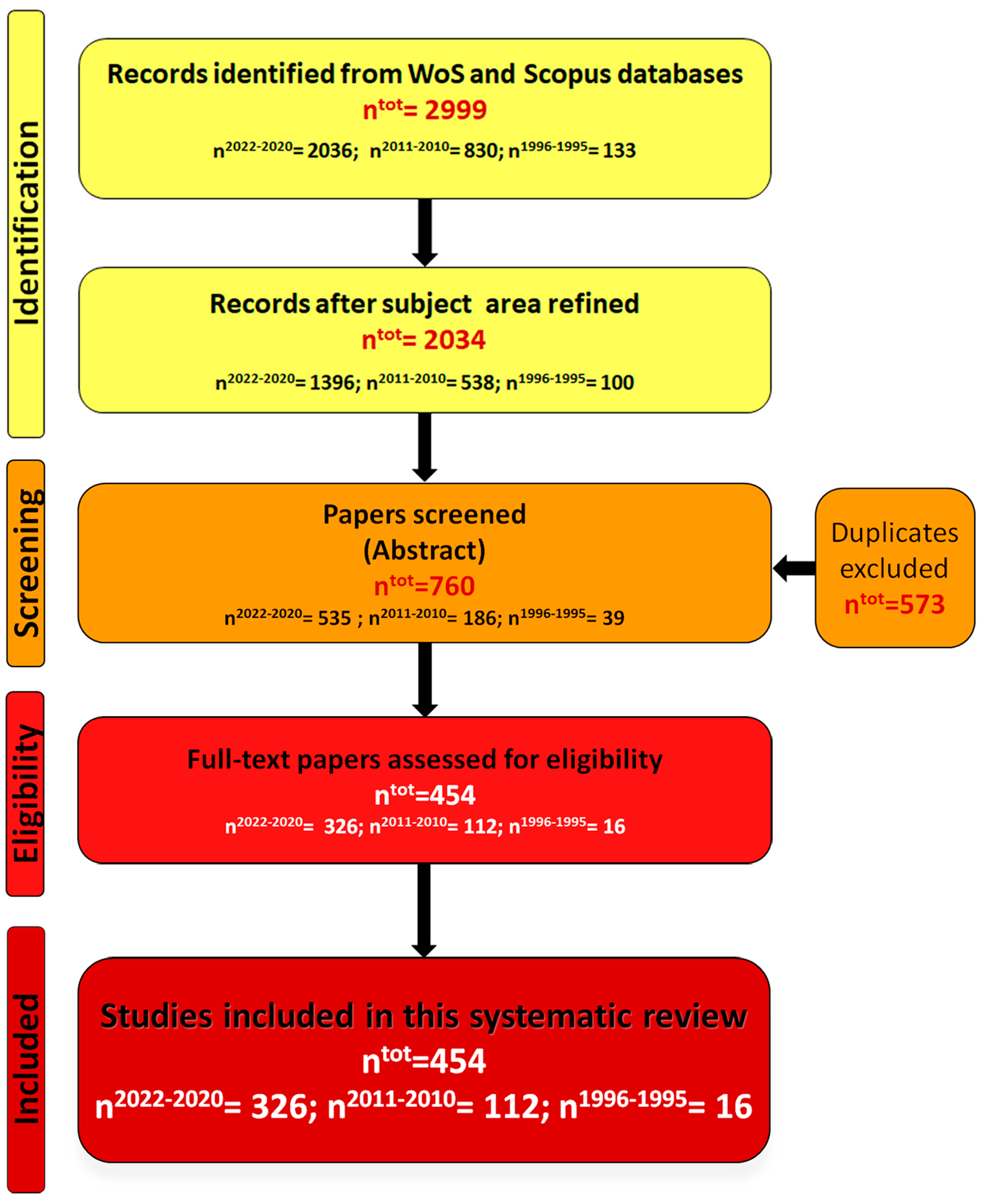

2.1. Identification Criteria

2.2. Screening and Eligible Criteria

3. Results

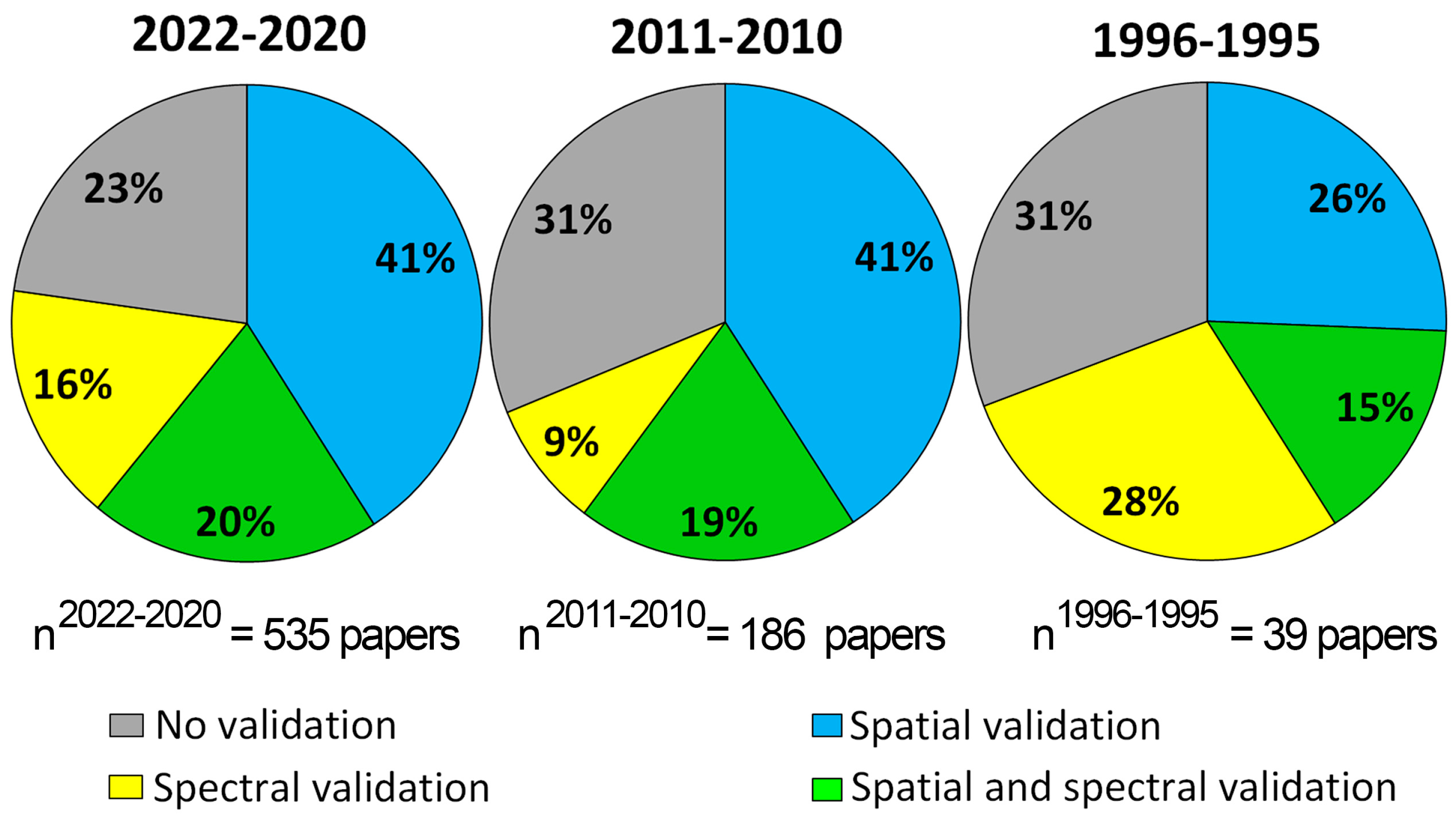

3.1. Spatial Validation of Spectral Unmixing Results

3.2. Remote Images

| Remote Image Analyzed | Time Series | Study Area Scale | Spatial Validation Carried Out | Spatial and Spectral Validation Carried Out |

|---|---|---|---|---|

| AMMIS * (0.5 m) [55] | No | Local | [56,57] | |

| APEX * (2.5 m) [58] | No | Local | [59] | |

| ASTER (15–30–90 m) [60] | No | Regional 1 | [61] | |

| ASTER (15–30 m) | Yes 2 | Local | [62] | |

| AVHRR (1–5 km) [63] | Yes 1 | Regional 1 | [64] | [65,66] |

| AVIRIS * (10/20 m) [67] | No | Local | [57,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87] | [88,89,90,91,92,93,94,95,96,97,98] |

| AVIRIS-NG * (5 m) [99] | No | Local | [100] | |

| CASI * (2.5 m) [101] | No | Local | [59,78] | |

| DESIS * (30 m) [102] | Yes 1 | Regional 1 | [103] | |

| DESIS * (30 m) | No | Local | [104] | |

| EnMAP * (30 m) [105] | No | Local | [69] | |

| GaoFen-6 (2–8–16 m) [106] | No | Regional 1 | [107] | |

| GaoFen-2 (3.2 m) | Yes 1 | Regional 1 | [108] | |

| GaoFen-1 (2–8–16 m) | No | Local | [109] | |

| HYDICE * (10 m) [110] | No | Local | [59,68,76,77,79,81,82,85,86,90,111] | [89,96,97] |

| Hyperion * (30 m) [112] | Yes 1 | Local | [75] | |

| Hyperion * (30 m) | No | Local | [113] | [114,115,116] |

| HySpex * (0.6–1.2 m) [104] | No | Local | [104,117] | |

| Landsat (15–30 m) [118] | Yes 1 | Continental 1 | [119] | |

| Landsat (15–30 m) | Yes 1 | Regional 1 | [108,120,121,122,123,124,125,126,127,128,129,130,131,132,133] | [134,135] |

| Landsat (15–30 m) | No | Regional 1 | [107,136,137] | |

| Landsat (15–30 m) | Yes 1 | Local | [138,139] | [62] |

| Landsat (15–30 m) | No | Local 2 | [140,141] | |

| Landsat (15–30 m) | No | Local | [109,142] | |

| M3 hyperspectral image * [143] | No | Moon | [143] | |

| MIVIS * (8 m) [144] | No | Local | [145] | |

| MERIS (300 m) [146] | Yes 1 | Local | [147] | |

| MODIS (0.5–1 km) [148] | Yes 1 | Continental 1 | [149] | |

| MODIS (0.5 km) | Yes 1 | Regional 1 | [108,150,151,152] | [137] |

| MODIS (0.5 km) | No | Local | [153] | |

| NEON * (1 m) [154] | No | Local | [154] | |

| PRISMA * (30 m) [155] | No | Local | [114,156,157,158] | |

| ROSIS * (4 m) [159] | No | Local | [56,57,78,81,85] | |

| Samson * (3.2 m) [59] | No | Local | [59,72] | [89,97] |

| Sentinel-2 (10–20–60 m) [160] | Yes 1 | Regional 1 | [108,133,161,162,163] | |

| Sentinel-2 (10–20–60 m) | No | Regional 1 | [136] | [107,164,165] |

| Sentinel-2 (10–20–60 m) | Yes 1 | Local | [166,167] | [168] |

| Sentinel-2 (10–20–60 m) | No | Local 2 | [104] | |

| Sentinel-2 (10–20–60 m) | No | Local | [169] | |

| Specim IQ * [170] | Yes 1 | Laboratory | [170] | |

| SPOT (10–20 m) [171] | No | Local 2 | [140] | |

| WorldView-2 (0.46–1.8 m) [172] | No | Local | [166] | |

| WorldView-3 (0.31–1.24–3.7 m) | No | Local | [166] | |

| Remote Image Analyzed | Time Series | Study Area Scale | Spatial Validation Carried Out | Spatial and Spectral Validation Carried Out |

|---|---|---|---|---|

| ASTER (15–30–90 m) | No | Regional 1 | [173] | |

| AVIRIS * | No | Local | [174,175,176,177,178,179,180,181,182,183,184,185,186,187,188,189,190,191,192,193,194,195,196,197,198,199,200,201] | [202,203,204,205,206,207,208,209,210,211,212,213,214,215,216,217,218,219,220,221,222,223,224,225] |

| AVIRIS-NG * (5 m) | No | Local | [226] | |

| CASI * | No | Local | [174,227] | |

| Simulated EnMAP * | Yes 1 | Regional 1 | [228] | |

| GaoFen-5 * (30 m) | No | Local | [229] | |

| HYDICE * (10 m) | No | Local | [192,230,231,232] | [204,212,214,216,218] |

| HyMap * (4.5 m) | Yes | Local | [233] | |

| Hyperion * (30 m) | No | Local | [212,234,235] | |

| Hyperion * (30 m) | Yes 1 | Local | [236,237] | |

| HySpex | No | Local | [238] | |

| Landsat (30 m) | Yes 1 | Regional 1 | [239,240,241,242,243,244] | |

| Landsat (30 m) | Yes 1 | Local 2 | [245,246,247,248,249,250,251,252,253] | |

| Landsat (30 m) | No | Local | [227,254,255,256,257,258,259] | |

| Landsat (30 m) | No | Regional 1 | [260] | |

| MODIS (0.5–1 km) | No | Local | [254,261] | |

| MODIS (0.5–1 km) | Yes 1 | Regional 1 | [262,263,264] | |

| PRISMA * (30 m) | No | Local | [265] | |

| ROSIS * (4 m) | No | Local | [191,200,266] | [217,267] |

| Samson * (3.2 m) | No | Local | [188,232,268] | [207,210,211,214,224,225,267] |

| Sentinel-2 (10–20–60 m) | No | Local | [255,258] | [226,269] |

| Sentinel-2 (10–20–60 m) | Yes 1 | Local | [243,253,270] | [229,271,272] |

| Sentinel-2 (10–20–60 m) | No | Regional 1 | [273] | |

| Sentinel-2 (10–20–60 m) | Yes 1 | Regional 1 | [244] | |

| UAV multispectral image [274] | No | Local | [274] | |

| WorldView-2 (0.46–1.8 m) | Yes 1 | Local | [275] | |

| WorldView-3 (0.31–1.24–3.7 m) | No | Local 2 | [276] | |

| ZY-1-02D * (30 m) [228] | No | Local | [228] | |

| Remote Image Analyzed | Time Series | Study Area Scale | Spatial Validation Carried Out | Spatial and Spectral Validation Carried Out |

|---|---|---|---|---|

| AISA Eagle II airborne hyperspectral scanner * [277] | No | Local | [277] | |

| ASTER (15–30–90 m) | No | Regional 1 | [278] | |

| ASTER (15–30–90 m) | Yes 1 | Local 2 | [279,280] | |

| AVIRIS * | No | Local | [281,282,283,284,285,286,287,288,289,290,291,292,293,294,295,296,297,298] | [299,300,301,302,303,304,305,306,307,308,309,310,311,312,313,314,315,316,317,318,319,320,321,322,323,324,325,326,327] |

| AVIRIS-NG * | No | Local | [291] | |

| AWiFS [328] | Yes 1 | Local 2 | [328] | |

| CASI * | No | Local | [329] | |

| Simulated EnMAP * (30 m) | No | Regional 1 | [330] | |

| GaoFen-1 WFV | Yes 1 | Local | [331] | |

| GaoFen-1 WFV | Yes 1 | Local 2 | [332] | [333] |

| GaoFen-2 | No | Local 2 | [332] | |

| HYDICE * (10 m) | No | Local | [292,293,298,334,335] | [299,307,309,310,316,318,321,322,324] |

| HyMAP * | No | Local 2 | [280] | |

| HyMAP * | No | Local | [336] | |

| HySpex * (0.7 m) | No | Local | [337] | |

| Hyperion * (30 m) | No | Local | [336] | [338] |

| Landsat (30 m) | Yes 1 | Local 2 | [332] | [280,339] |

| Landsat (30 m) | Yes 1 | Local | [252,340,341,342,343,344,345,346,347] | |

| Landsat (30 m) | Yes 1 | Continental 1 | [348] | |

| Landsat (30 m) | Yes 1 | Regional 1 | [349,350,351,352,353,354,355] | [356] |

| Landsat (30 m) | No | Regional 1 | [357] | |

| MODIS (0.5–1 km) | Yes 1 | Local | [340,358,359,360,361] | [333] |

| MODIS (0.5–1 km) | Yes 1 | Regional 1 | [362,363] | |

| MODIS (0.5–1 km) | Yes 1 | Local 2 | [364,365] | [279] |

| PlanetScope (3 m) [366] | Yes 1 | Local 2 | [366] | |

| PROBA-V (100 m) [367] | Yes 1 | Regional 1 | [353,368,369,370,371] | |

| ROSIS * (4 m) | No | Local | [285,372] | [373] |

| Samson * (3.2 m) | No | Local | [284,374,375] | [301,303,305,315,320,323,324] |

| Sentinel-2 (10–20–60 m) | No | Local 2 | [332,376] | [280,339] |

| Sentinel-2 (10–20–60 m) | Yes 1 | Local | [328,340,377,378,379,380,381,382] | [333,383] |

| Suomi NPP-VIIRS [354] | Yes 1 | Regional 1 | [353] | |

| UAV hyperspectral data * [384] | Yes 1 | Local | [384] | |

| WorldView-2 | Yes 1 | Local | [342] | |

| WorldView-2 | Yes 1 | Local 2 | [385] | |

| WorldView-3 | Yes 1 | Local 2 | [385] | |

| Remote Image Analyzed | Time Series | Study Area Scale | Spatial Validation Carried Out | Spatial and Spectral Validation Carried Out |

|---|---|---|---|---|

| AHS * [386] | No | Local | [386] | |

| ASTER | No | Local | [387,388,389] | |

| ASTER | Yes 1 | Local | [390,391] | |

| AVIRIS * | No | Local | [307,392,393,394,395,396,397,398,399,400,401,402,403] | [387,404,405,406,407,408,409,410,411,412,413,414,415,416,417] |

| CASI * | No | Local | [418] | |

| MERIS (300 m) | No | Local | [419] | |

| MODIS (0.5–1 km) | Yes 1 | Local | [420,421,422,423] | |

| HYDICE * | No | Local | [392,424] | [414,415,425] |

| HyMAP * | No | Local | [392,426] | [427] |

| Hyperion * (30 m) | No | Local | [387,428] | |

| HJ-1 * (30 m) [429] | No | Local | [429,430] | |

| Landsat (30 m) | Yes 1 | Local | [431,432,433] | [387] |

| Landsat (30 m) | No | Local | [434,435] | |

| Landsat (30 m) | Yes 1 | Local 2 | [436,437,438] | |

| Landsat (30 m) | No | Local 2 | [423,439] | |

| QuickBird (0.6–2.4 m) [440] | No | Local | [441,442] | |

| SPOT (10–20 m) | No | Local 2 | [439,441] | |

| Remote Image Analyzed | Time Series | Study Area Scale | Spatial Validation Carried Out | Spatial and Spectral Validation Carried Out |

|---|---|---|---|---|

| Airborne hyper-spectral image * (about 1.5 m) [443] | No | Regional 1 | [443] | |

| AHS * (2.4 m) | No | Local | [444] | |

| ASTER (15–30–90 m) | Yes 1 | Local | [445,446] | |

| ASTER (15–30–90 m) | Yes 1 | Regional 1 | [447] | |

| ATM (2 m) [101] | No | Local 2 | [101] | |

| AVHRR (1 km) | Yes 1 | Regional 1 | [448] | |

| AVIRIS * (20 m) | No | Local | [449,450,451,452,453,454,455,456,457] | [458,459,460,461,462,463] |

| CASI * (2 m) | No | Local | [101] | |

| CASI * | No | Laboratory | [464,465] | |

| CHRIS * (17 m) [466] | No | Local | [467] | |

| DAIS * (6 m) [464] | No | Local | [465] | |

| DESIS * | No | Local | [468,469] | |

| HYDICE * | No | Local | [455,470,471] | [458,463] |

| HyMAP * | No | Local | [471] | |

| Hyperion * (30 m) | No | Local | [472,473,474] | |

| HJ-1 * (30 m) | No | Local | [475,476] | |

| Landsat (30 m) | Yes 1 | Regional 1 | [477,478,479,480,481,482,483] | |

| Landsat (30 m) | No | Regional 1 | [484,485,486,487,488,489] | [490] |

| Landsat (30 m) | No | Local 2 | [491,492] | |

| Landsat (30 m) | No | Local | [493] | |

| MIVIS * (3 m) | No | Regional 1 | [494] | |

| MODIS (0.5–1 km) | Yes 1 | Regional 1 | [495] | |

| MODIS (0.5–1 km) | Yes 1 | Continental 1 | [496] | |

| QuickBird (2.4 m) | No | Local 2 | [491] | |

| QuickBird (2.4 m) | No | Local | [497,498] | |

| SPOT (10–20 m) | Yes 1 | Regional 1 | [480] | |

| SPOT (2.5–10–20 m) | No | Local 2 | [486,491,492] | |

| SPOT (2.5–10–20 m) | No | Local | [499] | [500] |

| Remote Image Analyzed | Time Series | Study Area Scale | Spatial Validation Carried Out | Spatial and Spectral Validation Carried Out |

|---|---|---|---|---|

| AVIRIS * | No | Local | [501,502] | [503] |

| GERIS * [504] | No | Local | [504] | |

| Landsat (30 m) | No | Local | [14,505] | [506] |

| SPOT (2.5–10–20 m) | No | Local | [507] | |

| Remote Image Analyzed | Time Series | Study Area Scale | Spatial Validation Carried Out | Spatial and Spectral Validation Carried Out |

|---|---|---|---|---|

| AVHRR (1–5 km) | Yes 1 | Regional 1 | [508] | |

| AVIRIS * (20 m) | No | Local | [509] | [510,511] |

| Landsat (30 m) | No | Local | [512] | [513] |

| MIVIS * (4 m) | No | Local | [514] | |

| MMR * [515] | Yes 1 | Local | [515] | |

3.3. Accuracy Metrics

3.4. Key Issues in the Spatial Validation

3.4.1. Validated Endmembers

3.4.2. Sampling Designs for the Reference Data

3.4.3. Sources of the Reference Data

3.4.4. Reference Fractional Abundance Maps

3.4.5. Validation of the Reference Data with Other Reference Data

3.4.6. Error in Co-Localization and Spatial Resampling

4. Conclusions

- The first key issue concerned the number of endmembers validated. Some authors focused on only one or two endmembers and spatially validated only these, a choice that facilitated regional- or continental-scale studies and/or multitemporal analyses. Notably, 8% of the eligible papers did not specify which endmembers were validated.

- The second key issue concerned the sampling designs for the reference data. The authors who analyzed hyperspectral images preferred to validate the whole study area, whereas those who analyzed multispectral images preferred to validate small, randomly distributed samples. Notably, 16% of the eligible papers did not specify the sampling designs for the reference data.

- The third key issue concerned the reference data sources. The authors who analyzed hyperspectral images primarily used existing reference maps and secondarily created reference maps from in situ data, whereas the authors who analyzed multispectral images created reference maps primarily from high-spatial-resolution images and secondarily from in situ data.

- The fourth key issue, perhaps the one most closely related to the spectral unmixing procedure, concerned the creation of the reference fractional abundance maps. Only 45% of the eligible papers created reference fractional abundance maps to spatially validate the retrieved fractional abundance maps, mainly from high-resolution images and secondarily from in situ data. The remaining 55% did not report using reference fractional abundance maps.

- The fifth key issue concerned the validation of the reference data with other reference data, which only 19% of the eligible papers addressed. The remaining 81% did not validate the reference data.

- The sixth key issue concerned the error in co-localization and spatial resampling, which only 6% of the eligible papers minimized and/or evaluated. The remaining 94% did not address this error.
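The fourth and sixth key issues can be illustrated with a minimal sketch of how a reference fractional abundance map might be derived from a high-resolution classified image and compared with retrieved abundances. This is not the procedure of any reviewed paper: the function names `reference_fractions` and `rmse`, the toy class map, and the estimated abundances are all hypothetical. The sketch also assumes that the two grids are perfectly co-registered and that each coarse pixel covers an exact integer block of fine pixels, which is precisely the assumption that co-localization and resampling errors violate in practice.

```python
from math import sqrt


def reference_fractions(class_map, block, n_classes):
    """Aggregate a high-resolution class map into per-class fractions
    for each coarse pixel (block x block fine pixels)."""
    rows, cols = len(class_map), len(class_map[0])
    if rows % block or cols % block:
        raise ValueError("map dimensions must be multiples of the block size")
    fractions = []
    for r0 in range(0, rows, block):
        row_out = []
        for c0 in range(0, cols, block):
            counts = [0] * n_classes
            for r in range(r0, r0 + block):
                for c in range(c0, c0 + block):
                    counts[class_map[r][c]] += 1
            row_out.append([n / (block * block) for n in counts])
        fractions.append(row_out)
    return fractions


def rmse(estimated, reference, k):
    """Root mean square error between estimated and reference
    abundances of class k over all coarse pixels."""
    squared = [
        (est[k] - ref[k]) ** 2
        for est_row, ref_row in zip(estimated, reference)
        for est, ref in zip(est_row, ref_row)
    ]
    return sqrt(sum(squared) / len(squared))


# Hypothetical 4 x 4 high-resolution class map (0 = vegetation, 1 = soil),
# aggregated to a 2 x 2 grid of coarse pixels.
class_map = [
    [0, 0, 1, 1],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
    [1, 1, 0, 0],
]
reference = reference_fractions(class_map, block=2, n_classes=2)

# Hypothetical abundances retrieved by spectral unmixing for the same grid.
estimated = [
    [[0.70, 0.30], [0.10, 0.90]],
    [[0.50, 0.50], [1.00, 0.00]],
]
print("Vegetation RMSE:", rmse(estimated, reference, k=0))
```

Shifting `class_map` by one fine pixel before aggregation and recomputing the RMSE gives a rough sense of how sensitive the comparison is to co-localization error.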

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Paper | Remote Image | Determined Endmembers | Validated Endmembers | Sources of Reference Data | Method for Mapping the Endmembers | Validation of Reference Data with Other Reference Data | Sample Sizes and Number of Small Sample Sizes | Sampling Designs | Reference Data | Estimation of Fractional Abundances | Error in Co-Localization and Spatial Resampling |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Abay et al. [62] | ASTER (15–30 m) Landsat OLI (30 m) | Goethite, hematite | All | Geological map | - | In situ observations | - | - | Reference map | - | - |

| Ambarwulan et al. [147] | MERIS (300 m) | Several total suspended matter concentrations | All | In situ data | - | - | 171 samples | - | - | - | - |

| Benhalouche et al. [156] | PRISMA (30 m) | Hematite, magnetite, limonite, goethite, apatite | All | In situ data | - | - | - | - | - | - | - |

| Bera et al. [120] | Landsat TM, ETM+, OLI (30 m) | Vegetation, impervious surface, soil | All | Google Earth images | Photointerpretation | Soil map | 101 polygons | Uniform | Reference fractional abundance maps | Partial | - |

| Brice et al. [121] | Landsat TM, OLI (30 m) | Water, wetland vegetation, trees, grassland | 1 | Planet images (4 m) | Photointerpretation | In situ observations | 427 wetlands | - | Reference fractional abundance map | Partial | - |

| Cao et al. [164] | Sentinel-2 (10–20–60 m) | Vegetation, high albedo impervious surface, low albedo impervious surface, soil | All | GaoFen-2 (0.8–3.8 m) | Photointerpretation | In situ observations | 300 squares (100 × 100 m) | Stratified random | Reference fractional abundance maps | Partial | Polygon size |

| Cavalli [114] | Hyperion (30 m) PRISMA (30 m) | Lateritic tiles, lead plates, asphalt, limestone, trachyte rock, grass, trees, lagoon water | All All | Panchromatic IKONOS image (1 m) Synthetic Hyperion and PRISMA images (0.30 m) | Photointerpretation The same spectral unmixing procedure performed to real images | In situ observations and shape files provided by the city and lagoon portal of Venice (Italy) | The whole study area | The whole study area | Reference fractional abundance maps | Full | Spatial resampling the reference maps and evaluation of the errors Evaluation of the errors in co-localization and spatial-resampling |

| Cavalli [145] | MIVIS (8 m) | Lateritic tiles, lead plates, vegetation, asphalt, limestone, trachyte rock | All All | Panchromatic IKONOS image (1 m) Synthetic MIVIS image (0.30 m) | Photointerpretation The same spectral unmixing procedure performed to real image | In situ observations and shape files provided by the city and lagoon portal of Venice (Italy) | The whole study area | The whole study area | Reference fractional abundance maps | Partial | Spatial resampling the reference maps and evaluation of the errors Evaluation of the errors in co-localization and spatial-resampling |

| Cerra et al. [104] | DESIS (30 m) HySpex (0.6–1.2 m) Sentinel-2 (10–20–60 m) | PV panels, 2 grass, 2 forest, 2 soil, 2 impervious surfaces | 1 | Reference map | - | - | The whole study area | The whole study area | - | - | - |

| Cipta et al. [137] | Landsat OLI (30 m) MODIS (500 m) | Rice, non-rice | All | In situ data | - | - | 10 samples | - | - | - | - |

| Compains Iso et al. [134] | Landsat TM, OLI (30 m) | Forest, shrubland, grassland, water, rock, bare soil | All | Orthophoto (≤ 0.5 m) | Photointerpretation | - | 50 squares (30 × 30 m) | Random | Reference fractional abundance maps | Partial | - |

| Damarjati et al. [157] | PRISMA (30 m) | A. obtusifolia, sand, wetland vegetations | All | In situ data | - | - | - | - | Reference maps | - | - |

| Dhaini et al. [70] | AVIRIS (20 m) | Andradite, chalcedony, kaolinite, jarosite, montmorillonite, nontronite Road, trees, water, soil Asphalt, dirt, tree, roof | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Ding et al. [122] | Landsat TM, OLI (30 m) | Vegetation, impervious surface, soil | All | Google satellite images (1 m) | Photointerpretation | - | 100 points | Random | Reference maps | - | - |

| Ding et al. [152] | MODIS (250–500 m) | Vegetation, non-vegetation | All | Landsat (30 m) | K-means unsupervised classified method | Google map | 5 Landsat images | Representative areas | Reference fractional abundance maps | Partial | Spatial resampling the reference maps |

| Fang et al. [71] | AVIRIS (20 m) | Road, 2 building, trees, grass, soil Road, trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Fernández-Guisuraga et al. [161] | Sentinel-2 (10–20 m) | Soil, green vegetation, non-photosynthetic vegetation | 1 | Photos | - | - | 60 in situ plots (20 × 20 m) | Stratified random | Reference fractional abundance map | Full | - |

| Gu et al. [98] | AVIRIS (20 m) | Vegetation, soil, road, river soil, water, vegetation | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Guan et al. [86] | AVIRIS (20 m) | Trees, water, dirt, road | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | HYDICE (10 m) | Asphalt, grass, trees, roofs | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Hadi et al. [68] | AVIRIS (20 m) | Trees, water, dirt, road | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | HYDICE (10 m) | Asphalt, grass, trees, roofs | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Hajnal et al. [169] | Sentinel-2 (10–20–60 m) | Vegetation, impervious surface, soil | All | APEX image (2 m), High-resolution land cover map | Support vector classification | - | APEX image | Representative areas | Reference fractional abundance maps | Full | Spatial resampling the reference maps |

| Halbgewachs et al. [123] | Landsat TM, OLI (30 m), TIRS (60 m) | Forest, non-Forest (non-photosynthetic vegetation, soil, shade) | 2 | Annual classifications of the Program for Monitoring Deforestation in the Brazilian Amazon (PRODES) | - | Official truth-terrain data from deforested and non-deforested areas prepared by PRODES | 494 samples | Stratified random | Reference maps | - | - |

| He et al. [56] | AMMIS (0.5 m) | Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | ROSIS (4 m) | Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Hong et al. [69] | AVIRIS (20 m) | Trees, water, dirt, road, roofs | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | EnMAP (30 m) | Asphalt, soil, water, vegetation | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Hu et al. [149] | MODIS (0.5–1 km) | Blue ice, coarse-grained snow, fresh snow, bare rock, deep water, slush, wet snow | 1 | Sentinel-2 images | The same spectral unmixing procedure performed to MODIS images | Five auxiliary datasets | Six test areas identified as blue ice areas in the Landsat-based LIMA product | Representative areas | Reference fractional abundance maps | Full | - |

| Hua et al. [72] | AVIRIS (10 m) Samson (3.2 m) | Dirt, road - | All All | Reference map Reference map | - | - | The whole study area The whole study area | The whole study area The whole study area | Reference maps Reference maps | - | - |

| Jamshid Moghadam et al. [115] | Hyperion (30 m) | Kaolinite/smectite, sepiolite, lizardite, chlorite | All | Geological map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Jin et al. [143] | M3 hyperspectral image | Lunar surface materials | All | Lunar Soil Characterization Consortium dataset | - | - | - | - | Reference fractional abundance maps | Full | - |

| Jin et al. [73] | AVIRIS (10 m) Samson (3.2 m) | Road, soil, tree, water Water, tree, soil | All All | Reference map Reference map | - | - | The whole study area The whole study area | The whole study area The whole study area | Reference maps Reference maps | - | - |

| Kremezi et al. [166] | Sentinel-2 (10–20–60 m) WorldView-2 (0.46–1.8 m) WorldView-3 (0.31–1.24–3.7 m) | PET-1.5 l bottles, LDPE bags, fishing nets | All | In situ data | - | - | 3 squares (10 × 10 m) | - | Reference map | - | - |

| Kuester et al. [111] | HYDICE (10 m) | Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Kumar et al. [113] | Hyperion (30 m) | Sal-forest, teak-plantation, scrub, grassland, water, cropland, mixed forest, urban, dry riverbed | All | Google Earth images | - | - | Same squares (30 × 30 m) | - | Reference fractional abundance maps | Partial | - |

| Lathrop et al. [124] | Landsat 8 OLI (15–30 m) | Mud, sandy mud, muddy sand, sand | All | In situ data | - | - | 805 circles (250 m radius) | Uniform | Reference fractional abundance map | Partial | - |

| Legleiter et al. [103] | DESIS (30 m) | 12 cyanobacteria genera, water | All | In situ data | - | - | - | - | - | - | - |

| Li et al. [75] | AVIRIS (10 m) | Vegetation, bare soil, vineyard, etc. | All | Field reference data | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | Hyperion (30 m) | - | All | Hyperion (30 m) image | - | - | The whole study area | The whole study area | Reference map | - | - |

| Li et al. [74] | AVIRIS (10 m) | Tree, water, dirt, road | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Li et al. [107] | GaoFen-6 (2–8–16 m) Landsat 8 OLI (15–30 m) Sentinel-2 (10–20–60 m) | Green vegetation, bare rock, bare soil, non-photosynthetic vegetation | All | Photo acquired with drones | Classification | In situ measurements of fractional vegetation cover and bare rock | 285 polygons | Random | Reference fractional abundance maps | Full | Polygon size |

| Li et al. [76] | AVIRIS (10 m) | Andradite, chalcedony, kaolinite, jarosite, montmorillonite, nontronite | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | HYDICE (10 m) | Asphalt, grass, trees, roofs | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Luo et al. [77] | AVIRIS (10 m) | Andradite, chalcedony, kaolinite, jarosite, montmorillonite, nontronite | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | HYDICE (10 m) | Asphalt, grass, trees, roofs | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Lyngdoh et al. [100] | AVIRIS (20 m) AVIRIS-NG (5 m) | Trees, water, dirt, road Red soil, black soil, crop residue, built-up areas, bituminous roads, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Ma & Chang [78] | AVIRIS (10 m) | - | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | Spatial resampling the reference maps |

| | CASI (2.5 m) | Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | ROSIS (4 m) | Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Matabishi et al. [469] | DESIS (30 m) | Roof materials | All | VHR images | - | Field validation data | 1053 ground reference points | - | Reference fractional abundance maps | Full | - |

| Meng et al. [163] | Sentinel-2 (10–20–60 m) | Vegetation, non-vegetation | 1 | Google Earth Pro image (1 m) | - | - | 10535 squares (10 × 10 m) | Stratified random | Reference fractional abundance maps | Partial | - |

| Nill et al. [125] | Landsat TM, OLI (30 m) | Shrubs, coniferous trees, herbaceous plants, lichens, water, barren surfaces | All | RGB camera (0.4–8 cm) Orthophotos (10–15 cm) | - | Field validation data | 216 validation pixels | Stratified random | Reference fractional abundance maps | Full | - |

| Ouyang et al. [126] | Landsat-8 OLI (30 m) | Impervious surface, evergreen vegetation, seasonally exposed soil | 1 | Land use and land cover maps (0.5 m) | - | - | 264 circles (1 km radius) | Random | Reference fractional abundance map | Partial | - |

| Ozer & Leloglu [167] | Sentinel-2 (10–20–60 m) | Soil, vegetation, water | All | Aerial images (30 cm) | - | - | - | - | Reference fractional abundance map | Partial | - |

| P et al. [61] | ASTER (90 m) | Iron Oxide | 1 | In situ data | - | - | 13 samples | - | - | - | - |

| Palsson et al. [59] | APEX | Asphalt, vegetation, water, roof | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | AVIRIS (10 m) | Road, soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | CASI (2.5 m) | Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | HYDICE (10 m) | Asphalt, grass, trees, roofs | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | Samson (3.2 m) | Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Pan & Jiang [65] | AVHRR (1–5 km) | Snow, bare land, grass, forest, shadow | All | Landsat 7 ETM+ image (30 m) | The same procedure performed to AVHRR image | - | Landsat image | Representative area | Reference fractional abundance maps | Full | - |

| Pan et al. [66] | AVHRR (1–5 km) | Snow, bare land, grass, forest, shadow | All | Landsat 5 TM image (30 m) | The same procedure performed to AVHRR image | The land use/land cover | Landsat image | Representative area | Reference fractional abundance maps | Full | - |

| Paul et al. [470] | DESIS (30 m) | PV panel, vegetation, sand | All | VHR image | - | - | - | Random | Reference fractional abundance maps | Full | - |

| Pervin et al. [154] | NEON (1 m) | Tall woody plants, herbaceous and low stature vegetation, bare soil | All | NEON AOP image (0.1 m) | Supervised classification | Drone imagery (0.01 m) | 13 sets of 10 pixels | Random | Reference fractional abundance maps | Partial | - |

| Qi et al. [89] | AVIRIS (10 m) | Road, soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | HYDICE (10 m) | Asphalt, grass, trees, roofs | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | Samson (3.2 m) | Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Rajendran et al. [116] | Hyperion (30 m) | Chlorophyll-a | 1 | WorldView-3 image (0.31–1.24–3.7 m) | - | Field validation data | - | - | Reference fractional abundance maps | Full | - |

| Ronay et al. [170] | Specim IQ | Weed species | All | In situ data | - | - | The whole study area | The whole study area | Reference fractional abundance maps | Full | - |

| Santos et al. [131] | Landsat MSS, TM, OLI (30 m) | Natural vegetation, anthropized area, burned, water | All | In situ data | - | - | samples | Random | Reference maps | - | - |

| Shaik et al. [158] | PRISMA (30 m) | Broadleaved forest, Coniferous forest, Mixed forest, Natural grasslands, Sclerophyllous vegetation | All | Land use and land cover map | - | Field validation data | - | - | Reference maps | - | - |

| Shao et al. [109] | Landsat-8 OLI (15–30 m) GaoFen-1 (2–8–16 m) | Vegetation, soil, impervious surfaces (high albedo, low albedo), water | 1 | GaoFen-1 image (2 m) | Object-based classification and photointerpretation of the results | Ground-based measurements | 300 pixels | Uniform | Reference fractional abundance map | Partial | - |

| Shi et al. [90] | AVIRIS (10 m) | Road, soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | HYDICE (10 m) | Road, roof, soil, grass, trail, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Shi et al. [79] | AVIRIS (10 m) | Road, soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | HYDICE (10 m) | Road, roof, soil, grass, trail, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Shimabukuro et al. [132] | Landsat TM, OLI (30 m) | Forest plantation | All | MapBiomas annual LULC map collection 6.0 | - | - | 20000 samples | Stratified random | Reference maps | Partial | - |

| Silvan-Cardenas et al. [139] | Landsat (30 m) | - | - | In situ data | - | - | samples | - | Reference maps | - | - |

| Sofan et al. [135] | Landsat-8 OLI (15–30 m) | Vegetation, smoldering, burnt area | All | PlanetScope images (3 m) | Photointerpretation | - | - | Random | - | - | - |

| Song et al. [153] | MODIS (0.5 km) | Water, urban, tree, grass | All | GlobalLand30 maps (GLC30) produced based on Landsat (30 m) | - | - | - | - | Reference fractional abundance maps | Full | - |

| | AVIRIS (10 m) | - | - | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | HYDICE (10 m) | Road, roof, soil, grass, trail, tree, water | - | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Sun et al. [80] | AVIRIS (10 m) | Andradite, chalcedony, kaolinite, jarosite, montmorillonite, nontronite | All | Reference map | - | - | The whole study area | The whole study area | Reference fractional abundance maps | Full | - |

| Sun et al. [165] | Sentinel-2 (10–20–60 m) | Rice residues, soil, green moss, white moss | 1 | Photos (1.5 m) | Photointerpretation | In situ observations | 30 samples | Random | Reference fractional abundance maps | Partial | - |

| Sutton et al. [119] | Landsat TM, OLI (30 m) | Drylands, semi-arid zone, arid zone | All | In situ data | - | - | 4207 samples | Non-uniform | - | - | - |

| Tao et al. [91] | AVIRIS (10 m) | Andradite, chalcedony, kaolinite, jarosite, montmorillonite, nontronite | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Tarazona Coronel [127] | Landsat TM, OLI (30 m) | Vegetation | 1 | Landsat (15–30 m) and Sentinel-2 (10–20–60 m) images | Photointerpretation | Official truth-terrain data from deforested and non-deforested areas prepared by PRODES | 300 samples | Stratified random | Reference fractional abundance maps | Partial | - |

| van Kuik et al. [133] | Landsat TM, OLI (30 m) Sentinel-2 (10–20–60 m) | Blowouts to sand, water, vegetation | 1 | Unoccupied Aerial Vehicle (UAV) orthomosaics (1 m) | Photointerpretation | - | - | - | Reference fractional abundance maps | Partial | - |

| Viana-Soto et al. [138] | Landsat TM, OLI (30 m) | Tree, shrub, background (herbaceous, soil, rock) | 1 | Orthophotos | Photointerpretation | Validation samples | - | Uniform | Reference fractional abundance maps | Full | - |

| Wang et al. [87] | AVIRIS (10 m) | - | - | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Wang et al. [142] | Landsat-8 OLI (30 m) | Impervious surfaces (high albedo, low albedo), forest, grassland, soil | 1 | QuickBird image (0.6 m) | Spectral angle mapping classification | In situ observations | 13,080 points | Random | Reference fractional abundance maps | Partial | - |

| Wang et al. [150] | MODIS (0.5 km) | Vegetation, non-vegetation | All | Landsat image (30 m) | K-means-based unsupervised classification | - | Landsat image | Representative area | Reference fractional abundance maps | Partial | - |

| Wang et al. [92] | AVIRIS (10 m) | Andradite, chalcedony, kaolinite, jarosite, montmorillonite, nontronite | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Wu & Wang [85] | AVIRIS (10 m) | Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | HYDICE (10 m) | Road, roof, soil, grass, trail, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | ROSIS (4 m) | Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Xia et al. [128] | Landsat ETM+, OLI (30 m) | High albedo, vegetation, low albedo, shadow | 2 | Google Earth images | Photointerpretation | - | 100 polygons (30 × 30 m) | Random | Reference fractional abundance maps | Partial | - |

| Xu et al. [162] | Sentinel-2 (10–20–60 m) | Impervious surface, water body, vegetation, bare land | All | Google Earth images | Photointerpretation | In situ observations | - | - | Reference fractional abundance maps | Partial | - |

| Yang et al. [57] | AMMIS (0.5 m) AVIRIS ROSIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Yang [81] | AVIRIS (20 m) | Vegetation, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | HYDICE (10 m) | Road, roof, soil, grass, trail, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | ROSIS (4 m) | Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Yang et al. [141] | Landsat-8 OLI (30 m) | Water, non-water | All | Google Earth images | - | - | The whole study area | The whole study area | Reference fractional abundance maps | Partial | - |

| Yi et al. [82] | AVIRIS (20 m) | Vegetation, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | HYDICE (10 m) | Road, roof, soil, grass, trail, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Yin et al. [82] | MODIS (0.250 km) | Water, soil | 1 | Landsat OLI image (30 m) | Modified normalized difference water index (MNDWI) | - | Landsat image | Representative area | Reference fractional abundance maps | Partial | Spatial resampling the reference maps |

| Zhang & Jiang [108] | Landsat (30 m) Sentinel-2 (20 m) MODIS (0.5 km) | Snow | 1 | GaoFen-2 image (3.2 m) | Supervised classification | - | - | - | Reference fractional abundance map | Partial | - |

| Zhang et al. [117] | HySpex (0.7 m) | Bitumen, red-painted metal sheets, blue fabric, red fabric, green fabric, grass | All | Reference map | - | - | - | - | Reference maps | Partial | - |

| Zhang et al. [83] | AVIRIS (20 m) | Andradite, chalcedony, kaolinite, jarosite, montmorillonite, nontronite | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Zhang et al. [93] | AVIRIS (10/20 m) | Dumortierite, muscovite, Alunite+muscovite, kaolinite, alunite, montmorillonite Tree, water, road, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Zhang et al. [129] | Landsat-8 OLI (30 m) | Vegetation, impervious surfaces | All | GaoFen-1 image (2–8 m) | Photointerpretation | - | 101 samples | Uniform | Reference fractional abundance maps | Partial | - |

| Zhang et al. [130] | Landsat-8 OLI (30 m) | Vegetation | All | GaoFen-1 image (2–8 m) | Object-based classification | - | 101 samples | Uniform | Reference fractional abundance map | Partial | - |

| Zhang et al. [88] | AVIRIS (10/20 m) | Cuprite, road, trees, water, soil Asphalt, dirt, tree, roof | All | Reference map Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Zhao et al. [84] | AVIRIS (10 m) | Road, trees, water, soil Asphalt, grass, tree, roof, metal, dirt | All | Reference map Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Zhao et al. [96] | AVIRIS (10 m) | Road, trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | HYDICE (10 m) | Road, roof, soil, grass, trail, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Zhao et al. [94] | AVIRIS (10 m) | Road, trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Zhao et al. [95] | AVIRIS (20 m) | Andradite, chalcedony, kaolinite, jarosite, montmorillonite, nontronite | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Zhao et al. [136] | Landsat-8 OLI (30 m) Sentinel-2 (10–20–60 m) | Impervious surfaces, vegetation, soil, water | 2 | WorldView-2 image (0.50–2 m) | - | - | 172 polygons (480 × 480 m) | Random | Reference fractional abundance maps | Full | - |

| Zhao et al. [140] | Landsat (30 m) Spot (30 m) | Vegetation | 1 | Fractional vegetation cover reference maps (provided by VALERI project and the ImagineS) | - | In situ measurements of LAI (provided by VALERI project and the ImagineS) | 445 squares (20 × 20 m or 30 × 30 m) | - | Reference fractional abundance map | Full | - |

| Zhao & Qin [168] | Sentinel-2 (10–20–60 m) | Vegetation, mineral area | All | In situ data | - | - | - | - | Reference fractional abundance maps | Partial | - |

| Zhu et al. [64] | AVHRR (1–5 km) | Snow, non-snow (bare land, vegetation, and water) | 1 | Landsat TM image (30 m) | Normalized difference snow index | - | Landsat image | Representative area | Reference fractional abundance map | Full | Spatial resolution variation |

| Zhu et al. [97] | AVIRIS (10 m) | Road, trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | HYDICE (10 m) | Road, roof, soil, grass, trail, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| | Samson (3.2 m) | Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference maps | - | - |

| Paper | Remote Image | Determined Endmembers | Validated Endmembers | Sources of Reference Data | Method for Mapping the Endmembers | Validation of Reference Data with Other Reference Data | Sample Sizes and Number of Small Sample Sizes | Sampling Designs | Reference Data | Estimation of Fractional Abundances | Error in Co-Localization and Spatial Resampling |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Azar et al. [174] | AVIRIS CASI | Trees, Mostly Grass Ground Surface, Mixed Ground Surface, Dirt/Sand, Road | All All | Reference map CASI image | - Photointerpretation | - | The whole study area | The whole study area | Reference map Reference map | - | - |

| Badola et al. [226] | AVIRIS-NG (5 m) Sentinel-2 (10–20–60 m) | Black spruce, birch, alder, gravel | All | In situ data | Photointerpretation | In situ observations | 29 plots | Random | Reference map | - | - |

| Bai et al. [175] | AVIRIS | Asphalt, Grass, Tree, Roof, Metal, Dirt | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Bair et al. [254] | Landsat MODIS | Snow, canopy | 1 | WorldView-2–3 images (0.34–0.55 m) | Photointerpretation | Airborne Snow Observatory (ASO) (3 m) | - | - | Reference fractional abundance map | Full | Spatial resampling the reference maps Evaluation of the errors in co-localization and spatial-resampling |

| Benhalouche et al. [230] | HYDICE (10 m) Samson (3.2 m) | Asphalt, grass, tree, roof Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Benhalouche et al. [265] | PRISMA (30 m) | Mineral | All | Geological map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Borsoi et al. [176] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Cerra et al. [238] | HySpex | Target | All | In situ data | - | Reference targets and Aeronet data | - | - | Reference fractional abundance maps | - | - |

| Chang et al. [229] | GF-5 (30 m) Sentinel 2 (10–20–60 m) ZY-1-02D (30 m) | - | All | In situ data | - | - | - | - | Reference fractional abundance maps | - | - |

| Chen et al. [239] | Landsat | - | All | UAV images | - | Ground survey data | - | - | Reference fractional abundance maps | - | - |

| Chen et al. [245] | Landsat | Vegetation, impervious surface, bare soil, and water | All | Google Earth images | - | - | - | - | Reference fractional abundance maps | - | - |

| Chen et al. [246] | Landsat | - | All | Google Earth images | - | Field surveys | 300 plots | Random | Reference fractional abundance maps | - | - |

| Converse et al. [247] | Landsat | Green vegetation, non-photosynthetic vegetation, soil | All | UAS images | - | Field surveys | Plots | - | Reference fractional abundance maps | Full | - |

| Di et al. [177] | AVIRIS | Cuprite | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Dong & Yuan [178] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Dong et al. [179] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Dong et al. [180] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Dutta et al. [248] | Landsat | Vegetation, impervious surface, bare soil | 1 | In situ data | - | Built-up density, urban expansion and population density of the area | - | - | Reference fractional abundance maps | Full | - |

| Ekanayake et al. [181] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Elrewainy & Sherif [182] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Feng & Fan [255] | Landsat (30 m) Sentinel 2 (10–20–60 m) | Vegetation, high-albedo impervious surface, low-albedo impervious surface, soil | All | In situ data | - | - | 18000 testing areas | Random | Reference fractional abundance maps | Full | - |

| Fernández-García et al. [256] | Landsat (30 m) | Arboreal vegetation, shrubby vegetation, herbaceous vegetation, rock and bare soil, water | All | Orthophotographs (0.25 m) | - | - | 250 plots (30 × 30 m) | Random | Reference fractional abundance maps | Full | Spatial resolution variation |

| Finger et al. [249] | Landsat (30 m) | - | All | California Department of Fish and Wildlife (CDFW) aerial survey canopy area product | - | - | - | - | Reference fractional abundance maps | Full | - |

| Gu et al. [183] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Guo et al. [184] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Gu et al. [185] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Han et al. [186] | AVIRIS | Asphalt, grass, tree, roof | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Han et al. [268] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Haq et al. [234] | Hyperion (30 m) | Clean snow, blue ice, refreezing ice, dirty snow, dirty glacier ice, firn, moraine, and glacier ice | All | In situ data | - | Sentinel-2 images | - | - | Reference fractional abundance maps | Full | - |

| He et al. [231] | HYDICE (10 m) MODIS (0.5–1 km) | - | All All | Reference map Finer Resolution Observation and Monitoring of Global Land Cover (30 m) | - | - | - 61 scenes | - | Reference fractional abundance maps | Full | - |

| He et al. [56] | ROSIS (4 m) | Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Hua et al. [187] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Hua et al. [188] | AVIRIS Samson (3.2 m) | - Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Huang et al. [189] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Jia et al. [190] | AVIRIS | Cuprite | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Ji et al. [235] | Hyperion (30 m) | Photosynthetic vegetation, non-photosynthetic vegetation, bare soil | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Jiji [250] | Landsat (30 m) | Heavy metals | All | In situ data | - | - | 17 samples | Random | Reference fractional abundance maps | Full | - |

| Jin et al. [267] | ROSIS (4 m) Samson (3.2 m) | Urban surface materials Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Kneib et al. [271] | Sentinel 2 (10–20–60 m) | - | All | Pleiades images (2 m) | Photointerpretation | - | - | - | Reference fractional abundance maps | Full | - |

| Kucuk & Yuksel [202] | AVIRIS | Cuprite | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Kumar & Chakravortty [191] | AVIRIS ROSIS (4 m) | - Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Li et al. [203] | AVIRIS | Cuprite | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Li et al. [192] | AVIRIS HYDICE (10 m) | Cuprite - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Li et al. [193] | AVIRIS | | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Li [194] | AVIRIS | Cuprite | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Li et al. [195] | AVIRIS | Cuprite | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Li et al. [196] | AVIRIS | Cuprite | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Li et al. [197] | AVIRIS | Cuprite | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Li et al. [251] | Landsat (30 m) | Impervious, vegetation, bare land, water | All | Google Earth images | - | Field surveys | 4296 sampled points | Random | Reference fractional abundance maps | Full | - |

| Li [257] | Landsat (30 m) | Impervious, soil, vegetation | All | Images | - | - | 200 sample points | Random | Reference fractional abundance maps | Full | - |

| Li et al. [204] | AVIRIS HYDICE (10 m) | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Li et al. [205] | AVIRIS | Cuprite | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Liu et al. [206] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Lui & Zhu [207] | AVIRIS Samson (3.2 m) | - Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Lombard & Andrieu [240] | Landsat | - | 3 | Google Earth images | Photointerpretation | - | 8490 sample points | Random | Reference fractional abundance maps | Full | - |

| Luo & Chen [260] | Landsat | Vegetation, impervious, soil | 1 | Gaofen-2 and WorldView-2 images | - | - | - | - | Reference fractional abundance maps | Full | Spatial resolution variation |

| Ma et al. [276] | WorldView-3 | Vegetation | All | Digital cover photography | - | Vegetation spectra | 30 sample points | - | Reference fractional abundance map | Full | - |

| Mudereri et al. [273] | Sentinel 2 (10–20–60 m) | - | All | Google Earth images | - | Field surveys | 1370 pixels | Random | Reference fractional abundance maps | Full | - |

| Muhuri et al. [258] | Landsat Sentinel 2 (10–20–60 m) | Snow cover | All | In situ data | - | Airborne Snow Observatory (ASO) (2 m) | - | - | Reference fractional abundance maps | Full | - |

| Okujeni et al. [228] | Simulated EnMAP | - | All | Google Earth images | - | Landsat images | 3183 sites | Random | Reference fractional abundance maps | Full | - |

| Ou et al. [233] | HyMap (4.5 m) | Soil organic matter, soil heavy metal | All | In situ data | - | - | 95 soil samples | Random | Reference fractional abundance maps | Full | - |

| Pan et al. [261] | MODIS (0.5–1 km) | Snow | All | Landsat images | MESMA | - | The whole study area | The whole study area | Reference fractional abundance maps | Full | - |

| Patel et al. [208] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Peng et al. [209] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Qin et al. [210] | AVIRIS Samson (3.2 m) | - Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Racoviteanu et al. [241] | Landsat | Debris-covered glaciers | All | Pléiades 1A image (2 m) RapidEye image (5 m) PlanetScope (3 m) | Photointerpretation | DEM | 151 test pixels | Random | Reference fractional abundance maps | Full | - |

| Rittger et al. [262] | MODIS (0.5–1 km) | Snow | All | Landsat images | - | - | - | Random | Reference fractional abundance maps | Full | Spatial resolution variation |

| Sall et al. [252] | Landsat (30 m) | Waterbodies | All | DigitalGlobe WorldView-2 (0.46 m) | - | National Agriculture Imagery Program (NAIP) | - | - | Reference fractional abundance maps | Full | - |

| Sarkar & Sur [173] | ASTER (15–30–90 m) | Bauxite minerals | All | In situ data | - | Petrological, EPMA, SEM-EDS studies DEM | - | - | Reference fractional abundance maps | Full | - |

| Seydi & Hasanlou [236] | Hyperion (30 m) | - | All | In situ data | - | - | 73505 samples | Random | Reference fractional abundance maps | Full | - |

| Seydi & Hasanlou [237] | Hyperion (30 m) | - | All | In situ data | - | - | - | - | Reference fractional abundance maps | Full | - |

| Shahid & Schizas [211] | AVIRIS Samson (3.2 m) | - Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Shen et al. [242] | Landsat (30 m) | Impervious, non-impervious surface | All | Land use map by the National Basic Geographic Information Center | - | - | - | - | Reference map | - | - |

| Shen et al. [270] | Sentinel 2 (10–20–60 m) | - | All | Google Earth images | Photointerpretation | - | 467 polygons | Random | Reference fractional abundance maps | Full | - |

| Shumack et al. [243] | Landsat (30 m) Sentinel 2 (10–20–60 m) | Bare soil, photosynthetic vegetation, non-photosynthetic vegetation | All | Orthorectified mosaic images (0.02 m) | Object-based image analysis | SLATS dataset of fractional ground cover surveys | 400 points per image | Random | Reference fractional abundance maps | Full | - |

| Song et al. [232] | HYDICE (10 m) Samson (3.2 m) | Road, trees, water, soil Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Soydan et al. [272] | Sentinel 2 (10–20–60 m) | - | All | Laboratory analysis of field collected samples through Inductive Coupled Plasma | - | Laboratory analysis of field collected samples through X-Ray Diffraction, and ASD spectral analysis | - | - | Reference fractional abundance maps | Full | - |

| Su et al. [212] | AVIRIS HYDICE (10 m) Hyperion (30 m) | - Road, trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Sun et al. [263] | MODIS (0.5–1 km) | Green vegetation, sand, saline, and dark surface | All | Google Earth images Field observations | - | - | 89 samples 10 plots (1 × 1 km) | Random | Reference fractional abundance maps | Full | Spatial resolution variation |

| Sun et al. [275] | WorldView-2 | Mosses, lichens, rock, water, snow | | In situ data | - | Photos and spectra | 32 plots (2 × 2 m) | Random | Reference fractional abundance maps | - | - |

| Tan et al. [198] | AVIRIS | Cuprite | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Vibhute et al. [213] | AVIRIS | Tree, soil, water, road | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Wan et al. [214] | AVIRIS HYDICE (10 m) Samson (3.2 m) | - Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Wang et al. [215] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Vermeulen et al. [244] | Landsat Sentinel 2 (10–20–60 m) | Soil, Photosynthetic Vegetation, Non-Photosynthetic Vegetation | All | Images, field data | - | - | (10 × 10 m) plots | - | Reference fractional abundance maps | - | - |

| Wang et al. [199] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Wang et al. [216] | AVIRIS HYDICE (10 m) | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Wang [217] | AVIRIS ROSIS (4 m) | - Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Wang et al. [200] | AVIRIS ROSIS (4 m) | - Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Wu et al. [253] | Landsat Sentinel 2 (10–20–60 m) | Bare soil, agricultural crop Water, vegetation, urban | All | Google Maps | Photointerpretation | - | - | - | Reference fractional abundance maps | Full | - |

| Xiong et al. [201] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Xiong et al. [218] | AVIRIS HYDICE (10 m) | - Road, trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Xu et al. [219] | AVIRIS | Cuprite | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Xu & Somers [269] | Sentinel 2 (10–20–60 m) | Vegetation, soil, impervious surface | All | Google Earth images | Object-oriented classification | - | - | - | Reference fractional abundance maps | Full | - |

| Yang et al. [264] | MODIS (0.5–1 km) | Vegetation, soil | All | GF-1, Google Earth images | - | - | 2044 samples (0.5 × 0.5 km) | Random | Reference fractional abundance maps | Full | - |

| Ye et al. [220] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Yu et al. [227] | Landsat (30 m) CASI | - | All | GF-1 image (2 m) GeoEye image (2 m) Reference map | Classification | - | The whole study area | The whole study area | Reference fractional abundance maps | Partial | - |

| Yuan et al. [274] | UAV multispectral image | - | All | In situ data | - | - | 67 samples | - | Reference fractional abundance maps | Full | - |

| Yuan & Dong [221] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Yuan et al. [222] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Zang et al. [259] | Landsat | Vegetation, soil, impervious surface | All | Google Earth Pro image | | Night light data, population data at township scale, administrative data | 120 samples | Random | Reference fractional abundance maps | Full | - |

| Zhang & Pezeril [223] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Zhao et al. [266] | ROSIS (4 m) | Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Zheng et al. [224] | AVIRIS Samson (3.2 m) | - Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Zhu et al. [225] | AVIRIS Samson (3.2 m) | - Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Paper | Remote Image | Determined Endmembers | Validated Endmembers | Sources of Reference Data | Method for Mapping the Endmembers | Validation of Reference Data with Other Reference Data | Sample Sizes and Number of Small Sample Sizes | Sampling Designs | Reference Data | Estimation of Fractional Abundances | Error in Co-Localization and Spatial Resampling |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Aalstad et al. [340] | Landsat MODIS Sentinel-2 | Shadow, cloudy, snow, snow-free | All | 305 terrestrial images | Classification | DEM | - | - | Reference fractional abundance maps | Full | - |

| Aldeghlawi et al. [334] | HYDICE | Urban surface materials | All | Reference maps | - | - | The whole study area | The whole study area | Reference map | - | - |

| Arai et al. [368] | PROBA-V | Vegetation, soil, shade | All | Landsat images (30 m) | Calculate Geometry function | Land use and land cover map produced by the MapBiomas Project and the Agricultural Census | 298 sampling units | Uniform | Reference fractional abundance maps | Full | Spatial resampling the reference maps |

| Bai et al. [281] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Benhalouche et al. [278] | ASTER | - | All | In situ data | - | - | 2 samples | - | Reference fractional abundance maps | Full | - |

| Binh et al. [341] | Landsat | - | All | Google Earth images | Photointerpretation | Field surveys | - | - | Reference fractional abundance maps | Full | Evaluation of the errors in co-localization and spatial-resampling |

| Borsoi et al. [283] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Borsoi et al. [282] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Borsoi et al. [176] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Bullock et al. [349] | Landsat | - | All | In situ data | - | - | 500 samples | Random | Reference fractional abundance maps | Full | - |

| Carlson et al. [377] | Sentinel-2 (10–20–60 m) | - | All | In situ data | - | Aerial photographs | - | Random | Reference fractional abundance maps | Full | - |

| Chen et al. [299] | AVIRIS HYDICE | - Road, trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Cheng et al. [543] | Hyperspectral | - | All | In situ data | - | - | - | Random | Reference fractional abundance maps | Full | Evaluation of the errors in co-localization and spatial-resampling |

| Cooper et al. [330] | Simulated EnMAP (30 m) | - | All | Google Earth images | Photointerpretation | - | 260 polygons (90 × 90 m) | Random | Reference fractional abundance maps | Full | - |

| Czekajlo et al. [350] | Landsat | - | All | Google Earth images | Photointerpretation | - | 1085 grids (6 × 6 m) | Random | Reference fractional abundance maps | Full | - |

| Dai et al. [351] | Landsat | - | All | In situ data | | DEM | 2223 sample sites | Random | | | |

| Das et al. [300] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Dou et al. [301] | AVIRIS Samson | - Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Drumetz et al. [329] | CASI | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Elkholy et al. [284] | AVIRIS Samson | - Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Fang et al. [285] | AVIRIS ROSIS | - Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Fathy et al. [286] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Fernández-Guisuraga et al. [342] | Landsat WorldView-2 | Photosynthetic vegetation, non-photosynthetic vegetation, soil and shade | All | In situ data | - | - | 85 (30 × 30 m) field plots 360 (2 × 2 m) field plots | Random | Reference fractional abundance maps | Full | Co-localization the maps |

| Firozjaei et al. [364] | MODIS | - | All | Landsat images | - | Annual primary energy consumption, Global gridded population density, Population size data, Normalized difference vegetation index (NDVI) Data, CO and NOx emissions | The whole study area | The whole study area | Reference fractional abundance maps | Full | - |

| Fraga et al. [378] | Sentinel-2 (10–20–60 m) | - | All | In situ data | - | | 15 sampling points | Random | Reference fractional abundance maps | Full | - |

| Gharbi et al. [545] | Hyperspectral | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Girolamo-Neto et al. [379] | Sentinel-2 (10–20–60 m) | - | All | In situ data | | | 461 field observations | Random | Reference fractional abundance maps | Full | - |

| Godinho Cassol et al. [369] | PROBA-V | Vegetation, soil, shade | All | Landsat images (30 m) | - | - | 622 sampling units | Uniform | Reference fractional abundance maps | Full | - |

| Han et al. [287] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| He et al. [356] | Landsat | - | All | In situ data | - | Photos | 118 field sites | Random | Reference fractional abundance maps | Full | - |

| Holland & Du [288] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Hua et al. [289] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Huang et al. [302] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Huechacona-Ruiz et al. [380] | Sentinel-2 (10–20–60 m) | - | All | In situ data | - | GPS | 288 sampling units | Random | Reference fractional abundance maps | Full | - |

| Imbiriba et al. [303] | AVIRIS Samson | - Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Jarchow et al. [358] | Landsat | - | All | WorldView-2 (0.5 m) | - | National Agriculture Imagery Program (NAIP) scene | 154 pods | Random | Reference fractional abundance maps | Full | - |

| Ji et al. [333] | GF1 Landsat Sentinel-2 (10–20–60 m) | - | All | In situ data | - | GPS | 111 surveyed fractional-cover sites | Random | Reference fractional abundance maps | Full | - |

| Jiang et al. [304] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Karoui et al. [290] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Khan et al. [352] | Landsat | - | All | In situ data | - | GPS, “Land Use, Land Use Change and Forestry Projects” | 108 circular sample plots | Random | Reference fractional abundance maps | Full | - |

| Kompella et al. [328] | AWiFS Sentinel-2 (10–20–60 m) | - | All | In situ data | - | GPS | 2 sampling areas | - | Reference fractional abundance maps | Partial | Co-localization of the maps |

| Laamrani et al. [343] | Landsat | - | All | Photographs | - | Field surveys, GPS | 70 (30 × 30 m) sampling areas | - | Reference fractional abundance maps | Full | Co-localization of the maps |

| Lewińska et al. [359] | MODIS | Soil, green vegetation, non-photosynthetic vegetation, shade | | Land cover classifications (30 m), Map of the Natural Vegetation of Europe | - | - | The whole study area | The whole study area | Reference fractional abundance maps | Full | - |

| Li et al. [305] | AVIRIS Samson | - Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Li [360] | Landsat | Vegetation, high albedo, low albedo, soil | All | Orthophotography images, Google Earth images | - | - | The whole study area | The whole study area | Reference fractional abundance maps | Full | - |

| Ling et al. [365] | MODIS | water and land | All | Radar altimetry water levels | - | - | The whole study area | The whole study area | Reference fractional abundance maps | Full | - |

| Liu et al. [332] | GF1 GF2 Landsat Sentinel-2 (10–20–60 m) | Water, vegetation, soil | All | Google Earth images | | Meteorological data | 129 sample points | | Reference fractional abundance maps | Full | - |

| Lu et al. [306] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Lymburner et al. [348] | Landsat | - | All | LIDAR survey | - | - | 100 (10 × 10 km) tiles | Random | Reference fractional abundance maps | Full | - |

| Lyu et al. [338] | Hyperion (30 m) | - | All | In situ data | - | Land use data | 36 plots | Random | Reference fractional abundance maps | Full | - |

| Markiet & Mõttus [277] | AISA Eagle II airborne hyperspectral scanner | - | - | In situ data | - | Site fertility class, tree species composition, diameter at breast height, median tree height, effective leaf area index calculated from canopy gap fraction | 250 plots | Random | Reference fractional abundance maps | Full | - |

| Mei et al. [307] | AVIRIS HYDICE | - Road, trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Moghadam et al. [336] | HyMap Hyperion (30 m) | - | All | Geological map | - | - | The whole study area | The whole study area | Reference fractional abundance maps | Partial | - |

| Montorio et al. [339] | Landsat Sentinel-2 (10–20–60 m) | - | All | Pléiades-1A orthoimage | - | - | 275/280 plots | Random | Reference fractional abundance maps | Full | - |

| Park et al. [546] | Hyperspectral | - | All | In situ data | - | - | - | - | Reference fractional abundance maps | Full | - |

| Patel et al. [372] | ROSIS | Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Peng et al. [297] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Peroni Venancio et al. [347] | Landsat | photosynthetic vegetation, soil/non-photosynthetic vegetation | All | In situ data | - | - | - | Random | Reference fractional abundance maps | Full | - |

| Qi et al. [312] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Qi et al. [308] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Qian et al. [309] | AVIRIS HYDICE | - Road, trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Qu & Bao [321] | AVIRIS HYDICE | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Quintano et al. [381] | Sentinel-2 (10–20–60 m) | Char, green vegetation, non-photosynthetic vegetation, soil, shade | All | Official burn severity (three severity levels) and fire perimeter maps provided by Portuguese Study Center of Forest Fires | - | - | The whole study area | The whole study area | Reference map | - | - |

| Rasti et al. [320] | AVIRIS Samson | - Trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Redowan et al. [371] | Landsat | - | All | Google Earth images | - | - | Representative areas | Representative areas | Reference fractional abundance maps | Full | - |

| Rathnayake et al. [293] | AVIRIS HYDICE | - Road, trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Salvatore et al. [385] | WorldView-2 WorldView-3 | - | All | In situ data | - | - | - | - | Reference fractional abundance maps | Full | - |

| Sall et al. [252] | Landsat | - | All | WorldView-2 (0.46 m) | | National Agriculture Imagery Program (NAIP) | 89 waterbodies | The whole study area | Reference fractional abundance maps | Full | - |

| Salehi et al. [280] | HyMap ASTER Landsat Sentinel-2 | - | All | In situ data | - | Geological map, X-ray fluorescence analysis | - | - | Reference fractional abundance maps | Full | - |

| Senf et al. [345] | Landsat | - | All | Aerial images | - | - | 360 sample areas | Random | Reference fractional abundance maps | Full | - |

| Shah et al. [313] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Shih et al. [354] | Landsat | Vegetation, Impervious, Soil | All | Google Earth VHR images | - | - | 107 (90 × 90 m) samples | Random | Reference fractional abundance maps | Partial | |

| Shimabukuro et al. [370] | PROBA-V | - | All | Sentinel-2 | - | - | Representative areas | Representative areas | Reference fractional abundance maps | Full | - |

| Shimabukuro et al. [353] | Landsat Suomi NPP-VIIRS PROBA-V | - | All | Sentinel-2 MODIS | - | Annual classifications of the Program for Monitoring Deforestation in the Brazilian Amazon (PRODES), Global Burned Area Products (Fire CCI, MCD45A1, MCD64A1) | - | - | Reference fractional abundance maps | Partial | - |

| Siebels et al. [319] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Sing & Gray [363] | Landsat | - | All | In situ data | - | - | 346 field plots | Random | Reference fractional abundance maps | Full | - |

| Sun et al. [331] | GF-1 | - | All | Google Earth images | - | - | 4500 pixels | Random | Reference fractional abundance maps | Full | - |

| Takodjou Wambo et al. [279] | ASTER Landsat | - | All | In situ data | - | Geological map, X-ray diffraction analysis | 7 outcrops, 53 rock samples | - | Reference fractional abundance maps | Full | - |

| Tao et al. [315] | AVIRIS Samson | - Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Thayn et al. [357] | Landsat | - | All | Low-altitude aerial imagery collected from a DJI Mavic Pro drone | - | - | Representative areas | Representative areas | Reference fractional abundance maps | Full | - |

| Tong et al. [311] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Topouzelis et al. [382] | Sentinel-2 (10–20–60 m) | - | All | Unmanned Aerial System images | - | - | Representative areas | Representative areas | Reference fractional abundance maps | Full | - |

| Topouzelis et al. [383] | Sentinel-2 (10–20–60 m) | - | All | Unmanned Aerial System images | - | - | Representative areas | Representative areas | Reference fractional abundance maps | Full | - |

| Trinder & Liu [344] | Landsat | - | All | Ziyuan-3 image, Gaofen-1 satellite image | - | - | - | - | Reference fractional abundance maps | Full | - |

| Uezato et al. [325] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Vijayashekhar et al. [292] | AVIRIS HYDICE | - Road, trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Wang et al. [375] | Samson | Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Wang et al. [366] | PlanetScope (3 m) | Green vegetation, non-photosynthetic vegetation | All | In situ data | | Field measurements of LAI, phenocam-based leafless tree-crown fraction, phenocam-based leafy tree-crown fraction | no | no | Reference fractional abundance maps | Full | Expansion of the windows of field sample size |

| Wang et al. [346] | Landsat | Water, urban, agriculture, forest | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Wang et al. [373] | ROSIS (4 m) | Urban surface materials | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Wang et al. [322] | AVIRIS HYDICE | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Wright & Polashenski [362] | MODIS (0.5 km) | - | All | WorldView-2 (0.46 m) WorldView-3 (0.31 m) | - | | Representative areas | Representative areas | Reference fractional abundance maps | Full | - |

| Xiong et al. [323] | AVIRIS Samson | - Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Xu et al. [295] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Xu et al. [296] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Xu et al. [316] | AVIRIS HYDICE | - Road, trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Xu et al. [318] | AVIRIS HYDICE | - Asphalt, trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Yang & Chen [294] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Yang et al. [327] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Yang et al. [298] | AVIRIS HYDICE | - Asphalt, trees, water, soil | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Yang et al. [374] | Samson | Soil, tree, water | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |

| Yin et al. [355] | Landsat | - | All | Google Earth images | - | - | 500 samples | Random | Reference fractional abundance maps | Full | - |

| Yuan et al. [314] | AVIRIS | - | All | Reference map | - | - | The whole study area | The whole study area | Reference map | - | - |