An Improved VMD-LSTM Model for Time-Varying GNSS Time Series Prediction with Temporally Correlated Noise

Abstract

1. Introduction

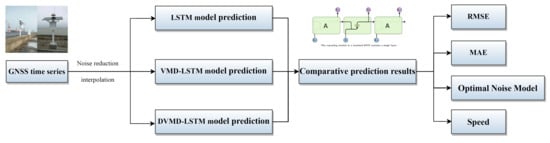

2. Principle and Method

2.1. Variational Mode Decomposition (VMD)

2.2. Long Short-Term Memory (LSTM)

2.3. Dual Variational Mode Decomposition Long Short-Term Memory Network Model (DVMD-LSTM)

2.4. Precision Evaluation Index

3. Data and Experiments

3.1. Data Sources

3.2. Data Preprocessing

3.3. VMD Parameter Discussion

4. Experimental Results and Analysis

4.1. DVMD-LSTM Prediction Results Analysis

4.2. DVMD-LSTM Model Prediction Results and Precision Analysis

4.3. Optimal Noise Model Research

4.3.1. Comparison of Optimal Noise Models under Each Prediction Model

4.3.2. Velocity Estimation Impact Analysis

5. Conclusions

- (1) The VMD-LSTM model predicts each IMF well after VMD decomposition but performs poorly on the residual component. The proposed DVMD-LSTM model applies VMD decomposition to the residual component to extract its fluctuation characteristics, which significantly improves the prediction accuracy of the residual component and, in turn, the overall prediction accuracy;

- (2) Compared to the initial VMD-LSTM hybrid model, the DVMD-LSTM model significantly improves prediction accuracy. Its RMSE values are reduced by an average of 9.71% in the E direction, 8.84% in the N direction, and 11.02% in the U direction. Its MAE values are reduced by an average of 9.17% in the E direction, 8.55% in the N direction, and 10.61% in the U direction. Its R2 values increase by an average of 20.68% in the E direction, 12.18% in the N direction, and 21.03% in the U direction. The DVMD-LSTM model outperforms the VMD-LSTM model at every measurement station, indicating better predictive accuracy, adaptability, and robustness;

- (3) Compared to the LSTM model, the DVMD-LSTM model improves the accuracy of optimal noise model identification by an average of 36.50% across all stations, reaching an overall accuracy of 79.17%. This indicates that the DVMD-LSTM model adequately accounts for the noise characteristics of the data during prediction. The velocities estimated under the optimal noise models show that the DVMD-LSTM model improves velocity prediction accuracy by an average of 33.02% compared to the VMD-LSTM model, further confirming its predictive performance.
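The data flow described in conclusion (1) can be sketched as follows. This is a minimal illustration only: `moving_average_split` and `persistence_forecast` are hypothetical stand-ins for VMD and a trained LSTM, chosen so the example runs with NumPy alone, and predicting the final leftover of the second decomposition is an assumption rather than something the text confirms.

```python
import numpy as np


def moving_average_split(signal, n_modes):
    """Placeholder decomposer (NOT VMD): peel off progressively less smooth
    components with moving averages. Returns (modes, residual)."""
    remainder = np.asarray(signal, dtype=float).copy()
    modes = []
    for k in range(n_modes):
        width = max(3, len(remainder) // 2 ** (k + 2))
        kernel = np.ones(width) / width
        smooth = np.convolve(remainder, kernel, mode="same")
        modes.append(smooth)
        remainder = remainder - smooth
    return modes, remainder


def persistence_forecast(series, horizon):
    """Placeholder predictor (NOT an LSTM): repeat the last observed value."""
    return np.full(horizon, series[-1], dtype=float)


def dual_decomposition_forecast(signal, horizon, n_modes=3, n_residual_modes=3,
                                decompose=moving_average_split,
                                predict=persistence_forecast):
    """Decompose, decompose the residual again, predict each part, and sum."""
    # Stage 1: split the series into modes plus a residual.
    modes, residual = decompose(signal, n_modes)
    components = list(modes)
    # Stage 2: decompose the residual again instead of predicting it directly.
    residual_modes, leftover = decompose(residual, n_residual_modes)
    components.extend(residual_modes)
    # Assumption: the leftover of the second decomposition is also predicted.
    components.append(leftover)
    # Predict every component separately and sum the component forecasts.
    return np.sum([predict(c, horizon) for c in components], axis=0)


# Synthetic series: linear trend plus two periodic terms.
t = np.arange(240)
demo_signal = 0.01 * t + np.sin(2 * np.pi * t / 60) + 0.3 * np.sin(2 * np.pi * t / 7)
demo_forecast = dual_decomposition_forecast(demo_signal, horizon=5)
```

Passing a real VMD routine as `decompose` and a trained sequence model as `predict` would keep the same structure; only the two callables change.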

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A


| Site | Longitude (°) | Latitude (°) | Time Span (Year) | Data Missing Rate |
|---|---|---|---|---|
| ALBH | −123.49 | 48.39 | 2000–2022 | 0.61% |
| BURN | −117.84 | 42.78 | 2000–2022 | 1.27% |
| CEDA | −112.86 | 40.68 | 2000–2022 | 2.74% |
| FOOT | −113.81 | 39.37 | 2000–2022 | 3.40% |
| GOBS | −120.81 | 45.84 | 2000–2022 | 3.65% |
| RHCL | −118.03 | 34.02 | 2000–2022 | 1.79% |
| SEDR | −122.22 | 48.52 | 2000–2022 | 0.49% |
| SMEL | −112.84 | 39.43 | 2000–2022 | 0.79% |

| Site | N Direction | E Direction | U Direction |
|---|---|---|---|
| ALBH | 3 | 6 | 3 |
| BURN | 4 | 4 | 3 |
| CEDA | 4 | 4 | 3 |
| FOOT | 3 | 8 | 5 |
| GOBS | 3 | 6 | 5 |
| RHCL | 7 | 3 | 3 |
| SEDR | 3 | 5 | 7 |
| SMEL | 7 | 3 | 5 |

| Site | ENU | LSTM RMSE | LSTM MAE | LSTM R2 | VMD-LSTM RMSE | I/% | VMD-LSTM MAE | I/% | VMD-LSTM R2 | I/% | DVMD-LSTM RMSE | I/% | DVMD-LSTM MAE | I/% | DVMD-LSTM R2 | I/% |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ALBH | E | 0.89 | 0.65 | 0.65 | 0.76 | 13.91 | 0.55 | 14.03 | 0.74 | 13.75 | 0.67 | 24.56 | 0.49 | 24.31 | 0.80 | 22.89 |
| BURN | E | 1.40 | 1.10 | 0.51 | 1.16 | 17.00 | 0.92 | 16.70 | 0.66 | 30.37 | 1.02 | 27.00 | 0.82 | 25.78 | 0.74 | 45.61 |
| CEDA | E | 1.73 | 1.35 | 0.70 | 1.37 | 20.75 | 1.06 | 21.18 | 0.81 | 16.00 | 1.21 | 29.82 | 0.94 | 30.32 | 0.85 | 21.83 |
| FOOT | E | 0.58 | 0.44 | 0.13 | 0.51 | 12.91 | 0.38 | 13.51 | 0.34 | 157.6 | 0.45 | 22.12 | 0.34 | 22.27 | 0.47 | 256.7 |
| GOBS | E | 1.00 | 0.70 | 0.86 | 0.86 | 13.74 | 0.58 | 16.08 | 0.90 | 4.10 | 0.77 | 23.53 | 0.52 | 24.50 | 0.92 | 6.66 |
| RHCL | E | 1.62 | 1.28 | 0.61 | 1.07 | 34.08 | 0.83 | 34.78 | 0.83 | 35.51 | 0.94 | 41.63 | 0.74 | 41.91 | 0.87 | 41.40 |
| SEDR | E | 0.68 | 0.53 | 0.66 | 0.58 | 15.00 | 0.45 | 15.13 | 0.76 | 14.23 | 0.50 | 27.07 | 0.39 | 26.76 | 0.82 | 24.00 |
| SMEL | E | 0.57 | 0.44 | 0.40 | 0.40 | 30.80 | 0.30 | 31.08 | 0.71 | 77.69 | 0.34 | 40.11 | 0.26 | 39.98 | 0.79 | 95.60 |
| ALBH | N | 0.73 | 0.57 | 0.62 | 0.55 | 24.53 | 0.43 | 24.23 | 0.78 | 26.18 | 0.49 | 32.77 | 0.38 | 32.53 | 0.83 | 33.33 |
| BURN | N | 1.39 | 1.11 | 0.55 | 1.07 | 22.74 | 0.85 | 23.37 | 0.73 | 32.59 | 0.95 | 31.65 | 0.76 | 32.13 | 0.79 | 43.08 |
| CEDA | N | 1.38 | 1.10 | 0.46 | 1.05 | 23.54 | 0.83 | 24.05 | 0.68 | 48.72 | 0.90 | 34.50 | 0.72 | 34.33 | 0.77 | 66.97 |
| FOOT | N | 0.59 | 0.43 | 0.48 | 0.39 | 33.45 | 0.29 | 31.81 | 0.77 | 59.65 | 0.34 | 41.35 | 0.26 | 39.95 | 0.82 | 70.25 |
| GOBS | N | 0.86 | 0.63 | 0.78 | 0.63 | 26.95 | 0.46 | 26.60 | 0.88 | 13.33 | 0.56 | 34.86 | 0.41 | 34.10 | 0.91 | 16.46 |
| RHCL | N | 3.14 | 2.54 | 0.46 | 1.71 | 45.59 | 1.31 | 48.53 | 0.84 | 81.39 | 1.58 | 49.55 | 1.21 | 52.28 | 0.86 | 86.19 |
| SEDR | N | 0.85 | 0.63 | 0.44 | 0.66 | 22.23 | 0.50 | 21.79 | 0.66 | 50.49 | 0.56 | 34.15 | 0.42 | 33.10 | 0.76 | 72.34 |
| SMEL | N | 0.55 | 0.42 | 0.45 | 0.47 | 15.62 | 0.35 | 16.54 | 0.61 | 35.42 | 0.41 | 26.53 | 0.30 | 26.91 | 0.70 | 56.60 |
| ALBH | U | 3.38 | 2.60 | 0.58 | 2.89 | 14.57 | 2.25 | 13.77 | 0.69 | 19.40 | 2.51 | 25.74 | 1.96 | 0.83 | 0.77 | 32.21 |
| BURN | U | 2.30 | 1.78 | 0.53 | 1.94 | 15.78 | 1.49 | 16.29 | 0.66 | 26.08 | 1.66 | 27.82 | 1.29 | 0.79 | 0.75 | 42.98 |
| CEDA | U | 2.65 | 2.03 | 0.51 | 2.27 | 14.48 | 1.73 | 15.08 | 0.64 | 25.63 | 1.96 | 26.09 | 1.49 | 0.77 | 0.73 | 43.28 |
| FOOT | U | 2.39 | 1.83 | 0.31 | 1.87 | 21.89 | 1.43 | 22.23 | 0.58 | 88.11 | 1.60 | 32.94 | 1.23 | 0.82 | 0.69 | 124.3 |
| GOBS | U | 2.92 | 2.22 | 0.62 | 2.28 | 22.17 | 1.72 | 22.48 | 0.77 | 24.56 | 1.99 | 32.04 | 1.53 | 0.91 | 0.82 | 33.52 |
| RHCL | U | 2.45 | 1.90 | 0.31 | 2.10 | 14.50 | 1.63 | 14.04 | 0.49 | 60.46 | 1.87 | 23.68 | 1.46 | 0.86 | 0.60 | 93.85 |
| SEDR | U | 3.33 | 2.62 | 0.65 | 2.37 | 28.68 | 1.87 | 28.79 | 0.82 | 26.63 | 1.96 | 41.19 | 1.54 | 0.76 | 0.88 | 35.44 |
| SMEL | U | 2.36 | 1.87 | 0.32 | 1.84 | 22.38 | 1.43 | 23.12 | 0.59 | 85.49 | 1.58 | 33.17 | 1.24 | 0.70 | 0.70 | 118.9 |
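The three metrics in the table above (RMSE, MAE, R2) and an improvement percentage can be computed as in the sketch below. The definitions of RMSE, MAE, and R2 are the standard ones; the `improvement_pct` helper is an assumption about how the I/% columns were obtained (relative change with respect to the LSTM baseline), since the formula is not given here.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root mean square error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def mae(y_true, y_pred):
    """Mean absolute error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return float(1.0 - ss_res / ss_tot)


def improvement_pct(baseline, value, higher_is_better=False):
    """Assumed definition of I/%: relative change versus the baseline.
    For error metrics (RMSE, MAE) a decrease counts as improvement;
    for R2 set higher_is_better=True so an increase counts."""
    change = value - baseline if higher_is_better else baseline - value
    return 100.0 * change / abs(baseline)


# Small hand-checkable example.
observed = np.array([1.0, 2.0, 3.0, 4.0])
predicted = np.array([1.0, 2.0, 3.0, 5.0])
example = (rmse(observed, predicted), mae(observed, predicted), r2(observed, predicted))
```

Applied to the rounded table entries, `improvement_pct(0.89, 0.76)` gives about 14.6, whereas the table lists 13.91 for ALBH in the E direction. The printed percentages were plausibly computed from unrounded metrics, so recomputing them from two-decimal values should be expected to differ slightly.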

| Site | ENU | Optimal Noise Model: TRUE | Optimal Noise Model: LSTM | Optimal Noise Model: VMD-LSTM | Optimal Noise Model: DVMD-LSTM |
|---|---|---|---|---|---|
| ALBH | E | RW + FN + WN | PL + WN | RW + FN + WN | RW + FN + WN |
| BURN | E | RW + FN + WN | PL + WN | PL + WN | RW + FN + WN |
| CEDA | E | RW + FN + WN | PL + WN | PL + WN | RW + FN + WN |
| FOOT | E | PL + WN | GGM + WN | FN + WN | PL + WN |
| GOBS | E | RW + FN + WN | PL + WN | RW + FN + WN | RW + FN + WN |
| RHCL | E | RW + FN + WN | GGM + WN | PL + WN | RW + FN + WN |
| SEDR | E | RW + FN + WN | PL + WN | PL + WN | RW + FN + WN |
| SMEL | E | FN + WN | PL + WN | FN + WN | FN + WN |
| ALBH | N | RW + FN + WN | PL + WN | RW + FN + WN | RW + FN + WN |
| BURN | N | FN + WN | PL + WN | PL + WN | PL + WN |
| CEDA | N | RW + FN + WN | PL + WN | PL + WN | RW + FN + WN |
| FOOT | N | FN + WN | GGM + WN | FN + WN | FN + WN |
| GOBS | N | RW + FN + WN | PL + WN | RW + FN + WN | RW + FN + WN |
| RHCL | N | RW + FN + WN | RW + FN + WN | PL + WN | PL + WN |
| SEDR | N | FN + WN | GGM + WN | RW + FN + WN | FN + WN |
| SMEL | N | FN + WN | PL + WN | FN + WN | FN + WN |
| ALBH | U | PL + WN | PL + WN | RW + FN + WN | FN + WN |
| BURN | U | PL + WN | GGM + WN | PL + WN | PL + WN |
| CEDA | U | PL + WN | PL + WN | RW + FN + WN | PL + WN |
| FOOT | U | PL + WN | PL + WN | FN + WN | FN + WN |
| GOBS | U | PL + WN | GGM + WN | PL + WN | FN + WN |
| RHCL | U | FN + WN | PL + WN | RW + FN + WN | FN + WN |
| SEDR | U | PL + WN | PL + WN | PL + WN | PL + WN |
| SMEL | U | PL + WN | PL + WN | FN + WN | PL + WN |
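One way to read the noise model table above is to count how often each prediction model yields the same optimal noise model as the true series. The sketch below does that tally; the rows are transcribed from the table, and treating "accuracy" as the fraction of exact matches over the 24 station components is an assumption about how the reported figure was obtained.

```python
# Short codes for the noise model labels used in the table.
A, P, F, G = "RW+FN+WN", "PL+WN", "FN+WN", "GGM+WN"

# (site, direction, TRUE, LSTM, VMD-LSTM, DVMD-LSTM), transcribed from the table.
ROWS = [
    ("ALBH", "E", A, P, A, A), ("BURN", "E", A, P, P, A),
    ("CEDA", "E", A, P, P, A), ("FOOT", "E", P, G, F, P),
    ("GOBS", "E", A, P, A, A), ("RHCL", "E", A, G, P, A),
    ("SEDR", "E", A, P, P, A), ("SMEL", "E", F, P, F, F),
    ("ALBH", "N", A, P, A, A), ("BURN", "N", F, P, P, P),
    ("CEDA", "N", A, P, P, A), ("FOOT", "N", F, G, F, F),
    ("GOBS", "N", A, P, A, A), ("RHCL", "N", A, A, P, P),
    ("SEDR", "N", F, G, A, F), ("SMEL", "N", F, P, F, F),
    ("ALBH", "U", P, P, A, F), ("BURN", "U", P, G, P, P),
    ("CEDA", "U", P, P, A, P), ("FOOT", "U", P, P, F, F),
    ("GOBS", "U", P, G, P, F), ("RHCL", "U", F, P, A, F),
    ("SEDR", "U", P, P, P, P), ("SMEL", "U", P, P, F, P),
]

MODELS = {"LSTM": 3, "VMD-LSTM": 4, "DVMD-LSTM": 5}  # column index per model


def match_rate(rows, column):
    """Percentage of rows whose predicted noise model equals the TRUE one."""
    matches = sum(1 for row in rows if row[column] == row[2])
    return 100.0 * matches / len(rows)


rates = {name: match_rate(ROWS, col) for name, col in MODELS.items()}
for name, rate in rates.items():
    print(f"{name}: {rate:.2f}%")
```

Under this exact-match definition the DVMD-LSTM column agrees with the true noise model in 19 of 24 cases, about 79.17%, which is consistent with the overall accuracy quoted in the conclusions.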

| Site | ENU | Trend (mm/Year): TRUE | Trend (mm/Year): LSTM | Trend (mm/Year): VMD-LSTM | Trend (mm/Year): DVMD-LSTM |
|---|---|---|---|---|---|
| ALBH | E | −0.041 | 0.020 | 0.055 | −0.044 |
| BURN | E | −0.108 | −0.005 | −0.051 | −0.116 |
| CEDA | E | −0.726 | −0.528 | −0.693 | −0.736 |
| FOOT | E | 0.02 | 0.015 | 0.001 | 0.009 |
| GOBS | E | 0.659 | 0.656 | 0.672 | 0.682 |
| RHCL | E | 0.811 | 0.666 | 0.805 | 0.783 |
| SEDR | E | 0.354 | 0.341 | 0.378 | 0.313 |
| SMEL | E | 0.026 | 0.009 | 0.023 | 0.021 |
| ALBH | N | 0.327 | 0.245 | 0.276 | 0.295 |
| BURN | N | 0.124 | 0.080 | 0.116 | 0.130 |
| CEDA | N | −0.065 | −0.041 | −0.227 | −0.042 |
| FOOT | N | 0.009 | 0.029 | −0.036 | 0.005 |
| GOBS | N | 0.063 | 0.078 | 0.029 | −0.020 |
| RHCL | N | 1.253 | 0.743 | 1.132 | 1.071 |
| SEDR | N | 0.199 | 0.170 | 0.212 | 0.195 |
| SMEL | N | 0.020 | −0.001 | −0.025 | 0.017 |
| ALBH | U | 0.383 | 0.204 | 0.131 | 0.268 |
| BURN | U | 0.241 | 0.144 | 0.238 | 0.216 |
| CEDA | U | 0.016 | 0.159 | 0.074 | 0.137 |
| FOOT | U | 0.194 | 0.125 | 0.194 | 0.202 |
| GOBS | U | 0.301 | 0.278 | 0.283 | 0.262 |
| RHCL | U | 0.298 | 0.206 | 0.367 | 0.264 |
| SEDR | U | 0.017 | 0.022 | 0.082 | 0.04 |
| SMEL | U | 0.195 | 0.182 | 0.206 | 0.183 |
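A simple way to compare the trend estimates above is the mean absolute deviation of each model's trend from the true trend. The sketch below uses the values transcribed from the table. This summary statistic is an assumption chosen for illustration; it is not necessarily the measure behind the velocity improvement quoted in the conclusions and should not be expected to reproduce that figure.

```python
import numpy as np

# Trend (mm/year) per station component, transcribed from the table.
# Columns: TRUE, LSTM, VMD-LSTM, DVMD-LSTM. Rows: 8 stations each for E, N, U.
TRENDS = np.array([
    [-0.041, 0.020, 0.055, -0.044], [-0.108, -0.005, -0.051, -0.116],
    [-0.726, -0.528, -0.693, -0.736], [0.020, 0.015, 0.001, 0.009],
    [0.659, 0.656, 0.672, 0.682], [0.811, 0.666, 0.805, 0.783],
    [0.354, 0.341, 0.378, 0.313], [0.026, 0.009, 0.023, 0.021],
    [0.327, 0.245, 0.276, 0.295], [0.124, 0.080, 0.116, 0.130],
    [-0.065, -0.041, -0.227, -0.042], [0.009, 0.029, -0.036, 0.005],
    [0.063, 0.078, 0.029, -0.020], [1.253, 0.743, 1.132, 1.071],
    [0.199, 0.170, 0.212, 0.195], [0.020, -0.001, -0.025, 0.017],
    [0.383, 0.204, 0.131, 0.268], [0.241, 0.144, 0.238, 0.216],
    [0.016, 0.159, 0.074, 0.137], [0.194, 0.125, 0.194, 0.202],
    [0.301, 0.278, 0.283, 0.262], [0.298, 0.206, 0.367, 0.264],
    [0.017, 0.022, 0.082, 0.040], [0.195, 0.182, 0.206, 0.183],
])

MODEL_NAMES = ["LSTM", "VMD-LSTM", "DVMD-LSTM"]

# Absolute deviation of each model's trend from the TRUE trend (column 0).
abs_dev = np.abs(TRENDS[:, 1:] - TRENDS[:, [0]])

# Mean absolute deviation per model over all 24 station components.
mean_abs_dev = dict(zip(MODEL_NAMES, abs_dev.mean(axis=0)))
for name, value in mean_abs_dev.items():
    print(f"{name}: {value:.4f} mm/year")
```

Other summaries, such as a per-direction mean or a relative error normalised by the true trend, would weight stations with near-zero trends very differently, so the choice of statistic matters when comparing models.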

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, H.; Lu, T.; Huang, J.; He, X.; Yu, K.; Sun, X.; Ma, X.; Huang, Z. An Improved VMD-LSTM Model for Time-Varying GNSS Time Series Prediction with Temporally Correlated Noise. Remote Sens. 2023, 15, 3694. https://doi.org/10.3390/rs15143694