Locality Preserving Property Constrained Contrastive Learning for Object Classification in SAR Imagery

Abstract

1. Introduction

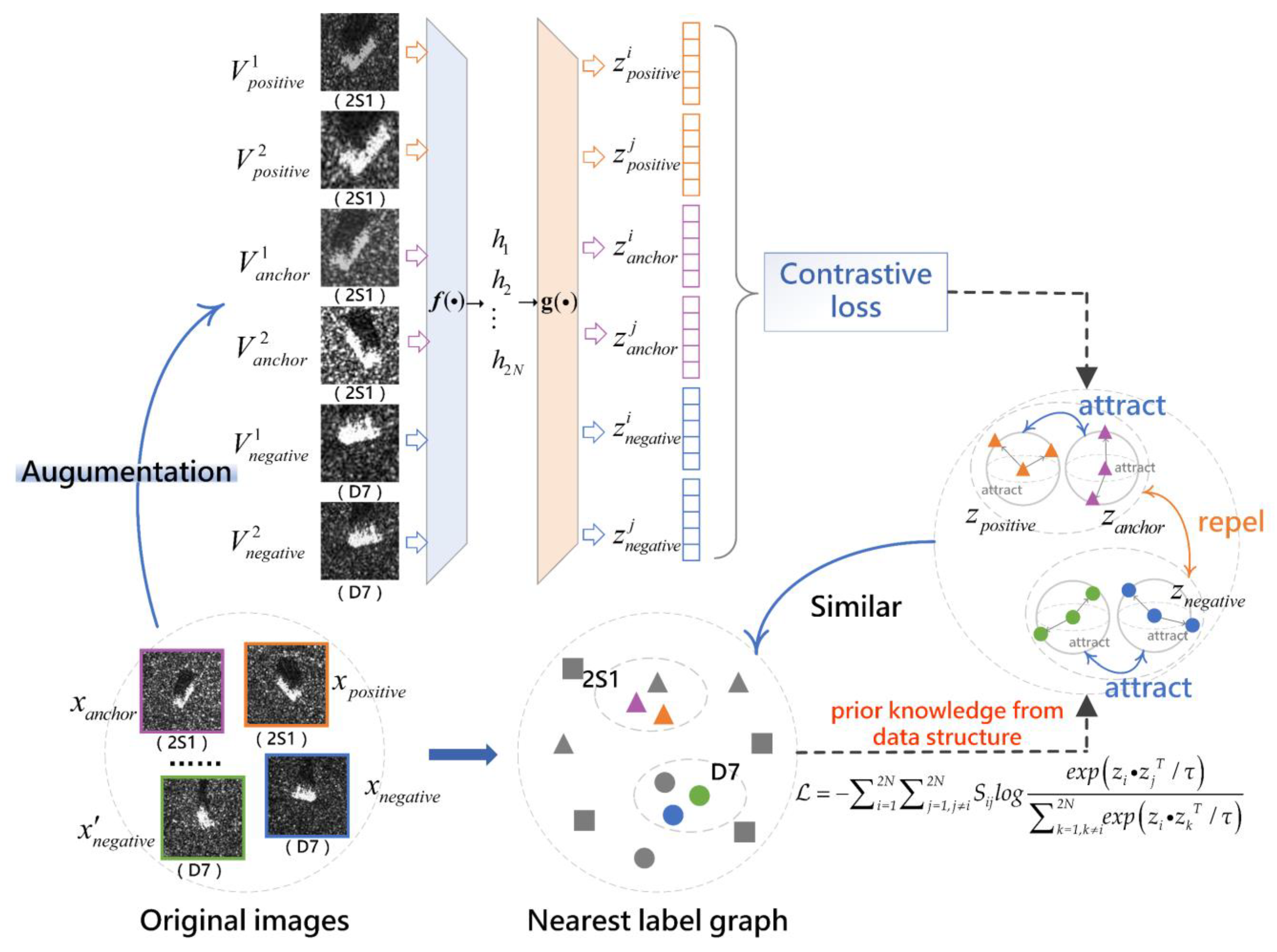

- Prior knowledge of local similarity was embedded into the InfoNCE loss, which was reformulated in cross-entropy form, yielding a debiased contrastive loss under which the intra-class relationship of nearby samples is maintained in the feature space.

- A multi-branch structure was devised to replace the traditional double-branch structure of CSL, significantly improving the sample diversity, the robustness of representations, and the stability of mutual information estimation.

- A self-attention pooling layer was introduced to replace the global average pooling in the standard ResNet encoders, providing adaptive extraction of informative features according to the characteristics of the inputs and thus avoiding the information loss caused by traditional hand-crafted pooling methods.
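The first contribution above can be illustrated with a minimal NumPy sketch. It writes the standard InfoNCE loss as a cross-entropy over similarity logits and then swaps the one-hot targets for soft targets built from an affinity matrix. The function names, the temperature value, and in particular the way the affinity matrix is turned into targets (`soft_targets_from_affinity`) are assumptions made for illustration; they are not the formulation used in the paper.

```python
import numpy as np

def log_softmax(logits):
    """Row-wise log-softmax, stabilised by subtracting the row maximum."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

def info_nce_cross_entropy(z_a, z_b, targets=None, temperature=0.5):
    """InfoNCE between two views, written as a cross-entropy.

    z_a, z_b: (N, D) L2-normalised embeddings of two views of one batch.
    targets:  (N, N) row-stochastic matrix; the identity gives plain InfoNCE.
    """
    logits = z_a @ z_b.T / temperature        # cosine similarities as logits
    if targets is None:
        targets = np.eye(len(z_a))            # only the matching view is positive
    return float(-(targets * log_softmax(logits)).sum(axis=1).mean())

def soft_targets_from_affinity(affinity):
    """Hypothetical helper (an assumption, not the paper's rule): add the
    identity to a non-negative affinity matrix and normalise each row."""
    t = affinity + np.eye(len(affinity))
    return t / t.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
z = rng.normal(size=(4, 8))
z /= np.linalg.norm(z, axis=1, keepdims=True)

# Toy affinity: samples 0 and 1 are treated as local neighbours.
affinity = np.array([[0, 1, 0, 0],
                     [1, 0, 0, 0],
                     [0, 0, 0, 0],
                     [0, 0, 0, 0]], dtype=float)

plain = info_nce_cross_entropy(z, z)
local = info_nce_cross_entropy(z, z, soft_targets_from_affinity(affinity))
print(f"plain InfoNCE: {plain:.4f}, affinity-weighted: {local:.4f}")
```

With an all-zero affinity matrix the soft targets reduce to the identity, so the sketch falls back to the plain InfoNCE loss; nonzero affinities stop neighbouring samples from being treated as pure negatives.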

2. Related Work

3. Background

3.1. Locality Preserving Projections

3.2. Contrastive Learning and InfoNCE Loss

4. Methodology

4.1. Locality Preserving Property Constrained InfoNCE Loss

4.2. Multi-Branch Contrastive Learning

Algorithm 1 The proposed LPPCL algorithm

input: batch size , matrix , constant , m, the structure of f, g, .

- for do
  - obtain the corresponding affinity matrix
  - draw augmentation functions ,
  - for in range ():
    - for in range (, ):
      - for all do
        - , , , ,
      - end for
    - end for
  - end for
  - update f and g to minimize
- end for
- return f and g
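The nested loops over branch indices in Algorithm 1 suggest that a loss term is accumulated over pairs of branches. The sketch below shows one way this could look, assuming that every unordered pair of the m augmented views contributes a plain InfoNCE term and that the terms are averaged. The pairing rule, the averaging, the temperature, and all function names are assumptions for illustration, not details confirmed by the algorithm listing.

```python
from itertools import combinations
import numpy as np

def normalise(x):
    """L2-normalise each row so that dot products are cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def info_nce(z_a, z_b, temperature=0.5):
    """Plain InfoNCE between two views: cross-entropy with diagonal targets."""
    logits = z_a @ z_b.T / temperature
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.diag(log_prob).mean())

def branch_pairs(m):
    """All unordered pairs (i, j) with i < j among m branches (assumed pairing)."""
    return list(combinations(range(m), 2))

def multi_branch_loss(views, temperature=0.5):
    """Average the pairwise InfoNCE terms over all branch pairs."""
    pairs = branch_pairs(len(views))
    total = sum(info_nce(views[i], views[j], temperature) for i, j in pairs)
    return total / len(pairs)

rng = np.random.default_rng(0)
base = rng.normal(size=(6, 16))   # stand-in for encoder outputs of one batch
# Four noisy copies play the role of four augmented branches (m = 4).
views = [normalise(base + 0.1 * rng.normal(size=base.shape)) for _ in range(4)]
loss = multi_branch_loss(views)
print(f"{len(branch_pairs(4))} branch pairs, mean loss {loss:.4f}")
```

With m = 2 the sketch reduces to the usual double-branch loss, which makes the multi-branch variant easy to compare against a standard contrastive baseline.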

4.3. Model Architecture

5. Experiments and Results

5.1. Experimental Settings

5.1.1. Dataset Description

5.1.2. Experiment Setup

5.2. Recognition Results under SOC

5.3. Recognition Results under EOC

5.4. Comparison with Reference Methods

5.5. Further Analyses of Ablation Study

5.6. Experiment with Noise Corruption

5.7. Experiment with Resolution Variance

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Type | Serial No. | Number of Samples (17°) | Number of Samples (15°) |
|---|---|---|---|
| 2S1 | b01 | 299 | 274 |
| BMP2 | 9563 | 233 | 194 |
| BMP2 | 9566 | 232 | 196 |
| BMP2 | c21 | 233 | 196 |
| BRDM2 | E-71 | 298 | 274 |
| BTR60 | K10yt7532 | 256 | 195 |
| BTR70 | c71 | 233 | 196 |
| D7 | 92v13015 | 299 | 274 |
| T62 | A51 | 299 | 273 |
| T72 | 132 | 232 | 196 |
| T72 | 812 | 231 | 195 |
| T72 | s7 | 233 | 191 |
| ZIL131 | E12 | 299 | 274 |
| ZSU234 | d08 | 299 | 274 |

| Class | 2S1 | BMP2 | BRDM2 | BTR60 | BTR70 | D7 | T72 | T62 | ZIL131 | ZSU234 | Accuracy (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 2S1 | 266 | 0 | 0 | 1 | 0 | 0 | 0 | 7 | 0 | 0 | 97.08 |
| BMP2 | 0 | 189 | 0 | 0 | 1 | 0 | 4 | 0 | 0 | 0 | 97.42 |
| BRDM2 | 2 | 0 | 269 | 0 | 0 | 2 | 0 | 0 | 1 | 0 | 98.18 |
| BTR60 | 0 | 0 | 3 | 184 | 0 | 2 | 0 | 5 | 0 | 1 | 94.36 |
| BTR70 | 0 | 0 | 0 | 0 | 196 | 0 | 0 | 0 | 0 | 0 | 100.00 |
| D7 | 0 | 0 | 0 | 0 | 0 | 272 | 0 | 1 | 0 | 1 | 99.27 |
| T72 | 0 | 8 | 0 | 0 | 0 | 0 | 188 | 0 | 0 | 0 | 95.92 |
| T62 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 269 | 1 | 1 | 98.53 |
| ZIL131 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 270 | 3 | 98.54 |
| ZSU234 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 2 | 271 | 98.91 |
| Total | | | | | | | | | | | 97.94 |
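The per-class and total figures in the confusion matrix above can be reproduced from the raw counts. The short NumPy sketch below assumes that rows are true classes and columns are predicted classes, so per-class accuracy is the diagonal entry divided by the row sum and total accuracy is the trace divided by the number of test samples. The variable names are illustrative.

```python
import numpy as np

classes = ["2S1", "BMP2", "BRDM2", "BTR60", "BTR70",
           "D7", "T72", "T62", "ZIL131", "ZSU234"]

# Counts copied from the confusion matrix; rows = true class, columns = predicted.
confusion = np.array([
    [266,   0,   0,   1,   0,   0,   0,   7,   0,   0],
    [  0, 189,   0,   0,   1,   0,   4,   0,   0,   0],
    [  2,   0, 269,   0,   0,   2,   0,   0,   1,   0],
    [  0,   0,   3, 184,   0,   2,   0,   5,   0,   1],
    [  0,   0,   0,   0, 196,   0,   0,   0,   0,   0],
    [  0,   0,   0,   0,   0, 272,   0,   1,   0,   1],
    [  0,   8,   0,   0,   0,   0, 188,   0,   0,   0],
    [  1,   0,   0,   1,   0,   0,   0, 269,   1,   1],
    [  1,   0,   0,   0,   0,   0,   0,   0, 270,   3],
    [  0,   0,   0,   0,   0,   0,   0,   1,   2, 271],
])

per_class = 100 * np.diag(confusion) / confusion.sum(axis=1)   # recall per class
overall = 100 * np.trace(confusion) / confusion.sum()          # total accuracy

for name, acc in zip(classes, per_class):
    print(f"{name:7s} {acc:6.2f}")
print(f"Total   {overall:6.2f}")
```

The row sums also match the 15° sample counts of the corresponding serial numbers in the dataset table (for example, 194 for BMP2 serial 9563 and 196 for T72 serial 132).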

| Class | 2S1 | BMP2 | BRDM2 | BTR60 | BTR70 | D7 | T72 | T62 | ZIL131 | ZSU234 | Accuracy (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 2S1 | 266 | 0 | 0 | 1 | 0 | 0 | 0 | 7 | 0 | 0 | 97.08 |
| BMP2 | 0 | 561 | 0 | 0 | 3 | 0 | 22 | 0 | 0 | 0 | 95.73 |
| BRDM2 | 2 | 0 | 269 | 0 | 0 | 2 | 0 | 0 | 1 | 0 | 98.18 |
| BTR60 | 0 | 0 | 3 | 184 | 0 | 2 | 0 | 5 | 0 | 1 | 94.36 |
| BTR70 | 0 | 0 | 0 | 0 | 196 | 0 | 0 | 0 | 0 | 0 | 100.00 |
| D7 | 0 | 0 | 0 | 0 | 0 | 272 | 0 | 1 | 0 | 1 | 99.27 |
| T72 | 0 | 24 | 0 | 0 | 0 | 0 | 558 | 0 | 0 | 0 | 95.88 |
| T62 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 269 | 1 | 1 | 98.53 |
| ZIL131 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 270 | 3 | 98.54 |
| ZSU234 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 2 | 271 | 98.91 |
| Total | | | | | | | | | | | 97.31 |
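The BMP2 and T72 rows of this second confusion matrix are consistent with pooling all three serial numbers of each type at 15°. That reading is an inference from the counts in the dataset table rather than something stated in the tables themselves. Under that assumption, the arithmetic works out as follows:

$$
\begin{aligned}
\text{BMP2:}\quad & 194 + 196 + 196 = 586 = 561 + 3 + 22, & \frac{561}{586} &\approx 95.73\% \\
\text{T72:}\quad & 196 + 195 + 191 = 582 = 24 + 558, & \frac{558}{582} &\approx 95.88\% \\
\text{Total:}\quad & \frac{266 + 561 + 269 + 184 + 196 + 272 + 558 + 269 + 270 + 271}{3202} = \frac{3116}{3202} & &\approx 97.31\%
\end{aligned}
$$

The remaining eight rows are identical to those of the first confusion matrix, so the drop in total accuracy from 97.94% to 97.31% comes entirely from the additional BMP2 and T72 variants.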

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, J.; Tian, S.; Feng, X.; Zhang, B.; Wu, F.; Zhang, H.; Wang, C. Locality Preserving Property Constrained Contrastive Learning for Object Classification in SAR Imagery. Remote Sens. 2023, 15, 3697. https://doi.org/10.3390/rs15143697
