1. Introduction

Sea ice is primarily distributed in the polar regions and exerts a profound impact on global climate and environmental change by affecting the exchange of energy and material between the ocean and the atmosphere and by redistributing salt within the ocean [1]. In the Arctic region, sea ice variability has significant implications for the normal operation of Arctic shipping routes [2]. Therefore, sea ice monitoring not only contributes to scientific research on the polar and global climate and environment but also has important practical implications for maritime shipping and polar expeditions. As science and technology have evolved, satellite remote sensing has become a better option than traditional sea ice observation methods, such as in situ and ice station observations, because it extends monitoring coverage and can observe sea ice at high spatial and temporal resolution [3].

Satellite remote sensing can monitor sea ice in the visible, infrared, and microwave regions of the electromagnetic spectrum [4]. Visible and infrared instruments, such as the Operational Linescan System (OLS), the Moderate Resolution Imaging Spectroradiometer (MODIS), and the Visible Infrared Imaging Radiometer Suite (VIIRS), are limited by their operating hours and by cloud cover, which constrains their capability for sea ice monitoring. By contrast, sensors operating in the microwave band can acquire observations during both day and night and in all weather conditions [5]. Synthetic Aperture Radar (SAR), a special type of active microwave imaging radar, benefits from advanced technology and complex data, providing more detailed information on sea ice [4]. Notable SAR satellites include NASA’s Seasat SAR; the European Space Agency’s (ESA) ERS-1, ERS-2, and Sentinel-1; the Canadian Space Agency’s (CSA) RADARSAT-1 and RADARSAT-2; and China’s Gaofen-3 (GF-3). Over time, SAR satellites have developed from the original single-polarization L-band systems to the current L-, C-, and X-band systems with dual and quad polarization, providing a substantial amount of data and a rich source of information for sea ice monitoring.

Sea ice classification from SAR data serves as the foundation for both sea ice research and operational ice services [6]. Its primary objective is to identify the main sea ice features related to ice types and surface roughness and to group them into predetermined categories [7]. The sea ice categories commonly used in current research, which are also the standard for sea ice classification, are defined by the World Meteorological Organization (WMO). According to the formation and development of sea ice, the WMO classifies sea ice into three main categories: (1) ice less than 30 cm thick (including new ice (NI), young ice (YI), etc.); (2) ice 30 cm–2 m thick, known as first-year ice (FI) (including thin first-year ice and medium first-year ice); (3) old ice (OI) (including second-year (SY) and multi-year ice (MY)) [8].

Initial SAR sea ice classification methods were based primarily on backscatter coefficients and texture features for single polarization or simple combinations of polarization bands [9,10]. However, the backscattering coefficient of sea ice can be affected by several factors, such as small-scale surface roughness and large-scale atmospheric circulation [11]. Thus, the backscattering coefficients of different sea ice types are similar under certain imaging conditions, making it difficult to distinguish between them [12]. For the textural features of sea ice, although the grey level co-occurrence matrix (GLCM) and Markov Random Field (MRF) have been widely used with good results [13,14,15], the classification accuracy depends on scale factors such as the window size, and performance becomes unstable when images are scaled or rotated.

Compared to SAR in single-polarization mode, polarimetric SAR (PolSAR) provides additional information on the backscattering mechanisms and physical characteristics of natural surfaces, allowing for a more comprehensive observation and analysis of targets from multiple perspectives [16]. Polarimetric decomposition has become the dominant research direction in the analytical use of PolSAR information. Since the concept of target decomposition was introduced [17], polarimetric decomposition methods have been divided into two types: decompositions of the coherent scattering matrix and eigenvector decompositions of the coherency or covariance matrix based on target scattering characteristics [18]. Polarization decomposition has also been applied to sea ice classification. Scheuchl et al. [19,20] used H-α polarization decomposition with the Wishart classifier for sea ice classification. Singha et al. [21] extracted the polarization features by H-A-α decomposition and analyzed their classification performance in different bands to ultimately achieve a four-class ice and water classification. In addition, Moen et al. [22,23] used C-band quad-polarization SAR data for sea ice classification with an improved Freeman decomposition.
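As an illustration of the eigenvector-based decompositions mentioned above, the following minimal sketch computes the entropy (H), anisotropy (A), and mean alpha angle (α) from a single 3×3 coherency matrix using the textbook definitions. It is not the processing chain of the cited studies; the function name `h_a_alpha` and the assumption that the input is an already multi-looked Hermitian coherency matrix in the Pauli basis with non-zero total power are ours.

```python
import numpy as np

def h_a_alpha(T3):
    """Return entropy H, anisotropy A and mean alpha angle (degrees)
    for one 3x3 Hermitian coherency matrix (assumed non-zero)."""
    T3 = np.asarray(T3, dtype=complex)
    lam, vec = np.linalg.eigh(T3)            # eigenvalues in ascending order
    lam = np.clip(lam[::-1], 0.0, None)      # descending, clip tiny negatives
    vec = vec[:, ::-1]                       # reorder eigenvectors to match
    p = lam / lam.sum()                      # pseudo-probabilities
    nz = p > 0
    entropy = -np.sum(p[nz] * np.log(p[nz])) / np.log(3)   # log base 3
    denom = lam[1] + lam[2]
    anisotropy = (lam[1] - lam[2]) / denom if denom > 0 else 0.0
    # alpha_i is the arccosine of the magnitude of the first eigenvector component
    alpha_i = np.arccos(np.clip(np.abs(vec[0, :]), 0.0, 1.0))
    alpha = np.degrees(np.sum(p * alpha_i))
    return entropy, anisotropy, alpha
```

A pure surface-type scatterer (all power in the first Pauli component) gives H = 0 and α = 0°, whereas three equal eigenvalues give H = 1, the fully depolarized case.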

Several sea ice classification algorithms, including random forest [24], decision tree [25], and image segmentation [26], have demonstrated reliable results. Additionally, the support vector machine (SVM) has been widely applied to both sea ice type and ice/water classification [25,27,28]. However, the continuous development of deep learning in recent years has showcased its remarkable superiority over traditional physically or statistically based algorithms for extracting image information [29]. Deep learning frameworks have been extensively used in remote sensing image information mining, including sea ice detection, monitoring, and classification. The convolutional neural network (CNN) is a representative deep neural network (DNN) that exhibits excellent performance in deep feature extraction and image classification [30]. Several advanced CNN structures, such as AlexNet [31], VGGNet [32], GoogLeNet [33], ResNet [34], and DenseNet [35], have been proposed. In general, CNN-based classification strategies include using pretrained CNNs as feature extractors, fine-tuning pretrained CNNs, or training CNNs from scratch [36]. For instance, the authors of [37] employed CNNs for ice/water classification with Sentinel-1 SAR data and proposed a modified VGG-16 network, trained from scratch, for sea ice classification with Sentinel-2 cloud-free optical data. Tianyu et al. [38] proposed MSI-ResNet for Arctic sea ice classification with GF-3 C-band SAR data and compared it with a classical SVM classifier. Zhang et al. [39] built a Multiscale MobileNet (MSMN) based on MobileNetV3 for sea ice classification with GF-3 dual-polarization SAR data and achieved higher accuracy than traditional CNN and ResNet18 models.

Furthermore, a large body of current research addresses the classification of SAR images using CNNs, and many novel CNN structures and training methods have been proposed [40,41,42]. The central point is that a CNN is designed primarily to learn spatial hierarchies of features automatically and adaptively [43]. Most current sea ice classification studies simply use a combination of backscatter coefficients from different polarization bands. However, for complex-valued polarimetric SAR imagery, amplitude and phase information are equally vital. Although polarization decomposition provides valuable information for understanding the physics of the SAR image [44], it does not provide sufficient frequency information to the CNN. To address this issue, Huang et al. [45] proposed Deep SAR-Net, which extracts spatial features with a CNN framework and learns the physical properties of objects, such as buildings, vegetation, and agriculture, by joint time-frequency analysis of complex-valued SAR images. Their method outperforms CNN models based only on intensity information.

The receptive field of a CNN is crucial for the accurate detection and classification of objects in images, especially large features such as sea ice [

46]. Thus current CNN-based studies on sea ice classification usually focus on the structure of neural networks, proposing many complex network models, which often require the support of a large number of samples [

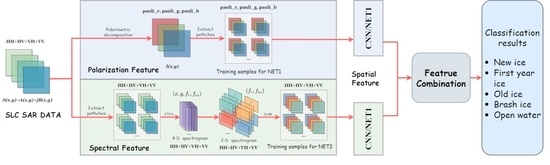

37,

38,

39]. Moreover, for the single-look complex (SLC) quad-polarization SAR data, feature extraction is insufficient in current studies on sea ice classification. In light of these limitations and inspired by existing studies, we propose a multi-featured sea ice classification method based on a convolutional neural network, where the multi-feature classification method simultaneously learns the spatial texture features of the sea ice along with backscattering information, including its physical properties. The main highlights of this paper are:

(1) We utilized polarization decomposition and joint time-frequency analysis to extract multi-features of the sea ice.

(2) We built a CNN structure that learns and fuses multi-features, including spatial texture features of sea ice and physical properties of backscatter for sea ice classification.

(3) The experimental results show that the multi-featured approach combines the advantages of sea ice polarization features and spectrogram features on the basis of learning the spatial features of sea ice, which improves the accuracy of sea ice classification.

5. Discussion

In this study, we utilized 30 scenes of ALOS PALSAR SLC satellite data from the Arctic region. SAR is widely adopted for sea ice monitoring because it works day and night and in all weather conditions. SAR records the scattered echo characteristics of sea ice, and different types of sea ice generate distinct echo signals under differently polarized electromagnetic waves because of their diverse physical and chemical properties. PolSAR obtains different polarization information through its multiple polarization modes, and these differences are reflected in the polarization characteristics obtained via polarization decomposition. From the quad-polarization SAR data used in our research, polarization decomposition yields the scattering intensity of sea ice under different scattering mechanisms, which provides a basis for sea ice classification. Besides polarization decomposition, we also applied joint time-frequency analysis (JTFA) to the SAR signals and imagery. JTFA can be used to analyze and describe the radar target signature, which can then serve as a feature for sea ice classification. We used the 2-D spectrogram obtained by reducing the dimensionality of, and reconstructing, the 4-D spectrogram as a new feature in our study. Furthermore, CNNs are crucial for obtaining the spatial features of sea ice SAR images. By using multiple convolutional layers, a CNN can identify the texture and shape features of sea ice, learn hierarchical representations of the image, and extract the features that matter for classification.
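To make the idea of a 4-D spectrogram concrete, the sketch below applies a sliding 2-D windowed Fourier transform to a complex-valued patch, which gives one local 2-D power spectrum per spatial position. It is only an illustration under our own assumptions: the function names, the Hann window, the window size, and the step are hypothetical, and the mosaic used to obtain a 2-D image is one possible reduction, not necessarily the one used in this study.

```python
import numpy as np

def spectrogram_4d(slc, win=8, step=4):
    """Sliding 2-D windowed FFT of a complex patch.
    Output shape: (n_rows, n_cols, win, win), i.e. position x 2-D frequency."""
    slc = np.asarray(slc, dtype=complex)
    window = np.outer(np.hanning(win), np.hanning(win))   # 2-D Hann taper
    rows = range(0, slc.shape[0] - win + 1, step)
    cols = range(0, slc.shape[1] - win + 1, step)
    out = np.empty((len(rows), len(cols), win, win))
    for i, r in enumerate(rows):
        for j, c in enumerate(cols):
            tile = slc[r:r + win, c:c + win] * window
            # local power spectrum, zero frequency shifted to the centre
            out[i, j] = np.abs(np.fft.fftshift(np.fft.fft2(tile))) ** 2
    return out

def flatten_to_2d(spec4d):
    """Hypothetical reduction: tile the local spectra into one 2-D mosaic."""
    n_r, n_c, w, _ = spec4d.shape
    return spec4d.transpose(0, 2, 1, 3).reshape(n_r * w, n_c * w)
```

For a 16×16 patch with `win=8` and `step=4`, the result is a 3×3 grid of 8×8 spectra, which the mosaic arranges into a 24×24 image suitable as CNN input.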

5.1. Influencing Factors

Although the experimental results show that the multi-feature method proposed in this paper achieves better classification results, some issues are still worth discussing. The backscatter signal from sea ice in SAR images is known to be influenced by the season and the angle of incidence. Sea ice backscatter varies seasonally, and the variation differs between bands (C-, L-, and Ku-band), polarizations, and sea ice types [67]. Given the complexity of multiple polarizations and sea ice types, it was challenging to account accurately for seasonally varying backscatter. Rather than modelling this variation, we set it aside and selected images from specific times using specific classification criteria. However, we must acknowledge that our data do not fully cover sea ice features at typical times of the year. This is especially the case in winter, when sea ice is usually more stable and shows relatively uniform reflection intensity and texture. With limited data, we did not select enough sea ice features for training within these time periods. This aspect needs to be complemented by subsequent work.

In addition to the season, ice classification is also affected by the variation of backscatter with the incidence angle. This variation is complex, and it becomes less significant when the incidence angle changes by only a small amount. In this study, the incidence angle is mostly concentrated in the range of 22–27°, with only a small variation within a single image. Therefore, the variation in backscatter is minimal. Because the spectral, polarization, and spatial characteristics are the main features considered in our study, and the effect of backscatter variation is limited by comparison, the effects of season and incidence angle are not taken into account in the classification process [68].

5.2. Algorithms

In the choice of algorithms, we used a CNN for the classification. Our approach differs from previous studies as we opted not to use deeper or more complex networks for training. The selection of a simple network structure for classification was due to limitations imposed by the limited SAR data available. However, it enabled us to better demonstrate the improvements in the classification effect of the proposed multi-featured method in this paper.

Several details regarding the classification experiment require clarification. Firstly, the selection of the sample size has a crucial impact on the classification results. To determine the suitable sample sizes for the two networks, we referred to previous studies and selected three sample sizes, namely 24, 36, and 48, for comparative experimental analysis. By analyzing the loss curves and actual classification results, we determined that the relatively suitable sample sizes were 36 and 12 for the two networks, respectively. Although these sample sizes achieved a better classification effect, there is still room for improvement in the interval of size selection. Further testing of more sizes may yield optimal results. However, given that the focus of this article is not on the size of the samples, we provide only a brief discussion of this aspect.

The algorithm in this article is not complicated: its core idea is to learn two features with a CNN and combine them to improve the classification accuracy. Compared to many current studies that use deep learning CNN methods for sea ice classification, we use only a very basic VGG structure. Most current research focuses on more sophisticated network structures and methods to improve classification accuracy, while the data are not mined or processed in depth; instead, the network itself is relied on to extract the information needed for classification. Our approach, on the other hand, extracts and combines information derived from the physical mechanisms behind the data, so a simple network with less computation can achieve higher classification accuracy. The CNN extracts the spatial texture features of sea ice well, and these are supplemented with polarization and spectral features that capture the physical mechanism of sea ice, yielding high-accuracy sea ice classification at a low computational cost.

5.3. Results Analysis

In terms of the classification accuracy analysis, we employed the widely used confusion matrix. It should be noted that the calculation of the confusion matrix depends on the ground truth pixels, so we took great care in selecting the region of interest (ROI) and only included regions where the classes could be clearly identified for the calculation. However, this may have led to higher accuracy in the final classification since many regions with complex sea ice types were not included in the calculation. Therefore, we calculated the range of classification errors based on the sampling error and presented it in the form of error bars in Figure 17 and Figure 18.
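For reference, the accuracy measures derived from a confusion matrix (producer accuracy, user accuracy, overall accuracy, and the kappa coefficient) can be computed as in the following minimal sketch. The function name and the convention that rows are reference classes and columns are predicted classes are assumptions made for this illustration.

```python
import numpy as np

def accuracy_metrics(cm):
    """cm[i, j] = number of pixels of reference class i predicted as class j."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    diag = np.diag(cm)
    producer = diag / cm.sum(axis=1)   # per reference class (1 - omission error)
    user = diag / cm.sum(axis=0)       # per predicted class (1 - commission error)
    overall = diag.sum() / n
    # chance agreement expected from the row and column totals
    expected = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n ** 2
    kappa = (overall - expected) / (1.0 - expected)
    return producer, user, overall, kappa
```

For the toy matrix `[[8, 2], [1, 9]]`, the overall accuracy is 17/20 = 0.85, the chance agreement is 0.5, and kappa is therefore 0.7.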

It is worth noting that the JTFA method may confuse NI and OW in areas that contain both, misclassifying NI as OW or vice versa. This issue is demonstrated in Figure 16 for S3 and S4, as well as in Table 6 for V3 and V4, which provide the classification accuracy of the JTFA method. We attribute this confusion to the similarity of the spectrogram characteristics of NI and OW. NI and OW are consecutive stages in the sea ice development process. NI is thin and highly transparent and has relatively weak textural characteristics, which can lead to misclassification. A multi-feature method that adds constraints from polarization features, including backscatter information, improves the situation.

The difference in accuracy between combined features and individual features is also a matter for discussion. Reasons for this phenomenon include the complementary nature of the features and their ability to capture different aspects of sea ice features. From the classification results, we can observe that the polarimetric decomposition approach can usually accurately distinguish between different types of sea ice, but the marginal regions of different types of sea ice can be blurred by the classification. The JTFA method, on the other hand, has a more prominent ability to portray sea ice contours and can clearly distinguish different types of sea ice, but misclassification occurs in the determination of sea ice categories. Both methods have certain defects leading to low classification accuracy numerically. Our proposed feature combination method using one-hot coding, however, filters out the poorly classified parts of the two methods by calculating the probability, highlights the advantages of each method, and thus improves the classification accuracy of the combined method.

Furthermore, the analysis of the accuracy of specific classes showed that the producer accuracy and user accuracy of the polarization decomposition method and the JTFA method often differed significantly, indicating a high commission or omission rate for each category. The multi-feature method improved this situation by fusing the advantages of the two methods to complement each other. The classification results obtained by the Pol. Decomp. method showed more accurate class judgment, while the JTFA method provided a better description of the morphological edge information of different classes of sea ice, especially in the classification of NI and FI, as shown in Figure 19. In addition, we also calculated the Intersection over Union (IoU) accuracy as a supplementary measure. IoU is a commonly used metric in computer vision tasks and is computed with the formula: IoU = (Intersection area) / (Union area). IoU values range from 0 to 1 and are useful for assessing the quality of object segmentation results. As shown in Table 7, the JTFA method sometimes has an advantage in segmenting NI and FI. The multi-featured method also combines these advantages to improve the accuracy of sea ice classification.
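The IoU formula above can be evaluated per class from a reference label map and a predicted label map, as in this minimal sketch. The function name and the integer class encoding are assumptions for illustration; classes absent from both maps are reported as NaN.

```python
import numpy as np

def iou_per_class(reference, predicted, n_classes):
    """Per-class IoU = |reference AND predicted| / |reference OR predicted|."""
    reference = np.asarray(reference)
    predicted = np.asarray(predicted)
    ious = np.full(n_classes, np.nan)
    for k in range(n_classes):
        ref_k = reference == k
        pred_k = predicted == k
        union = np.logical_or(ref_k, pred_k).sum()
        if union > 0:                      # skip classes absent from both maps
            ious[k] = np.logical_and(ref_k, pred_k).sum() / union
    return ious
```

With reference `[[0, 0], [1, 1]]` and prediction `[[0, 1], [1, 1]]`, class 0 has an IoU of 1/2 and class 1 has an IoU of 2/3.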

5.4. Feature Combination

For the feature combination, we took a simple approach: we first obtain the results of the two methods and then generate the fused image by comparing the class probabilities. Although this approach has been effective, it sometimes makes errors in judgment. Therefore, in practice, we also drew on the comparison of the classification accuracy of the two methods: when the calculated probabilities differ only slightly, the category judged by the Pol. Decomp. method is preferred. It should be noted that the theoretical basis of this method is limited and that it is largely empirical, so there is still much room for improvement.
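The probability comparison described above could be sketched as follows. This is a simplified illustration, not the exact rule used in our experiments: the function name and the `margin` threshold that defines a "small" probability difference are hypothetical.

```python
import numpy as np

def fuse_predictions(prob_pol, prob_jtfa, margin=0.05):
    """Fuse two per-pixel class-probability maps of shape (H, W, n_classes).
    The Pol. Decomp. label is kept unless the JTFA branch is more confident
    by more than `margin` (a hypothetical threshold)."""
    prob_pol = np.asarray(prob_pol, dtype=float)
    prob_jtfa = np.asarray(prob_jtfa, dtype=float)
    label_pol = prob_pol.argmax(axis=-1)
    label_jtfa = prob_jtfa.argmax(axis=-1)
    conf_pol = prob_pol.max(axis=-1)
    conf_jtfa = prob_jtfa.max(axis=-1)
    use_jtfa = conf_jtfa > conf_pol + margin   # near-ties fall back to Pol. Decomp.
    return np.where(use_jtfa, label_jtfa, label_pol)
```

Treating near-ties in favor of one branch encodes the empirical observation that the Pol. Decomp. method judges the class more reliably, while the JTFA result is used only where it is clearly more confident.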

In general, our study focused on multiple features and, therefore, did not fully consider other factors affecting sea ice classification, such as seasonal variations. In subsequent studies, we plan to address these limitations and incorporate these factors to enhance our classification accuracy. In addition, for the data and network, the spatial and temporal resolution of the SAR data, the waveband, the network model, and its parameters will also be of major concern, and more new quad-polarization SAR data need to be considered, such as GF-3 and RADARSAT-2.

6. Conclusions

In this study, we employed four polarizations (HH, HV, VH, and VV) of ALOS PALSAR SLC data for sea ice classification and proposed a multi-feature sea ice classification method. Our method exploits polarization features obtained through polarization decomposition and spectral features obtained through JTFA. The purpose of the multi-feature method is to obtain more useful information from the data. We combined sea ice backscatter features, spectral features, and the spatial features that are accessible to CNNs. For training, we designed a simple convolutional neural network, and we used a confusion matrix to evaluate the final accuracy of our classification of sea ice into five categories: NI, FI, OI, DI, and OW.

In addition, we designed several comparative experiments to comprehensively illustrate the experiment, including the comparison and selection of sample sizes, the comparison of the accuracy of different sea ice categories, and the comparative analysis of different accuracy judgment types for different methods. Regarding producer accuracy, the multi-feature method achieved high levels of accuracy, usually exceeding , for most categories and in most cases. This indicates that the multi-feature method is highly effective in accurately identifying different types of sea ice. Moreover, in terms of user accuracy, the multi-feature method also achieved over accuracy, indicating its ability to effectively identify specific categories of sea ice. In terms of overall accuracy, the combined multi-feature method demonstrated high levels of accuracy for the four scenes selected in this study, achieving , , , and accuracy, respectively. The kappa coefficients for these scenes reached 0.92, 0.85, 0.93, and 0.93, respectively, demonstrating a high level of consistency and further validating the accuracy and reliability of the integrated multi-feature method.

The experimental results show that combining multiple features can exploit the advantages of quad-polarization SAR data and significantly improve the accuracy of sea ice classification. We believe that this integrated multi-feature method could be useful in the future for more complex sea ice classification tasks. Furthermore, in areas where sufficient data are lacking, the multi-feature method can supply additional information.