Exploring Effective Detection and Spatial Pattern of Prickly Pear Cactus (Opuntia Genus) from Airborne Imagery before and after Prescribed Fires in the Edwards Plateau

Abstract

1. Introduction

2. Materials and Methods

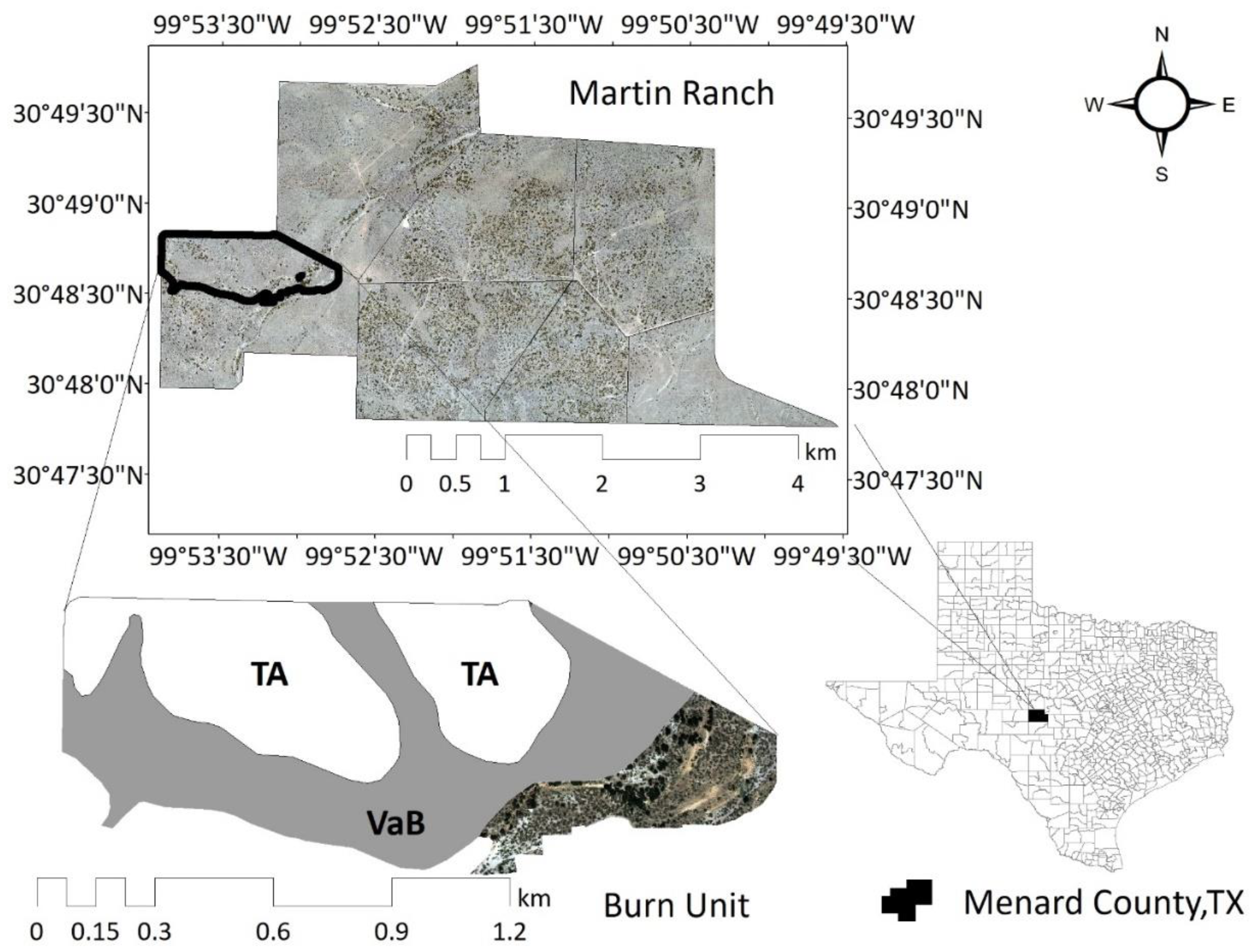

2.1. Study Area and Field Sampling

2.1.1. Post-Fire Testing Data

2.2. Remote Sensing Data Collection and Processing

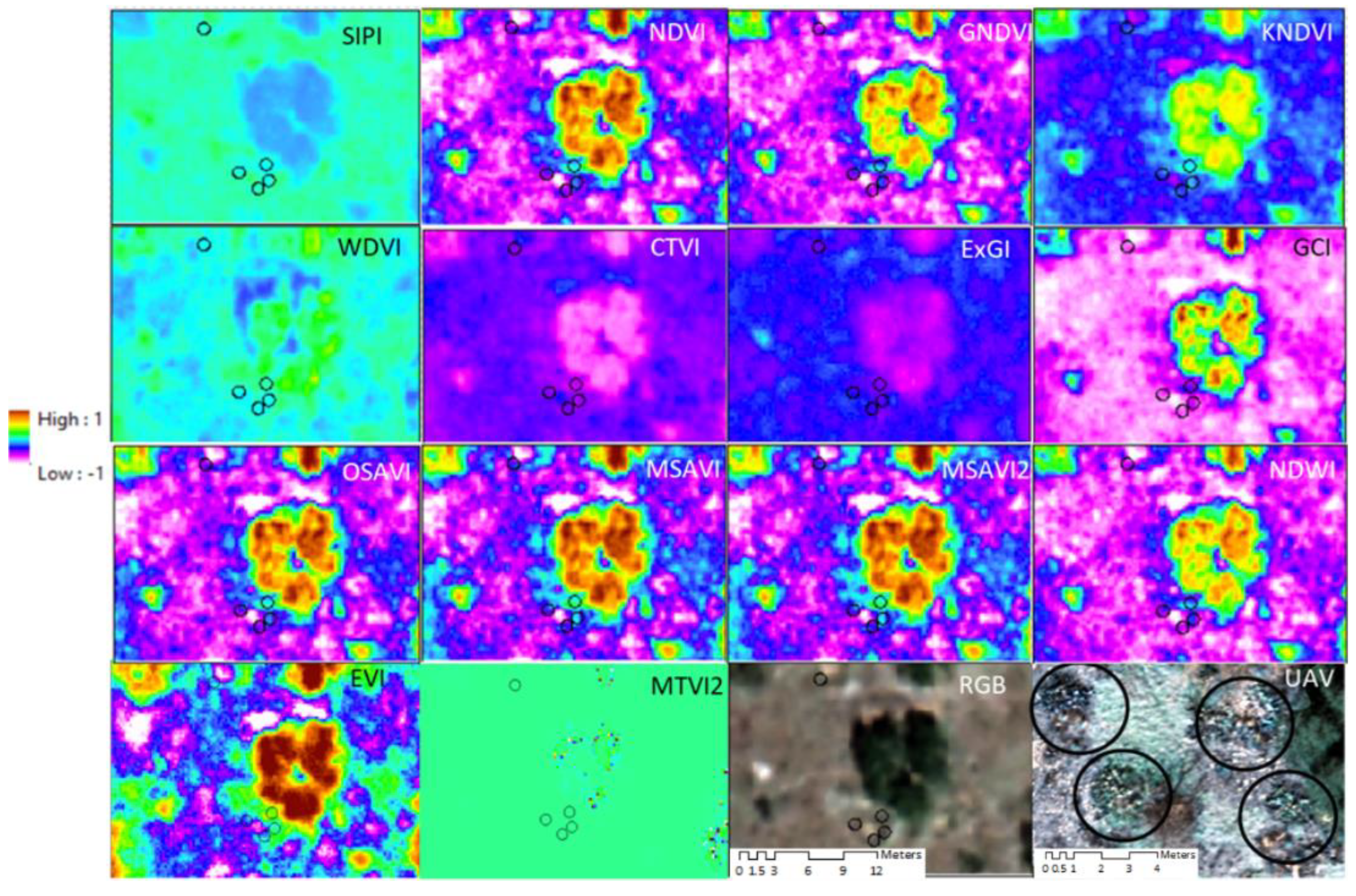

Spectral Profile Library

2.3. Classification Methods and Accuracy Assessment

- (1) Classification using object-oriented methods [23,24,25] (see Appendix A.2). We used the Feature Extraction-Example Based Workflow in ENVI 5.5 (NV5 Geospatial Solutions, Inc., Broomfield, CO, USA), which offers K Nearest Neighbor (KNN), Principal Components Analysis (PCA), and Support Vector Machine (SVM) [28,63] classifiers trained on user-chosen samples (e.g., regions of interest).

- (2) Classification using pixel extraction and regression models. In RStudio [64] (see Appendix A.2), we used the RandomForest Package (RF) [26,65,66]. RF is a robust ensemble learning classification algorithm that is resistant to overfitting. It is also effective at classifying large datasets, ranking variable importance, generating unbiased estimates of the Out of Bag (OOB) error (generalization error), and remaining robust against outliers and data noise [26,67].

- (3) Classification of spectral profiles. The Spectral Angle Mapper (SAM) [51,52,53] from the Spectral Hourglass Wizard in ENVI 5.5 (see Appendix A.2) was used to geometrically match pixels to a reference spectral library, resulting in a spectral classification based on spectral and physical similarities [53,54,68]. To classify spectral profiles with the Spectral Information Divergence (SID) algorithm [56,57], we used the ENVI 5.5 Endmember Collection tool (see Appendix A.2). In contrast to SAM, SID treats each pixel spectrum as a probability distribution and discriminates between spectral profiles with a stochastic divergence measure, assigning pixels according to a maximum divergence threshold [53,56].
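To make the difference between the two endmember-based measures concrete, the following is a minimal sketch of the standard SAM and SID definitions in plain Python. It is not the ENVI implementation; the function names, the two-entry spectral library, the band values, and the thresholds are illustrative assumptions rather than values from this study.

```python
import math


def spectral_angle(pixel, reference):
    """Spectral angle (radians) between two spectra treated as vectors."""
    dot = sum(p * r for p, r in zip(pixel, reference))
    norm_p = math.sqrt(sum(p * p for p in pixel))
    norm_r = math.sqrt(sum(r * r for r in reference))
    # Clamp to [-1, 1] to guard against floating-point overshoot before acos.
    cos_angle = max(-1.0, min(1.0, dot / (norm_p * norm_r)))
    return math.acos(cos_angle)


def spectral_information_divergence(pixel, reference, eps=1e-12):
    """Symmetric relative entropy between two spectra normalized to sum to 1."""
    sum_p = sum(pixel)
    sum_r = sum(reference)
    # eps keeps the logarithm defined when a band value is zero.
    p = [max(v / sum_p, eps) for v in pixel]
    q = [max(v / sum_r, eps) for v in reference]
    return sum(
        pi * math.log(pi / qi) + qi * math.log(qi / pi) for pi, qi in zip(p, q)
    )


def classify(pixel, library, measure, threshold):
    """Return the closest library label, or None if it exceeds the threshold."""
    scores = {label: measure(pixel, ref) for label, ref in library.items()}
    best = min(scores, key=scores.get)
    return best if scores[best] <= threshold else None


# Hypothetical 4-band spectra (Red, Green, Blue, NIR); not measured values.
library = {
    "prickly_pear": [60, 90, 50, 180],
    "bare_soil": [150, 130, 110, 160],
}
bright_cactus_pixel = [66, 99, 55, 198]  # same spectral shape, 10% brighter
odd_pixel = [200, 10, 10, 10]            # resembles neither library spectrum

sam_label = classify(bright_cactus_pixel, library, spectral_angle, threshold=0.1)
sid_label = classify(
    bright_cactus_pixel, library, spectral_information_divergence, threshold=0.05
)
unmatched = classify(odd_pixel, library, spectral_angle, threshold=0.1)
```

Both measures are insensitive to a uniform brightness change, which is why the brighter pixel still matches the hypothetical prickly pear spectrum, while a pixel beyond the maximum angle or divergence threshold is left unclassified.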

Accuracy Assessment to Determine the Best Classification Method

2.4. Effect of Fire on the Amount and Spatial Pattern of Prickly Pear Cover—A Case Assessment

3. Results

3.1. Pre- and Post-Fire Classification Performance

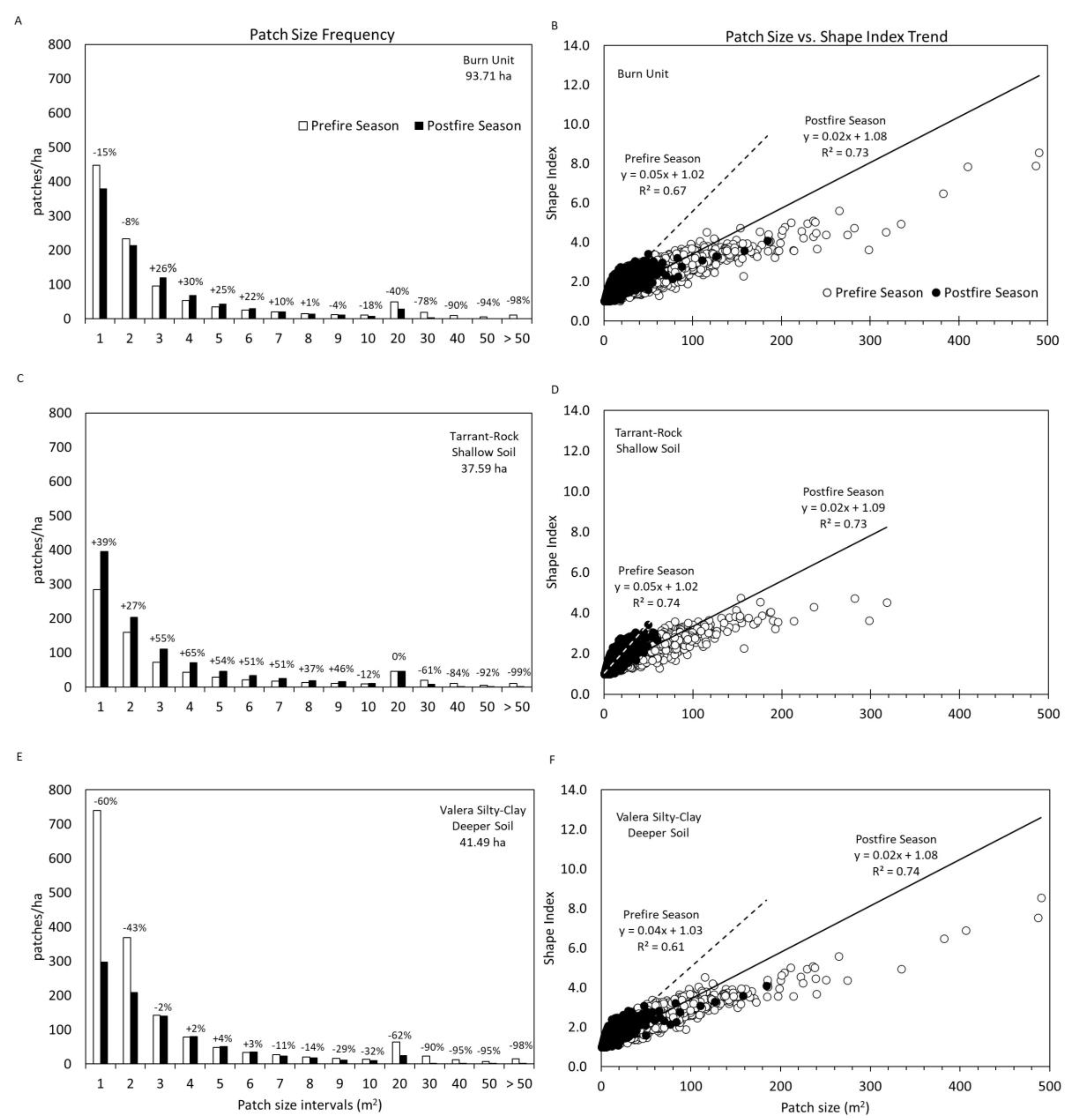

3.2. Effect of Fire on the Amount and Spatial Pattern of Prickly Pear Cover—A Case Assessment

4. Discussion

4.1. Best-Performing Classification Methods

4.2. Fire Effects on the Cover and Spatial Pattern of Prickly Pear—A Case Assessment

4.3. Limitations and Recommendations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Description of the USDA Agricultural Research Service’s Multispectral Imaging System and Image Processing

- Description of flight logistics, multispectral cameras, UAV equipment, and data processing.

| Band Name | Band No. | Spectral Range (nm) | Spatial Resolution (m) | Radiometric Resolution (bits) |
|---|---|---|---|---|
| Red | 1 | 604.7–698.0 | 0.21 | 8 |
| Green | 2 | 501.1–599.6 | 0.21 | 8 |
| Blue | 3 | 418.2–495.9 | 0.21 | 8 |
| NIR-1 * | 4 | 703.2–900.2 | 0.21 | 8 |
| NIR-2 | 5 | 703.2–900.2 | 0.21 | 8 |
| NIR-3 | 6 | 703.2–900.2 | 0.21 | 8 |

Appendix A.2. Description of the Land Cover Classification Methods Evaluated in Detecting Prickly Pear Cactus

1. Object-based Image Feature Extraction

2. Pixel Extraction and Random Forest Modeling

3. Endmember-based Classification

References

1. Hanselka, C.W.; Falconer, L.L. Prickly pear management in south Texas. Rangel. Arch. 1994, 16, 102–106.

2. Hart, C.R.; Lyons, R.K. Prickly Pear Biology and Management; Texas AgriLife Extension Service: College Station, TX, USA, 2010; pp. 1–8.

3. Bunting, S.C.; Wright, H.A.; Neuenschwander, L.F. Long-term effects of fire on cactus in the southern mixed prairie of Texas. Rangel. Ecol. Manag. Range Manag. Arch. 1980, 33, 85–88.

4. Ansley, R.J.; Castellano, M.J. Prickly pear cactus responses to summer and winter fires. Rangel. Ecol. Manag. 2007, 60, 244–252.

5. Archer, S.R.; Andersen, E.M.; Predick, K.I.; Schwinning, S.; Steidl, R.J.; Woods, S.R. Woody plant encroachment: Causes and consequences. In Rangeland Systems: Processes, Management and Challenges; Briske, D.D., Ed.; Springer International Publishing: Cham, Switzerland, 2017; pp. 25–84. ISBN 978-3-319-46709-2.

6. Wilcox, B.P.; Birt, A.; Fuhlendorf, S.D.; Archer, S.R. Emerging frameworks for understanding and mitigating woody plant encroachment in grassy biomes. Curr. Opin. Environ. Sustain. 2018, 32, 46–52.

7. Melgar, B.; Dias, M.I.; Ciric, A.; Sokovic, M.; Garcia-Castello, E.M.; Rodriguez-Lopez, A.D.; Barros, L.; Ferreira, I. By-product recovery of Opuntia spp. peels: Betalainic and phenolic profiles and bioactive properties. Ind. Crops Prod. 2017, 107, 353–359.

8. Cruz-Martins, N.; Roriz, C.; Morales, P.; Barros, L.; Ferreira, I. Food colorants: Challenges, opportunities and current desires of agro-industries to ensure consumer expectations and regulatory practices. Trends Food Sci. Technol. 2016, 52, 1–15.

9. Guevara, J.C.; Suassuna, P.; Felker, P. Opuntia forage production systems: Status and prospects for rangeland application. Rangel. Ecol. Manag. 2009, 62, 428–434.

10. Ueckert, D.N.; Livingston, C.W., Jr.; Huston, J.E.; Menzies, C.S.; Dusek, R.K.; Petersen, J.L.; Lawrence, B.K. Range and Sheep Management for Reducing Pearmouth and Other Pricklypear-related Health Problems in Sheep Flocks. TTS. Available online: https://sanangelo.tamu.edu/files/2011/11/1990_7.pdf#page=46 (accessed on 15 June 2023).

11. McMillan, Z.; Scott, C.; Taylor, C.; Huston, J. Nutritional value and intake of prickly pear by goats. J. Range Manag. 2002, 55, 139.

12. Felker, P.; Paterson, A.; Jenderek, M. Forage potential of Opuntia clones maintained by the USDA, National Plant Germplasm System (NPGS) collection. Crop Sci. 2006, 46, 2161–2168.

13. McGinty, A.; Smeins, F.E.; Merrill, L.B. Influence of spring burning on cattle diets and performance on the Edwards Plateau. J. Range Manag. 1983, 36, 175.

14. Ansley, R.J.; Taylor, C. The future of fire as a tool for managing brush. In Brush Management—Past, Present, Future; Hamilton, W.T., McGunty, A., Ueckert, D.N., Hanselka, C.W., Lee, M.R., Eds.; Texas A&M University Press: College Station, TX, USA, 2004; pp. 200–210. ISBN 978-1-58544-355-0.

15. Vermeire, L.T.; Roth, A.D. Plains Prickly pear response to fire: Effects of fuel load, heat, fire weather, and donor site soil. Rangel. Ecol. Manag. 2011, 64, 404–413.

16. Scifres, C.J.; Hamilton, W.T. Prescribed burning for brushland management: The south Texas example. Texas A&M University Press: College Station, TX, USA, 1993; ISBN 0890965390.

17. Sankey, J.B.; Munson, S.M.; Webb, R.H.; Wallace, C.S.A.; Duran, C.M. Remote sensing of Sonoran desert vegetation structure and phenology with ground-based LiDAR. Remote Sens. 2015, 7, 342–359.

18. Bazzichetto, M.; Malavasi, M.; Barták, V.; Acosta, A.T.R.; Moudrý, V.; Carranza, M.L. Modeling plant invasion on mediterranean coastal landscapes: An integrative approach using remotely sensed data. Landsc. Urban Plan. 2018, 171, 98–106.

19. Oddi, L.; Cremonese, E.; Ascari, L.; Filippa, G.; Galvagno, M.; Serafino, D.; Cella, U.M. Using UAV imagery to detect and map woody species encroachment in a subalpine grassland: Advantages and limits. Remote Sens. 2021, 13, 1239.

20. Mirik, M.S.A.A.; Ansley, R.J. Comparison of ground-measured and image-classified mesquite (Prosopis glandulosa) canopy cover. Rangel. Ecol. Manag. 2012, 65, 85–95.

21. Mirik, M.; Ansley, R.J.; Steddom, K.; Jones, D.C.; Rush, C.M.; Michels, G.J.; Elliott, N.C. Remote distinction of a noxious weed (Musk Thistle: Carduus Nutans) using airborne hyperspectral imagery and the Support Vector Machine classifier. Remote Sens. 2013, 5, 612–630.

22. Olariu, H.G.; Malambo, L.; Popescu, S.C.; Virgil, C.; Wilcox, B.P. Woody plant encroachment: Evaluating methodologies for semiarid woody species classification from drone images. Remote Sens. 2022, 14, 1665.

23. Hay, G.; Castilla, G. Object-based image analysis: Strengths, Weaknesses, Opportunities and Threats (SWOT). In The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Science (ISPRS Archives); Salzburg University: Salzburg, Austria, 2006; Volume XXXVI-4/C42. Available online: https://www.isprs.org/proceedings/XXXVI/4-C42/Papers/OBIA2006_Hay_Castilla.pdf (accessed on 10 May 2022).

24. Weih, R.; Riggan, N. Object-based classification vs. Pixel-based classification: Comparative importance of multi-resolution imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.-ISPRS Arch. 2010, 38, C7.

25. Laliberte, A.; Rango, A.; Havstad, K.; Paris, J.; Beck, R.; Mcneely, R.; Gonzalez, A. Object-oriented image analysis for mapping shrub encroachment from 1937 to 2003 in southern New Mexico. Remote Sens. Environ. 2004, 93, 198–210.

26. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

27. Amini, S.; Homayouni, S.; Safari, A.; Darvishsefat, A. Object-based classification of hyperspectral data using Random Forest algorithm. Geo-Spat. Inf. Sci. 2018, 21, 127–138.

28. Sheykhmousa, M.; Mahdianpari, M.; Ghanbari, H.; Mohammadimanesh, F.; Ghamisi, P.; Homayouni, S. Support Vector Machine versus Random Forest for remote sensing image classification: A Meta-analysis and systematic review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 6308–6325.

29. Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282.

30. Larrinaga, A.R.; Brotons, L. Greenness indices from a low-cost UAV imagery as tools for monitoring post-fire forest recovery. Drones 2019, 3, 6.

31. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

32. Huete, A.; Justice, C.; Liu, H. Development of vegetation and soil indices for MODIS-EOS. Remote Sens. Environ. 1994, 49, 224–234.

33. Zhao, F.; Xu, B.; Yang, X.; Jin, Y.; Li, J.; Xia, L.; Chen, S.; Ma, H. Remote sensing estimates of grassland aboveground biomass based on MODIS net primary productivity (NPP): A Case study in the Xilingol grassland of northern China. Remote Sens. 2014, 6, 5386.

34. Li, Z.; Angerer, J.P.; Jaime, X.; Yang, C.; Wu, X.B. Estimating rangeland fine fuel biomass in western Texas using high-resolution aerial imagery and machine learning. Remote Sens. 2022, 14, 4360.

35. Gitelson, A.A.; Buschmann, C.; Lichtenthaler, H.K. Leaf chlorophyll fluorescence corrected for re-absorption by means of absorption and reflectance measurements. J. Plant Physiol. 1998, 152, 283–296.

36. Camps-Valls, G.; Campos-Taberner, M.; Moreno, A.; Walther, S.; Duveiller, G.; Cescatti, A.; Mahecha, M.; Muñoz, J.; García-Haro, F.; Guanter, L.; et al. A unified vegetation index for quantifying the terrestrial biosphere. Sci. Adv. 2021, 7, eabc7447.

37. Perry, C.R.; Lautenschlager, L.F. Functional equivalence of spectral vegetation indices. Remote Sens. Environ. 1984, 14, 169–182.

38. Silleos, N.G.; Alexandridis, T.K.; Gitas, I.Z.; Perakis, K. Vegetation Indices: Advances Made in Biomass Estimation and Vegetation Monitoring in the Last 30 Years. Geocarto Int. 2006, 21, 21–28.

39. Eitel, J.U.H.; Long, D.S.; Gessler, P.E.; Smith, A.M.S. Using in-situ measurements to evaluate the new RapidEyeTM satellite series for prediction of wheat nitrogen status. Int. J. Remote Sens. 2007, 28, 4183–4190.

40. Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

41. Richardson, A.J.; Wiegand, C.L. Distinguishing vegetation from soil background information. Photogramm. Eng. Remote Sens. 1977, 43, 1541–1552.

42. Clevers, J.G.P.W. Application of the WDVI in estimating LAI at the generative stage of barley. ISPRS J. Photogramm. Remote Sens. 1991, 46, 37–47.

43. Gao, B. NDWI—A normalized difference water index for remote sensing of vegetation liquid water from space. Remote Sens. Environ. 1996, 58, 257–266.

44. Jackson, R.; Huete, A. Interpreting vegetation indices. Prev. Vet. Med. 1991, 11, 185–200.

45. Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

46. Munkhdulam, O.; Atzberger, C.; Chambers, J.; Amarsaikhan, D. Mapping pasture biomass in Mongolia using partial least squares, Random Forest regression and Landsat 8 imagery. Int. J. Remote Sens. 2018, 40, 3204–3226.

47. Fern, R.; Foxley, E.; Montalvo, A.; Morrison, M. Suitability of NDVI and OSAVI as estimators of green biomass and coverage in a semi-arid rangeland. Ecol. Indic. 2018, 94, 16–21.

48. Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.

49. Liang, T.; Yang, S.; Feng, Q.; Liu, B.; Zhang, R.; Huang, X.; Xie, H. Multi-factor modeling of above-ground biomass in alpine grassland: A case study in the Three-River Headwaters region, China. Remote Sens. Environ. 2016, 186, 164–172.

50. Fusaro, L.; Salvatori, E.; Mereu, S.; Manes, F. Photosynthetic traits as indicators for phenotyping urban and peri-urban forests: A case study in the metropolitan city of Rome. Ecol. Indic. 2019, 103, 301–311.

51. Kruse, F.A.; Lefkoff, A.B.; Boardman, J.W.; Heidebrecht, K.B.; Shapiro, A.T.; Barloon, P.J.; Goetz, A.F.H. The spectral image processing system (SIPS)—Interactive visualization and analysis of imaging spectrometer data. Remote Sens. Environ. 1993, 44, 145–163.

52. Odden, B.; Kneubuehler, M.; Itten, K. Comparison of a hyperspectral classification method implemented in two remote sensing software packages. In Proceedings of the 6th Workshop on Imaging Spectroscopy, Tel Aviv, Israel, 19 March 2009; European Association of Remote Sensing Laboratories. pp. 1–8.

53. Durgasi, A.; Guha, A. Potential utility of Spectral Angle Mapper and Spectral Information Divergence methods for mapping lower Vindhyan rocks and their accuracy assessment with respect to conventional lithological map in Jharkhand, India. J. Indian Soc. Remote Sens. 2018, 46, 737–747.

54. Klinken, R.V.; Shepherd, D.; Parr, R.; Robinson, T.; Anderson, L. Mapping mesquite (Prosopis) distribution and density using visual aerial surveys. Rangel. Ecol. Manag. 2007, 60, 408–416.

55. Chignell, S.M.; Luizza, M.W.; Skach, S.; Young, N.E.; Evangelista, P.H. An integrative modeling approach to mapping wetlands and riparian areas in a heterogeneous Rocky Mountain watershed. Remote Sens. Ecol. Conserv. 2018, 4, 150–165.

56. Chang, C.-I. Spectral Information Divergence for Hyperspectral Image Analysis. In Proceedings of the IEEE 1999 International Geoscience and Remote Sensing Symposium. IGARSS’99, Hamburg, Germany, 28 June–2 July 1999; 1999; Volume 1, pp. 509–511.

57. Zhang, E.; Zhang, X.; Yang, S.; Wang, S. Improving hyperspectral image classification using Spectral Information Divergence. IEEE Geosci. Remote Sens. Lett. 2014, 11, 249–253.

58. Soil Survey Staff. Soil Survey Geographic (SSURGO) Database. Available online: https://sdmdataaccess.sc.egov.usda.gov (accessed on 21 October 2022).

59. Jin, Y.; Yang, X.; Qiu, J.; Li, J.; Gao, T.; Wu, Q.; Zhao, F.; Ma, H.; Yu, H.; Xu, B. Remote Sensing-based biomass estimation and its spatio-temporal variations in temperate grassland, northern China. Remote Sens. 2014, 6, 1496–1513.

60. Zhou, Y.; Zhang, L.; Xiao, J.; Chen, S.; Kato, T.; Zhou, G. A Comparison of satellite-derived vegetation indices for approximating gross primary productivity of grasslands. Rangel. Ecol. Manag. 2014, 67, 9–18.

61. Ren, H.; Zhou, G.; Zhang, X. Estimation of green aboveground biomass of desert steppe in Inner Mongolia based on red-edge reflectance curve area method. Biosyst. Eng. 2011, 109, 385–395.

62. Richards, J.A. Remote Sensing Digital Image Analysis; Springer: Berlin/Heidelberg, Germany, 2022; Volume 5.

63. Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.

64. R Development Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing. 2022. Available online: http://www.R-project.org (accessed on 10 May 2022).

65. Liaw, A.; Wiener, M. Classification and regression by RandomForest. Forest 2001, 23, 18–22.

66. Pelletier, J.D.; Broxton, P.D.; Hazenberg, P.; Zeng, X.; Troch, P.A.; Niu, G.-Y.; Williams, Z.; Brunke, M.A.; Gochis, D. A gridded global data set of soil, intact regolith, and sedimentary deposit thicknesses for regional and global land surface modeling. J. Adv. Model. Earth Syst. 2016, 8, 41–65.

67. Rodriguez-Galiano, V.F.; Ghimire, B.; Rogan, J.; Chica-Olmo, M.; Rigol-Sanchez, J.P. An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104.

68. Oldeland, J.; Dorigo, W.; Lieckfeld, L.; Lucieer, A.; Jürgens, N. Combining vegetation indices, constrained ordination and fuzzy classification for mapping semi-natural vegetation units from hyperspectral imagery. Remote Sens. Environ. 2010, 114, 1155–1166.

69. McGarigal, K.; Cushman, S.A.; Ene, E. FRAGSTATS v4: Spatial Pattern Analysis Program for Categorical Maps. Computer Software Program Produced by the Authors. 2023. Available online: https://www.fragstats.org (accessed on 5 February 2022).

70. McGarigal, K.; Tagil, S.; Cushman, S.A. Surface metrics: An alternative to patch metrics for the quantification of landscape structure. Landsc. Ecol. 2009, 24, 433–450.

71. Perotto-Baldivieso, H.L.; Wu, X.B.; Peterson, M.J.; Smeins, F.E.; Silvy, N.J.; Schwertner, T.W. Flooding-induced landscape changes along dendritic stream networks and implications for wildlife habitat. Landsc. Urban Plan. 2011, 99, 115–122.

72. Hesselbarth, M.; Sciaini, M.; With, K.; Wiegand, K.; Nowosad, J. Landscapemetrics: An open-source R tool to calculate landscape metrics. Ecography 2019, 42, 1648–1657.

73. Hijmans, R.J. raster: Geographic Data Analysis and Modeling. 2021. Cranr-Proj. Available online: https://CRAN.R-project.org/package=raster (accessed on 10 May 2022).

74. Hijmans, R.J. Terra: Spatial data analysis. 2021. Cranr-Proj. Available online: https://CRAN.R-project.org/package=terra (accessed on 10 May 2022).

75. Oksanen, J.; Simpson, G.; Blanchet, F.; Kindt, R.; Legendre, P.; Minchin, P.; O’hara, R.; Solymos, P.; Stevens, M.; Szoecs, E.; et al. Vegan: Community Ecology Package. R Package Version 2.6-4, Cranr-Proj. 2022. Available online: https://CRAN.R-project.org/package=vegan (accessed on 10 May 2022).

76. Marsaglia, G.; Tsang, W.W.; Wang, J. Evaluating Kolmogorov’s distribution. J. Stat. Softw. 2003, 8, 1.

77. Sekhon, J.S. Multivariate and propensity score matching software with automated balance optimization: The Matching package for R. J. Stat. Softw. 2011, 42, 1.

78. Huang, C.; Davis, L.S.; Townshend, J.R.G. An assessment of support vector machines for land cover classification. Int. J. Remote Sens. 2002, 23, 725–749.

79. Cutler, D.R.; Edwards, T.C., Jr.; Beard, K.H.; Cutler, A.; Hess, K.T.; Gibson, J.; Lawler, J.J. Random Forests for classification in ecology. Ecology 2007, 88, 2783–2792.

80. Poznanovic, A.; Falkowski, M.; Maclean, A.; Smith, A.; Evans, J. An accuracy assessment of tree detection algorithms in Juniper woodlands. Photogramm. Eng. Remote Sens. 2014, 80, 627–637.

81. Kopeć, D.; Sławik, Ł. How to effectively use long-term remotely sensed data to analyze the process of tree and shrub encroachment into open protected wetlands. Appl. Geogr. 2020, 125, 102345.

82. Cao, X.; Liu, Y.; Liu, Q.; Cui, X.; Chen, X.; Chen, J. Estimating the age and population structure of encroaching shrubs in arid/semiarid grasslands using high spatial resolution remote sensing imagery. Remote Sens. Environ. 2018, 216, 572–585.

83. Chang, C.-I. An information-theoretic approach to spectral variability, similarity, and discrimination for hyperspectral image analysis. IEEE Trans. Inf. Theory 2000, 46, 1927–1932.

84. Qin, J.; Burks, T.; Ritenour, M.; Bonn, W. Detection of citrus canker using hyperspectral reflectance imaging with spectral information divergence. J. Food Eng. 2009, 93, 183–191.

85. Lindgren, A.; Lu, Z.; Zhang, Q.; Hugelius, G. Reconstructing past global vegetation with Random Forest machine learning, sacrificing the dynamic response for robust results. J. Adv. Model. Earth Syst. 2021, 13, e2020MS002200.

86. Wang, C.; Shu, Q.; Wang, X.; Guo, B.; Liu, P.; Li, Q. A random forest classifier based on pixel comparison features for urban LiDAR data. ISPRS J. Photogramm. Remote Sens. 2018, 148, 75–86.

87. Schröer, G.; Trenkler, D. Exact and randomization distributions of Kolmogorov-Smirnov tests two or three samples. Comput. Stat. Data Anal. 1995, 20, 185–202.

88. Zhang, T.; Su, J.; Xu, Z.; Luo, Y.; Li, J. Sentinel-2 satellite imagery for urban land cover classification by optimized Random Forest classifier. Appl. Sci. 2021, 11, 543.

89. Lawrence, R.L.; Ripple, W.J. Comparisons among vegetation indices and bandwise regression in a highly disturbed, heterogeneous landscape: Mount St. Helens, Washington. Remote Sens. Environ. 1998, 64, 91–102.

90. Ullah, S.; Si, Y.; Schlerf, M.; Skidmore, A.; Shafique, M.; Iqbal, I.A. Estimation of grassland biomass and nitrogen using MERIS data. Int. J. Appl. Earth Obs. Geoinformation 2012, 19, 196–204.

91. Heirman, A.L.; Wright, H.A. Fire in medium fuels of west Texas. J. Range Manag. 1973, 26, 331–335.

92. Bradshaw, L.S.; Deeming, J.E.; Burgan, R.E.; Cohen, J.D. The 1978 National Fire-Danger Rating System: Technical documentation. General Technical Report INT-169. U.S. Department of Agriculture, Forest Service, Intermountain Forest and Range Experiment Station: Ogden, UT, USA, 1984; 44p.

93. Baena, S.; Moat, J.; Whaley, O.; Boyd, D.S. Identifying species from the air: UAVs and the very high resolution challenge for plant conservation. PLoS ONE 2017, 12, e0188714.

94. Meng, T.; Jing, X.; Yan, Z.; Pedrycz, W. A survey on machine learning for data fusion. Inf. Fusion 2020, 57, 115–129.

95. Yang, C. Remote Sensing Technologies for Crop Disease and Pest Detection. In Soil and Crop Sensing for Precision Crop Production; Li, M., Yang, C., Zhang, Q., Eds.; Agriculture Automation and Control; Springer International Publishing: Cham, Switzerland, 2022; pp. 159–184. ISBN 978-3-030-70432-2.

96. Song, X.; Yang, C.; Wu, M.; Zhao, C.; Yang, G.; Hoffmann, W.C.; Huang, W. Evaluation of Sentinel-2A satellite imagery for mapping cotton root rot. Remote Sens. 2017, 9, 906.

97. Li, X.; Yang, C.; Huang, W.; Tang, J.; Tian, Y.; Zhang, Q. Identification of cotton root rot by multifeature selection from Sentinel-2 images using random forest. Remote Sens. 2020, 12, 3504.

98. Yang, C.; Westbrook, J.K.; Suh, C.P.-C.; Martin, D.E.; Hoffmann, W.C.; Lan, Y.; Fritz, B.K.; Goolsby, J.A. An airborne multispectral imaging system based on two consumer-grade cameras for agricultural remote sensing. Remote Sens. 2014, 6, 5257–5278.

99. Du, Y.; Chang, C.-I.; Ren, H.; Chang, C.-C.; Jensen, J.O.; D’Amico, F.M. New hyperspectral discrimination measure for spectral characterization. Opt. Eng. 2004, 43, 1777–1786.

| Band Name | Band No. | Spectral Range (nm) | Spatial Resolution (m) | Radiometric Resolution (bits) |
|---|---|---|---|---|
| Red | 1 | 604.7–698.0 | 0.21 | 8 |
| Green | 2 | 501.1–599.6 | 0.21 | 8 |
| Blue | 3 | 418.2–495.9 | 0.21 | 8 |
| NIR | 4 | 703.2–900.2 | 0.21 | 8 |

| Abbrev. | Formula | Index Name † | Reference |
|---|---|---|---|
| ExGI | | Excess Green (I) | [30] |
| GCI | | Green Chlorophyll (I) | [29] |
| NDVI | | Normalized Difference (VI) | [31] |
| EVI | | Enhanced (VI) | [32] |
| GNDVI | | Green-Normalized Difference (VI) | [35] |
| KNDVI | | Kernel-Normalized Difference (VI) | [36] |
| CTVI | | Corrected Transformed (VI) | [37] |
| MTVI2 | | Modified-Transformed (VI) 2 | [40] |
| NDWI | | Normalized Difference Water (I) | [43] |
| SAVI | | Soil-Adjusted (VI) | [45] |
| OSAVI | | Optimized Soil-Adjusted (VI) | [47] |
| MSAVI | | Modified Soil-Adjusted (VI) | [48] |
| MSAVI2 | | Modified Soil-Adjusted (VI) 2 | [48] |
| SIPI | | Structure-Insensitive Pigment (I) | [50] |
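As a minimal sketch of how indices of this kind are derived from the four image bands, the following computes NDVI, GNDVI, GCI, and SAVI using their commonly published forms. The exact formulations, soil adjustment factor, and any scaling applied in this study may differ; the function names and the sample reflectance values are illustrative assumptions.

```python
def _safe_ratio(numerator, denominator):
    """Divide, returning NaN instead of raising when the denominator is zero."""
    return numerator / denominator if denominator != 0 else float("nan")


def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - R) / (NIR + R)."""
    return _safe_ratio(nir - red, nir + red)


def gndvi(nir, green):
    """Green-Normalized Difference Vegetation Index: (NIR - G) / (NIR + G)."""
    return _safe_ratio(nir - green, nir + green)


def gci(nir, green):
    """Green Chlorophyll Index: NIR / G - 1."""
    return _safe_ratio(nir, green) - 1.0


def savi(nir, red, soil_factor=0.5):
    """Soil-Adjusted Vegetation Index with the commonly used factor L = 0.5."""
    return (1.0 + soil_factor) * _safe_ratio(nir - red, nir + red + soil_factor)


def indices_for_pixel(red, green, blue, nir):
    """Indices for one pixel, taking bands in the order Red, Green, Blue, NIR.

    Blue is accepted for completeness but unused by these four indices.
    """
    return {
        "NDVI": ndvi(nir, red),
        "GNDVI": gndvi(nir, green),
        "GCI": gci(nir, green),
        "SAVI": savi(nir, red),
    }


# Hypothetical reflectance values (0 to 1) for a vegetated pixel.
example = indices_for_pixel(red=0.1, green=0.125, blue=0.05, nir=0.5)
```

SAVI's fixed offset assumes inputs scaled as reflectance between 0 and 1; applying it to raw 8-bit digital numbers would make the soil adjustment negligible.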

| Approach | Method | Timing | OA (%) | KC (%) | UA (%) | PA (%) |
|---|---|---|---|---|---|---|
| Object-based | Support Vector Machine (SVM) | Pre-fire | 48.9 | 23.0 | 91.3 | 49.0 |
| Object-based | Support Vector Machine (SVM) | Post-fire | 59.3 | 49.9 | 83.8 | 60.1 |
| Object-based | K Nearest Neighbor (KNN) | Pre-fire | 43.5 | 15.6 | 93.0 | 32.1 |
| Object-based | K Nearest Neighbor (KNN) | Post-fire | 67.1 | 63.4 | 78.7 | 70.9 |
| Pixel-based | RandomForest (RF) | Pre-fire | 91.2 | 82.4 | 88.0 | 94.0 |
| Pixel-based | RandomForest (RF) | Post-fire | 85.8 | 71.6 | 80.9 | 89.6 |
| Endmember-based | Spectral Angle Mapper (SAM) | Pre-fire | 96.6 | 93.2 | 95.4 | 97.7 |
| Endmember-based | Spectral Angle Mapper (SAM) | Post-fire | 91.2 | 86.9 | 86.4 | 91.6 |
| Endmember-based | Spectral Information Divergence (SID) | Pre-fire | 94.7 | 89.4 | 92.8 | 96.5 |
| Endmember-based | Spectral Information Divergence (SID) | Post-fire | 84.6 | 81.8 | 88.93 | 82.5 |
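For readers unfamiliar with the four accuracy measures reported above, the sketch below shows how overall accuracy, the kappa coefficient, user's accuracy, and producer's accuracy are conventionally derived from a confusion matrix. It assumes OA, KC, UA, and PA carry these standard meanings; the matrix counts are hypothetical and are not taken from this study, and the function name is illustrative.

```python
def accuracy_metrics(matrix):
    """Standard accuracy measures from a square confusion matrix.

    Rows are classified (map) labels and columns are reference labels,
    so matrix[i][j] counts samples mapped as class i whose reference is j.
    """
    n_classes = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(matrix[i][j] for i in range(n_classes)) for j in range(n_classes)]
    correct = sum(matrix[i][i] for i in range(n_classes))

    overall = correct / total
    # Agreement expected by chance, from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / (total * total)
    kappa = (overall - expected) / (1.0 - expected)
    # User's accuracy: correct / all samples mapped as that class.
    users = [matrix[i][i] / row_sums[i] for i in range(n_classes)]
    # Producer's accuracy: correct / all reference samples of that class.
    producers = [matrix[i][i] / col_sums[i] for i in range(n_classes)]
    return {"OA": overall, "KC": kappa, "UA": users, "PA": producers}


# Hypothetical two-class counts: index 0 = prickly pear, index 1 = other cover.
confusion = [
    [45, 5],   # mapped as prickly pear
    [10, 40],  # mapped as other cover
]
metrics = accuracy_metrics(confusion)
```

With these hypothetical counts, the prickly pear class has a higher user's accuracy than producer's accuracy, the same pattern the object-based methods show in the pre-fire rows of the table.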

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jaime, X.A.; Angerer, J.P.; Yang, C.; Walker, J.; Mata, J.; Tolleson, D.R.; Wu, X.B. Exploring Effective Detection and Spatial Pattern of Prickly Pear Cactus (Opuntia Genus) from Airborne Imagery before and after Prescribed Fires in the Edwards Plateau. Remote Sens. 2023, 15, 4033. https://doi.org/10.3390/rs15164033
