Vessel Detection with SDGSAT-1 Nighttime Light Images

Abstract

1. Introduction

2. Data

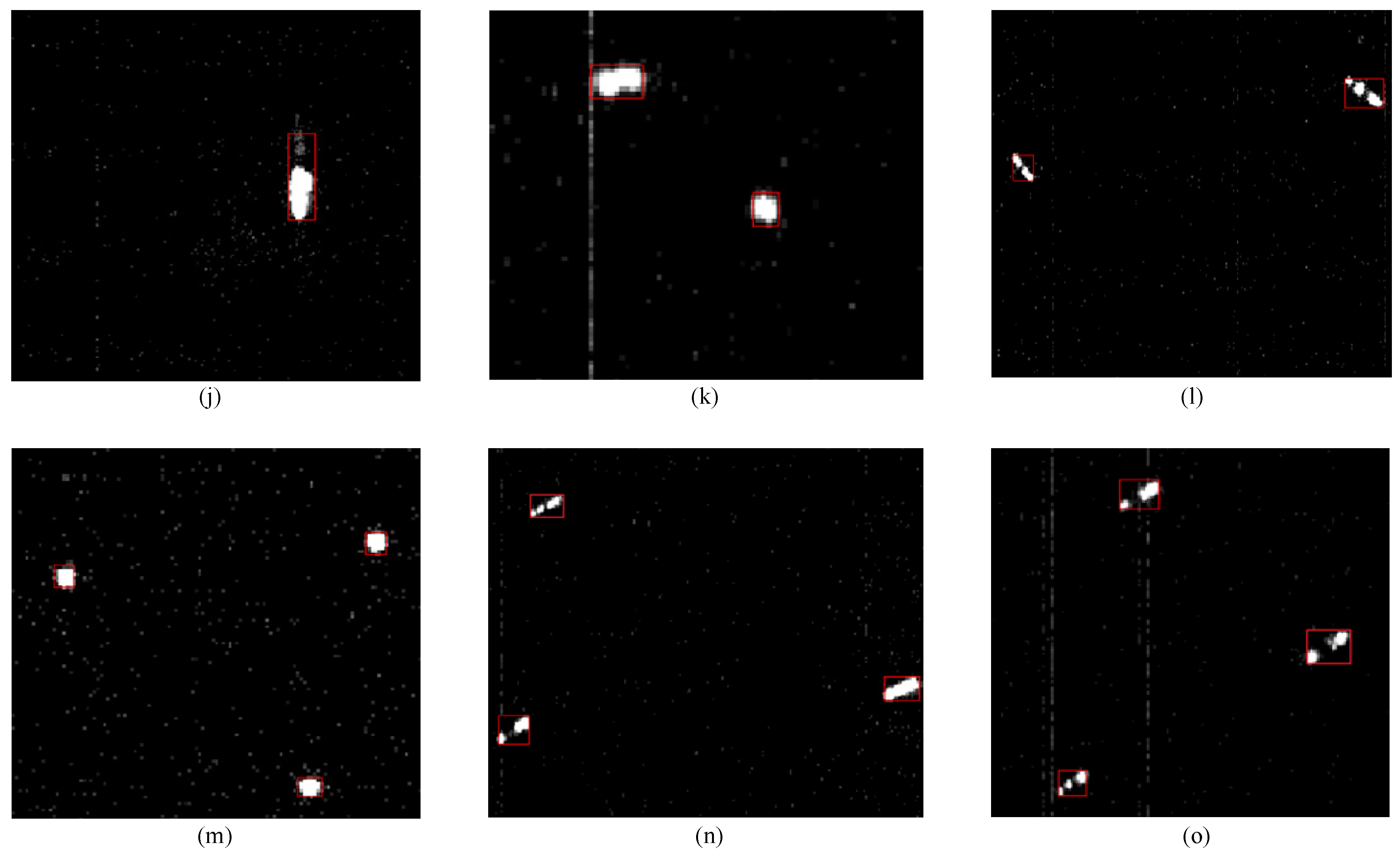

2.1. Overview of the SDGSAT-1 GIU Data

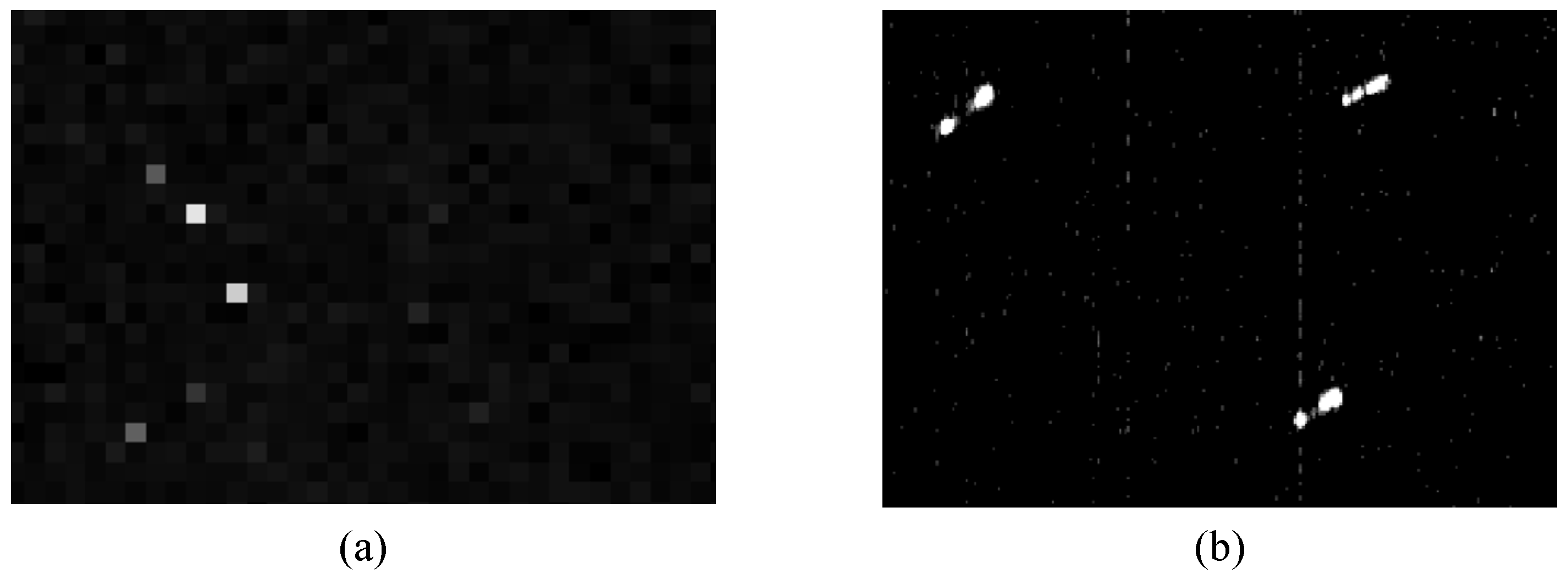

2.2. Characteristics of Vessels in GIU Data

- Vessels appear as local bright spots, some of them with an irregular shape;

- Compared with the vast, empty background, vessels are small and sparsely distributed, and they occupy only a small proportion of the overall image;

- Some vessels are unconnected targets that consist of several nearby but disconnected bright spots;

- The image noise is complex, but its brightness is generally lower than the peak brightness of the vessel targets.

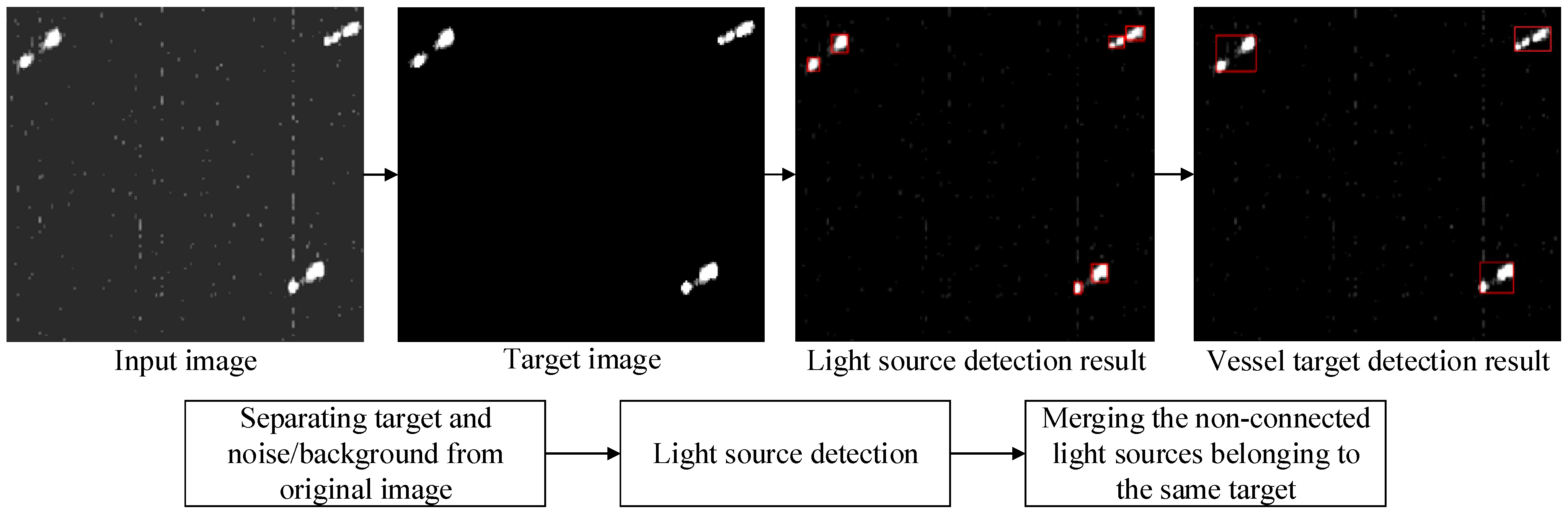

3. Method

3.1. Noise and Background Separation through Weighted RPCA

| Algorithm 1 Solution via Inexact ALM Method |
|---|
| Input: original image, weighting parameter |
| Initialize: … |
| While not converged do: |
| Update …; |
| Update …; |
| Update …; |
| Update …; |
| Check the convergence conditions; |
| Update k; |
| End while |
| Output: background image, target image |
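For orientation, the standard (unweighted) RPCA model and its inexact ALM iteration can be written as below. This is a sketch of the textbook formulation, not the weighted variant of Algorithm 1, whose symbols are not preserved in this copy. The names $D$ (original image matrix), $B$ (low-rank background), $T$ (sparse target), $Y$ (Lagrange multiplier), $\mu_k$ (penalty), $\lambda$ (sparsity weight) and $\rho > 1$ (penalty growth factor) are notational assumptions. $\mathcal{D}_{\tau}$ denotes singular value thresholding and $\mathcal{S}_{\tau}$ denotes element-wise soft thresholding, $\mathcal{S}_{\tau}(x) = \operatorname{sign}(x)\max(|x| - \tau, 0)$.

```latex
\begin{aligned}
&\min_{B,\,T}\ \|B\|_{*} + \lambda \|T\|_{1}
  \quad \text{s.t.} \quad D = B + T, \\
&B_{k+1} = \mathcal{D}_{1/\mu_k}\!\left(D - T_k + Y_k/\mu_k\right), \\
&T_{k+1} = \mathcal{S}_{\lambda/\mu_k}\!\left(D - B_{k+1} + Y_k/\mu_k\right), \\
&Y_{k+1} = Y_k + \mu_k \left(D - B_{k+1} - T_{k+1}\right), \\
&\mu_{k+1} = \rho\,\mu_k, \qquad k \leftarrow k + 1 .
\end{aligned}
```

A weighted version would typically replace the scalar threshold in the $T$ update with a per-pixel one; how Section 3.1 defines those weights cannot be recovered from the text here.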

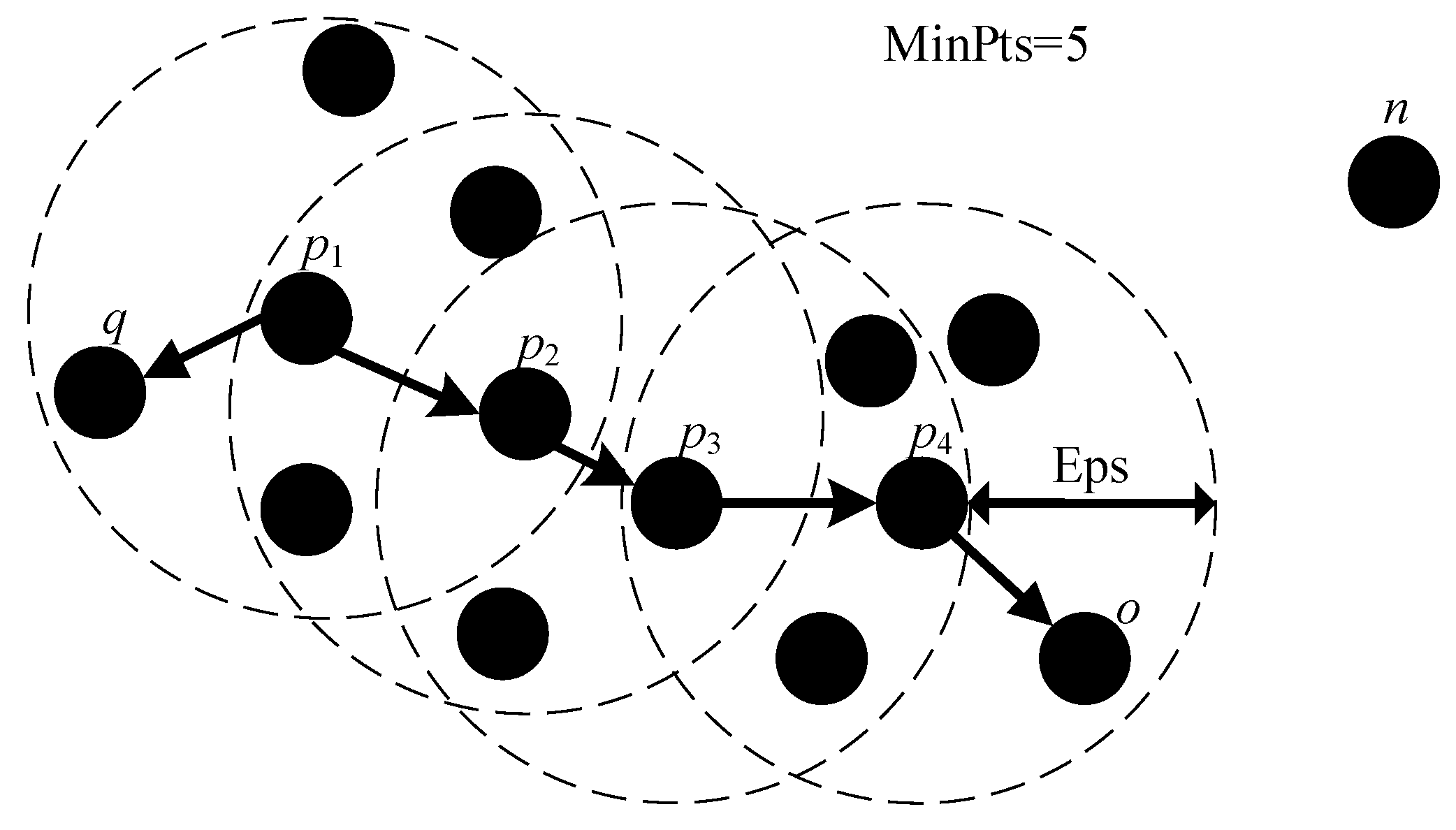

3.2. Light Source Detection Based on DBSCAN

| Algorithm 2 Light source detection via DBSCAN |
|---|
| Input: target image T |
| Initialize: MinPts = 5, Eps = 1, object set … |
| For each pixel a_i in image T: |
| If …, …; |
| End if |
| End for |
| While not all objects in … have been traversed: |
| Take an untraversed object p from …; |
| If p is a core object: |
| Initialize the target cluster set …; |
| Traverse … to find all objects that are density-reachable from p; |
| Traverse … to find all objects … that are directly density-reachable from any object in …; |
| …; …; k = k + 1; |
| End if |
| End while |
| Output: target cluster result |
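The clustering loop of Algorithm 2 can be sketched in plain Python as follows. This is a minimal illustration, not the authors' implementation. It assumes Euclidean distance in pixel units, that a pixel's neighborhood count includes the pixel itself, and that candidate objects are the pixels brighter than a `threshold` (a hypothetical selection rule, since the original condition is not preserved). The function name `dbscan_pixels` is likewise an assumption.

```python
from collections import deque


def dbscan_pixels(image, eps=1.0, min_pts=5, threshold=0.0):
    """Cluster bright pixels of a 2-D list `image` with a plain DBSCAN."""
    # Candidate objects: pixels brighter than `threshold` (assumed rule).
    points = [(r, c) for r, row in enumerate(image)
              for c, v in enumerate(row) if v > threshold]
    point_set = set(points)
    reach = int(eps)  # integer offset window that covers the eps-ball

    def neighbors(p):
        # All candidate pixels within distance eps of p, including p itself.
        r, c = p
        return [(r + dr, c + dc)
                for dr in range(-reach, reach + 1)
                for dc in range(-reach, reach + 1)
                if dr * dr + dc * dc <= eps * eps
                and (r + dr, c + dc) in point_set]

    labels = {}  # pixel -> cluster id; -1 marks noise
    cluster_id = 0
    for p in points:
        if p in labels:
            continue
        nbrs = neighbors(p)
        if len(nbrs) < min_pts:
            labels[p] = -1  # not a core object (may become a border pixel later)
            continue
        labels[p] = cluster_id
        queue = deque(nbrs)
        while queue:
            q = queue.popleft()
            if labels.get(q, -1) != -1:
                continue  # already assigned to a cluster
            labels[q] = cluster_id
            q_nbrs = neighbors(q)
            if len(q_nbrs) >= min_pts:
                queue.extend(q_nbrs)  # q is a core object: keep expanding
        cluster_id += 1

    clusters = [[] for _ in range(cluster_id)]
    for p, k in labels.items():
        if k >= 0:
            clusters[k].append(p)
    return clusters
```

Under these assumptions, Eps = 1 and MinPts = 5 make a pixel a core object only when all four of its edge-adjacent neighbors are also lit, so a 3 × 3 bright block yields one plus-shaped cluster of five pixels and its four corner pixels are treated as noise.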

3.3. Clusters Merging through Relative Interconnection Index

| Algorithm 3 Clusters merging through relative interconnection index |
|---|
| Input: target cluster result |
| Initialize: … |
| While …: |
| While …: |
| For …: |
| If …: …; |
| End if |
| End for |
| End while |
| …; |
| End while |
| Output: vessel detection result |
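As background for the merging criterion, and assuming the index follows the relative interconnectivity defined in the Chameleon clustering algorithm of Karypis et al., the quantity compared for two clusters $C_i$ and $C_j$ would be the one below. Here $EC_{\{C_i, C_j\}}$ is the total weight of the graph edges that connect the two clusters and $EC_{C_i}$ is the total weight of the edges cut by a minimum bisection of $C_i$. How Algorithm 3 builds the graph over light-source clusters and which merging threshold it applies are not recoverable from the text here.

```latex
RI(C_i, C_j) =
  \frac{\left| EC_{\{C_i, C_j\}} \right|}
       {\tfrac{1}{2}\left( \left| EC_{C_i} \right| + \left| EC_{C_j} \right| \right)}
```

Under this definition, two clusters with a large $RI$ are about as strongly linked to each other as each is internally, which is the intuition behind merging disconnected bright spots that belong to one vessel.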

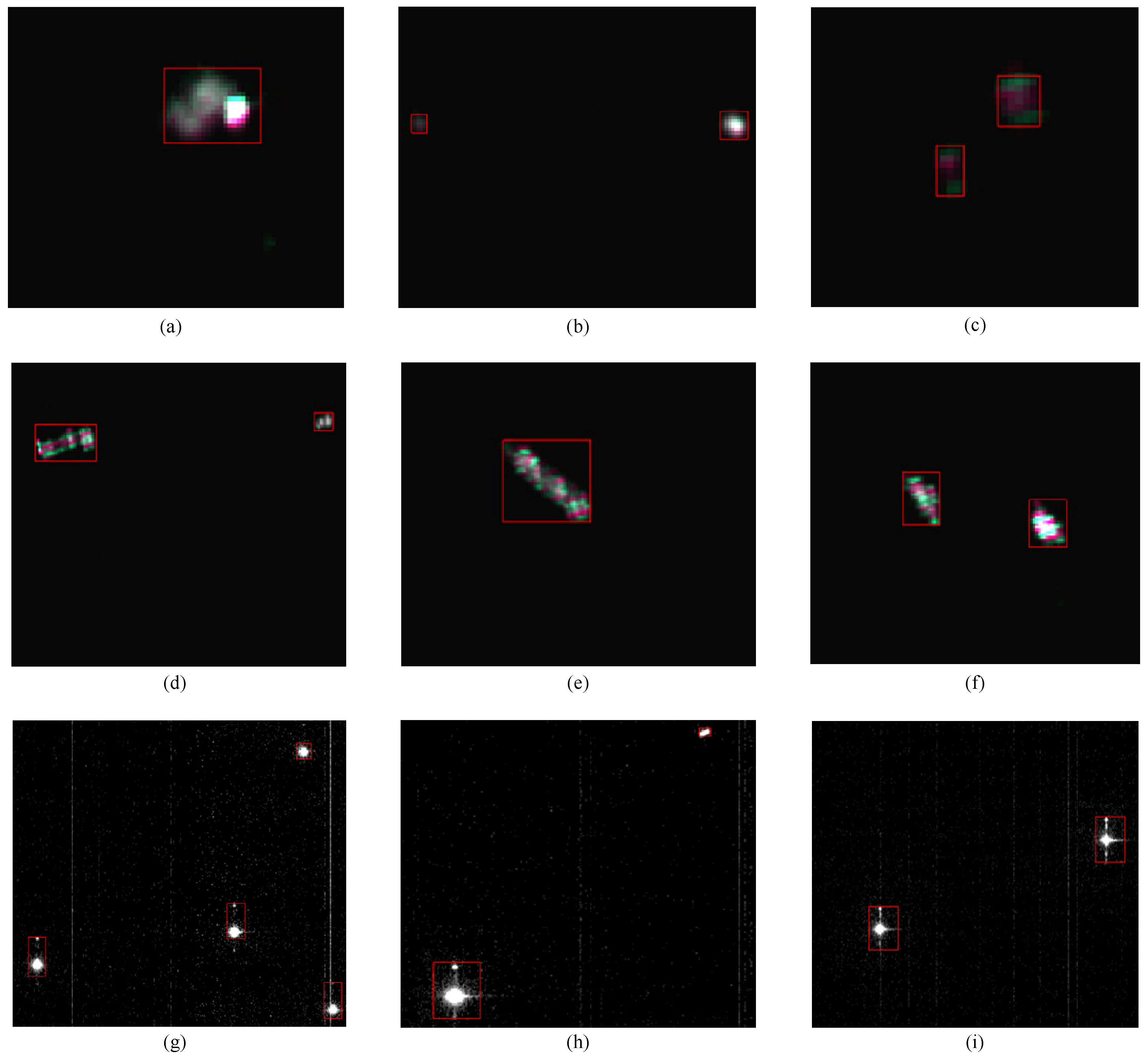

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Satellite and Imager | Index Item | Detail |
|---|---|---|
| SDGSAT-1 | Orbit type | Sun-synchronous orbit |
| SDGSAT-1 | Orbit height | 505 km |
| SDGSAT-1 | Orbit angle | 97.5° |
| SDGSAT-1 | Revisit cycle | 11 days |
| SDGSAT-1 | Band | 4 Glimmer Imager for Urbanization (GIU) bands, 7 Multispectral Imager for Inshore (MII) bands, 3 Thermal Infrared Spectrometer (TIS) bands |
| Glimmer Imager and GIU data | Imaging width | 300 km |
| Glimmer Imager and GIU data | Detection spectrum | P: 444~910 nm (PL/PH); B: 424~526 nm; G: 506~612 nm; R: 600~894 nm |
| Glimmer Imager and GIU data | Pixel resolution | PL/PH/HDR 10 m, RGB 40 m |
| Glimmer Imager and GIU data | SNR for urban trunk road light | ≥50 (P/RGB, 1.0 × 10⁻² W/m²/sr) |
| Glimmer Imager and GIU data | SNR for urban residential area | ≥10 (P/RGB, 1.6 × 10⁻³ W/m²/sr) |
| Glimmer Imager and GIU data | SNR for polar moonlight | ≥10 (P, 3.0 × 10⁻⁵ W/m²/sr) |
| Glimmer Imager and GIU data | Dynamic range of single scene | ≥60 dB |

| Area | Image | Time and Position | Target Number |
|---|---|---|---|
| Bohai Sea | Image 1 (RGB) | 2022-03-20T13:10:38; 36.78°N to 40.61°N, 117.33°E to 121.97°E | 314 |
| East China Sea | Image 2 (HDR) | 2022-08-20T12:59:43; 27.30°N to 30.27°N, 122.84°E to 126.02°E | 99 |
| Gulf of Mexico | Image 3 (HDR) | 2022-05-19T03:10:29; 25.33°N to 28.34°N, 86.71°W to 89.56°W | 157 (Images 3 and 4) |
| Gulf of Mexico | Image 4 (RGB) | 2022-05-19T03:11:12; 28.04°N to 31.04°N, 87.32°W to 90.55°W | (counted with Image 3) |

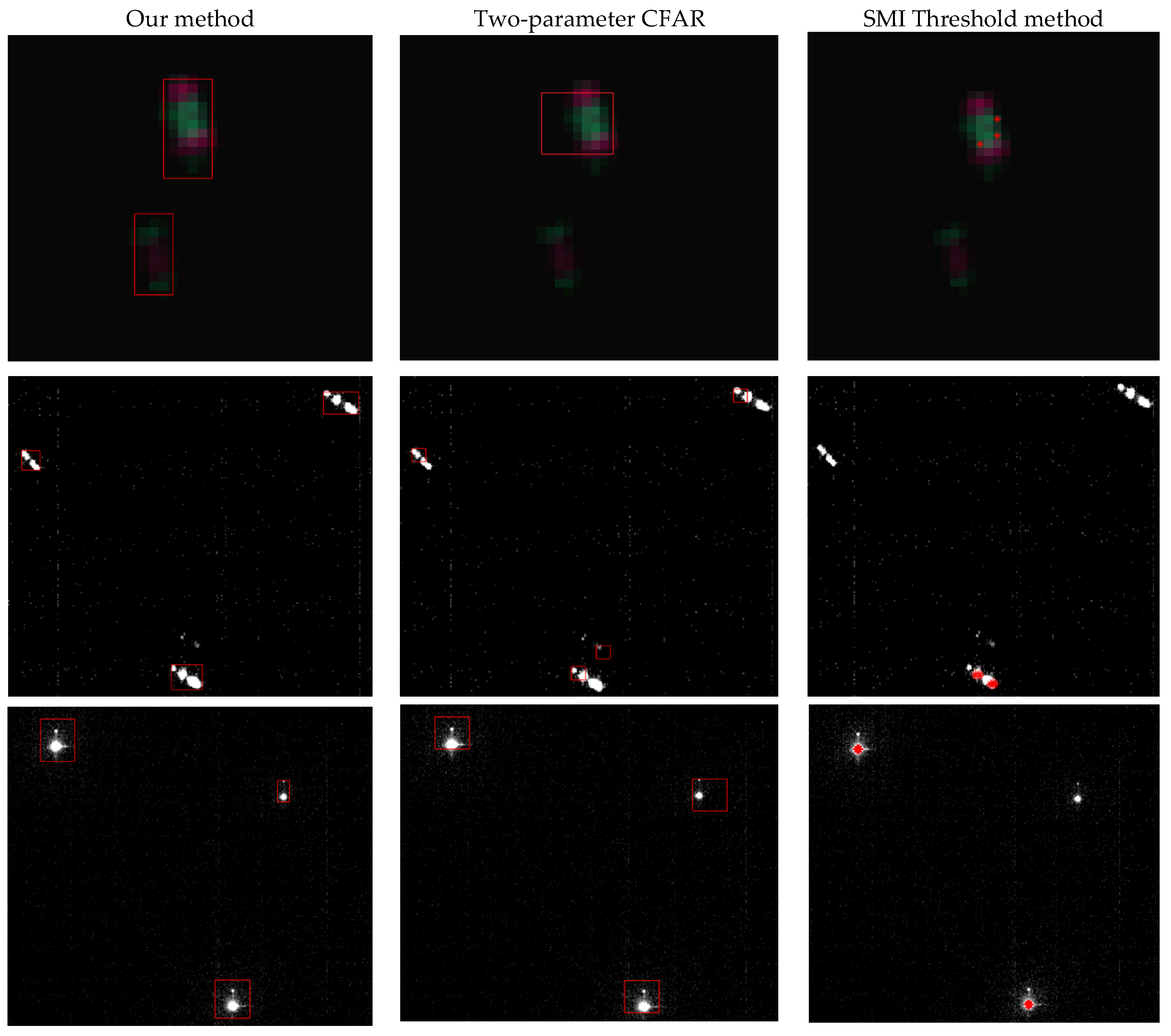

| Method | Study Area | Precision | Recall | F1 Score |
|---|---|---|---|---|
| Our method | Gulf of Mexico | 0.9623 | 0.9745 | 0.9684 |
| Our method | Bohai Sea | 0.9743 | 0.9650 | 0.9696 |
| Our method | East China Sea | 0.9596 | 0.9596 | 0.9596 |
| Our method | Total | 0.9684 | 0.9667 | 0.9675 |
| SMI Threshold method | Gulf of Mexico | 0.4421 | 0.6561 | 0.5282 |
| SMI Threshold method | Bohai Sea | 0.4134 | 0.6306 | 0.4994 |
| SMI Threshold method | East China Sea | 0.4776 | 0.6465 | 0.5494 |
| SMI Threshold method | Total | 0.4471 | 0.6825 | 0.5403 |
| Two-parameter CFAR | Gulf of Mexico | 0.7584 | 0.7197 | 0.7386 |
| Two-parameter CFAR | Bohai Sea | 0.7672 | 0.7452 | 0.7561 |
| Two-parameter CFAR | East China Sea | 0.7556 | 0.6869 | 0.7196 |
| Two-parameter CFAR | Total | 0.7629 | 0.7281 | 0.7451 |
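The three scores in the comparison table are related in the usual way: the F1 score is the harmonic mean of precision and recall. The short sketch below shows that relation. The function names and the true-positive, false-positive and false-negative counts in the usage note are hypothetical, since the table reports only the ratios.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def detection_scores(tp, fp, fn):
    """Precision, recall and F1 from detection counts.

    tp: detections that match a labeled vessel
    fp: detections with no matching vessel
    fn: labeled vessels that were missed
    """
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return precision, recall, f1_from(precision, recall)
```

For example, `f1_from(0.9623, 0.9745)` reproduces, to four decimal places, the 0.9684 reported for the proposed method in the Gulf of Mexico, and `detection_scores(8, 2, 2)` gives 0.8 for all three scores.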

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, Z.; Qiu, S.; Chen, F.; Chen, Y.; Qian, Y.; Cui, H.; Zhang, Y.; Khoramshahi, E.; Qiu, Y. Vessel Detection with SDGSAT-1 Nighttime Light Images. Remote Sens. 2023, 15, 4354. https://doi.org/10.3390/rs15174354
