Attention-Enhanced One-Shot Attack against Single Object Tracking for Unmanned Aerial Vehicle Remote Sensing Images

Abstract

1. Introduction

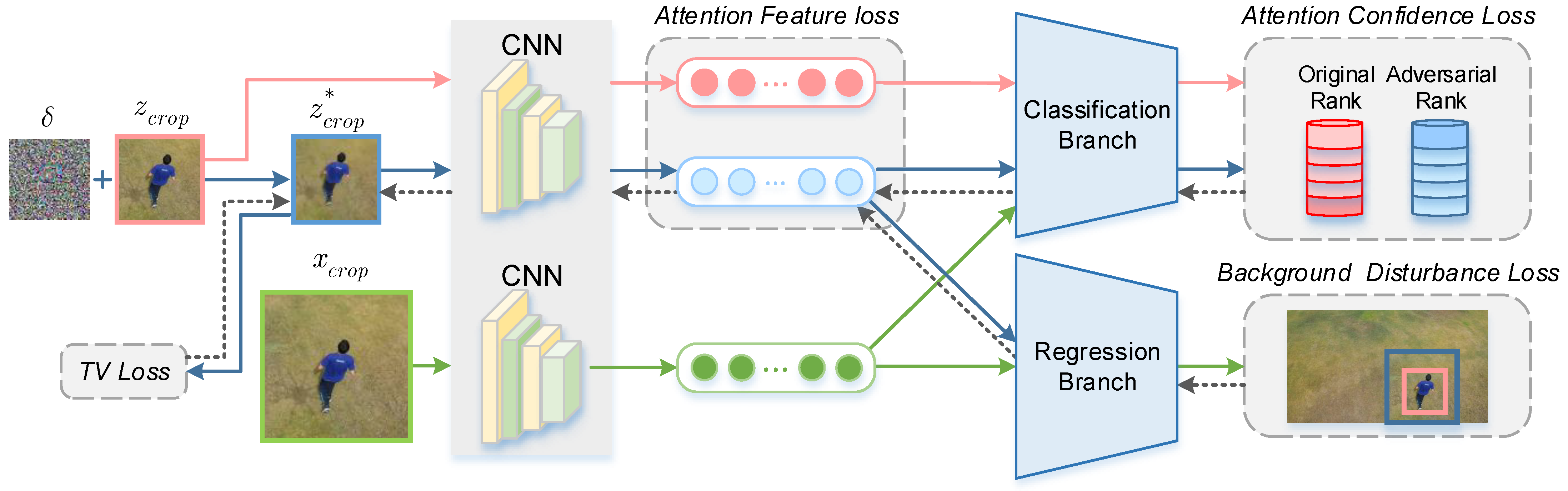

- We propose a novel one-shot adversarial attack method to explore the robustness of remote sensing object tracking models. Our attention-enhanced one-shot attack perturbs only the template frame of each video. It generates a unique perturbation for each video, which saves considerable time and makes the attack more practical in real-time settings.

- The effectiveness of the proposed attack method is verified on UAV remote sensing videos. The method optimizes an imperceptible perturbation with two loss functions: an attention feature loss, which forces the generated adversarial sample to be dissimilar to the raw image, and an attention confidence loss, which suppresses or stimulates the different confidence scores. Together, these losses fool the tracker into producing wrong results.

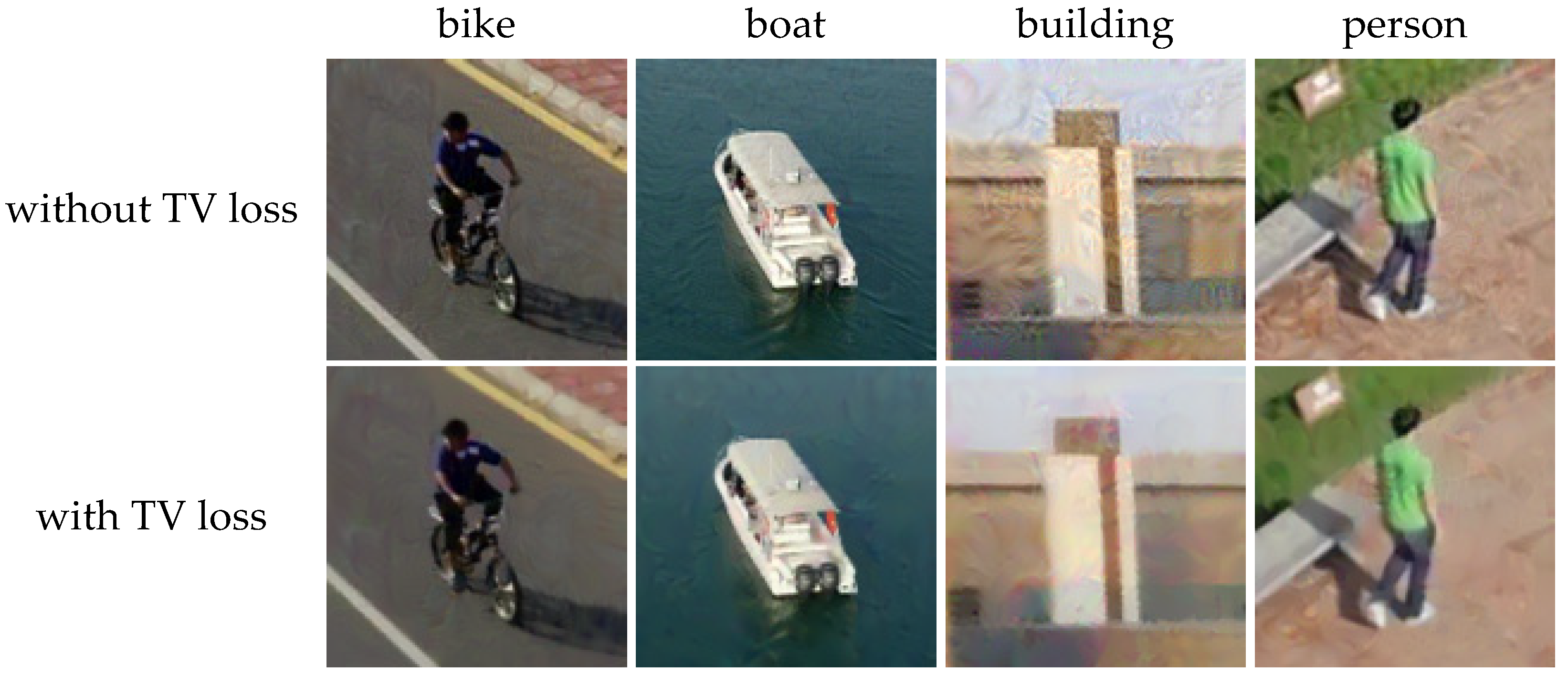

- In addition, because the high-altitude shooting of UAV remote sensing video produces targets of widely varying sizes, we also propose a background interference loss. It forces the tracker to take into account the background information around the target, so that the tracker is disturbed by redundant background features and fails to track the correct target. We also use a total variation (TV) loss to make the generated adversarial image look more natural to the human eye.
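As an illustration of the TV term mentioned in the last contribution, the following is a minimal sketch of a total variation penalty on a single-channel image stored as a nested list. The anisotropic (L1) form and the function name `total_variation` are assumptions made for this example; the exact formulation used in the method is not given in this section.

```python
from typing import List


def total_variation(img: List[List[float]]) -> float:
    """Anisotropic TV: sum of absolute differences between
    vertically and horizontally adjacent pixels (assumed form)."""
    height, width = len(img), len(img[0])
    tv = 0.0
    for i in range(height):
        for j in range(width):
            if i + 1 < height:  # vertical neighbour
                tv += abs(img[i + 1][j] - img[i][j])
            if j + 1 < width:  # horizontal neighbour
                tv += abs(img[i][j + 1] - img[i][j])
    return tv


# A flat patch has zero variation; a checkerboard patch is penalized.
flat = [[5.0, 5.0], [5.0, 5.0]]
checker = [[0.0, 1.0], [1.0, 0.0]]
tv_flat = total_variation(flat)
tv_checker = total_variation(checker)
```

Minimizing such a term alongside the attack losses discourages high-frequency noise in the perturbed template, which is why it tends to make the adversarial image look smoother.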

2. Related Work

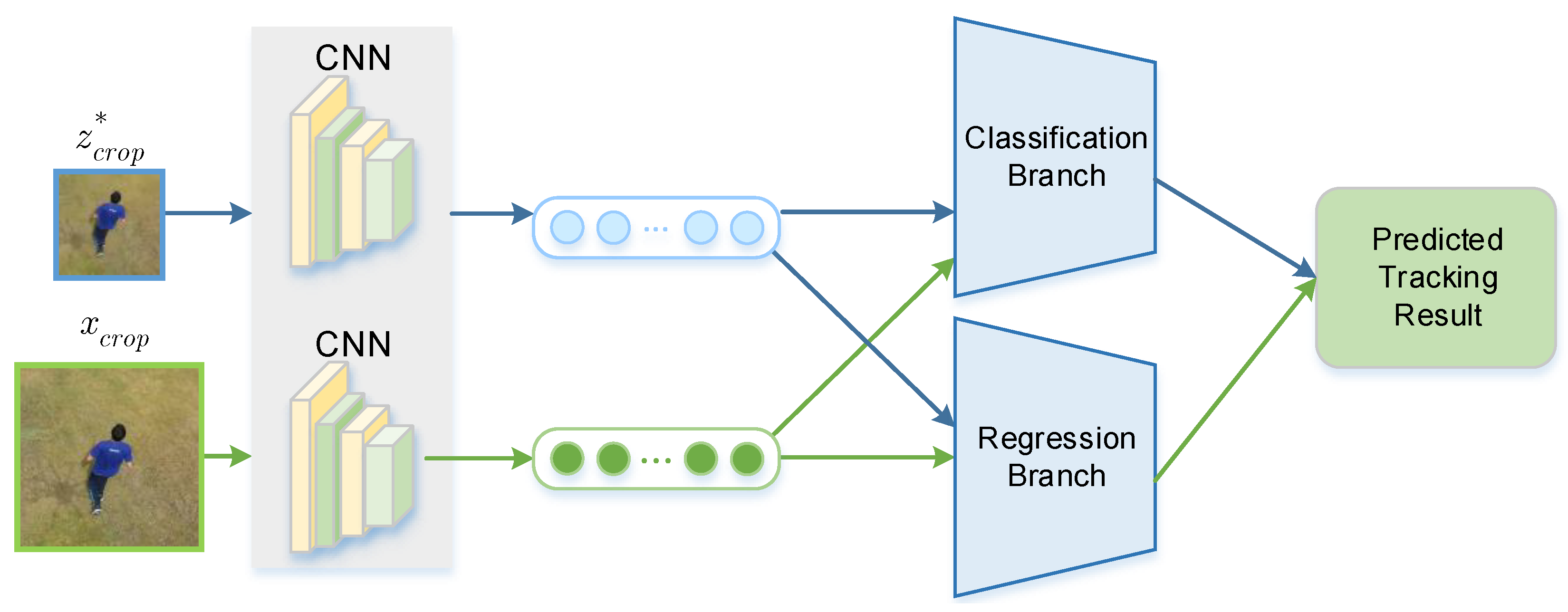

2.1. Visual Object Tracking

2.2. Adversarial Attacks

2.3. Adversarial Attacks in Remote Sensing

3. Attention-Enhanced One-Shot Attack

3.1. Motivation

3.2. Attention-Enhanced One-Shot Attack

3.2.1. Attention Feature Loss

3.2.2. Attention Confidence Loss

3.2.3. Background Disturbance Loss

3.2.4. Total Variation Loss

| Algorithm 1 Attention-Enhanced One-Shot Attack Method |
|---|
| Input: Original template target patch z and template search patch of the UAV video; Victim tracker (Siamese Network, classification branch and regression branch of RPN); Iteration |
| Output: Adversarial template target patch |
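The steps of Algorithm 1 are not reproduced in this section, so the following is only a generic sketch of how an iterative template-only attack is commonly structured: projected gradient descent on a perturbation that is clipped to a fixed budget. It is a swapped-in illustration, not the authors' procedure. The names `projected_gradient_attack`, `loss_grad`, `step_size`, and `eps`, the pixel range [0, 255], and the toy gradient are all hypothetical.

```python
from typing import Callable, List


def projected_gradient_attack(
    template: List[float],
    loss_grad: Callable[[List[float]], List[float]],
    steps: int,
    step_size: float,
    eps: float,
) -> List[float]:
    """Generic projected gradient descent on a flattened template patch.

    loss_grad is a hypothetical callable returning the gradient of the
    attack loss with respect to the (perturbed) template pixels.
    """
    delta = [0.0] * len(template)
    for _ in range(steps):
        # Current adversarial template, kept in the assumed pixel range.
        adv = [min(255.0, max(0.0, z + d)) for z, d in zip(template, delta)]
        grad = loss_grad(adv)
        # Descend on the loss, then project delta back into [-eps, eps].
        delta = [
            max(-eps, min(eps, d - step_size * g))
            for d, g in zip(delta, grad)
        ]
    return [min(255.0, max(0.0, z + d)) for z, d in zip(template, delta)]


def toy_grad(pixels: List[float]) -> List[float]:
    """Toy stand-in: gradient of sum(pixels), i.e. all ones."""
    return [1.0] * len(pixels)


adv_template = projected_gradient_attack(
    [10.0, 20.0], toy_grad, steps=5, step_size=1.0, eps=2.0
)
```

With the toy gradient, every pixel is pushed down until the perturbation reaches the budget, so the result differs from the input by exactly `eps` per pixel. In a real attack, `loss_grad` would be obtained by backpropagating the combined losses through the victim tracker.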

4. Experiments

4.1. Experimental Setup

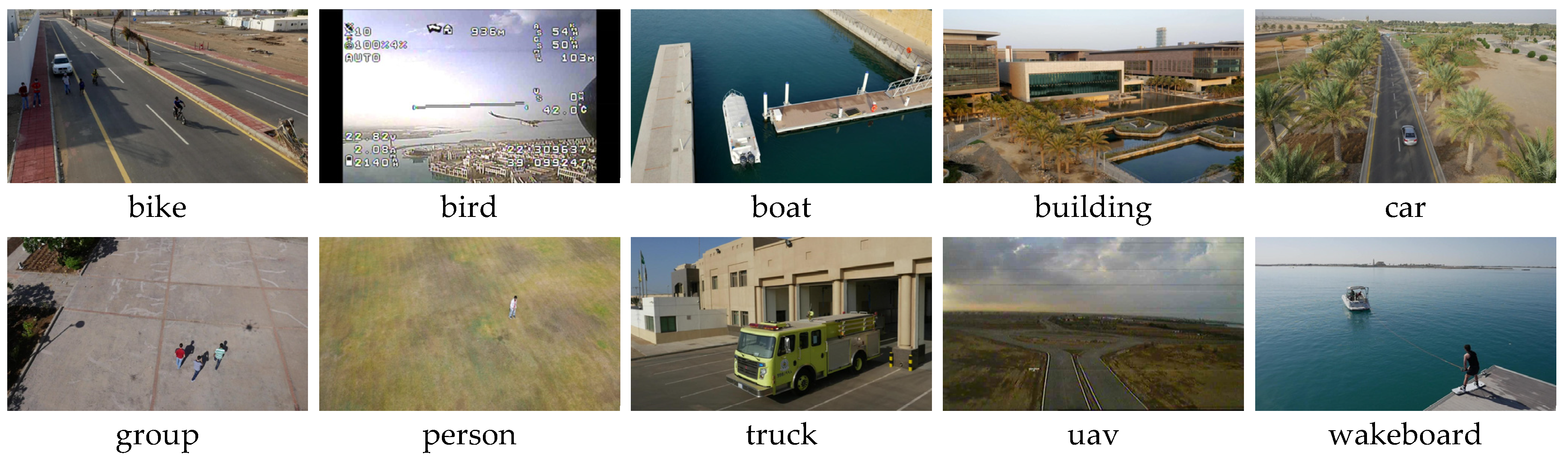

4.1.1. Dataset

4.1.2. Evaluation Metrics

4.1.3. Implementation Details

4.2. Quantitative Attack Results

4.2.1. Overall Comparison

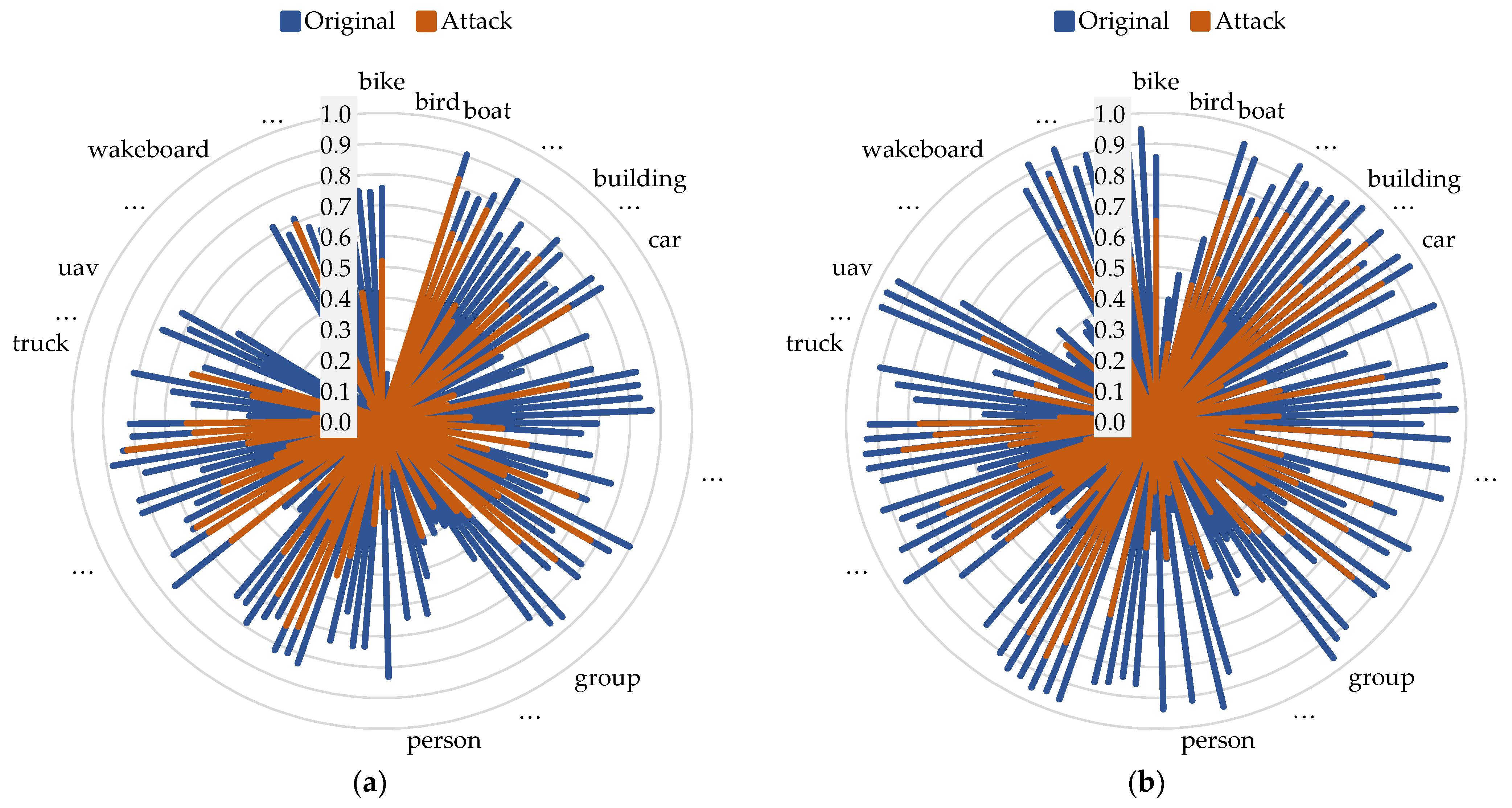

4.2.2. Comparison in Terms of Per-Class Success Rate and Precision

4.2.3. Comparison in Terms of Different Challenge Points

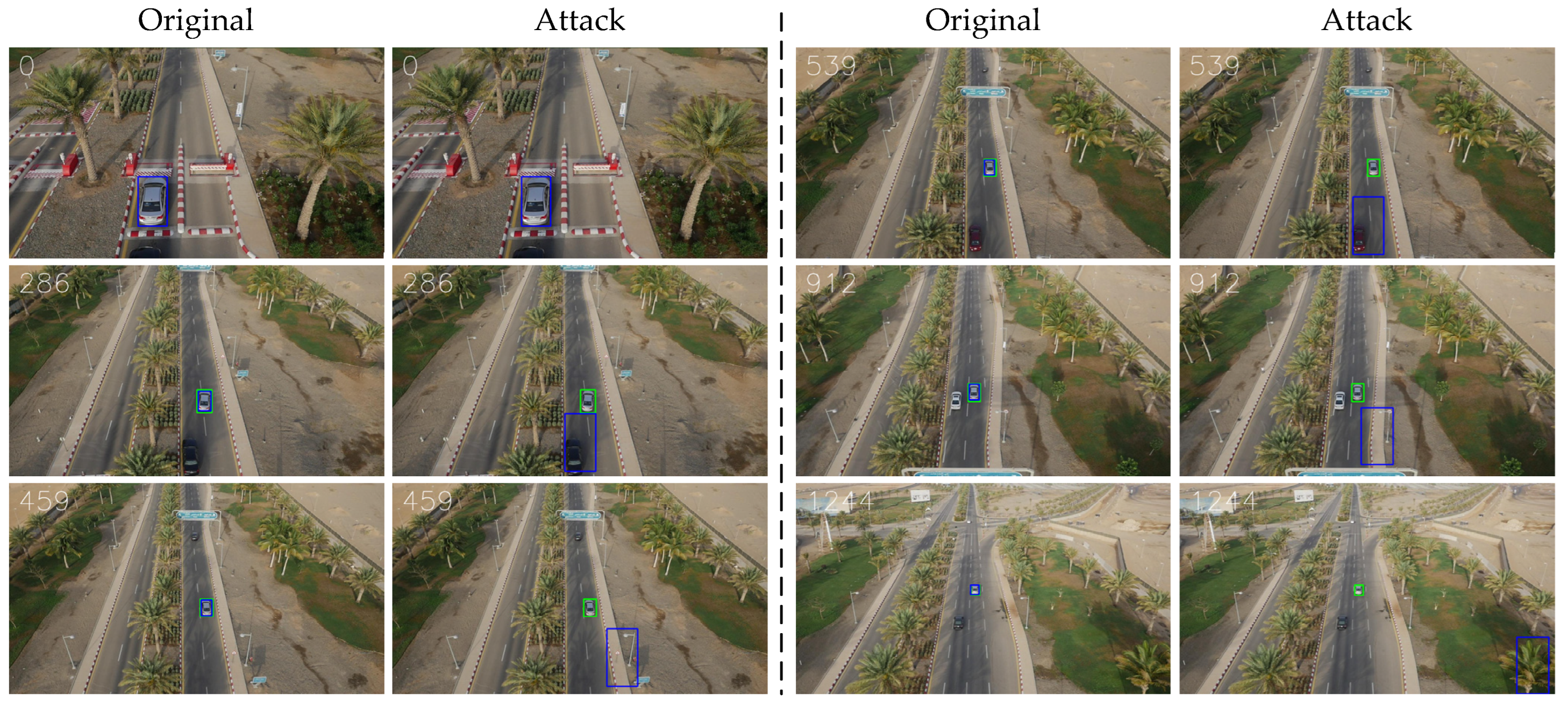

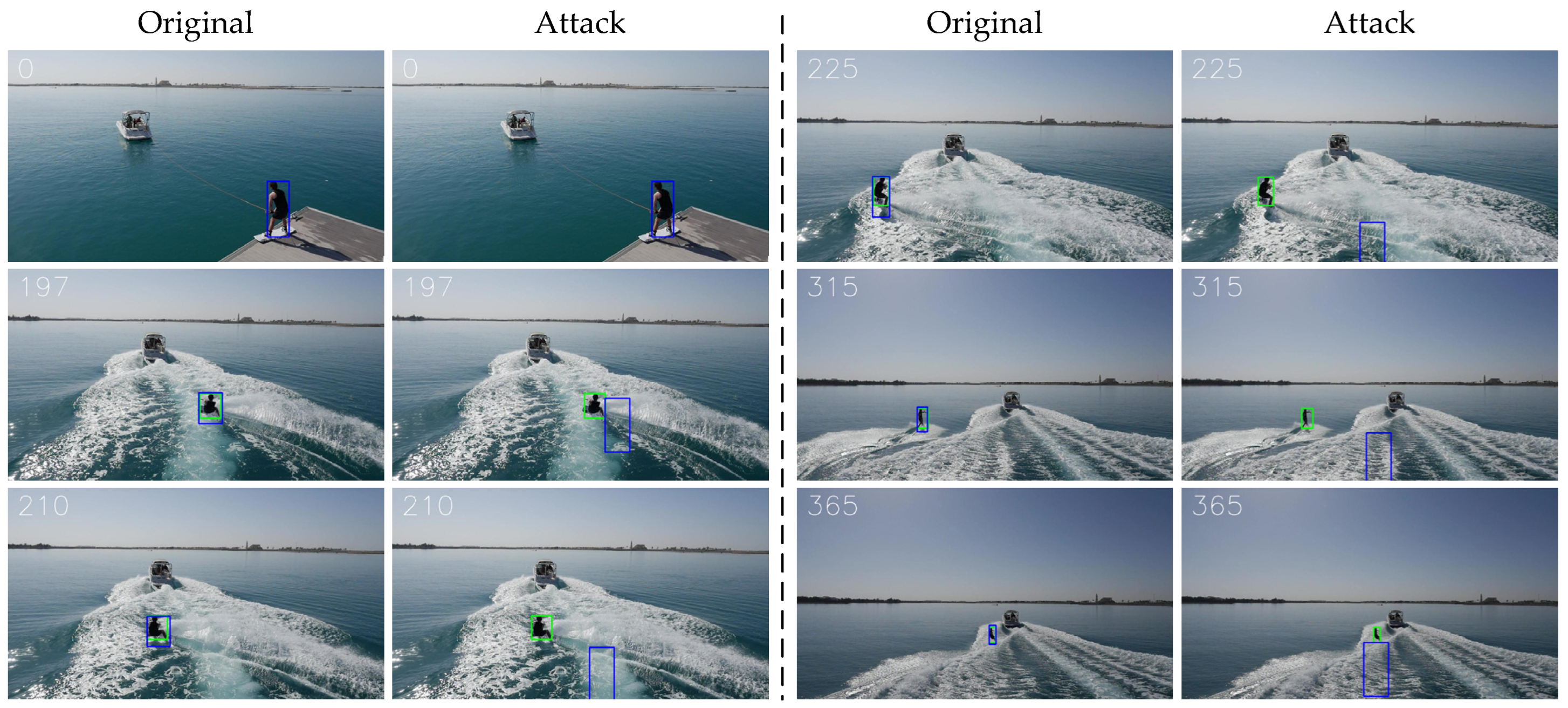

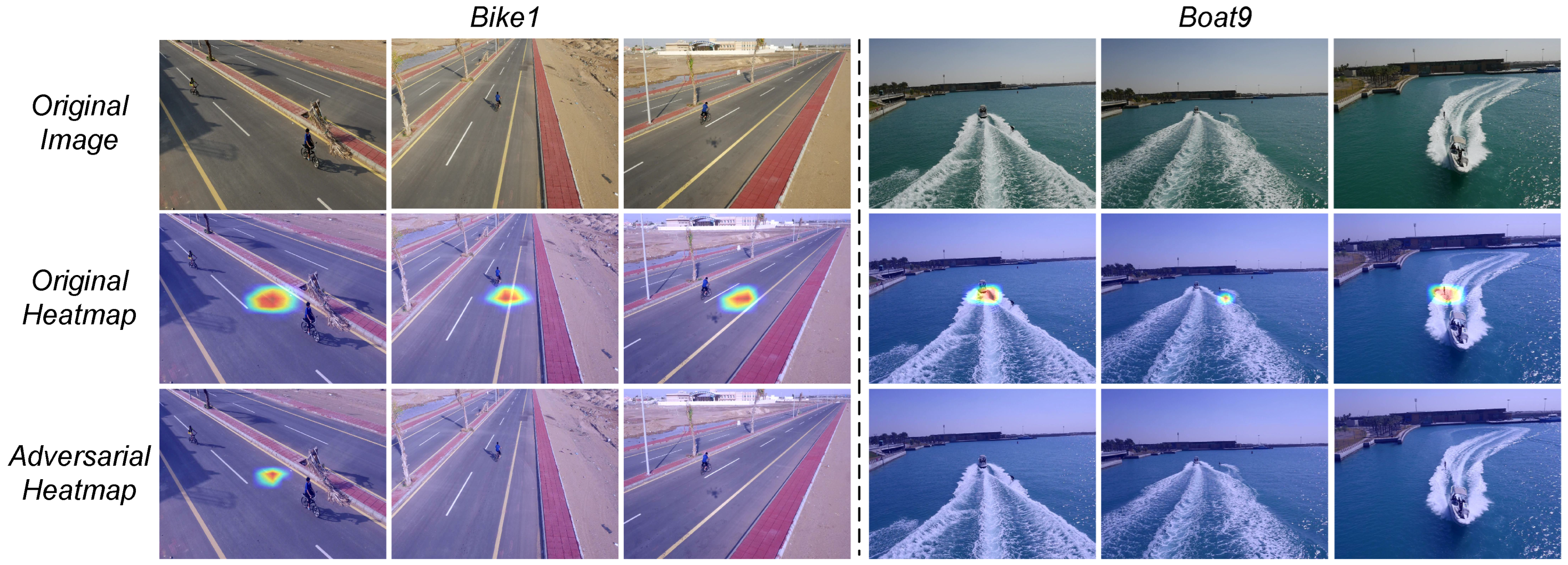

4.3. Visual Results

4.4. Ablation Studies

4.5. Comparison with SOTA Methods

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Tracker | Precision (%), Original | Precision (%), Attack | Success Rate (%), Original | Success Rate (%), Attack |
|---|---|---|---|---|
| SiamRPN | 79.3 | 25.6 | 59.5 | 17.6 |
| DaSiamRPN | 78.7 | 39.2 | 57.8 | 26.7 |
| SiamRPN++(A) | 77.4 | 42.6 | 58.0 | 29.0 |
| SiamRPN++(R) | 79.8 | 54.7 | 60.5 | 39.0 |
| SiamRPN++(M) | 79.3 | 57.4 | 59.8 | 37.6 |
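To make the per-tracker comparison easier to read, the short sketch below computes the absolute drop (in percentage points) in precision and success rate from the values in the table above. The dictionary layout and the helper name `drops` are choices made for this example only.

```python
# (precision original, precision attack, success original, success attack)
results = {
    "SiamRPN": (79.3, 25.6, 59.5, 17.6),
    "DaSiamRPN": (78.7, 39.2, 57.8, 26.7),
    "SiamRPN++(A)": (77.4, 42.6, 58.0, 29.0),
    "SiamRPN++(R)": (79.8, 54.7, 60.5, 39.0),
    "SiamRPN++(M)": (79.3, 57.4, 59.8, 37.6),
}


def drops(row):
    """Return (precision drop, success-rate drop) in percentage points."""
    p_orig, p_att, s_orig, s_att = row
    return round(p_orig - p_att, 1), round(s_orig - s_att, 1)


table_drops = {name: drops(row) for name, row in results.items()}
most_affected = max(table_drops, key=lambda name: table_drops[name][0])

for name, (dp, ds) in table_drops.items():
    print(f"{name}: precision -{dp}, success rate -{ds}")
```

On these numbers, SiamRPN shows the largest precision drop (53.7 points), while the deeper SiamRPN++ variants lose roughly 22 to 35 points.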

| Challenge Point | Success Rate (%), Original | Success Rate (%), Attack | Precision (%), Original | Precision (%), Attack |
|---|---|---|---|---|
| Aspect Ratio Change | 54.2 | 24.9 | 74.1 | 36.3 |
| Background Clutters | 41.9 | 16.7 | 61.5 | 30.0 |
| Camera Motion | 58.8 | 25.9 | 78.2 | 37.0 |
| Fast Motion | 49.0 | 20.9 | 67.7 | 30.9 |
| Full Occlusion | 32.9 | 18.3 | 54.9 | 37.8 |
| Illumination Variation | 53.0 | 22.8 | 72.3 | 33.4 |
| Low Resolution | 42.3 | 12.0 | 65.0 | 25.8 |
| Out of View | 51.3 | 27.8 | 69.4 | 41.7 |
| Partial Occlusion | 48.0 | 24.3 | 67.3 | 38.0 |
| Scale Variation | 55.5 | 27.5 | 74.6 | 40.3 |
| Similar Object | 50.8 | 27.5 | 71.1 | 43.7 |
| Viewpoint Change | 59.1 | 29.5 | 77.5 | 39.9 |

| SiamRPN++ (ResNet-50) | Precision (%) | Success Rate (%) |
|---|---|---|
| Original | 79.8 | 60.5 |
| Random Noise | 77.1 | 58.3 |
| Attack by | 62.2 | 45.4 |
| Attack by | 74.6 | 54.7 |
| Attack by | 57.4 | 41.6 |
| Attack by + | 59.4 | 43.1 |
| Attack by + | 55.7 | 39.8 |
| Attack by + | 55.6 | 39.7 |
| Attack by ++ | 54.5 | 38.4 |
| Attack by +++ | 54.7 | 39.0 |

| Method | SiamRPN, Precision (%) | SiamRPN, Success Rate (%) | SiamRPN++(R), Precision (%) | SiamRPN++(R), Success Rate (%) |
|---|---|---|---|---|
| Original | 79.3 | 59.5 | 79.8 | 60.5 |
| FGSM | 56.2 | 45.7 | 71.8 | 51.4 |
| C&W | 47.9 | 44.8 | 59.3 | 43.6 |
| Proposed | 25.6 | 17.6 | 54.7 | 39.0 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, Y.; Yin, G. Attention-Enhanced One-Shot Attack against Single Object Tracking for Unmanned Aerial Vehicle Remote Sensing Images. Remote Sens. 2023, 15, 4514. https://doi.org/10.3390/rs15184514
