Radar HRRP Open Set Target Recognition Based on Closed Classification Boundary

Abstract

1. Introduction

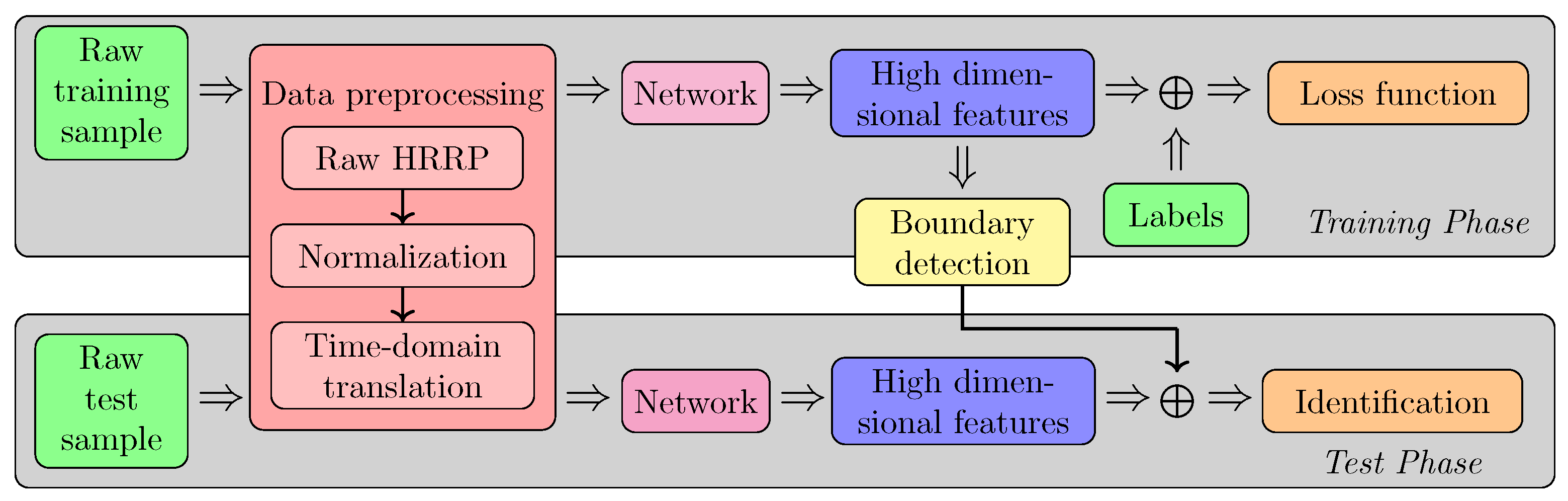

2. Materials

3. Methods

3.1. Data Preprocessing

3.1.1. Intensity Sensitivity
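A common remedy for intensity sensitivity in the HRRP literature is to scale every range profile to unit L2 norm, so that echoes differing only in overall amplitude map to the same vector. The sketch below illustrates that idea under this assumption; it is not necessarily the exact procedure used in this paper, and `l2_normalize` is a hypothetical helper name.

```python
import math


def l2_normalize(profile, eps=1e-12):
    """Scale one range profile (a list of amplitudes) to unit L2 norm.

    Hypothetical helper: eps guards against division by zero for an
    all-zero profile.
    """
    norm = math.sqrt(sum(x * x for x in profile))
    return [x / max(norm, eps) for x in profile]


# Two profiles that differ only by a gain factor of 5.
weak = [0.0, 3.0, 4.0, 0.0]
strong = [5.0 * x for x in weak]

weak_n = l2_normalize(weak)
strong_n = l2_normalize(strong)
```

After normalization the two profiles coincide, which is the property a classifier needs in order to ignore radar gain and target range effects on amplitude.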

3.1.2. Translation Sensitivity
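One widely used way to reduce translation sensitivity is centre-of-gravity alignment: the power-weighted centroid of each profile is computed and the profile is circularly shifted so that the centroid sits in the middle of the range window. The following is a minimal sketch of that technique, offered as an assumption about a typical pipeline rather than as this paper's method; `centroid_align` is a hypothetical name.

```python
def centroid_align(profile):
    """Circularly shift a range profile so its power centroid is centred.

    Hypothetical helper: the centroid is computed on a linear index axis,
    which is adequate when the target does not wrap around the window.
    """
    n = len(profile)
    power = [x * x for x in profile]
    total = sum(power)
    if total == 0:
        return list(profile)  # nothing to align in an empty profile
    centroid = sum(i * p for i, p in enumerate(power)) / total
    shift = n // 2 - int(round(centroid))
    return [profile[(i - shift) % n] for i in range(n)]


# The same target response placed at two different range offsets.
early = [0, 1, 2, 1, 0, 0, 0, 0]
late = [0, 0, 0, 0, 1, 2, 1, 0]

aligned_early = centroid_align(early)
aligned_late = centroid_align(late)
```

Both inputs end up as the same aligned profile, so a downstream classifier sees one representation regardless of where the target fell in the range window.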

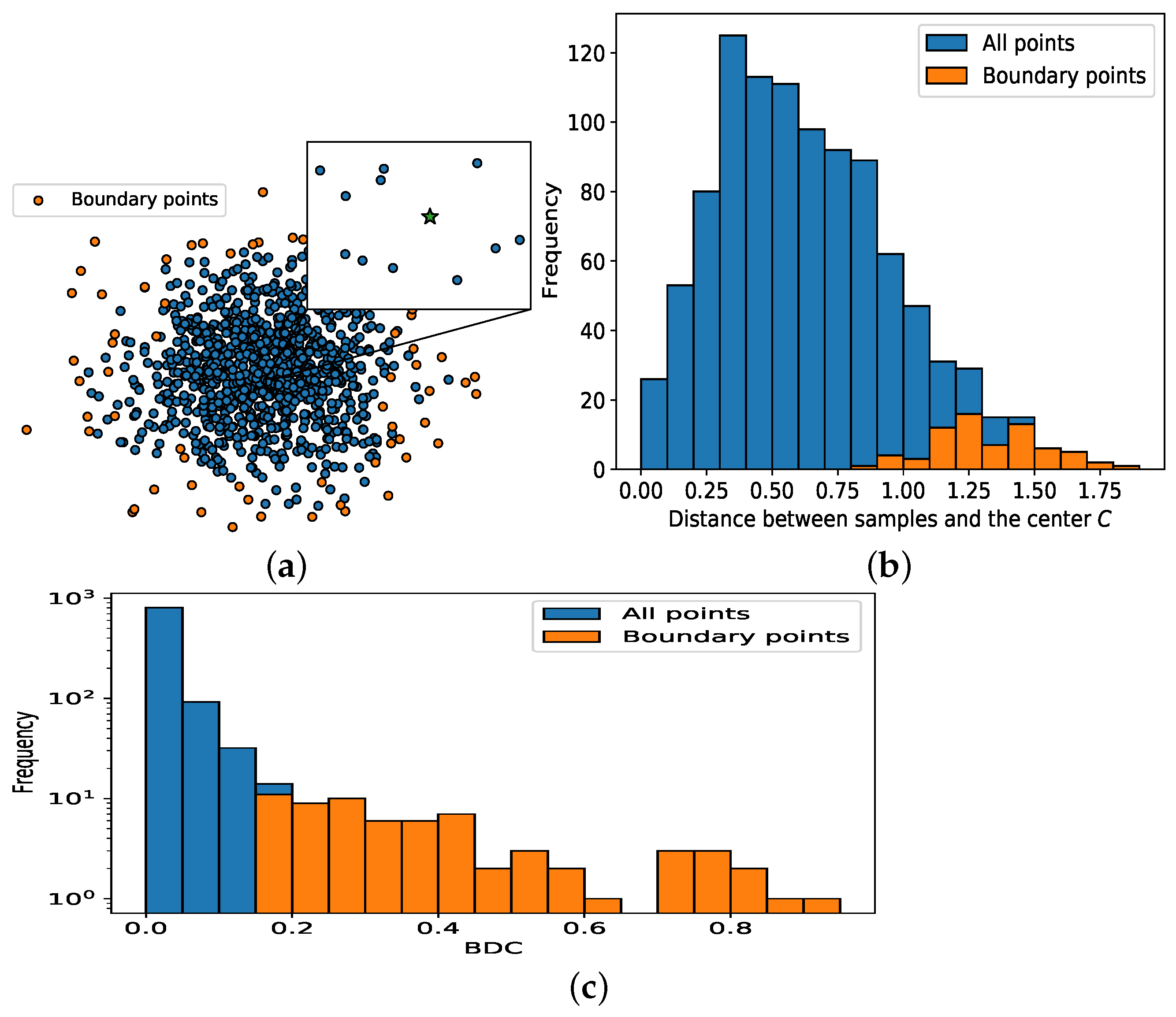

3.2. Boundary Detection Algorithm

Algorithm 1. Boundary detection algorithm.

3.3. Identification Procedure

Algorithm 2. Identification algorithm.

4. Results and Discussion

4.1. Experimental Settings

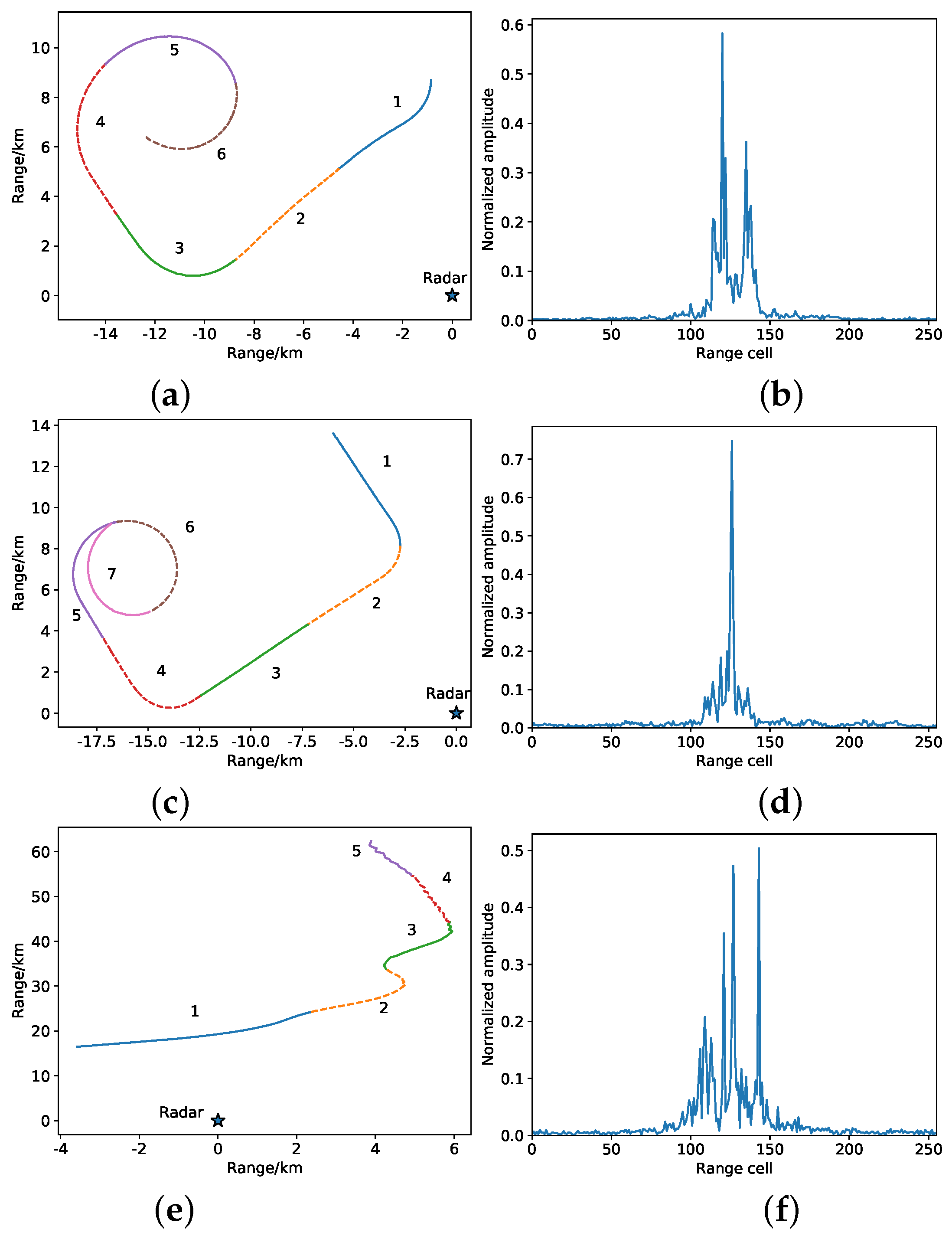

4.1.1. Data

4.1.2. Implementation Settings

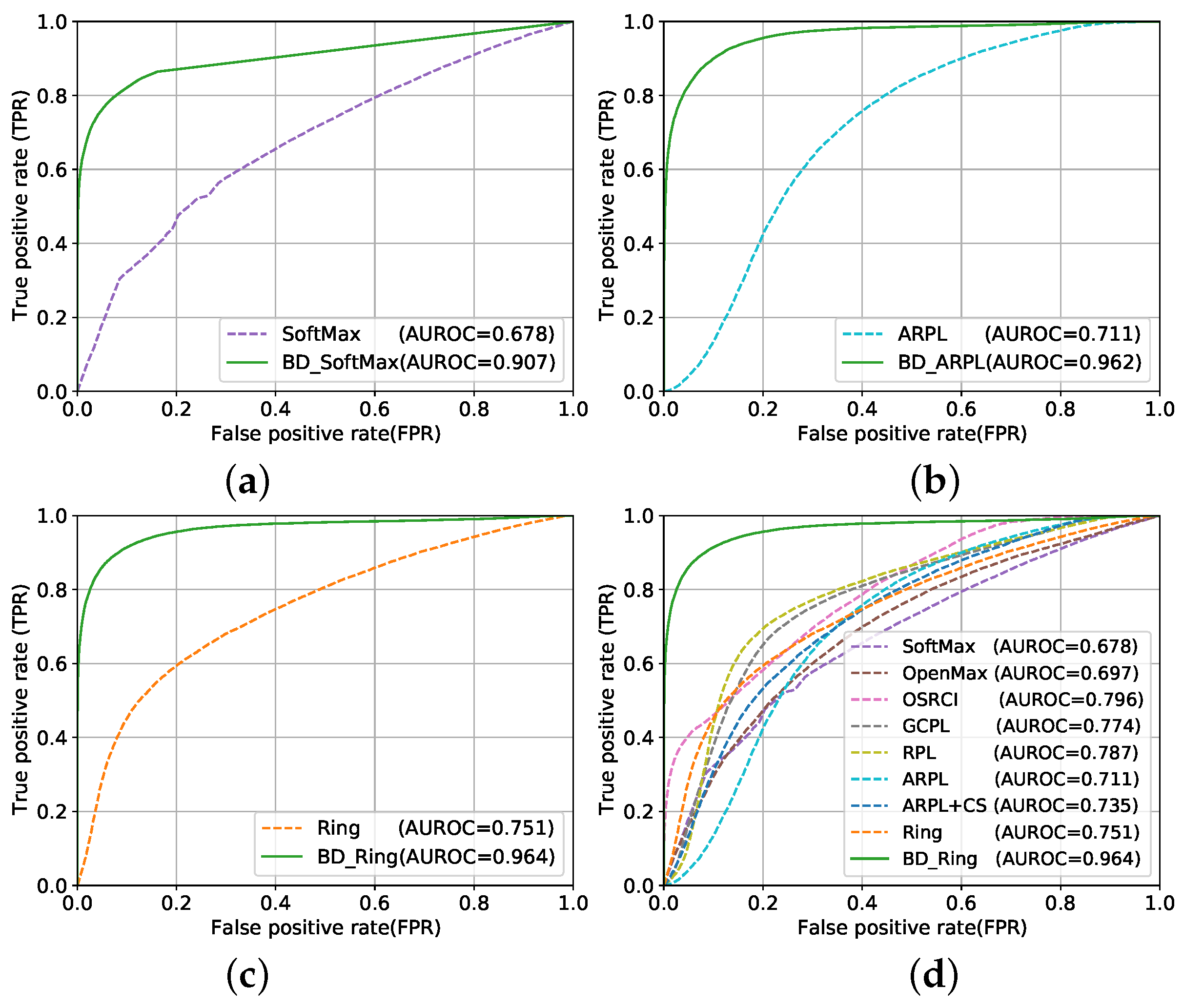

4.2. Identification Evaluation

4.3. Category Ablation Experiment

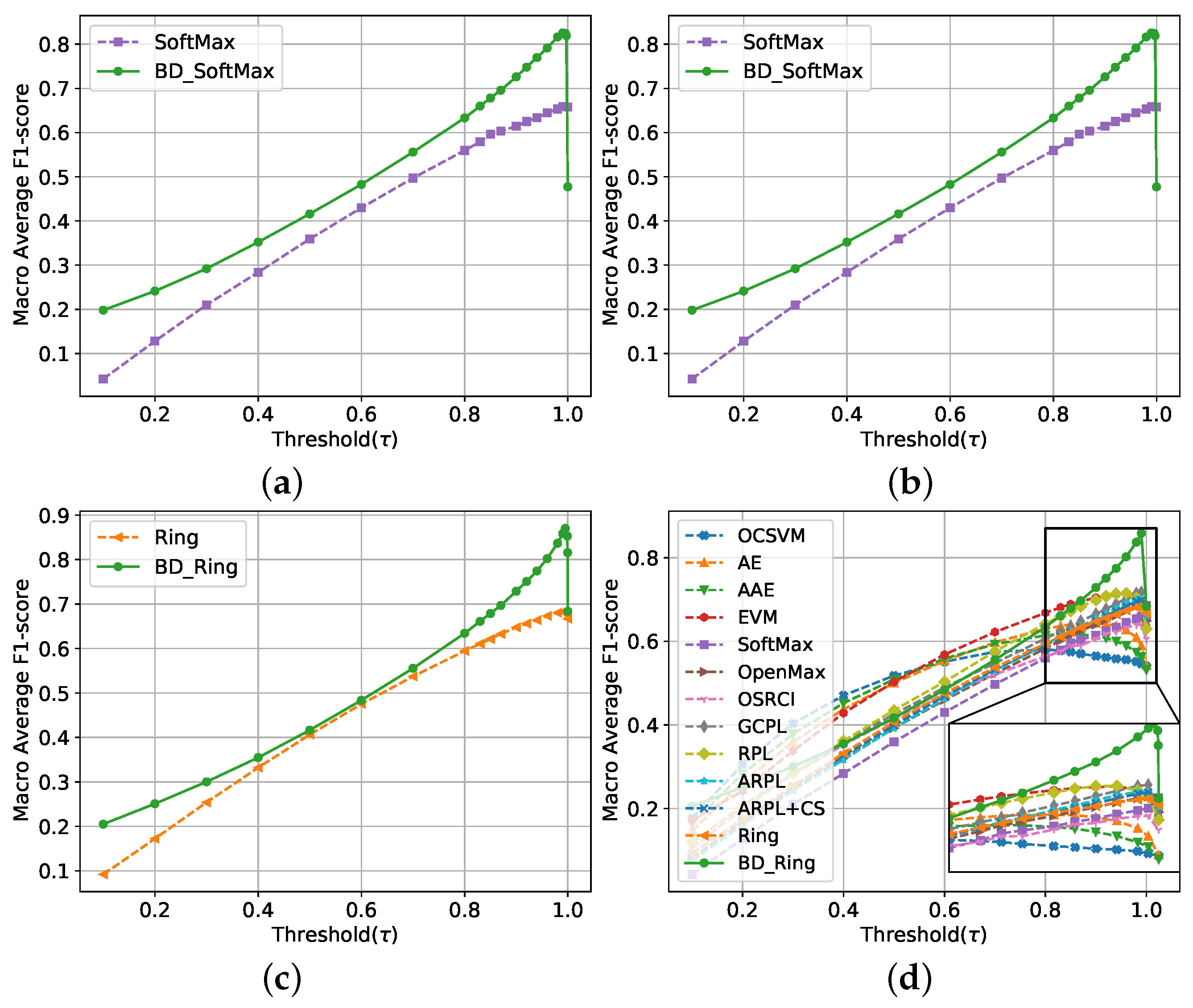

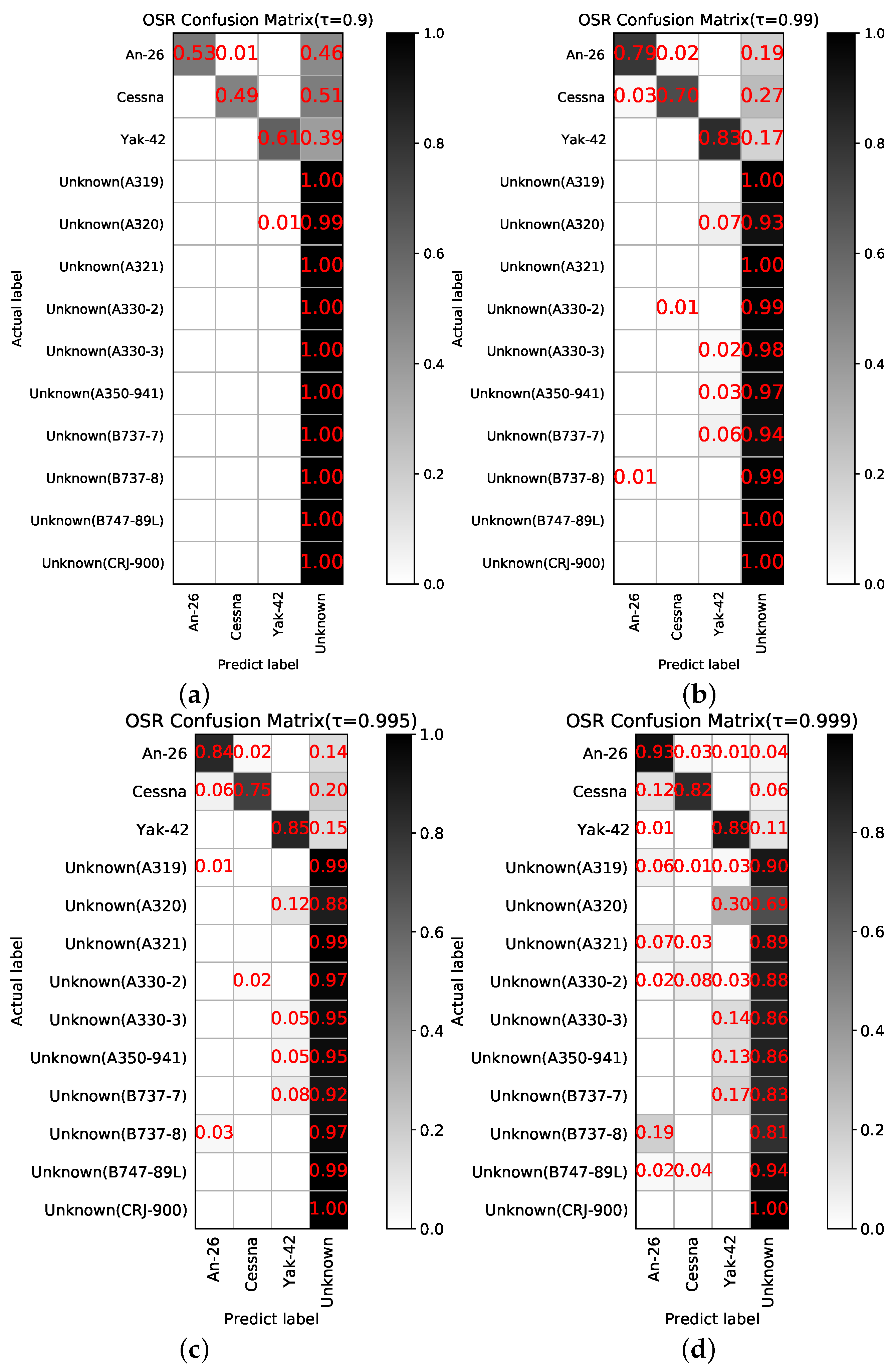

4.4. Threshold Discussion

4.5. Feature Visualization Analysis

4.6. Computational Complexity

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HRRP | High-resolution range profile |
| RATR | Radar automatic target recognition |
| CSR | Closed set recognition |
| OSR | Open set recognition |
| SVM | Support vector machine |
| SVDD | Support vector data description |
| OCSVM | One class support vector machine |
| GAN | Generative adversarial network |
| GCPL | Generalized convolutional prototype learning |
| RPL | Reciprocal points learning |
| ARPL | Adversarial reciprocal points learning |
| AE | Auto-encoder |
| k-NNs | k-nearest neighbor objects |
| BDC | Boundary detection coefficient |
| Distribution equilibrium coefficient | |
| Distance correction coefficient | |
| AUROC | Area under the receiver operating characteristic |
| ROC | Receiver operating characteristic |
| BD_SoftMax | Boundary detection SoftMax |
| BD_ARPL | Boundary detection adversarial reciprocal points learning |
| BD_Ring | Boundary detection Ring |
| BD_GCPL | Boundary detection generalized convolutional prototype learning |

References

- Liu, X.; Wang, L.; Bai, X. End-to-End Radar HRRP Target Recognition Based on Integrated Denoising and Recognition Network. Remote Sens. 2022, 14, 5254.
- Lin, C.-L.; Chen, T.-P.; Fan, K.-C.; Cheng, H.-Y.; Chuang, C.-H. Radar High-Resolution Range Profile Ship Recognition Using Two-Channel Convolutional Neural Networks Concatenated with Bidirectional Long Short-Term Memory. Remote Sens. 2021, 13, 1259.
- Pan, M.; Liu, A.; Yu, Y.; Wang, P.; Li, J.; Liu, Y.; Lv, S.; Zhu, H. Radar HRRP target recognition model based on a stacked CNN–Bi-RNN with attention mechanism. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.
- Chen, W.; Chen, B.; Peng, X.; Liu, J.; Yang, Y.; Zhang, H.; Liu, H. Tensor RNN with Bayesian nonparametric mixture for radar HRRP modeling and target recognition. IEEE Trans. Signal Process. 2021, 69, 1995–2009.
- Guo, D.; Chen, B.; Chen, W.; Wang, C.; Liu, H.; Zhou, M. Variational temporal deep generative model for radar HRRP target recognition. IEEE Trans. Signal Process. 2020, 68, 5795–5809.
- Scheirer, W.; Rocha, A.; Sapkota, A.; Boult, T. Toward open set recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1757–1772.
- Chai, J.; Liu, H.; Bao, Z. New kernel learning method to improve radar HRRP target recognition and rejection performance. J. Xidian Univ. 2009, 36, 5–8.
- Zhang, X.; Wang, P.; Feng, B.; Du, L.; Liu, H. A new method to improve radar HRRP recognition and outlier rejection performances based on classifier combination. Acta Autom. Sin. 2014, 40, 348–356.
- Wang, Y.; Chen, W.; Song, J.; Li, Y.; Yang, X. Open set radar HRRP recognition based on random forest and extreme value theory. In Proceedings of the International Conference on Radar, Nanjing, China, 17–19 October 2018.
- Jain, L.P.; Scheirer, W.J.; Boult, T.E. Multi-class open set recognition using probability of inclusion. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.
- Schölkopf, B.; Williamson, R.C.; Smola, A.J.; Shawe-Taylor, J.; Platt, J.C. Support vector method for novelty detection. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 29 November–4 December 1999.
- Scheirer, W.J.; Jain, L.P.; Boult, T.E. Probability models for open set recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 2317–2324.
- Roos, J.D.; Shaw, A.K. Probabilistic SVM for open set automatic target recognition on high range resolution radar data. In Proceedings of the SPIE Defense + Security, Baltimore, MD, USA, 17–21 April 2016.
- Scherreik, M.; Rigling, B. Multi-class open set recognition for SAR imagery. In Proceedings of the SPIE Defense + Security, Baltimore, MD, USA, 17–21 April 2016.
- Tian, L.; Chen, B.; Guo, Z.; Du, C.; Peng, Y.; Liu, H. Open set HRRP recognition with few samples based on multi-modality prototypical networks. Signal Process. 2022, 193, 108391.
- Ma, X.; Ji, K.; Zhang, L.; Feng, S.; Xiong, B.; Kuang, G. An open set recognition method for SAR targets based on multitask learning. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.
- Zhang, X.; Wang, W.; Zheng, X.; Wei, Y. A novel radar target recognition method for open and imbalanced high-resolution range profile. Digit. Signal Process. 2021, 118, 103212.
- Dang, S.; Cao, Z.; Cui, Z.; Pi, Y. Open set SAR target recognition using class boundary extracting. In Proceedings of the 2019 6th Asia-Pacific Conference on Synthetic Aperture Radar, Xiamen, China, 26–29 November 2019.
- Chen, W.; Wang, Y.; Song, J.; Li, Y. Open set HRRP recognition based on convolutional neural network. J. Eng. 2019, 21, 7701–7704.
- Giusti, E.; Ghio, S.; Oveis, A.H.; Martorella, M. Open set recognition in synthetic aperture radar using the OpenMax classifier. In Proceedings of the IEEE Radar Conference, New York City, NY, USA, 21–25 March 2022.
- Bendale, A.; Boult, T.E. Towards open set deep networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Los Alamitos, CA, USA, 26 June–1 July 2016.
- Neal, L.; Olson, M.; Fern, X.; Wong, W.; Li, F. Open set learning with counterfactual images. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.
- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, Canada, 8–13 December 2014.
- Yang, H.M.; Zhang, X.Y.; Yin, F.; Liu, C.L. Robust classification with convolutional prototype learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.
- Yang, H.M.; Zhang, X.Y.; Yin, F.; Yang, Q.; Liu, C.L. Convolutional prototype network for open set recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 2358–2370.
- Chen, G.; Qiao, L.; Shi, Y.; Peng, P.; Li, J.; Huang, T.; Pu, S.; Tian, Y. Learning open set network with discriminative reciprocal points. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.
- Chen, G.; Peng, P.; Wang, X.; Tian, Y. Adversarial reciprocal points learning for open set recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 8065–8081.
- Masci, J.; Meier, U.; Ciresan, D.; Schmidhuber, J. Stacked convolutional auto-encoders for hierarchical feature extraction. In Proceedings of the Artificial Neural Networks and Machine Learning—ICANN 2011, Espoo, Finland, 14–17 June 2011.
- Wan, J.; Bo, C.; Xu, B.; Liu, H.; Lin, J. Convolutional neural networks for radar HRRP target recognition and rejection. EURASIP J. Adv. Signal Process. 2019, 5.
- Oza, P.; Patel, V.M. C2AE: Class conditioned auto-encoder for open-set recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.
- Sun, X.; Yang, Z.; Zhang, C.; Peng, G.; Ling, K.V. Conditional Gaussian distribution learning for open set recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.
- Ge, Z.; Demyanov, S.; Chen, Z.; Garnavi, R. Generative OpenMax for multi-class open set classification. CoRR 2017, abs/1707.07418.
- Makhzani, A.; Shlens, J.; Jaitly, N.; Goodfellow, I. Adversarial autoencoders. In Proceedings of the International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016.
- Rudd, E.M.; Jain, L.P.; Scheirer, W.J.; Boult, T.E. The extreme value machine. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 762–768.
- Zheng, Y.; Pal, D.K.; Savvides, M. Ring loss: Convex feature normalization for face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.
- Maaten, L.; Hinton, G. Visualizing non-metric similarities in multiple maps. Mach. Learn. 2012, 87, 33–55.

| Class | Training Samples | Test Samples |
|---|---|---|
| An-26 | 17,334 | 10,400 |
| Cessna | 17,333 | 13,000 |
| Yak-42 | 14,650 | 7800 |

| Method | AUROC | | | | |
|---|---|---|---|---|---|
| OCSVM [11] | 66.7 | - | - | - | - |
| AE [28] | 70.5 | - | - | - | - |
| AAE [33] | 76.4 | - | - | - | - |
| EVM [34] | 79.8 | - | - | - | - |
| SoftMax [22] | 67.8 | 90.7 | 85.9 | 79.6 | 72.0 |
| OpenMax [21] | 69.7 | - | - | - | - |
| OSRCI [22] | 79.6 | - | - | - | - |
| GCPL [24] | 77.4 | 94.5 | 94.6 | 94.6 | 94.4 |
| RPL [26] | 78.7 | 93.6 | 93.9 | 93.9 | 93.8 |
| ARPL [27] | 71.1 | 96.2 | 96.2 | 96.2 | 95.0 |
| ARPL + CS [27] | 73.5 | 93.3 | 93.4 | 93.3 | 91.9 |
| Ring [35] | 75.1 | 96.4 | 96.4 | 96.4 | 96.4 |
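For readers unfamiliar with the AUROC column, the metric can be read as the probability that a randomly chosen known-class sample receives a higher "knownness" score than a randomly chosen unknown-class sample, with ties counted as one half. The sketch below computes it directly from that pairwise definition; the scores are made-up illustrative values, `auroc` is a hypothetical helper, and the table values appear to be this quantity expressed as a percentage.

```python
def auroc(known_scores, unknown_scores):
    """AUROC via the pairwise (Mann-Whitney) definition.

    Higher scores are assumed to mean "more likely a known class".
    Quadratic in the number of samples, which is fine for illustration.
    """
    wins = 0.0
    for k in known_scores:
        for u in unknown_scores:
            if k > u:
                wins += 1.0
            elif k == u:
                wins += 0.5
    return wins / (len(known_scores) * len(unknown_scores))


# Illustrative scores only, not data from the experiments.
known = [0.9, 0.8, 0.4]
unknown = [0.7, 0.3]
value = auroc(known, unknown)  # 5 of 6 pairs are ranked correctly
```

Because the metric depends only on the ranking of scores, it needs no rejection threshold, which is why it is a convenient way to compare open set detectors.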


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xia, Z.; Wang, P.; Liu, H. Radar HRRP Open Set Target Recognition Based on Closed Classification Boundary. Remote Sens. 2023, 15, 468. https://doi.org/10.3390/rs15020468
