1. Introduction

Land desertification is a major ecological problem facing arid and semi-arid regions worldwide [1]. It occurs mostly in mid-latitude regions, directly or indirectly affects more than 100 countries and more than one billion people, causes losses of up to USD 10 billion per year, and is ranked among the most serious environmental and social problems in the world [2,3]. According to United Nations statistics on land desertification, the problem is particularly serious in China, where it is concentrated in the western and northern regions, severely restricting local economic development and placing great pressure on the grassland ecological environment [4]. Among these regions, the Hangjin Banner in the Inner Mongolia Autonomous Region is a key area for the prevention and control of land desertification in China [5]. The Hangjin Banner is rich in vegetation resources, dominated by strongly drought-tolerant tree species and low shrubs. The topography consists mainly of hills and plateaus, with high elevations and complex, varied terrain [6]. Consequently, monitoring land desertification in this region often suffers from low efficiency and high labor costs, which hinders the prevention and management of land desertification.

With the rapid development of remote sensing technology, UAV remote sensing technology has become more and more mature, which is widely used in remote sensing vegetation classification research by virtue of its low cost and high accuracy in obtaining remote sensing images [

7]. Scholars at home and abroad have utilized UAV remote sensing technology to classify urban vegetation [

8], grassland vegetation [

9,

10], forest vegetation [

11,

12], wetland vegetation [

13], crops [

14,

15], and other vegetation, and all of them have achieved good results. UAV remote sensing technology has also gradually been combined with geological exploration and forestry resource surveys, and compared with the traditional field survey method, it can not only monitor the distribution of vegetation but also accurately identify the growth of vegetation, which has become an efficient and accurate means of remote monitoring [

16]. In the future, UAV remote sensing technology will also be used for the monitoring of farmland, the assessment of crop growth, and the monitoring of other natural disasters, as well as in other applications [

17]. Meanwhile, high-resolution satellite remote sensing images continue to surpass the meter and sub-meter accuracy benchmarks [

18]. Among the existing technologies, high-resolution satellite remote-sensing images have the ability to dynamically monitor land desertification on a large scale [

19]. High-resolution satellite remote sensing images are used to establish vegetation cover models in a study area and analyze the areas that have undergone or are about to face land desertification disasters [

20,

21]. According to the relationship between vegetation coverage and desertification degree, the degree of desertification is graded, with FVC > 0.8 indicating non-desertification (potential desertification); 0.6 < FVC ≤ 0.8 refers to mild desertification; 0.4 < FVC ≤ 0.6 is moderate desertification; 0.2 < FVC ≤ 0.4 refers to severe desertification; and FVC ≤ 0.2 indicates extremely severe desertification [

22]. Therefore, areas with vegetation coverage (FVC) less than 0.3 are designated as key monitoring areas for land desertification issues. Subsequently, visible remote sensing images from drones in a study area are utilized to extract desert vegetation information by processing the images, which are used to evaluate the degree of land desertification in the area [

23,

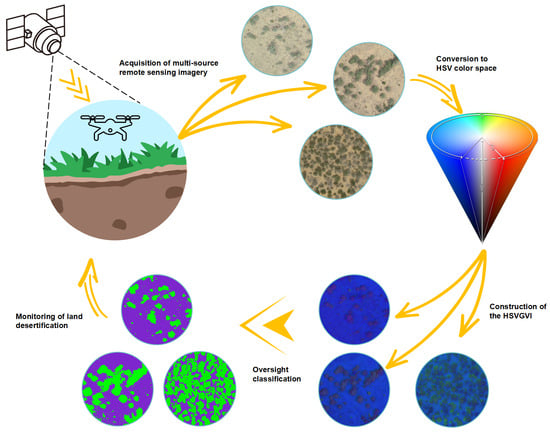

24]. Therefore, extracting desert vegetation data through a combination of multi-source remote sensing data based on high-resolution satellite remote sensing images and UAV visible remote sensing images can help us dynamically monitor and combat land desertification, improve monitoring efficiency, and significantly reduce the workload of monitoring personnel.
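The FVC grading and the key-monitoring threshold described above can be sketched in a few lines. This is only an illustration of the thresholds quoted in the text; the function names `desertification_grade` and `needs_key_monitoring` are hypothetical and are not part of the original study.

```python
def desertification_grade(fvc: float) -> str:
    """Map vegetation coverage (FVC, in [0, 1]) to the grade quoted in the text."""
    if not 0.0 <= fvc <= 1.0:
        raise ValueError("FVC must lie in [0, 1]")
    if fvc > 0.8:
        return "non-desertification (potential desertification)"
    if fvc > 0.6:
        return "mild"
    if fvc > 0.4:
        return "moderate"
    if fvc > 0.2:
        return "severe"
    return "extremely severe"


def needs_key_monitoring(fvc: float, threshold: float = 0.3) -> bool:
    """Flag an area whose FVC is below the key-monitoring threshold (0.3 in the text)."""
    return fvc < threshold


# Illustrative FVC values only; they are not measurements from the study.
for value in (0.85, 0.50, 0.30, 0.15):
    print(value, desertification_grade(value), needs_key_monitoring(value))
```

Because each interval is closed on its upper bound, a value that sits exactly on a boundary (for example, FVC = 0.2) falls into the more severe grade.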

In the process of extracting vegetation information, constructing a vegetation index is an indispensable technical means of extracting vegetation coverage and monitoring land desertification from UAV visible-light images. Wang Xiaoqin et al. [25] proposed a new vegetation index, the VDVI, by combining the vegetation and non-vegetation characteristics of UAV visible images and drawing on the construction principle of the NDVI; its extraction accuracy can exceed 90%. Li Dongsheng et al. [26] validated both the EXG and the VDVI and reported accuracies above 90%, indicating that these indices can extract vegetation information accurately. Bareth et al. [27] proposed the RGBVI for extracting vegetation cover in agricultural fields, with an accuracy above 85%. However, because desert environments are complex and harsh, vegetation indices commonly used in cities and villages are difficult to adapt to desert vegetation. In particular, in the desert area of Northwest China, strong light and a dry climate cause tall tree canopies to cast dark shadow textures, and the vegetation has few stems and leaves and consists mostly of dry shrubs [28]. As a result, vegetation index models constructed in the RGB color space are often limited by the shadow texture of vegetation, which causes misclassification and large errors [29]. This is because visible images are based on the RGB color space, in which the R, G, and B channels are strongly correlated and hard to analyze quantitatively, so the classification results cannot provide accurate data for land desertification monitoring [30]. For complex desert environments, it is crucial to ensure both the accuracy of vegetation data extraction and the applicability of the vegetation index model [31,32,33]. The HSV color space model focuses more on color representation and is less affected by light; the H value varies greatly between different feature types and is relatively consistent with the human eye's subjective perception of color. It captures fine green vegetation well and also detects vegetation under shadow texture, so it has great potential in feature recognition and classification. Therefore, the HSVGVI was established in the HSV color space by using the channel enhancement method. It makes full use of the fact that each variable in the HSV space can be analyzed independently and quantitatively and reduces the influence of strong light and shadow texture, so that vegetation information can be better extracted and the trend of land desertification can be dynamically monitored in desert areas with complex ground conditions.
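The argument that hue is less affected by light than the RGB channels can be illustrated with a small sketch. It uses the standard-library `colorsys.rgb_to_hsv` conversion; the pixel values are hypothetical, and shadow is modeled, as a simplifying assumption, as a uniform darkening of all three channels.

```python
import colorsys

# Hypothetical pixel values with channels in [0, 1]; not taken from the study data.
sunlit_leaf = (0.20, 0.60, 0.20)
shaded_leaf = tuple(0.3 * c for c in sunlit_leaf)  # same color at 30% brightness
bare_soil = (0.60, 0.50, 0.35)


def describe(rgb):
    """Return (hue in degrees, saturation, value) for an RGB triple."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    return round(h * 360.0, 1), round(s, 3), round(v, 3)


for name, rgb in (("sunlit leaf", sunlit_leaf),
                  ("shaded leaf", shaded_leaf),
                  ("bare soil", bare_soil)):
    print(f"{name:12s} RGB={tuple(round(c, 2) for c in rgb)} HSV={describe(rgb)}")
```

Under this assumption, the shaded leaf keeps the hue of the sunlit leaf and differs only in the V channel, while its green channel drops to less than a third of the sunlit value. Real shadows also shift color slightly, so the invariance is approximate in practice.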

4. Results

4.1. Building Index Models

Based on the construction methods of the vegetation index models described in the previous section, the EXG (excess green index), RGBVI (red–green–blue vegetation index), MGRVI (modified green–red vegetation index), NGRDI (normalized green–red difference index), and VDVI (visible-band difference vegetation index) models were constructed in the RGB color space through band combination calculation. The HSVVI (hue–saturation–value vegetation index) and HSVGVI (hue–saturation–value green enhancement vegetation index) models were constructed based on the HSV color space. All vegetation index models are shown in Figure 7.
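As a minimal sketch of the band combination calculation, the five RGB-space indices can be computed per pixel as follows. The formulas are the commonly published definitions of these indices and are an assumption here; they may differ in detail (for example, in channel normalization) from the forms used in this study. The HSVVI and HSVGVI are omitted because their formulas are not given in this part of the text. The function names are hypothetical.

```python
def _ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def rgb_vegetation_indices(r: float, g: float, b: float) -> dict:
    """Compute five visible-band indices for one pixel with channels in [0, 1]."""
    total = r + g + b
    # Normalized chromatic coordinates, used here for EXG only (an assumption).
    rn, gn, bn = (_ratio(c, total) for c in (r, g, b))
    return {
        "EXG": 2 * gn - rn - bn,
        "NGRDI": _ratio(g - r, g + r),
        "MGRVI": _ratio(g * g - r * r, g * g + r * r),
        "RGBVI": _ratio(g * g - b * r, g * g + b * r),
        "VDVI": _ratio(2 * g - r - b, 2 * g + r + b),
    }


# Hypothetical pixels: a green leaf and a neutral gray surface.
print(rgb_vegetation_indices(0.20, 0.60, 0.20))
print(rgb_vegetation_indices(0.50, 0.50, 0.50))
```

All five indices are positive for the green pixel and zero for the gray one, which is what allows a threshold or a classifier to separate vegetation from background on the index image.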

4.2. Supervised Classification of Each Model

After the vegetation index models were constructed, supervised classification of the ground features was carried out with a support vector machine (SVM). The aim was to divide the study samples into two categories (vegetation and bare soil) by finding the hyperplane that maximizes the margin between them. The training sample points closest to the hyperplane, the support vectors, determine the classification boundary and the classification decisions [64]. To ensure the accuracy of supervised classification, an ROI separability tool was used to compute the separability between classes, and the separability between the training samples of different regions of interest was kept greater than 1.9. Separability was measured with the Jeffries–Matusita distance and the transformed divergence, which range over [0, 2]. The greater the value, the better the discriminatory ability; a value greater than 1.8 was considered satisfactory, and a value greater than 1.9 was considered accurate [65]. Based on the spatial resolution of the UAV images, the area of a single pixel was calculated. By means of field surveys, validation sample squares were established on a per-pixel basis. The feature attribute (vegetation or bare soil) that accounted for more than 50% of a sample square was recorded as the attribute of that pixel. The sample squares were then vectorized one by one according to the percentage of each feature type within them, and validation samples were obtained from the feature information of each pixel. In this way, validation samples for the multi-shaded areas and the vegetation-dense areas were obtained for subsequent accuracy validation.
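The separability check can be illustrated with a simplified sketch of the Jeffries–Matusita distance. It assumes a single feature (one index value per training pixel) and Gaussian class distributions, whereas ROI separability tools typically work on multi-band statistics; the training values and the function name are hypothetical.

```python
import math
from statistics import mean, variance


def jeffries_matusita(class_a, class_b) -> float:
    """Jeffries-Matusita distance in [0, 2] for two univariate Gaussian classes."""
    m1, m2 = mean(class_a), mean(class_b)
    v1, v2 = variance(class_a), variance(class_b)
    # Bhattacharyya distance between two univariate normal distributions.
    bhattacharyya = (0.25 * (m1 - m2) ** 2 / (v1 + v2)
                     + 0.5 * math.log((v1 + v2) / (2.0 * math.sqrt(v1 * v2))))
    return 2.0 * (1.0 - math.exp(-bhattacharyya))


# Hypothetical vegetation-index values for two training regions of interest.
vegetation_roi = [0.42, 0.47, 0.51, 0.45, 0.49]
bare_soil_roi = [0.05, 0.08, 0.03, 0.06, 0.07]

jm = jeffries_matusita(vegetation_roi, bare_soil_roi)
print(f"JM distance: {jm:.4f}; passes the 1.9 criterion: {jm > 1.9}")
```

Two identical sample sets give a distance of 0, and well-separated sets approach 2, which matches the [0, 2] range quoted above.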

Figure 8 shows the supervised classification results of these seven vegetation indices compared with the validation samples (green color indicates vegetation and purple color indicates bare soil):

Comparing the supervised classification results in Figure 7 with the raw UAV image data for the two types of study area gives the following preliminary findings. In the multi-shaded region, under high-intensity light, the branching, leafy tree canopies cast deep shadow textures that cover a considerable area of low shrub vegetation on the ground surface. Because the three channels of the RGB color space are strongly correlated and cannot be analyzed quantitatively on their own, a large number of misclassifications occur, which seriously affects the accuracy of vegetation extraction. The EXG, NGRDI, MGRVI, VDVI, and RGBVI cannot extract low vegetation under shadow coverage, so some vegetation pixels are misclassified as bare soil pixels. By contrast, in the HSVVI and HSVGVI models constructed in the HSV color space, the color type in the image is controlled by the H channel alone, which is relatively independent and more discriminative; these models can therefore better detect vegetation under shadow coverage.

The densely vegetated areas are located in arid and semi-arid regions with mostly low and dry shrub vegetation, and the grass on the ground is too sparse and has low chlorophyll content. This results in poor green light reflectance and leads to the inability to recognize some of the vegetated areas. NGRDI, MGRVI, and HSVGVI models can maintain a certain accuracy in detecting vegetation in grassland areas because these three vegetation indices enhance the green channel during the model construction. All other vegetation indices have more serious misclassification problems, resulting in generally low accuracy in detecting vegetation cover in the study area.

4.3. Calculation and Statistics of the Proportion of Ground Features in Each Area

In this paper, reference data such as the total area of the study samples, specific vegetation area, and non-vegetation area were obtained through field investigations, which were used to evaluate the accuracy of the feature coverage determined with each vegetation index model. The following calculation method was used for feature coverage: the ratio of the area of two types of features after supervised classification using each vegetation index model to the total area of the study area is taken as the coverage of these features in the study area, as shown in Equation (7):

$$C_i = \frac{S_i}{S} \qquad (7)$$

where $C_i$ is the coverage of feature $i$, $S_i$ is the area of that feature, and $S$ is the total area of the study area.
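The coverage calculation can be sketched on a classified raster as follows. Because every pixel covers the same ground area, the area ratio reduces to a pixel-count ratio. The mask, the label values, and the function name are hypothetical.

```python
def feature_coverage(classified, target_label) -> float:
    """Share of the study area covered by one feature class.

    `classified` is a 2D list of per-pixel class labels. Every pixel has the
    same area, so area(feature) / area(total) equals the pixel-count ratio.
    """
    total_pixels = sum(len(row) for row in classified)
    if total_pixels == 0:
        raise ValueError("classified raster is empty")
    feature_pixels = sum(1 for row in classified for label in row if label == target_label)
    return feature_pixels / total_pixels


# Hypothetical 4 x 5 classification result: 1 = vegetation, 0 = bare soil.
classified = [
    [0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 1, 0],
]

print("vegetation coverage:", feature_coverage(classified, 1))
print("bare soil coverage:", feature_coverage(classified, 0))
```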

Based on the above calculation process, the percentage of each feature and the vegetation cover obtained with each vegetation index after supervised classification can be determined. By comparing these values with the reference data, the ability of the various vegetation indices to extract vegetation information and monitor the process of land desertification can be preliminarily judged. Vegetation coverage statistics for multi-shaded and densely vegetated areas are presented in Table 2.

4.4. Accuracy Evaluation and Error Analysis of Supervised Classification Results

4.4.1. Accuracy Assessment and Construction of Confusion Matrix

In this study, the entire sample area was first divided into a grid by individual pixels, and the area occupied by individual pixels was calculated based on the spatial resolution of UAV images. Then, a field survey was conducted to establish validation sample squares based on the area of each pixel. The feature attributes (vegetation or bare soil) with more than 50% of the area within a sample square were labeled as attributes for that sample square. Subsequently, the feature information of each sample square was vectorized, and the sample squares were vectorized into validation sample points, which were integrated and used to construct validation samples for supervised classification. Next, the UAV visible images were visually interpreted, and the interpretation results were compared with the validation sample in order to further modify and correct any unreasonable points in the validation sample. Finally, this validation sample was used to evaluate the accuracy of the supervised classification results. In addition, we constructed a confusion matrix. The overall accuracy, producer accuracy, and user accuracy were used as accuracy evaluation indicators, and the specific construction method is shown in Equations (8)–(10).

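$$OA = \frac{1}{P}\sum_{i=1}^{k} x_{ii} \qquad (8)$$

$$PA_i = \frac{x_{ii}}{x_{i+}} \qquad (9)$$

$$UA_i = \frac{x_{ii}}{x_{+i}} \qquad (10)$$

where $P$ is the total number of samples, $k$ is the total number of categories, $x_{ii}$ is the number of correctly categorized samples, $x_{i+}$ is the number of samples in category $i$, and $x_{+i}$ is the number of samples predicted to be in category $i$.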
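The three accuracy indicators can be computed from a confusion matrix as in the sketch below, which uses the standard definitions: overall accuracy is the share of correctly classified samples, producer accuracy divides the correct count by the number of reference samples in a category, and user accuracy divides it by the number of samples predicted to be in that category. The sample labels and function names are hypothetical.

```python
def confusion_matrix(reference, predicted, labels):
    """Build a matrix with rows = reference class and columns = predicted class."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for ref, pred in zip(reference, predicted):
        matrix[index[ref]][index[pred]] += 1
    return matrix


def accuracy_metrics(matrix):
    """Return (overall accuracy, producer accuracies, user accuracies)."""
    k = len(matrix)
    total = sum(sum(row) for row in matrix)
    overall = sum(matrix[i][i] for i in range(k)) / total
    # Producer accuracy: correct / reference total (row sum).
    producer = [matrix[i][i] / sum(matrix[i]) for i in range(k)]
    # User accuracy: correct / predicted total (column sum).
    user = [matrix[i][i] / sum(matrix[r][i] for r in range(k)) for i in range(k)]
    return overall, producer, user


# Hypothetical validation samples, for illustration only.
labels = ["vegetation", "bare soil"]
reference = ["vegetation"] * 4 + ["bare soil"] * 6
predicted = (["vegetation"] * 3 + ["bare soil"]
             + ["bare soil"] * 5 + ["vegetation"])

matrix = confusion_matrix(reference, predicted, labels)
overall, producer, user = accuracy_metrics(matrix)
print(matrix)
print(overall, producer, user)
```

This sketch assumes that every category has at least one reference sample and at least one predicted sample; otherwise the corresponding ratio is undefined.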

4.4.2. Error Analysis

To further verify the accuracy of the supervised classification results, the following equation was utilized for error analysis:

Here, the error compares the vegetation coverage rate obtained after the supervised classification of each vegetation index with the vegetation coverage rate measured in the field. Because the field-measured coverage served as the control for evaluating the precision of the supervised classification results of the various vegetation indices, detailed field measurements were conducted by delineating the study area to ensure its accuracy.
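One plausible way to express such an error is sketched below. It assumes that the error is the relative deviation of the classified coverage from the field-measured coverage, expressed as a percentage; this form is an assumption and may differ from the equation used in the study. The input values and the function name are hypothetical.

```python
def coverage_error(classified_coverage: float, measured_coverage: float) -> float:
    """Relative deviation (%) of classified coverage from field-measured coverage.

    Assumption: error = |classified - measured| / measured * 100.
    """
    if measured_coverage <= 0:
        raise ValueError("measured coverage must be positive")
    return abs(classified_coverage - measured_coverage) / measured_coverage * 100.0


# Hypothetical coverage values, for illustration only.
print(f"{coverage_error(0.36, 0.40):.2f}%")
```

Under this definition, an index that misses shaded or dry vegetation produces a classified coverage below the measured one, and the error grows with the share of vegetation that is missed.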

In summary, the results of the accuracy evaluation, error analysis, and confusion matrices for the multi-shaded vegetation areas and densely vegetated areas are shown in Table 3, Table 4, Table 5 and Table 6, respectively. ‘Vegetation’ and ‘bare soil’ in Table 4 and Table 6 indicate the number of vegetation samples and bare soil samples, respectively.

4.5. Analysis of Supervised Classification Results

According to the supervised classification results, it can be seen that in the multi-shaded vegetation area, although the supervised classification accuracies of all vegetation indices are above 90%, a significant error occurs, which is mainly due to the following three factors: (1) a misclassification problem occurs in the multi-shaded area due to the coverage of the shaded texture; (2) a misclassification problem occurs in the densely vegetated area due to the presence of a large number of dry shrubs; and (3) the percentage of vegetation area in the region is much smaller than the percentage of bare soil area, leading to the overall supervised classification accuracy being falsely high.
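The third factor, an overall accuracy inflated by class imbalance, can be made concrete with a small arithmetic sketch. The sample counts and detection rates are hypothetical: the classifier is assumed to find only half of the vegetation pixels and to label every bare soil pixel correctly.

```python
def vegetation_metrics(n_veg, n_soil, veg_detect_rate, soil_detect_rate=1.0):
    """Overall, producer, and user accuracy (vegetation class) for a two-class case."""
    veg_hit = round(n_veg * veg_detect_rate)      # vegetation labeled as vegetation
    soil_hit = round(n_soil * soil_detect_rate)   # bare soil labeled as bare soil
    soil_as_veg = n_soil - soil_hit               # bare soil labeled as vegetation
    overall = (veg_hit + soil_hit) / (n_veg + n_soil)
    producer = veg_hit / n_veg
    user = veg_hit / (veg_hit + soil_as_veg)
    return overall, producer, user


# Same classifier behavior, two different sample compositions.
sparse = vegetation_metrics(n_veg=50, n_soil=950, veg_detect_rate=0.5)
balanced = vegetation_metrics(n_veg=500, n_soil=500, veg_detect_rate=0.5)

print("5% vegetation :", sparse)
print("50% vegetation:", balanced)
```

With 5% vegetation, the overall accuracy is 97.5% even though half of the vegetation is missed; with 50% vegetation, the same behavior yields 75%. The producer accuracy is 50% in both cases, which is why it reveals the omission that the overall accuracy hides.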

Based on this, we verified the accuracy of vegetation detection by constructing confusion matrices and calculating the overall accuracy, producer accuracy, user accuracy, and error; because the error analysis is based only on the percentage of vegetation obtained, it gives a more intuitive measure of the vegetation cover. The high producer accuracy and low user accuracy indicate that although the visible vegetation index models can roughly identify vegetation coverage, misclassification is very serious, and their accuracy makes them difficult to use for land desertification monitoring. To further explore whether the HSVGVI and the other vegetation indices misclassify pixels as a result of shadow texture, dry shrub vegetation, and similar factors, the study area was quantitatively divided again, the influencing factors were amplified, and the percentage of the vegetation sample area was increased, so that the supervised classification results were intuitive and suitable for accuracy evaluation.

4.6. Comparison between Vegetation Samples under Shadow Coverage and Dry Shrub Vegetation Samples

In order to further validate the misclassification problem described in the previous section, the vegetation samples under shadow coverage and dry shrub vegetation samples were divided in the study area. The specific division principles were as follows: (1) Since the number of vegetation images in the original sample accounted for a small percentage and the number of non-vegetation images accounted for a large percentage, even if the problem of misclassification of vegetation images occurred, the impact on the final accuracy evaluation results would be small, resulting in the accuracy presenting a false high. Therefore, increasing the percentage of vegetation area can better and more realistically reflect the accuracy problem due to misclassification and can also ensure the intuitiveness and authoritativeness of supervised classification accuracy. (2) The vegetation samples under shadow coverage were divided, and the shadow-texture-influencing factors were amplified. (3) The vegetation samples of low shrubs with fewer stems and leaves were divided, and the influencing factors for dry shrubs were amplified. By using the above division principles, the samples were obtained after amplifying the influencing factors, which could better show the advantages of the HSVGVI in shadow elimination and vegetation detection.

Figure 9a shows the vegetation samples under shadow coverage, and Figure 9b shows the dry shrub vegetation samples.

4.7. Proportion and Accuracy Verification of Objects in Different Regions

By analyzing the supervised classification results of the vegetation samples under shadow coverage and the dry shrub vegetation samples, the proportion of objects in each region was obtained for these two samples. Table 7 shows the vegetation coverage statistics for the shaded coverage and dry shrub areas.

Based on the supervised classification results and actual field measurement data, we constructed a confusion matrix and calculated the overall classification accuracy, producer accuracy, and user accuracy. The specific accuracy evaluation is shown in Table 8, Table 9, Table 10 and Table 11. Table 8 gives the accuracy evaluation results for the vegetation samples under shadow coverage, and Table 9 is the corresponding confusion matrix. Table 10 gives the accuracy evaluation results for the dry shrub vegetation samples, and Table 11 is the corresponding confusion matrix. ‘Vegetation’ and ‘bare soil’ in Table 9 and Table 11 indicate the number of vegetation samples and bare soil samples, respectively.

According to Table 8 and Table 10, after the percentage of vegetation area is increased, the supervised classification accuracy of each vegetation index model is no longer falsely high and is therefore more intuitive and authoritative. However, large errors remain, which shows that shadow texture and low shrubs with few green leaves strongly affect the accuracy of vegetation detection.

In the vegetation samples from areas under shadow coverage, the vegetation indices constructed in the RGB color space are strongly affected by the shadow texture, and their overall accuracies are generally low; their vegetation coverage detection errors are all greater than 40%. Among these indices, although the overall accuracy of the NGRDI reaches 84.29% and its producer accuracy reaches 84.58%, its user accuracy is only 82.63%, indicating considerable misclassification. The other RGB-space vegetation indices generally have low producer accuracy but user accuracy above 90%, which indicates that many vegetation pixels are omitted: masked by shadow texture, they are “lost” in the classification. These RGB-space vegetation indices extract only the more obvious vegetation pixels and have difficulty extracting vegetation pixels covered by shadow texture. By contrast, the supervised classification accuracy of the HSVGVI is as high as 97.78%, its vegetation cover detection error is only 8.21%, its producer accuracy is 89.58%, and its user accuracy is 99.61%. This indicates better extraction and classification accuracy; compared with the RGB-space vegetation indices, the HSVGVI largely eliminates the effect of shadow texture, which makes it more suitable for detecting desert vegetation.

In the dry shrub vegetation samples, the overall accuracy of the vegetation indices in the RGB color space is lower than 85% and generally around 70%, and the extraction error of vegetation cover is more than 25%; the producer accuracy fluctuates between 37% and 72%, whereas the user accuracy is above 90%. Because the VDVI is more sensitive to the green band, its classification accuracy reaches 83.88% with an error of 25.44%; its producer accuracy is 71.61%, and its user accuracy is 96.05%. The combination of low producer accuracy and high user accuracy in the RGB vegetation indices also indicates that vegetation with few stems and leaves is difficult to detect, which leads to serious misclassification. The HSVGVI, however, combines the quantitative analysis of the relatively independent hue in the HSV color space with green channel enhancement, so its overall accuracy, producer accuracy, and user accuracy reach 94.45%, 89.58%, and 99.61%, respectively. Its error is only 10.00%, which indicates that this index is suitable for classifying low shrubs with few green leaves and largely resolves the misclassification observed with vegetation indices in the RGB color space.

In conclusion, the HSVGVI not only inherits the unique advantages of the HSV color space but also has the ability to enhance the green channel and greatly reduce the influence of dark shadow texture, which enables us to extract the low shrub vegetation data with fewer green leaves more clearly, and the overall accuracy, producer’s accuracy, and user’s accuracy can be achieved at a high level.

5. Discussion

5.1. Comparative Analysis of Various Samples in the Research Area

In this study, the proportion of vegetation and non-vegetation, the classification accuracy, and the producer accuracy in the two groups of study areas were determined separately. By comparing and analyzing the above data, the following conclusions are drawn: By establishing the second group of research areas, the two influencing factors of shadow texture and dry shrub vegetation were further amplified. By observing the trends of the overall accuracy, producer accuracy, and user accuracy of the vegetation indices constructed based on the RGB color space, it was found that the overall accuracy and producer accuracy coefficients decreased significantly, and the error gradually increased, but the user accuracy still remained at a certain level. This indicates that these vegetation indices can extract some more “obvious” (not affected by influencing factors) vegetation samples in the image, but there is a significant misclassification problem, as both shadow-covered vegetation pixels and dry vegetation pixels are misclassified as bare soil pixels, resulting in lower overall accuracy and producer accuracy. On the other hand, the overall accuracy of the HSVGVI remains above 95%, the error rate is not more than 10%, and the user accuracy and producer accuracy are above 89%. In summary, the results show that the HSVGVI has the ability of shadow elimination and high-precision vegetation detection and thus is a better solution to the two major problems faced in desert vegetation detection.

5.2. Analysis of Land Desertification in Hangjin Banner

The vegetation cover model indicates that land desertification in the Hangjin Banner is serious and is dominated by areas of medium and severe desertification. To monitor land desertification in the Hangjin Banner, we took a multi-shaded vegetation area and a densely vegetated area as examples; the vegetation coverage of these two samples determined using the HSVGVI was 0.18 (extremely desertified area) and 0.39 (moderately desertified area), respectively. In the FVC model built from high-resolution satellite remote sensing images, the vegetation coverage of both samples, obtained by inputting their geographic coordinates, was 0.3 (severely desertified area). High-resolution satellite remote sensing images could therefore only roughly estimate the vegetation cover, and their accuracy was not sufficient to grade land desertification in the sample areas. For this reason, we monitored land desertification by combining multi-source remote sensing images: high-resolution satellite images were used to initially locate the areas with land desertification problems, and UAV visible remote sensing images and the HSVGVI were then used to extract the vegetation information in those areas, so as to grade the degree of land desertification with high precision and provide data and technical support for the monitoring and prevention of land desertification in the Hangjin Banner.

6. Conclusions

This paper combines high-resolution satellite remote sensing images and UAV visible images to evaluate the land desertification status of the study area, the Hangjin Banner, Bayannur City, Northwest China. First, high-resolution remote sensing images were used to establish a vegetation coverage model and locate land desertification areas (FVC < 0.3). Then, UAV visible-light images were used to extract desert vegetation information, which made the evaluation of land desertification more efficient and precise while reducing labor costs and time.

Because the light in the desert is strong, shadow textures are deep, and the shading of low vegetation by these shadows seriously affects the accuracy of vegetation extraction. In addition, for climatic reasons, desert vegetation consists mostly of dry shrubs with few stems and leaves, which also leads to serious misclassification by vegetation indices in the RGB color space. For this reason, this paper proposes a new vegetation index, the HSVGVI, which is constructed in the HSV color space by using the channel enhancement method and can eliminate shadow texture to a greater extent. At the same time, it inherits the unique advantage of the HSV color space (the relative independence of the H channel), so it is highly sensitive to green vegetation. Compared with the vegetation index models constructed in the RGB color space, the HSVGVI shows higher accuracy and better adaptability in desert vegetation detection. The accuracy of the HSVGVI was verified by constructing a confusion matrix; calculating the overall accuracy, producer accuracy, and user accuracy; performing an error analysis; and using the vegetation indices in the RGB color space as controls. To further validate the advantages of the HSVGVI over the traditional vegetation indices, this study quantitatively analyzed new research samples that amplify the difficult factors (vegetation samples under shadow coverage and dry shrub vegetation samples). The overall accuracy of the classifications based on RGB-space vegetation indices was generally lower than 80%, the producer accuracy was generally lower than 70%, and the error was generally greater than 25%. By contrast, the classification accuracy of the HSVGVI could reach more than 95%, the producer accuracy was more than 89%, the user accuracy could reach more than 95%, and the error was no more than 10%. Thus, the influence of shadow texture is greatly reduced, and the misclassification of vegetation as bare soil is largely resolved.

In summary, the HSVGVI has the advantage of the HSV color space, allowing us to control the color in the image only through the H channel. At the same time, the HSVGVI has a high sensitivity in terms of recognizing green vegetation and can accurately recognize the green elements in the vegetation of dry shrubs. Therefore, the HSVGVI can provide high-precision vegetation information for land desertification assessment through UAV visible remote sensing images, and the HSVGVI model is more convenient to construct and has higher classification accuracy than the vegetation index models constructed based on the RGB color space.