Keypoint3D: Keypoint-Based and Anchor-Free 3D Object Detection for Autonomous Driving with Monocular Vision

Abstract

1. Introduction

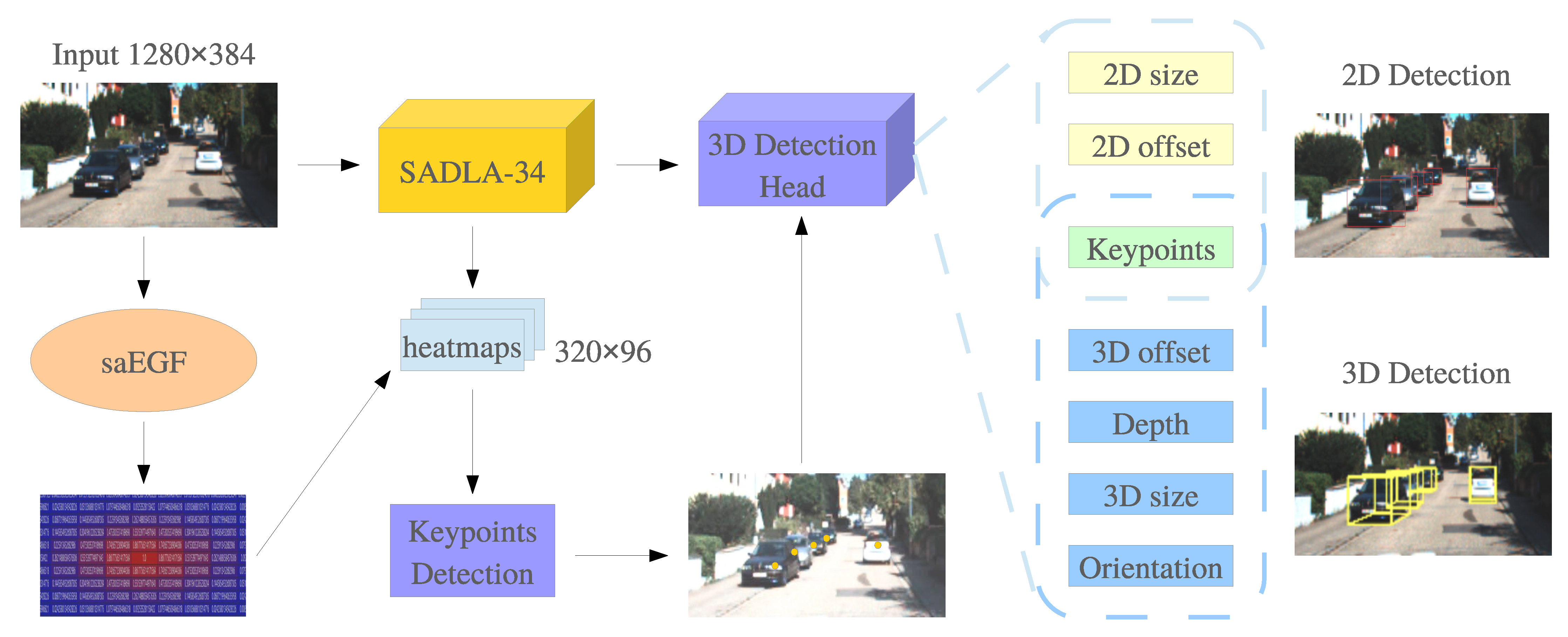

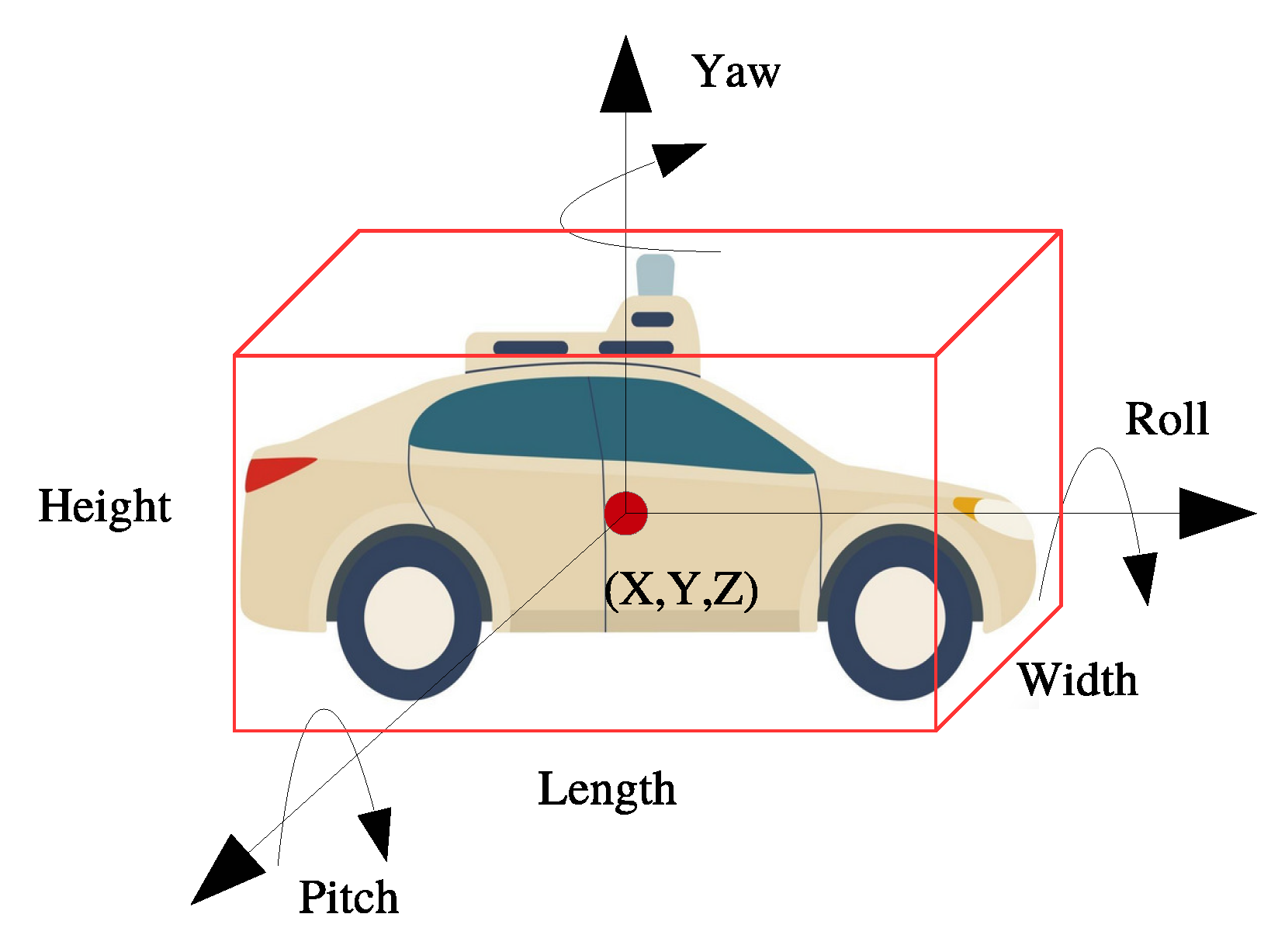

- This paper proposes Keypoint3D, an anchor-free, keypoint-based 3D object detection framework for a monocular camera. We project the geometric center of each 3D object from the world coordinate system onto the 2D image plane and use the projected points as keypoints that geometrically constrain object localization. Because keypoints are hard to localize on objects with high length-to-width ratios, we propose self-adapting ellipse Gaussian filters (saEGF) that adapt to various object shapes. Keypoint3D also introduces a yaw angle regression method in Euclidean space, which yields a closed mathematical space and avoids singularities.

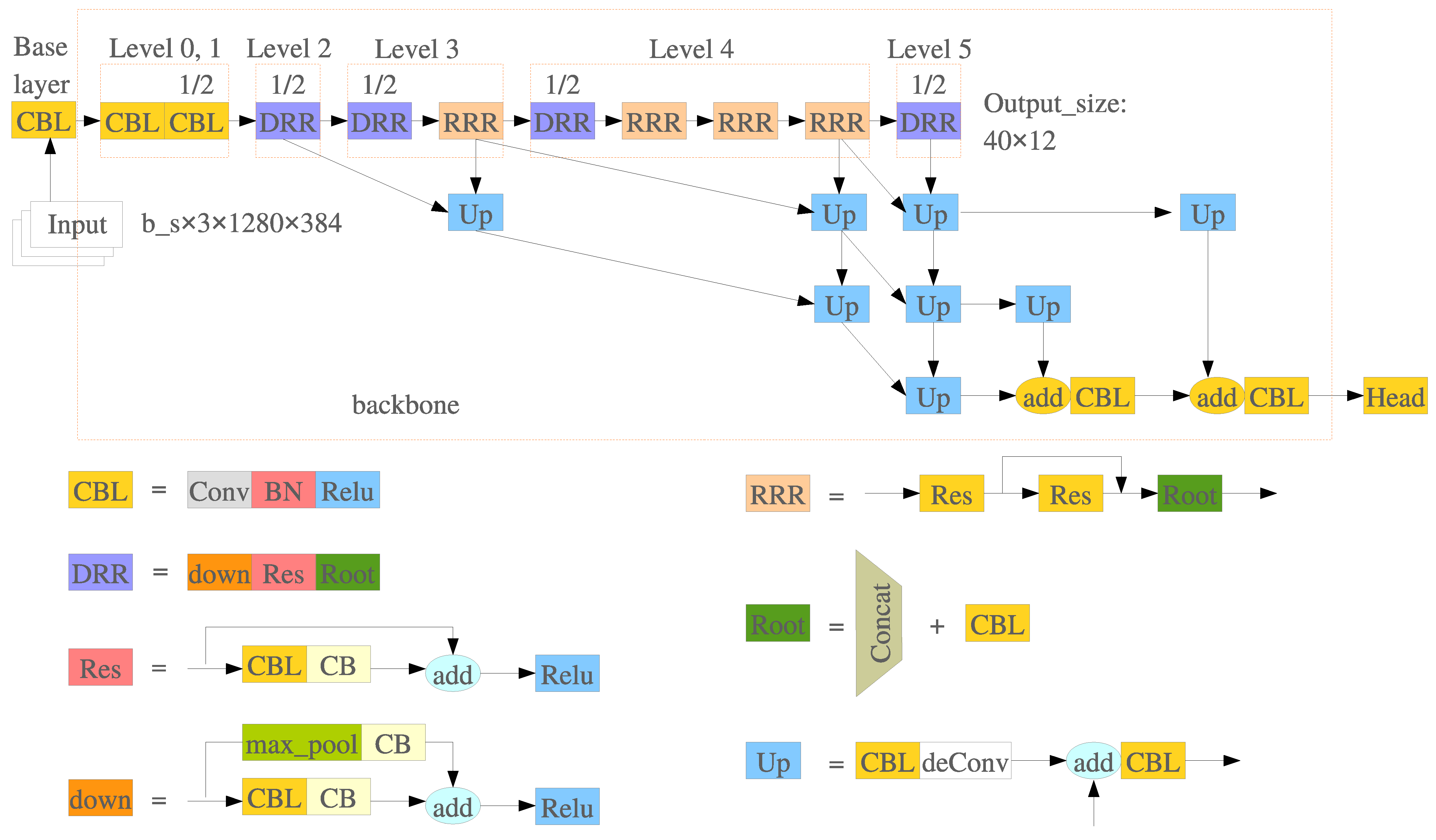

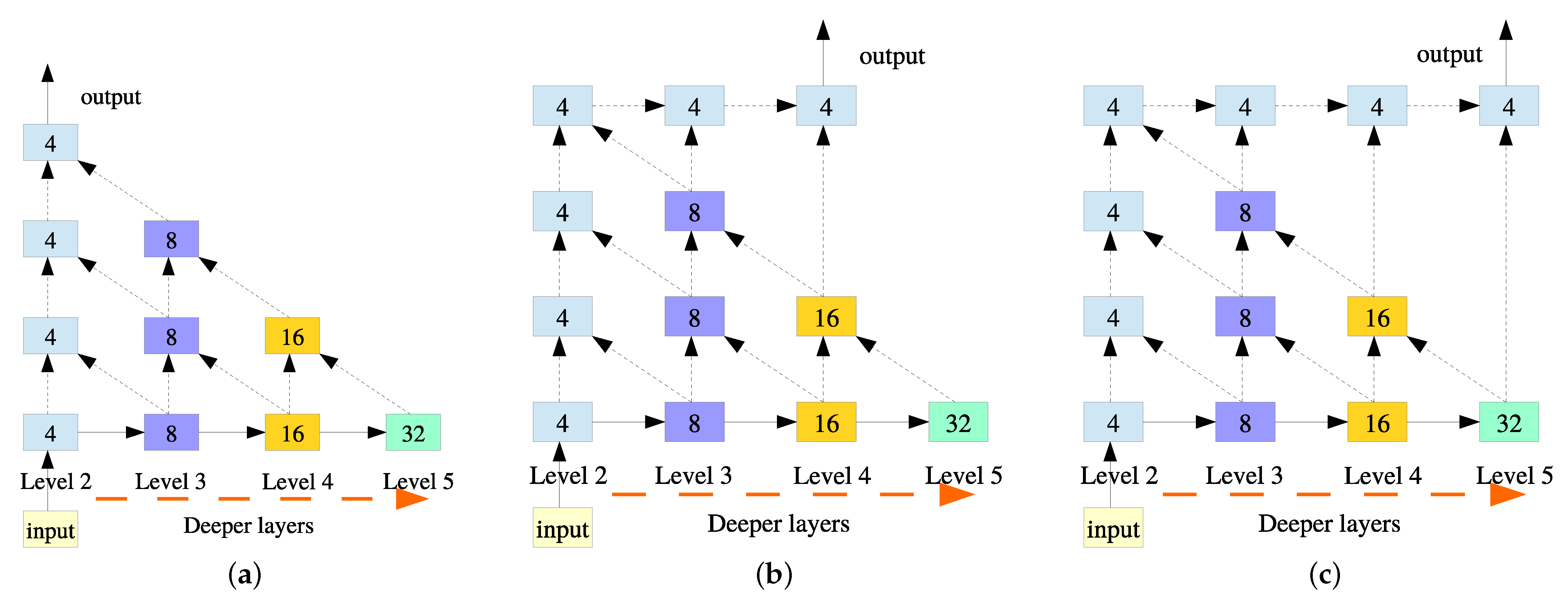

- We tested several variants of the DLA-34 backbone and improved its hierarchical deep aggregation structure. By pruning redundant aggregation branches, we obtained a semi-aggregation DLA-34 (SADLA-34) network that achieves better detection performance. Deformable convolution networks (DCN) replace the traditional CNN operators in the aggregation structure of the SADLA-34 backbone, which greatly enlarges the receptive field and improves robustness to affine transformations.

- Extensive experiments on the KITTI 3D object detection dataset demonstrate the effectiveness of the proposed method. Keypoint3D performs highly accurate 3D detection of cars, pedestrians, and cyclists in real time. In addition, the method can easily be applied to practical driving scenes, where it achieves high-quality results.
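The three ideas in the first contribution can be illustrated with a minimal sketch. It is not the authors' implementation and rests on several assumptions: the object center is already expressed in the camera frame, the camera is an ideal pinhole with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, the ellipse Gaussian is axis-aligned with standard deviations tied to the 2D box size through a hypothetical `scale` factor, and the yaw angle is encoded as a (sin, cos) pair, which is one common way to obtain a closed, singularity-free regression target.

```python
import math


def project_center(center_cam, fx, fy, cx, cy):
    """Project a 3D point (camera frame, metres) to pixel coordinates.

    Assumes an ideal pinhole camera; fx, fy, cx, cy are hypothetical intrinsics.
    """
    x, y, z = center_cam
    if z <= 0:
        raise ValueError("point must lie in front of the camera")
    return fx * x / z + cx, fy * y / z + cy


def ellipse_gaussian_heatmap(height, width, center_uv, box_wh, scale=6.0):
    """Render an axis-aligned elliptical Gaussian peak at center_uv.

    The standard deviations follow the box width and height, so elongated
    objects get elongated peaks. `scale` is an illustrative choice only.
    """
    cu, cv = center_uv
    sigma_u = max(box_wh[0] / scale, 1e-6)
    sigma_v = max(box_wh[1] / scale, 1e-6)
    return [
        [
            math.exp(-((u - cu) ** 2 / (2 * sigma_u ** 2)
                       + (v - cv) ** 2 / (2 * sigma_v ** 2)))
            for u in range(width)
        ]
        for v in range(height)
    ]


def encode_yaw(yaw):
    """Map a yaw angle to a point on the unit circle (assumed encoding)."""
    return math.sin(yaw), math.cos(yaw)


def decode_yaw(sin_yaw, cos_yaw):
    """Recover the yaw angle in (-pi, pi] from its (sin, cos) encoding."""
    return math.atan2(sin_yaw, cos_yaw)


# Usage: a center 10 m ahead, a wide and flat box, and a yaw close to pi.
u, v = project_center((1.0, 0.5, 10.0), fx=700.0, fy=700.0, cx=600.0, cy=180.0)
heat = ellipse_gaussian_heatmap(96, 320, (100.0, 40.0), (60.0, 12.0))
sin_yaw, cos_yaw = encode_yaw(3.0)
```

With the (sin, cos) encoding, angles just below pi and just above -pi map to nearby targets, which is the kind of discontinuity a direct angle regression would suffer from.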

2. Related Work

2.1. Methods Using Different Sensors

2.1.1. Lidar-Based Methods

2.1.2. Camera-Based Methods

2.1.3. Multi-Sensor Fusion Methods

2.2. Methods Using Different Networks

2.2.1. Anchor-Based Methods

2.2.2. Anchor-Free Methods

3. Materials and Methods

3.1. Data Collection and Analysis

3.2. Keypoint Detection

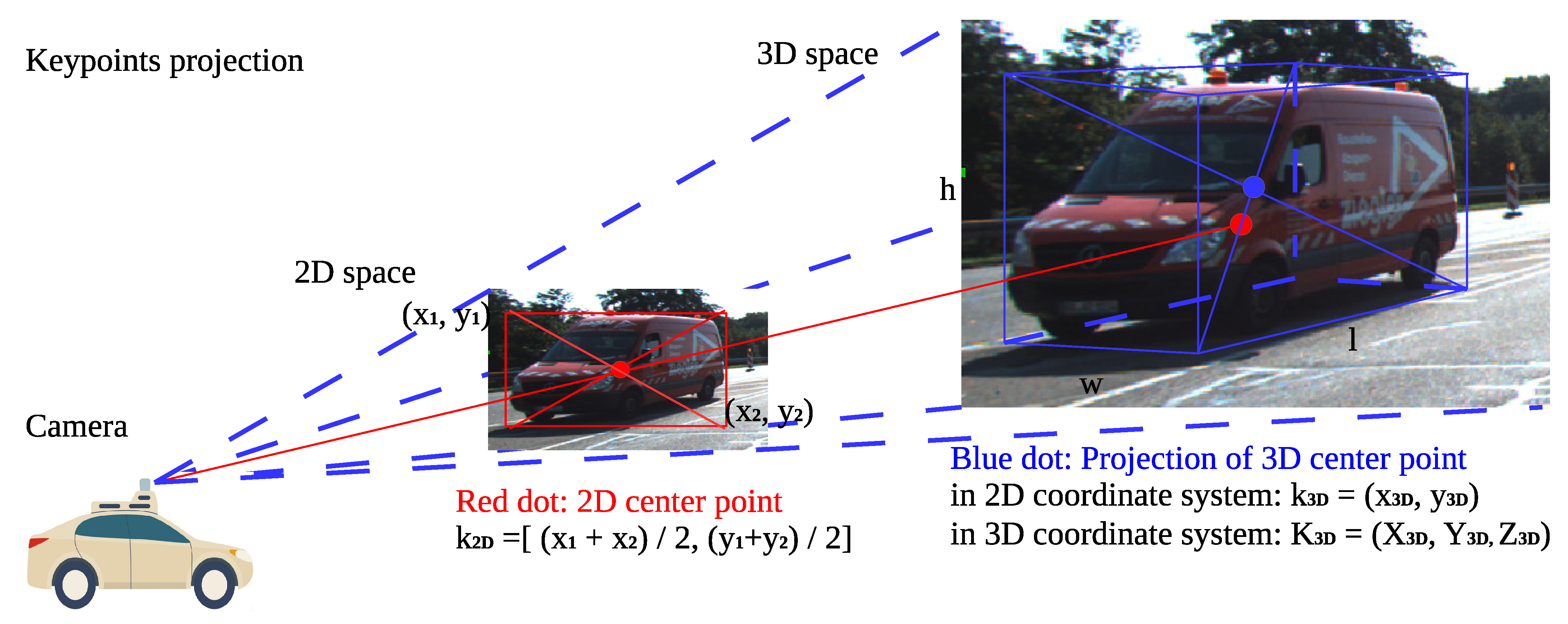

3.2.1. 3D Geometric Keypoint Projection

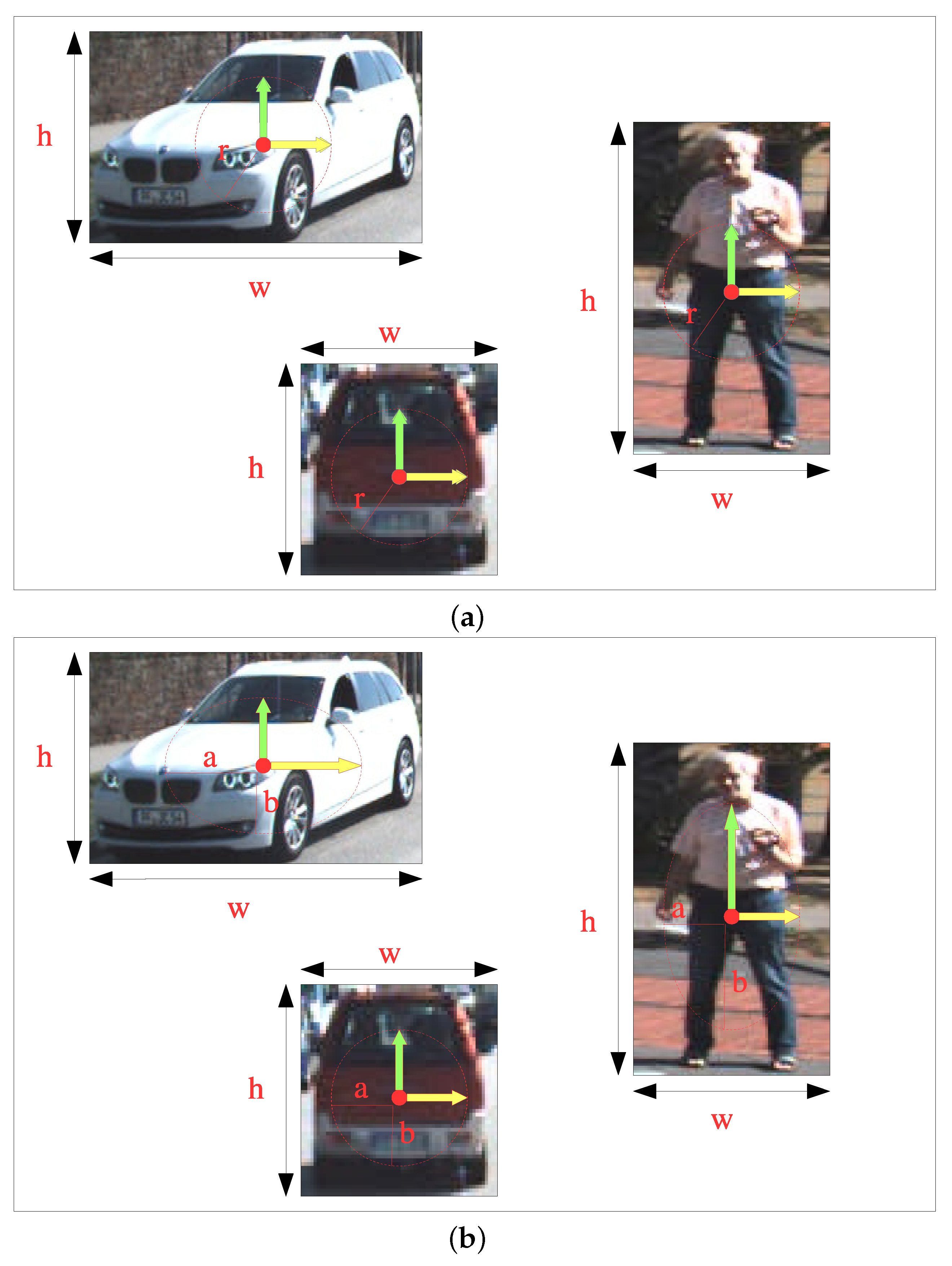

3.2.2. Heatmap

3.2.3. Self-Adapting Ellipse Gaussian Filter

3.3. Backbone

3.3.1. Semi-Aggregation Network Structure

3.3.2. Deformable Convolution

3.4. Detection Head

3.4.1. 3D Detection

3.4.2. 2D Detection

3.5. Loss Function

3.5.1. Classification Loss

3.5.2. Regression Loss

4. Results

4.1. Implementation Details

4.2. Detection Performance Evaluation

4.3. Ablation Studies

4.4. Qualitative Results

4.4.1. Qualitative Results on the KITTI Validation Set

4.4.2. Qualitative Results on the KITTI Test Set

4.4.3. Qualitative Results on Real Driving Scenes

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Arnold, E.; Al-Jarrah, O.Y.; Dianati, M.; Fallah, S.; Oxtoby, D.; Mouzakitis, A. A survey on 3D object detection methods for autonomous driving applications. IEEE Trans. Intell. Transp. Syst. 2019, 20, 3782–3795.

- Lu, N.; Cheng, N.; Zhang, N.; Shen, X.; Mark, J.W. Connected vehicles: Solutions and challenges. IEEE Internet Things J. 2014, 1, 289–299.

- Abdollahi, A.; Pradhan, B.; Shukla, N.; Chakraborty, S.; Alamri, A. Deep learning approaches applied to remote sensing datasets for road extraction: A state-of-the-art review. Remote Sens. 2020, 12, 1444.

- Russell, B.J.; Soffer, R.J.; Ientilucci, E.J.; Kuester, M.A.; Conran, D.N.; Arroyo-Mora, J.P.; Ochoa, T.; Durell, C.; Holt, J. The ground to space calibration experiment (G-SCALE): Simultaneous validation of UAV, airborne, and satellite imagers for Earth observation using specular targets. Remote Sens. 2023, 15, 294.

- Gagliardi, V.; Tosti, F.; Bianchini Ciampoli, L.; Battagliere, M.L.; D’Amato, L.; Alani, A.M.; Benedetto, A. Satellite remote sensing and non-destructive testing methods for transport infrastructure monitoring: Advances, challenges and perspectives. Remote Sens. 2023, 15, 418.

- Guo, X.; Cao, Y.; Zhou, J.; Huang, Y.; Li, B. HDM-RRT: A fast HD-map-guided motion planning algorithm for autonomous driving in the campus environment. Remote Sens. 2023, 15, 487.

- Mozaffari, S.; Al-Jarrah, O.Y.; Dianati, M.; Jennings, P.; Mouzakitis, A. Deep learning-based vehicle behavior prediction for autonomous driving applications: A review. IEEE Trans. Intell. Transp. Syst. 2022, 23, 33–47.

- Jiang, Y.; Peng, P.; Wang, L.; Wang, J.; Wu, J.; Liu, Y. LiDAR-based local path planning method for reactive navigation in underground mines. Remote Sens. 2023, 15, 309.

- Qian, R.; Lai, X.; Li, X. 3D object detection for autonomous driving: A survey. Pattern Recognit. 2022, 130, 108796.

- Shi, S.; Wang, X.; Li, H. PointRCNN: 3D Object Proposal Generation and Detection From Point Cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 770–779.

- Yang, Z.; Sun, Y.; Liu, S.; Shen, X.; Jia, J. STD: Sparse-to-dense 3D Object Detector for Point Cloud. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1951–1960.

- Wang, Z.; Jia, K. Frustum ConvNet: Sliding Frustums to Aggregate Local Point-wise Features for Amodal 3D Object Detection. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 4–8 November 2019; pp. 1742–1749.

- Gählert, N.; Wan, J.J.; Jourdan, N.; Finkbeiner, J.; Franke, U.; Denzler, J. Single-shot 3D Detection of Vehicles from Monocular RGB Images via Geometry Constrained Keypoints in Real-time. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 437–444.

- Qian, R.; Garg, D.; Wang, Y.; You, Y.; Belongie, S.; Hariharan, B.; Campbell, M.; Weinberger, K.Q.; Chao, W. End-to-end Pseudo-LiDAR for Image-based 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 5881–5890.

- Sun, J.; Chen, L.; Xie, Y.; Zhang, S.; Jiang, Q.; Zhou, X.; Bao, H. Disp R-CNN: Stereo 3D Object Detection via Shape Prior Guided Instance Disparity Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 10545–10554.

- Chen, Y.; Shu, L.; Shen, X.; Jia, J. DSGN: Deep Stereo Geometry Network for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 12533–12542.

- Briñón-Arranz, L.; Rakotovao, T.; Creuzet, T.; Karaoguz, C.; El-Hamzaoui, O. A methodology for analyzing the impact of crosstalk on LiDAR measurements. IEEE Sens. J. 2021, 1–4.

- Zablocki, É.; Ben-Younes, H.; Pérez, P.; Cord, M. Explainability of deep vision-based autonomous driving systems: Review and challenges. Int. J. Comput. Vis. 2022, 130, 2425–2452.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 10–16 October 2016; pp. 21–37.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 91–99.

- Elaksher, A.; Ali, T.; Alharthy, A. A quantitative assessment of LiDAR data accuracy. Remote Sens. 2023, 15, 442.

- Simon, M.; Milz, S.; Amende, K.; Gross, H.M. Complex-YOLO: Real-time 3D Object Detection on Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. arXiv 2017, arXiv:1706.02413.

- Qin, Z.; Wang, J.; Lu, Y. MonoGRNet: A general framework for monocular 3D object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 5170–5184.

- Yan, C.; Salman, E. Mono3D: Open source cell library for monolithic 3-D integrated circuits. IEEE Trans. Circuits Syst. 2018, 65, 1075–1085.

- Brazil, G.; Liu, X. M3D-RPN: Monocular 3D Region Proposal Network for Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9286–9295.

- Liu, Y.; Wang, L.; Liu, M. YOLOStereo3D: A Step Back to 2D for Efficient Stereo 3D Detection. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 13018–13024.

- Vora, S.; Lang, A.H.; Helou, B.; Beijbom, O. PointPainting: Sequential Fusion for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 4603–4611.

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-view 3D Object Detection Network for Autonomous Driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1907–1915.

- Pang, S.; Morris, D.; Radha, H. CLOCs: Camera-LiDAR Object Candidates Fusion for 3D Object Detection. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 10386–10393.

- Hartigan, J.A.; Wong, M.A. Algorithm AS 136: A K-means clustering algorithm. J. R. Stat. Soc. C Appl. Stat. 1979, 28, 100–108.

- Mousavian, A.; Anguelov, D.; Flynn, J. 3D bounding box estimation using deep learning and geometry. arXiv 2017, arXiv:1612.00496.

- Chabot, F.; Chaouch, M.; Rabarisoa, J.; Teuliere, C.; Chateau, T. Deep Manta: A Coarse-to-fine Many Task Network for Joint 2D and 3D Vehicle Analysis from Monocular Image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2040–2049.

- Lang, A.H.; Vora, S.; Caesar, H. Pointpillars: Fast Encoders for Object Detection from Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 12697–12705.

- Law, H.; Deng, J. Cornernet: Detecting Objects as Paired Keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

- Lin, T.Y.; Maire, M.; Belongie, S. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 5–12 September 2014; pp. 740–755.

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as points. arXiv 2019, arXiv:1904.07850.

- Li, P.; Zhao, H.; Liu, P.; Cao, F. RTM3D: Real-time Monocular 3D Detection from Object Keypoints for Autonomous Driving. In Proceedings of the European Conference on Computer Vision (ECCV), Online, 23–28 August 2020; pp. 644–660.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A Multimodal Dataset for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 11618–11628.

- Patil, A.; Malla, S.; Gang, H.; Chen, Y. The H3D Dataset for Full-Surround 3D Multi-Object Detection and Tracking in Crowded Urban Scenes. In Proceedings of the International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 9552–9557.

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B.; et al. Scalability in Perception for Autonomous Driving: Waymo Open Dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 2446–2454.

- Geiger, A.; Lenz, P.; Urtasun, R. Are We Ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Yu, F.; Wang, D.; Shelhamer, E.; Darrell, T. Deep Layer Aggregation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 2403–2412.

- He, K.; Zhang, X.; Ren, S. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Wang, R.; Shivanna, R.; Cheng, D.Z.; Jain, S.; Lin, D.; Hong, L.; Chi, E.H. DCN V2: Improved Deep and Cross Network and Practical Lessons for Web-scale Learning to Rank Systems. In Proceedings of the Web Conference, Ljubljana, Slovenia, 12–23 April 2021; pp. 1785–1797.

- Eigen, D.; Puhrsch, C.; Fergus, R. Depth map prediction from a single image using a multi-scale deep network. arXiv 2014, arXiv:1406.2283.

- Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum PointNets for 3D Object Detection From RGB-D Data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 918–927.

- Xu, B.; Chen, Z. Multi-level Fusion based 3D Object Detection from Monocular Image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2345–2353.

- Ku, J.; Mozifian, M.; Lee, J.; Harakeh, A.; Waslander, S.L. Joint 3D Proposal Generation and Object Detection from View Aggregation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1–8.

- Chen, X.; Kundu, K.; Zhu, Y.; Ma, H.; Fidler, S.; Urtasun, R. 3D object proposals using stereo imagery for accurate object class detection. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1259–1272.

| Dataset | Scenes | Classes | Frames | 3D Boxes | Year |
|---|---|---|---|---|---|
| nuScenes [47] | 1000 | 23 | 40K | 1.4M | 2019 |
| H3D [48] | 160 | 8 | 27K | 1.1M | 2019 |
| Waymo [49] | 1150 | 4 | 200K | 112M | 2020 |
| KITTI [50] | 50 | 8 | 15K | 200K | 2012 |

| Method | Easy | Mod. | Hard | Easy | Mod. | Hard | FPS |
|---|---|---|---|---|---|---|---|
| MonoGRNet [32] | - | - | - | 50.5 | 37.0 | 30.8 | 16.7 |
| Mono3D [33] | 92.3 | 88.7 | 79.0 | 25.2 | 18.2 | 15.5 | - |
| M3D-RPN [34] | 90.2 | 83.7 | 67.7 | 49.0 | 39.6 | 33.0 | 6.2 |
| F-PointNet (Mono) [55] | - | - | - | 66.3 | 42.3 | 38.5 | 5 |
| MF3D [56] | - | - | - | 47.9 | 29.5 | 26.4 | 8.3 |
| AVOD (Mono) [57] | - | - | - | 57.0 | 42.8 | 36.3 | - |
| CenterNet [45] | 97.1 | 87.9 | 79.3 | 19.5 | 18.6 | 16.6 | 15.4 |
| CenterNet (3dk) | 87.1 | 85.6 | 69.8 | 39.9 | 31.4 | 30.1 | 15.4 |
| Keypoint3D (Ours) | 95.8 | 87.3 | 77.8 | 48.1 | 39.1 | 32.5 | 18.9 |

| Method | Car Easy | Car Mod. | Car Hard | Pedestrian Easy | Pedestrian Mod. | Pedestrian Hard | Cyclist Easy | Cyclist Mod. | Cyclist Hard |
|---|---|---|---|---|---|---|---|---|---|
| CenterNet [45] | 19.5 | 18.6 | 16.5 | 21.0 | 16.8 | 15.8 | 19.5 | 11.8 | 10.9 |
| CenterNet (3dk) | 39.9 | 31.4 | 30.1 | 31.3 | 20.4 | 17.5 | 23.1 | 16.4 | 15.9 |
| Keypoint3D (Ours) | 48.1 | 39.1 | 32.5 | 37.9 | 23.9 | 19.6 | 30.4 | 23.0 | 21.1 |

| Method | Sensors | Easy | Mod. | Hard |
|---|---|---|---|---|
| 3DOP [58] | Stereo | 55.0 | 41.3 | 34.6 |
| Mono3D [33] | Mono | 30.5 | 22.4 | 19.2 |
| MF3D [56] | Mono | 55.0 | 36.7 | 31.3 |
| M3D-RPN [34] | Mono | 55.4 | 42.5 | 35.3 |
| AVOD [57] | Mono | 61.2 | 45.4 | 38.3 |
| CenterNet [45] | Mono | 31.5 | 29.7 | 28.1 |
| CenterNet (3dk) | Mono | 46.8 | 37.9 | 32.7 |
| Keypoint3D (Ours) | Mono | 52.6 | 39.5 | 33.2 |

| 3D Keypoint | SADLA-34 | SADLA-34-DCN | saEGF | Eulerian Angle | mAP | FPS |
|---|---|---|---|---|---|---|
| √ | × | × | × | × | 31.4 | 15.4 |
| √ | √ | × | × | × | 35.7 | 19.6 |
| √ | √ | √ | × | × | 36.1 | 18.0 |
| √ | √ | √ | √ | × | 38.9 | 18.0 |
| √ | √ | √ | √ | √ | 39.1 | 18.9 |
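One way to read the ablation table above is to take each row as adding a single component to the configuration in the row before it. That reading is an interpretation of the check marks, not something the table states explicitly. Under that assumption, the short sketch below computes the incremental mAP change per added component, using the values copied from the table; the row labels are shorthand introduced here.

```python
# (label, mAP, FPS) per ablation row, values copied from the table above.
# The labels are illustrative shorthand for "component added in this row".
rows = [
    ("3D keypoint only", 31.4, 15.4),
    ("+ SADLA-34", 35.7, 19.6),
    ("+ SADLA-34-DCN", 36.1, 18.0),
    ("+ saEGF", 38.9, 18.0),
    ("+ Eulerian angle", 39.1, 18.9),
]

# Difference in mAP between each row and the one before it,
# rounded to one decimal to match the table's precision.
gains = [
    (label, round(m_ap - prev_m_ap, 1))
    for (label, m_ap, _), (_, prev_m_ap, _) in zip(rows[1:], rows[:-1])
]

# Overall change from the first to the last configuration.
total_gain = round(rows[-1][1] - rows[0][1], 1)

for label, gain in gains:
    print(f"{label}: {gain:+.1f} mAP")
print(f"total: {total_gain:+.1f} mAP")
```

On this reading, the backbone change and the saEGF account for most of the improvement, while the DCN operators and the angle regression contribute smaller steps.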

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Z.; Gao, Y.; Hong, Q.; Du, Y.; Serikawa, S.; Zhang, L. Keypoint3D: Keypoint-Based and Anchor-Free 3D Object Detection for Autonomous Driving with Monocular Vision. Remote Sens. 2023, 15, 1210. https://doi.org/10.3390/rs15051210
