Abstract

High-resolution remote sensing images (HRRSIs) cover a broad range of geographic regions and contain a wide variety of artificial objects and natural elements at various scales that comprise different image contexts. In semantic segmentation tasks based on deep convolutional neural networks (DCNNs), features of different resolutions are not equally effective for extracting ground objects at different scales. In this article, we propose a novel context-driven feature-focusing network (CFFNet) that focuses on multi-scale ground objects in fused features of different resolutions. The CFFNet consists of three components: a depth-residual encoder, a context-driven feature-focusing (CFF) decoder, and a classifier. First, features with different resolutions are extracted using the depth-residual encoder to construct a feature pyramid. The multi-scale information in the fused features is then extracted using the feature-focusing (FF) module in the CFF decoder, and the focus weights of the different scale features are computed adaptively using the context-focusing (CF) module to obtain the weighted multi-scale fused feature representation. Finally, the segmentation results are obtained using the classifier. The experiments are conducted on the public LoveDA and GID datasets. Quantitative and qualitative comparisons with state-of-the-art (SOTA) segmentation methods demonstrate the rationality and effectiveness of the proposed approach.

1. Introduction

The semantic segmentation of HRRSIs aims to categorize each pixel in remote sensing (RS) images. With the rapid development of RS acquisition technology, semantic segmentation applications of HRRSIs have become more widespread [1,2,3]. The robustness and generalization ability of semantic segmentation models for RS images are crucial for applications such as mapping, land use, earth observation, and land cover. However, an HRRSI contains a large amount of diverse and complex ground object information, and the multi-scale objects, inconsistent class distributions, and complex background samples make the semantic segmentation of HRRSIs challenging.

The RS community has developed various methods for semantic segmentation as technology has advanced, including traditional approaches (e.g., distance-based measures) [4] and machine learning methods (e.g., SVM [5] and random forests [6]). However, those approaches rely on the quality of hand-crafted features (e.g., SIFT [7], the visual vocabulary of BoW [8], and SURF [9]) and mid-level semantic features, and are typically used to classify images in a small range of data, which limits their adaptability and flexibility [10] in handling the multi-scale objects and inconsistent class distributions of HRRSIs.

Deep learning (DL), in contrast to traditional methods, can learn high-level semantic information, which has attracted the RS community’s interest [11,12]. Compared to the traditional approach of hand-designed features, DCNNs have a well-established deep multilayer structure and can extract different types of information, such as temporal period, spectrum, spatial context, and the interaction of different land-use categories [13], which gives them a powerful potential to capture nonlinear and hierarchical feature representations.

For RS semantic segmentation, two types of frameworks are commonly used: fully convolutional network (FCN)-based techniques and encoder–decoder architectures. The encoder extracts the image feature information with the DCNN model in the encoder-decoder architecture, and the decoder processes the feature information to obtain high-level feature information related to object categorization. FCN-based methods typically include a contracting path and an expanding path, producing high-level feature maps and reconstructing the pixel-wise segmentation mask by using single [14] or multilevel [15] upsampling procedures. These multiscale techniques [3,16,17] are constrained by information flow bottlenecks, despite their powerful representation abilities. The encoder’s low-level and fine-grained detailed feature maps, for example, are added with the decoder’s high-level and coarse-grained semantic information without further refinement, resulting in insufficient feature exploitation and discrimination. Furthermore, due to constant downsampling procedures, these approaches lose spatial context information and significantly rely on the DCNNs’ top layer’s abstract features. For the semantic segmentation challenge of HRRSIs, especially when varied scales and orientations of the same land cover objects are presented, this contextual information (e.g., object correlation, spatial location, and object scale) might enhance necessary information while suppressing unnecessary information.

Despite the significant advancements made by DCNN-based techniques in the field of HRRSI semantic segmentation, some issues remain in the development of HRRSI semantic segmentation:

- (1)

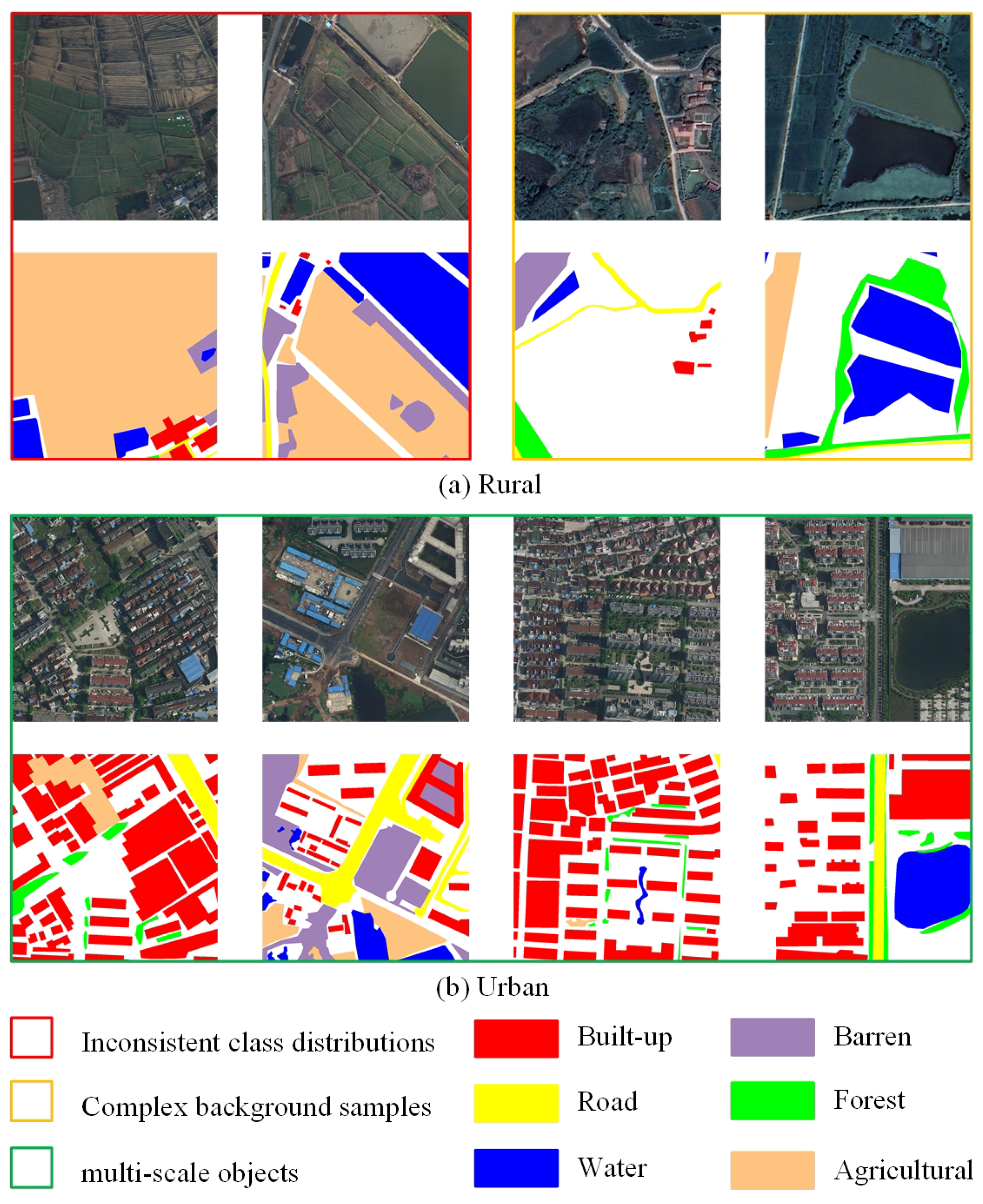

- There is a lack of efficient feature-focusing methods for multi-scale ground objects. HRRSI scenes typically include a wide range of artificial objects and natural elements, such as built-up areas, roads, barren land, impermeable surfaces, and trees, as shown in Figure 1. Buildings in urban environments are highly variable and typically closely aligned, while buildings in rural regions are simpler and arranged more haphazardly. Meanwhile, compared to rural roads, urban roads are generally wider and more complicated. Moreover, in urban scenes, water generally appears as rivers or lakes, whereas rural areas are more likely to contain ponds and ditches. Although combining low- and high-level features [16,17] can increase semantic segmentation performance, most previous studies applied this combination directly to the entire HRRSI scene without focusing on ground objects at different scales to further extract multi-scale information from the fused features. Therefore, how to design a feature-focusing extraction method for multi-scale objects needs to be further addressed.

Figure 1. Examples of input images and their corresponding ground truth (GT). (a) For rural areas and (b) for urban areas. The rectangular boxes with different colors illustrate the potential problems of HRRSI semantic segmentation. In GT, the white area is the background.

- (2)

- There is a lack of efficient feature-focusing methods for image context. In the DCNN-based semantic segmentation task, different resolution features have different effects on extracting ground objects at different scales. Having enough local background is beneficial for detecting small and densely aligned objects; however, for extensive and regular farms or other natural elements, local information may be redundant and bring additional interference to the classification. Therefore, the importance of features at different scales is not the same for different ground objects. Directly using fused features for segmentation can affect the performance of the model.

This paper proposes a novel framework, the context-driven feature-focusing network (CFFNet), for the semantic segmentation of HRRSIs to overcome the aforementioned issues. The network focuses on multi-scale ground objects in fused features of different resolutions. It contains three parts: a depth-residual encoder, a context-driven feature-focusing (CFF) decoder, and a classifier. The feature-focusing (FF) module and the context-focusing (CF) module are the two components of the CFF decoder. The framework first builds a feature pyramid by using the depth-residual encoder to extract features of different resolutions. The multi-scale information in the fused features is then extracted using the FF module in the CFF decoder, and the focus weights of the different scale features are computed adaptively using the CF module to obtain the weighted multi-scale fused feature representation. Finally, the segmentation results are obtained using the classifier.

The main contributions of this paper are as follows.

- (1)

- The novel FF module is proposed, which designs a multiscale convolution kernel group aimed at focusing on multi-scale ground objects in the fused features, extracting multi-scale spatial information at a more detailed level more efficiently and learning richer multi-scale feature representations.

- (2)

- The novel CF module is proposed, which adaptively calculates focus weights based on the image context; the weights enhance the scale features that are relevant to the image and suppress those that are irrelevant to it.

- (3)

- The CFFNet is proposed for the semantic segmentation of HRRSIs. The network enhances the multi-scale feature representation capability of the model by focusing on multi-scale features that are associated with the image context. Extensive experiments are conducted for multiclass (e.g., buildings, roads, farmland, and water) RS segmentation. The experimental results show that the proposed method outperforms SOTA methods and demonstrate the proposed framework’s rationality and effectiveness.

The remainder of this paper is organized as follows. Section 2 introduces the relevant studies on multi-scale feature fusion- and attention-based semantic segmentation and presents the proposed framework and its components. In Section 3, experiments and comparisons of our method to a set of benchmark methods are discussed. Finally, Section 4 draws conclusions.

2. Materials and Methods

2.1. Related Work

2.1.1. Multi-Scale Feature Fusion-Based Semantic Segmentation Approaches

Information about the contour features of the input image is lost layer by layer as the model’s depth increases. Traditional DCNN-based semantic segmentation models connect the encoding and decoding layers directly and pass feature maps between them without further processing. As a result, the information from the input image is captured during the encoding stage with the same receptive field at every position of the feature map. Objects of various sizes, on the other hand, frequently need different receptive fields. Since it is difficult to adapt a single fixed receptive field to the segmentation of objects of diverse scales in real scenes, multi-scale feature fusion has been widely explored and utilized.

Multi-scale feature fusion addresses the problem of obtaining features at different scales by correlating the low-level features obtained during the encoding stage and fusing them so that the encoded representation covers different receptive fields. Image pyramid [18] and feature pyramid models [19,20] are the two most common types of multi-scale feature fusion. The image pyramid model is commonly utilized in classical image processing. In semantic segmentation, image pyramid models are typically multi-branch structures, such as BiSeNet [18] and ICNet [21]. These models feed images at different resolutions into separate modules or processing branches, extract features under various receptive fields, and then fuse the features to supplement the output with the spatial contour details that would otherwise be missing. The image pyramid maximizes the utilization of various forms of information in multi-scale images, mimics how the human eye gathers information from images of varying resolutions, and better complements multi-scale information. However, the model uses more memory due to the image pyramid’s parallel network structure.

Feature pyramids solve the problem of the large memory consumption of image pyramids; the main idea is to fuse feature maps with different resolutions to obtain features with different receptive fields. A typical structure that utilizes the information of different layers of feature maps is the U-shaped network [20,22,23] with skip connections. ASPP [24] and PPM [25] are two feature pyramid models that have been widely employed in the semantic segmentation field. U-Net [20] sums low- and high-level features using skip connections (i.e., additional network connections between the encoder and decoder). However, directly summing low- and high-level features yields insufficient information, and FPN-based semantic segmentation methods [16,17] suffer from the same problem. To solve this problem, the skip connections in U-Net++ [23] are replaced by nested and dense skip connections, which improves skip connection capabilities and reduces the semantic gap between the encoder and decoder. However, the network’s multi-scale features cannot be fully extracted by either the original U-Net or U-Net++. As a result, U-Net 3+ [22] designed full-scale skip connections to overcome this limitation, albeit at a significant computational cost. Additionally, with full-scale skip connections, the feature maps produced by different layers are assumed to have identical weights in all channels. However, the discrimination levels of features created at different stages are frequently different [3]. Zhao et al. [25] were the first to propose the PPM. The encoder’s features are first pooled adaptively at various resolutions, transformed via a number of convolutions to produce new features, and then restored to the original resolution by bilinear interpolation; the features are finally fused by stacking to complete the fusion of different receptive field features. The ASPP model [24] employs a similar strategy, producing feature maps with varied receptive fields by employing atrous convolutions with different dilation rates, followed by stacking the feature maps.

2.1.2. Attention-Based Semantic Segmentation Approaches

In essence, the attention mechanism emphasizes the region of interest while suppressing irrelevant background regions using a set of weighting factors that the network learns autonomously. Attention mechanisms can be divided into two groups in the computer vision (CV) field: hard and soft attention. Hard attention, a stochastic prediction that focuses on dynamic changes, has limited application because it is non-differentiable. Soft attention, on the other hand, is differentiable everywhere, i.e., it can be obtained by training neural networks using gradient descent, and hence has a wide range of applications. The mainstream attention mechanisms can be split into three types based on several dimensions (e.g., channel, location, time, and category): channel attention [26,27], spatial attention [28], and self-attention [29].

The purpose of channel attention is to automatically calculate each feature channel’s importance through network learning, model the relationship between different channels (feature maps), and then assign weighting factors to each channel to emphasize the crucial features and squelch the unimportant ones. SE-Net [27] is a notable effort in this domain, which uses feature rescaling to adaptively alter the feature response between channels. Additionally, SK-Net [30], which draws inspiration from both the SE-block [27] and the Inception-block [31], considers multi-scale feature representation by introducing multiple convolutional kernel branches to learn feature map attention at various scales, enabling the network to concentrate more on the crucial scale features. Furthermore, by optimizing the fully connected (FC) layer operations in the SE module with a 1-dimensional sparse convolution technique, ECA-Net [32] significantly lowers the number of parameters while maintaining a comparable performance.

Through a spatial transformation module, spatial attention tries to strengthen the feature representation of important locations by translating the input image’s spatial information into another space while retaining the essential information, and by weighting the output to enhance regions of interest while weakening irrelevant background regions. A classical work in this domain is CBAM [33], which adds a spatial attention module (SAM) to the original channel attention. The authors experimentally verify that the channel-then-space strategy is superior to the space-then-channel or channel-space parallel approaches in terms of performance. In addition, the double attention module proposed by A2-Net [34] and the variant attention module scSE [35], inspired by SE-Net [27], among others, are similar upgraded modules.

Self-attention [29] aims to reduce the dependence on external information and to use, as much as possible, the information inherent within the features for attentional interaction. It first appeared in Google’s proposed Transformer architecture [29]. Later, Wang et al. [36] applied it to the CV domain and introduced the non-local (NL) module, which successfully captures long-range feature relationships by modeling the global context using the self-attention method. Many enhancements have been made since the NL block was proposed. For example, DANet’s dual attention mechanism [37] applies the NL concept to both the spatial and channel domains, modeling spatial pixel points as well as channel features as context-inquiry statements. On the other hand, NL reduces the feature maps’ dimension by using the convolution operation. Unquestionably, such a global pixel-to-pixel pair-based modeling technique has a heavy computational cost. As a result, numerous efforts have been made to solve this problem, including CCNet [38], which designs and employs two cross-attention modules to replace the global pixel-to-pixel pair-based modeling. GC-Net [39] incorporates the SE mechanism [27] and attempts to replace the original spatial downsampling method with a simpler spatial attention module.

2.2. Methods

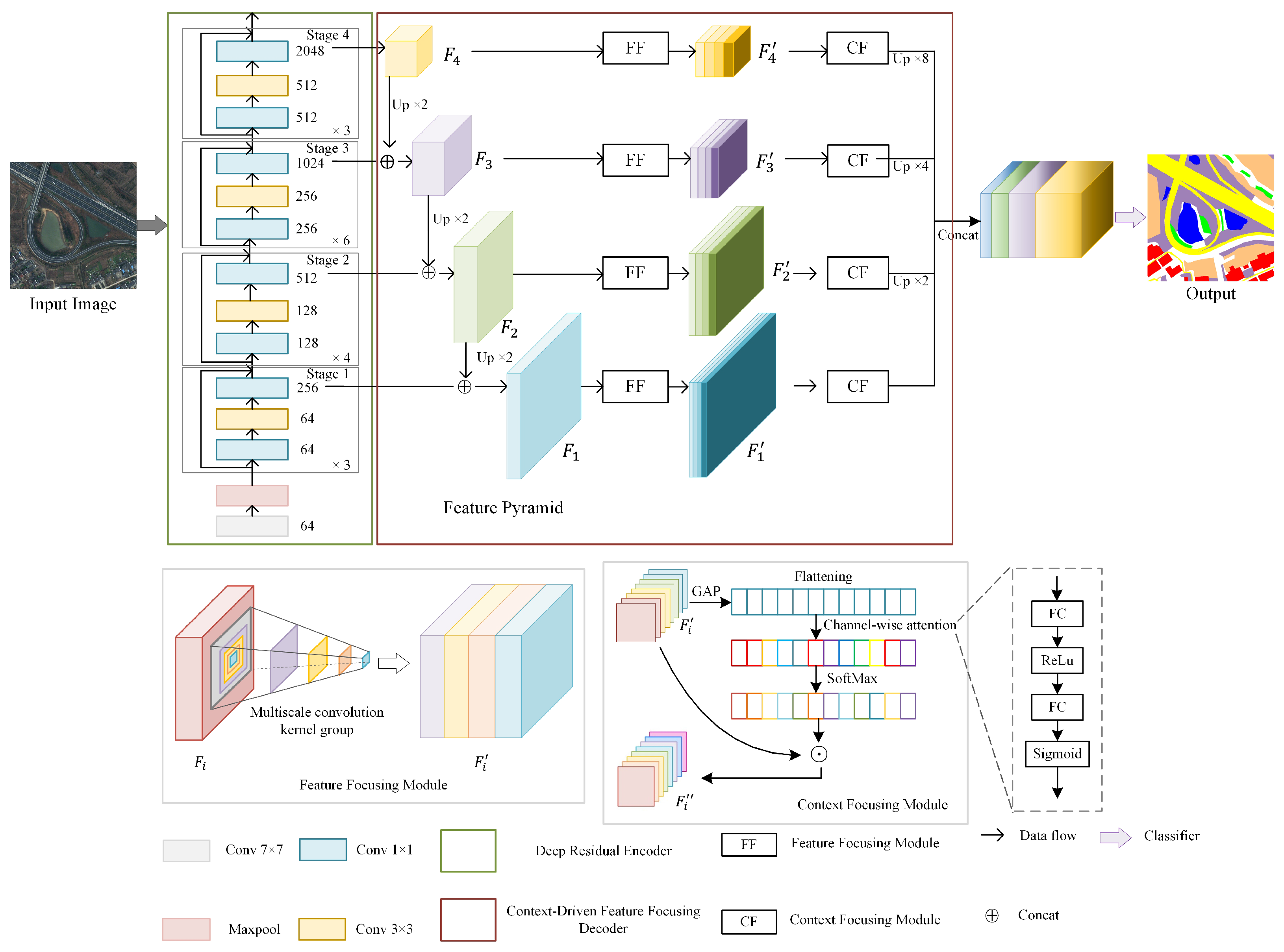

In this section, we introduce the proposed CFFNet. First, the framework uses the commonly used DCNN feature extractor ResNet50 to extract image features. The proposed context-driven feature-focusing decoder is then designed to further extract multi-scale features and compute the weights of different scale features adaptively. Two components make up the decoder: the feature-focusing (FF) module and the context-focusing (CF) module. To obtain the final output, the feature representations activated by multi-scale features at the various stages are finally concatenated along the channel dimension. The whole framework is shown in Figure 2.

Figure 2. Overview of the proposed CFFNet framework, where ’Up’ denotes x-times upsampling and ’Concat’ denotes concatenating features in the channel dimension.

2.2.1. Deep Residual Encoder

ResNet’s [40] residual modules give it a strong feature extraction capability. Numerous studies [2,13,41] have shown that a pre-trained ResNet backbone performs well in segmentation tasks. ResNet is used as the encoder in the proposed framework, albeit without fully connected (FC) layers. Given an input image, the feature map’s size is incrementally reduced from the bottom up, as illustrated in Figure 2, while the dimensionality of the features is successively raised. Deep residual encoders have a large number of residual blocks, which are organized into network stages whose blocks produce output maps of the same size. ResNet50, for example, has 4 stages with 3, 4, 6, and 3 residual blocks, respectively. The features of different stages have different receptive fields, spatial resolutions, and semantic information, and the strongest features of each stage should be found in its deepest layer. Therefore, the feature of each stage’s last layer is chosen. In the standard ResNet50, the last features at Stages 1–4 have feature dimensions of 256, 512, 1024, and 2048, and their output sizes are 1/4, 1/8, 1/16, and 1/32 of the input image. ResNet50 was adopted for this work because of its precision and effectiveness. The CFFNet framework is also flexible, and alternative backbones can be used. With other backbones, such as VGG [42], multi-scale features can likewise be extracted at various stages.
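The stage-wise output sizes described above can be checked with a small sketch. It assumes the channel widths of the standard ResNet50 (256, 512, 1024, and 2048) and the strides implied by the 1/4 to 1/32 output sizes; the function name `encoder_feature_shapes` is hypothetical and is not part of the CFFNet implementation.

```python
# Channel widths of the last block in Stages 1-4, assumed from the standard ResNet50.
STAGE_CHANNELS = (256, 512, 1024, 2048)
# Output strides corresponding to 1/4, 1/8, 1/16, and 1/32 of the input size.
STAGE_STRIDES = (4, 8, 16, 32)


def encoder_feature_shapes(height, width):
    """Return (channels, height, width) of the last feature map of each stage."""
    shapes = []
    for channels, stride in zip(STAGE_CHANNELS, STAGE_STRIDES):
        # Ceiling division: padded stride-2 layers round odd sizes up.
        shapes.append((channels, -(-height // stride), -(-width // stride)))
    return shapes


if __name__ == "__main__":
    for stage, shape in enumerate(encoder_feature_shapes(512, 512), start=1):
        print(f"Stage {stage}: {shape}")
```

For a 512 × 512 input, the sketch yields spatial sizes of 128, 64, 32, and 16 for the four stages, which is the feature pyramid that the decoder consumes.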

2.2.2. Context-Driven Feature-Focusing Decoder

RS scenes often contain objects at different scales, which require features with different semantic and spatial resolutions. In FPN-based approaches, fusing the features of different stages enhances the model’s multi-scale feature representation capability. However, the features of a particular stage have different representation capabilities for objects of different scales. To fully exploit the feature representation ability of each stage for foregrounds of different scales, we propose the CFF decoder, as shown in Figure 2. The deep residual encoder extracts the features at the different stages. The decoder, similar to the original FPN, uses a top-down pathway and skip connections to generate the pyramidal features. Specifically, the encoder’s deepest-layer features are selected as the input, and the first pyramidal features are obtained by reducing their channel dimension using a convolution. These features are then upsampled by a factor of two. The upsampled features and the transformed features from the encoder are then concatenated in the channel dimension to produce the next pyramidal features. In general, the encoder’s higher-resolution features are transformed by a convolution and channel-wise concatenated to the deeper features, which are gradually upsampled, so that the feature pyramid is acquired at the end. The processes can be written as follows:

where concatenates features in the channel dimension, upsamples the x with factor 2, denotes the decoder’s features’ transformed procedure, and denotes the skip connection.

Each stage in the feature pyramid has different spatial and semantic resolutions, and Stages 2, 3, and 4 also incorporate features with different spatial and semantic resolutions. Therefore, we designed the FF module and the CF module to focus on the multi-scale information in them. The FF module receives the pyramidal features of each stage and extracts the multi-scale features in the fused feature maps. The weighted feature representation is then obtained by passing the FF module’s output through the CF module, which adaptively activates important features based on the image context. Finally, the final feature map is generated by concatenating the weighted feature representations of all stages in the channel dimension.

- (1)

- Feature-focusing module

In DCNNs, the size of the receptive field is related to the size of the convolution kernel. Convolutional kernels of different sizes can extract spatial and semantic information at different scales. In the FF module, we concatenate convolutional kernels of different sizes to extract spatial and semantic information at multiple scales, with each kernel size focusing on features of a different scale. These concatenated multi-scale convolutional kernels can not only extract multi-scale spatial information at a more detailed level but also build cross-channel interactions locally. However, the number of parameters rises dramatically as the convolutional kernel size increases. To process the input tensor with multi-scale convolutional kernels without increasing the computational cost, we provide a new criterion for choosing the dimensions of features. The relationship between the number of multi-scale kernel groups and the dimensions of the features can be written as

where is the input feature map ’s feature dimension, is the ’s feature dimension, , and j is the number of multi-scale convolutional kernel groups, . The following is the formula for the generation

where j can take any natural number smaller than C, the jth convolution kernel size , denotes the convolution operation, denotes the different scale of the feature map, and and denote the feature map ’s height and width, respectively. In the proposed framework, j is taken as 4, and , , , and are 1, 3, 5, and 7, respectively. In fact, the value of j and the value of can be customized for different conditions (e.g., image resolution, category, and object size).

The output of the FF module is obtained by concatenating the outputs of the multi-scale kernel groups in the channel dimension.
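To illustrate why splitting the feature dimension across kernel groups keeps the cost in check, the following sketch counts convolution weights for the four kernel sizes used in the framework (1, 3, 5, and 7). It rests on an assumption that is not confirmed by the text: each of the j branches maps the full input to C / j output channels, so that concatenation restores C channels. The function names are hypothetical.

```python
def conv_params(c_in, c_out, kernel_size):
    """Weight count of a standard k x k convolution (bias ignored)."""
    return c_in * c_out * kernel_size * kernel_size


def kernel_group_params(channels, kernel_sizes):
    """Total weights of a multi-scale kernel group.

    Assumption (illustrative only): every branch takes `channels` inputs and
    produces channels // j outputs, where j is the number of kernel sizes.
    """
    groups = len(kernel_sizes)
    if channels % groups != 0:
        raise ValueError("channels must be divisible by the number of kernel groups")
    branch_out = channels // groups
    return sum(conv_params(channels, branch_out, k) for k in kernel_sizes)


if __name__ == "__main__":
    multi_scale = kernel_group_params(256, (1, 3, 5, 7))
    single_large = conv_params(256, 256, 7)
    print(f"kernel group: {multi_scale} weights")
    print(f"single 7x7 convolution: {single_large} weights")
```

Under this assumption, a 256-channel kernel group needs fewer than half the weights of a single full-width 7 × 7 convolution, while still covering four receptive field sizes.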

- (2)

- Context-Focusing Module

In the FF module, we use the convolution kernel group to extract information in the fused features in a more detailed manner. However, HRRSIs present diverse image contexts and cover a broad range of geographic areas, so foregrounds at various scales have different spatial and semantic features. Additionally, different resolution features are not equally effective for extracting ground objects at different scales. Thus, we designed the CF module to focus on the features related to the image context in multi-scale fused features.

Inspired by the channel attention [27], which can intelligently evaluate the significance of each channel, we designed the CF module to calculate focus weights. The weights represent the importance of each feature layer in multi-scale fused feature maps. Precisely, let denote the input multi-scale fused feature map, where , , and denote the ’s height, width, and feature dimension, respectively. Two components are used to calculate focus weights: the encoding of global information and the adaptive recalibration of channel-wise relations. Typically, the global average pooling (GAP) technique can be utilized to embed the global spatial information into a channel descriptor, generating channel-wise encoded data. Two FC layers then integrate the linear information between the channels more effectively. After the channel interactions, the activation function Sigmoid assigns weights to the channels. Finally, the SoftMax function re-calibrates the weights. The focus weight of the can be written as

where Softmax() denotes the Softmax function, Sigmoid() denotes the Sigmoid function, FC() denotes the FC layer operation, ReLu() denotes the Rectified Linear Activation Function [43], and G() denotes the GAP operation.

The GAP can be calculated as follows:

We then multiply by to obtain . The formula is as follows:

where ⊙ denotes the multiplication in the feature dimension.
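The chain of operations described above (GAP, two FC layers with a ReLU in between, Sigmoid, Softmax, and the final channel-wise multiplication) can be sketched in plain Python. This is a minimal illustration rather than the authors’ implementation: the FC layers are bias-free, the Softmax is taken over the channel descriptor, and the weight matrices `w1` and `w2` are hypothetical placeholders for learned parameters.

```python
import math


def global_average_pool(feature):
    """feature: list of C channels, each an H x W nested list. Returns C means."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature]


def fully_connected(vector, weights):
    """Bias-free FC layer; `weights` holds one row per output unit."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def relu(vector):
    return [max(0.0, v) for v in vector]


def sigmoid(vector):
    return [1.0 / (1.0 + math.exp(-v)) for v in vector]


def softmax(vector):
    peak = max(vector)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in vector]
    total = sum(exps)
    return [e / total for e in exps]


def focus_weights(feature, w1, w2):
    """Softmax(Sigmoid(FC(ReLU(FC(GAP(feature)))))), one weight per channel."""
    descriptor = global_average_pool(feature)
    hidden = relu(fully_connected(descriptor, w1))
    return softmax(sigmoid(fully_connected(hidden, w2)))


def apply_focus(feature, weights):
    """Scale every channel of the feature map by its focus weight."""
    return [[[w * v for v in row] for row in ch] for ch, w in zip(feature, weights)]


if __name__ == "__main__":
    feature = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]
    w1 = [[1.0, -1.0]]      # hypothetical reduction to one hidden unit
    w2 = [[1.0], [-1.0]]    # hypothetical expansion back to two channels
    weights = focus_weights(feature, w1, w2)
    print(weights, sum(weights))
```

Because of the final Softmax, the focus weights sum to one, so the module redistributes emphasis across the feature dimension instead of scaling all features up or down together.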

Finally, we obtain the weighted feature representations of the four stages. As mentioned before, their corresponding feature map sizes are 1/4, 1/8, 1/16, and 1/32 of that of the input image. To ensure that the feature map sizes are consistent, the three lower-resolution representations are upsampled by 2×, 4×, and 8×, respectively. The final feature representation, which is written as H, is obtained by concatenating the 1/4-scale representation and the three upsampled representations in the feature dimension.

2.2.3. Map Prediction and Loss Function

Our framework aims to distinguish multiple classes (e.g., buildings, roads, water, and forest), which is a multiclass semantic segmentation problem. In multiclass segmentation tasks, the softmax function is the most commonly used classifier for obtaining normalized probabilities at the pixel level. The dense prediction map is obtained by feeding H through the classifier, which is made up of two convolution modules and a softmax activation function. Each convolution module consists of a convolution layer, a batch normalization layer, and an activation layer. Finally, the argmax function returns the class ID that corresponds to the maximum probability value at each pixel of the prediction map. Note that the size of the resulting prediction map is only 1/4 of the original image, so the prediction map needs to be upsampled to the input image size.
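The last two steps, the per-pixel softmax followed by argmax and the upsampling of the quarter-resolution map, can be sketched as follows. The sketch operates on nested lists of per-pixel scores and uses nearest-neighbour upsampling of the class map purely for illustration; the interpolation method actually used is not stated in the text, and the function names are hypothetical.

```python
import math


def softmax(logits):
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_class(logits):
    """Return the class ID with the highest softmax probability."""
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__)


def predict_map(logit_map):
    """logit_map: H x W grid of per-class score lists. Returns an H x W class map."""
    return [[predict_class(pixel) for pixel in row] for row in logit_map]


def upsample_nearest(class_map, factor):
    """Nearest-neighbour upsampling (an illustrative choice, not from the paper)."""
    rows = []
    for row in class_map:
        wide = [value for value in row for _ in range(factor)]
        rows.extend(list(wide) for _ in range(factor))
    return rows


if __name__ == "__main__":
    logits = [[[0.1, 2.0, 0.3], [1.5, 0.2, 0.1]]]
    classes = predict_map(logits)
    print(classes)
    print(upsample_nearest(classes, 4))
```

With a factor of 4, the upsampled class map matches the size of the input image, as required for the 1/4-resolution prediction described above.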

When addressing multiclass semantic segmentation problems, the cross-entropy (CE) loss function is commonly employed to compute the pixel-level difference between predicted and true labels for backward propagation. The CE loss function is adopted in our CFFNet. It is defined as:

where i is the pixel index value; is the true label of pixel i, which is encoded in one-hot form; and the length is the number of categories. For example, there are 7 categories in total, and assuming that the true category of pixel i is 3, can be written as . is pixel i’s predicted value, which is also encoded in one-hot form.
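As a worked illustration of the CE loss, the sketch below evaluates the standard form, i.e., the negative sum over categories of the one-hot label times the logarithm of the predicted probability, averaged over pixels. The seven-category label with the third entry set to one and the probability values are hypothetical examples, and the averaging over pixels is an assumption about the reduction.

```python
import math


def cross_entropy(one_hot, probs, eps=1e-12):
    """CE for one pixel: -sum_c y_c * log(p_c), with clamping to avoid log(0)."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))


def mean_cross_entropy(labels, predictions):
    """Average the per-pixel CE over all pixels (assumed reduction)."""
    losses = [cross_entropy(y, p) for y, p in zip(labels, predictions)]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    # Hypothetical pixel: 7 categories, true category stored in the third slot.
    label = [0, 0, 1, 0, 0, 0, 0]
    probs = [0.05, 0.05, 0.7, 0.05, 0.05, 0.05, 0.05]
    print(cross_entropy(label, probs))
```

Only the probability assigned to the true category contributes to the loss of a pixel, so a confident correct prediction drives the loss toward zero.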

3. Results and Discussion

3.1. Dataset Description

The proposed framework was evaluated on two RS image datasets: LoveDA [44] and GID [45].

The LoveDA dataset contains 5987 images of size 1024 × 1024 pixels with a resolution of 0.3 m, taken from the Google Earth platform in July 2016 in three cities (Wuhan, Changzhou, and Nanjing) and covering an area of 536.15 km². The LoveDA dataset collects data according to two domains: urban and rural. The inconsistent spatial distribution between urban and rural landscapes challenges the model’s generalization, and the multi-scale objects require the model to capture information at multiple scales.

The GID dataset is divided into two parts: the fine land-cover classification set (GID-15) and the large-scale classification set (GID-5). The GID-5 contains five land cover categories, namely built-up, forest, farmland, water, and meadow, with a total of 150 pixel-level annotated Gaofen-2 satellite RS images. These images cover an area of over 50,000 km². The size of each Gaofen-2 satellite RS image is 6800 × 7200 pixels.

These two datasets present a significant challenge to the model’s capacity to capture multiple scales, its generalization ability, and its robustness because they span a broader range than other RS semantic segmentation datasets and have objects with more diverse shapes, scales, layouts, and spectra.

3.2. Implementation

The LoveDA dataset was divided by city (Nanjing (NJ), Changzhou (CZ), and Wuhan (WH)) and into rural and urban domains, and each domain was divided into training, validation, and test sets. The specific details of the LoveDA dataset division are shown in Table 1. For the semantic segmentation task, the training, validation, and test set of the urban and rural domains were combined. Thus, the training, validation, and test sets contain images from both urban and rural domains. That is, the training, validation, and test sets have 2522, 1669, and 1796 images, respectively.

Table 1. The LoveDA dataset’s division.

For the GID dataset, we first divided the 150 images into 90, 30, and 30 images, according to a 3:1:1 ratio, for the training, validation, and test sets. These images were then cropped into non-overlapping 512 × 512 patches. That is, there are 16,380, 5460, and 5460 patches in the training, validation, and test sets, respectively.
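The patch counts can be cross-checked with simple arithmetic. The sketch below takes a 6800 × 7200 image size and a 512 × 512 patch size as working assumptions and discards partial patches at the image borders; under these assumptions it reproduces the reported counts. The function name is hypothetical.

```python
def patches_per_image(height, width, patch):
    """Number of non-overlapping patch x patch crops, discarding partial borders."""
    return (height // patch) * (width // patch)


if __name__ == "__main__":
    # Assumed values for illustration: GID image size and crop size.
    per_image = patches_per_image(6800, 7200, 512)
    for name, images in (("training", 90), ("validation", 30), ("test", 30)):
        print(f"{name}: {images} images -> {images * per_image} patches")
```

Each image yields 13 × 14 = 182 patches, so 90, 30, and 30 images give 16,380, 5460, and 5460 patches, respectively.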

We used the SGD optimizer during the training process with a momentum of 0.9 and a weight decay of . The initial learning rate was 0.01, and the “poly” schedule had a power of 0.9. The batch size was set to 8, and training epochs were set to 100. The training was distributed on two Nvidia Tesla V100 graphics cards. We used randomly rotated, mirrored, and cut patches of the input image for data augmentation. All of the networks’ backbones were pre-trained on ImageNet.
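The “poly” schedule mentioned above is commonly written as the initial learning rate multiplied by (1 − step / max_steps) raised to the given power. The sketch below implements this common form with the stated values (initial rate 0.01, power 0.9); whether the step counts iterations or epochs is not specified in the text, so the 100-step horizon used here is an assumption.

```python
def poly_learning_rate(base_lr, step, max_steps, power=0.9):
    """Polynomial decay: base_lr * (1 - step / max_steps) ** power."""
    if not 0 <= step <= max_steps:
        raise ValueError("step must lie in [0, max_steps]")
    return base_lr * (1.0 - step / max_steps) ** power


if __name__ == "__main__":
    # Assumed horizon: one schedule step per epoch over 100 epochs.
    for step in (0, 25, 50, 75, 100):
        print(step, poly_learning_rate(0.01, step, 100))
```

The rate starts at 0.01, decreases monotonically, and reaches zero at the final step.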

3.3. Evaluation Metrics

We used four standard metrics that are widely used to evaluate semantic segmentation performance: mean Intersection over Union (mIoU), Intersection over Union (IoU), overall accuracy (OA), and Kappa. IoU is a composite metric: it is the ratio of the overlap area between the predicted map and the ground truth (GT) for a particular category to their union area. mIoU is the IoU value calculated for each category and then averaged. The OA measures the proportion of correctly classified pixels among all pixels. The Kappa metric is used for consistency testing to gauge the robustness of the model. All four evaluation metrics are computed pixel by pixel. Equations (9)–(13) are the definitions of these evaluation metrics.

where C is the total number of classes, and denotes the number of instances of class i that are predicted to be classes j.
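The four metrics can be computed from a confusion matrix whose entry in row i and column j counts the instances of class i that are predicted as class j, following the convention above. The sketch uses the standard definitions of IoU, mIoU, OA, and Cohen’s Kappa; the function names and the 2 × 2 example matrix are hypothetical.

```python
def per_class_iou(cm):
    """IoU_i = TP_i / (TP_i + FP_i + FN_i) for each class i."""
    n = len(cm)
    ious = []
    for i in range(n):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp                        # class i predicted as another class
        fp = sum(cm[r][i] for r in range(n)) - tp   # other classes predicted as class i
        denom = tp + fp + fn
        ious.append(tp / denom if denom else 0.0)
    return ious


def mean_iou(cm):
    ious = per_class_iou(cm)
    return sum(ious) / len(ious)


def overall_accuracy(cm):
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


def kappa(cm):
    """Cohen's Kappa: (p_o - p_e) / (1 - p_e)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    p_o = overall_accuracy(cm)
    p_e = sum(sum(cm[i]) * sum(cm[r][i] for r in range(n)) for i in range(n)) / total**2
    return (p_o - p_e) / (1.0 - p_e) if p_e != 1.0 else 0.0


if __name__ == "__main__":
    cm = [[5, 1], [2, 2]]  # hypothetical two-class confusion matrix
    print(per_class_iou(cm), mean_iou(cm), overall_accuracy(cm), kappa(cm))
```

For the example matrix, the per-class IoU values are 0.625 and 0.4, the mIoU is 0.5125, and the OA is 0.7.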

3.4. Experiments on the GID Dataset

To demonstrate its effectiveness, CFFNet was compared with other SOTA segmentation methods, including FCN8S [14], UNet [20], PAN [46], DeepLab V3+ [41], UNet++ [23], LinkNet [47], PSPNet [25], FarSeg [48], and FactSeg [49].

As can be seen in Table 2, CFFNet achieves the best mIoU, OA, and Kappa on the GID dataset. In addition, its IoU values for the built-up and background categories are significantly higher than those of the other methods, while its IoU values for the remaining categories (e.g., farmland, forest, meadow, and water) are comparable to theirs. Since the GID dataset covers a wide area spanning more than 60 cities in China, its background samples are more complex and contain richer and more varied multi-scale objects. Our method achieves the best background IoU, indicating that it is more capable of extracting multi-scale information. In addition, the Kappa measures a model’s ability to handle an imbalanced category distribution, and our method achieves the best Kappa score without any additional training strategy or loss function. We attribute this to our method’s stronger ability to capture multi-scale information and to focus on small-scale objects in images.

Table 2.

The performance of different methods on the GID dataset. The highest score in each column is shown in bold.

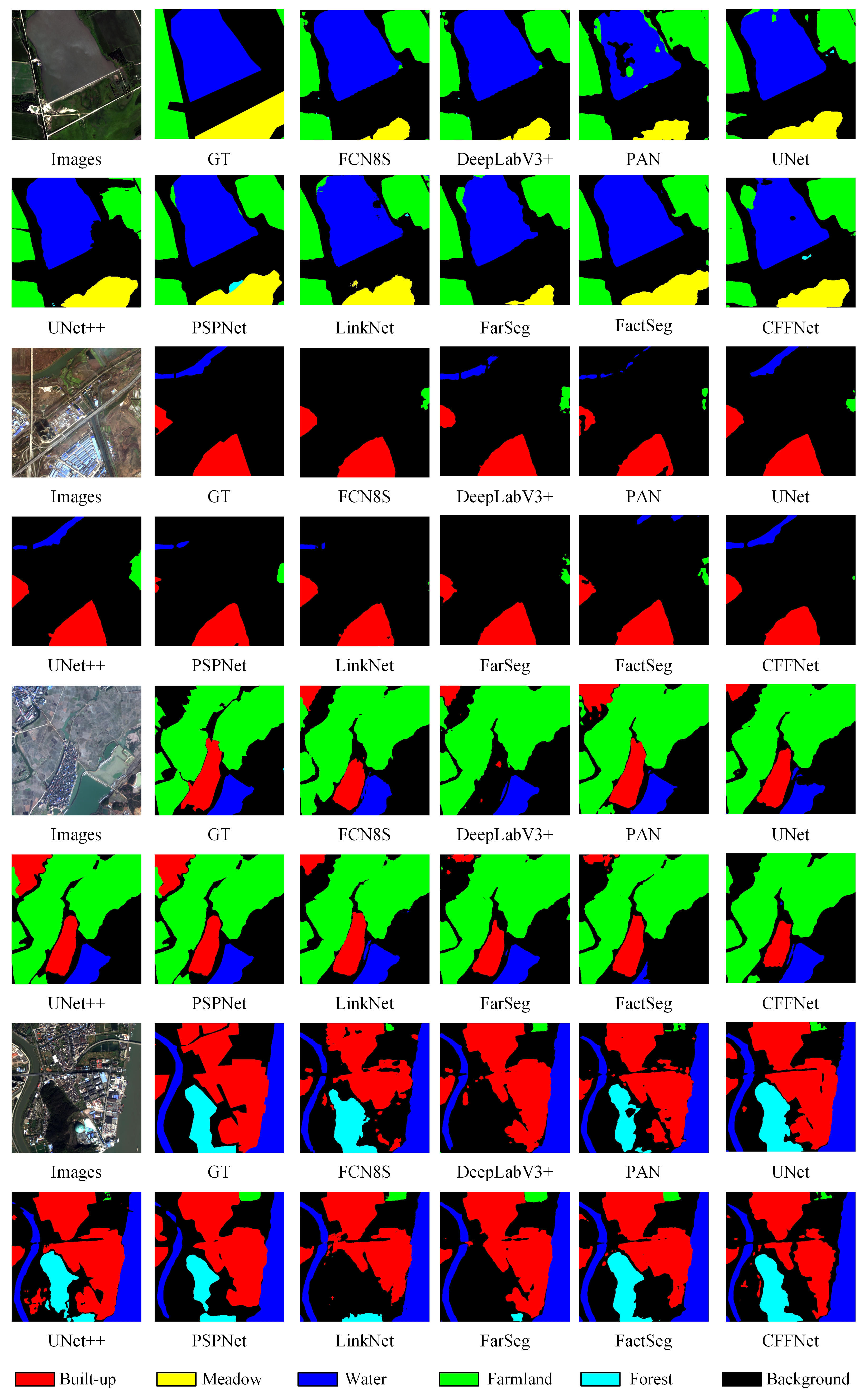

Figure 3 shows the visualization results on the GID dataset, which indicate that our method is more capable of focusing on small-scale objects. In the second image of Figure 3, only UNet++ and CFFNet correctly identified the water. Moreover, UNet++ is not as effective as CFFNet when dealing with small-scale objects in more complicated scenes: in the fourth image of Figure 3, it is unable to identify the water in the top left corner.

Figure 3.

Visualization results on the GID dataset.

3.5. Experiments on the LoveDA Dataset

Since the test set of the LoveDA dataset is not annotated, the prediction results must be uploaded to the designated website for scoring, and the website reports only the IoU and mIoU metrics. This is not sufficient to evaluate the model; other indicators are needed. Thus, we also performed comparison experiments on the validation set, as well as ablation experiments, to evaluate the effectiveness of the different methods more comprehensively, and all of the methods were tested using the same parameters for the sake of fairness. Table 3 shows the results of the different methods on the LoveDA test set. The performance of the different methods on the LoveDA validation set is listed in Table 4.

Table 3.

The performance of different methods on the LoveDA test set. The highest score in each column is shown in bold.

Table 4.

The performance of different methods on the LoveDA validation set. The highest score in each column is shown in bold.

As can be seen in Table 3, CFFNet achieves the best mIoU on the test set, which is higher than that of FactSeg, the latest segmentation model proposed in the field of RS in 2022. In addition, its IoU values for the built-up, road, water, and barren categories are significantly higher than those of the other methods. The data distributions of the training and test sets might affect the performance of the model to some extent, and a robust model will perform well despite an inconsistent data distribution. Even for the same sensor, the data distribution varies widely from city to city and from rural areas to urban areas due to economic, geographic, demographic, and other factors. As shown in Table 1, the training set in the LoveDA dataset does not contain images of Changzhou, but images of Changzhou account for more than half of the test set. CFFNet was nevertheless able to achieve the best mIoU on the test set, indicating our method’s effectiveness and robustness.

The most popular evaluation metric for semantic segmentation is mIoU. However, the sample size of each category in actual RS image semantic segmentation is frequently not distributed equally, so a model can privilege large categories and neglect small ones. For example, if there is 1 positive sample for every 9 negative samples and all of them are directly predicted to be negative, the positive samples are completely “discarded”. In this case, an aggregate score can still look high even though some categories cannot be recalled at all. Therefore, a metric such as the Kappa, which penalizes the model’s “bias”, is required to evaluate the model. On the LoveDA validation set, CFFNet achieved the best mIoU, OA, and Kappa, and its Kappa was higher than that of each of FCN8S, DeepLabV3+, PAN, UNet, UNet++, PSPNet, LinkNet, FarSeg, and FactSeg (Table 4). This further illustrates that our model can handle inconsistent class distributions better without using any special training strategy or modifying the loss function.
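The imbalance example above can be checked numerically. The following plain-Python sketch builds the confusion matrix for 9 negative samples and 1 positive sample that are all predicted negative. It assumes the usual confusion-matrix definitions, with mIoU taken as the unweighted mean of the per-class IoU; `segmentation_metrics` is a hypothetical helper name.

```python
def segmentation_metrics(cm):
    """Compute IoU, mIoU, OA, and Kappa from a confusion matrix.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    n_classes = len(cm)
    total = sum(sum(row) for row in cm)
    # Ground-truth counts per class (row sums) and predicted counts (column sums).
    row_sums = [sum(cm[i]) for i in range(n_classes)]
    col_sums = [sum(cm[i][j] for i in range(n_classes)) for j in range(n_classes)]

    ious = []
    for i in range(n_classes):
        union = row_sums[i] + col_sums[i] - cm[i][i]
        ious.append(cm[i][i] / union if union else 0.0)

    oa = sum(cm[i][i] for i in range(n_classes)) / total
    # Expected agreement by chance, used by Cohen's Kappa.
    pe = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - pe) / (1 - pe) if pe != 1 else 0.0
    return {"iou": ious, "miou": sum(ious) / n_classes, "oa": oa, "kappa": kappa}


# Class 0 = negative, class 1 = positive; every sample is predicted negative.
cm = [[9, 0],
      [1, 0]]
print(segmentation_metrics(cm))
```

Under these assumptions the OA is 0.9 and the per-class IoU values are 0.9 and 0, while the Kappa is 0: the prediction agrees with the ground truth no more than chance would, which is the kind of “bias” the Kappa is meant to expose.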

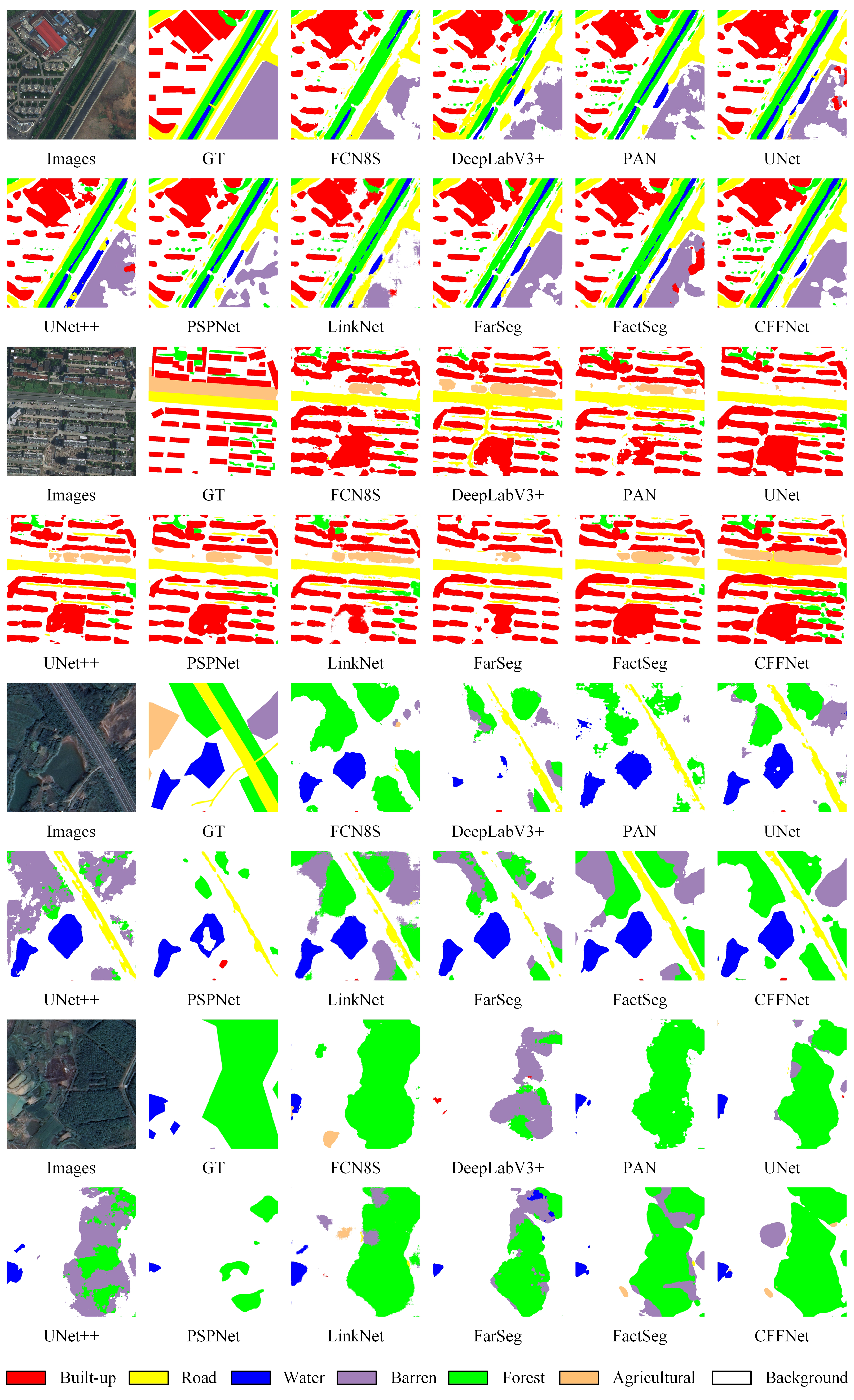

Figure 4 shows the visualization results on the LoveDA validation set. When extracting natural elements such as forest, agricultural, barren, and water, as shown in Rows 5–8 of Figure 4, the CFFNet results are closer to reality and the extracted ground objects are more complete, without the many misclassifications or the object boundary and surface discontinuities found with DeepLabV3+, UNet, UNet++, LinkNet, FarSeg, FactSeg, PAN, and PSPNet. In addition, although FCN8S does not have the above problem when extracting ground objects, its recall rate is not as good as that of CFFNet. For example, FCN8S does not fully recognize the water in the first image of Figure 4, does not fully recognize the agricultural areas in the second image, and does not recognize the roads and barren areas in the third image. When extracting natural elements in urban scenes, as shown in Rows 1–4 of Figure 4, CFFNet outperforms the other methods. When extracting artificial objects (e.g., roads), as shown in the first and third images of Figure 4, CFFNet outperforms the other methods in terms of the continuity of the extracted roads.

Figure 4.

Visualization results on the LoveDA validation set.

In addition, we conducted experiments to analyze the proposed modules. The following are the settings for the experiment.

- (1) Baseline: The baseline method employs CE loss optimization. The baseline uses a modified ResNet50 as the encoder. The baseline’s decoder was a multiclass segmentation variation of an FPN, as described in Section 2.2.2, with a 3 × 3 convolution applied after F1, F2, F3, and F4, respectively.

- (2) Baseline + FF: This is the baseline with the FF module and CE loss optimization. The 3 × 3 convolution added after F1, F2, F3, and F4, respectively, in the baseline was removed.

- (3) Baseline + CF: This is the baseline with the CF module and CE loss optimization.

- (4) Ours: This is the full CFFNet framework.

The performance of each module is shown in Table 5. The baseline’s low mIoU shows that it has a limited capacity to handle the semantic segmentation of multi-scale objects in HRRSIs. Notably, the FF module alone improves the mIoU and OA only slightly (Table 5). This is because, while the FF module can extract multi-scale features and handle multi-scale objects better, it does not further process these features, so features that are not very useful interfere with the segmentation results. It is also important to note that adding the CF module directly to the baseline reduces the mIoU, OA, and Kappa. Here, the CF module assigns weights to the baseline’s fused features, but these features are fused directly from features of different resolutions without any further extraction of multi-scale information, which results in worse performance when the CF module is added straight after the baseline. However, when the multi-scale information in the baseline’s fused features is extracted before the CF module is applied, that is, when the baseline is combined with both the FF and CF modules (namely, CFFNet), the performance of the model improves significantly, with a higher mIoU than both the baseline and the baseline with the FF module.

Table 5.

The performance of different modules. The highest score in each column is shown in bold.

4. Conclusions

This work proposes a context-driven feature-focusing network (CFFNet) for the semantic segmentation of HRRSIs. Specifically, the proposed network consists of three parts: a deep residual encoder, a CFF decoder, and a classifier. After extracting the deep semantic features using the deep residual encoder, the CFF decoder extracts the multi-scale and weighted features. A classifier is then employed to produce dense output. On two benchmark HRRSI datasets, comparative experiments showed that CFFNet outperformed SOTA DL-based semantic segmentation approaches, including FCN8S, UNet, PSPNet, LinkNet, DeepLabV3+, UNet++, PAN, FarSeg, and FactSeg. The effectiveness of each proposed module was demonstrated in ablation experiments.

Author Contributions

Conceptualization, Z.X.; methodology, X.T.; software, X.T.; validation, Z.W.; formal analysis, X.T.; investigation, X.T.; resources, Z.X.; data curation, Y.Z.; writing—original draft preparation, X.T.; writing—review and editing, D.L.; visualization, X.Q.; supervision, Z.X.; project administration, Z.X.; and funding acquisition, Z.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by “Science and Technology Project of National Bio Energy Co., Ltd.”, No. 52789921001M.

Data Availability Statement

Datasets relevant to our paper are available online.

Acknowledgments

The numerical calculations in this paper have been done on the supercomputing system in the Supercomputing Center of Wuhan University.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cheng, X.; He, X.; Qiao, M.; Li, P.; Hu, S.; Chang, P.; Tian, Z. Enhanced contextual representation with deep neural networks for land cover classification based on remote sensing images. Int. J. Appl. Earth Obs. Geoinf. 2022, 107, 102706.

- Wang, L.; Zhang, C.; Li, R.; Duan, C.; Meng, X.; Atkinson, P.M. Scale-Aware Neural Network for Semantic Segmentation of Multi-Resolution Remote Sensing Images. Remote Sens. 2021, 13, 5015.

- Li, R.; Zheng, S.; Duan, C.; Wang, L.; Zhang, C. Land cover classification from remote sensing images based on multi-scale fully convolutional network. Geo-Spat. Inf. Sci. 2022, 25, 1–17.

- Li, N.; Huo, H.; Fang, T. A Novel Texture-Preceded Segmentation Algorithm for High-Resolution Imagery. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2818–2828.

- Vapnik, V.; Chervonenkis, A. A note on one class of perceptrons. Autom. Remote Control 1964, 25, 788–792.

- Pavlov, Y.L. Random Forests. Karelian Cent. Russ. Acad. Sci. Petrozavodsk. 1997, 45, 5–32.

- Lowe, D. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91.

- Teichmann, M.; Araujo, A.; Zhu, M.; Sim, J. Detect-to-Retrieve: Efficient Regional Aggregation for Image Search. CoRR 2018, arXiv:1812.01584.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Li, R.; Zheng, S.; Duan, C.; Yang, Y.; Wang, X. Classification of Hyperspectral Image Based on Double-Branch Dual-Attention Mechanism Network. Remote Sens. 2020, 12, 582.

- Shao, Z.; Zhou, W.; Deng, X.; Zhang, M.; Cheng, Q. Multilabel Remote Sensing Image Retrieval Based on Fully Convolutional Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 318–328.

- Gao, L.; Liu, H.; Yang, M.; Chen, L.; Wan, Y.; Xiao, Z.; Qian, Y. STransFuse: Fusing Swin Transformer and Convolutional Neural Network for Remote Sensing Image Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10990–11003.

- Li, R.; Zheng, S.; Zhang, C.; Duan, C.; Su, J.; Wang, L.; Atkinson, P.M. Multiattention Network for Semantic Segmentation of Fine-Resolution Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Lin, G.; Milan, A.; Shen, C.; Reid, I. RefineNet: Multi-path Refinement Networks for High-Resolution Semantic Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5168–5177.

- Tan, X.; Xiao, Z.; Wan, Q.; Shao, W. Scale Sensitive Neural Network for Road Segmentation in High-Resolution Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2021, 18, 533–537.

- Li, R.; Wang, L.; Zhang, C.; Duan, C.; Zheng, S. A2-FPN for semantic segmentation of fine-resolution remotely sensed images. Int. J. Remote Sens. 2022, 43, 1131–1155.

- Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. BiSeNet: Bilateral Segmentation Network for Real-Time Semantic Segmentation. In Proceedings of the Computer Vision – ECCV 2018, Munich, Germany, 10–13 September; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 334–349.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Ronneberger, O. Invited Talk: U-Net Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Bildverarbeitung für die Medizin 2017, Heidelberg, Germany, 12–14 March 2017; Maier-Hein, G., Fritzsche, K.H., Deserno, G., Lehmann, T.M., Handels, H., Tolxdorff, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2017; p. 3.

- Zhao, H.; Qi, X.; Shen, X.; Shi, J.; Jia, J. ICNet for Real-Time Semantic Segmentation on High-Resolution Images. In Proceedings of the Computer Vision – ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 418–434.

- Huang, H.; Lin, L.; Tong, R.; Hu, H.; Zhang, Q.; Iwamoto, Y.; Han, X.; Chen, Y.W.; Wu, J. UNet 3+: A Full-Scale Connected UNet for Medical Image Segmentation. In Proceedings of the ICASSP 2020 – 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 1055–1059.

- Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support, Proceedings of the 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 20 September 2018; Stoyanov, D., Taylor, Z., Carneiro, G., Syeda-Mahmood, T., Martel, A., Maier-Hein, L., Tavares, J.M.R., Bradley, A., Papa, J.P., Belagiannis, V., et al., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 3–11.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

- Zhang, H.; Dana, K.; Shi, J.; Zhang, Z.; Wang, X.; Tyagi, A.; Agrawal, A. Context Encoding for Semantic Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7151–7160.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

- Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial Transformer Networks. In Advances in Neural Information Processing Systems, Proceedings of the Annual Conference on Neural Information Processing Systems 2015, Montreal, QC, Canada, 8–12 December 2015; Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2015; Volume 28, p. 28.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Advances in Neural Information Processing Systems 30, Proceedings of the Annual Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Wu, W.; Zhang, Y.; Wang, D.; Leo, Y. SK-Net: Deep Learning on Point Cloud via End-to-End Discovery of Spatial Keypoints. In Proceedings of the Thirty-Fourth AAAI Conference On Artificial Intelligence, The Thirty-Second Innovative Applications of Artificial Intelligence Conference and the Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, ASSOC Advancement Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11531–11539.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the Computer Vision – ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 3–19.

- Chen, Y.; Kalantidis, Y.; Li, J.; Yan, S.; Feng, J. A(2)-Nets: Double Attention Networks. In Advances in Neural Information Processing Systems 31, (NIPS 2018), Proceedings of the 32nd Conference on Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 2–8 December 2018; Bengio, S., Wallach, H., Larochelle, H., Grauman, K., CesaBianchi, N., Garnett, R., Eds.; Curran Publishing: Red Hook, NY, USA, 2018; Volume 31, p. 31.

- Roy, A.G.; Navab, N.; Wachinger, C. Concurrent Spatial and Channel ‘Squeeze & Excitation’ in Fully Convolutional Networks. In Proceedings of the Medical Image Computing and Computer Assisted Intervention – MICCAI 2018, Granada, Spain, 16–20 September 2018; Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 421–429.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-Local Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7794–7803.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual Attention Network for Scene Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3141–3149.

- Huang, Z.; Wang, X.; Huang, L.; Huang, C.; Wei, Y.; Liu, W. CCNet: Criss-Cross Attention for Semantic Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Long Beach, CA, USA, 15–20 June 2019; pp. 603–612.

- Lin, X.; Guo, Y.; Wang, J. Global Correlation Network: End-to-End Joint Multi-Object Detection and Tracking. arXiv 2021, arXiv:2103.12511.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the Computer Vision – ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 833–851.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Nair, V.; Hinton, G.E. Rectified linear units improve Restricted Boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 807–814.

- Wang, J.; Zheng, Z.; Ma, A.; Lu, X.; Zhong, Y. LoveDA: A Remote Sensing Land-Cover Dataset for Domain Adaptive Semantic Segmentation. arXiv 2021, arXiv:2110.08733.

- Tong, X.Y.; Xia, G.S.; Lu, Q.; Shen, H.; Li, S.; You, S.; Zhang, L. Land-cover classification with high-resolution remote sensing images using transferable deep models. Remote Sens. Environ. 2020, 237, 111322.

- Li, H.; Xiong, P.; An, J.; Wang, L. Pyramid Attention Network for Semantic Segmentation. arXiv 2018, arXiv:1805.10180.

- Chaurasia, A.; Culurciello, E. LinkNet: Exploiting Encoder Representations for Efficient Semantic Segmentation. In Proceedings of the 2017 IEEE Visual Communications and Image Processing (VCIP), St. Petersburg, FL, USA, 10–13 December 2017.

- Zheng, Z.; Zhong, Y.; Wang, J.; Ma, A. Foreground-Aware Relation Network for Geospatial Object Segmentation in High Spatial Resolution Remote Sensing Imagery. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4095–4104.

- Ma, A.; Wang, J.; Zhong, Y.; Zheng, Z. FactSeg: Foreground Activation-Driven Small Object Semantic Segmentation in Large-Scale Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).