UAV-Based Remote Sensing for Detection and Visualization of Partially-Exposed Underground Structures in Complex Archaeological Sites

Abstract

1. Introduction

The main objectives of this study are as follows:

- Develop a UAV-based remote sensing platform for acquiring image and LiDAR data to document isolated, complex archaeological sites rich in underground structures, such as cisterns and building basements.

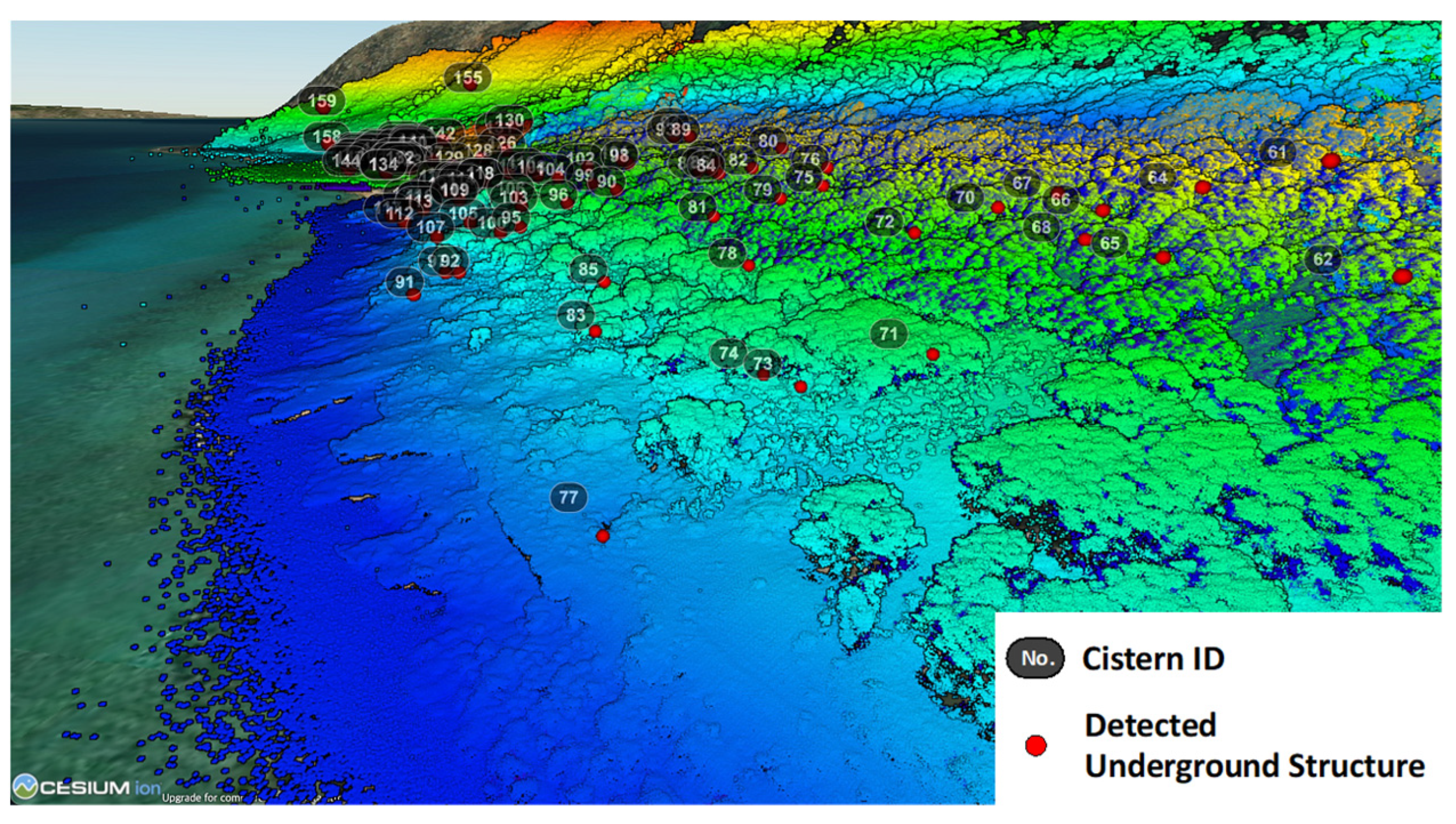

- Develop a robust terrain model generation strategy that can handle rugged terrain with sudden elevation changes, dense vegetation cover, and/or the presence of underground structures.

- Develop a detection strategy for identifying underground structures in LiDAR point clouds.

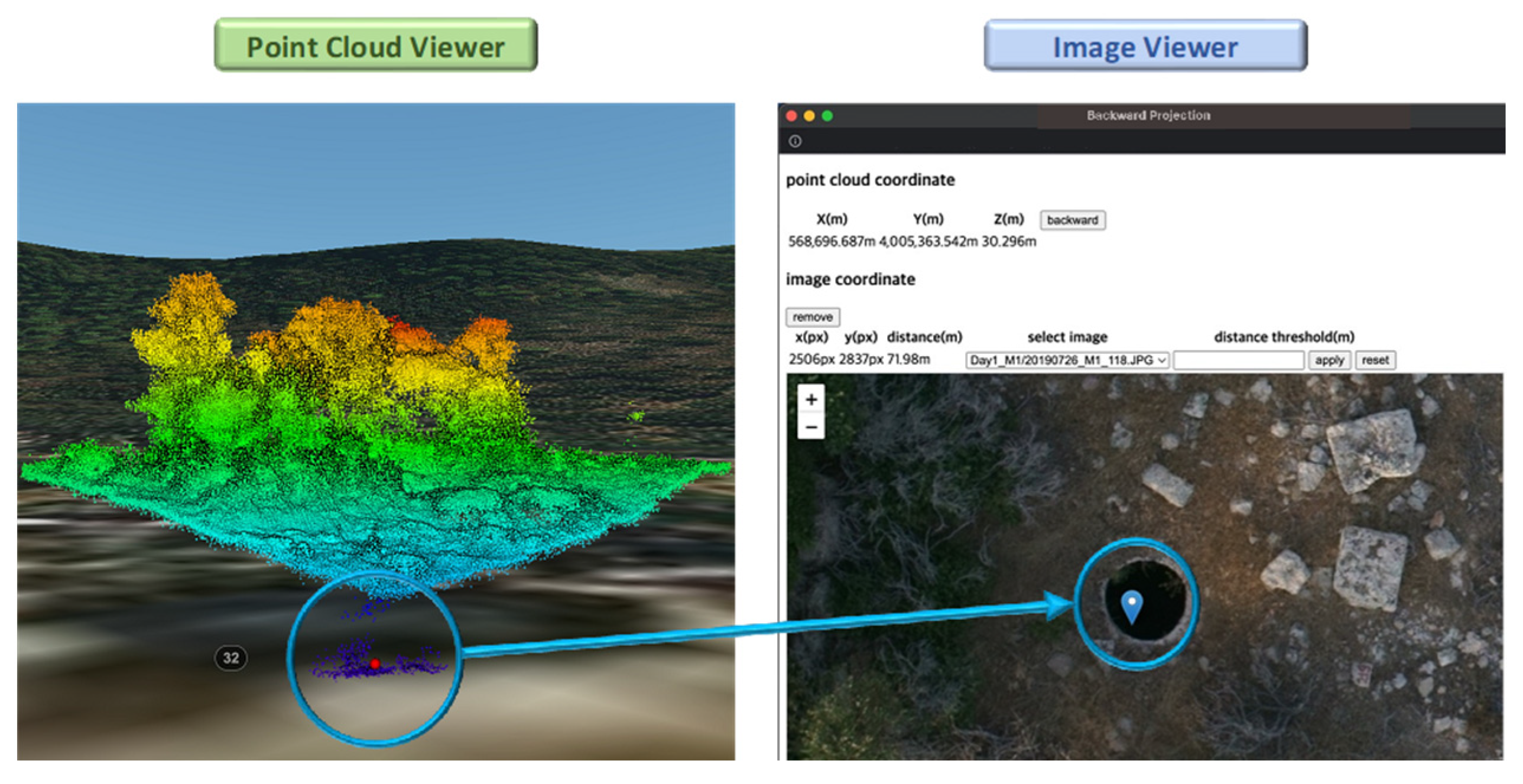

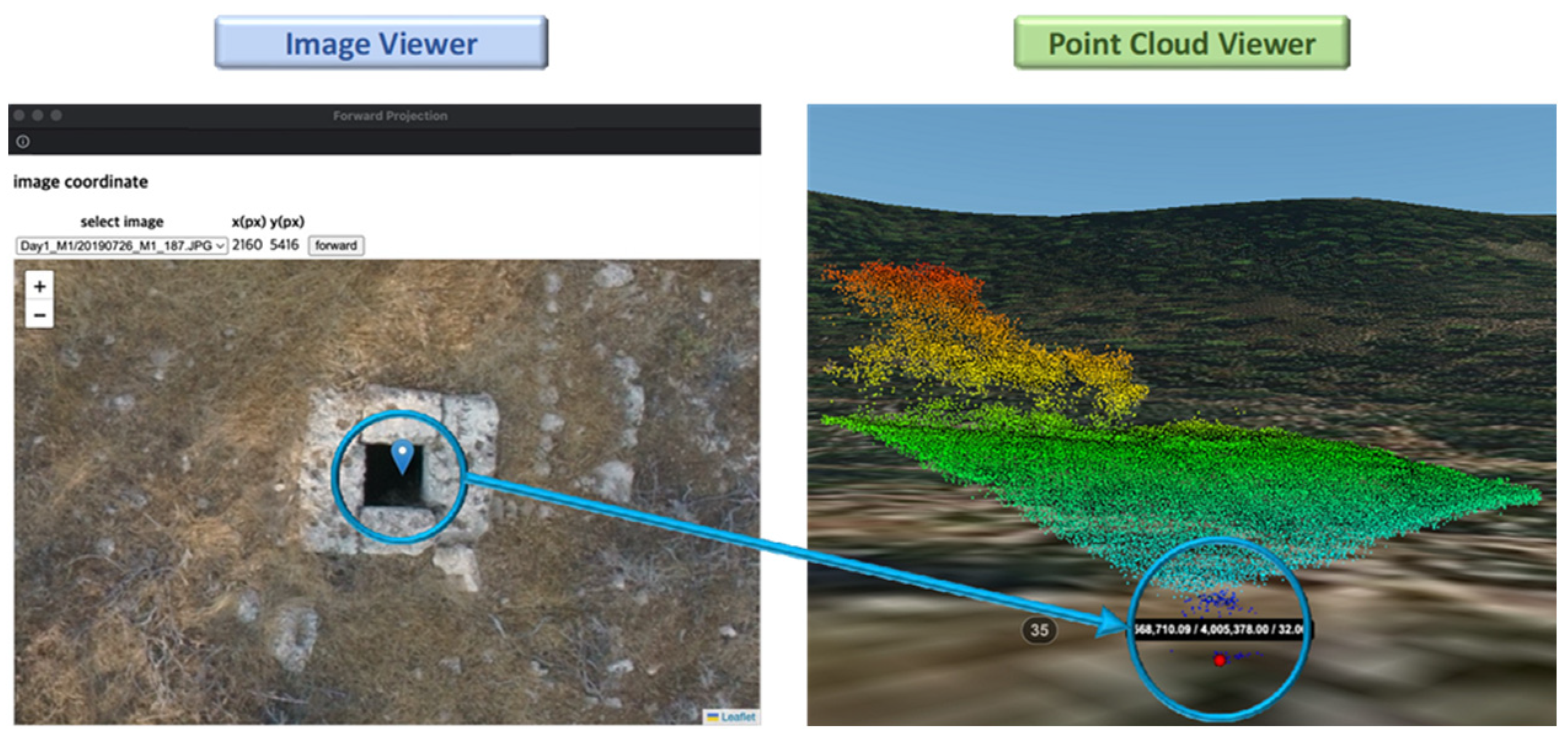

- Develop a web-based visualization portal that displays image and LiDAR data together with derived products and lets end users switch easily between the imagery and LiDAR views.

- Demonstrate the performance of the developed strategies using real datasets captured over a complex archaeological site.
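The terrain-modelling objective is easier to appreciate against a naive baseline. The sketch below is a minimal grid-minimum ground filter: in each square cell it keeps the points within a height tolerance of the lowest return and stores that lowest ground elevation as a raster DTM. It is a deliberately simple stand-in, not the strategy developed in this work and not the cloth simulation filter cited in the references; `cell_size`, `height_tol`, and all function names are hypothetical.

```python
import math
from collections import defaultdict

Point = tuple[float, float, float]  # (x, y, z) in metres, mapping frame
Cell = tuple[int, int]


def cell_key(x: float, y: float, cell_size: float) -> Cell:
    """Index of the square grid cell containing (x, y)."""
    return (math.floor(x / cell_size), math.floor(y / cell_size))


def grid_min_ground_filter(points: list[Point], cell_size: float = 1.0,
                           height_tol: float = 0.3) -> tuple[list[Point], list[Point]]:
    """Split points into (ground, non_ground).

    A point counts as ground if it lies within height_tol of the lowest
    point in its grid cell. The defaults are illustrative assumptions,
    not values taken from the paper.
    """
    cells: dict[Cell, list[Point]] = defaultdict(list)
    for p in points:
        cells[cell_key(p[0], p[1], cell_size)].append(p)

    ground: list[Point] = []
    non_ground: list[Point] = []
    for cell_points in cells.values():
        z_min = min(p[2] for p in cell_points)
        for p in cell_points:
            (ground if p[2] - z_min <= height_tol else non_ground).append(p)
    return ground, non_ground


def build_dtm(ground: list[Point], cell_size: float = 1.0) -> dict[Cell, float]:
    """Raster DTM: lowest ground elevation per occupied cell (no interpolation)."""
    dtm: dict[Cell, float] = {}
    for x, y, z in ground:
        key = cell_key(x, y, cell_size)
        dtm[key] = min(z, dtm.get(key, math.inf))
    return dtm


# Usage: two 1 m cells; the 12.0 m return (e.g. vegetation) is rejected.
pts = [(0.2, 0.2, 10.0), (0.5, 0.5, 10.1), (0.7, 0.3, 12.0),
       (1.5, 0.5, 11.0), (1.6, 0.6, 11.2)]
ground, non_ground = grid_min_ground_filter(pts)
dtm = build_dtm(ground)
```

The baseline also shows why underground structures are troublesome: in a cell containing an open cistern, the lowest return may come from the cistern floor, so the surrounding ground surface would fall outside `height_tol` and be discarded, pulling the terrain model down into the opening.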

2. Data Acquisition System, Study Site, and Dataset Description

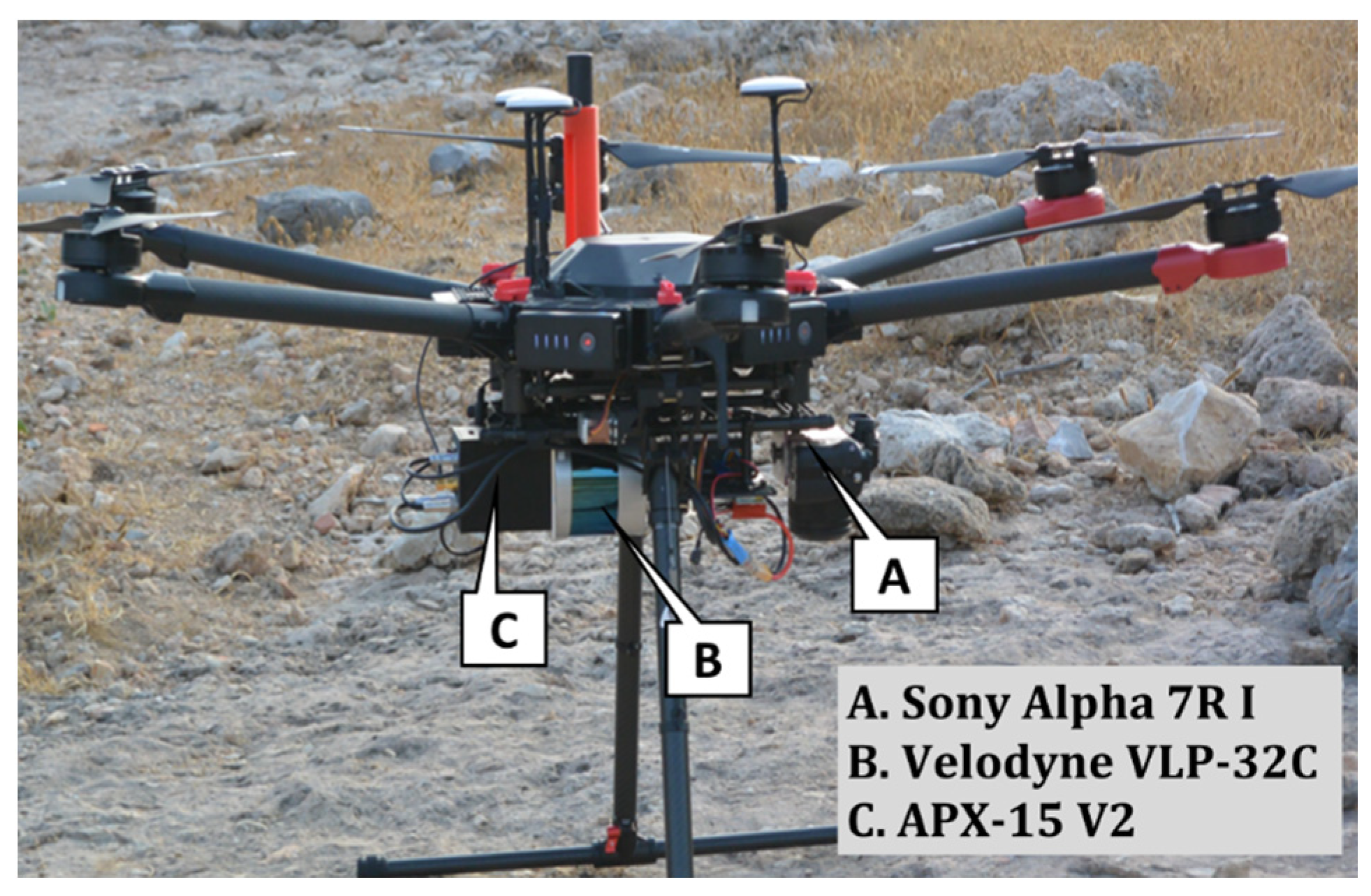

2.1. UAV-Based Mobile Mapping System

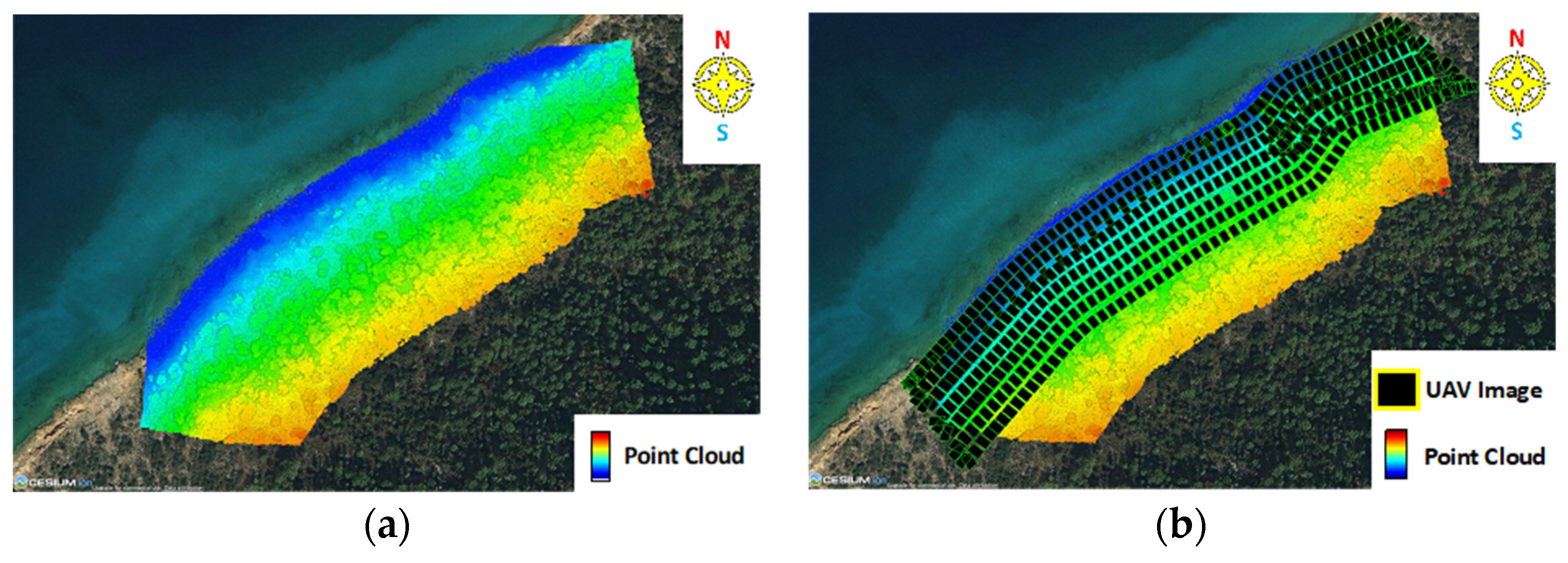

2.2. Study Site and Dataset Description

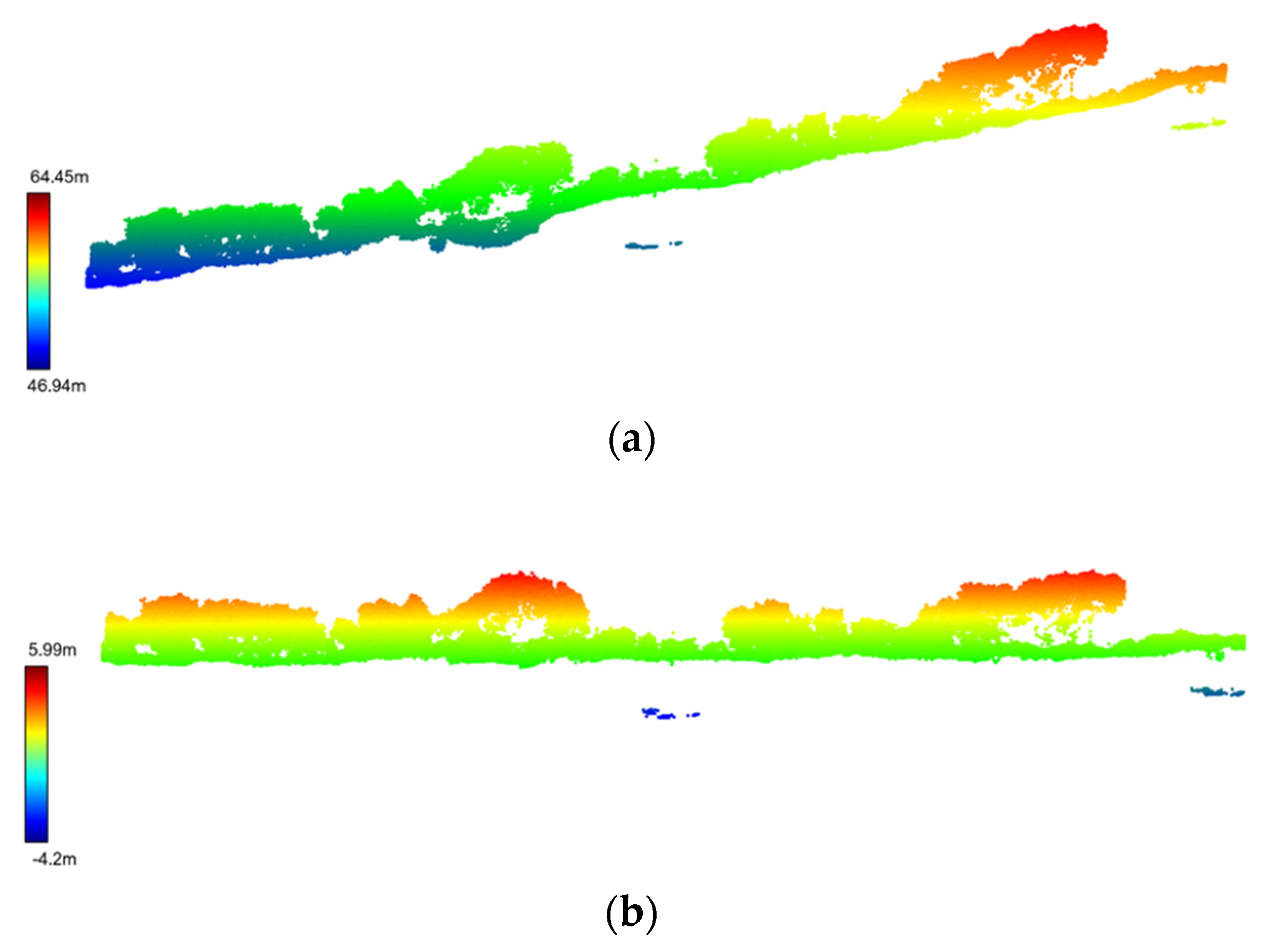

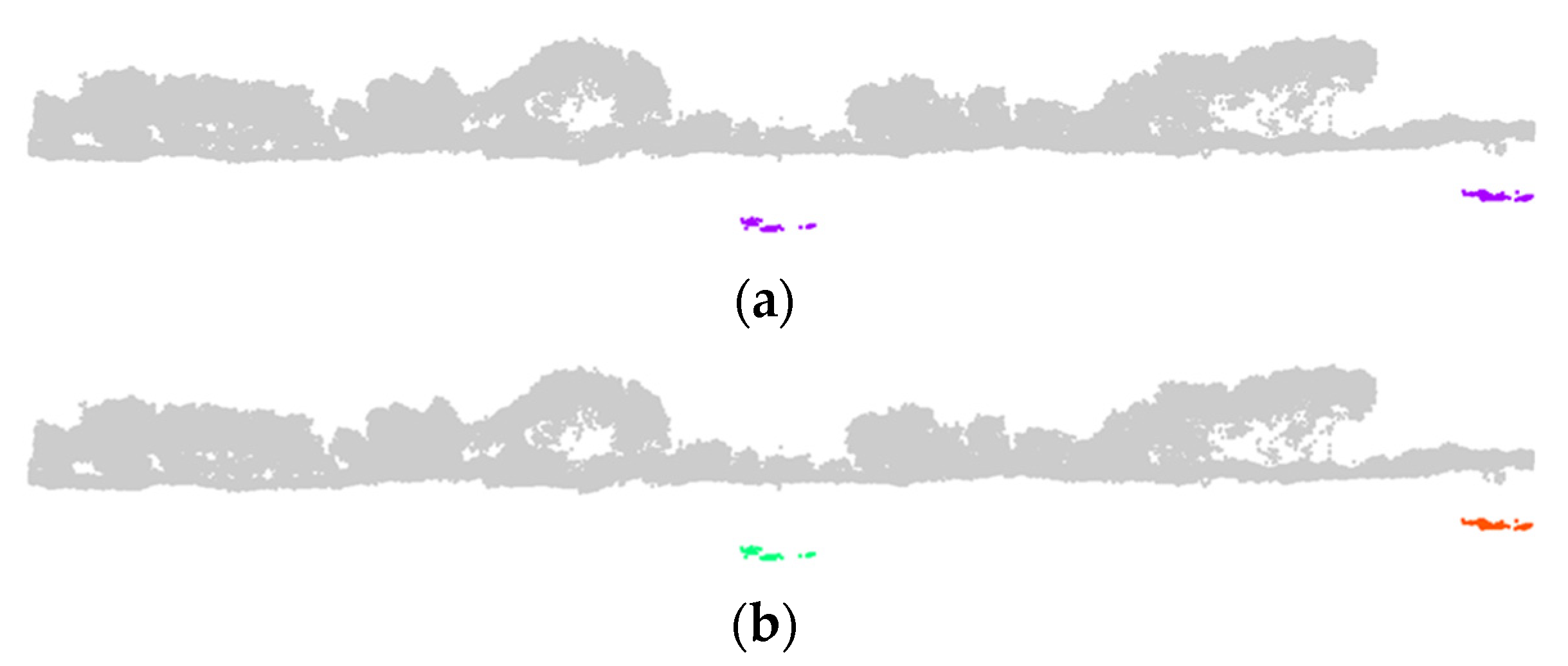

3. Methodology

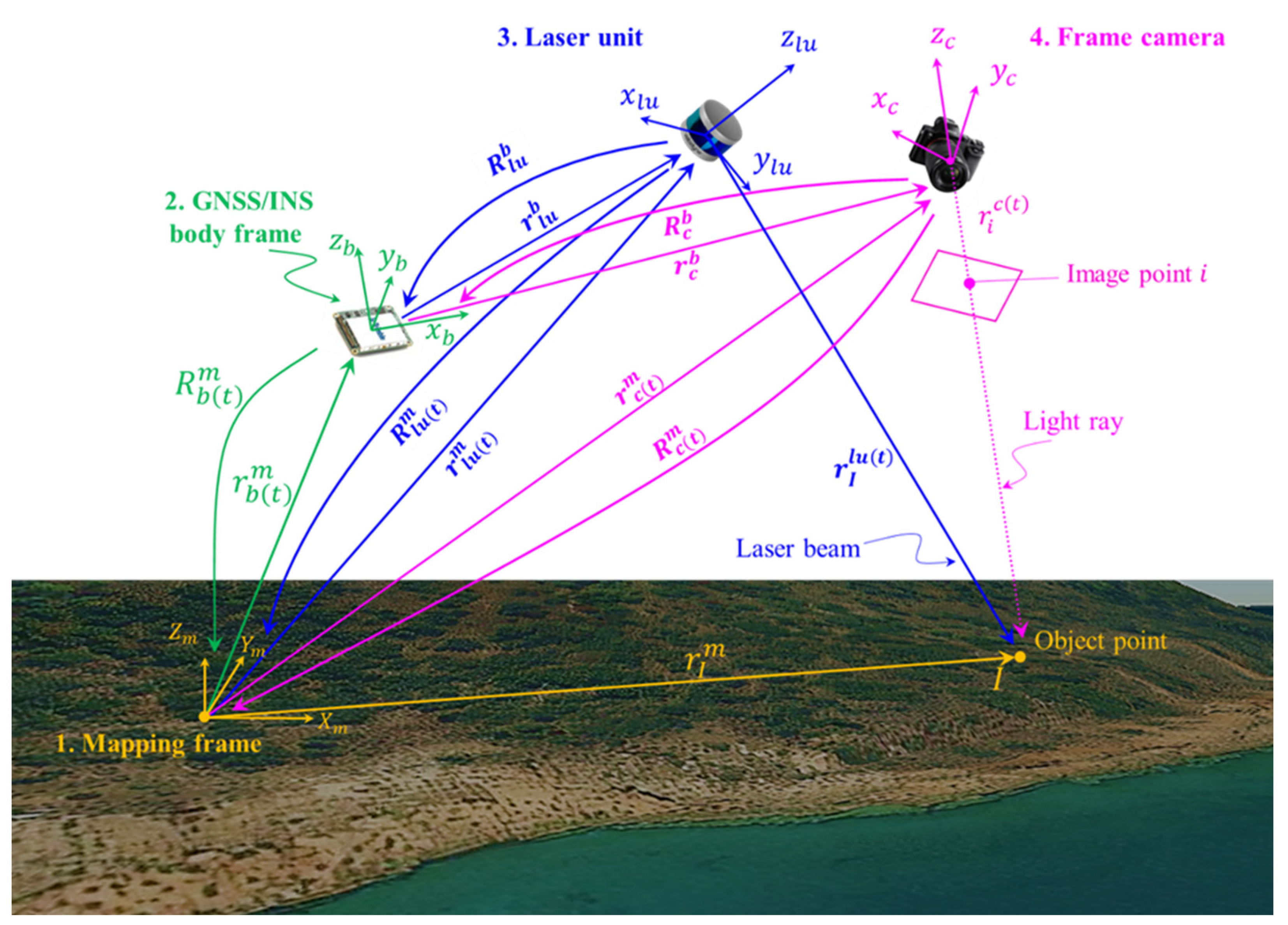

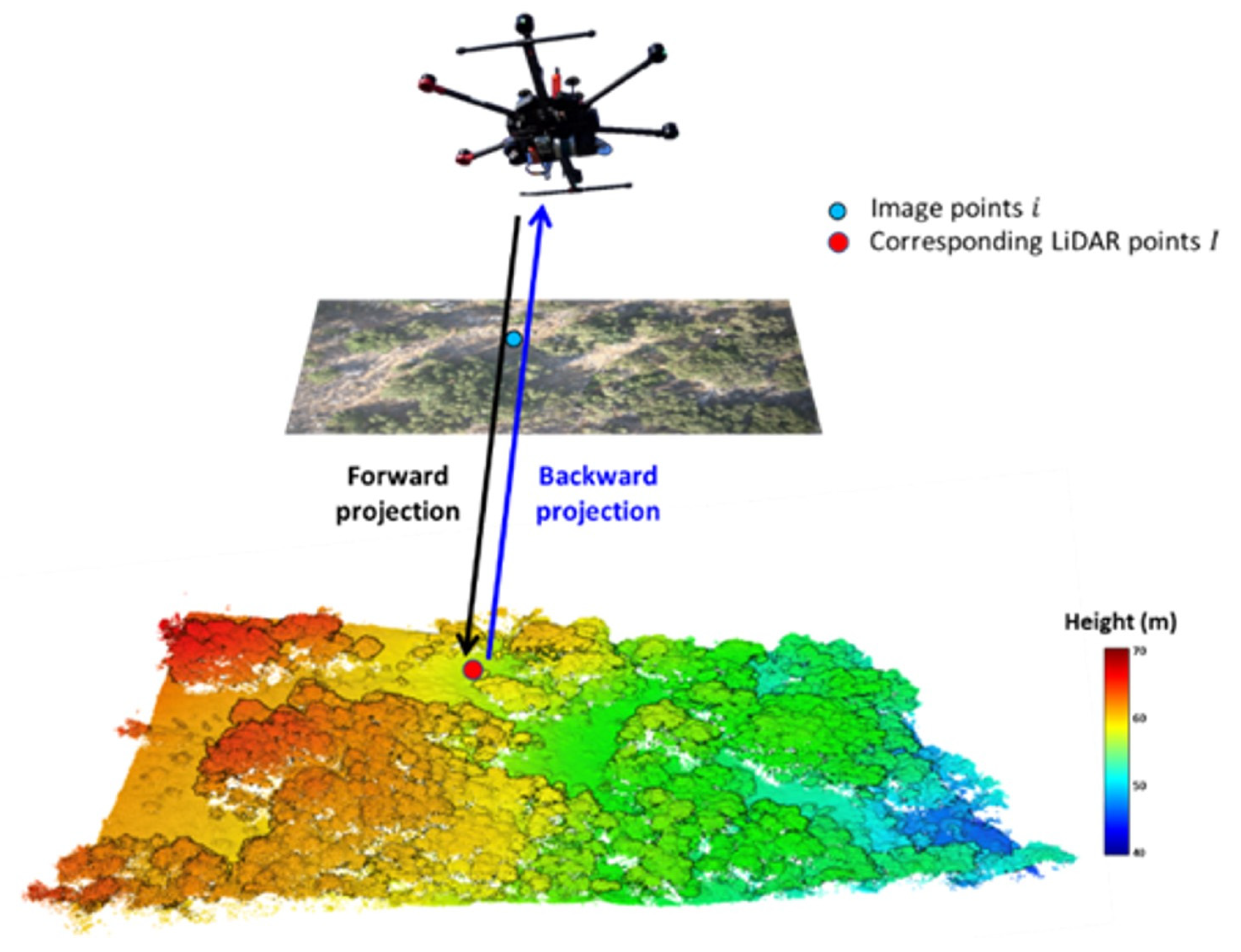

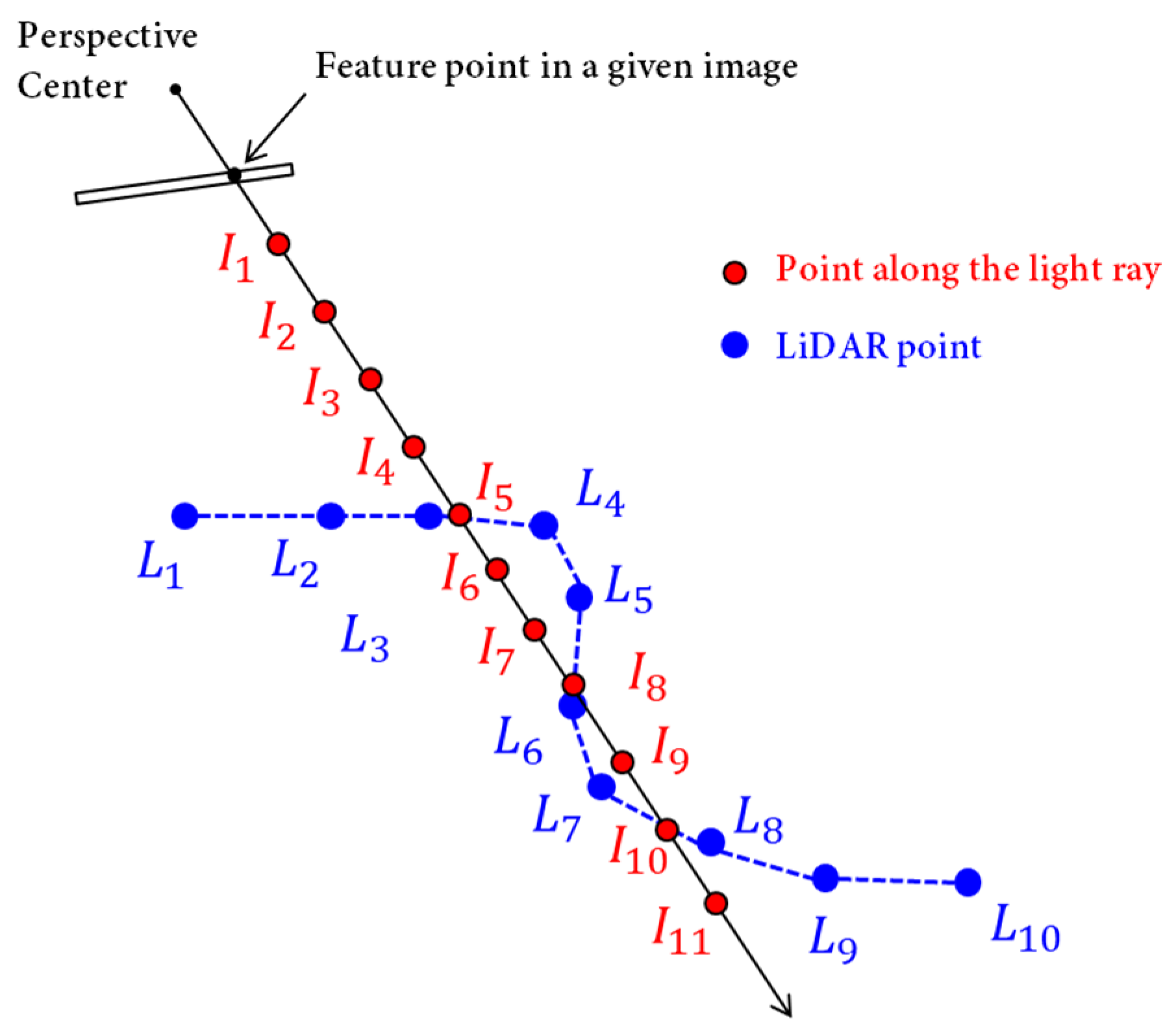

3.1. Point Positioning Equations for GNSS/INS-Assisted LiDAR and Imaging Systems

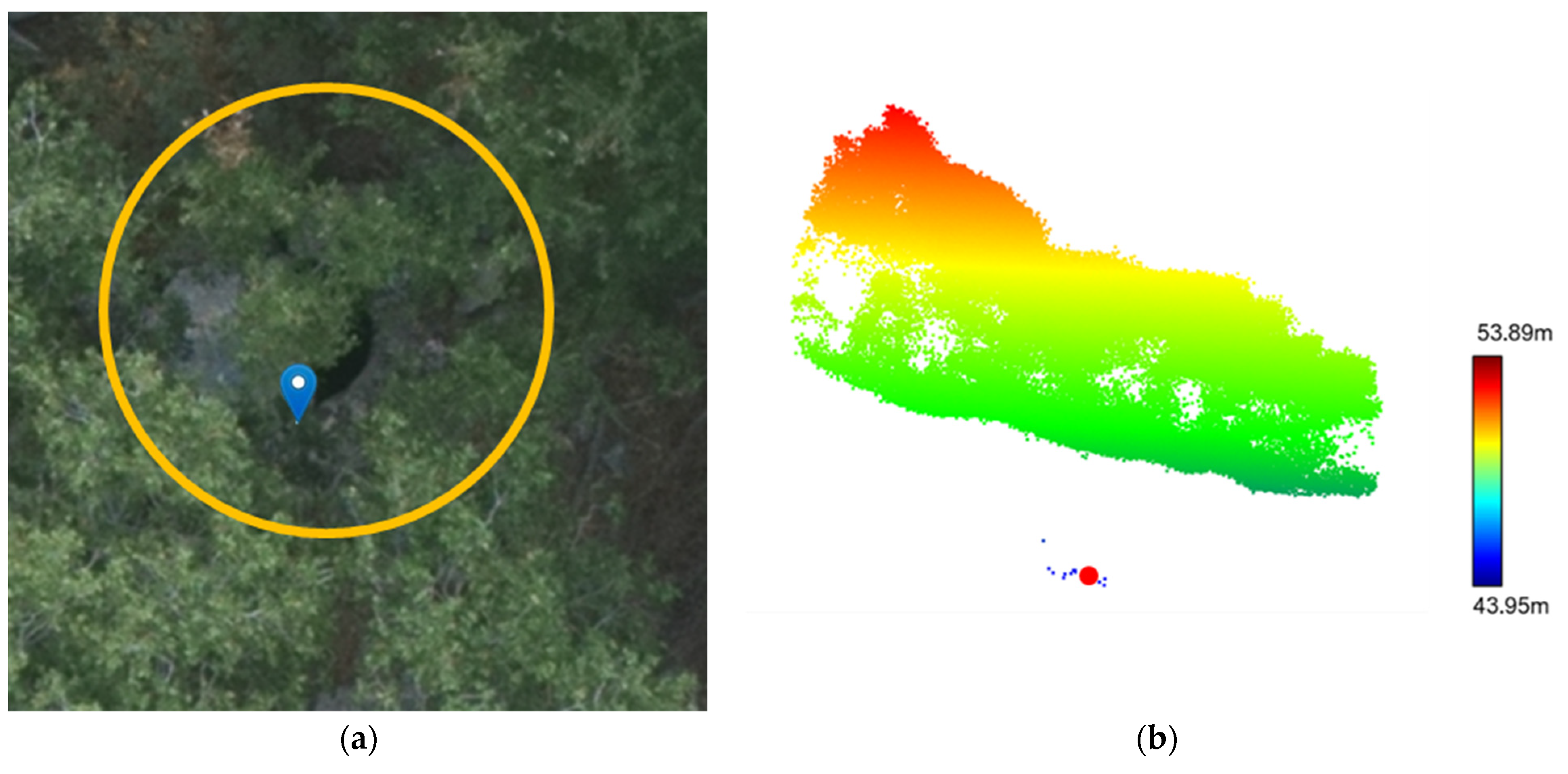

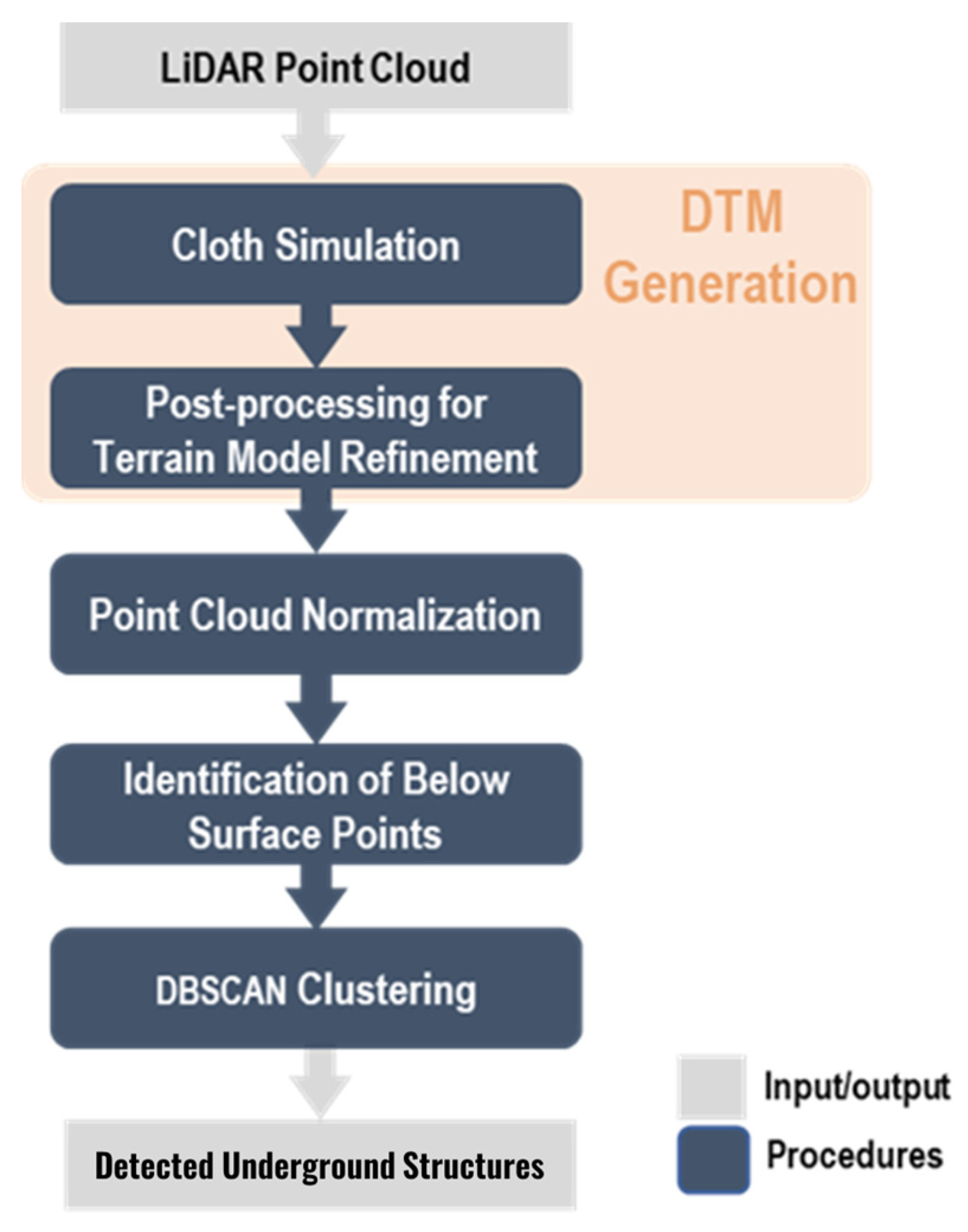
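For a LiDAR unit on a GNSS/INS-equipped platform, a commonly used form of the point positioning equation is `r_I^m = r_b^m(t) + R_b^m(t) r_lu^b + R_b^m(t) R_lu^b r_I^lu(t)`. Here `r_b^m(t)` and `R_b^m(t)` are the GNSS/INS-derived position and orientation of the IMU body frame in the mapping frame at time t, `r_lu^b` and `R_lu^b` are the lever arm and boresight rotation of the LiDAR unit relative to the body frame (the mounting parameters estimated through system calibration), and `r_I^lu(t)` is the point as measured in the LiDAR frame. The sketch below evaluates that form in plain Python; the symbol names and the roll/pitch/yaw rotation convention are assumptions and may differ from the notation of this section.

```python
import math

Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]


def rot_zyx(roll: float, pitch: float, yaw: float) -> Mat3:
    """R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians.

    Assumed convention for illustration; real GNSS/INS and LiDAR systems
    document their own angle definitions.
    """
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return (
        (cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr),
        (sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr),
        (-sp, cp * sr, cp * cr),
    )


def mat_vec(m: Mat3, v: Vec3) -> Vec3:
    """3x3 matrix times 3-vector."""
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(3))


def mat_mul(a: Mat3, b: Mat3) -> Mat3:
    """3x3 matrix product a @ b."""
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3))
                 for i in range(3))


def add(*vs: Vec3) -> Vec3:
    """Component-wise sum of 3-vectors."""
    return tuple(sum(c) for c in zip(*vs))


def georeference_lidar_point(r_b_m: Vec3, R_b_m: Mat3, r_lu_b: Vec3,
                             R_lu_b: Mat3, r_I_lu: Vec3) -> Vec3:
    """r_I^m = r_b^m(t) + R_b^m(t) r_lu^b + R_b^m(t) R_lu^b r_I^lu(t)."""
    return add(r_b_m,
               mat_vec(R_b_m, r_lu_b),
               mat_vec(mat_mul(R_b_m, R_lu_b), r_I_lu))


# Usage: level platform, 10 cm lever arm, point 30 m straight below the LiDAR.
I = rot_zyx(0.0, 0.0, 0.0)
p = georeference_lidar_point((100.0, 200.0, 50.0), I, (0.1, 0.0, -0.2), I,
                             (0.0, 0.0, -30.0))
```

Keeping the lever arm and boresight as separate arguments mirrors the structure of the equation: they are fixed per system after calibration, while the GNSS/INS terms change with every timestamp.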

3.2. Underground Structure Detection

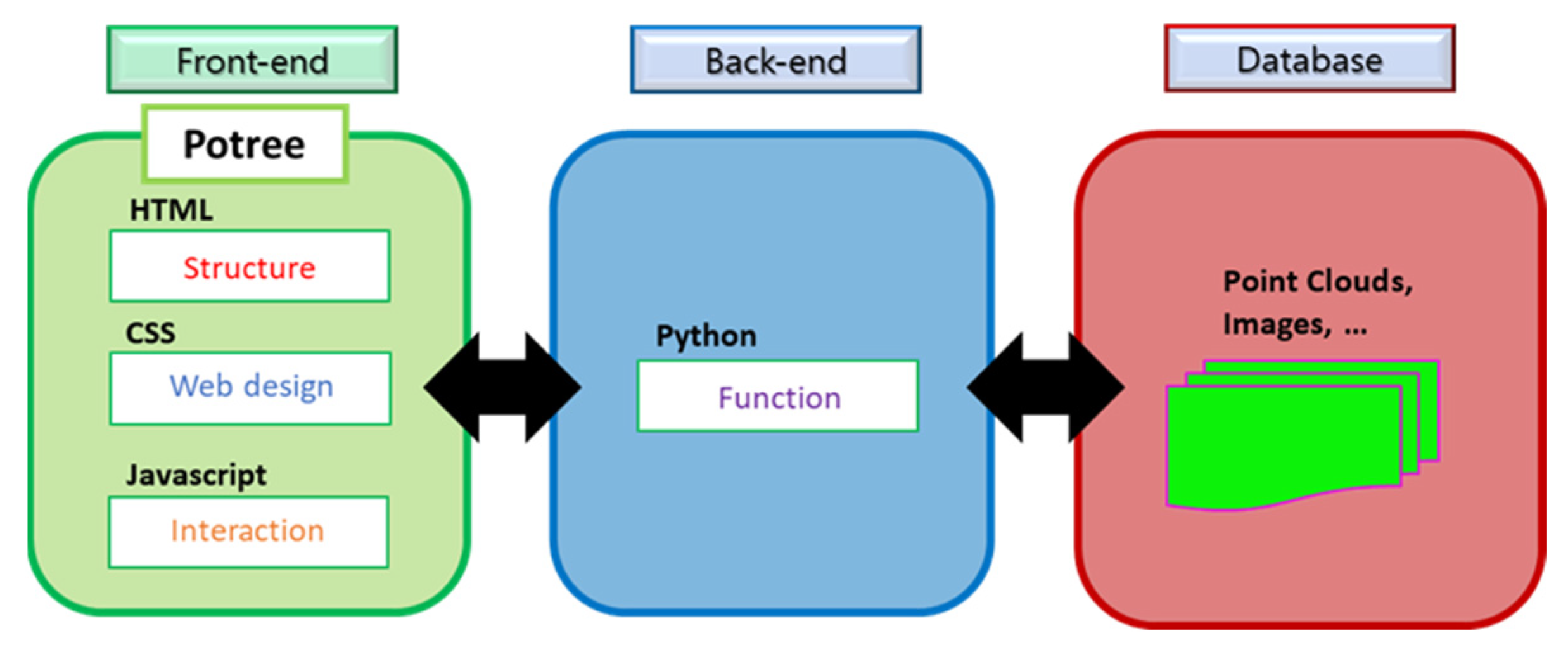

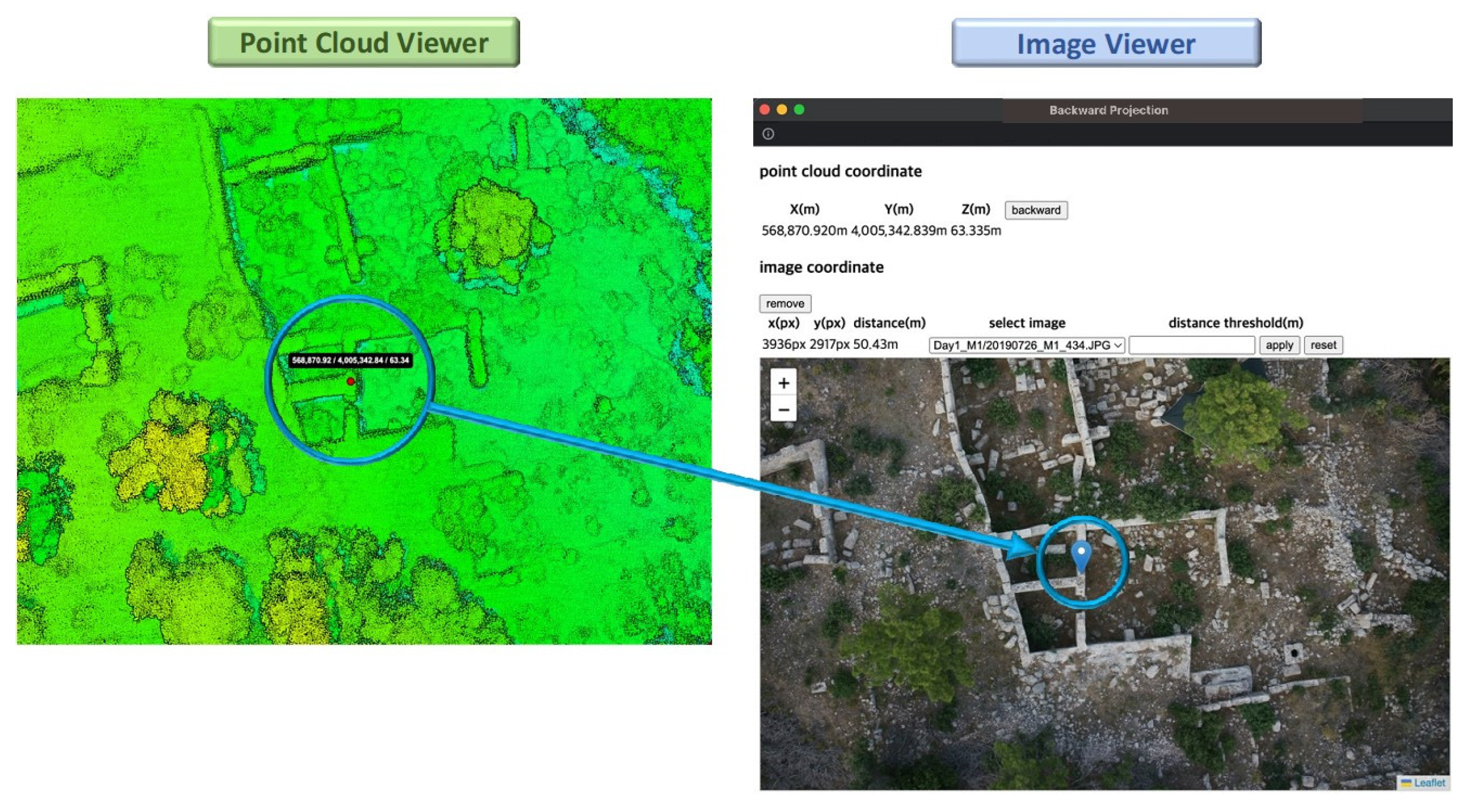

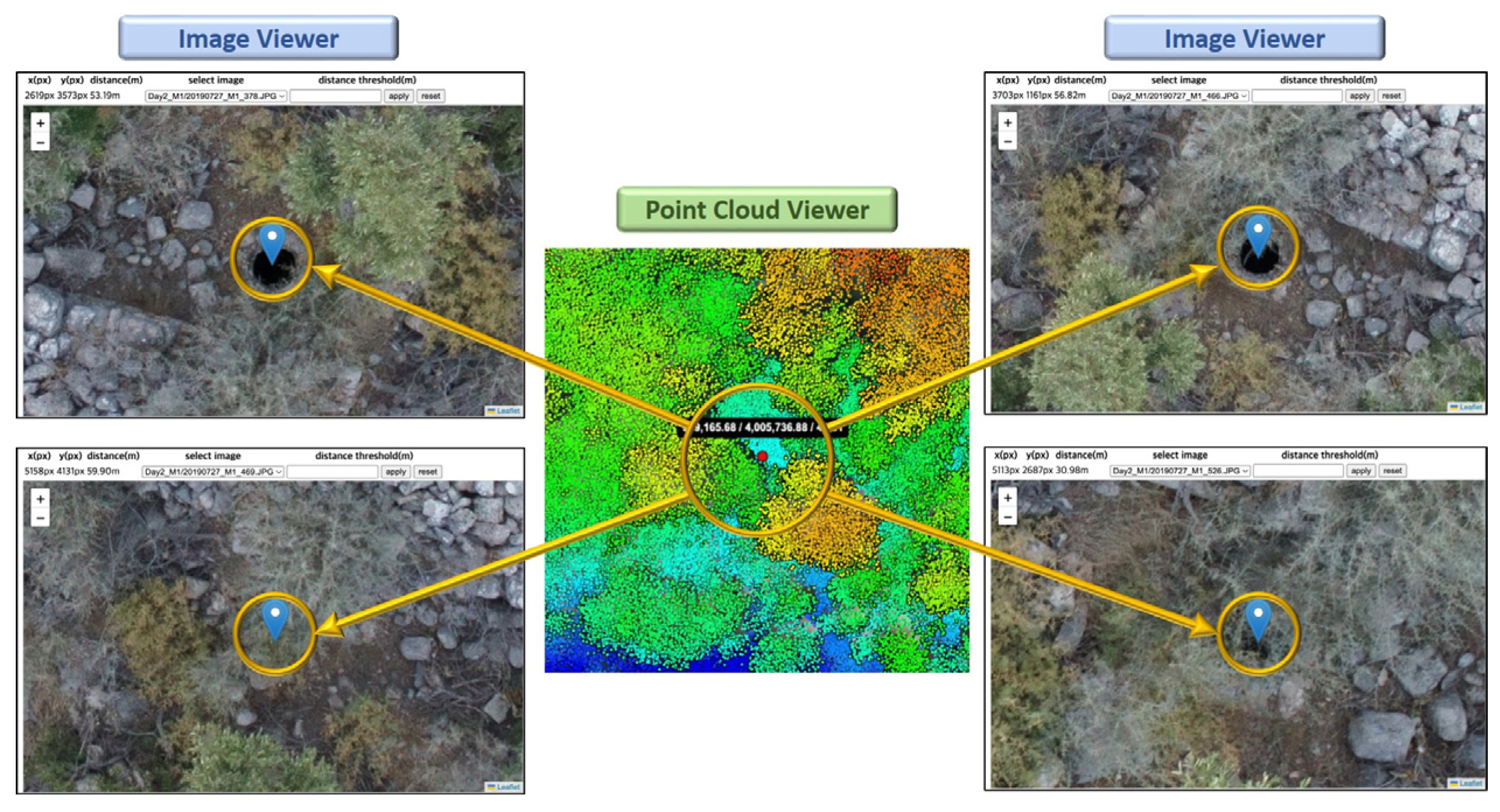
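One simple way to flag candidate underground structures, assuming a terrain model that bridges over openings rather than following returns down into them, is to keep LiDAR points that lie well below the terrain surface and then group them spatially. The sketch below does this with a depth threshold followed by DBSCAN clustering (which appears in the reference list) on the x, y coordinates. It illustrates the idea under those assumptions and is not the authors' detection strategy; `depth_tol`, `eps`, `min_pts`, the dictionary-based DTM, and all function names are hypothetical.

```python
import math
from typing import Optional

Point = tuple[float, float, float]
Cell = tuple[int, int]


def below_terrain(points: list[Point], dtm: dict[Cell, float],
                  cell_size: float, depth_tol: float) -> list[Point]:
    """Keep points lying more than depth_tol metres below the DTM of their cell."""
    low = []
    for x, y, z in points:
        ref = dtm.get((math.floor(x / cell_size), math.floor(y / cell_size)))
        if ref is not None and ref - z > depth_tol:
            low.append((x, y, z))
    return low


def dbscan_2d(pts: list[Point], eps: float, min_pts: int) -> list[int]:
    """Textbook DBSCAN on planimetric (x, y) coordinates; label -1 marks noise.

    Uses an O(n^2) neighbour search, which is fine for a sketch; real point
    clouds need a spatial index such as a k-d tree or an octree.
    """
    n = len(pts)
    labels: list[Optional[int]] = [None] * n

    def neighbours(i: int) -> list[int]:
        xi, yi = pts[i][0], pts[i][1]
        return [j for j in range(n)
                if math.hypot(pts[j][0] - xi, pts[j][1] - yi) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        k = 0
        while k < len(seeds):
            j = seeds[k]
            k += 1
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_nbrs = neighbours(j)
            if len(j_nbrs) >= min_pts:  # core point: grow the cluster
                seeds.extend(j_nbrs)
    return [lab if lab is not None else -1 for lab in labels]


def detect_candidates(points: list[Point], dtm: dict[Cell, float],
                      cell_size: float = 1.0, depth_tol: float = 1.0,
                      eps: float = 0.5, min_pts: int = 3) -> dict[int, list[Point]]:
    """Group below-terrain points into candidate structures {cluster_id: points}."""
    low = below_terrain(points, dtm, cell_size, depth_tol)
    clusters: dict[int, list[Point]] = {}
    for p, lab in zip(low, dbscan_2d(low, eps, min_pts)):
        if lab != -1:
            clusters.setdefault(lab, []).append(p)
    return clusters


# Usage: two sunken groups, one surface point, one isolated low return.
pts = [(0.1, 0.1, 7.0), (0.2, 0.1, 7.0), (0.1, 0.2, 7.0),
       (5.1, 5.1, 6.0), (5.2, 5.1, 6.0), (5.1, 5.2, 6.0),
       (0.5, 0.5, 10.0), (20.0, 20.0, 5.0)]
dtm = {(0, 0): 10.0, (5, 5): 10.0, (20, 20): 10.0}
candidates = detect_candidates(pts, dtm)
```

Clustering in x, y rather than x, y, z treats all returns from one opening (rim, walls, floor) as a single footprint, which is a design assumption; the isolated low return is left as noise because it has too few neighbours.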

3.3. Web-Visualization Portal

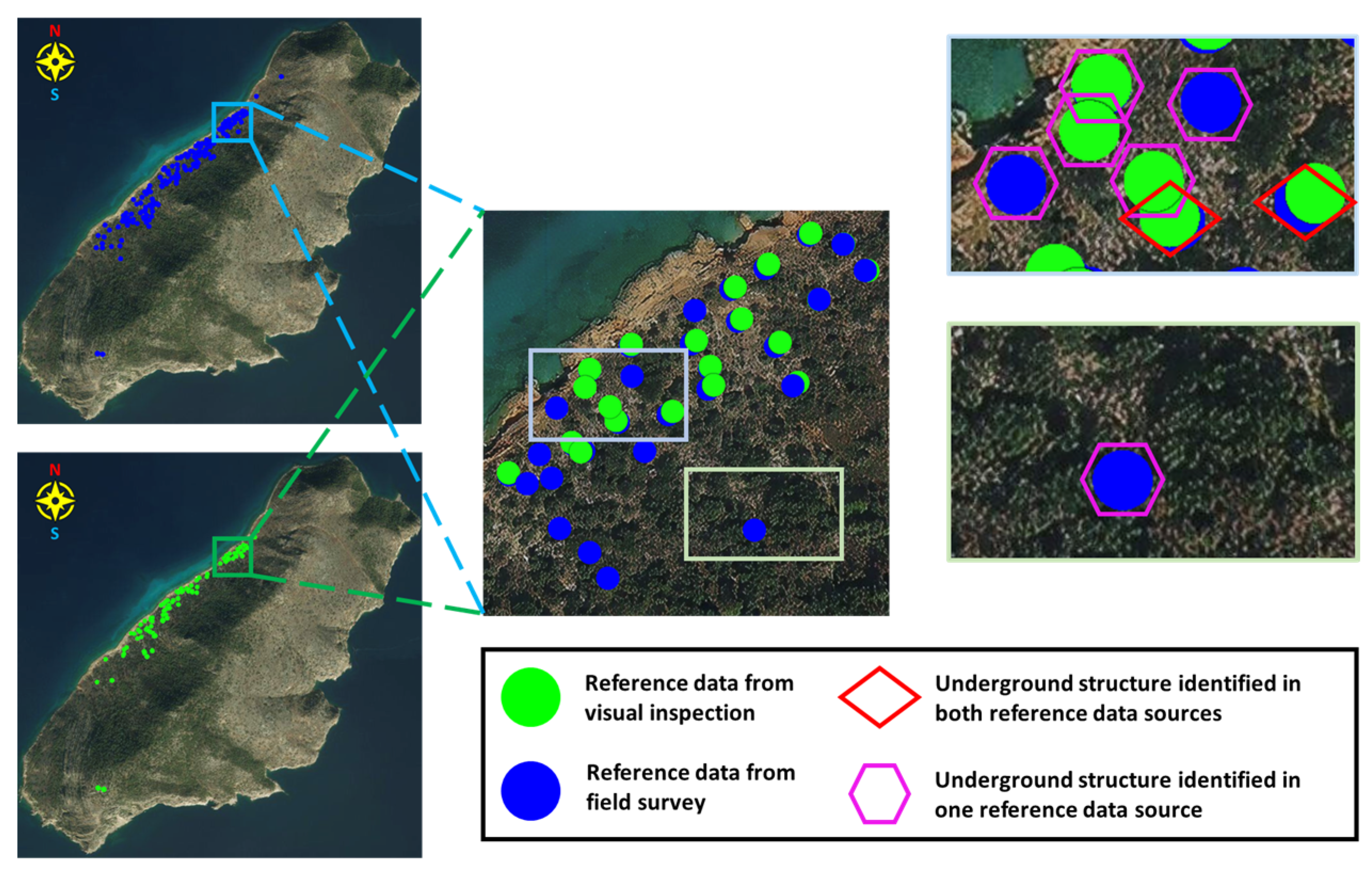

3.4. Establishing a Reference Dataset and Accuracy Assessment

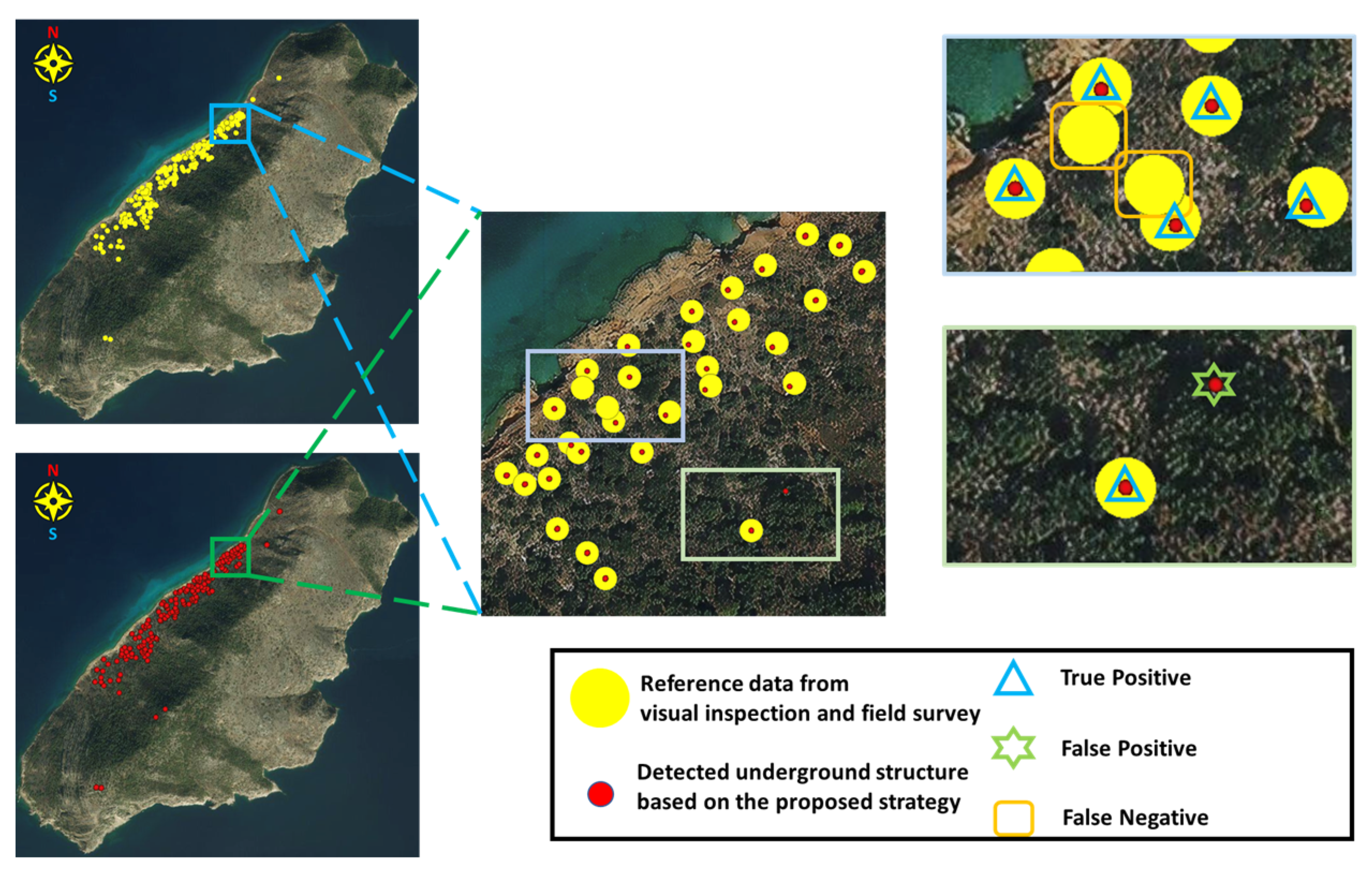

4. Experimental Results and Discussion

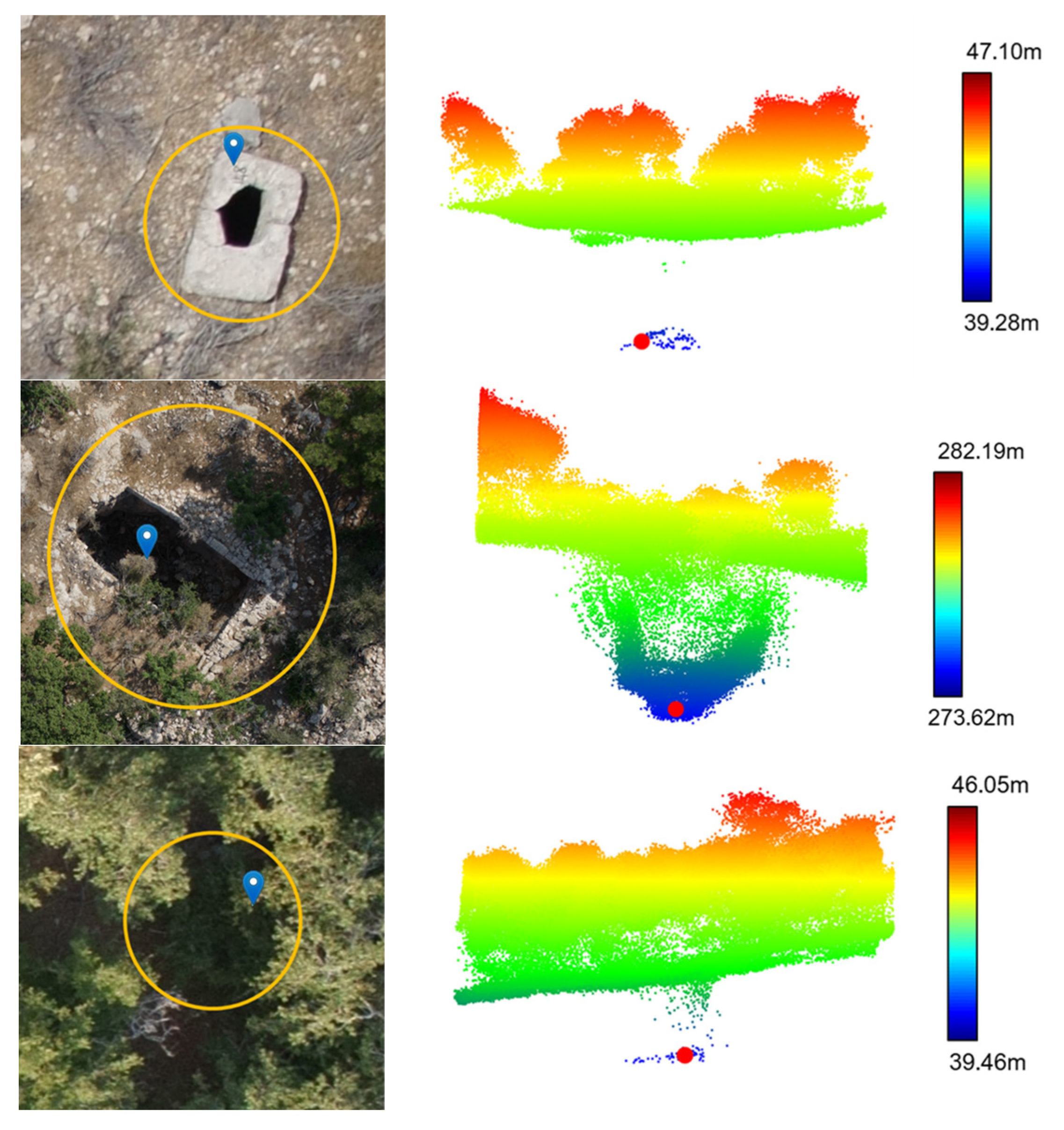

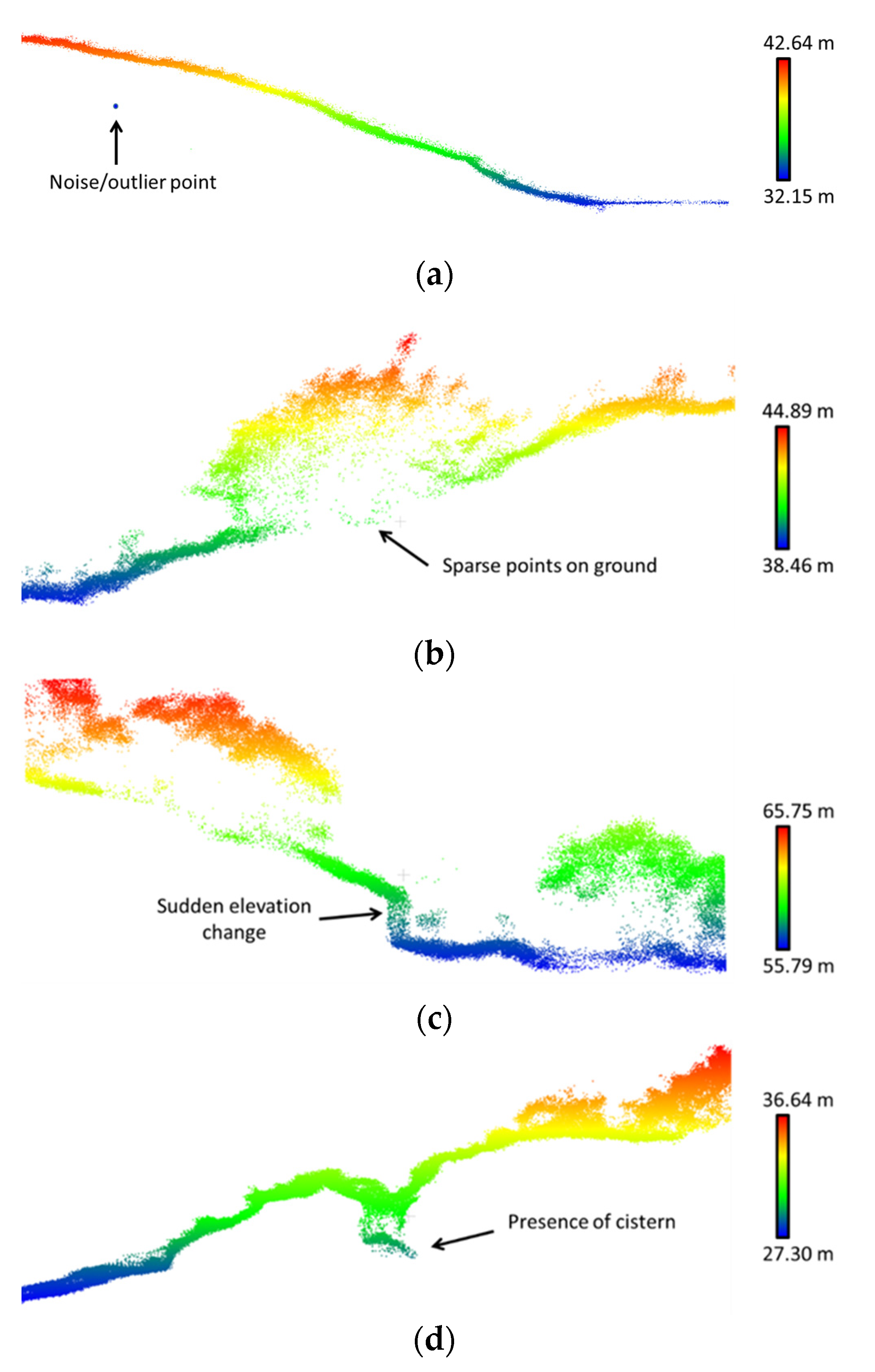

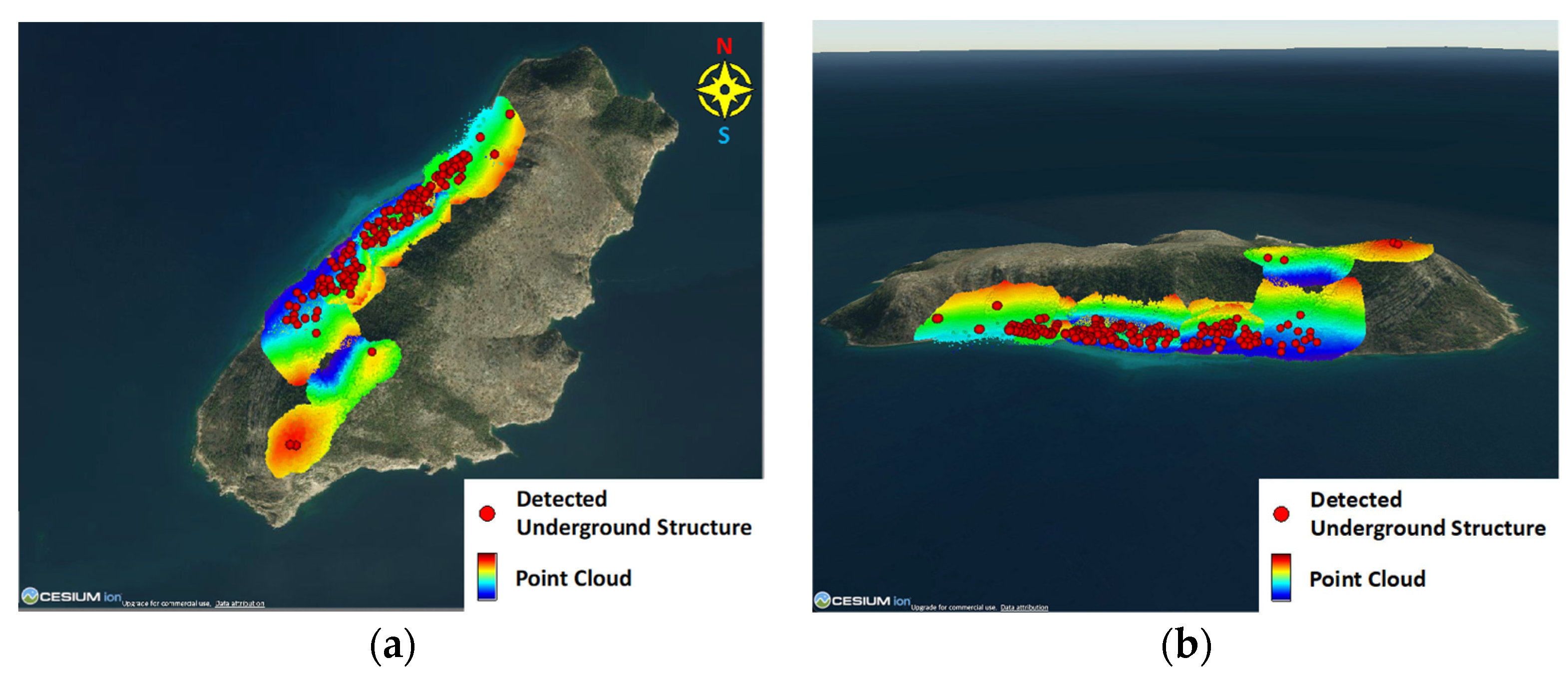

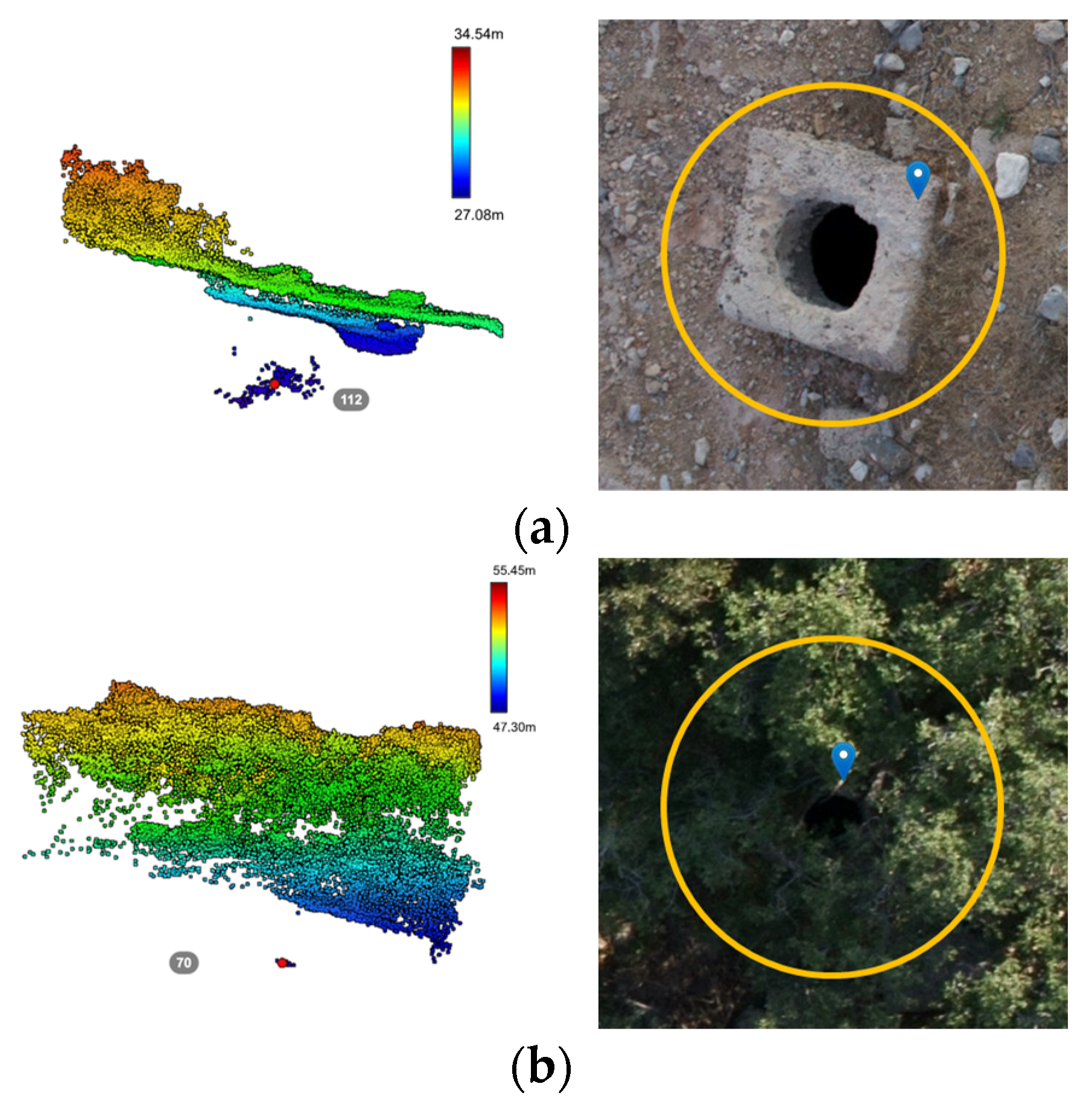

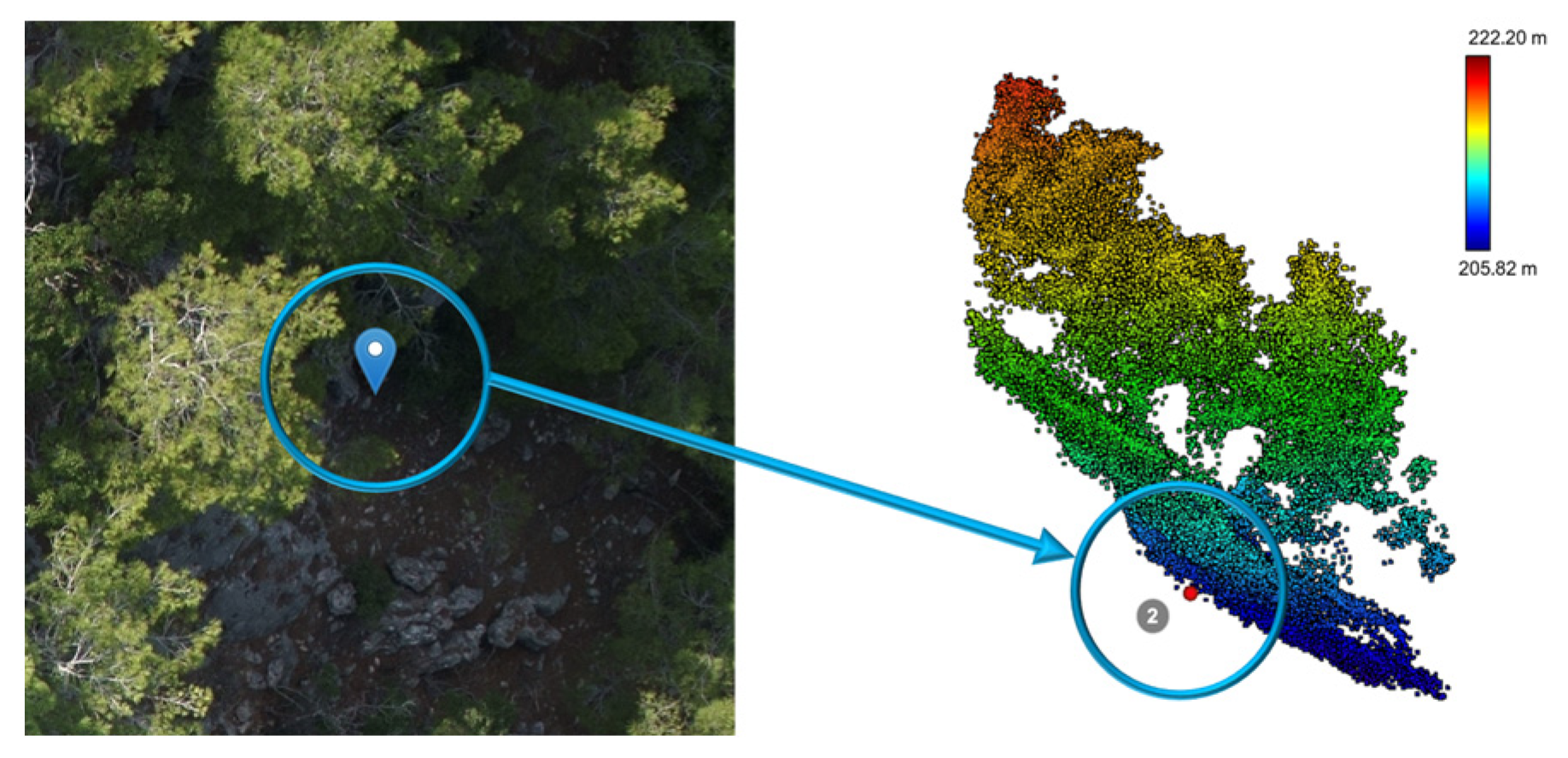

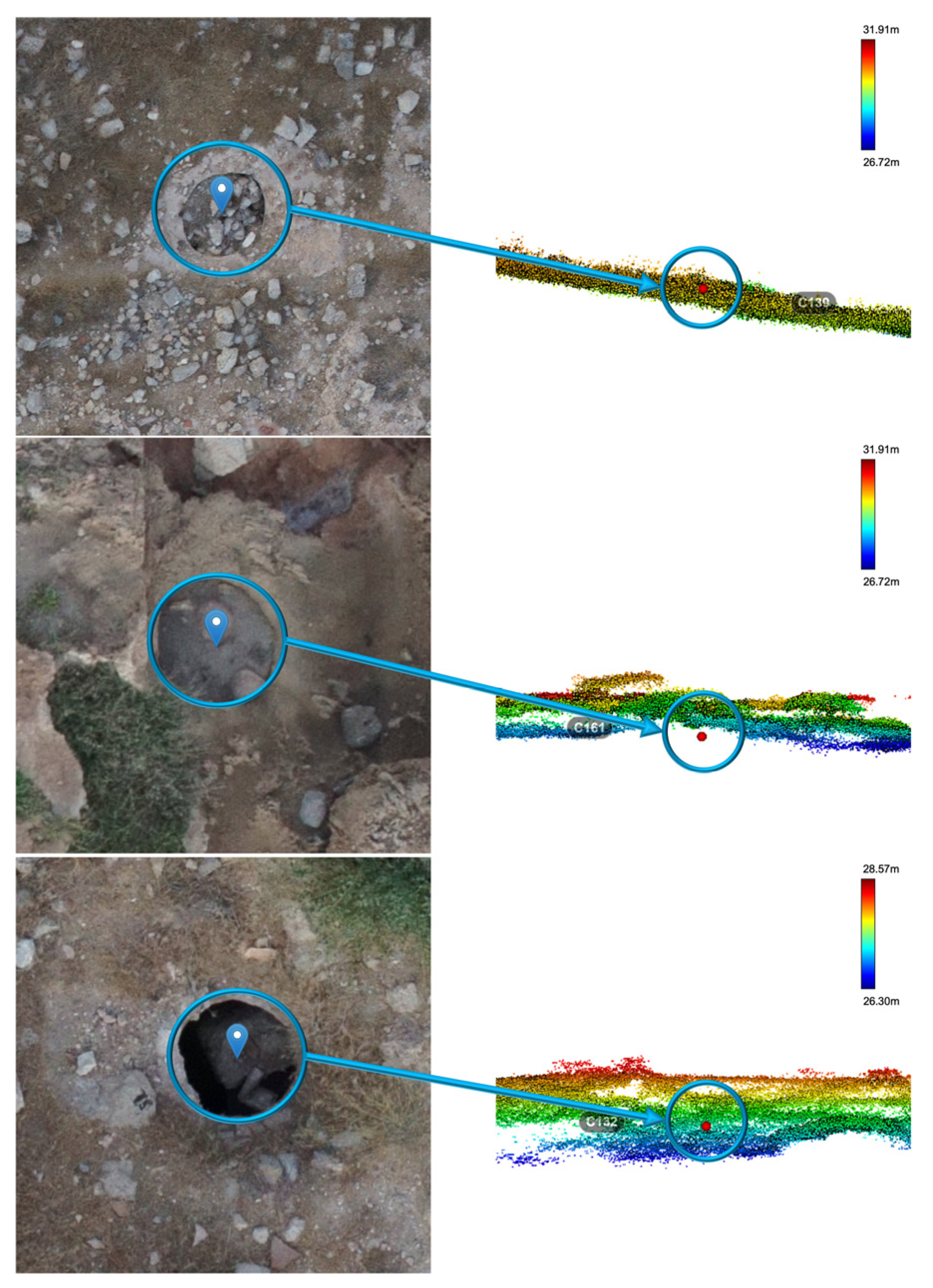

4.1. Qualitative Evaluation

4.2. Quantitative Evaluation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Binford, L.R. Archaeology as anthropology. In American Antiquity; Society for American Archaeology: Washington, DC, USA, 1962; Volume 28, pp. 217–225.

- Butzer, K.W. Environment and Archaeology; Aldine: Chicago, IL, USA, 1964.

- Clarke, D.L. Analytical Archaeology; Routledge: London, UK, 2014.

- Argyrou, A.; Agapiou, A. A Review of Artificial Intelligence and Remote Sensing for Archaeological Research. Remote Sens. 2022, 14, 6000.

- Herz, N.; Garrison, E.G. Geological Methods for Archaeology; Oxford University Press: Oxford, UK, 1997.

- Renfrew, C.; Bahn, P. Archaeology: Theories, Methods and Practice; Thames and Hudson: London, UK, 2012.

- Chen, F.; You, J.; Tang, P.; Zhou, W.; Masini, N.; Lasaponara, R. Unique performance of spaceborne SAR remote sensing in cultural heritage applications: Overviews and perspectives. Archaeol. Prospect. 2018, 25, 71–79.

- Lozić, E. Application of Airborne LiDAR Data to the Archaeology of Agrarian Land Use: The Case Study of the Early Medieval Microregion of Bled (Slovenia). Remote Sens. 2021, 13, 3228.

- Štular, B.; Lozić, E.; Eichert, S. Airborne LiDAR-derived digital elevation model for archaeology. Remote Sens. 2021, 13, 1855.

- Luo, L.; Wang, X.; Guo, H.; Lasaponara, R.; Zong, X.; Masini, N.; Wang, G.; Shi, P.; Khatteli, H.; Chen, F.; et al. Airborne and spaceborne remote sensing for archaeological and cultural heritage applications: A review of the century (1907–2017). Remote Sens. Environ. 2019, 232, 111280.

- Orcutt, J. Earth System Monitoring, Introduction. In Earth System Monitoring: Selected Entries from the Encyclopedia of Sustainability Science and Technology; Springer: New York, NY, USA, 2013; pp. 1–5.

- Zaina, F.; Tapete, D. Satellite-Based Methodology for Purposes of Rescue Archaeology of Cultural Heritage Threatened by Dam Construction. Remote Sens. 2022, 14, 1009.

- Lin, J.; Wang, M.; Yang, J.; Yang, Q. Landslide identification and information extraction based on optical and multispectral UAV remote sensing imagery. IOP Conf. Ser. Earth Environ. Sci. 2017, 57, 012017.

- Matese, A.; Toscano, P.; Di Gennaro, S.F.; Genesio, L.; Vaccari, F.P.; Primicerio, J.; Belli, C.; Zaldei, A.; Bianconi, R.; Gioli, B. Intercomparison of UAV, aircraft and satellite remote sensing platforms for precision viticulture. Remote Sens. 2015, 7, 2971–2990.

- Osco, L.P.; Marcato, J., Jr.; Ramos, A.P.M.; de Castro Jorge, L.A.; Fatholahi, S.N.; de Andrade Silva, J.; Matsubara, E.T.; Pistori, H.; Gonçalves, W.N.; Li, J. A review on deep learning in UAV remote sensing. Int. J. Appl. Earth Observ. Geoinf. 2021, 102, 102456.

- Sothe, C.; Dalponte, M.; Almeida, C.M.D.; Schimalski, M.B.; Lima, C.L.; Liesenberg, V.; Miyoshi, G.T.; Tommaselli, A.M.G. Tree species classification in a highly diverse subtropical forest integrating UAV-based photogrammetric point cloud and hyperspectral data. Remote Sens. 2019, 11, 1338.

- Zang, W.; Lin, J.; Wang, Y.; Tao, H. Investigating small-scale water pollution with UAV remote sensing technology. In Proceedings of the World Automation Congress 2012, Puerto Vallarta, Mexico, 24–28 June 2012.

- Lo Brutto, M.; Burruso, A.; D’Argenio, A. UAV Systems for Photogrammetric Data Acquisition of Archaeological Sites. Int. J. Herit. Digit. Era 2012, 1 (Suppl. S1), 7–13.

- Ebolese, D.; Lo Brutto, M.; Dardanelli, G. UAV Survey for the Archaeological Map of Lilybaeum (Marsala, Italy). Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2019, XLII-2/W11, 495–502.

- Muñoz-Nieto, A.L.; Rodriguez-Gonzalvez, P.; Gonzales-Aguilera, D.; Fernandez-Hernandez, J.; Gomez-Lahoz, J.; Picon-Cabrera, I.; Herrero-Pascual, J.S.; Hernandez-Lopez, D. UAV Archaeological Reconstruction: The Study Case of Chamartin Hillfort (Avila, Spain). ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 2014, II-5, 259–265.

- Fernández-Hernández, J.; González-Aguilera, D.; Rodríguez-Gonzálvez, P.; Juan, M.-T. Image-Based Modelling from Unmanned Aerial Vehicle (UAV) Photogrammetry: An Effective, Low-Cost Tool for Archaeological Applications. Archaeometry 2015, 57, 128–145.

- Peña-Villasenín, S.; Gil-Docampo, M.; Juan, O.-S. Professional SfM and TLS vs a simple SfM photogrammetry for 3D modelling of rock art and radiance scaling shading in engraving detection. J. Cult. Herit. 2019, 37, 238–246.

- Levick, S.R.; Whiteside, T.; Loewensteiner, D.A.; Rudge, M.; Bartolo, R. Leveraging TLS as a calibration and validation tool for MLS and ULS mapping of savanna structure and biomass at landscape-scales. Remote Sens. 2021, 13, 257.

- Shao, J.; Zhang, W.; Mellado, N.; Grussenmeyer, P.; Li, R.; Chen, Y.; Wan, P.; Zhang, X.; Cai, S. Automated markerless registration of point clouds from TLS and structured light scanner for heritage documentation. J. Cult. Herit. 2019, 35, 16–24.

- Taddia, Y.; Stecchi, F.; Pellegrinelli, A. Coastal mapping using DJI Phantom 4 RTK in post-processing kinematic mode. Drones 2020, 4, 9.

- Zang, Y.; Yang, B.; Li, J.; Guan, H. An accurate TLS and UAV image point clouds registration method for deformation detection of chaotic hillside areas. Remote Sens. 2019, 11, 647.

- Monterroso-Checa, A.; Moreno-Escribano, J.C.; Gasparini, M.; Conejo-Moreno, J.A.; Domínguez-Jiménez, J.L. Revealing Archaeological Sites under Mediterranean Forest Canopy Using LiDAR: El Viandar Castle (husum) in El Hoyo (Belmez-Córdoba, Spain). Drones 2021, 5, 72.

- Masini, N.; Abate, N.; Gizzi, F.T.; Vitale, V.; Amodio, A.M.; Sileo, M.; Biscione, M.; Lasaponara, R.; Bentivenga, M.; Cavalcante, F. UAV LiDAR Based Approach for the Detection and Interpretation of Archaeological Micro Topography under Canopy—The Rediscovery of Perticara (Basilicata, Italy). Remote Sens. 2022, 14, 6074.

- Schroder, W.; Murtha, T.; Golden, C.; Scherer, A.K.; Broadbent, E.N.; Zambrano, A.M.A.; Herndon, K.; Griffin, R. UAV LiDAR Survey for Archaeological Documentation in Chiapas, Mexico. Remote Sens. 2021, 13, 4731.

- Doyle, C.; Luzzadder-Beach, S.; Beach, T. Advances in remote sensing of the early Anthropocene in tropical wetlands: From biplanes to lidar and machine learning. Prog. Phys. Geogr. Earth Environ. 2022, 03091333221134185.

- Kadhim, I.; Abed, F.M. The Potential of LiDAR and UAV-Photogrammetric Data Analysis to Interpret Archaeological Sites: A Case Study of Chun Castle in South-West England. ISPRS Int. J. Geo-Inf. 2021, 10, 41.

- Enríquez, C.; Jurado, J.M.; Bailey, A.; Callén, D.; Collado, M.J.; Espina, G.; Marroquín, P.; Oliva, E.; Osla, E.; Ramos, M.I.; et al. The UAS-based 3D image characterization of Mozarabic church ruins in Bobastro (Malaga), Spain. Remote Sens. 2020, 12, 2377.

- Temizer, T.; Nemli, G.; Ekizce, E.G.; Ekizce, A.E. 3D documentation of a historical monument using terrestrial laser scanning case study: Byzantine Water Cistern, Istanbul. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2013, 5, W2.

- Willis, A.; Sui, Y.; Ringle, W.; Galor, K. Design and implementation of an inexpensive LIDAR scanning system with applications in archaeology. In Three-Dimensional Imaging Metrology; SPIE: Bellingham, WA, USA, 2009.

- Varinlioğlu, G.; Kaye, N.; Jones, M.R.; Ingram, R.; Rauh, N.K. The 2016 Dana Island Survey: Investigation of an Island Harbor in Ancient Rough Cilicia by the Boğsak Archaeological Survey. Near East. Archaeol. 2017, 80, 50–59.

- LiDAR and Archaeology. 2022. Available online: https://education.nationalgeographic.org/resource/lidar-and-archaeology/ (accessed on 18 March 2023).

- Exclusive: Laser Scans Reveal Maya “Megalopolis” Below Guatemalan Jungle. 2018. Available online: https://www.nationalgeographic.com/history/article/maya-laser-lidar-guatemala-pacunam (accessed on 18 March 2023).

- Yan, L.; Liu, H.; Tan, J.; Li, Z.; Chen, C. A multi-constraint combined method for ground surface point filtering from mobile lidar point clouds. Remote Sens. 2017, 9, 958.

- Hui, Z.; Hu, Y.; Yevenyo, Y.Z.; Yu, X. An improved morphological algorithm for filtering airborne LiDAR point cloud based on multi-level kriging interpolation. Remote Sens. 2016, 8, 35.

- Li, H. LiDAR point cloud morphological filtering based on adaptive slope. Site Investig. Sci. Technol. 2017, 2, 26–29.

- Xiao-Qian, C.; Hong-Qiang, Z. Lidar Point Cloud Data Filtering based on Regional Growing. Remote Sens. Nat. Res. 2009, 20, 6–8.

- Vosselman, G. Slope based filtering of laser altimetry data. Int. Arch. Photogramm. Remote Sens. 2000, 33, 935–942.

- Sithole, G.; Vosselman, G. Filtering of laser altimetry data using a slope adaptive filter. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2001, 34, 203–210.

- Geng, J.; Yu, K.; Xie, Z.; Zhao, G.; Ai, J.; Yang, L.; Yang, H.; Liu, J. Analysis of spatiotemporal variation and drivers of ecological quality in Fuzhou based on RSEI. Remote Sens. 2022, 14, 4900.

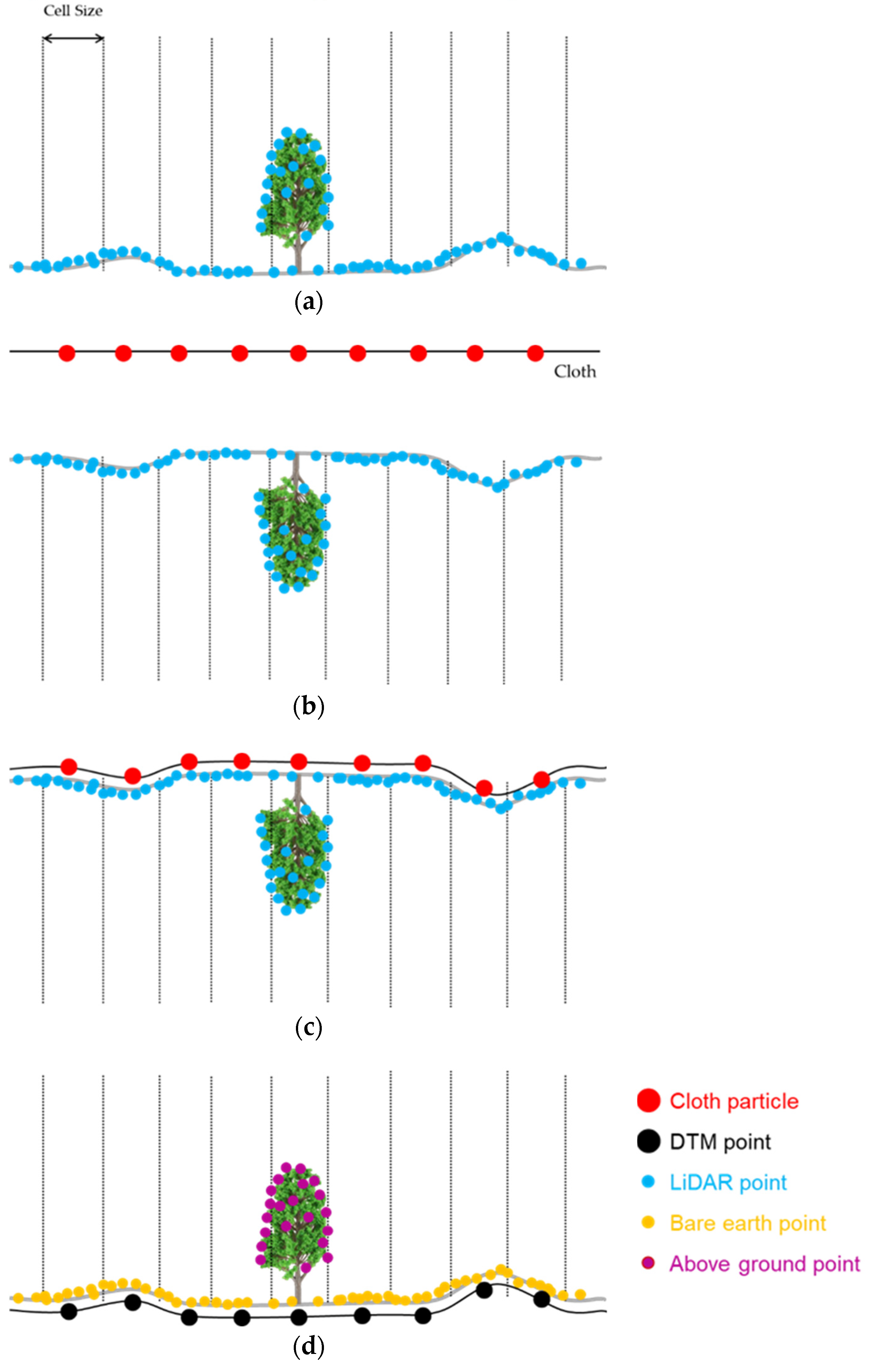

- Zhang, W.; Qi, J.; Wan, P.; Wang, H.; Xie, D.; Wang, X.; Yan, G. An easy-to-use airborne LiDAR data filtering method based on cloth simulation. Remote Sens. 2016, 8, 501.

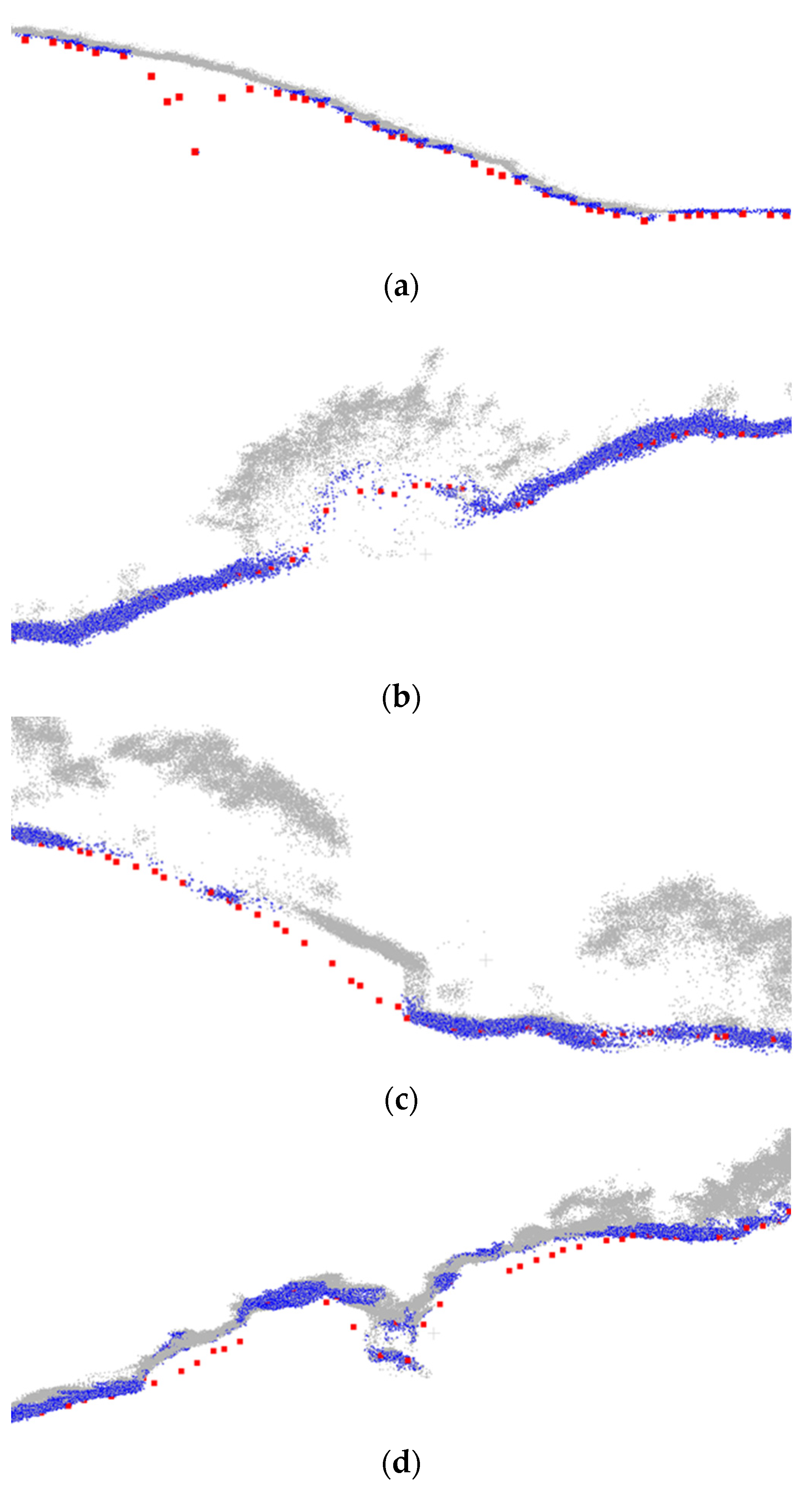

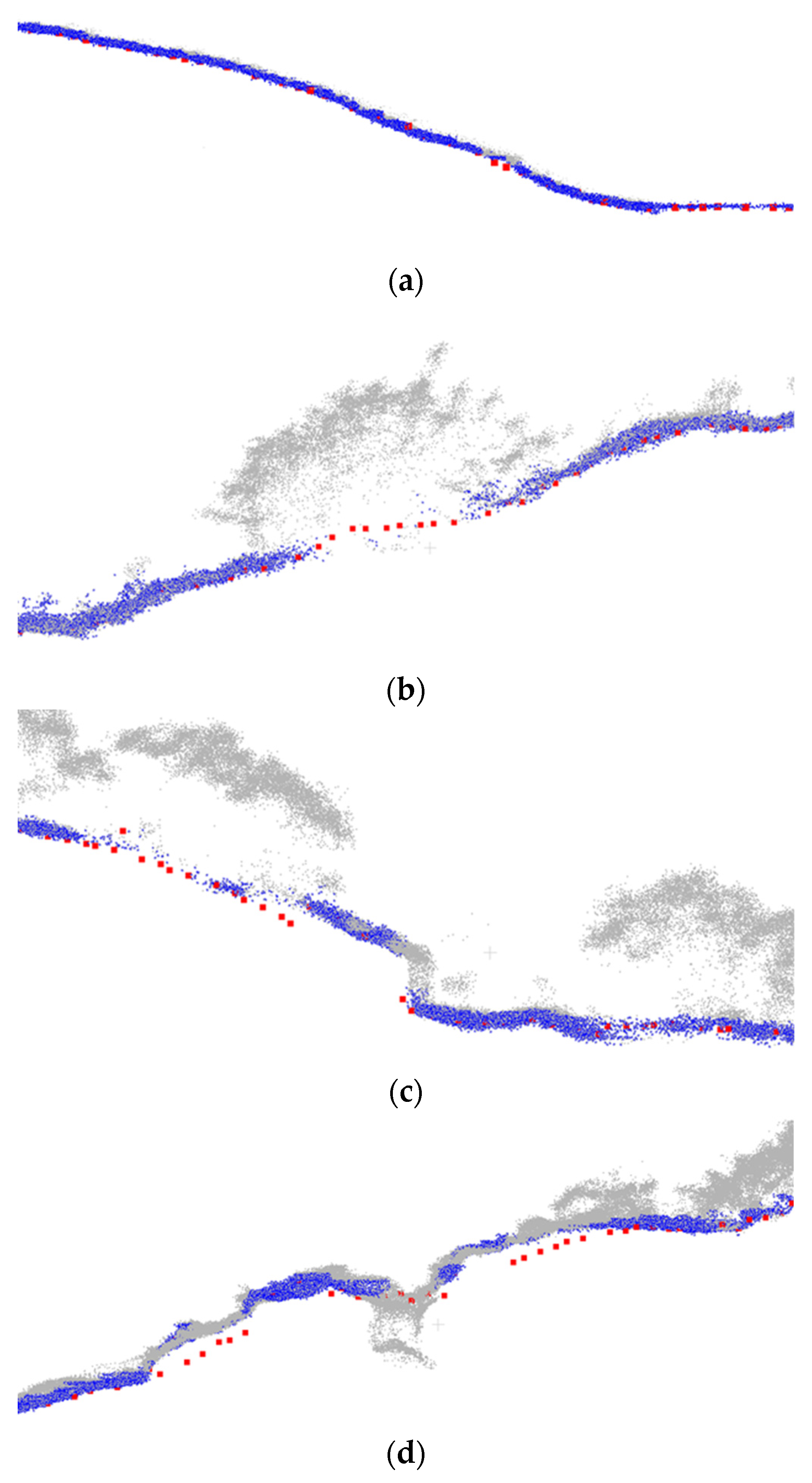

- Lin, Y.-C.; Manish, R.; Bullock, D.; Habib, A. Comparative analysis of different mobile LiDAR mapping systems for ditch line characterization. Remote Sens. 2021, 13, 2485.

- Ren, L.; Tang, J.; Cui, C.; Song, R.; Ai, Y. An Improved Cloth Simulation Filtering Algorithm Based on Mining Point Cloud. In Proceedings of the 2021 International Conference on Cyber-Physical Social Intelligence (ICCSI), Beijing, China, 18–20 December 2021.

- Serifoglu Yilmaz, C.; Yilmaz, V.; Güngör, O. Investigating the performances of commercial and non-commercial software for ground filtering of UAV-based point clouds. Int. J. Remote Sens. 2018, 39, 5016–5042.

- Zhang, K.; Chen, S.-C.; Whitman, D.; Shyu, M.-L.; Yan, J.; Zhang, C. A progressive morphological filter for removing nonground measurements from airborne LIDAR data. IEEE Trans. Geosci. Remote Sens. 2003, 41, 872–882.

- Zhang, K.; Whitman, D. Comparison of three algorithms for filtering airborne lidar data. Photogramm. Eng. Remote Sens. 2005, 71, 313–324.

- Axelsson, P. DEM generation from laser scanner data using adaptive TIN models. Int. Arch. Photogramm. Remote Sens. 2000, 33, 110–117.

- Evans, J.S.; Hudak, A.T. A multiscale curvature algorithm for classifying discrete return LiDAR in forested environments. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1029–1038.

- Streutker, D.R.; Glenn, N.F. LiDAR measurement of sagebrush steppe vegetation heights. Remote Sens. Environ. 2006, 102, 135–145.

- Mongus, D.; Žalik, B. Parameter-free ground filtering of LiDAR data for automatic DTM generation. ISPRS J. Photogramm. Remote Sens. 2012, 67, 1–12.

- Bolkas, D.; Naberezny, B.; Jacobson, M. Comparison of sUAS Photogrammetry and TLS for Detecting Changes in Soil Surface Elevations Following Deep Tillage. J. Surv. Eng. 2021, 147, 04021001.

- Bohak, C.; Slemenik, M.; Kordež, J.; Marolt, M. Aerial LiDAR data augmentation for direct point-cloud visualisation. Sensors 2020, 20, 2089.

- Maravelakis, E.; Konstantaras, A.; Kabassi, K.; Chrysakis, I.; Georgis, C.; Axaridou, A. 3DSYSTEK web-based point cloud viewer. In Proceedings of the IISA 2014, The 5th International Conference on Information, Intelligence, Systems and Applications, Chania, Greece, 7–9 July 2014.

- Sehnal, D.; Bittrich, S.; Deshpande, M.; Svobodová, R.; Berka, K.; Bazgier, V.; Velankar, S.; Burley, S.K.; Koča, J.; Rose, A.S. Mol* Viewer: Modern web app for 3D visualization and analysis of large biomolecular structures. Nucleic Acids Res. 2021, 49, W431–W437.

- Velodyne. UltraPuck Data Sheet. 2018. Available online: https://hypertech.co.il/wp-content/uploads/2016/05/ULTRA-Puck_VLP-32C_Datasheet.pdf (accessed on 18 January 2023).

- Sony. ILCE-7R Specifications. 2021. Available online: https://www.sony.com/electronics/support/e-mount-body-ilce-7-series/ilce-7r/specifications (accessed on 7 February 2023).

- Trimble. APX-15 UAV. 2019. Available online: https://www.applanix.com/downloads/products/specs/APX15_UAV.pdf (accessed on 7 February 2023).

- Trimble. POSPAC UAV. 2020. Available online: https://www.applanix.com/downloads/products/specs/POSPac-UAV.pdf (accessed on 7 February 2023).

- Ravi, R.; Lin, Y.-J.; Elbahnasawy, M.; Shamseldin, T. Simultaneous system calibration of a multi-lidar multicamera mobile mapping platform. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 1694–1714.

- Revelles, J.; Urena, C.; Lastra, M. An efficient parametric algorithm for octree traversal. J. WSCG 2000, 8, 1–3.

- Cai, S.; Zhang, W.; Liang, X.; Wan, P.; Qi, J.; Yu, S.; Yan, G.; Shao, J. Filtering airborne LiDAR data through complementary cloth simulation and progressive TIN densification filters. Remote Sens. 2019, 11, 1037.

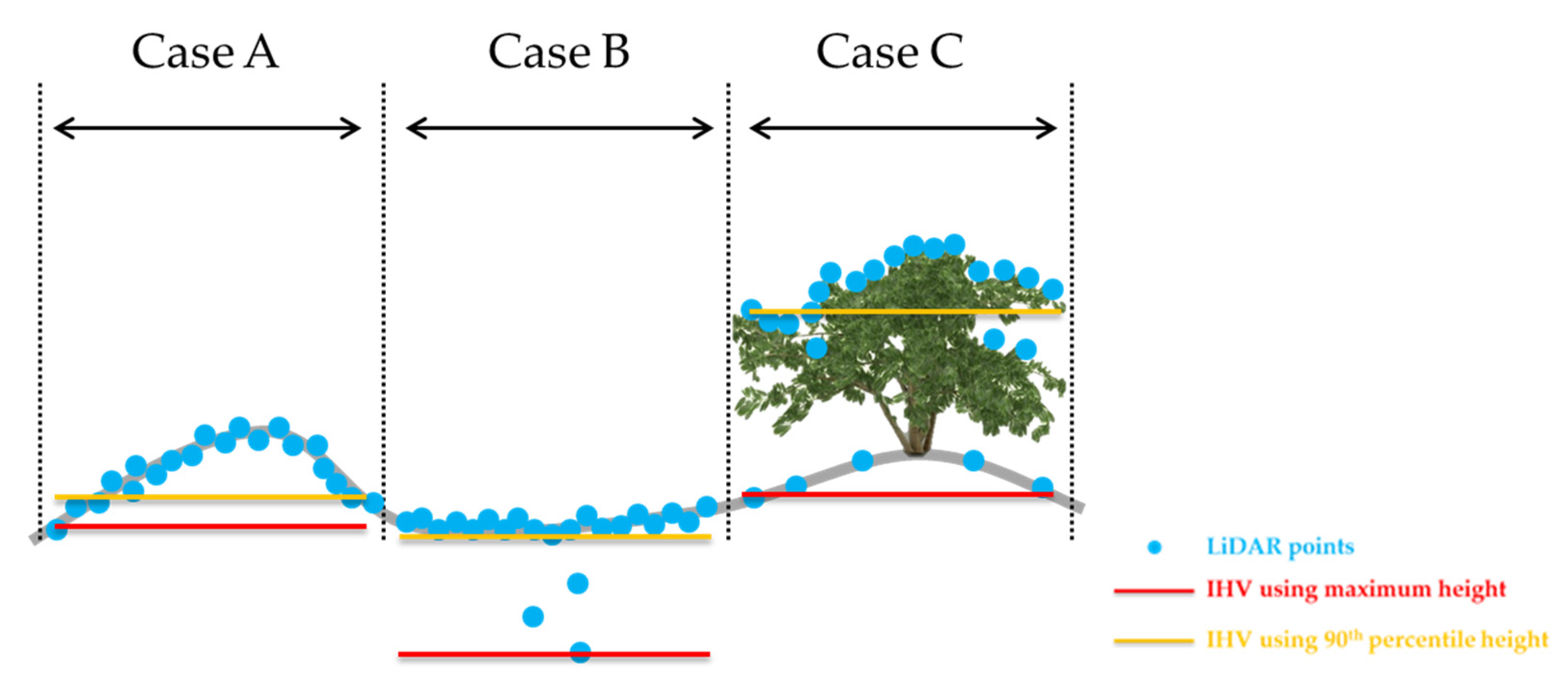

- Zhang, W.; Cai, S.; Liang, X.; Shao, J.; Hu, R.; Yu, S.; Yan, G. Cloth simulation-based construction of pit-free canopy height models from airborne LiDAR data. For. Ecosyst. 2020, 7, 1.

- Yilmaz, C.S.; Yilmaz, V.; Gungor, O. Ground filtering of a UAV-based point cloud with the cloth simulation filtering algorithm. In Proceedings of the International Conference on Advances and Innovations in Engineering (ICAIE), Elazig, Turkey, 10–12 May 2017.

- Schubert, E.; Sander, J.; Ester, M.; Kriegel, H.P.; Xu, X. DBSCAN revisited, revisited: Why and how you should (still) use DBSCAN. ACM Trans. Database Syst. 2017, 42, 1–21.

- Schütz, M. Potree: Rendering Large Point Clouds in Web Browsers. Diploma Thesis, Vienna University of Technology, Vienna, Austria, 2016.

- Nesbit, P.R.; Durkin, P.R.; Hubbard, S.M. Visualization and sharing of 3D digital outcrop models to promote open science. GSA Today 2020, 30, 4–10.

| Approach | Pros | Cons | Reference |
|---|---|---|---|
| Image-based | | | [4,18,21,30,31] |
| LiDAR-based | | | [28,30] |

| Dataset | Flying Height (m) | Average Speed (m/s) | Flight Time (min) | No. of Images | No. of Points (million) | Spatial Coverage (ha) |
|---|---|---|---|---|---|---|
| Day1 *-M1 | 45–65 | 6.0 | 13 | 514 | 76 | 6.5 |
| Day1 *-M2 | 30–50 | 5.8 | 10 | 518 | 87 | 7.8 |
| Day2 *-M1 | 45 | 5.0 | 15 | 597 | 89 | 8.3 |
| Day2 *-M2 | 60–80 | 5.0 | 15 | 607 | 58 | 9.7 |
| Day2 *-M3 | 30–80 | 5.1 | 12 | 578 | 77 | 6.4 |
| Day3 *-M1 | 45–50 | 5.5 | 15 | 590 | 96 | 6.6 |
| Day3 *-M2 | 50–100 | 5.7 | 15 | 686 | 85 | 14.0 |
| Day3 *-M3 | 60–90 | 5.0 | 14 | 625 | 145 | 13.0 |
| Day4 *-M1 | 50–90 | 5.0 | 15 | 587 | 103 | 7.8 |
| Day4 *-M2 | 22–40 | 3.0 | 17 | 663 | 115 | 2.0 |
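The mission table lists point counts and spatial coverage but not point density. A rough, nominal figure is simply the ratio of the two, which the sketch below computes per mission along with the column totals. This is an assumption-laden average: it spreads all points evenly over the coverage area and ignores strip overlap, varying flying height, and vegetation returns, so it should not be read as the paper's density estimate. `MISSIONS` and `nominal_density` are hypothetical names, and the footnote markers (*) are dropped from the mission labels.

```python
# (mission, no. of images, no. of points [million], spatial coverage [ha]),
# transcribed from the mission table; footnote markers (*) omitted.
MISSIONS = [
    ("Day1-M1", 514, 76, 6.5),
    ("Day1-M2", 518, 87, 7.8),
    ("Day2-M1", 597, 89, 8.3),
    ("Day2-M2", 607, 58, 9.7),
    ("Day2-M3", 578, 77, 6.4),
    ("Day3-M1", 590, 96, 6.6),
    ("Day3-M2", 686, 85, 14.0),
    ("Day3-M3", 625, 145, 13.0),
    ("Day4-M1", 587, 103, 7.8),
    ("Day4-M2", 663, 115, 2.0),
]


def nominal_density(points_million: float, area_ha: float) -> float:
    """Average points per square metre over the coverage (1 ha = 10,000 m^2)."""
    return points_million * 1e6 / (area_ha * 1e4)


total_images = sum(m[1] for m in MISSIONS)
total_points_million = sum(m[2] for m in MISSIONS)

for name, _, pts_m, area in MISSIONS:
    print(f"{name}: {nominal_density(pts_m, area):7.1f} pts/m^2")
print(f"total: {total_images} images, {total_points_million} million points")
```

Summing the columns gives 5,965 images and 931 million points across the ten missions. The nominal density is highest for Day4-M2, the lowest and slowest flight in the table (22–40 m at 3.0 m/s), which covered only 2.0 ha.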

| Total Number of Detected Underground Structures | True Positives (Total) | True Positives (easy_img) | True Positives (hard_img) | True Positives (no_img) | False Positives | False Negatives |
|---|---|---|---|---|---|---|
| 169 | 164 | 93 | 70 | 1 | 5 | 24 |
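The counts in the accuracy table are enough to derive the standard detection metrics: precision = TP/(TP+FP), recall = TP/(TP+FN), and F1 = 2TP/(2TP+FP+FN). The sketch below computes them and also checks that the table is internally consistent (TP + FP equals the 169 detections, and the easy_img/hard_img/no_img split sums to the 164 true positives). These are textbook definitions applied to the tabulated counts, not metrics quoted from the paper; `detection_metrics` is a hypothetical helper.

```python
# Counts from the accuracy table.
TP, FP, FN = 164, 5, 24
EASY_IMG, HARD_IMG, NO_IMG = 93, 70, 1


def detection_metrics(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Standard precision, recall and F1-score from detection counts."""
    precision = tp / (tp + fp)         # share of detections that are real structures
    recall = tp / (tp + fn)            # share of reference structures that were found
    f1 = 2 * tp / (2 * tp + fp + fn)   # harmonic mean of precision and recall
    return precision, recall, f1


# Consistency checks on the table itself.
assert TP + FP == 169                        # total number of detections
assert EASY_IMG + HARD_IMG + NO_IMG == TP    # breakdown of the true positives

p, r, f1 = detection_metrics(TP, FP, FN)
print(f"precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
# precision=0.970 recall=0.872 F1=0.919
```

The recall denominator (TP + FN = 188) assumes the reference dataset consists exactly of the correctly detected structures plus the missed ones, which is how false negatives are normally defined.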

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shin, Y.-H.; Shin, S.-Y.; Rastiveis, H.; Cheng, Y.-T.; Zhou, T.; Liu, J.; Zhao, C.; Varinlioğlu, G.; Rauh, N.K.; Matei, S.A.; et al. UAV-Based Remote Sensing for Detection and Visualization of Partially-Exposed Underground Structures in Complex Archaeological Sites. Remote Sens. 2023, 15, 1876. https://doi.org/10.3390/rs15071876

Shin Y-H, Shin S-Y, Rastiveis H, Cheng Y-T, Zhou T, Liu J, Zhao C, Varinlioğlu G, Rauh NK, Matei SA, et al. UAV-Based Remote Sensing for Detection and Visualization of Partially-Exposed Underground Structures in Complex Archaeological Sites. Remote Sensing. 2023; 15(7):1876. https://doi.org/10.3390/rs15071876

Chicago/Turabian Style: Shin, Young-Ha, Sang-Yeop Shin, Heidar Rastiveis, Yi-Ting Cheng, Tian Zhou, Jidong Liu, Chunxi Zhao, Günder Varinlioğlu, Nicholas K. Rauh, Sorin Adam Matei, et al. 2023. "UAV-Based Remote Sensing for Detection and Visualization of Partially-Exposed Underground Structures in Complex Archaeological Sites" Remote Sensing 15, no. 7: 1876. https://doi.org/10.3390/rs15071876

APA Style: Shin, Y.-H., Shin, S.-Y., Rastiveis, H., Cheng, Y.-T., Zhou, T., Liu, J., Zhao, C., Varinlioğlu, G., Rauh, N. K., Matei, S. A., & Habib, A. (2023). UAV-Based Remote Sensing for Detection and Visualization of Partially-Exposed Underground Structures in Complex Archaeological Sites. Remote Sensing, 15(7), 1876. https://doi.org/10.3390/rs15071876