3.3. Orientation Enhancement Module

As is in Deformable DETR’s original design, the MSDA module reduces computational complexity from quadratic to linear by calculating attentions from sparsely located sampling points. Let a single-scale input feature map be

, the input content queries be

where

denotes the number of queries, and the reference points of this feature map be

. For the

q-th content query

, the offsets for the

h-th attention head

is calculated as:

where

denotes linear mapping through fully connected layers. The attention weights are calculated as:

Therefore, the Deformable Attention (DA) for the

q-th content query is calculated as:

where the ‘@’ sign denotes collecting

C-dimensional features at given 2-D locations from

x,

and

denote the channel-wise weight adjustment. The Multi-scale Deformable Attention (MSDA) for

l-th level of multi-scale feature maps is calculated as:

where

L is the total number of feature map layers,

denotes normalized

with values between 0 and 1, and the

operator denotes re-scaling

coordinates to the scale of the

l-th layer of multi-scale feature maps.

We can find from the above, that in the calculation of Deformable Attention, the orientation is not considered since this mechanism is initially proposed in natural image horizontal box detection which doesn’t require orientation information. Moreover, it requires additional designs on the mechanism itself to incorporate orientation information in place.

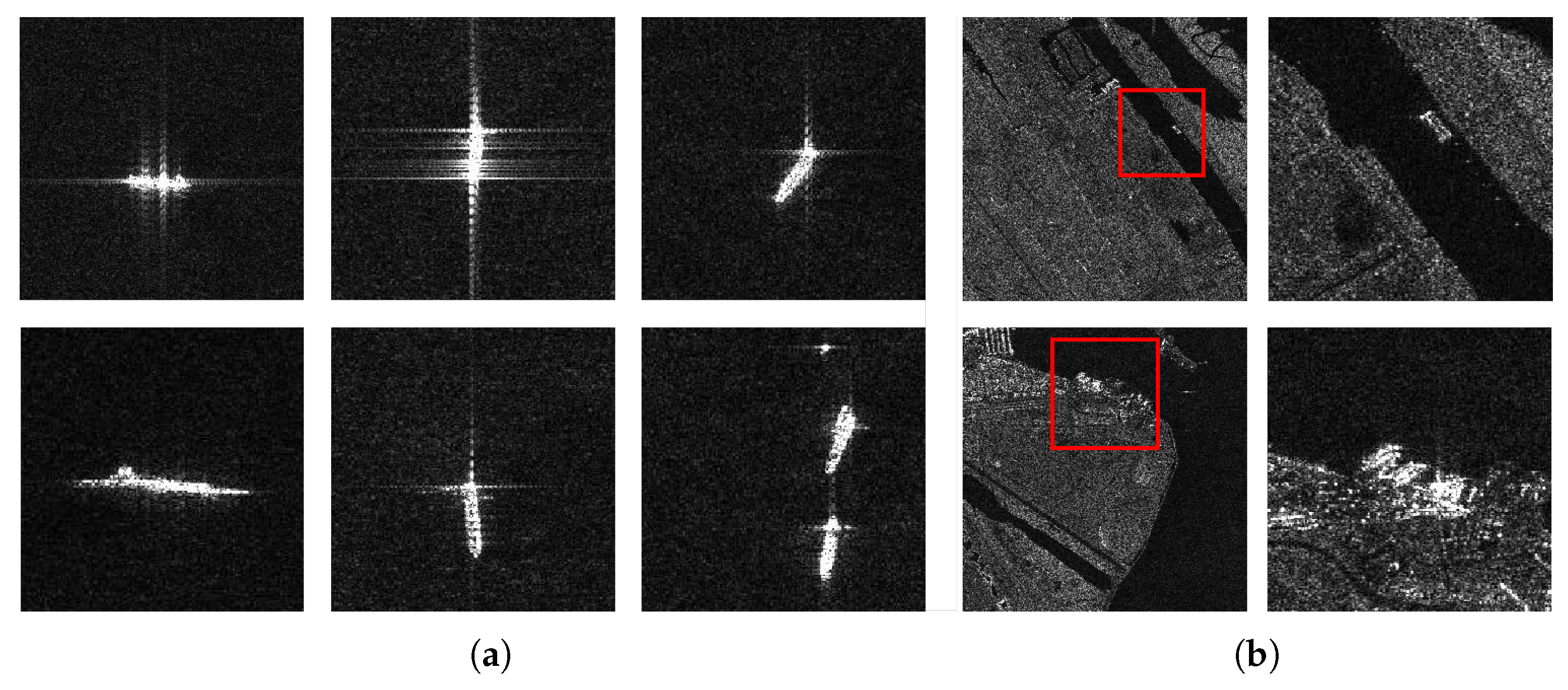

Meanwhile, different from objects in optical images that are rotation-invariant. Objects in SAR images do not share this property as we have observed variations in patterns of objects of relatively similar scales and aspect ratios, as is shown in

Figure 1a and

Figure 2. The characteristics of SAR objects require the learning of more effective representations. Moreover, as is shown in

Figure 1b, ships are vulnerable to land clutters and sea clutters. Focusing only on the local features of ships and ignoring the relationship between image contexts makes it impossible to effectively attend to the semantic features of the image. Therefore, to deal with learning more effective representations and learning from image context at the same time, we propose the Orientation Enhancement Module (OEM) to enhance orientation-related information without redesigning the mechanism.

As is shown in

Figure 5a, the OEM is an extension of the original MSDA by leveraging the Rotation-Sensitive convolution mechanism to capture orientation-related information for ships under different layout conditions and the self-attention mechanism to capture global pixel-wise dependencies. The OEM mainly consists of two parts: the Rotation-Sensitive convolution calculation and the channel-wise feature re-weighting with self-attention. In the first part, the Rotation-Sensitive Module (RSM) is used, with its structure shown in

Figure 5b. It extracts rotation information using the Oriented Response Convolution [

52], which calculates standard convolution feature maps with rotated convolution kernels of different angles. It also uses the ORPool operation to select maximum activation from ORConv calculations. In the second part, the self-attention calculations on channels are performed to adjust the original encoder memory sequence with the rotation-related information learned through the first stage.

Let

be the

i-th level of multi-scale feature maps and

N be the number of orientation channels used in ORConv. We first get the output feature map

with the Active Rotation Filter (ARF)

F through standard convolution by:

where

denotes applying ARF with rotation angle

as convolution kernel on feature map

. Then, the ORPool operation is performed via max-pooling as:

After the extraction process of rotation-related information, for each layer, we perform channel-wise feature re-weighting by first applying self-attention calculation on

as:

where

,

and

are linear projection matrices,

d is a constant value used as scale modulator of the product of

q and

k inputs. Then we concatenate attention weights from each layer and reduce the number of channels through fully connected networks as:

where

denotes the encoder memory sequence and

denotes it after being processed. The

operation denotes the channel-wise concatenation that yields a tensor with

channels given attention inputs from all

L levels.

is the fully connected sub-network that maps

L attention weights with

C channels to one vector with

C channels.

Through these operations, OEM is designed to be a parallel re-weighting extension outside the encoder structure. OEM extracts Rotation-Sensitive attributes of SAR objects that are not present in the encoder sequence to initially highlight the targets. Then, it learns to enhance them, as well as suppressing irrelevant features in the background via multi-layer channel-wise re-weighting to render the represented objects more salient to downstream detector heads, leading to easier detection of these objects.

3.4. Grouped Relation Contrastive Loss

The Content De-noising mechanism is introduced to facilitate learning of more robust class prototypes and bounding-box representations. The collection of these class prototypes is called a label book in their implementations and consists of embedding vectors with quantities equal to the number of classes. Label book is used to initialize components of decoder input queries used in label de-noising. The label book is updated through backpropagation at each training iteration to acquire more representative class prototypes. However, as we further investigate this mechanism, we discovered two major issues:

Class prototypes spontaneously become less discriminative as the training process goes on. (Values of Confusion Matrices rise as epoch number increases), as can be deduced from

Figure 6a.

Class prototypes have learned to adjust to better represent classes but have failed to learn to better discriminate between each other. When not back-propagating basic contrastive loss, we discovered natural descent on this loss value, as is shown in

Figure 6a, but CM values still rise even when we add basic contrastive loss to explicitly designate distinctiveness as an optimization factor, as is changed in a similar way, like in

Figure 6b.

Therefore, we conclude from the above that in the label de-noising part of the Content De-noising mechanism, the distinctiveness of class prototypes themselves is neglected. This imposes difficulties on the classification branch when the class A label is flipped as class B, but prototypes of class A and class B are similar. Since prototypes of class A and B are close to each other in vector space, the query corresponding to this prototype will not be correctly de-noised and the classification loss on this query will be undesirably low and wrongly propagated.

To better address the aforementioned issues, we seek to utilize both the intra-class and inter-class relationships of all class prototypes within the label book, in order to facilitate better representation learning of CDN mechanism. Considering the binary classification of the foreground class and the background class, we add additional representations called groups or patterns to better reserve different aspects of class features. When calculating relationships for groups, on the one hand, we seek to increase the distance with the most similar inter-class group so that representations that are most likely leading to confusions between the foreground and the background will be suppressed. On the other hand, we also seek to shrink the distance for least similar intra-class group so that the all groups responsible for the same category will learn to include confused features and to better separate them in feature space. To fulfill this purpose, we formulate our method as follows.

The original contrastive loss is first used in unsupervised learning and aims to facilitate learning of representations invariant to different views of the same instance by making positive pairs attracted and negative pairs separated. This loss can be formulated as:

where

is a feature vector belonging to class at index

c and

is another class

c vector which can be the same as

. In our case, we replace

f to

q to represent queries responsible for predictions, and

g to

b to represent label book embeddings that have the same number of feature dimensions as

q. To apply contrastive loss on

q of containing duplicated classes and

b, the loss is formulated as:

where

denotes the

i-

th vector of

. To address the aforementioned problem that representations in the label book failed to learn to distinguish themselves from each other, we add self-relation as a component of the total contrastive loss and we denote the total loss as:

We first observed a performance increase in the DOTA 1.0 dataset [

53], which is an optical dataset that has a total of 15 classes, after applying Equation (

11) as a loss term, while we also observed the desired effect of this initial loss, as is shown in

Figure 6.

But ship detection in SAR images only recognizes objects as foreground objects or background which means significantly fewer classes. To deal with this issue, we direct our focus to intra-class variations, which is common in datasets with over-generalized classes. We discover in

Figure 1a that pattern differences exist regardless of the ships’ orientation and background noise patterns are equally diverse, and the visual confusion between ships and background noises become more frequent in near-shore scenes, as is shown in

Figure 1b. We conclude that considerable intra-class variations are present in both the foreground class and the background class, and hence it is natural to modify this loss to enable multiple representations for each class.

Given number of patterns per class as

K, we have the expanded label book

, where

is the total number of classes. Now, given queries

, we first normalize by:

We calculate similarities of the intra-class representations of the

g-th group

by:

which means selecting the least discriminative representation of a different class to enlarge the distance between compared

C-dimensional vectors. Discrepancies of inter-class representations

are calculated as:

where

denotes any single class that is not class

c. It means shrinking the distance between the most discriminative representation of the same class and the current vector. We do the same for self-relations among grouped representations and hence formulated the Relational Loss (RL) term as:

Hence, we obtain the total Grouped Relation Contrastive Loss (GRC Loss) as:

The whole process of training with GRC Loss is shown in Algorithm 1. The algorithm isolates calculations between matched queries and each group of representations from matched queries themselves or the label book.

| Algorithm 1: Training with GRC Loss |

Require: Queries from Encoder , classification scores of each query , Label book , predictions of model , number of encoder queries to be selected , number of classes , number of patterns in each group K- 1:

Get sorted scores = sort (, order = descend, dimension = last) - 2:

Get sorted = sort () with sorted scores - 3:

Get selected queries q = collect (sorted , amount = ), with number of queries N - 4:

Get matched labels L = Hungarian Assignment (, ground-truths) - 5:

Get normalized self-relations and cross-relations by Equation ( 12) - 6:

Set numerator A and denominator B to zero - 7:

for b is label book b or selected queries q do - 8:

for to do - 9:

Generate binary mask , where is 1, otherwise 0 - 10:

Do the same as above to get - 11:

Select with mask and with - 12:

Calculate and with Equations ( 13) and ( 14) - 13:

Add to A and to B - 14:

end for - 15:

Calculate with - 16:

Add to total loss - 17:

end for - 18:

Return

|

For a more intuitive view of the entire loss, we present in

Figure 7 calculations of GRC Loss with two classes with a pattern number of three and four matched queries. Each color represents the mask generated for each class of a group. Tiles with darker colors represent relation values selected by the

function in Equations (

13) and (

14). Therefore, calculations for each group are isolated from each other but are parallel for each type of query within the group. Our GRC Loss achieved the desired effect in our initial DOTA test, as is shown in

Figure 6c, and we will further discuss its effects on SAR objects and internal attributes in

Section 4 and

Section 5.