Enhanced Land-Cover Classification through a Multi-Stage Classification Strategy Integrating LiDAR and SIF Data

Abstract

1. Introduction

2. Materials

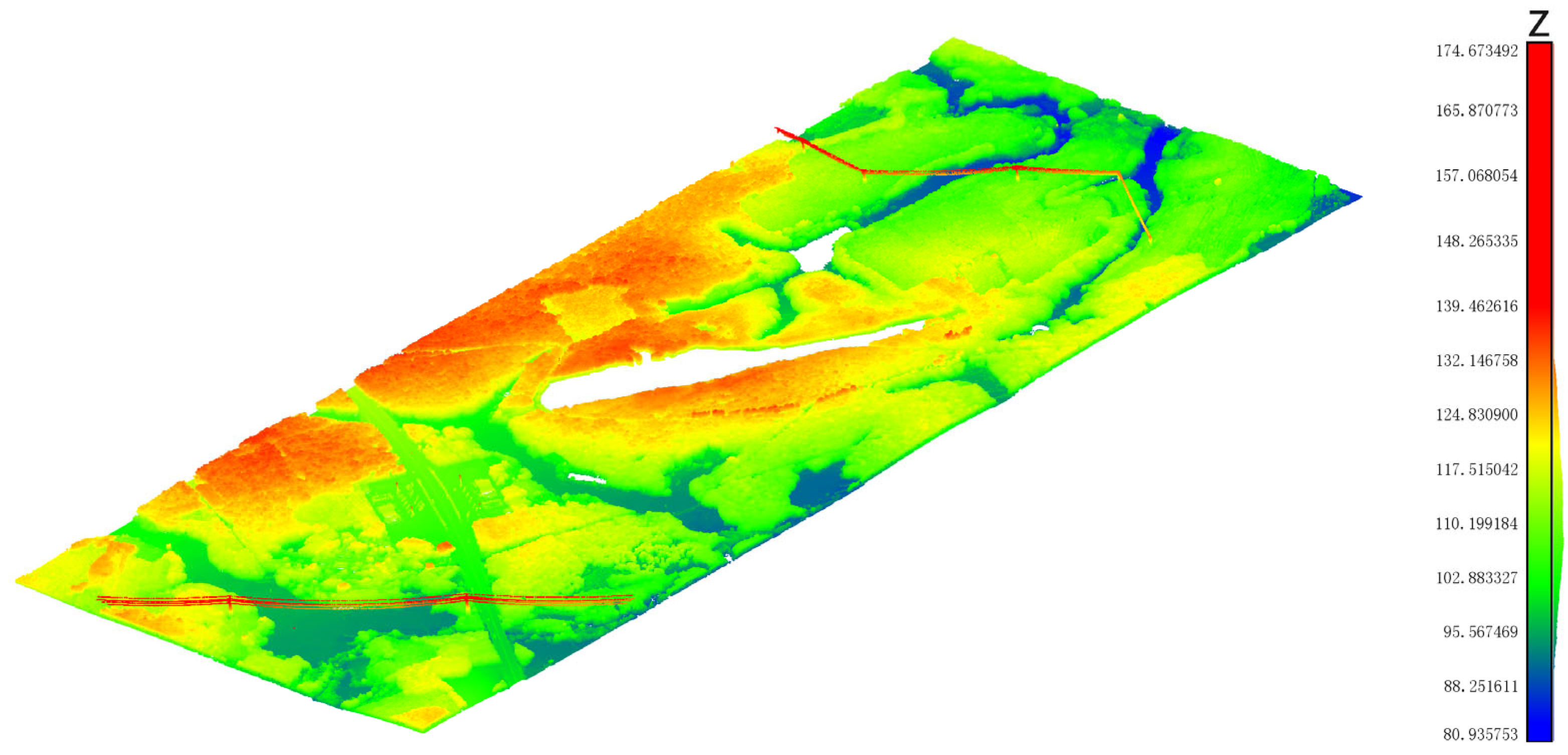

2.1. Study Area

2.2. Experimental Data

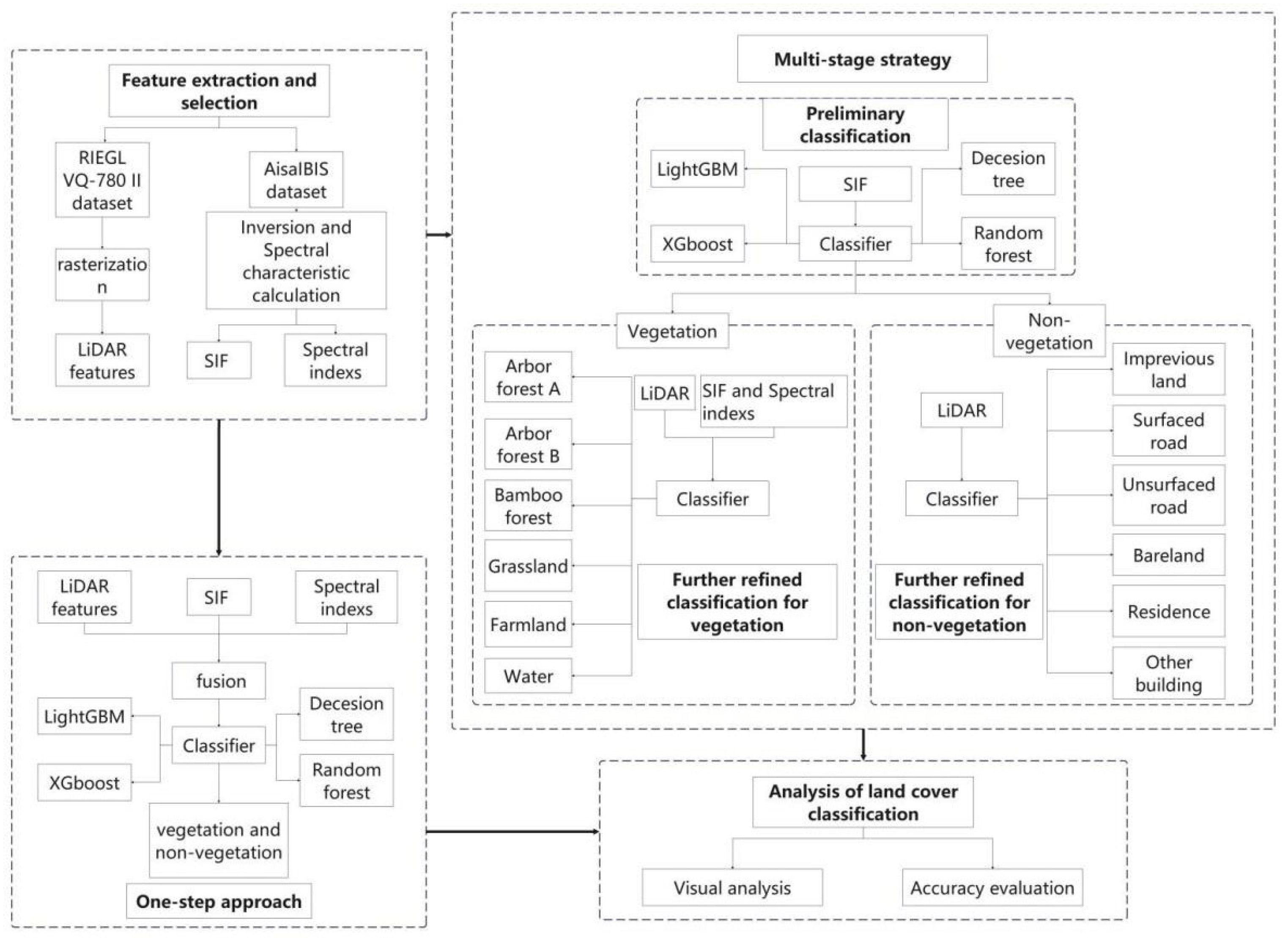

3. Methods

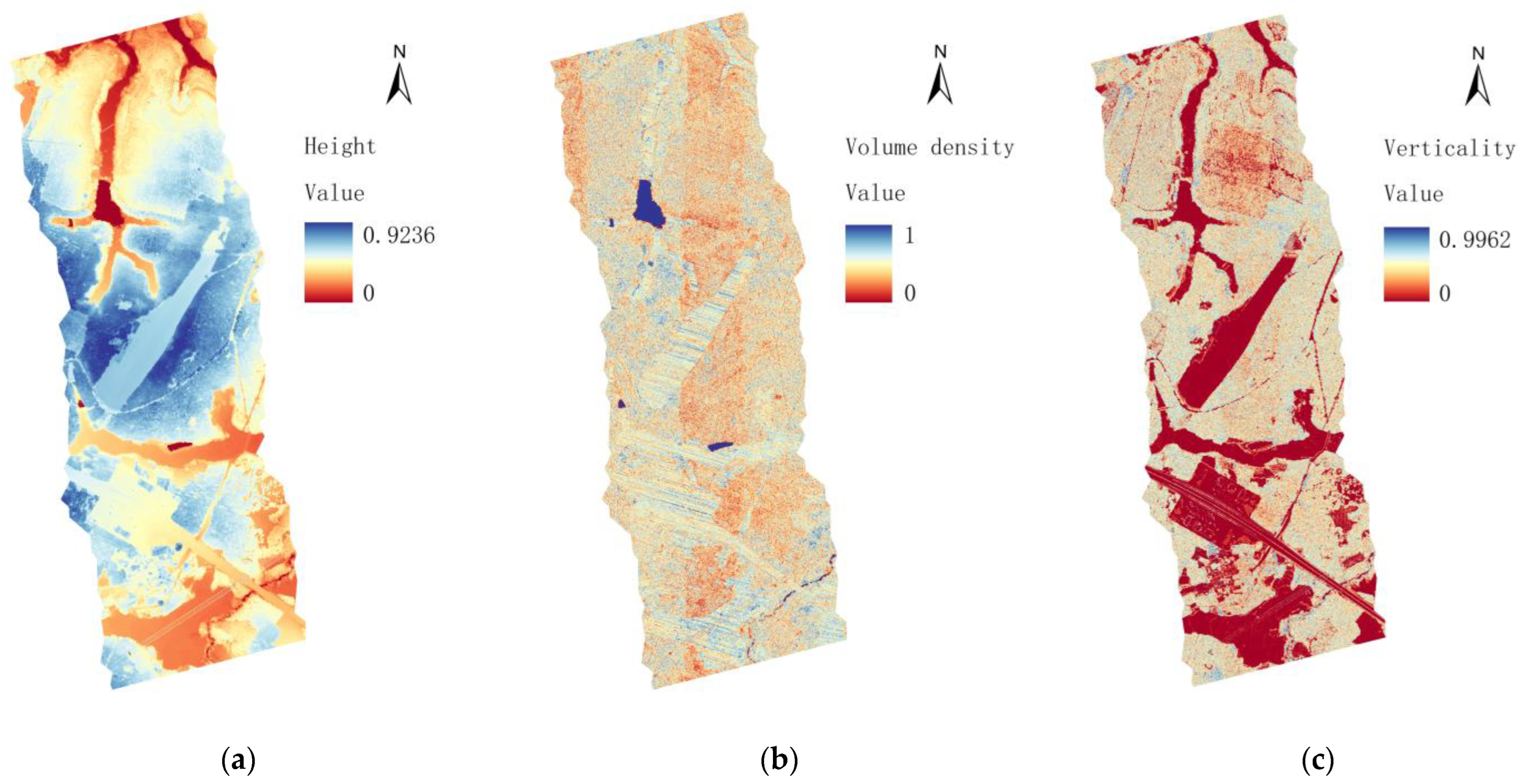

3.1. Feature Extraction

3.1.1. LiDAR Feature Extraction

3.1.2. SIF Acquisition and Feature Extraction

3.2. Land-Cover Classification

4. Results

4.1. Feature Extraction

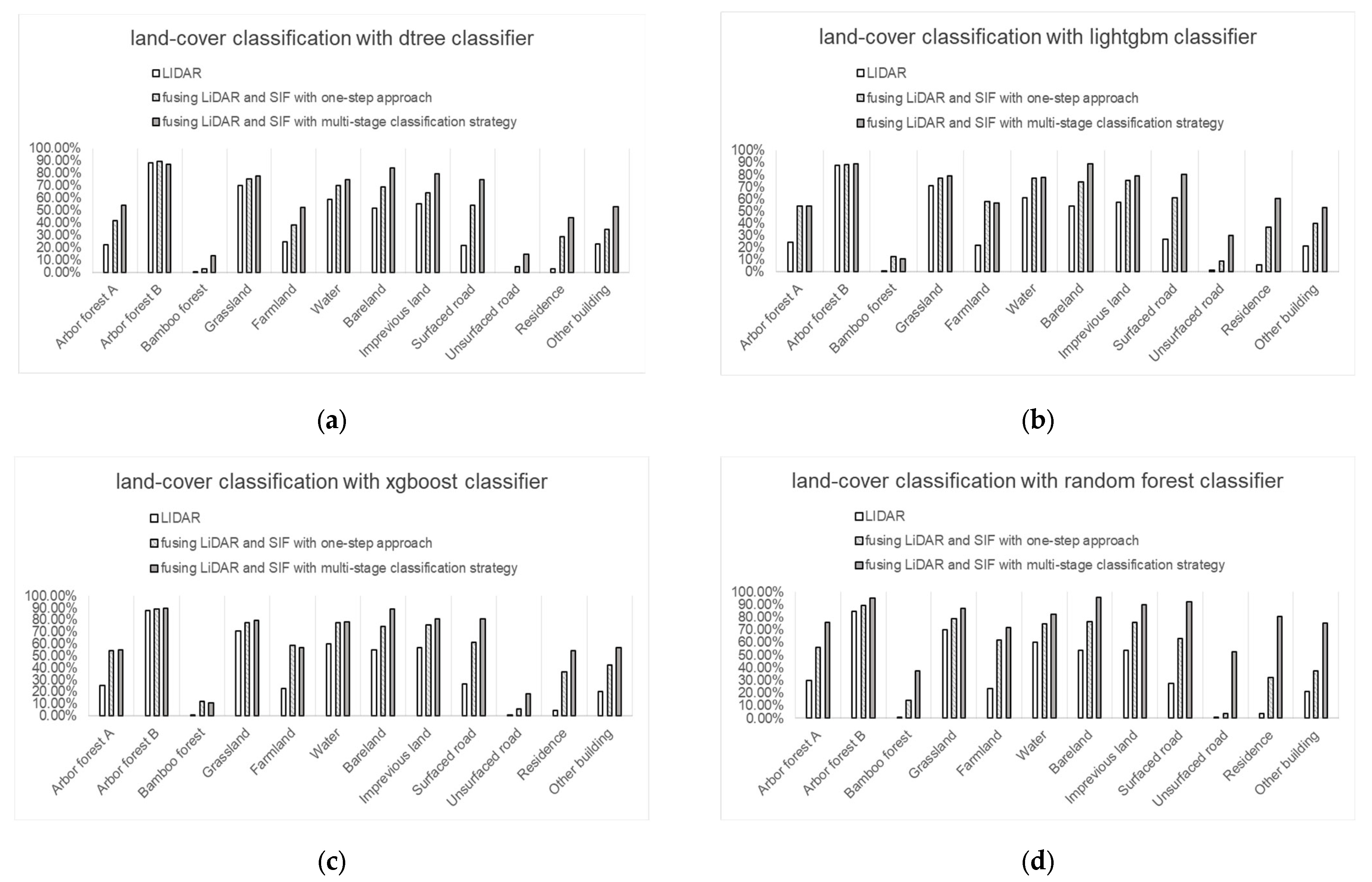

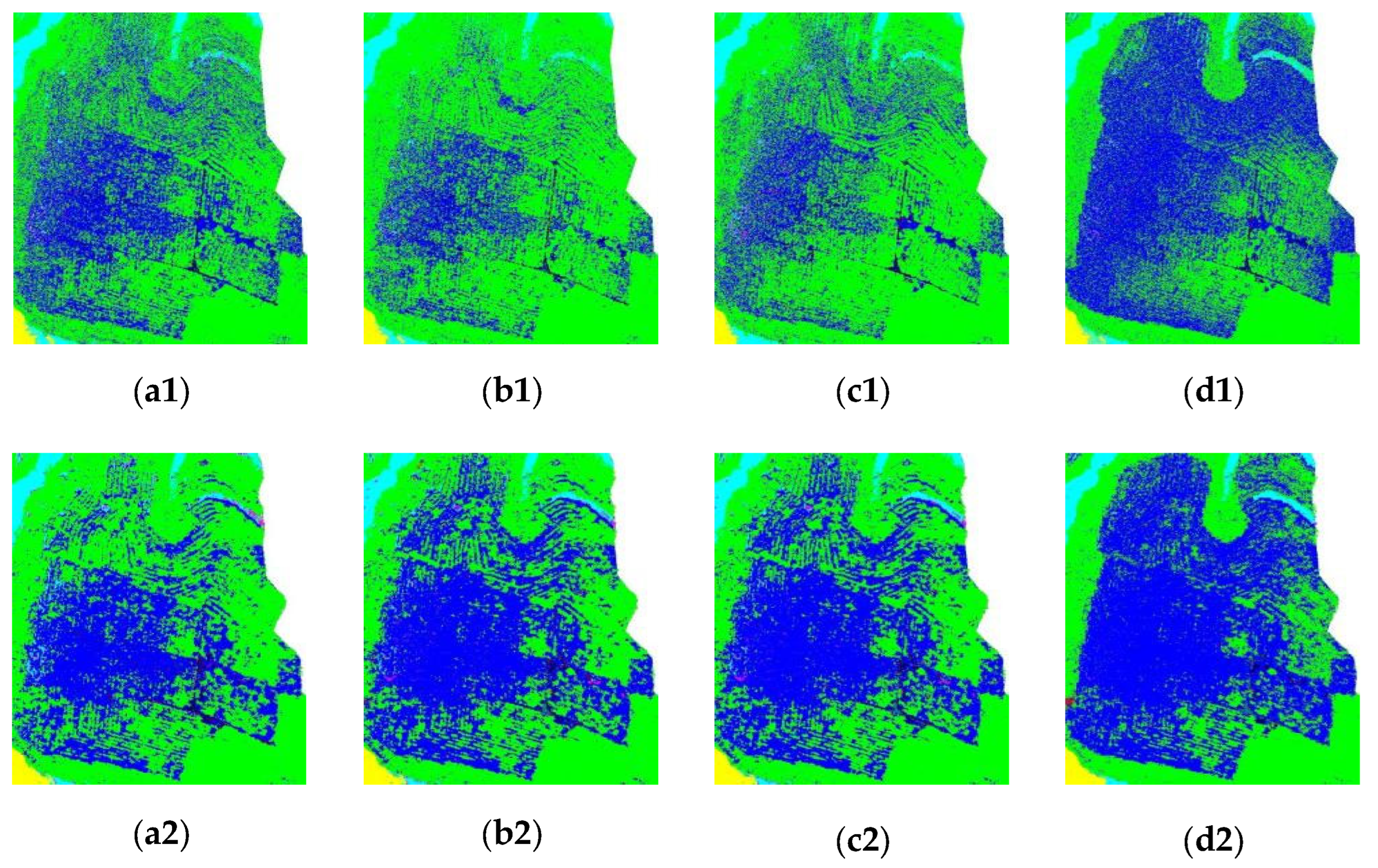

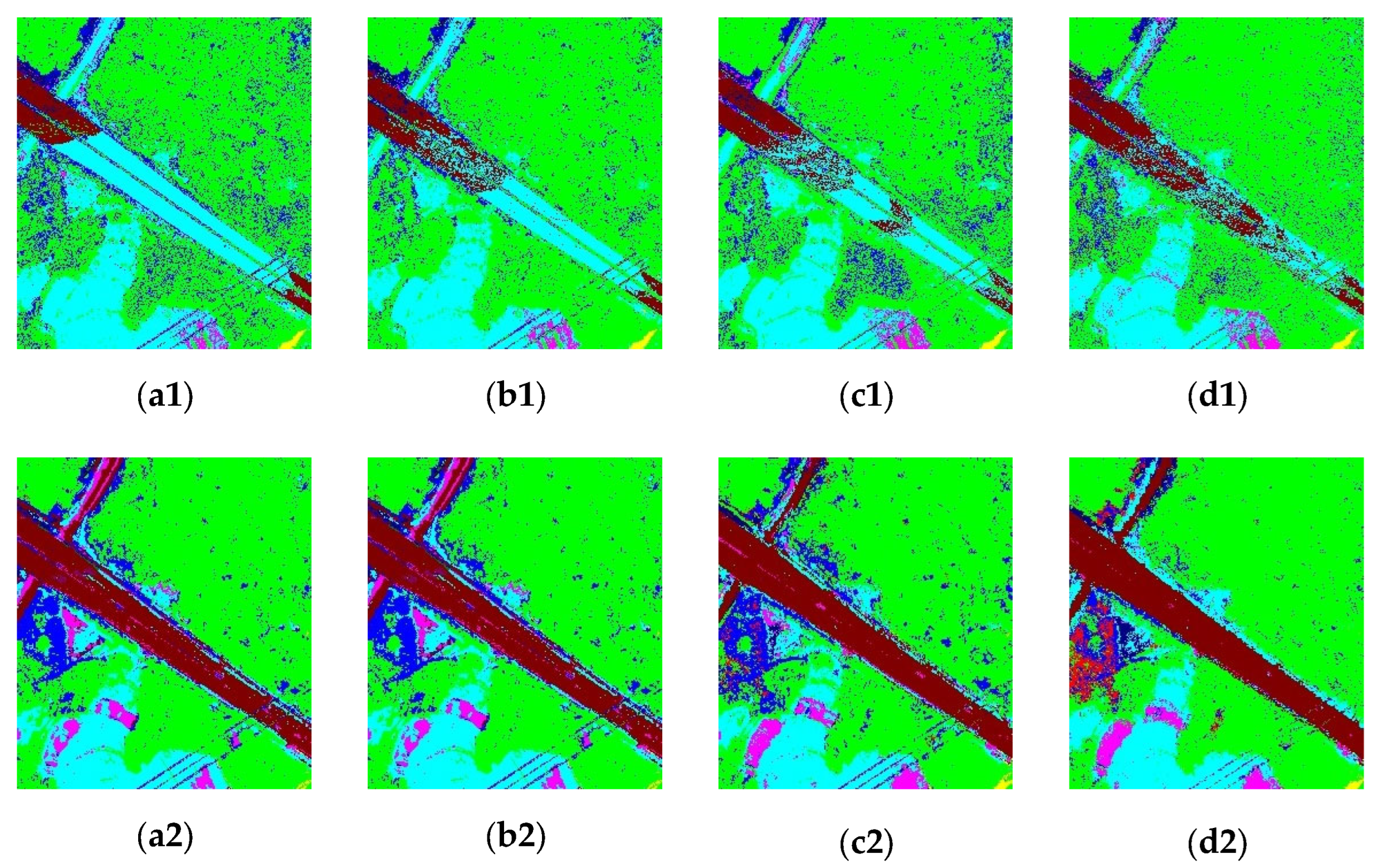

4.2. Classification of Solely LiDAR and the Combined LiDAR-SIF Data with One-Step Approach

4.3. Classification of the Combined LiDAR and SIF Data with Multi-Stage Classification Strategy

5. Discussion

5.1. Analysis of the SIF-Enhanced Classification Effectiveness Using LiDAR Data

5.2. Exploration of the Classification Results through Fusion of LiDAR and SIF Data Utilizing Multi-Stage Classification Strategy

5.3. Limitations and Expectations for the Current Research

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Classifier | Vegetation | Non-Vegetation | Overall Accuracy |
|---|---|---|---|
| Decision tree classifier | 96.45% | 84.77% | 96.41% |
| LightGBM classifier | 97.95% | 79.04% | 96.81% |
| XGBoost classifier | 97.97% | 78.70% | 96.79% |
| Random forest classifier | 98.92% | 83.69% | 97.71% |

| Classifier | LiDAR (One-Step Approach) | LiDAR and Multi-Spectral Image (One-Step Approach) | LiDAR and Multi-Spectral Image (Multi-Stage Strategy) | LiDAR and SIF (One-Step Approach) | LiDAR and SIF (Multi-Stage Strategy) |
|---|---|---|---|---|---|
| Decision tree classifier | 76.07% | 76.89% | 80.53% | 80.78% | 83.52% |
| LightGBM classifier | 76.36% | 82.00% | 82.49% | 83.38% | 86.25% |
| XGBoost classifier | 76.36% | 82.16% | 82.69% | 83.59% | 86.48% |
| Random forest classifier | 83.31% | 88.91% | 89.36% | 90.37% | 92.45% |
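As a reading aid for the overall-accuracy table above, the differences between configurations can be computed directly from the reported figures. The Python sketch below copies the five accuracy values per classifier from that table and computes two differences: LiDAR and SIF versus LiDAR alone under the one-step approach, and multi-stage versus one-step for the LiDAR and SIF data. The names `COLUMNS`, `ACCURACY`, and `gain` are illustrative choices, not part of the original study.

```python
# Overall accuracies (%) copied from the table above.
# Column keys are hypothetical short names for the five table columns:
#   lidar_one : LiDAR, one-step
#   ms_one    : LiDAR + multi-spectral image, one-step
#   ms_multi  : LiDAR + multi-spectral image, multi-stage
#   sif_one   : LiDAR + SIF, one-step
#   sif_multi : LiDAR + SIF, multi-stage
COLUMNS = ("lidar_one", "ms_one", "ms_multi", "sif_one", "sif_multi")

ACCURACY = {
    "Decision tree": (76.07, 76.89, 80.53, 80.78, 83.52),
    "LightGBM": (76.36, 82.00, 82.49, 83.38, 86.25),
    "XGBoost": (76.36, 82.16, 82.69, 83.59, 86.48),
    "Random forest": (83.31, 88.91, 89.36, 90.37, 92.45),
}


def gain(classifier, new, base):
    """Difference in overall accuracy (percentage points) between two columns."""
    row = dict(zip(COLUMNS, ACCURACY[classifier]))
    return round(row[new] - row[base], 2)


for name in ACCURACY:
    sif_gain = gain(name, "sif_one", "lidar_one")      # effect of adding SIF
    stage_gain = gain(name, "sif_multi", "sif_one")    # effect of multi-stage
    print(f"{name:14s} SIF: {sif_gain:+.2f} pp   multi-stage: {stage_gain:+.2f} pp")
```

These are differences between reported overall accuracies only; they say nothing about per-class behavior, which the confusion matrices show.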

| | Arbor Forest A | Arbor Forest B | Bamboo Forest | Grassland | Farmland | Water | Bareland | Impervious Land | Surfaced Road | Unsurfaced Road | Residence | Other Building |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Arbor forest A | 58.69% | 36.88% | 0.02% | 1.82% | 0.56% | 0.01% | 0.98% | 0.56% | 0.45% | 0.00% | 0.01% | 0.03% |
| Arbor forest B | 3.54% | 93.85% | 0.01% | 1.42% | 0.32% | 0.15% | 0.38% | 0.12% | 0.19% | 0.00% | 0.00% | 0.01% |
| Bamboo forest | 18.74% | 68.70% | 4.29% | 5.29% | 1.04% | 0.31% | 0.91% | 0.26% | 0.41% | 0.00% | 0.03% | 0.02% |
| Grassland | 4.50% | 13.75% | 0.02% | 77.36% | 1.60% | 0.17% | 0.67% | 0.91% | 0.95% | 0.00% | 0.01% | 0.05% |
| Farmland | 7.50% | 22.52% | 0.03% | 25.22% | 40.58% | 0.13% | 2.39% | 0.53% | 1.08% | 0.00% | 0.01% | 0.02% |
| Water | 2.06% | 21.44% | 0.03% | 10.58% | 1.20% | 63.84% | 0.38% | 0.07% | 0.38% | 0.00% | 0.00% | 0.00% |
| Bareland | 7.18% | 16.34% | 0.02% | 1.56% | 0.95% | 0.09% | 65.72% | 5.78% | 2.07% | 0.00% | 0.04% | 0.26% |
| Impervious land | 5.68% | 6.66% | 0.02% | 0.79% | 0.16% | 0.01% | 16.80% | 67.68% | 1.77% | 0.00% | 0.05% | 0.38% |
| Surfaced road | 9.52% | 29.13% | 0.03% | 8.32% | 2.07% | 0.03% | 6.06% | 4.48% | 40.24% | 0.00% | 0.06% | 0.05% |
| Unsurfaced road | 23.85% | 50.09% | 0.08% | 6.21% | 2.71% | 0.05% | 8.24% | 2.77% | 3.70% | 1.96% | 0.21% | 0.13% |
| Residence | 32.53% | 25.83% | 0.14% | 1.80% | 1.51% | 0.01% | 10.85% | 6.90% | 7.46% | 0.01% | 12.74% | 0.21% |
| Other building | 15.83% | 32.65% | 0.07% | 3.15% | 1.76% | 0.43% | 10.38% | 6.36% | 1.17% | 0.01% | 0.13% | 28.06% |

| | Arbor Forest A | Arbor Forest B | Bamboo Forest | Grassland | Farmland | Water | Bareland | Impervious Land | Surfaced Road | Unsurfaced Road | Residence | Other Building |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Arbor forest A | 56.48% | 38.96% | 0.58% | 1.77% | 0.63% | 0.00% | 0.66% | 0.10% | 0.73% | 0.01% | 0.07% | 0.01% |
| Arbor forest B | 6.85% | 90.16% | 0.37% | 1.40% | 0.40% | 0.10% | 0.37% | 0.07% | 0.23% | 0.00% | 0.03% | 0.02% |
| Bamboo forest | 30.38% | 45.97% | 14.12% | 5.06% | 2.12% | 0.17% | 0.91% | 0.09% | 0.75% | 0.01% | 0.38% | 0.02% |
| Grassland | 6.06% | 10.16% | 0.26% | 78.92% | 2.02% | 0.19% | 0.67% | 0.80% | 0.80% | 0.00% | 0.04% | 0.08% |
| Farmland | 7.68% | 11.66% | 0.47% | 15.59% | 62.39% | 0.12% | 0.79% | 0.38% | 0.74% | 0.01% | 0.14% | 0.02% |
| Water | 1.91% | 14.56% | 0.22% | 5.78% | 1.69% | 75.23% | 0.07% | 0.00% | 0.54% | 0.00% | 0.00% | 0.00% |
| Bareland | 5.80% | 8.31% | 0.37% | 1.51% | 1.06% | 0.08% | 76.50% | 3.69% | 2.23% | 0.01% | 0.21% | 0.22% |
| Impervious land | 2.87% | 2.97% | 0.04% | 0.55% | 0.57% | 0.00% | 13.57% | 76.15% | 1.90% | 0.01% | 0.73% | 0.64% |
| Surfaced road | 7.42% | 16.07% | 0.23% | 2.63% | 1.74% | 0.16% | 6.45% | 1.80% | 63.23% | 0.02% | 0.19% | 0.07% |
| Unsurfaced road | 34.64% | 28.60% | 1.06% | 3.95% | 5.46% | 0.00% | 11.90% | 1.55% | 6.40% | 3.86% | 2.13% | 0.45% |
| Residence | 22.67% | 10.54% | 0.66% | 0.97% | 3.46% | 0.00% | 7.08% | 5.06% | 17.04% | 0.03% | 32.29% | 0.20% |
| Other building | 12.80% | 17.09% | 0.32% | 2.14% | 2.59% | 0.03% | 12.38% | 9.60% | 3.97% | 0.16% | 1.41% | 37.50% |

| | Arbor Forest A | Arbor Forest B | Bamboo Forest | Grassland | Farmland | Water | Bareland | Impervious Land | Surfaced Road | Unsurfaced Road | Residence | Other Building |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Arbor forest A | 75.77% | 22.30% | 0.28% | 1.16% | 0.32% | 0.00% | 0.07% | 0.00% | 0.09% | 0.00% | 0.00% | 0.00% |
| Arbor forest B | 3.24% | 95.46% | 0.15% | 0.79% | 0.19% | 0.06% | 0.06% | 0.00% | 0.04% | 0.00% | 0.00% | 0.00% |
| Bamboo forest | 23.66% | 33.54% | 37.57% | 3.70% | 1.16% | 0.11% | 0.08% | 0.00% | 0.17% | 0.00% | 0.01% | 0.00% |
| Grassland | 5.01% | 6.76% | 0.16% | 86.97% | 0.85% | 0.11% | 0.03% | 0.01% | 0.10% | 0.00% | 0.00% | 0.00% |
| Farmland | 6.58% | 10.15% | 0.19% | 10.88% | 71.84% | 0.08% | 0.08% | 0.01% | 0.18% | 0.00% | 0.00% | 0.00% |
| Water | 1.90% | 10.54% | 0.15% | 4.05% | 0.90% | 82.35% | 0.04% | 0.00% | 0.06% | 0.00% | 0.00% | 0.00% |
| Bareland | 0.16% | 0.24% | 0.01% | 0.10% | 0.09% | 0.01% | 95.65% | 2.20% | 1.09% | 0.01% | 0.21% | 0.22% |
| Impervious land | 0.32% | 0.29% | 0.02% | 0.09% | 0.05% | 0.00% | 7.52% | 89.74% | 1.00% | 0.01% | 0.50% | 0.45% |
| Surfaced road | 0.63% | 0.65% | 0.01% | 0.40% | 0.26% | 0.01% | 4.04% | 1.31% | 92.43% | 0.02% | 0.18% | 0.07% |
| Unsurfaced road | 4.11% | 4.02% | 0.49% | 1.18% | 2.72% | 0.00% | 18.13% | 2.89% | 6.67% | 52.64% | 6.18% | 0.98% |
| Residence | 1.50% | 1.45% | 0.34% | 0.16% | 0.39% | 0.00% | 5.79% | 2.82% | 6.64% | 0.11% | 80.40% | 0.38% |
| Other building | 0.59% | 1.09% | 0.06% | 0.38% | 0.41% | 0.04% | 10.05% | 7.75% | 2.65% | 0.13% | 1.60% | 75.26% |
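Each row of the three confusion matrices above sums to roughly 100%, so a diagonal entry is the share of that row's class assigned to the same-named column. The Python sketch below is one way to compare the matrices class by class. It copies the diagonals in the order the tables appear; the keys `first`, `second`, and `third` are placeholders, since the tables carry no captions here. The unweighted mean of the diagonal is a summary chosen for illustration, not a metric reported by the authors.

```python
from statistics import fmean

CLASSES = (
    "Arbor forest A", "Arbor forest B", "Bamboo forest", "Grassland",
    "Farmland", "Water", "Bareland", "Impervious land",
    "Surfaced road", "Unsurfaced road", "Residence", "Other building",
)

# Diagonal entries (%) copied from the three matrices, in document order.
# The keys are placeholder labels, not names used by the authors.
DIAGONALS = {
    "first": (58.69, 93.85, 4.29, 77.36, 40.58, 63.84,
              65.72, 67.68, 40.24, 1.96, 12.74, 28.06),
    "second": (56.48, 90.16, 14.12, 78.92, 62.39, 75.23,
               76.50, 76.15, 63.23, 3.86, 32.29, 37.50),
    "third": (75.77, 95.46, 37.57, 86.97, 71.84, 82.35,
              95.65, 89.74, 92.43, 52.64, 80.40, 75.26),
}

# Unweighted mean of the diagonal for each matrix (illustrative summary).
mean_diag = {key: fmean(values) for key, values in DIAGONALS.items()}

# Per-class change in the diagonal entry from the first to the third matrix.
change = {
    cls: round(last - first, 2)
    for cls, first, last in zip(CLASSES, DIAGONALS["first"], DIAGONALS["third"])
}
best = max(change, key=change.get)

for key, value in mean_diag.items():
    print(f"{key:6s} mean diagonal: {value:.2f}%")
print(f"largest per-class change (first -> third): {best} ({change[best]:+.2f} pp)")
```

Because the mean is unweighted, rare classes such as unsurfaced road count as much as dominant ones, so it will differ from a sample-weighted overall accuracy.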

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, A.; Shi, S.; Man, W.; Qu, F. Enhanced Land-Cover Classification through a Multi-Stage Classification Strategy Integrating LiDAR and SIF Data. Remote Sens. 2024, 16, 1916. https://doi.org/10.3390/rs16111916
