Abstract

Urban planning has, in recent years, been significantly assisted by remote sensing data. The data and techniques used are very diverse and are available to government agencies as well as to private companies involved in planning urban and peri-urban areas. Synthetic aperture radar (SAR) data are particularly important because they provide information on the geometric and electrical characteristics of ground objects and, at the same time, are unaffected by sunlight (day–night) and cloud cover. SAR data are usually combined with optical data (fusion) to increase the reliability of the terrain information. Newer techniques use decorrelation and interferometry to record changes on the Earth’s surface. Texture-based features, such as those derived from Markov random fields and co-occurrence matrices, are employed, among others, for terrain classification, and target geometrical features are used for the same purpose. Among the innovative works presented in this manuscript are those dealing with tomographic SAR imaging for creating digital elevation models in urban areas. Tomographic techniques and digital elevation models can render three-dimensional representations that give a much better understanding of the urban region. These sources of information are integrated into geographic information systems, making them more intelligent. In this work, most of the existing techniques and methods, including the relevant classification methods, are reviewed, and selected papers are highlighted so that the reader-researcher has a complete picture of the use of SAR in urban planning.

1. Introduction

This introductory section gives an overview of the main topics covered in the paper. We begin with the features extracted from SAR (synthetic aperture radar) images for urban planning purposes. These features are chosen to capture the detailed structure of urban environments and thereby support decision-making in urban development and management.

Next, we review the classification and fusion algorithms used in SAR image analysis to delineate different categories within urban and suburban regions. These algorithms aim to identify and differentiate urban features such as buildings, roads, vegetation, and water bodies. Fusing information from multiple sources improves the overall classification accuracy and enables more precise urban mapping and analysis.

We then discuss the decorrelation and interferometric properties of SAR imagery. These properties play a key role in detecting and classifying urban areas affected by natural disasters such as floods or earthquakes, as well as in identifying structural damage to urban infrastructure. Using the signatures captured by SAR, analysts can rapidly assess the extent of urban damage and plan timely responses.

Finally, we examine the use of SAR data to create digital elevation models (DEMs) and tomographic images of urban areas. SAR-derived DEMs describe the topography of urban landscapes and support urban planning, land management, and environmental monitoring. SAR tomography, in addition, reconstructs three-dimensional images of urban structures, providing a level of detail useful for applications such as urban modeling and infrastructure assessment.

Overall, this section lays the groundwork for the more detailed treatment of the relationship between SAR imagery and urban environments in the sections that follow.

1.1. Feature Extraction

Various types of features extracted from SAR data have been used for urban region segmentation, categorization, and change detection. This subsection presents a collection of these feature types. In [1], a Markov random field is employed to model the urban road network as a feature in high-resolution SAR images. A Markov random field is a graphical model that represents the probabilistic relationships between variables in a system. It is characterized by conditional independence properties: given the variables to which it is directly connected (its neighbors), each variable is independent of all the others. Urban satellite SAR images are characterized by multiscale textural features, as introduced in [2]. Multiscale textural features describe the texture of an image or signal at multiple scales or levels of detail, that is, the patterns, structures, and variations in texture across different spatial resolutions. They are commonly used in image processing, computer vision, and pattern recognition to capture both local and global characteristics of textures. Co-occurrence matrices, and the textural parameters derived from them, allow accurate texture-based discrimination between land cover classes. The authors in [3] retrieve the extent of targets in range and azimuth from the variation of the amplitude of the received radar signal. A new approach is proposed in [4] to determine the scattering properties of non-reflection-symmetric structures by means of circular polarization and correlation coefficients. In [5], a method is proposed for analyzing the parameters needed to estimate urban extent, and its results are compared against reference global maps.
In [6], urban regions are automatically classified into change and no-change classes from multitemporal spaceborne SAR data using a generalized version of the Kittler–Illingworth minimum-error thresholding algorithm. In [7], the authors investigate a nonlocal speckle denoising algorithm that combines local structures with global averaging for change detection in multitemporal SAR images. Urban heat island intensity is quantified in [8] from SAR images using the local climate zone landscape classification system. The work in [9] assesses the utility of spaceborne SAR images for improving global urban mapping by means of a robust processing chain known as the KTH-Pavia Urban Extractor. In [10], a filter-type feature selection method based on mutual information criteria was introduced to select the most appropriate SAR decomposition parameters for classifying an urban site in Ottawa, Ontario. Mutual information measures the amount of information that one random variable contains about another, that is, the degree of dependency between the two variables. In [11], two methods were employed to evaluate urban expansion at various scales: the first used night-time images, and the second relied on SAR imagery. SAR image co-registration was achieved in [12] by a fast and robust optical-flow estimation algorithm. Urban land cover classification is carried out in [13] by comparing images from the Landsat OLI sensor with images from the Landsat Thematic Mapper. The authors in [14] used rotated dihedral corner reflectors to formulate a decomposition method for PolSAR data over urban areas. Target decomposition methods are also employed in [15] for urban area detection using PolSAR images.
In polarimetric radar, target decomposition methods express the measured scattering (or coherency/covariance) matrix of a resolution cell as a combination of simpler components, with the aim of isolating specific scattering mechanisms of interest. Common examples are the Freeman–Durden decomposition, a model-based method that separates surface, double-bounce, and volume scattering, and the Cloude–Pottier decomposition, which is based on the eigenvalues and eigenvectors of the coherency matrix. These methods extract meaningful information about the scattering properties of targets or terrain surfaces from complex radar observations. The study in [16] estimates future changes in land use/land cover due to rapid urbanization, which is known to significantly increase land surface temperatures in many regions of the world.
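The eigenvector-based idea behind the Cloude–Pottier decomposition can be illustrated with a short sketch. It assumes a multilooked 3 × 3 Hermitian coherency matrix T in the Pauli basis; the function name `cloude_pottier` is hypothetical and the code is not taken from any of the cited works. The normalized eigenvalues act as pseudo-probabilities, from which the entropy H (logarithm base 3), the mean alpha angle, and the anisotropy A follow.

```python
import numpy as np


def cloude_pottier(T):
    """Entropy H, mean alpha angle (degrees) and anisotropy A of a 3x3 coherency matrix T."""
    T = np.asarray(T, dtype=complex)
    eigvals, eigvecs = np.linalg.eigh(T)          # real, ascending eigenvalues for Hermitian T
    order = np.argsort(eigvals)[::-1]             # reorder to descending
    lam = np.clip(eigvals[order], 0.0, None)      # guard against tiny negative round-off
    vecs = eigvecs[:, order]
    p = lam / lam.sum()                           # pseudo-probabilities of the three mechanisms
    nz = p > 0
    H = float(-np.sum(p[nz] * np.log(p[nz])) / np.log(3))  # base-3 log so that 0 <= H <= 1
    # alpha_i = arccos(|first component of unit eigenvector i|)
    alpha_i = np.degrees(np.arccos(np.clip(np.abs(vecs[0, :]), 0.0, 1.0)))
    alpha = float(np.sum(p * alpha_i))            # probability-weighted mean alpha
    denom = lam[1] + lam[2]
    A = float((lam[1] - lam[2]) / denom) if denom > 0 else 0.0
    return H, alpha, A
```

A pure surface-like mechanism, T = diag(1, 0, 0), yields H = 0 and a mean alpha of 0°; a dihedral-like T = diag(0, 1, 0) yields a mean alpha of 90°; and a fully random target, T = I, yields H = 1.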

1.2. Classification Algorithms

Besides the features, the classification tools are equally important for classifying urban regions in SAR images. Classification approaches are reviewed in this subsection. In [17], the authors analyzed the potential of decision trees as a data mining procedure for remotely sensed data. Decision trees are a popular type of supervised machine learning algorithm used for both classification and regression. They recursively partition the input space into smaller regions, choosing at each node a split based on the value of an input feature. Thresholding of the log-ratio change indicator is automated and made more effective in [18] for multitemporal single-polarization SAR images. In [19], a classification approach based on a deep belief network model is proposed for detailed urban mapping using PolSAR data. A deep belief network is an artificial neural network composed of multiple layers of latent variables, typically arranged as a stack of restricted Boltzmann machines. It is trained layer by layer without supervision, which makes it suitable for feature learning, dimensionality reduction, and generative modeling, and it can then be fine-tuned with labeled data for classification. The study in [20] assesses the effectiveness of combining multispectral optical data and dual-polarization SAR for distinguishing urban impervious surfaces. An extended four-component model-based decomposition method is proposed in [21] for PolSAR data over urban areas by means of rotated dihedral corner reflectors. In [22], a new classification scheme is proposed that fully exploits SAR data for mapping urban impervious surfaces, instead of relying on methods restricted to optical images. The work in [23] studies how deep fully convolutional networks can be designed as classifiers that process multi-modal and multi-scale remote sensing data.
Fully convolutional networks contain no fully connected layers, so they produce a class label for every pixel and are designed for semantic segmentation tasks. A method employing deep convolutional neural networks is presented in [24] to overcome weaknesses in accuracy and efficiency in urban planning and land management applications. In [25], a novel joint deep learning framework that combines a multilayer perceptron and a convolutional neural network is proposed for land cover and land use classification. In [26], the authors review the major research papers on deep learning image classifiers and compare their performance with that of support vector machine (SVM) classifiers. SVMs are supervised learning models used for classification and regression. They are inherently two-class classifiers, but they can be extended to multi-class problems, for example, by combining several binary classifiers. The study in [27] demonstrates that urban scene segmentation can be carried out automatically and accurately from high-resolution SAR data with deep neural networks. The capability of optical and radar (SAR) data to accurately classify regions occupied by cities is tested in [28] by means of three supervised classification methods. Finally, because rapid land cover/land use change is a strong indicator of global environmental change, it is important to monitor land cover/land use through maps [29].
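The log-ratio change indicator [18] and minimum-error thresholding [6] mentioned in this section can be combined in a short sketch. It implements the original Gaussian Kittler–Illingworth criterion, J(T) = 1 + 2[P1 ln σ1 + P2 ln σ2] − 2[P1 ln P1 + P2 ln P2], not the generalized version used in [6]; the function names, the 256-bin histogram, and the small constant `eps` are assumptions for illustration.

```python
import numpy as np


def log_ratio(i1, i2, eps=1e-10):
    """Absolute log-ratio change indicator of two co-registered intensity images."""
    return np.abs(np.log((i2 + eps) / (i1 + eps)))


def kittler_illingworth(values, bins=256):
    """Minimum-error threshold (Gaussian Kittler-Illingworth criterion) of a 1-D sample."""
    hist, edges = np.histogram(np.ravel(values), bins=bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    p = hist / hist.sum()
    best_t, best_j = None, np.inf
    for k in range(1, bins):                  # class 1: bins [0, k), class 2: bins [k, bins)
        p1, p2 = p[:k].sum(), p[k:].sum()
        if p1 == 0 or p2 == 0:
            continue
        m1 = np.sum(p[:k] * centers[:k]) / p1
        m2 = np.sum(p[k:] * centers[k:]) / p2
        v1 = np.sum(p[:k] * (centers[:k] - m1) ** 2) / p1
        v2 = np.sum(p[k:] * (centers[k:] - m2) ** 2) / p2
        if v1 <= 0 or v2 <= 0:
            continue
        # J(T) = 1 + 2[P1 ln s1 + P2 ln s2] - 2[P1 ln P1 + P2 ln P2], with ln s = 0.5 ln v
        j = (1 + 2 * (p1 * 0.5 * np.log(v1) + p2 * 0.5 * np.log(v2))
             - 2 * (p1 * np.log(p1) + p2 * np.log(p2)))
        if j < best_j:
            best_t, best_j = edges[k], j
    return best_t
```

A binary change map would then be `log_ratio(i1, i2) > t`, where `t` is the threshold returned for that log-ratio image.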

1.3. Fusion of SAR and Multiband Images

Fusion techniques at various levels (raw data, features, and decisions) are very important for improving the information available for urban region characterization. Some recent fusion techniques that mainly combine optical and SAR images for urban planning are summarized as follows. A fusion method is proposed in [30] that co-registers SAR and optical images to provide an integrated map of an urban area; the method takes into account the fact that SAR point targets strongly characterize urban areas. In [31], InSAR and multispectral data are used to characterize and monitor urban areas, considering the underlying physics, geometry, and statistical behavior of the data. Change detection is addressed in [32] by fusing multiple SAR images through the extraction and comparison of pixel-based linear features. High-resolution interferometric SAR is shown in [33] to be an important basis for fusion with other remotely sensed data in urban analysis tasks. The work in [34] describes the capability of fusing optical and SAR images through an unsupervised procedure for operational rapid urban mapping. In the 2007 Data Fusion Contest organized by the Data Fusion Technical Committee of the IEEE Geoscience and Remote Sensing Society, SAR and optical data from satellite sensors were employed to extract land cover maps in and around urban areas by exploiting multitemporal and multisource coarse-resolution datasets [35]. The study presented in [36] had two main objectives: first, to compare the performance of various data fusion techniques in enhancing urban features, and second, to improve urban land cover classification with a refined Bayesian classification approach. The aim of the study in [37] was to combine QuickBird multispectral (MS) and RADARSAT SAR data to improve the mapping of urban land cover and land use.
The primary objective of [38] was to assess the integration of radar and optical data by examining how different aspects of the input data contribute to the performance of a random forest classifier in urban and peri-urban environments. The research in [39] developed a novel procedure that uses coarse-resolution SAR images both to determine the extent of built-up areas and to generate a map of the identified urban region. A novel and efficient approach was introduced in [40] to robustly extract urban surfaces by combining optical and electromagnetic (SAR) data through decision-level inference. In [41], the authors presented a convolutional neural network capable of identifying matching patches in complex urban scenes from high-resolution optical and SAR data. The research in [42] put forward a new methodology for estimating monthly changes in impervious surfaces by integrating Landsat and MODIS time series. The study in [43] primarily aims to determine whether winter wheat can be mapped during the growing season and whether SAR and optical data, used separately and in combination, improve classification accuracy in urban agricultural areas. In [44], the key objectives are to explore the potential utility and combined benefits of ESA Sentinel-1A C-band SAR and Sentinel-2A MSI data for classifying and mapping ecologically significant urban and peri-urban areas. Different integration levels between SAR and optical data are compared in [45] to provide a scientific reference for a variety of studies. A methodological framework is presented in [46] for combining optical and SAR data through pixel-level fusion to improve urban land cover classification.

1.4. Decorrelation for Disaster Detection

Rapid changes in urban regions due to disasters can be recorded and analyzed through the correlation and coherence properties of SAR pixels. Phase analysis, in the form of interferometry, is closely related to these properties. In signal processing, decorrelation refers to reducing the autocorrelation within a single signal or the correlation between two signals; minimum autocorrelation is achieved by shaping the power spectrum of the signal to resemble that of white noise (whitening). In SAR, the term usually denotes the loss of correlation between two acquisitions of the same scene, caused, for example, by physical changes on the ground between the acquisition times. Coherence, on the other hand, measures the consistency and phase alignment between waves; for two co-registered complex SAR images, it is the magnitude of their normalized complex cross-correlation, which ranges from 0 (complete decorrelation) to 1 (full correlation). Interferometry, in turn, exploits the interference of superimposed waves to extract information. It is used for precise measurements of very small displacements, for monitoring refractive index changes, and for characterizing surface irregularities. These concepts have been applied with SAR imagery as described next.
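The coherence defined in the paragraph above is usually estimated over a small local window as γ̂ = |Σ s1 s2*| / sqrt(Σ|s1|² Σ|s2|²). The following is a minimal sketch, assuming two co-registered complex single-look images whose topographic and flat-earth phase has already been removed, and a boxcar window; the function name and the 5 × 5 default are illustrative choices.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def coherence(s1, s2, win=5):
    """Sample coherence magnitude over win x win boxcar windows (valid region only)."""
    def box_sum(a):
        # Sum of each win x win neighbourhood; output shrinks by win - 1 per axis
        return sliding_window_view(a, (win, win)).sum(axis=(-2, -1))

    num = box_sum(s1 * np.conj(s2))                       # complex cross-correlation
    den = np.sqrt(box_sum(np.abs(s1) ** 2) * box_sum(np.abs(s2) ** 2))
    return np.abs(num) / np.maximum(den, 1e-12)           # values in [0, 1]
```

Two images that differ only by a constant phase give γ̂ = 1, while independent speckle gives values close to 0; for small windows the estimate is biased upward, which is one reason for choosing the window size with care.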

The detection of damaged areas around Kobe, Japan, caused by the 1995 earthquake was achieved by employing intensity correlation and coherence [47]. The correlation coefficients between pre-earthquake and post-earthquake data were evaluated as a normalized difference. In [48], multitemporal SAR images are used in an unsupervised change detection method to detect new urban areas, exploiting both the coherence and the intensity of SAR pixels. The study in [49] explores hidden signatures in the co-polarization coherence along the radar line of sight to investigate urban damage; the proposed damage index can differentiate between different levels of damage in urban patches. A high-resolution coherent change detection approach is introduced in [50] for identifying structural changes in urban areas by combining SAR imagery with GIS processing.

1.5. Interferometry for Change Detection in Urban Areas

The work in [51] demonstrates that, thanks to the short revisit time of the TerraSAR-X satellite (11 days), its data can be used to monitor individual buildings and infrastructure for structural stress and seasonal deformation. An optimal estimation method is presented in [52] for the phase histories of distributed scatterers in urban areas; the method offers elevation and deformation accuracy comparable to that of persistent scatterer interferometry. The research presented in [53] explores the role of interferometric coherence, in addition to SAR intensity, for mapping floods in both agricultural and urban environments; interferometric data are effective in identifying areas where water levels have changed. The authors in [54] investigate the use of repeat-pass interferometric SAR for land cover classification and find that interferometric coherence decreases as the time interval between the two acquisitions increases. An unsupervised method for flood detection that combines SAR intensity and interferometric coherence data is presented in [55].

1.6. Flood in Urban Areas

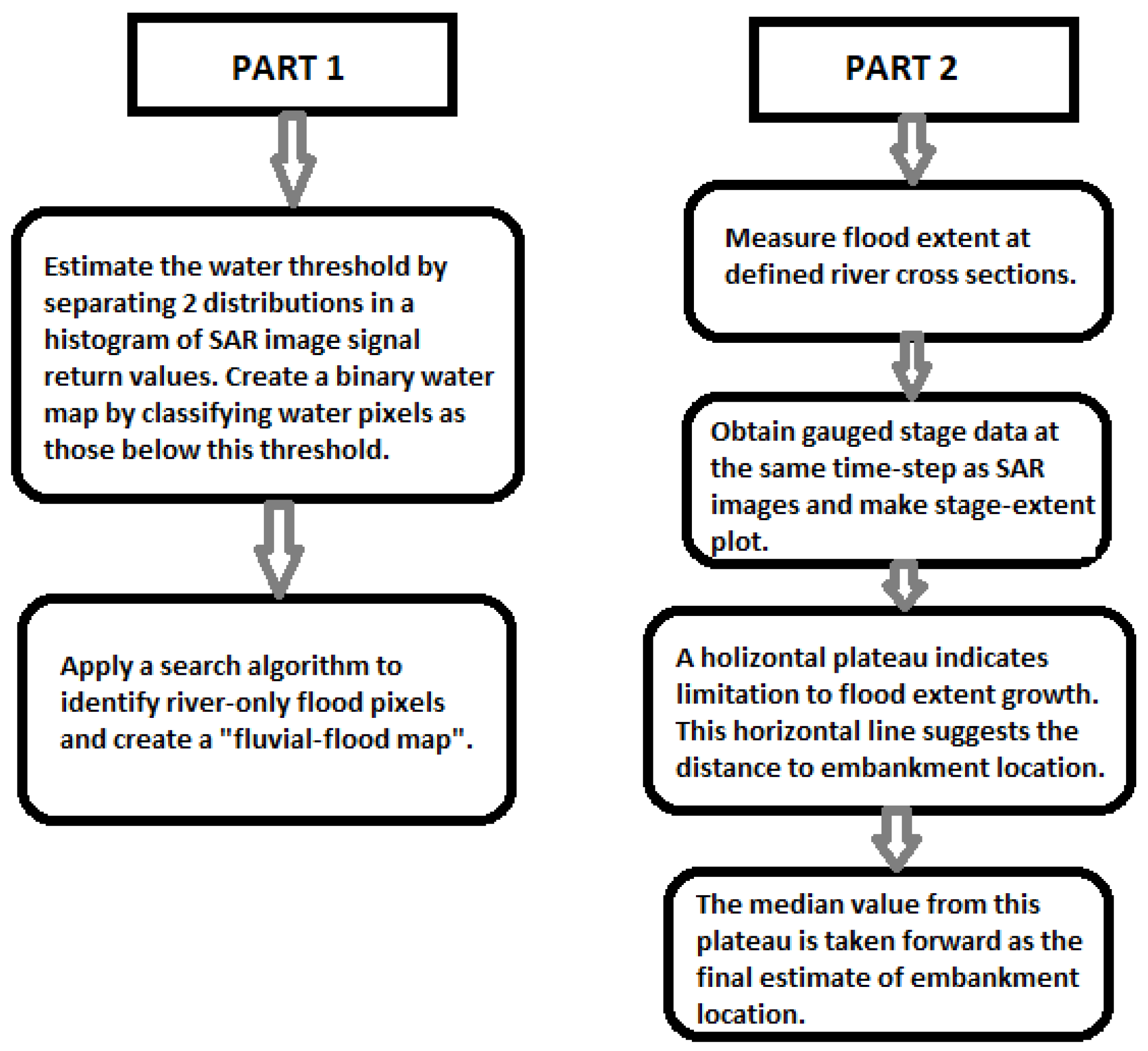

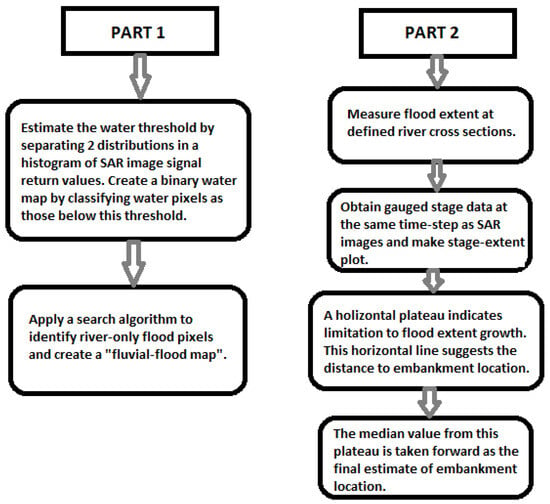

As explained in [56], flood dynamics in urban areas can be monitored using a unique dataset comprising eight spaceborne SAR images, aerial photographs, and constrained mathematical models. In [57], a complex urban area in Kuala Lumpur, Malaysia, was selected as a case study to simulate an extreme flooding event that occurred in 2003; three different digital terrain models (DTMs) were built and used as input to a 2D urban flood model. The method presented in [58] had two main objectives: (a) to establish the relationship between water level and flood extent (from SAR-derived flood images) for various river cross sections, and (b) to infer the location of embankments by identifying plateaus in these stage–extent plots. Airborne LiDAR data were used to assess the accuracy and reliability of the estimates. A neuro-fuzzy flood mapping approach based on texture-enhanced single SAR images is presented in [59]; in its fuzzy inference system, the flood and non-flood classes are represented by Gaussian membership curves.
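The idea of representing flood and non-flood classes by Gaussian curves can be illustrated with a minimal fuzzy classifier. This is not the neuro-fuzzy system of [59]: it has no learning stage and no texture input, and the class centers and widths (backscatter in dB) are hypothetical values chosen only for illustration. Each pixel is assigned to the class with the larger membership degree.

```python
import numpy as np


def gaussian_mf(x, mean, sigma):
    """Gaussian membership function with values in [0, 1]."""
    return np.exp(-0.5 * ((x - mean) / sigma) ** 2)


def fuzzy_flood_map(sigma0_db, flood=(-20.0, 3.0), dry=(-8.0, 4.0)):
    """Label pixels as flood (True) or non-flood (False) from Gaussian memberships.

    flood and dry are hypothetical (mean, std) pairs in dB, for illustration only.
    """
    x = np.asarray(sigma0_db, dtype=float)
    mu_flood = gaussian_mf(x, *flood)
    mu_dry = gaussian_mf(x, *dry)
    # Defuzzification: the class with the larger membership wins
    return mu_flood > mu_dry, mu_flood, mu_dry
```

In a trained neuro-fuzzy system, the means and widths of the membership curves would be learned from labeled data rather than fixed by hand.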

1.7. Digital Elevation Models and Tomographic Images of Urban Areas

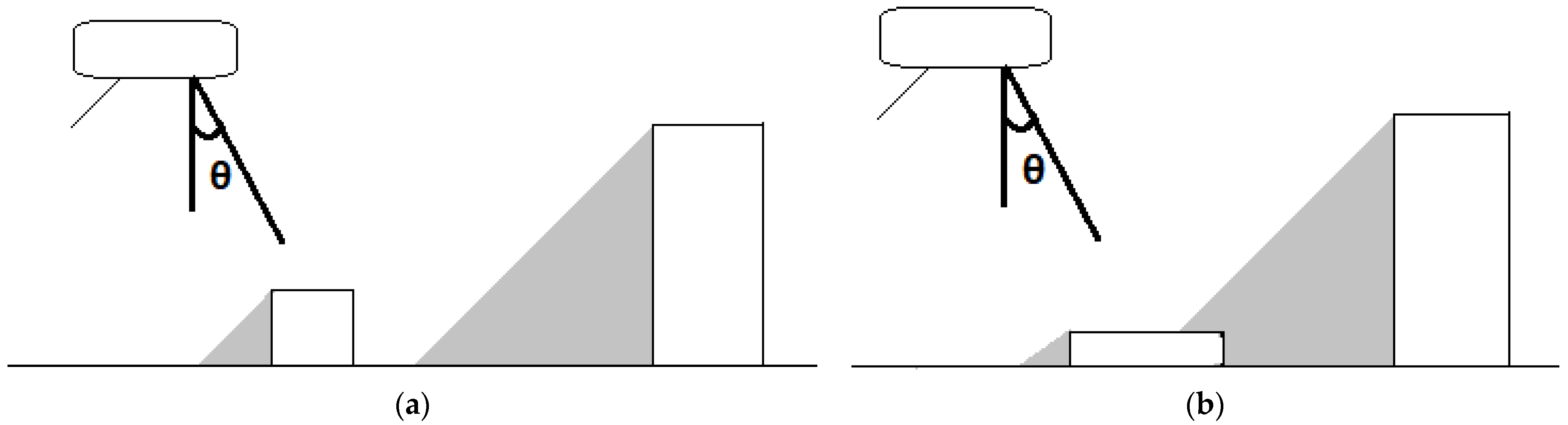

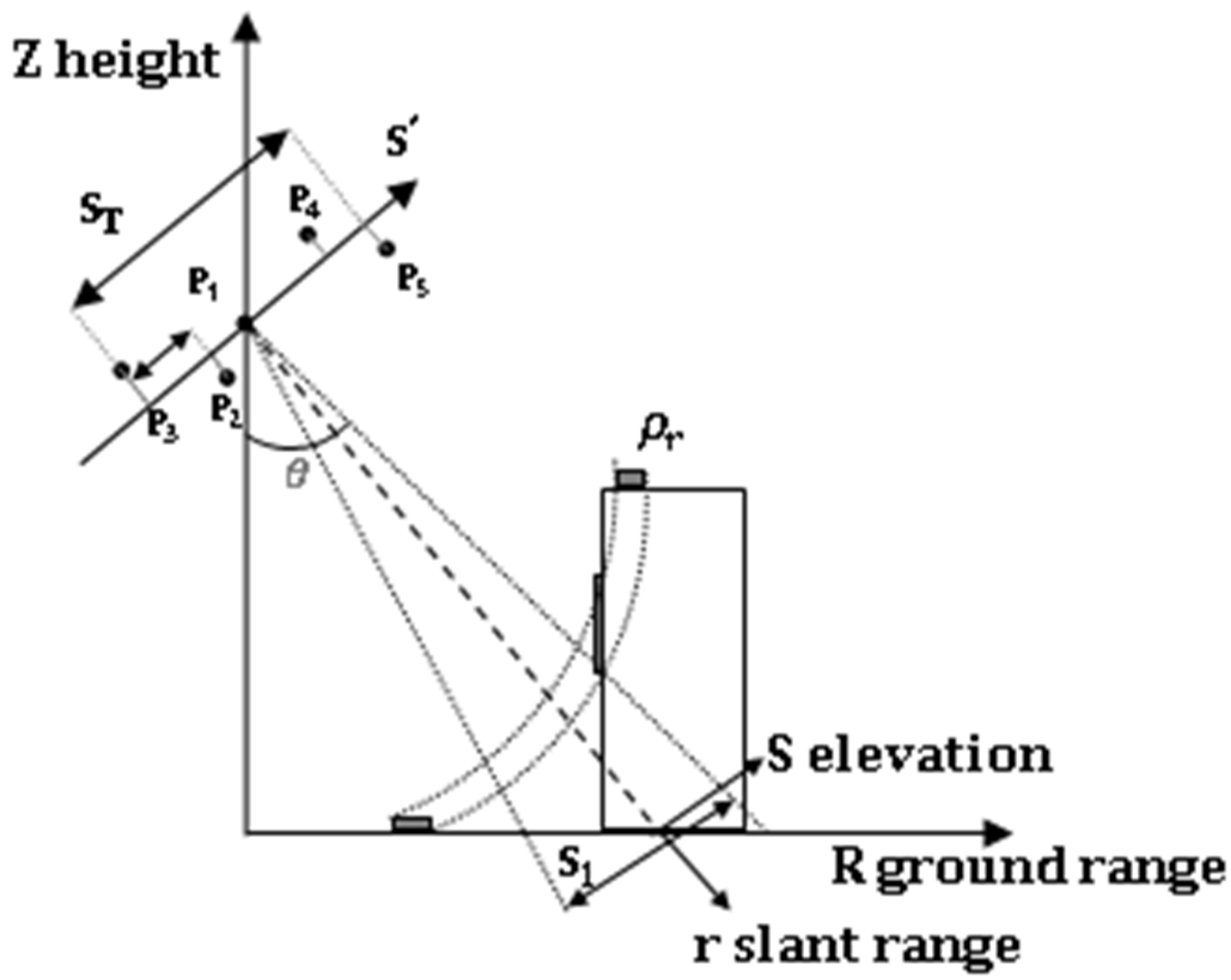

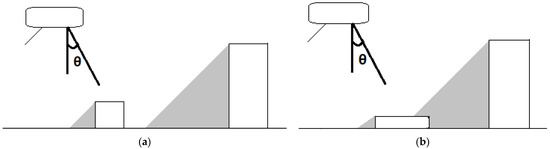

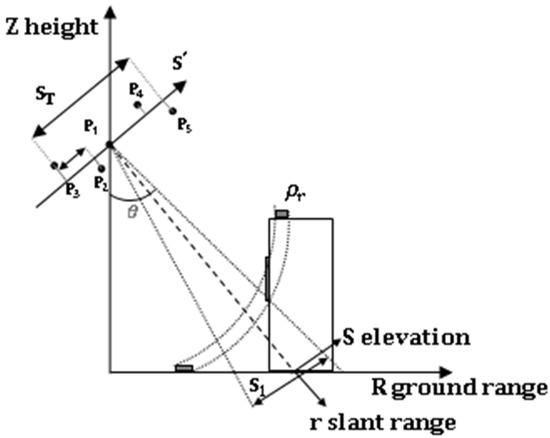

Because SAR images contain coherence and interferometric information, they are suitable for supporting the construction of DTMs and tomographic representations. One of the main advantages of high-resolution SAR images is that their information is independent of weather conditions. However, the inherent side-looking, slant-range geometry prevents part of the ground from being recorded, leaving large shadow areas. In [60], a review of TomoSAR techniques is provided, presenting relevant applications in urban areas, especially 3D scatterer localization, selection of reliable scatterers, and precise recovery of the underlying surface. A circular trajectory of a SAR sensor around a specific region makes it possible to construct a DEM of the region [61]. The work in [62] presents a validation of SAR tomography through the analysis of a single pixel containing two different scattering mechanisms. The authors in [63] explain the tomographic quality achievable with TerraSAR-X spotlight data in an urban environment and introduce a new Wiener-type regularization of the singular value decomposition technique, aimed at improving the quality of the tomographic reconstruction. The work in [64] proposes a complete processing chain that provides a simple 3D reconstruction of buildings in urban scenes from a pair of high-resolution optical and SAR images. The essential role of SAR in layover separation for urban infrastructure monitoring is highlighted in [65], with geometric and statistical analysis underscoring the significance of SAR data in addressing layover in urban environments. A new method for DEM extraction in urban areas from SAR imagery is introduced in [66]. As discussed in [67], retrieving the true 3D location and motion of scattering objects requires advanced interferometric methods such as persistent scatterer interferometry and SAR tomography.
The work in [68] aims at precise TomoSAR reconstruction by incorporating a priori knowledge, thus significantly reducing the number of images required for the estimation. The work in [69] investigates the application of a stereogrammetry pipeline to very-high-resolution SAR-optical image pairs. A novel method for integrating SAR and optical image types is presented in [70]; it involves automatic feature matching and combined bundle adjustment between the two image types, aiming to optimize the geometry and texture of 3D models generated from aerial oblique imagery. The authors in [71] introduce a planarity-constrained multi-view depth map reconstruction method that begins with image segmentation and feature matching and performs iterative optimization under planar-geometry and smoothness constraints. The capabilities of PolSAR tomography for investigating urban areas are analyzed in [72]. The feasibility of creating high-resolution maps of city regions with ground-based SAR processing, using millimeter-wave radars installed on conventional vehicles, is discussed in [73].
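The tomographic inversion discussed in [60,63] can be sketched with the discrete model g = A γ, where g holds one complex pixel value per acquisition, γ is the reflectivity profile along the elevation s (perpendicular to the line of sight), and A[n, m] = exp(−j2π ξn sm) with elevation frequency ξn = 2bn/(λr). In this sketch, Tikhonov filter factors σi²/(σi² + α) on the SVD stand in for the Wiener-type regularization of [63], which, unlike this simplified version, uses prior knowledge of the signal. The geometry values (3.1 cm wavelength, 600 km slant range, 16 evenly spaced baselines) and all function names are assumptions, chosen so that the elevation grid spans exactly one ambiguity interval.

```python
import numpy as np


def steering_matrix(baselines, elevations, wavelength, slant_range):
    """A[n, m] = exp(-j 2 pi xi_n s_m) with elevation frequency xi_n = 2 b_n / (lambda r)."""
    xi = 2.0 * np.asarray(baselines, dtype=float) / (wavelength * slant_range)
    return np.exp(-2j * np.pi * np.outer(xi, np.asarray(elevations, dtype=float)))


def tomo_invert(g, A, alpha):
    """Regularized SVD inversion of g = A @ gamma using Tikhonov filter factors."""
    U, s, Vh = np.linalg.svd(A, full_matrices=False)
    f = s ** 2 / (s ** 2 + alpha)          # damp components with small singular values
    coeff = f * (U.conj().T @ g) / s       # filtered spectral coefficients
    return Vh.conj().T @ coeff             # reflectivity profile along elevation


# Illustrative X-band-like geometry (assumed values)
wavelength, slant_range = 0.031, 6.0e5
baselines = 18.6 * np.arange(-8, 8)        # 16 acquisitions, metres
s_grid = np.arange(-250.0, 250.0, 1.0)     # elevation grid, metres
A = steering_matrix(baselines, s_grid, wavelength, slant_range)

# Two scatterers in layover at 0 m and 60 m elevation (synthetic)
g = steering_matrix(baselines, [0.0, 60.0], wavelength, slant_range).sum(axis=1)
profile = np.abs(tomo_invert(g, A, alpha=1.0))
```

With these values the Rayleigh elevation resolution is roughly 30 m, so the two layover scatterers appear as two separate peaks in `profile`. With fewer or irregular baselines, the small singular values become important and the choice of the regularization parameter matters much more.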

With the maturity of deep learning techniques, many data-driven PolSAR representation methods have been proposed, most of them based on convolutional neural networks (CNNs). Despite their achievements, CNN-based methods may be limited by the locality induced by their inductive biases. To address this problem, [74] introduced the transformer, the state-of-the-art architecture in natural language processing, into PolSAR image classification for the first time, proposing a vision transformer (ViT)-based representation learning framework that covers both supervised and unsupervised learning. Change detection of urban buildings is currently a hot topic in remote sensing research, as it plays a vital role in urban planning, disaster assessment, and surface dynamics monitoring. In [75], the authors propose a deep learning approach for building change detection, called CD-TransUNet, an end-to-end encoder–decoder hybrid model that combines UNet and the Transformer. Generative adversarial networks (GANs), which learn the distribution of image scenes by alternately training a generator and a discriminator, have also attracted attention. However, these networks are difficult to train and can be unstable. To overcome this issue and extract suitable features, the authors in [76] introduce two solutions. First, they add a residual structure and an extra classifier to the conventional conditional adversarial network to perform scene classification. Second, they use a gradient penalty to improve loss convergence during training. Finally, they test the network on GF-3 and Sentinel-1 SAR images. One remaining challenge in SAR-based flood mapping is the detection of floods in urban areas, particularly around buildings.
To address it, an unsupervised change detection method is proposed in [77] that decomposes SAR images into noise and ground-condition information and detects flood-induced changes through out-of-distribution detection. The method is based on generative adversarial learning, specifically the multimodal unsupervised image translation model. Reliable hydrological data are essential for accurate urban waterlogging simulation. To reduce the simulation error caused by insufficient basic data, a multi-strategy method for extracting hydrological features, including land use/land cover extraction and digital elevation model reconstruction, is proposed in [78].

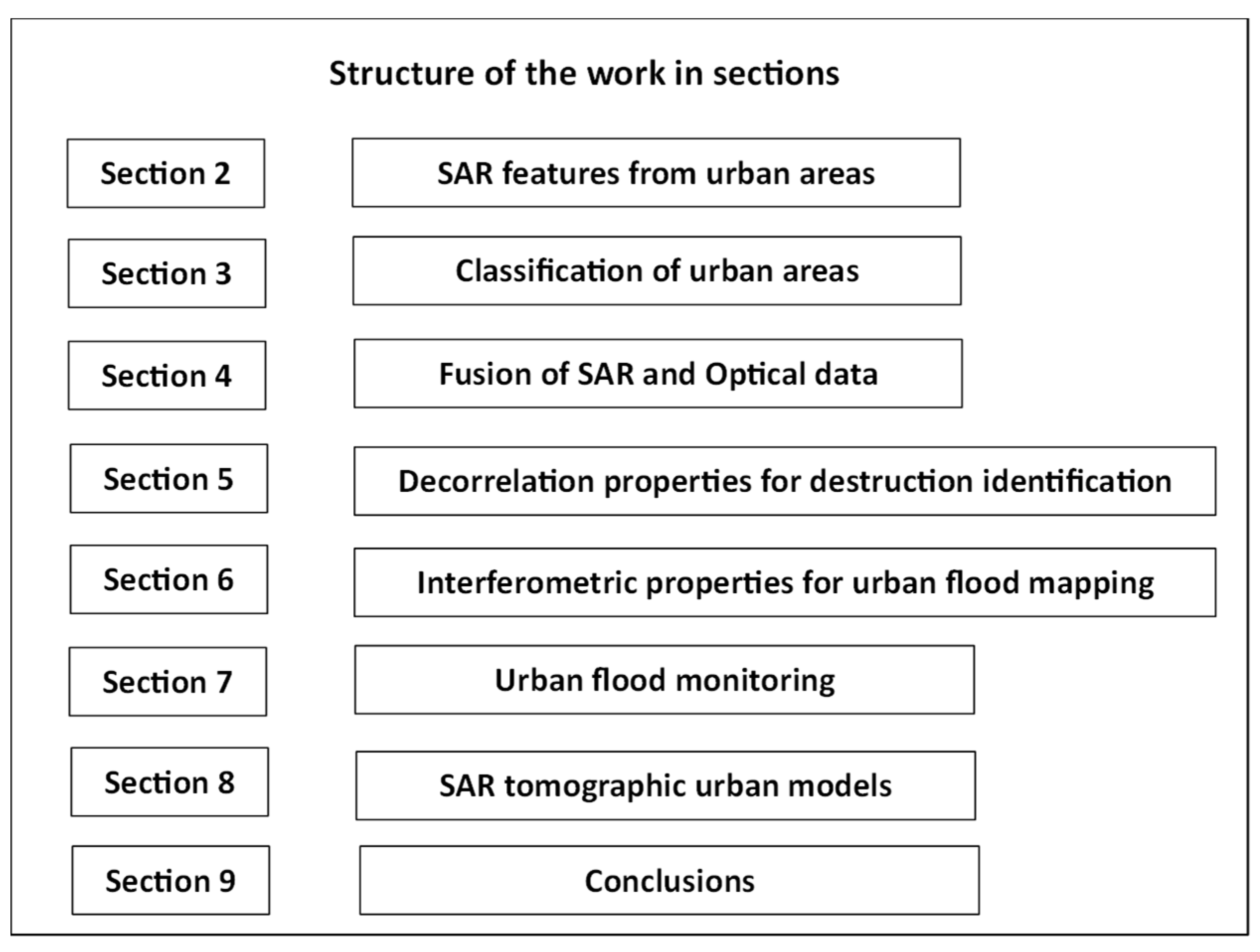

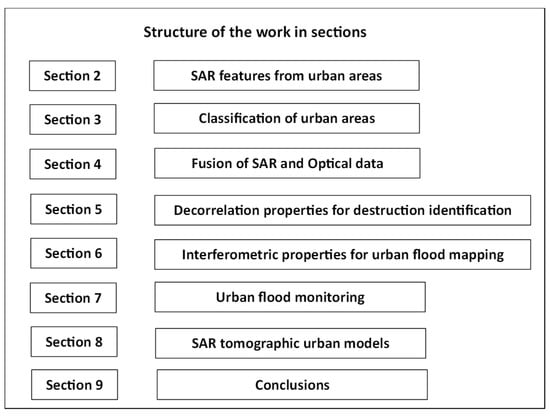

The remainder of the paper presents selected topics from the literature in more detail, organized into the sections shown in Figure 1.

Figure 1.

Block diagram of the organization of the paper in sections.

2. SAR Features from Urban Areas

In this section, two types of features from SAR images for urban region classification are highlighted.

2.1. Textural SAR Features [2]

The study in [2] employs multiscale textural features, expressed through co-occurrence matrices, to distinguish between various urban environments, following the approach outlined by Dell’Acqua and Gamba [79]. It uses multiple scales together with a training procedure that selects the most suitable ones for a specific classification task.

Textural features have shown significant promise in SAR images. In contrast to optical images, SAR images are affected by speckle noise, which makes individual pixel values unreliable. This makes textural operators, which emphasize the spatial patterns of backscatterers, particularly appealing for SAR. Supervised clustering of these features can identify regions where buildings and other human-made structures exhibit similar patterns. For example, residential areas with isolated scattering elements differ significantly from urban centers with densely packed scattering mechanisms, and from commercial districts where strong radar responses are caused by a small number of tall buildings.

Region segmentation is particularly difficult when the background is dominated by the stochastic nature of SAR backscatter. This stochastic behavior is the main reason why ordinary segmentation techniques perform poorly, especially in urban environments. Possible solutions for SAR urban segmentation come from higher-order statistics, the simplest of which are the second-order statistics captured by co-occurrence matrices.

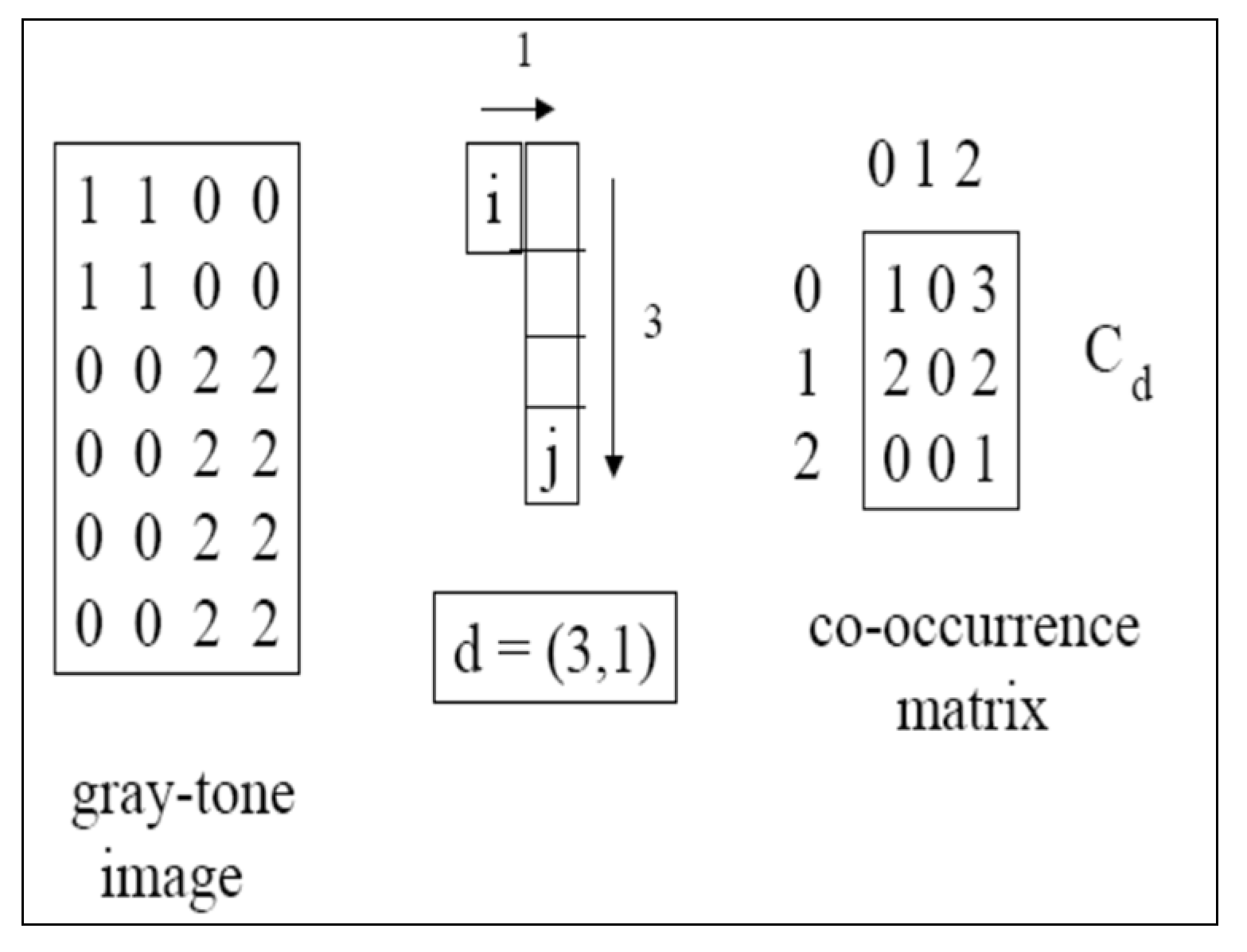

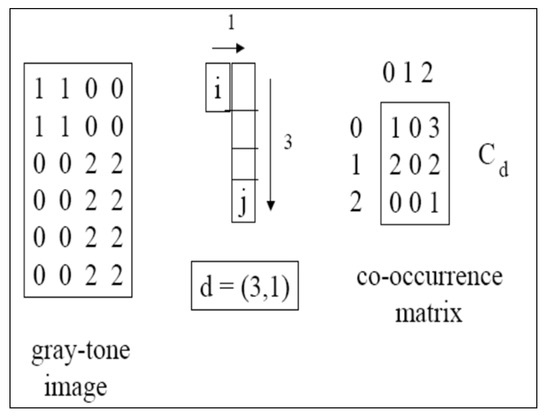

To calculate a co-occurrence matrix, one counts how frequently gray levels i and j occur together, inside a window, in pixel pairs with a specific relative position, as depicted in Figure 2. This position is defined by the horizontal and vertical displacements between the two pixels, which is the Cartesian form of the displacement. Alternatively, the displacement can be expressed in polar (vector) form, by its amplitude and the angle it forms with the horizontal direction. Finally, it is very important to choose the size of the square window and the number of quantization levels used for the pixel values.
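The counting rule for a single window can be written directly. This is a minimal sketch, assuming the pixel values are already quantized to integers 0, ..., levels − 1 and the displacement is given in Cartesian form (dx, dy) with image rows growing downward; the function names are hypothetical, and a helper converts the polar form (distance, angle) to the Cartesian offset.

```python
import numpy as np


def polar_to_offset(distance, angle_deg):
    """Convert a polar displacement (distance, angle from horizontal) to integer (dx, dy).

    Image rows grow downward, so a positive angle points up (negative dy).
    """
    t = np.radians(angle_deg)
    return int(round(distance * np.cos(t))), int(round(-distance * np.sin(t)))


def glcm(window, dx, dy, levels):
    """Co-occurrence counts C[i, j]: value i at (r, c) and value j at (r + dy, c + dx)."""
    w = np.asarray(window, dtype=int)
    rows, cols = w.shape
    C = np.zeros((levels, levels), dtype=int)
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:   # count only pairs inside the window
                C[w[r, c], w[r2, c2]] += 1
    return C
```

For the 4 × 4 image [[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]] and the horizontal displacement (1, 0), the function returns [[2, 2, 1, 0], [0, 2, 0, 0], [0, 0, 3, 1], [0, 0, 0, 1]]. A texture map is obtained by repeating this computation in a window (e.g., 21 × 21) centered on every pixel and deriving textural parameters from each matrix.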

Figure 2.

Evaluation of a co-occurrence matrix. On the left are the pixel values of the gray-tone image. The pixel pair is determined by a displacement vector. The co-occurrence matrix is given on the right of the figure.

Clearly, most of the above parameters are interconnected with scale. The only parameter independent of the others is the number of quantization levels, which corresponds to the number of bits used. The co-occurrence matrix is evaluated faster when fewer bits are used; conversely, reducing the number of bits results in a greater loss of information. In urban SAR images, the gray-level histogram typically exhibits long tails due to the presence of strong scatterers, which generate exceptionally strong spikes through dihedral backscattering mechanisms [80] and hence very high data values. Hence, the original floating-point amplitude SAR images can be converted into 256-level integers before texture evaluation. The most important parameters are the distance and the direction. Direction is particularly important when anisotropic textures must be characterized. The common approach is to combine different directions by averaging the textural parameters over them. In urban areas, the anisotropy observed in different parts of the scene tends to aggregate into a more general isotropic pattern in coarse-resolution satellite images. In such cases, distance becomes the crucial factor for distinguishing between textures that consist of identical elements with different spatial arrangements.
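Quantization of long-tailed amplitudes and averaging of a textural parameter over directions can be sketched as follows. Clipping at an upper percentile before the 256-level linear mapping is an assumption made here to tame the bright tail produced by strong scatterers, not a step stated in [2]; contrast is used as an example textural parameter, averaged over the four standard directions (0°, 45°, 90°, 135°). The function names are hypothetical.

```python
import numpy as np


def quantize(amplitude, levels=256, upper_percentile=99.0):
    """Map amplitudes linearly to integers 0..levels-1, clipping the bright tail (assumed)."""
    a = np.asarray(amplitude, dtype=float)
    lo, hi = a.min(), np.percentile(a, upper_percentile)
    scaled = (np.clip(a, lo, hi) - lo) / max(hi - lo, 1e-12)
    return np.minimum((scaled * levels).astype(int), levels - 1)


def cooccurrence(q, dy, dx, levels):
    """Co-occurrence counts of value pairs (q[r, c], q[r + dy, c + dx]) over the array."""
    rows, cols = q.shape
    r0, r1 = max(0, -dy), min(rows, rows - dy)
    c0, c1 = max(0, -dx), min(cols, cols - dx)
    ref = q[r0:r1, c0:c1]
    nbr = q[r0 + dy:r1 + dy, c0 + dx:c1 + dx]
    C = np.zeros((levels, levels))
    np.add.at(C, (ref.ravel(), nbr.ravel()), 1)      # accumulate repeated index pairs
    return C


def mean_contrast(q, distance, levels):
    """Contrast sum((i - j)^2 P[i, j]) averaged over 0, 45, 90 and 135 degrees."""
    offsets = [(0, distance), (-distance, distance), (-distance, 0), (-distance, -distance)]
    i, j = np.indices((levels, levels))
    values = []
    for dy, dx in offsets:
        C = cooccurrence(q, dy, dx, levels)
        P = C / C.sum()                              # normalize counts to probabilities
        values.append(np.sum((i - j) ** 2 * P))
    return float(np.mean(values))
```

Without clipping, a single very bright scatterer would compress almost all other pixels into the lowest few gray levels. A binary checkerboard has contrast 1 along 0° and 90° and 0 along the diagonals, so its direction-averaged contrast is 0.5.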

The final parameter to consider is the size of the co-occurrence window. It is widely acknowledged that a smaller window is preferable, primarily because of boundary effects. Nevertheless, precise texture evaluation requires a larger window, which is appropriate where the texture changes continuously and gradually and boundaries are only loosely defined. The window width is therefore the processing parameter that depends only on the spatial scale of the urban environment. In the example depicted in Figure 2, the width is 3 × 1.

The difficulty is that the width must be determined experimentally, and the value found may not be applicable to all images of the same region, such as the other images of a multitemporal sequence captured by the same sensor. While extreme window sizes are clearly unsuitable for classification, values close to the chosen 21 × 21 square window sometimes yield better results. Using a single scale for textural processing degrades the classification map near the boundaries between zones, because it disregards the evidence present at other scales. However, considering multiple scales requires analyzing a substantial number of bands. To mitigate this issue, a common approach is to apply feature space reduction procedures originally developed for hyperspectral data, such as effective feature extraction via spectral-spatial filter discrimination analysis [81]. These techniques reduce the dimensionality of the data while retaining the most important textural features at the various scales, which then serve as inputs to the classifier. Features extracted from texture as well as from multispectral information [81] enrich the information content processed by such dimensionality reduction approaches.
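As a simple stand-in for the hyperspectral feature reduction procedures cited in the paragraph above (this is principal component analysis, not the spectral-spatial filter discrimination analysis of [81]), a stack of textural features computed at several scales can be projected onto its leading principal components. The feature layout (one row per pixel, one column per scale-specific textural band) and the function name are assumptions.

```python
import numpy as np


def pca_reduce(features, n_components):
    """Project an (n_pixels, n_features) stack onto its leading principal components.

    Returns the projected data and the fraction of variance explained by each kept component.
    """
    X = np.asarray(features, dtype=float)
    Xc = X - X.mean(axis=0)                       # center each textural band
    # SVD of the centered data: rows of Vt are principal directions, ordered by variance
    U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    return Xc @ Vt[:n_components].T, explained[:n_components]
```

Because textural bands computed at neighboring scales are usually strongly correlated, a few components often retain most of the variance; unlike supervised discriminant methods, however, PCA does not use the class labels when choosing the projection.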

In the experiments, test SAR data from the urban region of Pavia, Northern Italy, were used. The images were acquired by the ASAR sensor onboard ENVISAT, as well as by ERS-1/2 and RADARSAT-1. To support the evaluation, a ground truth map was created by visual interpretation of a fine-resolution (1 m) panchromatic IKONOS-2 image of the same area, acquired in July 2001. When single images were classified individually, the classification accuracy was around 55%. Combining the data from the ENVISAT and ERS sensors, however, proved highly successful, yielding an overall accuracy of 71.5%. This joint use of ENVISAT and ERS data outperformed both the use of ERS data alone, with an accuracy of approximately 62%, and the classification based solely on the two ASAR images, with an accuracy of 65.4%.

In conclusion, the study presented in [2] demonstrated that the characterization of an urban environment can be significantly enhanced by employing multiscale textural features extracted from satellite SAR data.

2.2. SAR Target Decomposition Features for Urban Areas [10]

Target decomposition parameters are a highly effective means of extracting both the geometrical and electrical properties of a target. These parameters are not equally important, and the main problems a researcher has to solve are:

- To determine as many of these parameters as possible, since they are derived from various methods.

- To assess each of the derived parameters in terms of its information content and to use the most important ones for urban classification.

Both issues are addressed in [10].

Cloude–Pottier's incoherent target decomposition [82] has become the most widely adopted method for decomposing scattering from natural extended targets. Unlike the Cloude–Pottier decomposition, which characterizes the target scattering type with a real parameter, Cloude's $\alpha$ angle, the Touzi decomposition proposed in [83] uses both the magnitude and the phase of the complex scattering type $\alpha_s$. This provides an unambiguous characterization of target scattering. To preserve all available information, no averaging of scattering parameters is performed; instead, target scattering is characterized by a thorough analysis of the parameters of each of the three eigenvectors, resulting in a total of 12 roll-invariant parameters. However, a smaller number of these invariant parameters is sufficient for scatterer classification, so a technique is needed to choose the most meaningful parameters for efficient classification. This optimal subset of incoherent target decomposition (ICTD) parameters is intended to ensure correct classification without loss of information. The selection process is based on mutual information theory, which is detailed extensively in [10]. That paper briefly introduces the Touzi decomposition method, followed by an exploration of Shannon's mutual information theory, which is then applied to rank and select an optimal parameter subset for classifying urban regions. Two methods were introduced for selecting the parameter subset: the maximum mutual information (MIM) approach and the maximum relevance and minimum redundancy (MRMR) approach. The two approaches are compared in terms of their effectiveness in feature selection and region classification. The optimal subsets obtained through MIM and MRMR are subsequently used for urban site classification with support vector machines (SVM).
The study uses polarimetric Convair 580 SAR data collected over an urban site in Ottawa, Ontario, to evaluate and assess the results of parameter selection and region classification.

Touzi decomposition, as described in [83], relies on the eigendecomposition of the coherency matrix $[T]$. For a reciprocal target, the eigendecomposition of this Hermitian positive semidefinite matrix allows it to be interpreted as the incoherent sum of up to three coherency matrices $[T_i]$. These three matrices represent three distinct single scatterers, each weighted by its positive real eigenvalue $\lambda_i$:

$$[T] = \sum_{i=1}^{3} \lambda_i [T_i], \qquad [T_i] = \mathbf{e}_i \mathbf{e}_i^{\dagger}$$

Each eigenvector $\mathbf{e}_i$, which corresponds to a single scattering mechanism, is represented by four roll-invariant scattering parameters:

$$\mathbf{e}_i \Rightarrow \left(\alpha_{s_i},\ \Phi_{\alpha_{s_i}},\ \tau_{m_i},\ \lambda_i\right), \qquad i = 1, 2, 3$$

In this context, each individual coherent scatterer is defined by its scattering type, described in polar coordinates by $\alpha_s$ and $\Phi_{\alpha_s}$, together with the parameter $\tau_m$ representing its degree of symmetry. The normalized eigenvalue $\lambda_i$ indicates the proportion of energy carried by the scattering mechanism represented by the corresponding eigenvector $\mathbf{e}_i$.

The target scattering properties are uniquely characterized by analyzing each of the three eigenvector parameters obtained by the Touzi decomposition.
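The eigendecomposition described above can be sketched in a few lines of NumPy. The example builds a coherency matrix from a handful of hypothetical scattering samples using the standard Pauli scattering vector, extracts and normalizes the eigenvalues, and checks that the matrix is recovered as the incoherent sum of three rank-one coherency matrices. It deliberately stops there: deriving Touzi's roll-invariant parameters ($\alpha_s$, $\Phi_{\alpha_s}$, $\tau_m$) from each eigenvector involves further steps described in [83] that are not reproduced here, and the sample values and function names are assumptions for illustration.

```python
import numpy as np


def pauli_vector(s_hh: complex, s_hv: complex, s_vv: complex) -> np.ndarray:
    """Pauli scattering vector k = [HH+VV, HH-VV, 2HV] / sqrt(2) (reciprocal case)."""
    return np.array([s_hh + s_vv, s_hh - s_vv, 2 * s_hv]) / np.sqrt(2)


def coherency_matrix(samples) -> np.ndarray:
    """Sample average of k k^H over a list of (s_hh, s_hv, s_vv) tuples."""
    ks = [pauli_vector(*s) for s in samples]
    return sum(np.outer(k, k.conj()) for k in ks) / len(ks)


def eigen_decompose(T: np.ndarray):
    """Eigenvalues (descending), their normalized versions, and eigenvectors (columns)."""
    # eigh suits T because it is Hermitian positive semidefinite.
    eigvals, eigvecs = np.linalg.eigh(T)
    order = np.argsort(eigvals)[::-1]
    eigvals = np.clip(eigvals[order], 0.0, None)  # drop tiny negative round-off
    eigvecs = eigvecs[:, order]
    return eigvals, eigvals / eigvals.sum(), eigvecs


# Hypothetical samples mixing surface-like, dihedral-like and volume-like returns.
samples = [(1.0, 0.05, 0.9), (0.8, 0.1j, -0.7), (0.3, 0.4, 0.2 + 0.1j), (1.1, 0.0, 1.0)]
T = coherency_matrix(samples)
lam, lam_norm, E = eigen_decompose(T)

# Incoherent sum of three rank-one matrices T_i = e_i e_i^H, each weighted by lambda_i.
T_rebuilt = sum(lam[i] * np.outer(E[:, i], E[:, i].conj()) for i in range(3))
print(lam_norm.round(3), np.allclose(T, T_rebuilt))
```

The normalized eigenvalues play the role of the energy proportions discussed above; the same eigendecomposition also underlies the Cloude–Pottier decomposition.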

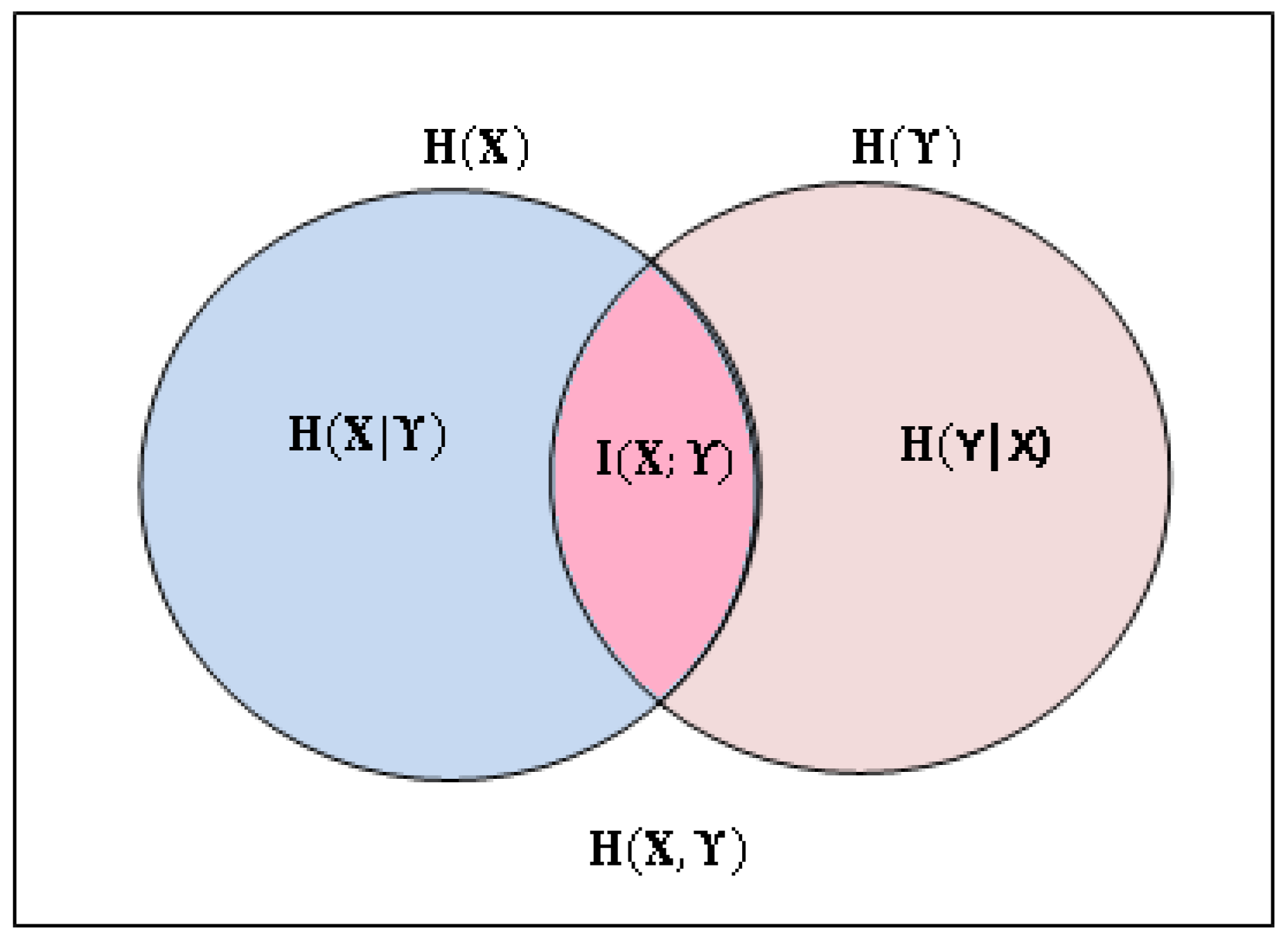

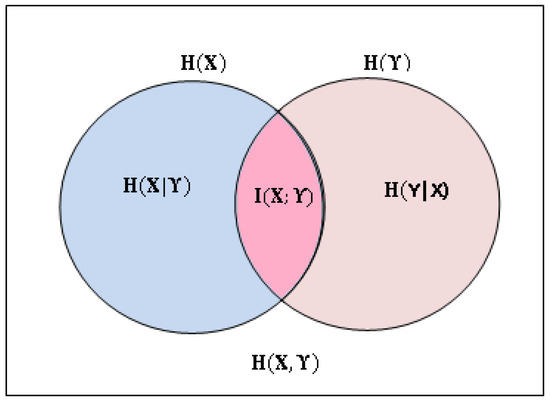

As described by Shannon [84], entropy, denoted $H(X)$, is the fundamental measure of information, quantifying the uncertainty associated with a random variable $X$. Mutual information, denoted $I(X;Y)$, measures the statistical dependence between two random variables $X$ and $Y$, capturing nonlinear as well as linear relationships. When selecting among $n$ features, the goal is to maximize the joint mutual information $I(X_1, \ldots, X_n; Y)$ between the features and the target class $Y$. Estimating the high-dimensional joint distribution of $(X_1, \ldots, X_n)$ is difficult, so, especially when feature selection relies exclusively on mutual information criteria, each feature $X_i$ is often assumed to be independent of all other features, and the features are evaluated individually and ordered by decreasing importance. The relationship between the entropies $H(X)$ and $H(Y)$ and the mutual information $I(X;Y)$ of two random variables $X$ and $Y$ is illustrated by the Venn diagram in Figure 3.

Figure 3.

Venn diagram illustrating the relationship between the entropies $H(X)$ and $H(Y)$ and the mutual information $I(X;Y)$ of two random variables $X$ and $Y$.

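The entropy $H(X)$, joint entropy $H(X,Y)$, conditional entropy $H(X \mid Y)$, and mutual information $I(X;Y)$ of two random variables $X$ and $Y$, with probability density functions $p(x)$ and $p(y)$ and joint probability density function $p(x,y)$, are defined in Equation (3):

$$\begin{aligned}
H(X) &= -\int p(x)\log p(x)\,dx \\
H(X,Y) &= -\iint p(x,y)\log p(x,y)\,dx\,dy \\
H(X \mid Y) &= -\iint p(x,y)\log p(x \mid y)\,dx\,dy \\
I(X;Y) &= \iint p(x,y)\log \frac{p(x,y)}{p(x)\,p(y)}\,dx\,dy
\end{aligned} \tag{3}$$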

Mutual information quantifies the amount of information shared by two random variables $X$ and $Y$. It is nonnegative, $I(X;Y) \ge 0$, and symmetric, $I(X;Y) = I(Y;X)$. Mutual information plays a significant role in machine learning and feature selection. If $X$ represents the set of input features and $Y$ the target class, the error in predicting $Y$ from $X$ is bounded from below by Fano's inequality [85]. For any predictor $\hat{Y} = f(X)$ applied to the input feature set $X$, the prediction error satisfies

$$P(\hat{Y} \neq Y) \ge \frac{H(Y \mid X) - 1}{\log |Y|} = \frac{H(Y) - I(X;Y) - 1}{\log |Y|} \tag{4}$$

where $|Y|$ is the number of classes and logarithms are taken to base 2. The error of the optimal (Bayes) predictor is bounded from above by the Hellman–Raviv inequality [86]:

$$P(\hat{Y} \neq Y) \le \frac{1}{2} H(Y \mid X) = \frac{1}{2}\left(H(Y) - I(X;Y)\right) \tag{5}$$

According to (4) and (5), both bounds on the prediction error decrease as the mutual information $I(X;Y)$ increases. This is the primary motivation for using mutual information criteria for feature selection, and it becomes even clearer when considering the Venn diagram in Figure 3.
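The information-theoretic quantities and error bounds discussed above can be computed directly for discrete variables. The pure-Python sketch below uses a hypothetical 2 × 2 joint distribution of one feature and a two-class target, computes the entropies and mutual information in bits (evaluating the mutual information in two ways to show symmetry), and checks that the error of the optimal predictor lies between the Fano lower bound and the Hellman–Raviv upper bound. The joint distribution and function names are assumptions for illustration.

```python
from math import log2


def entropy(probs):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(p * log2(p) for p in probs if p > 0)


def info_quantities(joint):
    """joint[x][y] = p(x, y). Returns H(Y), H(Y|X), I(X;Y) and I(Y;X) in bits."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    h_x, h_y = entropy(px), entropy(py)
    h_xy = entropy([p for row in joint for p in row])
    h_y_given_x = h_xy - h_x
    mi_xy = h_x + h_y - h_xy
    # Direct definition of I(Y;X), summed over y first, to illustrate symmetry.
    mi_yx = sum(
        joint[i][j] * log2(joint[i][j] / (px[i] * py[j]))
        for j in range(len(py)) for i in range(len(px)) if joint[i][j] > 0
    )
    return h_y, h_y_given_x, mi_xy, mi_yx


def error_bounds(h_y_given_x, n_classes):
    """Fano lower bound (clipped at 0 when trivial) and Hellman-Raviv upper bound."""
    fano = max(0.0, (h_y_given_x - 1.0) / log2(n_classes))
    hellman_raviv = 0.5 * h_y_given_x
    return fano, hellman_raviv


def bayes_error(joint):
    """Error of the optimal (MAP) predictor: 1 - sum over x of max over y of p(x, y)."""
    return 1.0 - sum(max(row) for row in joint)


# Hypothetical feature X (rows) versus two-class target Y (columns).
joint = [[0.4, 0.1],
         [0.1, 0.4]]
h_y, h_cond, mi, mi_sym = info_quantities(joint)
lower, upper = error_bounds(h_cond, n_classes=2)
print(round(mi, 4), round(lower, 3), round(bayes_error(joint), 3), round(upper, 4))
```

Making the feature more informative (for example, moving mass from the off-diagonal cells to the diagonal) raises $I(X;Y)$ and tightens both bounds, which is the behavior the two inequalities describe.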

Ideally, the selected features should be individually relevant and exhibit minimal redundancy among themselves. As mentioned previously, when selecting the required features from the complete feature set, the mutual information $I(X_k;Y)$ of each chosen feature $X_k$ with the target is maximized. The most straightforward method (MIM) evaluates the criterion in Equation (6) for every feature and then ranks the features in descending order of relevance to the target class $Y$:

$$J_{\mathrm{MIM}}(X_k) = I(X_k;Y) \tag{6}$$

However, the MIM approach provides no insight into the mutual relationships between the parameters. In contrast, the MRMR criterion selects each new feature based on two key considerations: its individual mutual information with the target should be as high as possible, and its redundancy, that is, its mutual information with the already selected features, should be as low as possible. Denoting the set of preselected features by $S$ (so that $|S|$ is their number) and a candidate feature by $X_k$, the MRMR criterion is expressed as:

$$J_{\mathrm{MRMR}}(X_k) = I(X_k;Y) - \frac{1}{|S|}\sum_{X_j \in S} I(X_k;X_j) \tag{7}$$

The features are assessed based on (6) and (7), and the urban area is classified using the ranked set of these features. The ranked parameters are thus examined for their relevance to the target classes using the MIM criterion, and their redundancy with the preselected parameters is evaluated using the MRMR criterion. The target classes considered are $Y$ = {forest, bare ground, small housing, water}. This process identifies which features are the most informative and least redundant for distinguishing between these classes in the urban area.
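The MIM and MRMR ranking criteria described above can be sketched for discrete (for example, quantized) features as follows. The sketch uses plug-in, count-based estimates of mutual information and the common "relevance minus mean redundancy" form of MRMR; the toy dataset is hypothetical and built so that feature B duplicates feature A, while feature C is less relevant but carries information that A does not. The study in [10] works with continuous ICTD parameters and may estimate mutual information differently.

```python
from collections import Counter
from math import log2


def mutual_information(a, b):
    """Plug-in (count-based) estimate of I(A;B) in bits for two label sequences."""
    n = len(a)
    pa, pb, pab = Counter(a), Counter(b), Counter(zip(a, b))
    return sum(
        (c / n) * log2((c / n) / ((pa[x] / n) * (pb[y] / n)))
        for (x, y), c in pab.items()
    )


def rank_mim(features, target):
    """MIM: order features by relevance I(X_k; Y), highest first (ties keep input order)."""
    scores = {name: mutual_information(x, target) for name, x in features.items()}
    return sorted(scores, key=lambda name: -scores[name])


def mrmr_score(name, features, target, selected):
    """Relevance minus mean redundancy with the already selected features."""
    relevance = mutual_information(features[name], target)
    if not selected:
        return relevance
    redundancy = sum(mutual_information(features[name], features[s]) for s in selected)
    return relevance - redundancy / len(selected)


def rank_mrmr(features, target):
    """MRMR: greedily append the remaining feature with the highest score."""
    remaining, selected = list(features), []
    while remaining:
        best = max(remaining, key=lambda name: mrmr_score(name, features, target, selected))
        selected.append(best)
        remaining.remove(best)
    return selected


# Toy data: Y has four classes; A and B are identical, C is independent of A.
Y = [0, 0, 1, 1, 2, 2, 3, 3]
features = {
    "A": [0, 0, 0, 0, 1, 1, 1, 1],
    "B": [0, 0, 0, 0, 1, 1, 1, 1],
    "C": [1, 0, 1, 1, 1, 0, 1, 1],
}
print(rank_mim(features, Y))   # ['A', 'B', 'C']
print(rank_mrmr(features, Y))  # ['A', 'C', 'B']
```

MIM places the redundant copy B second because it ranks on relevance alone, whereas MRMR promotes C, whose information is not already covered by A.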

In summary, both the MIM and MRMR methods, which facilitate the ranking and selection of a minimal subset from the extensive set of ICTD parameters, demonstrate their effectiveness in achieving precise image classification. A comparative analysis was conducted to assess the effectiveness of these two approaches in feature selection and their ability to discriminate between classes. The optimal subset, determined through the MIM and MRMR criteria, was utilized for urban site classification using SVMs. The findings suggest that a minimum of eight parameters is necessary to achieve accurate urban feature classification. The chosen parameter set from the ranked features not only enhances our understanding of land use types but also reduces processing time for classification while increasing classification accuracy.

A significant contribution on the use of the backscattering mechanisms of PolSAR data for urban land cover and impervious surface classification is presented in [22], with classification results similar to those of [10]. The paper proposes a new classification scheme that defines land cover subtypes according to their polarimetric backscattering mechanisms. Three scenes of fully polarimetric RADARSAT-2 data over the cities of Shenzhen, Hong Kong, and Macau were used to test and validate the proposed methodology. An investigation of the importance of the different polarimetric features and of the confusion between land covers indicated that the alpha angle, entropy, and anisotropy from the H/A/Alpha decomposition were more important than other features, while one or two components (but not all) from other decompositions also contributed to the results. The accuracy assessment showed that impervious surface classification using PolSAR data reached an accuracy of 97.48%.

3. Methods for Urban Area Classification Using SAR [28]

The classification of urban areas using SAR images has the following advantages and disadvantages, which must be considered thoroughly in order to reveal all the information available.

Advantages

- They provide images both day and night.

- They provide images even when the area is covered by clouds.

- They provide high-resolution information on the geometric and electrical properties of the scatterers.

Disadvantages

- They are prone to speckle noise.

- They are affected by moisture and atmospheric conditions.

Different classification approaches vary widely in their ability to distinguish urban land cover.

Cloud cover remains a persistent problem in optical remote sensing, impeding continuous and precise monitoring of urban land changes. An analysis of more than a decade of data acquired by the Moderate Resolution Imaging Spectroradiometer (MODIS) revealed that, on average, approximately 67% of the Earth's surface is cloud-covered [87]. Cloud-free optical images are therefore rare, particularly in tropical and subtropical regions, which experience frequent cloud cover and rainfall. By contrast, SAR has emerged as a valuable complement to optical remote sensing, particularly in regions prone to cloud cover, thanks to its all-weather, day-and-night imaging capabilities. Fusion of optical and SAR images has been proposed using various approaches, implemented at the pixel, feature, and decision levels [45,88]. Pixel-level fusion directly combines optical and SAR data pixel by pixel without extracting specific features. However, some studies have suggested that pixel-level fusion of optical and SAR data may not be ideal because the two sensor types rely on fundamentally different imaging mechanisms [89]. Feature-level fusion integrates features extracted from both the optical and SAR data. Most multi-sensor fusion techniques operate at the feature level, owing to technological and theoretical advances. Common methods for feature-level fusion include support vector machines [90], random forest [91], and deep learning techniques [92]. Decision-level fusion, on the other hand, classifies land cover from the optical and SAR images separately and then combines the resulting classifications.
Methods for integrating the decisions obtained from optical and SAR data typically include voting, the Dempster–Shafer theory, and random forest [40,93,94].

Indeed, a thorough investigation of the role of SAR in the presence of clouds, particularly its influence on optical images covered mostly by clouds and its impact on separating different land cover types in varying cloud conditions, is an area that requires further exploration. The fundamental question revolves around understanding how clouds precisely affect land cover recognition in urban land cover classification when integrating optical and SAR images. To address this, there is a need for a methodology to examine the mechanisms through which clouds affect urban land cover classification and allow for the quantification of the supplementary benefit of SAR data.

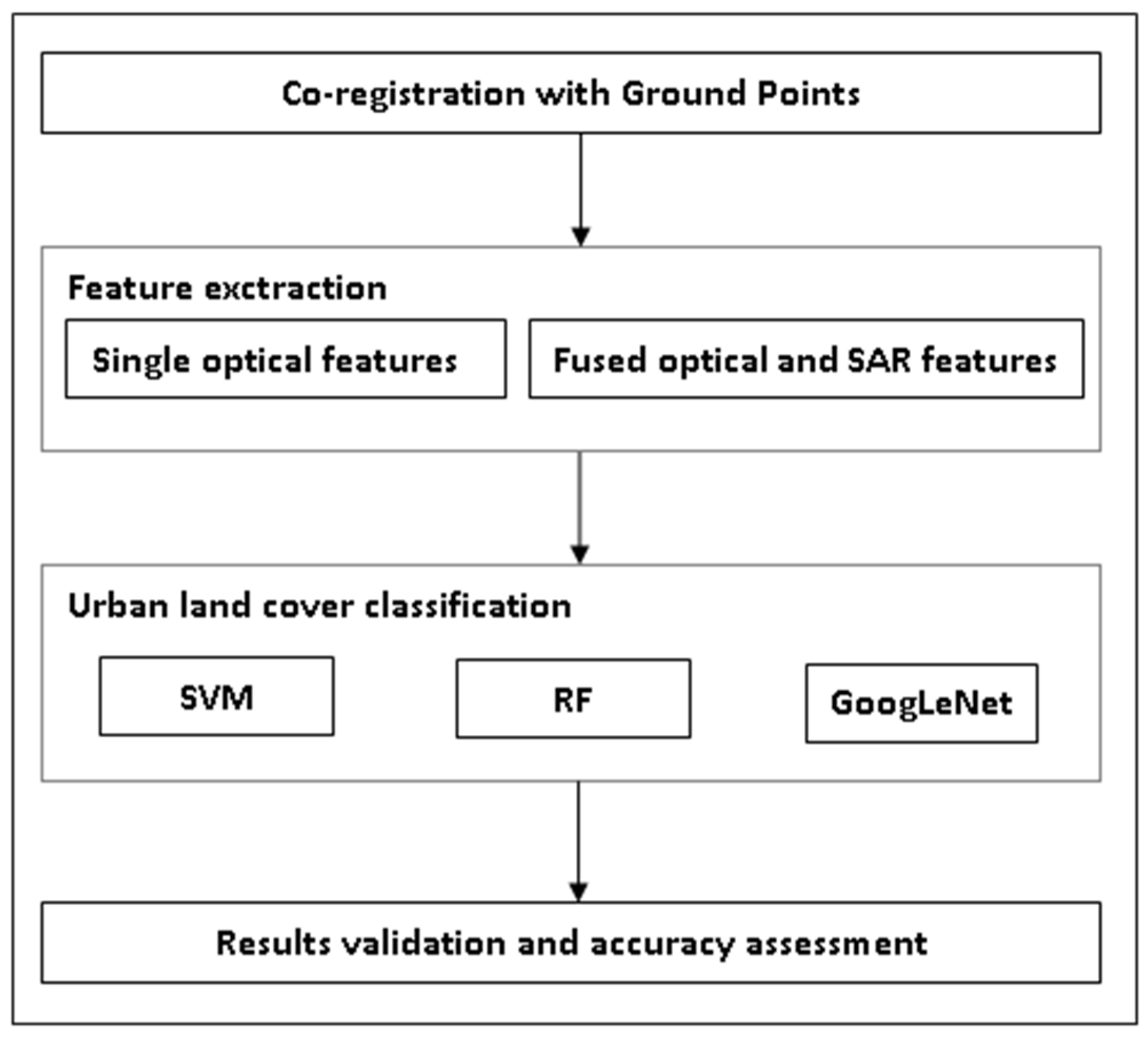

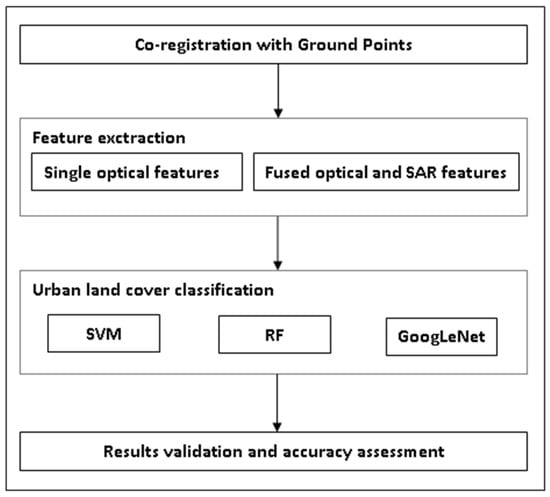

The study area is the Pearl River Delta in southern China. The methodology comprises a novel sampling strategy that extracts samples with varying degrees of cloud cover, producing a dataset that allows the impact of cloud content on the analysis to be assessed quantitatively. The optical and SAR images were first preprocessed individually using appropriate techniques and then co-registered to ensure proper alignment. Features were then extracted from the co-registered optical and SAR data; these features serve as the basis for the analysis and evaluation of land cover classification under varying cloud content. For each level of cloud coverage, three representative classifiers were used to perform the urban land cover classification. Finally, validation and accuracy assessment were carried out to evaluate the influence of cloud cover and the additional value of SAR data in discriminating between land cover categories (Figure 4).

Figure 4.

Methodology of this work, including the three classification schemes tested.

Different feature extraction steps were used to obtain information from the optical and SAR data. Preprocessing is performed before the ALOS-2 data are geocoded and co-registered with the Sentinel-2 data: to avoid destroying textural information, calibration and speckle noise reduction are carried out first. The ALOS-2 data and the derived polarimetric features are then geocoded and co-registered with the Sentinel-2 images.

In the analysis, the optical feature set comprised the original spectral bands, the normalized difference vegetation index (NDVI), and the normalized difference water index (NDWI). In addition to these spectral features, textural information was extracted using four features derived from the gray-level co-occurrence matrix (GLCM): homogeneity, dissimilarity, entropy, and angular second moment. This produced a total of 12 × 4 = 48 feature layers. To reduce dimensionality, principal component analysis was applied, resulting in a final set of 18 optical feature layers. Features from the SAR data were used to enhance the discrimination of land cover types, particularly under cloud cover. Specifically, the backscattering polarization components HH, HV, VH, and VV, the polarization ratio, the coherence coefficient, the Freeman–Durden decomposition parameters [95], the Cloude–Pottier decomposition parameters [96], and the Yamaguchi four-component decomposition parameters [97] were employed. Stacking the optical and SAR features yields a 44-dimensional feature vector. The features must be normalized to avoid biases caused by their different value ranges.
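The four GLCM measures named above can be sketched in plain Python. The sketch builds a symmetric, normalized co-occurrence matrix for a single pixel offset on an image that is assumed to be already quantized to a few gray levels, then evaluates homogeneity, dissimilarity, entropy, and angular second moment. The offset, the number of gray levels, the natural logarithm in the entropy, and the 1/(1 + (i − j)²) form of homogeneity are assumptions; operational tools usually compute these measures in moving windows and over several directions.

```python
from math import log


def glcm(image, levels, dr=0, dc=1):
    """Symmetric, normalized gray-level co-occurrence matrix for pixel offset (dr, dc)."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count each pair in both directions (symmetric GLCM)
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def glcm_features(p):
    """Homogeneity, dissimilarity, entropy and angular second moment of a GLCM."""
    cells = [(i, j, v) for i, row in enumerate(p) for j, v in enumerate(row)]
    return {
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "dissimilarity": sum(v * abs(i - j) for i, j, v in cells),
        "entropy": -sum(v * log(v) for _, _, v in cells if v > 0),
        "asm": sum(v * v for _, _, v in cells),
    }


# Toy 4 x 4 image already quantized to 4 gray levels (hypothetical values).
img = [[0, 0, 1, 1],
       [0, 0, 1, 1],
       [0, 2, 2, 2],
       [2, 2, 3, 3]]
print(glcm_features(glcm(img, levels=4)))
```

A perfectly uniform patch gives homogeneity and angular second moment of 1 and zero dissimilarity and entropy; heterogeneous urban texture moves all four measures away from those values.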

To assess the effect of clouds on classifying land types, three distinct classification approaches were utilized:

- Support vector machine (SVM);

- Random forest;

- The GoogLeNet deep convolutional neural network.

These three classification algorithms were chosen due to their stable and well-documented performance in various classification tasks.

The SVM classifier operates by creating a decision boundary, often described as the “best separating hyperplane”, with the objective of maximizing the margin between two distinct classes or groups. This hyperplane is located within the n-dimensional classification space, where “n” represents the number of feature bands or dimensions. The SVM algorithm iteratively identifies patterns in the training data and determines the optimal hyperplane boundary that best separates the classes. This configuration is then applied to a different dataset for classification. In the context of this work, the dimensions of the classification space correspond to the available bands, while the separate pixels within a multiband image are represented by vectors.

The random forest approach was proposed by Breiman in 2001 [91] on the premise that an ensemble of aggregated classifiers outperforms a single classifier. Each decision tree is trained on a bootstrap sample drawn randomly, with replacement, from the training set; the training samples left out of a given bootstrap sample are called out-of-bag samples [98]. These out-of-bag samples can be fed to the corresponding tree for testing, providing an internal estimate of the classification error. Because each tree is built from a different random organization of the input data (and, at each split, from a random subset of the features), the trees are de-correlated, and together they form the random forest classifier.
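The bagging, out-of-bag, and majority-voting logic of a random forest can be illustrated with a deliberately small pure-Python sketch. To keep it short, each "tree" is a depth-one decision stump on a single randomly chosen feature, trained on a bootstrap sample. This is a simplification for illustration, not Breiman's full algorithm or the implementation used in the cited study; the toy data and all function names are hypothetical.

```python
import random
from collections import Counter


def bootstrap(n, rng):
    """Draw n indices with replacement; indices never drawn are out-of-bag."""
    in_bag = [rng.randrange(n) for _ in range(n)]
    out_of_bag = sorted(set(range(n)) - set(in_bag))
    return in_bag, out_of_bag


def fit_stump(X, y, idx, feature):
    """Best midpoint threshold on one feature, chosen by training error on idx."""
    values = sorted({X[i][feature] for i in idx})
    majority = Counter(y[i] for i in idx).most_common(1)[0][0]
    best = (sum(y[i] != majority for i in idx), feature, float("inf"), majority, majority)
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = Counter(y[i] for i in idx if X[i][feature] <= t).most_common(1)[0][0]
        right = Counter(y[i] for i in idx if X[i][feature] > t).most_common(1)[0][0]
        errors = sum(y[i] != (left if X[i][feature] <= t else right) for i in idx)
        if errors < best[0]:
            best = (errors, feature, t, left, right)
    return best[1:]  # (feature, threshold, left_label, right_label)


def predict_stump(stump, x):
    feature, t, left, right = stump
    return left if x[feature] <= t else right


def fit_forest(X, y, n_trees=25, seed=0):
    """Train one stump per bootstrap sample; keep its out-of-bag indices too."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        in_bag, oob = bootstrap(len(X), rng)
        feature = rng.randrange(len(X[0]))  # random feature choice per tree
        forest.append((fit_stump(X, y, in_bag, feature), oob))
    return forest


def predict_forest(forest, x):
    """Final label by majority vote over all trees."""
    votes = Counter(predict_stump(stump, x) for stump, _ in forest)
    return votes.most_common(1)[0][0]


# Hypothetical two-feature, two-class toy data.
X = [[0.1 * i, 1.0 - 0.1 * i] for i in range(10)]
y = [0] * 5 + [1] * 5
forest = fit_forest(X, y)
print([predict_forest(forest, x) for x in X])
```

The out-of-bag lists returned by `fit_forest` are where an internal error estimate would come from: each sample is scored only by the trees that never saw it during training.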

GoogLeNet [99] is a well-known 22-layer deep convolutional neural network developed by Google. One of its significant contributions is the Inception architecture, which uses filters of different sizes in parallel convolutional layers. GoogLeNet, with its Inception architecture, was a substantial advancement in deep learning and convolutional neural networks. It demonstrated that deep networks could be designed to be both accurate and computationally efficient, making it a valuable architecture for a wide range of computer vision tasks.

To investigate the performance of land cover classification under various percentages of cloud content, datasets with different cloud contents were required. The dataset comprises a total of 43,665 labeled pixels representing five land cover classes: vegetation (VEG), soil (SOI), bright impervious surface (BIS), dark impervious surface (DIS), and water (WAT). The pixels were labeled by visual interpretation. In the experiments, these 43,665 labeled pixels were used to build datasets with specific cloud coverages (0%, 6%, 30%, and 50%), and the numbers of pixels corresponding to each of these four coverages were determined accordingly. In each experiment, half of the pixels were randomly selected for training and the other half for testing.

The results were evaluated with standard accuracy metrics: the overall accuracy, the confusion matrix, the producer's accuracy, and the user's accuracy. Furthermore, two assessment methods were used to evaluate the effects of clouds on urban land cover classification: the overall accuracy, to quantify the influence of different cloud percentages on classification, and an evaluation of the confusion between land covers in cloud-free and cloud-covered areas.
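The accuracy metrics listed above follow directly from the confusion matrix. The sketch below assumes the common convention that rows hold the reference (ground truth) classes and columns the classified labels, so the producer's accuracy divides each diagonal entry by its row total and the user's accuracy by its column total. The class names follow the dataset above, but the counts are hypothetical and are not results from the study.

```python
def accuracy_metrics(confusion, labels):
    """confusion[i][j] = number of reference-class-i pixels classified as class j."""
    n = len(labels)
    total = sum(map(sum, confusion))
    correct = sum(confusion[i][i] for i in range(n))
    producers, users = {}, {}
    for k, label in enumerate(labels):
        reference_total = sum(confusion[k])                    # row sum
        mapped_total = sum(confusion[i][k] for i in range(n))  # column sum
        producers[label] = confusion[k][k] / reference_total if reference_total else float("nan")
        users[label] = confusion[k][k] / mapped_total if mapped_total else float("nan")
    return correct / total, producers, users


labels = ["VEG", "SOI", "BIS", "DIS", "WAT"]
confusion = [  # hypothetical pixel counts
    [50, 2, 0, 1, 0],
    [3, 40, 4, 0, 0],
    [0, 5, 45, 3, 0],
    [1, 0, 6, 38, 5],
    [0, 0, 0, 4, 46],
]
overall, producers, users = accuracy_metrics(confusion, labels)
print(round(overall, 4), round(producers["DIS"], 3), round(users["DIS"], 3))
```

The producer's accuracy reflects omission errors (reference pixels missed), while the user's accuracy reflects commission errors (pixels wrongly assigned to a class), which is why both are reported alongside the overall accuracy.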

In general, the performance of this approach [28] is similar to that of other approaches in the literature, such as those in [45,88,94]. These techniques combine classification methods to improve the final urban identification performance.

4. Fusion Techniques of SAR and Optical Data for Urban Regions Characterization [40]

Fusion of different remotely sensed images of the same area is essential for improving land cover classification and object identification. Fusion techniques are intended to address three main issues in land classification procedures:

- Combining mainly optical, thermal, and radar (SAR) images in order to extract the specific information carried by each type of electromagnetic scattering.

- Filling in, with complementary information, land regions for which some of the data are missing (e.g., optical information obscured by cloud cover).

- Fusing information at the pixel level, employing decision fusion techniques if necessary, to determine the type of land cover in fine-resolution analysis.

Impervious surfaces are human-made features, such as building rooftops, concrete, and pavement, that do not allow water to penetrate the soil. They are crucial indicators for assessing urbanization and ecological environments. The increase in impervious surfaces can lead to various environmental challenges, including reduced green areas, water pollution, and the exacerbation of the urban heat island effect [100]. So far, medium- and coarse-spatial-resolution satellite images have mainly been used to estimate impervious surfaces [101,102,103].

Although various mapping techniques exist, most of them were originally developed for optical imagery. The wide range of urban land covers, including covers with similar spectral characteristics, makes it difficult to assess impervious surfaces precisely from optical images alone. For example, water and shaded areas have been mistakenly identified as dark impervious surfaces. Consequently, images from various sources have been fused to capitalize on their different attributes and improve mapping accuracy [104]. Previous investigations have shown that combining optical and SAR data can notably improve image classification accuracy while reducing the misidentification of urban impervious surfaces and other land cover categories. SAR data are sensitive to the geometric attributes of urban land surfaces, offering valuable supplementary information on shape and texture, and SAR has been recognized as a significant data source when used together with optical images to characterize impervious surfaces.

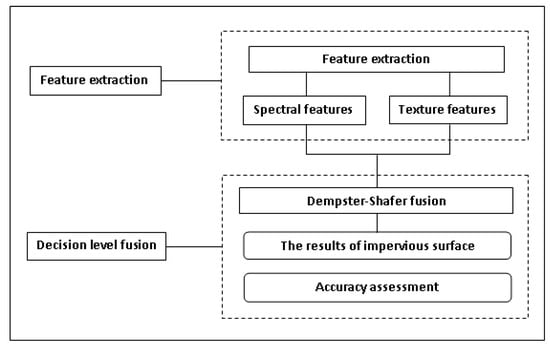

At present, the fusion of optical and SAR images for impervious surface mapping occurs primarily at the pixel and feature levels. However, pixel-level fusion is less suitable for SAR images because of speckle noise [105]. Moreover, feature-level fusion is sensitive to the choice of features, which can introduce ambiguities when classifying impervious surfaces. Decision-level fusion refers to merging the classification outcomes from different data sources, such as optical and SAR data, to arrive at a final land cover type for a given pixel. Based on the Dempster–Shafer (D-S) evidence theory [106,107], decision-level fusion has shown promise for classifying land cover types, although it has been relatively underexplored in previous research. Fusing classification outcomes from diverse data sources in this way has shown advantages in image classification over conventional Bayesian methods [108,109]. The D-S evidence theory treats impervious surface estimates obtained from different data sources as independent pieces of evidence and provides measures of uncertainty for the combined impervious surface datasets. Hence, the primary goal of [40] is to generate a precise urban impervious surface map through the decision-level fusion of GF-1 and Sentinel-1A data using D-S theory, and to provide in-depth assessments of the uncertainty associated with the impervious surface estimates. First, land cover categories were classified individually from GF-1 (GaoFen-1 satellite) and Sentinel-1A data using the random forest (RF) method. These classifications were then combined using the D-S fusion rules. The land cover categories were then grouped into two classes: impervious and non-impervious surfaces.
To evaluate the accuracy of the estimates, the estimated impervious surfaces were compared with reference data collected from Google Earth imagery. The study area covers the city of Wuhan, situated in the eastern Jianghan Plain. The area has a humid subtropical climate with substantial rainfall and four clearly defined seasons.

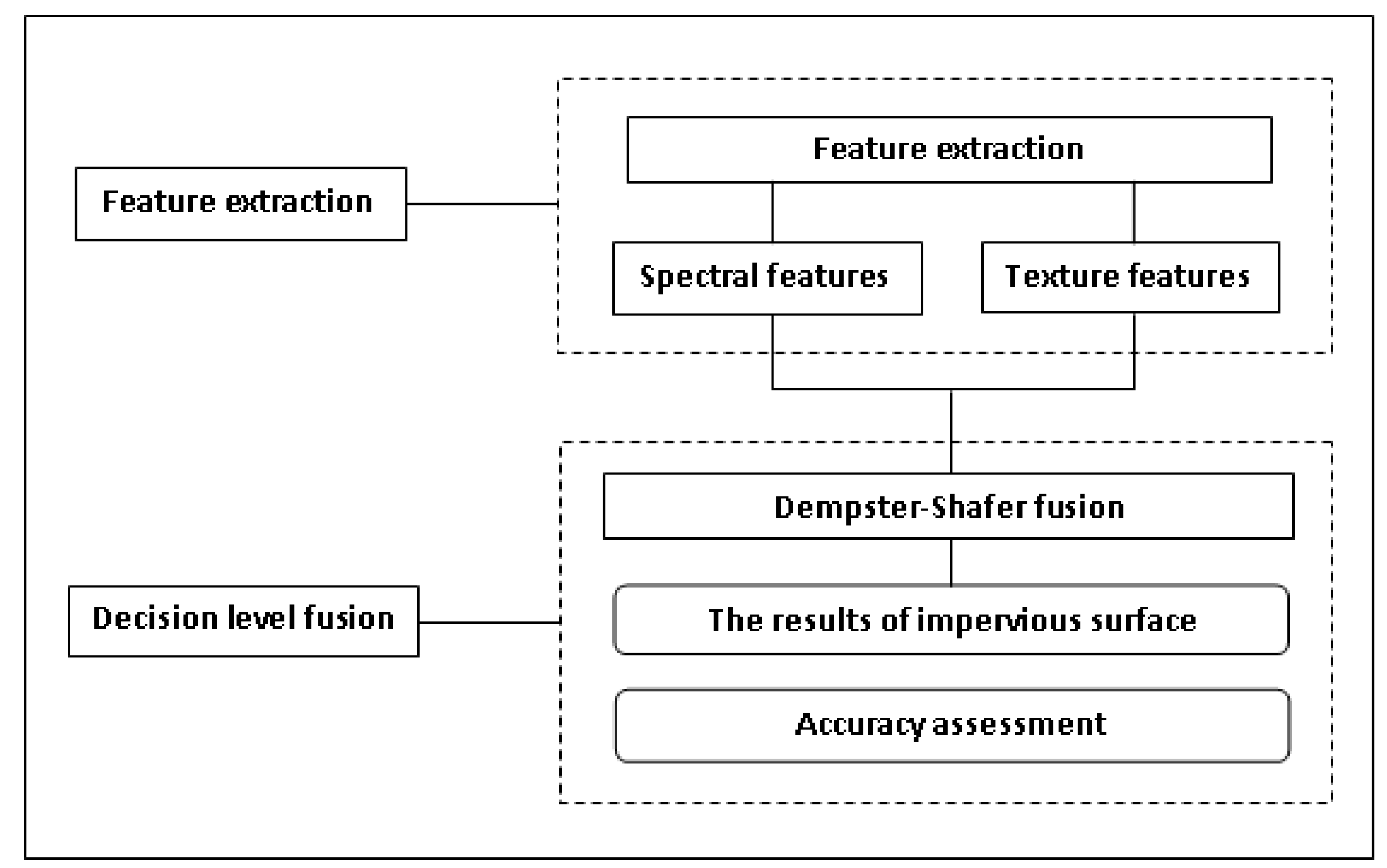

Decision-level fusion of optical and SAR data for estimating urban impervious surfaces was performed using the random forest classifier and evidence theory, and the uncertainty levels of the resulting impervious surfaces were also analyzed. Four key steps were undertaken. First, preprocessing was applied to co-register the optical and SAR data. Second, spectral and textural features were extracted from the GF-1 and Sentinel-1A images, respectively. Third, impervious surfaces were determined using the random forest classifier with data from four distinct sources. Finally, the impervious surfaces derived from these separate datasets were merged using the Dempster–Shafer theory. Figure 5 illustrates both the feature extraction phase and the decision fusion phase.

Figure 5.

The feature extraction step and the decision fusion step. Urban impervious surfaces can be obtained by fusing optical and SAR data.

Texture features are a set of measurements designed to quantify how colors or intensities are spatially arranged in an image. They are highly important when interpreting SAR data for land cover classification and can be derived from the gray-level co-occurrence matrix (GLCM), which was used to extract textural information from the Sentinel-1A images [110]. In addition, the spectral features NDVI and NDWI were obtained from the GF-1 imagery, serving as indicators of vegetation growth and of water areas, respectively. Combining these features improves the accuracy of land cover classification by capturing both the spatial patterns and the spectral characteristics of the Earth's surface.

The two indices are computed as follows:

$$\mathrm{NDVI} = \frac{NIR - R}{NIR + R}, \qquad \mathrm{NDWI} = \frac{G - NIR}{G + NIR}$$

where R, G, and NIR correspond to the surface reflectance in the red, green, and near-infrared spectral bands, respectively.
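A per-pixel sketch of the two indices follows, assuming surface reflectance inputs. NDWI is taken here in its green/near-infrared form, matching the bands listed above (other NDWI variants use shortwave-infrared bands instead). Returning 0.0 when both inputs are zero is an arbitrary convention chosen for the sketch, and the reflectance values are hypothetical.

```python
def normalized_difference(a: float, b: float) -> float:
    """(a - b) / (a + b); returns 0.0 when both inputs are zero (sketch convention)."""
    denom = a + b
    return (a - b) / denom if denom != 0 else 0.0


def ndvi(nir: float, red: float) -> float:
    return normalized_difference(nir, red)


def ndwi(green: float, nir: float) -> float:
    # Green/NIR form of NDWI, matching the G and NIR bands named in the text.
    return normalized_difference(green, nir)


# Hypothetical surface reflectances (unitless) for two pixels.
vegetation = {"green": 0.08, "red": 0.05, "nir": 0.45}
water = {"green": 0.06, "red": 0.04, "nir": 0.02}
for name, px in (("vegetation", vegetation), ("water", water)):
    print(name, round(ndvi(px["nir"], px["red"]), 3), round(ndwi(px["green"], px["nir"]), 3))
```

Vegetation reflects strongly in the near-infrared, pushing NDVI toward +1, while water absorbs near-infrared radiation, which makes NDWI positive over water and negative over vegetation.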

The random forest algorithm was developed as an extension of the decision tree classification model [12,91]. It builds an ensemble of k decision trees (k is user-defined), each trained on N samples randomly selected from the training data. Each decision tree produces a classification result, and the final output of the classifier is decided by a majority vote over all the decision trees [91,111]. The urban impervious surfaces obtained from the various datasets were then integrated at the decision level using the Dempster–Shafer theory [107]. In this theory, impervious surface estimates obtained from different satellite sensors are treated as independent pieces of evidence, and the approach explicitly accounts for uncertainty.
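Dempster's rule of combination, which underlies the decision-level fusion described above, can be sketched for a two-hypothesis frame {impervious, non-impervious}. How the random forest outputs are converted into mass functions is not specified here, so the masses below are hypothetical; mass assigned to the whole frame represents ignorance, and the gap between plausibility and belief is shown as one way of expressing the remaining uncertainty.

```python
from itertools import product


def combine(m1, m2):
    """Dempster's rule of combination for mass functions keyed by frozensets."""
    fused, conflict = {}, 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        common = a & b
        if common:
            fused[common] = fused.get(common, 0.0) + mass_a * mass_b
        else:
            conflict += mass_a * mass_b  # mass committed to contradictory hypotheses
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    return {h: v / (1.0 - conflict) for h, v in fused.items()}, conflict


def belief(m, hypothesis):
    """Total mass of focal sets contained in the hypothesis."""
    return sum(v for h, v in m.items() if h <= hypothesis)


def plausibility(m, hypothesis):
    """Total mass of focal sets compatible with (intersecting) the hypothesis."""
    return sum(v for h, v in m.items() if h & hypothesis)


IS, NIS = frozenset({"impervious"}), frozenset({"non-impervious"})
THETA = IS | NIS  # whole frame of discernment (ignorance)
optical = {IS: 0.6, NIS: 0.3, THETA: 0.1}  # hypothetical masses for one pixel
sar = {IS: 0.7, NIS: 0.2, THETA: 0.1}

fused, k = combine(optical, sar)
bel, pl = belief(fused, IS), plausibility(fused, IS)
print(round(k, 2), round(bel, 4), round(pl, 4), round(pl - bel, 4))
```

Because both sources lean toward "impervious", the fused belief in that hypothesis exceeds either source's individual mass, while the normalization by 1 − k discards the conflicting evidence.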

In summary, impervious surfaces were mapped by combining the impervious surfaces previously estimated from separate image sources using the Dempster–Shafer fusion rules. The accuracy evaluation showed that impervious surfaces derived from optical data achieved a higher classification accuracy (89.68%) than those derived from SAR data (68.80%). Adding feature information to the optical (or SAR) data improved the overall classification accuracy by 2.64% (or 5.90%). Furthermore, integrating the impervious surface datasets obtained from GF-1 and Sentinel-1A data substantially increased the overall classification accuracy, to 93.37%. When additional spectral and texture features were incorporated, the overall classification accuracy improved further, to 95.33%.

Further reading, presenting various fusion methods together with performance comparisons, can be found in [104]. The procedures presented there are mainly based on pixel-level fusion and decision fusion.

5. Decorrelation Properties for Urban Destruction Identification [49]

One approach to determining building changes caused by disaster damage is to apply suitable scattering mechanism and decomposition models to PolSAR data [112]. As anticipated, the polarimetric features derived from PolSAR data show significant potential for detecting and assessing damaged areas. In low-resolution polarimetric SAR images, which are well suited to damage assessment over large areas, the primary change observed in damaged urban areas is a reduction in ground-wall structures. The destruction of these structures decreases the dominant double-bounce scattering and disrupts the homogeneity of the polarization orientation angles [113]. Two polarimetric damage indexes, developed through in-depth analysis of target scattering mechanisms, have proven effective in distinguishing urban areas with varying degrees of damage.

Consequently, the approach described in this section is intended to give solutions to the following problems:

- Determining changes in buildings caused by disaster damage in urban areas.

- Determining the decrease in ground-wall structures.

- Finding the appropriate indexes to distinguish among various degrees of damage.

Besides the features based on the polarimetric behavior of scattering mechanisms, the cross-channel polarimetric correlation is a crucial resource with the potential to reveal the physical characteristics of the observed scene [114]. The magnitude of the polarimetric correlation coefficient, often referred to as polarimetric coherence, has been used in the analysis of PolSAR data. Polarimetric coherence is strongly influenced by factors such as the chosen polarization combination, the local scatterer type, and the orientation of the target relative to the PolSAR illumination direction. To describe the rotation properties of polarimetric matrices comprehensively, a uniform polarimetric matrix rotation theory was recently developed [115].

Buildings can be examined before and after collapse damage because the primary distinction between an intact building and a collapsed one (which exhibits a rough surface) lies in the alteration of the ground-wall structure. The different geometries of ground-wall structures and rough surfaces lead to markedly different directivity effects in the rotation domain about the radar line of sight. The directivity effects of ground-wall structures are considerably more pronounced than those of rough surfaces. As a result, the polarimetric coherence patterns of buildings before and after destruction exhibit discernible differences, making them distinguishable from each other. Furthermore, ground-wall structures and rough surfaces primarily exhibit double-bounce and odd-bounce scattering, respectively, which are clearly associated with the combinations of co-polarization components used in the Pauli decomposition. A novel approach for urban damage assessment is explored here, whose uniqueness lies in the fact that it is not influenced by target orientation effects.

In PolSAR, data acquired in the horizontal/vertical (H, V) polarization basis can be represented by a scattering matrix, typically expressed as:

$$\mathbf{S}=\begin{bmatrix} S_{HH} & S_{HV}\\ S_{VH} & S_{VV}\end{bmatrix}$$

where the subscripts denote the transmitting and the receiving polarizations. Rotating the scattering matrix about the radar line of sight by an angle $\theta$ gives:

$$\mathbf{S}(\theta)=\mathbf{R}(\theta)\,\mathbf{S}\,\mathbf{R}(\theta)^{\dagger}$$

The superscript † denotes the conjugate transpose, and the rotation matrix is

$$\mathbf{R}(\theta)=\begin{bmatrix}\cos\theta & \sin\theta\\ -\sin\theta & \cos\theta\end{bmatrix}$$

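If the co-polarization components are combined as $S_{HH}+S_{VV}$ and $S_{HH}-S_{VV}$, they correspond, following the Pauli decomposition, to canonical surface (odd-bounce) scattering and double-bounce scattering, respectively. The co-polarization components $S_{HH}$ and $S_{VV}$ are therefore the focus of the following analysis. Assuming reciprocity ($S_{HV}=S_{VH}$), their representations in the rotation domain are:

$$\begin{aligned} S_{HH}(\theta) &= \frac{S_{HH}+S_{VV}}{2}+\frac{S_{HH}-S_{VV}}{2}\cos 2\theta + S_{HV}\sin 2\theta\\ S_{VV}(\theta) &= \frac{S_{HH}+S_{VV}}{2}-\frac{S_{HH}-S_{VV}}{2}\cos 2\theta - S_{HV}\sin 2\theta \end{aligned}$$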
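The sketch below is a numerical check of the rotation-domain expressions. It rotates a hypothetical reciprocal scattering matrix directly, as $\mathbf{R}(\theta)\mathbf{S}\mathbf{R}(\theta)^{\dagger}$ with $\mathbf{R}(\theta)$ having rows $(\cos\theta, \sin\theta)$ and $(-\sin\theta, \cos\theta)$, and compares the diagonal with the closed forms $S_{HH}(\theta)=\tfrac{S_{HH}+S_{VV}}{2}+\tfrac{S_{HH}-S_{VV}}{2}\cos 2\theta+S_{HV}\sin 2\theta$ and $S_{VV}(\theta)=\tfrac{S_{HH}+S_{VV}}{2}-\tfrac{S_{HH}-S_{VV}}{2}\cos 2\theta-S_{HV}\sin 2\theta$. It assumes reciprocity ($S_{HV}=S_{VH}$); the function names and matrix values are illustrative only.

```python
import math


def matmul2(a, b):
    """Multiply two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def rotate_scattering_matrix(s, theta):
    """Return R(theta) S R(theta)^dagger (rotation about the radar line of sight).

    R(theta) = [[cos, sin], [-sin, cos]] is real, so its conjugate transpose
    is simply its transpose.
    """
    c, sn = math.cos(theta), math.sin(theta)
    r = [[c, sn], [-sn, c]]
    r_dagger = [[c, -sn], [sn, c]]
    return matmul2(matmul2(r, s), r_dagger)


def copol_rotated(s, theta):
    """Closed-form S_HH(theta) and S_VV(theta), assuming S_HV == S_VH."""
    hh, hv, vv = s[0][0], s[0][1], s[1][1]
    mean, diff = (hh + vv) / 2, (hh - vv) / 2
    c2, s2 = math.cos(2 * theta), math.sin(2 * theta)
    return mean + diff * c2 + hv * s2, mean - diff * c2 - hv * s2


# Hypothetical reciprocal scattering matrix (complex amplitudes).
S = [[1.0 + 0.5j, 0.2 - 0.1j],
     [0.2 - 0.1j, -0.3 + 0.8j]]
theta = math.radians(30)
S_rot = rotate_scattering_matrix(S, theta)
hh_t, vv_t = copol_rotated(S, theta)
print(abs(S_rot[0][0] - hh_t) < 1e-12, abs(S_rot[1][1] - vv_t) < 1e-12)  # True True
```

Shifting $\theta$ by $\pi/2$ in `copol_rotated` swaps the two outputs, which is the origin of the $\pi/2$ period of the co-polarization coherence pattern.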

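Polarimetric coherence can be estimated by averaging a sufficient number of samples with similar scattering behavior [114]. Without rotation, the co-polarization coherence is:

$$|\gamma_{HH\text{-}VV}|=\frac{|\langle S_{HH}S_{VV}^{*}\rangle|}{\sqrt{\langle|S_{HH}|^{2}\rangle\langle|S_{VV}|^{2}\rangle}}$$

where $S_{VV}^{*}$ is the complex conjugate of $S_{VV}$ and $\langle\cdot\rangle$ denotes averaging over the available samples. The value of $|\gamma_{HH\text{-}VV}|$ lies in the interval [0, 1].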
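A minimal estimator of the co-polarization coherence $|\langle S_{HH}S_{VV}^{*}\rangle| / \sqrt{\langle|S_{HH}|^{2}\rangle\langle|S_{VV}|^{2}\rangle}$ is sketched below, assuming the ensemble average is replaced by a plain sample mean over co-located pixels. In practice the samples would come from a speckle-filtering window; the function name and sample values here are hypothetical.

```python
import math


def copol_coherence(hh_samples, vv_samples):
    """Estimate |<S_HH S_VV*>| / sqrt(<|S_HH|^2> <|S_VV|^2>).

    <.> is a plain sample mean over co-located samples (e.g. the pixels of
    one estimation window); by the Cauchy-Schwarz inequality the result
    lies in [0, 1].
    """
    n = len(hh_samples)
    if n == 0 or n != len(vv_samples):
        raise ValueError("need two non-empty sample lists of equal length")
    cross = sum(h * v.conjugate() for h, v in zip(hh_samples, vv_samples)) / n
    p_hh = sum(abs(h) ** 2 for h in hh_samples) / n
    p_vv = sum(abs(v) ** 2 for v in vv_samples) / n
    return abs(cross) / math.sqrt(p_hh * p_vv)


hh = [1 + 1j, 2 - 1j, 0.5j]
print(copol_coherence(hh, [2 * h for h in hh]))               # fully correlated: close to 1
print(copol_coherence([1 + 0j, 1 + 0j], [1 + 0j, -1 + 0j]))  # 0.0
```

The second call shows why enough samples are needed: a single pixel always gives a coherence of 1, and only averaging over several pixels reveals decorrelation.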

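The polarimetric coherence pattern [116] is an effective tool for understanding, visualizing, and describing polarimetric coherence properties in the rotation domain. In this context, the co-polarization coherence pattern is defined as:

$$|\gamma_{HH\text{-}VV}(\theta)|=\frac{|\langle S_{HH}(\theta)S_{VV}^{*}(\theta)\rangle|}{\sqrt{\langle|S_{HH}(\theta)|^{2}\rangle\langle|S_{VV}(\theta)|^{2}\rangle}},\quad \theta\in[-\pi,\pi)$$

Note that the rotation angle $\theta$ spans the full rotation domain. If speckle is sufficiently suppressed during estimation, the co-polarization coherence pattern is fully determined by the second-order statistics of the scattering-matrix elements. Furthermore, the period of $|\gamma_{HH\text{-}VV}(\theta)|$ in the rotation domain is $\pi/2$: a rotation by $\pi/2$ interchanges $S_{HH}(\theta)$ and $S_{VV}(\theta)$, which leaves the coherence magnitude unchanged.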
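Sampling the co-polarization coherence pattern on a uniform grid of rotation angles makes the $\pi/2$ period easy to check numerically. This sketch assumes reciprocity, a 1° grid over $[-\pi, \pi)$, and a hypothetical three-pixel window; it uses the closed-form rotated components $S_{HH}(\theta)$ and $S_{VV}(\theta)$ given earlier in this section, and the function names are illustrative.

```python
import math


def copol_rotated(hh, hv, vv, theta):
    """S_HH(theta), S_VV(theta) for one reciprocal sample (S_HV == S_VH)."""
    mean, diff = (hh + vv) / 2, (hh - vv) / 2
    c2, s2 = math.cos(2 * theta), math.sin(2 * theta)
    return mean + diff * c2 + hv * s2, mean - diff * c2 - hv * s2


def coherence_pattern(samples, n_angles=360):
    """Sample |gamma_HH-VV(theta)| on a uniform grid over theta in [-pi, pi).

    samples: (S_HH, S_HV, S_VV) tuples from one estimation window.
    Returns (theta, coherence) pairs. The 1/N factors of the sample means
    cancel in the ratio, so plain sums are used.
    """
    pattern = []
    for k in range(n_angles):
        theta = -math.pi + 2 * math.pi * k / n_angles
        rotated = [copol_rotated(hh, hv, vv, theta) for hh, hv, vv in samples]
        cross = sum(h * v.conjugate() for h, v in rotated)
        p_hh = sum(abs(h) ** 2 for h, _ in rotated)
        p_vv = sum(abs(v) ** 2 for _, v in rotated)
        pattern.append((theta, abs(cross) / math.sqrt(p_hh * p_vv)))
    return pattern


# Hypothetical window mixing surface-like, dihedral-like, and mixed samples.
window = [(1 + 0j, 0j, 1 + 0j), (1 + 0j, 0j, -1 + 0j), (0.3 + 0.2j, 0.1j, -0.5 + 0j)]
pattern = coherence_pattern(window)
# 360 samples over 2*pi: an offset of 90 indices is a rotation by pi/2.
print(max(abs(pattern[k][1] - pattern[k + 90][1]) for k in range(270)))  # round-off only
```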

The primary distinction between an intact building and a collapsed one lies in the alteration of the ground-wall structure. Notably, the structural characteristics of a rough surface and of a dihedral corner reflector, which is commonly used to model a building's ground-wall structure, differ significantly. Consequently, their polarimetric coherence patterns in the rotation domain can be distinguished from one another. In theory, flat surfaces may be roll-invariant, resulting in minimal co-polarization coherence fluctuations in the rotation domain. Dihedral structures, on the other hand, display evident directivity effects, leading to distinct co-polarization coherence patterns.

The co-polarization coherence fluctuation is defined as:

$$\gamma_{\mathrm{std}}=\mathrm{std}\{|\gamma_{HH\text{-}VV}(\theta)|\},\quad \theta\in[-\pi,\pi)$$

where $\mathrm{std}\{\cdot\}$ denotes the standard deviation over the rotation angle. The co-polarization coherence fluctuation is computed for the full scene. Its values for buildings are considerably larger than those for rough surfaces, which is consistent with the preceding analysis.
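The fluctuation is simply the standard deviation of the sampled coherence pattern. The sketch below assumes the closed-form rotated co-polarization components, a 1° grid over $[-\pi, \pi)$, and the population standard deviation (`statistics.pstdev`); it contrasts a hypothetical roll-invariant surface window ($S_{HH}=S_{VV}$, no cross-polarized return) with a hypothetical building-like window that mixes one surface and one dihedral return. For that mixed window the pattern works out to $\sin^2 2\theta/(1+\cos^2 2\theta)$, whose standard deviation over a full period is about 0.35.

```python
import math
import statistics


def copol_rotated(hh, hv, vv, theta):
    """S_HH(theta), S_VV(theta) for one reciprocal sample (S_HV == S_VH)."""
    mean, diff = (hh + vv) / 2, (hh - vv) / 2
    c2, s2 = math.cos(2 * theta), math.sin(2 * theta)
    return mean + diff * c2 + hv * s2, mean - diff * c2 - hv * s2


def coherence_fluctuation(samples, n_angles=360):
    """std{|gamma_HH-VV(theta)|}: population std over a uniform theta grid."""
    values = []
    for k in range(n_angles):
        theta = -math.pi + 2 * math.pi * k / n_angles
        rotated = [copol_rotated(hh, hv, vv, theta) for hh, hv, vv in samples]
        cross = sum(h * v.conjugate() for h, v in rotated)
        p_hh = sum(abs(h) ** 2 for h, _ in rotated)
        p_vv = sum(abs(v) ** 2 for _, v in rotated)
        values.append(abs(cross) / math.sqrt(p_hh * p_vv))
    return statistics.pstdev(values)


# Hypothetical windows: roll-invariant surface vs. surface + dihedral mix.
surface = [(1 + 0j, 0j, 1 + 0j), (0.5 - 0.2j, 0j, 0.5 - 0.2j)]
building = [(1 + 0j, 0j, 1 + 0j), (1 + 0j, 0j, -1 + 0j)]
print(coherence_fluctuation(surface))   # 0.0: the pattern is flat
print(coherence_fluctuation(building))  # clearly positive (about 0.35)
```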

The urban damage index was evaluated using data from the Great East Japan earthquake of 11 March 2011 and the subsequent tsunami, which caused extensive damage to coastal areas [117]. The study focuses on Miyagi Prefecture, one of the most severely affected regions. Multitemporal ALOS/PALSAR PolSAR datasets were collected, co-registered, and analyzed. The datasets were multilooked with eight looks in the azimuth direction to obtain comparable pixel sizes in azimuth and range, which facilitated comparison between the PolSAR data and the corresponding optical images. The SimiTest filter [118] with a 15 × 15 moving window was then applied to mitigate speckle and estimate the co-polarization coherence. In flooded urban areas where the ground-wall structures were hardly destroyed, the co-polarization coherence patterns $|\gamma_{HH\text{-}VV}(\theta)|$ remain largely unchanged before and after the event; they typically exhibit four-lobed shapes with large coherence variations. In contrast, for damaged urban patches, the co-polarization coherence fluctuation, denoted as $\gamma_{\mathrm{std}}$, tends to decrease after the destruction. The change in $\gamma_{\mathrm{std}}$ can therefore also indicate the degree of damage within the affected regions: the more severe the damage, the larger the change, which offers the potential to discriminate between different levels of urban damage.
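The eight-look azimuth multilooking can be sketched as block averaging of consecutive azimuth lines. This is a simplification under stated assumptions: it averages a single intensity channel, whereas for PolSAR data the covariance or coherency matrix elements would be averaged, and the function name is hypothetical.

```python
def multilook_azimuth(intensity, looks=8):
    """Average non-overlapping blocks of `looks` azimuth lines of an intensity image.

    intensity: list of azimuth lines, each a list of power values along range.
    Incomplete trailing blocks are discarded.
    """
    if looks < 1:
        raise ValueError("looks must be >= 1")
    out = []
    for start in range(0, len(intensity) - looks + 1, looks):
        block = intensity[start:start + looks]
        # zip(*block) walks the range columns of the block.
        out.append([sum(column) / looks for column in zip(*block)])
    return out


# Hypothetical 16 x 3 image whose value equals the azimuth line index.
image = [[float(row)] * 3 for row in range(16)]
print(multilook_azimuth(image))  # [[3.5, 3.5, 3.5], [11.5, 11.5, 11.5]]
```

Averaging power, not complex amplitude, is what reduces speckle variance; averaging complex samples would instead act as a coherent low-pass filter.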

The changes in the two primary scattering mechanisms, namely double-bounce scattering from ground-wall structures and odd-bounce scattering from rough surfaces, are mainly manifested in the co-polarization components $S_{HH}$ and $S_{VV}$. These changes, in turn, produce different co-polarization coherence patterns in the rotation domain. For intact buildings, the ground-wall structure can be effectively modeled as a dihedral corner reflector, which exhibits narrow directivity in the rotation domain. For damaged buildings, by contrast, the areas where the ground-wall structure has collapsed become rough surfaces, whose response is far less sensitive to rotation than that of a dihedral corner reflector. Consequently, the co-polarization coherence fluctuation $\gamma_{\mathrm{std}}$ decreases after damage occurs in urban patches, and this reduction can reveal the changes that took place in these areas between the pre- and post-event acquisitions.

The co-polarization coherence fluctuation $\gamma_{\mathrm{std}}$ is the standard deviation of the coherence values in the rotation domain. The coherence fluctuation map is computed over the urban areas defined by the urban mask provided in [18]. Compared with the pre-event data, $\gamma_{\mathrm{std}}$ decreases noticeably over the damaged regions in the post-event data. Furthermore, as expected, the magnitude of this decrease is closely related to the degree of damage in the local urban area. To exploit this relationship, a damage index is proposed, defined as the ratio of the co-polarization coherence fluctuation before and after the damage event:

$$DI=\frac{\gamma_{\mathrm{std}}^{\mathrm{pre}}}{\gamma_{\mathrm{std}}^{\mathrm{post}}}$$

where the superscripts pre and post denote the pre- and post-event acquisitions, respectively. For intact urban areas the damage index is close to 1, whereas for damaged areas it is greater than 1.
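Computed pixel by pixel, the damage index is a ratio of two fluctuation maps, $DI=\gamma_{\mathrm{std}}^{\mathrm{pre}}/\gamma_{\mathrm{std}}^{\mathrm{post}}$, restricted to the urban mask. In this sketch the map layout (nested lists), the `None` marker for pixels outside the mask, and the small `eps` guard against division by zero are assumptions for illustration; the input values are hypothetical.

```python
def damage_index_map(std_pre, std_post, urban_mask, eps=1e-12):
    """Per-pixel DI = gamma_std_pre / gamma_std_post inside the urban mask.

    Pixels outside the mask are returned as None; eps guards against a
    zero post-event fluctuation.
    """
    return [
        [pre / max(post, eps) if inside else None
         for pre, post, inside in zip(row_pre, row_post, row_mask)]
        for row_pre, row_post, row_mask in zip(std_pre, std_post, urban_mask)
    ]


# Hypothetical 2 x 2 fluctuation maps and urban mask.
pre = [[0.30, 0.30], [0.20, 0.40]]
post = [[0.30, 0.10], [0.20, 0.10]]
mask = [[True, True], [False, True]]
print(damage_index_map(pre, post, mask))  # intact pixel 1.0, damaged pixels > 1, None off-mask
```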

Quantitative comparisons were conducted for ten selected damaged urban areas and five urban patches affected only by flooding. A 3 × 3 local window was used to calculate the mean and standard deviation of the damage index $DI$. For the damaged urban areas, the index clearly increases as the damage level rises. In contrast, for the urban patches affected only by flooding, the index consistently remains around 1, indicating an undamaged condition, in agreement with the ground-truth data.
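The 3 × 3 window statistics can be sketched as follows, assuming the window is clipped at the image borders and that pixels outside the urban mask (marked `None`) are skipped. The function name and the small DI map are hypothetical.

```python
import statistics


def local_mean_std(grid, row, col, half=1):
    """Mean and population std of non-None values in a (2*half+1)^2 window.

    half=1 gives the 3 x 3 window; the window is clipped at image borders.
    Returns None if the window holds no valid values.
    """
    rows = range(max(0, row - half), min(len(grid), row + half + 1))
    cols = range(max(0, col - half), min(len(grid[0]), col + half + 1))
    values = [grid[r][c] for r in rows for c in cols if grid[r][c] is not None]
    if not values:
        return None
    return statistics.fmean(values), statistics.pstdev(values)


# Hypothetical DI map; None marks pixels outside the urban mask.
di_map = [[1.0, 1.1, 0.9],
          [1.0, 2.5, None],
          [1.2, 1.0, 1.0]]
print(local_mean_std(di_map, 1, 1))  # 3 x 3 window around the centre pixel
print(local_mean_std(di_map, 0, 0))  # clipped 2 x 2 window at the corner
```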

A fourth-order polynomial model is then fitted to obtain the analytical inversion relationship:

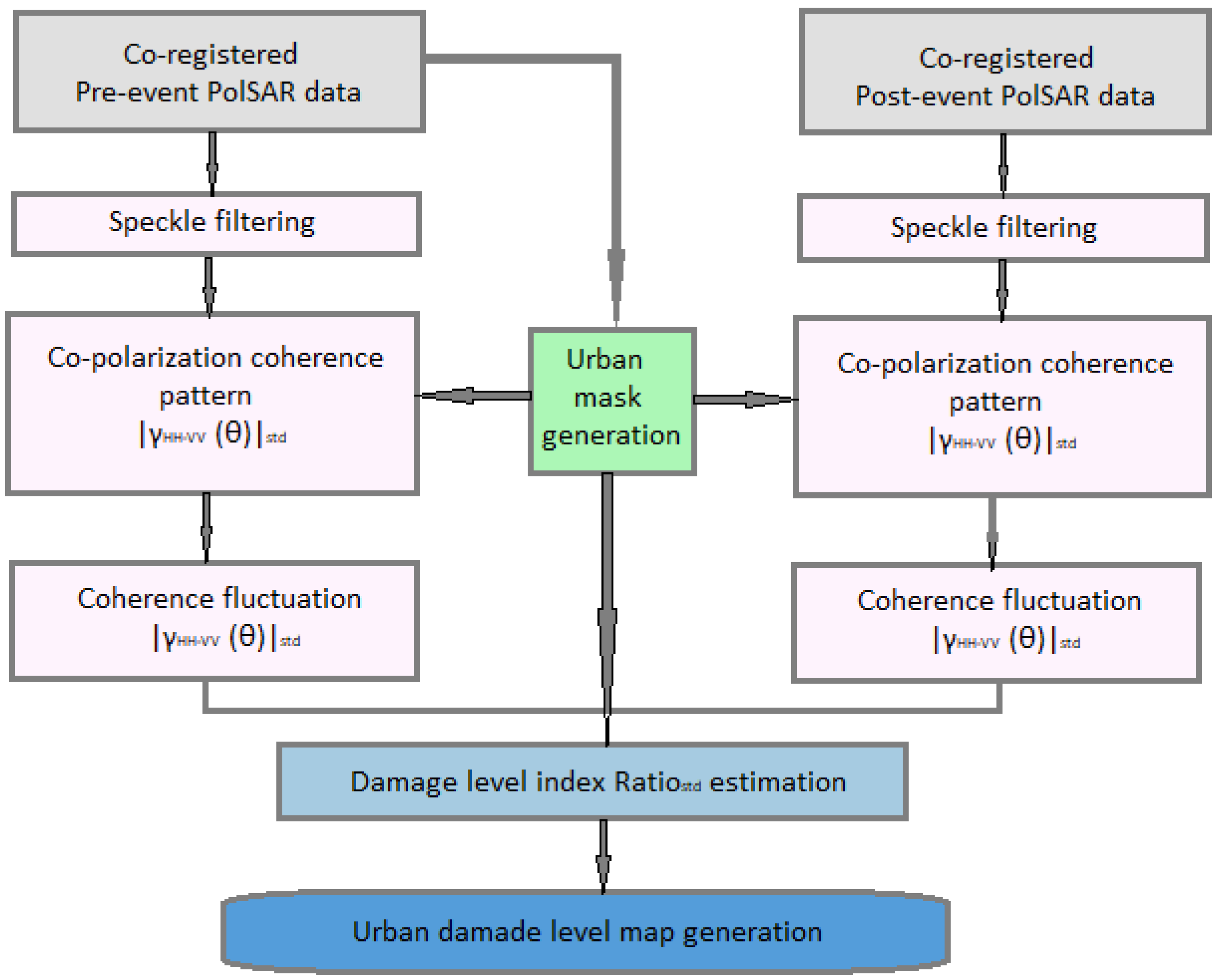

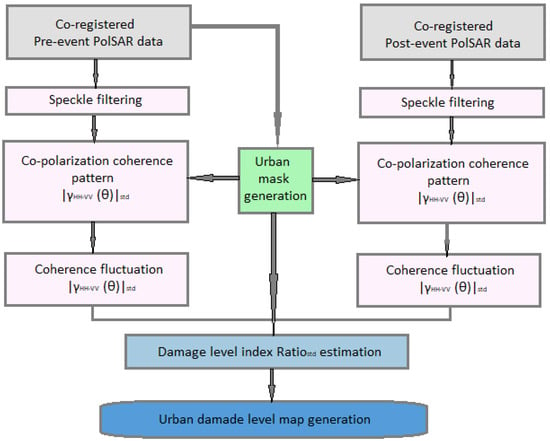

where $DL$ denotes the urban damage level and the polynomial coefficients are weighting parameters determined by the fit. Using the damage index defined in Equation (17) and the damage level inversion relationship given in Equation (18), an urban damage level mapping procedure has been developed; its flowchart is presented in Figure 6.
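The fourth-order inversion can be illustrated with an ordinary least-squares polynomial fit, $DL \approx \sum_{k=0}^{4} a_k\,DI^{k}$. The sketch below solves the normal equations with the standard library only; the calibration pairs are synthetic, generated from an arbitrary quartic, and are not values reported in the study. For real data, a library routine such as `numpy.polyfit` would normally be the more robust choice.

```python
def fit_polynomial(x, y, degree=4):
    """Least-squares fit y ~ sum_k a_k x**k; returns [a_0, ..., a_degree].

    Solves the normal equations (V^T V) a = V^T y, where V is the Vandermonde
    matrix, by Gaussian elimination with partial pivoting.
    """
    m = degree + 1
    if len(x) < m:
        raise ValueError("need at least degree + 1 points")
    a_mat = [[sum(xi ** (i + j) for xi in x) for j in range(m)] for i in range(m)]
    rhs = [sum(yi * xi ** i for xi, yi in zip(x, y)) for i in range(m)]
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(a_mat[r][col]))
        a_mat[col], a_mat[pivot] = a_mat[pivot], a_mat[col]
        rhs[col], rhs[pivot] = rhs[pivot], rhs[col]
        for r in range(col + 1, m):
            factor = a_mat[r][col] / a_mat[col][col]
            for c in range(col, m):
                a_mat[r][c] -= factor * a_mat[col][c]
            rhs[r] -= factor * rhs[col]
    coeffs = [0.0] * m
    for r in range(m - 1, -1, -1):  # back substitution
        tail = sum(a_mat[r][c] * coeffs[c] for c in range(r + 1, m))
        coeffs[r] = (rhs[r] - tail) / a_mat[r][r]
    return coeffs


def damage_level(di, coeffs):
    """Evaluate DL = sum_k coeffs[k] * di**k with Horner's rule."""
    result = 0.0
    for a in reversed(coeffs):
        result = result * di + a
    return result


# Synthetic (not measured) calibration pairs generated from a known quartic.
true_coeffs = [-0.5, 0.4, 0.1, 0.02, -0.01]
di_values = [1.0 + 0.25 * i for i in range(9)]
dl_values = [damage_level(d, true_coeffs) for d in di_values]
fitted = fit_polynomial(di_values, dl_values)
print([round(c, 6) for c in fitted])
```

Forming the normal equations squares the condition number of the Vandermonde matrix, which is acceptable for a quartic over a narrow DI range but is the main reason to prefer a QR- or SVD-based library fit in practice.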

Figure 6.

Block diagram depicting the evaluation of urban damage level mapping.

Applying the damage level inversion relationship yields an urban damage level map covering the entire scene, which indicates both the areas affected by damage and the level of damage within those areas. Experiments demonstrated the effectiveness of this method, in particular for identifying urban patches with more than 20% damage.

Other methods achieve similar classification performance for damaged areas. According to scattering interpretation theory [107], a built-up area should exhibit dominant double-bounce scattering induced by ground-wall structures before the damage. After the tsunami, however, most of these buildings were swept away and the ground-wall structures dramatically decreased, so such severely damaged areas should change to dominant odd-bounce (surface) scattering. Damage level indexes derived from polarimetric scattering mechanism analysis can be more robust than those based on intensity changes [113]. Two indicators, derived from model-based decomposition and polarization orientation (PO) angle analysis, were developed and examined in that work. A classification scheme [116] based on the combination of the two kinds of features was established for quantitative investigation and application demonstration. Comparative studies with both UAVSAR and AIRSAR PolSAR data clearly demonstrate the importance and advantage of this combination for land cover classification, with a reported classification accuracy of 94.6%. Several examples of detecting, determining, and evaluating the damage in areas affected by the 11 March 2011 earthquake and tsunami in East Japan are given in [117]; they show the very promising potential of PolSAR for disaster observation. Damaged areas can be detected with only a single post-disaster PolSAR observation.
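To illustrate the shift from dominant double-bounce to dominant odd-bounce scattering described above, the sketch below compares mean Pauli powers before and after an event. This is a deliberately simpler proxy, not the model-based decomposition or PO-angle indicators of [113]; the function names and pixel values are hypothetical.

```python
def pauli_powers(samples):
    """Mean Pauli powers over (S_HH, S_HV, S_VV) samples.

    |S_HH + S_VV|^2 / 2 -> odd-bounce (surface) scattering
    |S_HH - S_VV|^2 / 2 -> double-bounce scattering
    2 |S_HV|^2          -> cross-polarized (volume-like) scattering
    """
    n = len(samples)
    return {
        "odd": sum(abs(hh + vv) ** 2 / 2 for hh, _, vv in samples) / n,
        "double": sum(abs(hh - vv) ** 2 / 2 for hh, _, vv in samples) / n,
        "cross": sum(2 * abs(hv) ** 2 for _, hv, _ in samples) / n,
    }


def dominant_mechanism(samples):
    """Name of the Pauli component with the largest mean power."""
    powers = pauli_powers(samples)
    return max(powers, key=powers.get)


# Hypothetical pixels: a dihedral-like ground-wall patch before the event and
# a rough, bare surface after the buildings were swept away.
pre_event = [(1.0 + 0j, 0.1j, -0.9 + 0j), (0.8 + 0.1j, 0.05 + 0j, -0.7 + 0j)]
post_event = [(0.6 + 0j, 0.05j, 0.5 + 0j), (0.4 + 0.1j, 0j, 0.45 + 0j)]
print(dominant_mechanism(pre_event), "->", dominant_mechanism(post_event))  # double -> odd
```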

6. Interferometric Properties for Urban Flood Mapping [55]

In this work, interferometry and coherence are the main tools for distinguishing flooded urban areas. Their usefulness stems from the basic electromagnetic scattering properties of the polarimetric channels (HH, HV, VH, and VV), which respond differently over various kinds of areas and land covers.

Flooding is a pervasive and high-impact natural disaster with far-reaching effects on human lives, infrastructure, economies, and local ecosystems. Remote sensing data play a pivotal role by providing a systematic view of large areas, offering valuable insights into the extent and dynamics of floods. SAR sensors are among the most commonly used Earth observation sources for flood mapping, thanks to their all-weather, day-and-night imaging capability. The proliferation of SAR satellite missions has significantly shortened revisit periods and streamlined rapid flood mapping, particularly in the context of emergency response.

SAR-based flood mapping has been extensively studied and applied in rural areas [55]. Specular reflection from smooth water surfaces makes floodwater appear dark in SAR data, allowing it to be distinguished from dry land. Urban areas with low slopes and a high proportion of impervious surfaces are more vulnerable to flooding, which poses significant risks to human lives and economic infrastructure. However, flood detection in urban areas is considerably more challenging for SAR because of the complex backscattering associated with diverse building types and heights, vegetated areas, and varying road network topologies.

Several studies have shown that SAR interferometric coherence (γ) is a valuable source of information for urban flood mapping and can help address these specific challenges [119]. Urban areas are generally considered stable targets with high coherence. Their coherence decorrelation is typically driven more by the spatial separation between the satellite orbits of the two acquisitions than by the time gap between them [120]. Standing floodwater between buildings disrupts the spatial distribution of scatterers within a resolution cell. This disturbance reduces the coherence of co-event pairs relative to pre-event pairs, making it possible to detect and map urban floods from coherence changes. In [55], a method is proposed for detecting floods in urban settings by fusing SAR intensity and coherence data through a Bayesian network. The approach exploits time series of SAR intensity and coherence to map three classes: non-obstructed-flooded areas, obstructed-flooded non-coherent areas, and obstructed-flooded coherent areas. A distinctive feature of the method is that it accounts for the stability of scatterers in urban areas when merging intensity and coherence data. Additionally, the threshold values are learned directly from the data, yielding a procedure that is automatic and independent of both the sensor and the region under investigation.
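The coherence-based reasoning can be sketched with a boxcar coherence estimator, $\gamma = |\sum s_1 s_2^{*}| / \sqrt{\sum|s_1|^2 \sum|s_2|^2}$, and a simple drop test between a pre-event and a co-event pair. The fixed `min_drop` threshold, the function names, and the pixel values are hypothetical; the method of [55] instead fuses intensity and coherence in a Bayesian network with data-derived thresholds, which this sketch does not reproduce.

```python
import math


def interferometric_coherence(slc1, slc2):
    """|sum s1 s2*| / sqrt(sum |s1|^2 * sum |s2|^2) over one estimation window.

    slc1, slc2: co-registered complex samples from two acquisitions.
    """
    cross = sum(a * b.conjugate() for a, b in zip(slc1, slc2))
    p1 = sum(abs(a) ** 2 for a in slc1)
    p2 = sum(abs(b) ** 2 for b in slc2)
    return abs(cross) / math.sqrt(p1 * p2)


def coherence_drop(pre_pair, co_pair, min_drop=0.3):
    """Flag a window whose co-event coherence falls well below its pre-event coherence.

    min_drop is a hypothetical fixed threshold chosen for illustration only.
    """
    gamma_pre = interferometric_coherence(*pre_pair)
    gamma_co = interferometric_coherence(*co_pair)
    return gamma_pre - gamma_co >= min_drop, gamma_pre, gamma_co


# Hypothetical 4-pixel window: stable pre-event pair, decorrelated co-event pair.
ref = [1 + 0j, 1 + 0j, 1 + 0j, 1 + 0j]
pre_pair = (ref, [1 + 0j, 1 + 0j, 1 + 0j, 1 + 0j])
co_pair = (ref, [1 + 0j, -1 + 0j, 1j, -1j])
print(coherence_drop(pre_pair, co_pair))  # (True, 1.0, 0.0)
```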

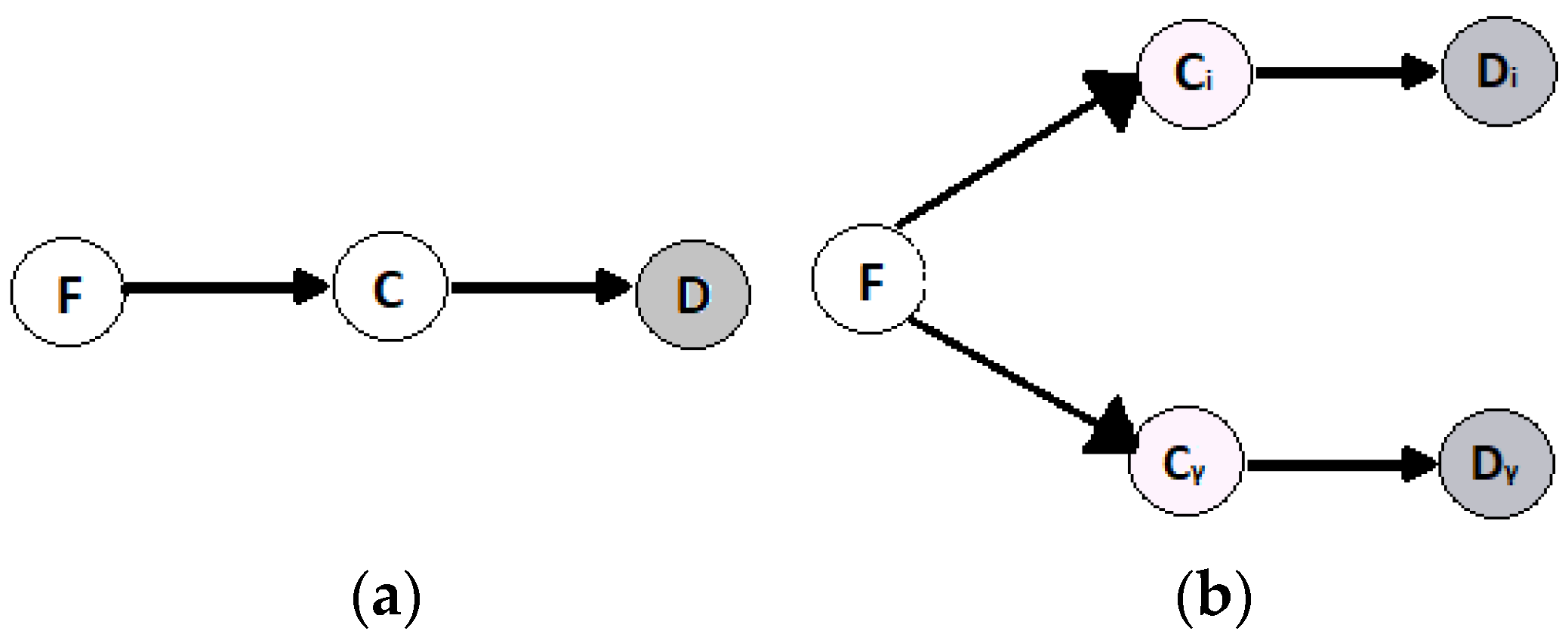

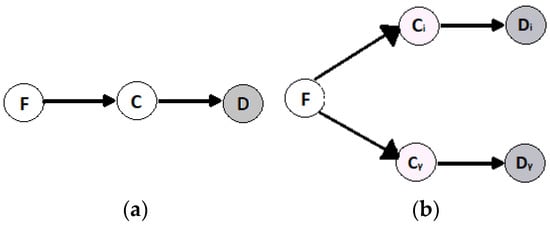

A Bayesian network is a probabilistic graphical model that concisely represents the joint probability distribution of a predefined set of random variables. It is depicted as a directed acyclic graph (DAG), in which nodes represent variables and directed links between nodes indicate dependencies among them [121]. The DAG explicitly encodes conditional independence relationships: each variable is conditionally independent of its non-descendants given its parents. Assuming $X_1, \ldots, X_n$ represent the random variables and $\mathrm{Pa}(X_i)$ denotes the parents of $X_i$ in the DAG, the joint probability distribution of a Bayesian network can be expressed as follows:

$$P(X_1,\ldots,X_n)=\prod_{i=1}^{n}P\big(X_i\mid \mathrm{Pa}(X_i)\big)$$
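The factorization $P(X_1,\ldots,X_n)=\prod_i P(X_i\mid \mathrm{Pa}(X_i))$ can be made concrete with a toy network and inference by enumeration. The network below (a hypothetical `flood` node with two child observations) and its conditional probabilities are illustrative assumptions, not the network structure or parameters used in [55].

```python
from itertools import product

# Hypothetical toy network (not the structure used in [55]):
#   flood -> intensity_drop,  flood -> coherence_drop
PARENTS = {
    "flood": (),
    "intensity_drop": ("flood",),
    "coherence_drop": ("flood",),
}
# P(node = True | parent values); the numbers are illustrative only.
CPT = {
    "flood": {(): 0.1},
    "intensity_drop": {(True,): 0.7, (False,): 0.1},
    "coherence_drop": {(True,): 0.8, (False,): 0.2},
}


def joint_probability(assignment):
    """P(X_1, ..., X_n) = prod_i P(X_i | Pa(X_i)) for a full True/False assignment."""
    p = 1.0
    for node, parents in PARENTS.items():
        p_true = CPT[node][tuple(assignment[q] for q in parents)]
        p *= p_true if assignment[node] else 1.0 - p_true
    return p


def posterior(query, evidence):
    """P(query = True | evidence) by brute-force enumeration of hidden variables."""
    hidden = [v for v in PARENTS if v != query and v not in evidence]
    numerator = denominator = 0.0
    for q_value in (True, False):
        for values in product((True, False), repeat=len(hidden)):
            assignment = {**evidence, **dict(zip(hidden, values)), query: q_value}
            p = joint_probability(assignment)
            denominator += p
            if q_value:
                numerator += p
    return numerator / denominator


# Both observations present: P(flood) rises from the 0.1 prior to about 0.757.
print(posterior("flood", {"intensity_drop": True, "coherence_drop": True}))
```

Enumeration grows exponentially with the number of hidden variables, so it only suits small networks; it is used here because it follows the factorization term by term.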