Two-Dimensional Space-Variant Motion Compensation Algorithm for Multi-Hydrophone Synthetic Aperture Sonar Based on Sub-Beam Compensation

Abstract

1. Introduction

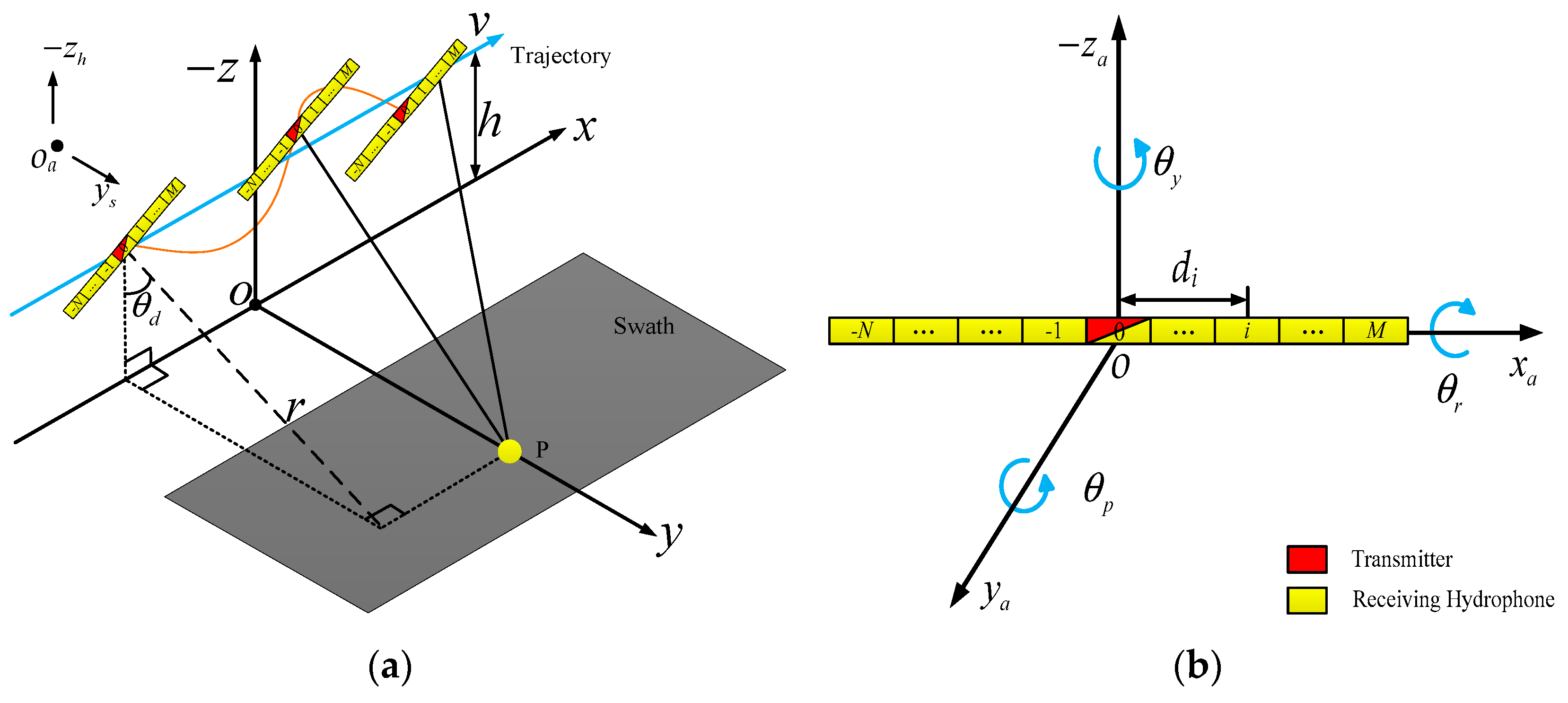

2. Range History Model

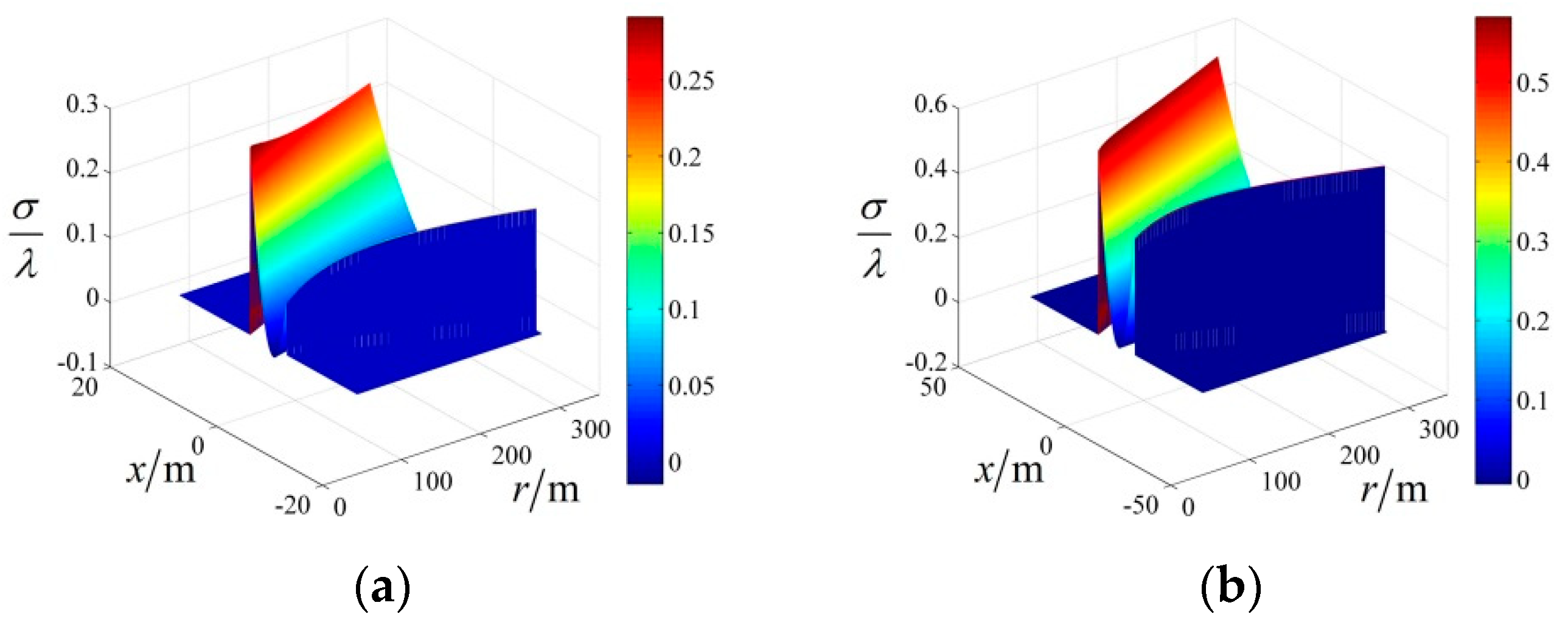

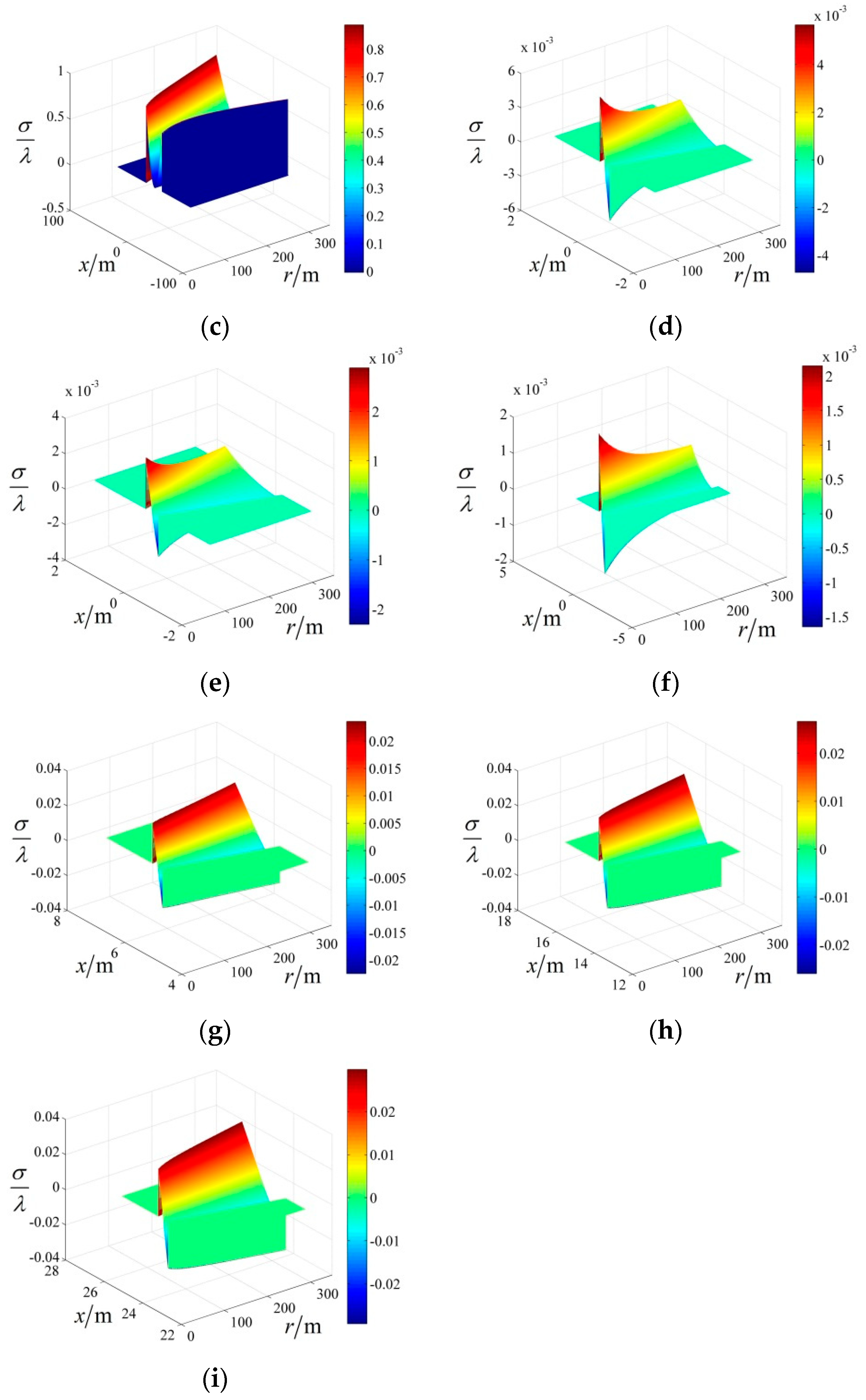

2.1. Exact Range History

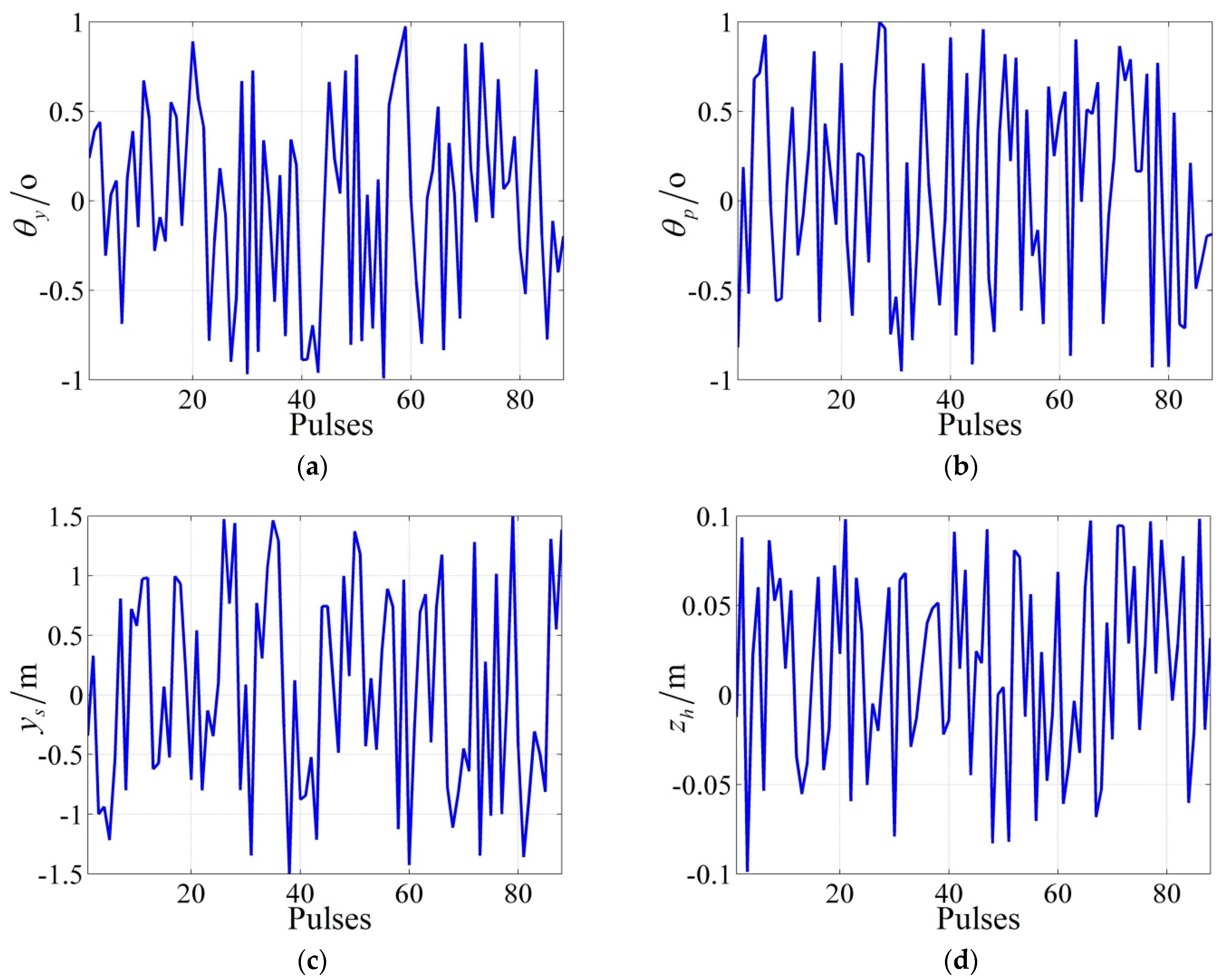

2.2. Exact Motion Error

2.3. Sub-Beam Range History

3. Signal Model

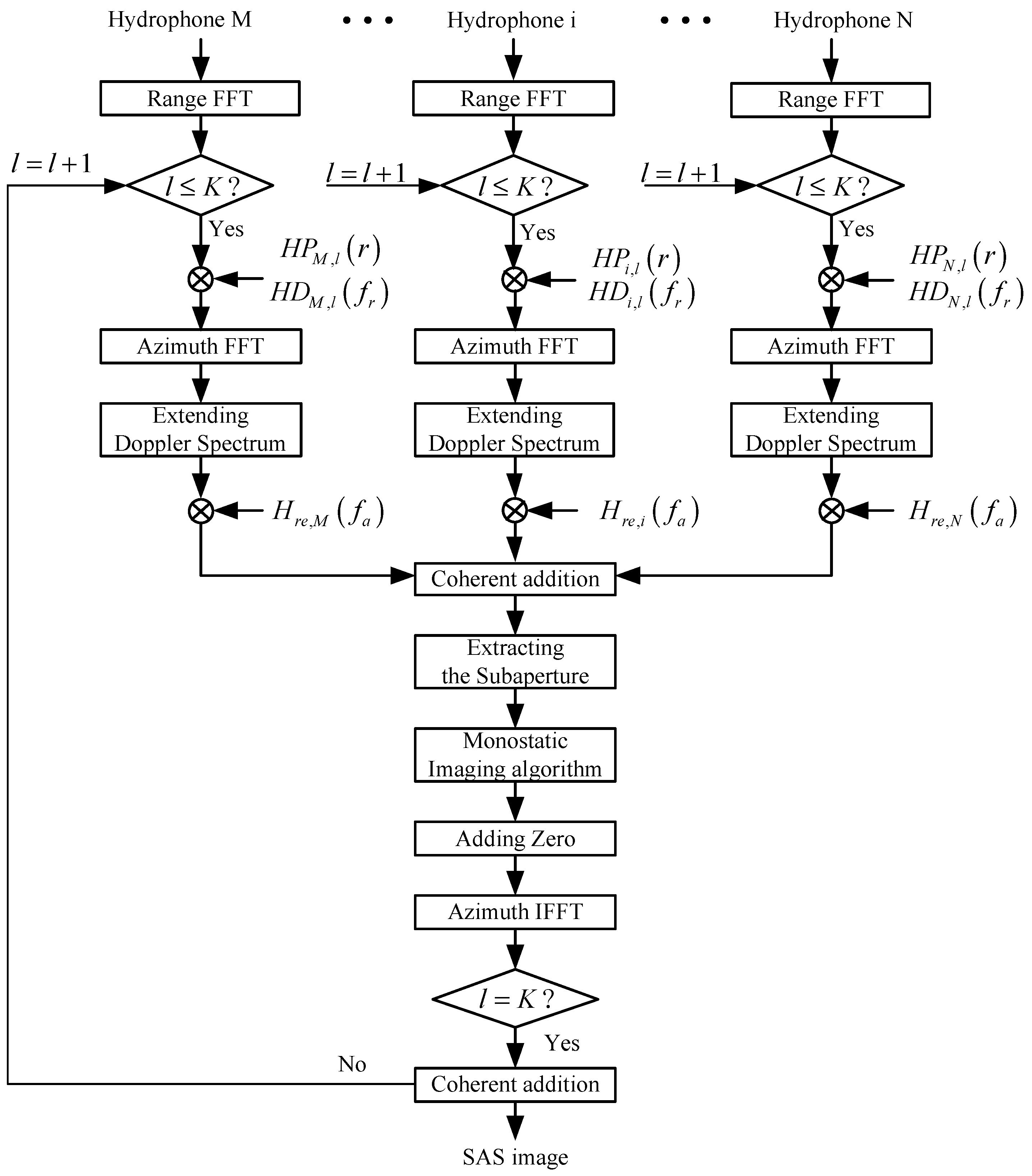

4. Development of the Motion Compensation

5. Validation Test

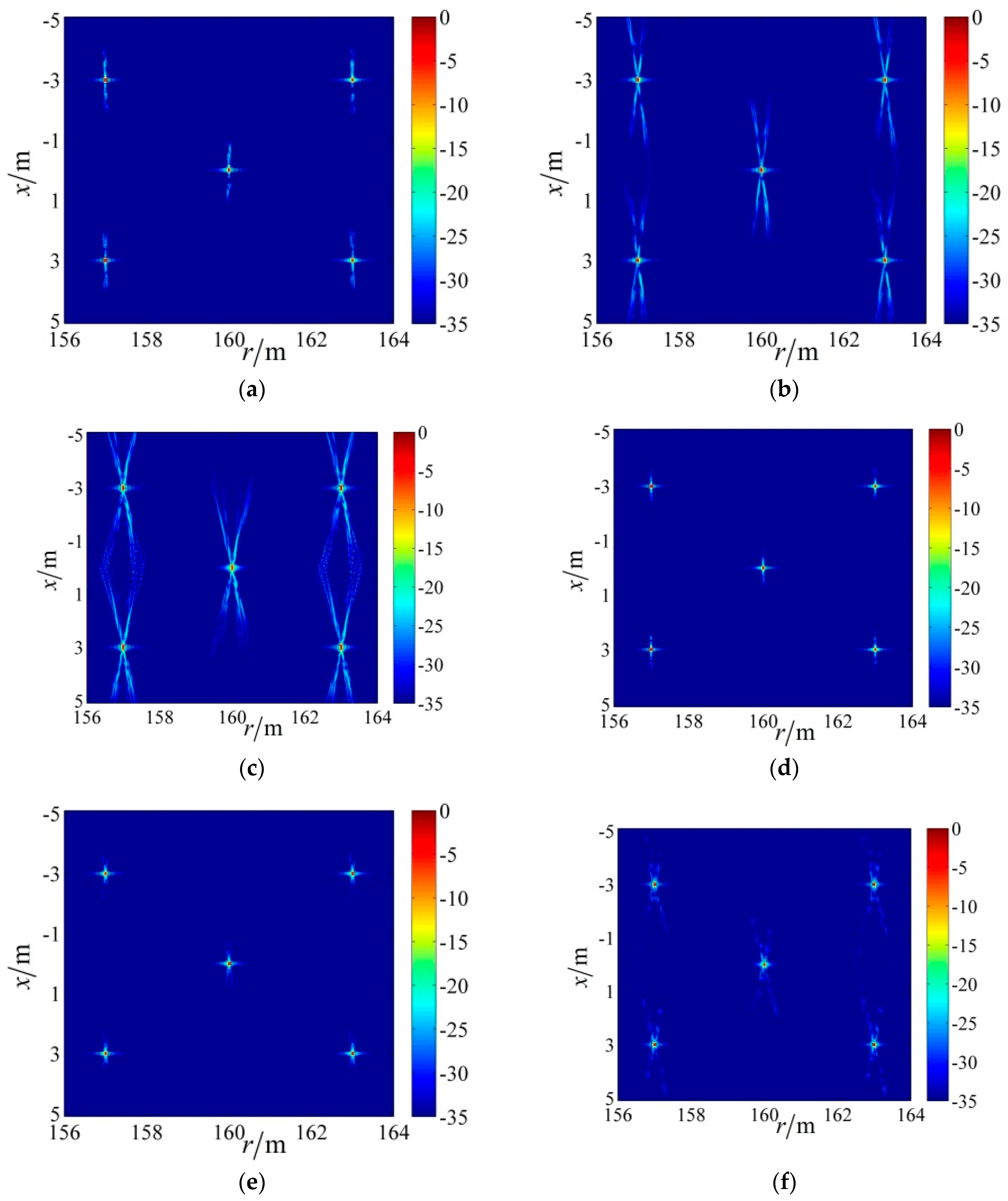

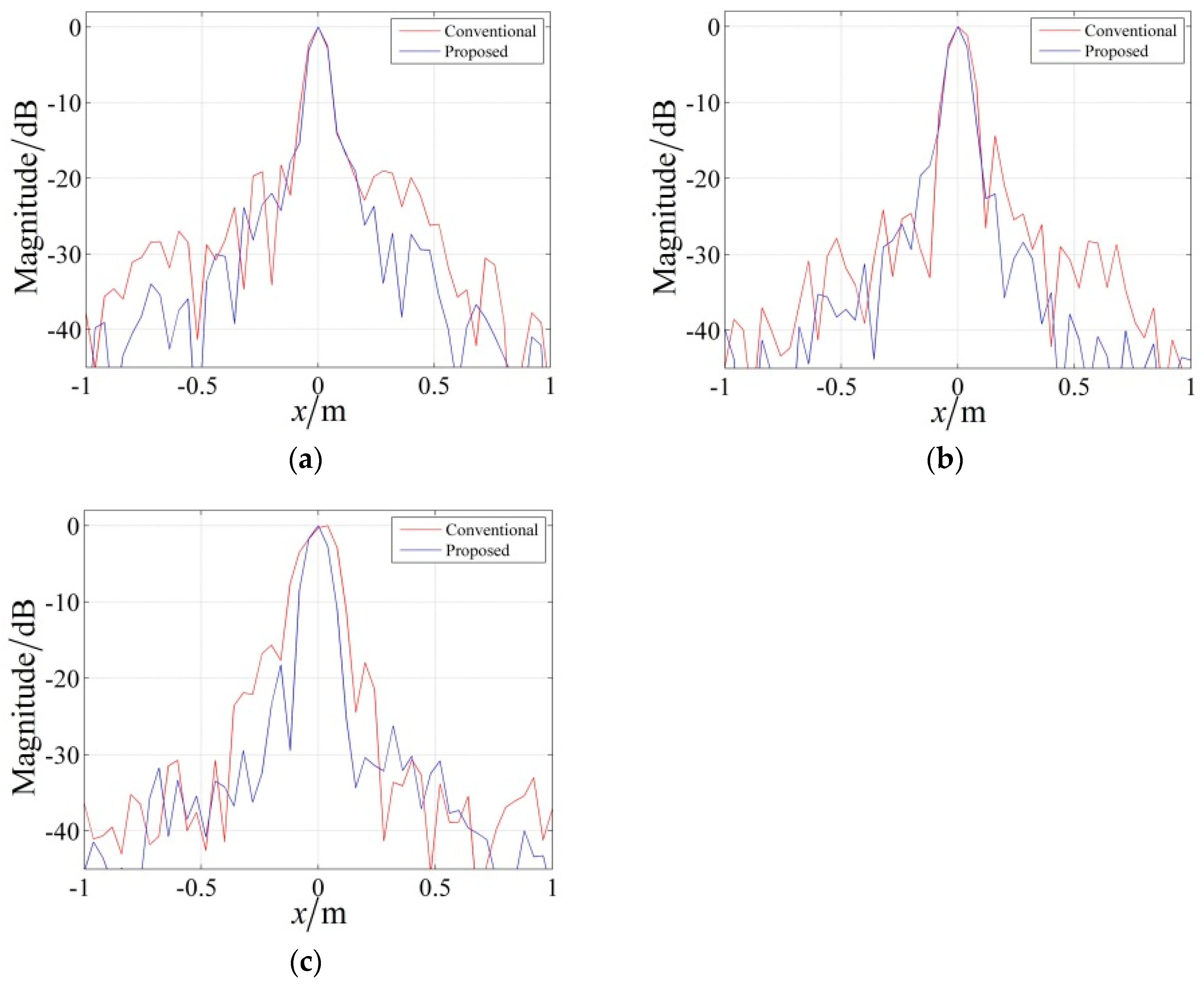

5.1. Exact Range History Imaging Results of Simulation Data

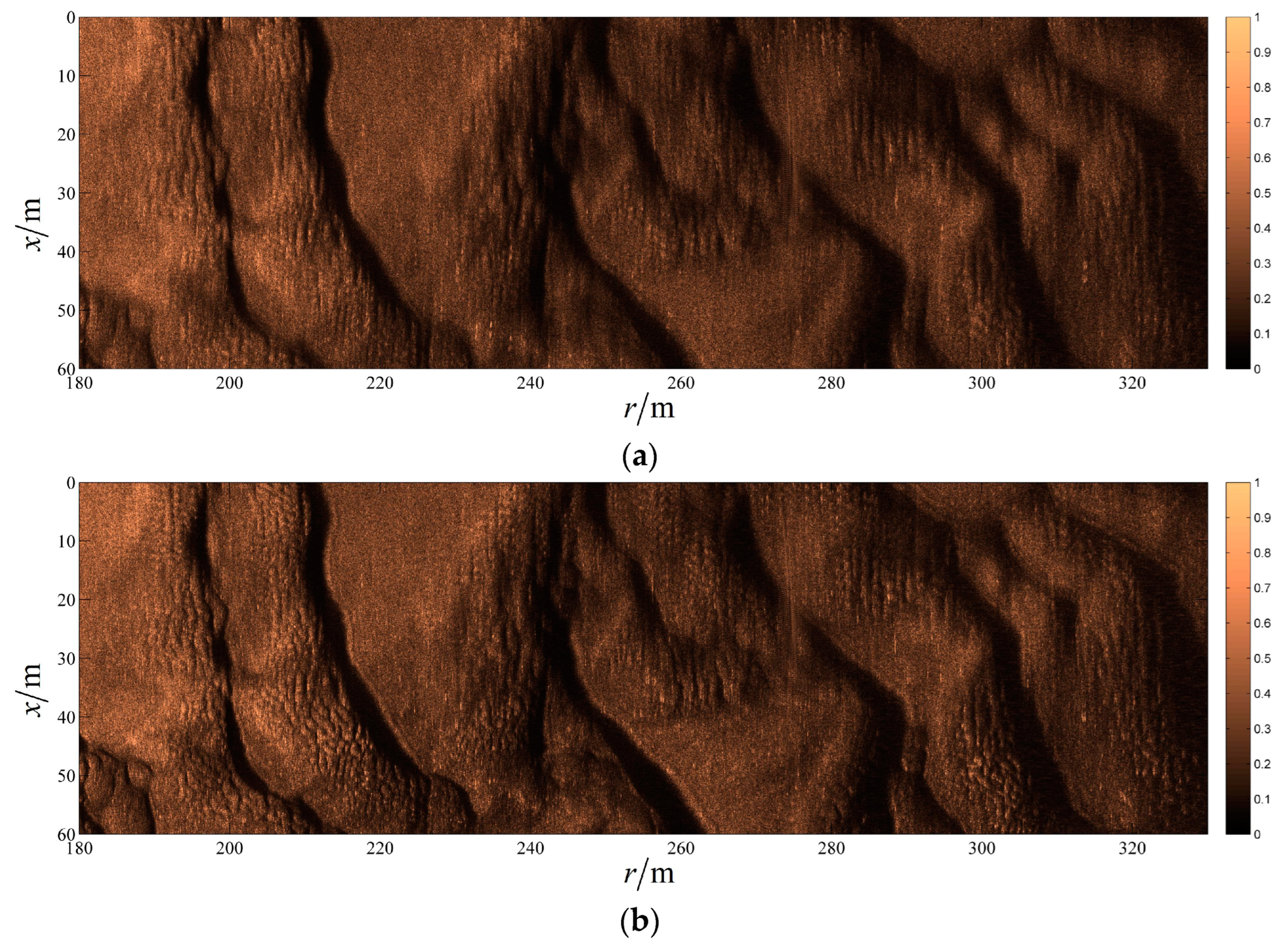

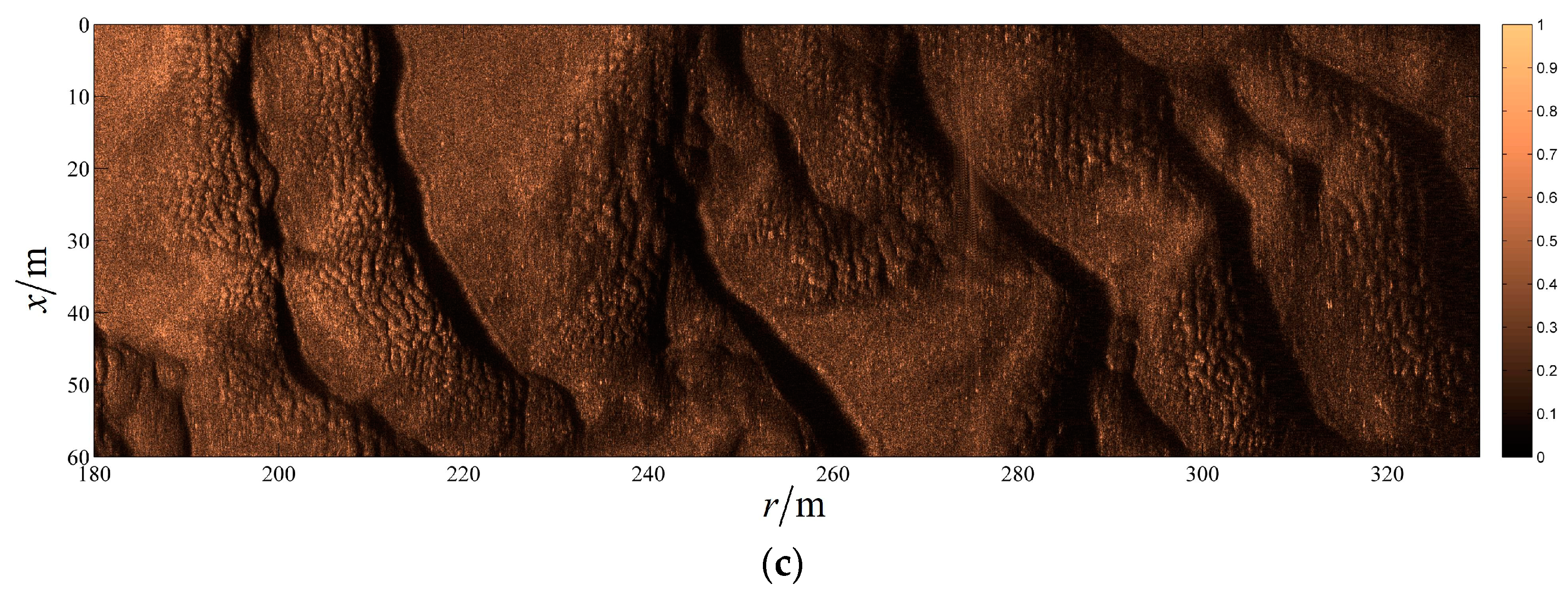

5.2. Imaging Results of Real Data

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Parameter | Value | Parameter | Value | Parameter | Value |
|---|---|---|---|---|---|
| Carrier Frequency (kHz) | 80, 32, 20 | Pulse Width (ms) | 20 | PRF (Hz) | 2.5 |
| Bandwidth (kHz) | 20 | Speed (m/s) | 2.5 | Hydrophone (m) | 0.08 |
| Altitude (m) | 30 | Transmitter (m) | 0.16 | Hydrophone number | 25 |
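As a quick consistency check on the parameters above, the sketch below compares the platform advance per ping with half the receive-array length, the usual along-track sampling condition for a multi-hydrophone system. It assumes that "Hydrophone (m)" is the along-track size of each hydrophone and that the 25 hydrophones form a contiguous array; the table does not state either, and the function names are illustrative only.

```python
# Along-track sampling check for a multi-hydrophone SAS.
# Assumption: "Hydrophone (m)" in the table is the along-track element size,
# and the elements are laid out contiguously (not stated in the source).

def ping_advance_m(speed_mps, prf_hz):
    """Distance the platform travels between two consecutive pings."""
    return speed_mps / prf_hz

def half_array_length_m(n_hydrophones, element_size_m):
    """Half the receive-array length, i.e. the along-track span covered
    by the equivalent phase centres of one ping."""
    return n_hydrophones * element_size_m / 2.0

advance = ping_advance_m(speed_mps=2.5, prf_hz=2.5)
span = half_array_length_m(n_hydrophones=25, element_size_m=0.08)

# Uniform sampling without gaps requires advance <= span.
print(f"advance per ping: {advance:.3f} m, half array length: {span:.3f} m")
print("sampling condition met:", advance <= span + 1e-12)
```

Under these assumptions the two quantities coincide, which is the configuration in which consecutive pings tile the synthetic aperture without gaps or overlap.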

| Algorithm | Beamwidth | IRW (cm) | PSLR (dB) | ISLR (dB) |
|---|---|---|---|---|
| The conventional algorithm | 6° | 8.71 | −17.80 | −11.32 |
| The proposed algorithm | 6° | 8.10 | −18.05 | −14.05 |
| The conventional algorithm | 15° | 9.99 | −14.80 | −9.08 |
| The proposed algorithm | 15° | 8.29 | −19.89 | −13.62 |
| The conventional algorithm | 24° | 14.98 | −16.01 | −7.95 |
| The proposed algorithm | 24° | 8.94 | −18.93 | −11.64 |
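The PSLR and ISLR columns above are standard point-target quality metrics. The sketch below shows one common way to compute them from a one-dimensional cut through a point-target response. It is not the authors' evaluation code: the null-to-null main-lobe definition and the ideal sinc test signal are assumptions made for illustration, and ISLR conventions in particular vary between papers.

```python
import math

def sinc(x):
    """Normalised sinc, sin(pi x) / (pi x), with the limit value at x = 0."""
    return 1.0 if x == 0.0 else math.sin(math.pi * x) / (math.pi * x)

def mainlobe_bounds(power):
    """Walk outwards from the peak to the first local minimum on each side
    (null-to-null main lobe). Returns inclusive sample indices."""
    peak = max(range(len(power)), key=power.__getitem__)
    left = peak
    while left > 0 and power[left - 1] < power[left]:
        left -= 1
    right = peak
    while right < len(power) - 1 and power[right + 1] < power[right]:
        right += 1
    return left, right

def pslr_islr_db(samples):
    """Peak and integrated sidelobe ratios, in dB, of a 1-D response cut."""
    power = [abs(s) ** 2 for s in samples]
    left, right = mainlobe_bounds(power)
    main = power[left:right + 1]
    side = power[:left] + power[right + 1:]
    pslr = 10.0 * math.log10(max(side) / max(main))
    islr = 10.0 * math.log10(sum(side) / sum(main))
    return pslr, islr

# Hypothetical test signal: an ideal, unweighted sinc response sampled
# at 200 points per resolution cell over 20 cells on each side of the peak.
xs = [(i - 4000) / 200.0 for i in range(8001)]
pslr, islr = pslr_islr_db([sinc(x) for x in xs])
print(f"PSLR = {pslr:.2f} dB, ISLR = {islr:.2f} dB")
```

For the unweighted sinc the PSLR comes out close to the textbook value of about −13.3 dB, which gives a reference point for reading the table: more negative PSLR and ISLR values indicate better sidelobe suppression.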

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, H.; Zhou, F.; Xie, Z.; Tang, J.; Zhong, H.; Zhang, J. Two-Dimensional Space-Variant Motion Compensation Algorithm for Multi-Hydrophone Synthetic Aperture Sonar Based on Sub-Beam Compensation. Remote Sens. 2024, 16, 2144. https://doi.org/10.3390/rs16122144
