4.1. Road Scenario Identification Based on Electronic Horizon

Integrity refers to a system’s ability to promptly issue effective warnings to users when its measurements become unreliable for specific operations. As the integrity of civil GNSS is not sufficient for vehicle applications, it is important to identify GNSS signals with poor accuracy to maintain the accuracy and robustness of the vehicle localization system. Generally, Geometric Dilution of Precision (GDOP) is used as an indicator to describe the geometrical arrangement of GNSS satellite constellations [

39]. Selecting the satellite constellation with the lowest GDOP is preferred, as GNSS positioning with a low GDOP value typically results in better accuracy. For example, Ref. [

40] identifies GNSS availability using the GDOP values. However, calculating GDOP is a time- and power-consuming task that involves complicated transformations and inversion of measurement matrices. The environment information stored in HD maps can be effectively used to improve GNSS integrity.

Traditional car navigation systems rely on global maps. Over the past few decades, such maps have played crucial roles in helping drivers to select the optimal routes [

41,

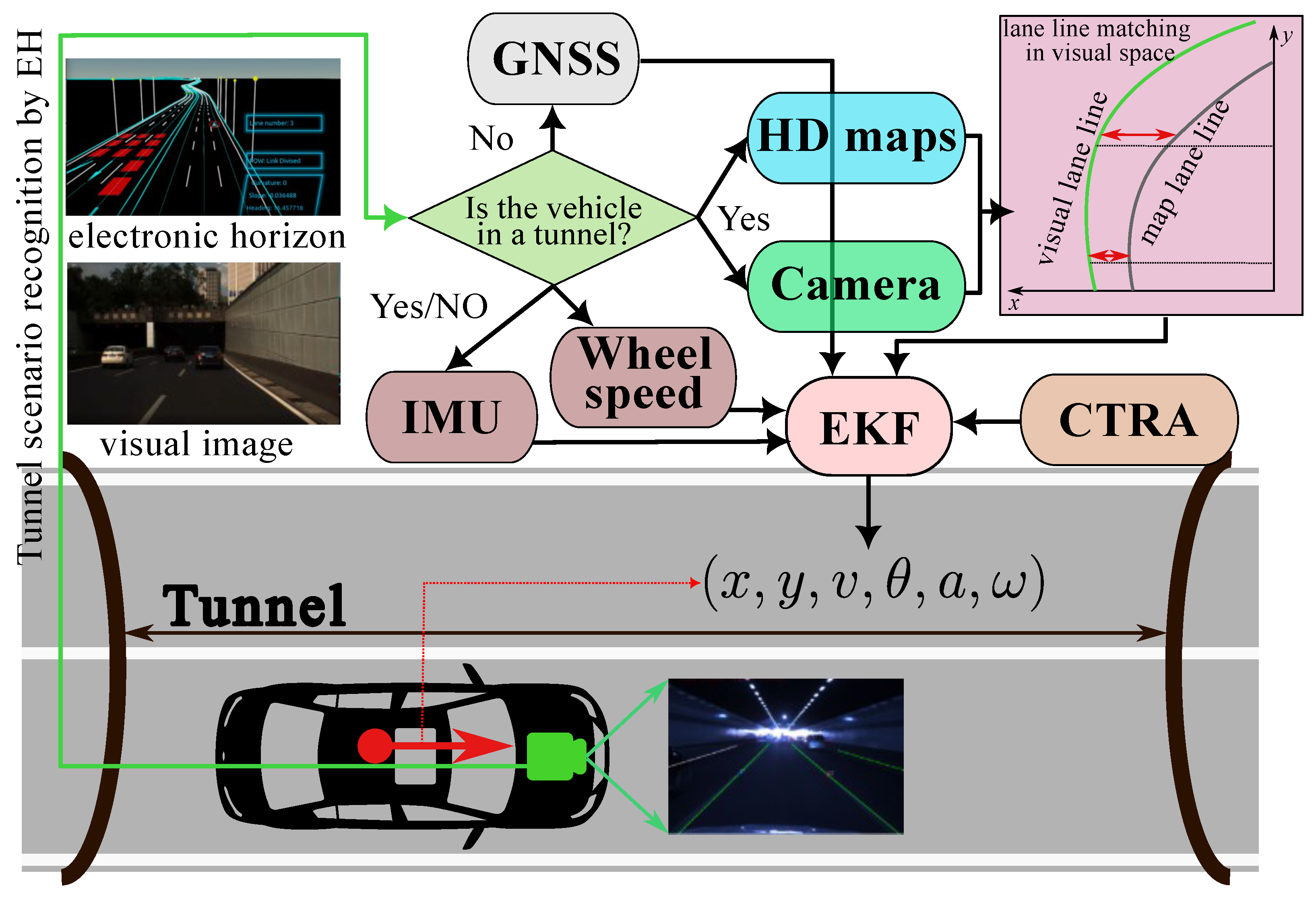

42]. However, an AV perceives surroundings from its own perspective rather than a global viewpoint. Therefore, it is more appropriate to apply HD maps to AVs using AV-centric views through the EH. A demonstration of the EH applied in our system is shown in

Figure 3.

The EH greatly reduces the amount of topology network data that need to be transmitted to applications, making the HD map applications more efficient. Various properties obtained from the HD maps can therefore be provided to indicate a vehicle’s surrounding environment along its driving route, including road types such as bridges and tunnels as well as the starting and ending positions of these tunnels. By using these pieces of information along with vehicular data, such as position and speed, it is possible to accurately identify the current road scene and predict the upcoming road scenarios.

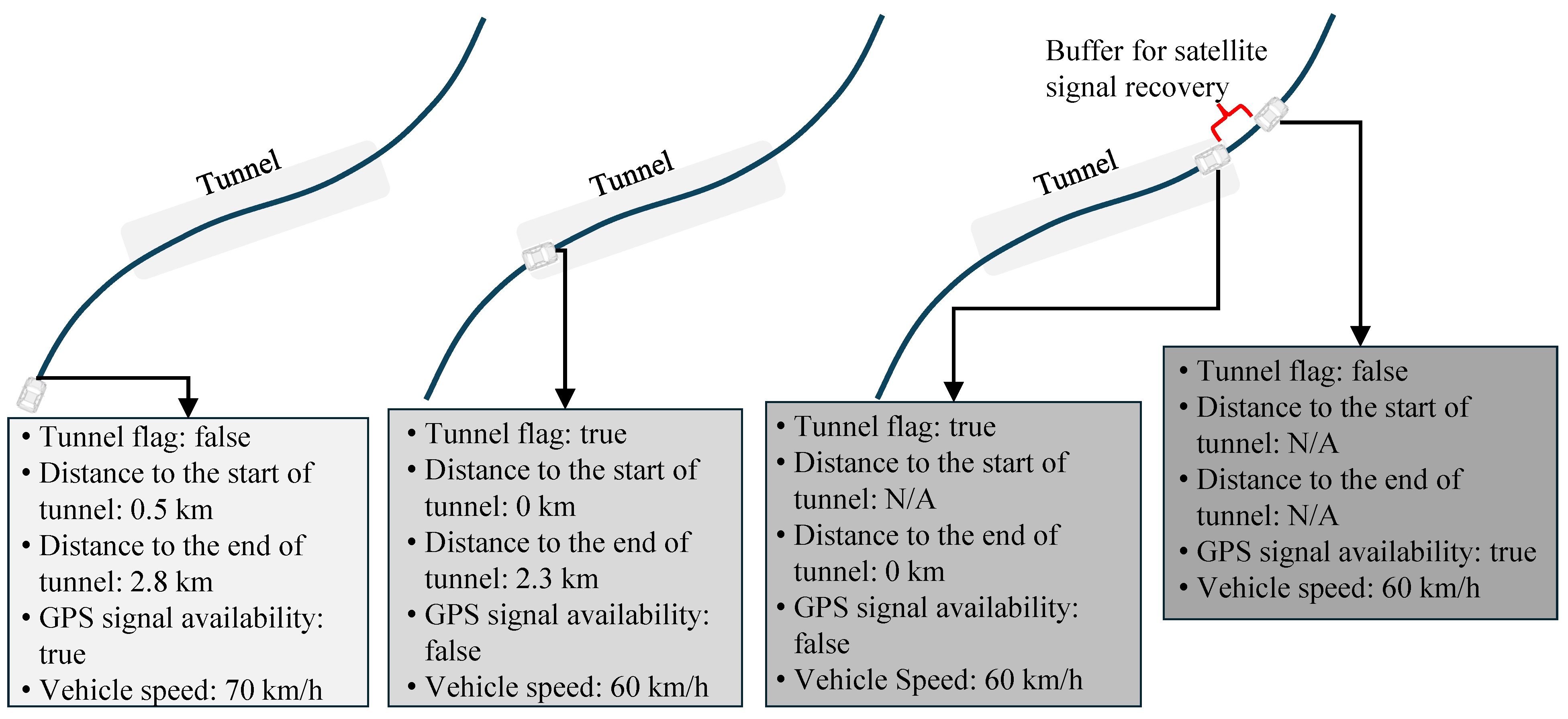

Specifically, using the EH and the current vehicle position, the distance to the upcoming tunnel can be determined, and the arrival time at the tunnel can be easily calculated.

Figure 4 illustrates the patterns of the tunnel scenario identification process as a vehicle drives through a tunnel.

4.2. Lane Line Recognition Based on Deep Learning Method

The iBiSeNet proposed in this paper is depicted in

Figure 5. This deep learning network is adopted for lane line recognition. The original BiSeNet [

36], developed by Megvii Technology, is a real-time semantic segmentation network. Using ResNet-18 as a base model, the fast version of BiSeNet achieved 74.7% mean IOU (Intersection over Union) with the CitiScapes verification datasets at 65.5 FPS [

36].

In this paper, we introduce two main modifications [

43] to the original BiSeNet:

We incorporate both local attention and multi-scale attention mechanisms, in contrast to the original BiSeNet, which uses only classic attention.

We restore images by 4 times, 4 times, and 8 times deconvolution in the last three output steps, whereas the original BiSeNet employs 8 times, 8 times, and 16 times upsampling in its last three output layers.

After these modifications, the IOU of our BiSeNet is improved to 89.69% for the white lane line recognition, as shown in

Table 1. Although the speed drops to 31 FPS, it still meets the real-time requirements in our application.

When the lane lines are recognized by the iBiSeNet, they are represented through cubic polynomials. To determine the optimal parameters of the recognized lane lines, a weighted least squares (WLS) algorithm [

44] is employed to find the best-fitting parameters

,

,

, and

for the cubic polynomial expressed by Equation (

1), where

denote the pixel coordinates in the image coordinate system.

As shown in

Figure 6, the iBiSeNet model effectively recognizes lane lines even in tunnel scenarios. The results of the cubic polynomial fitting are overlaid on the images, demonstrating the model’s accuracy in identifying and modeling the lane geometries.

4.3. Matching between Visual Lane Lines and HD Map Lane Lines

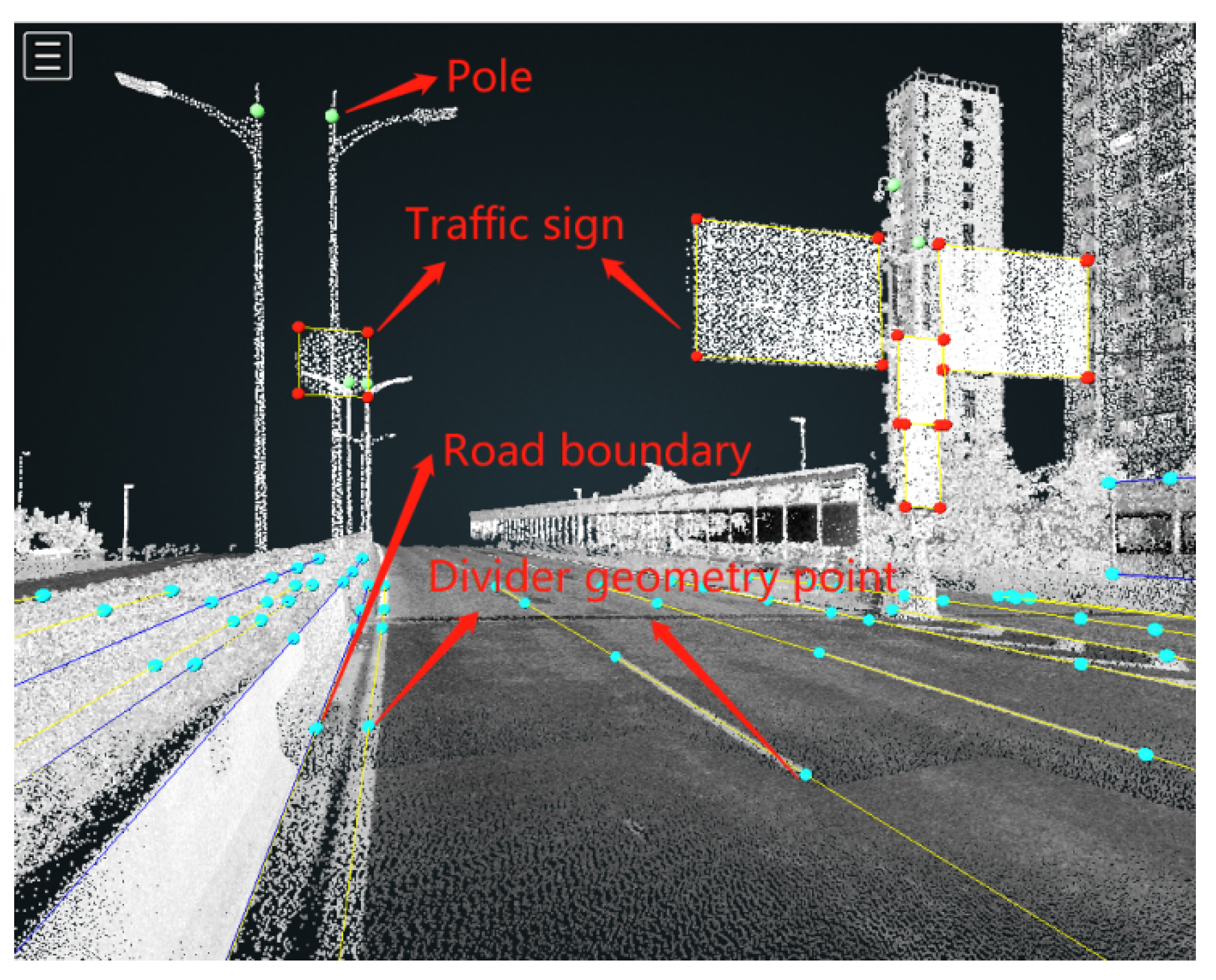

Since HD maps are pre-collected and produced, they can achieve a high spatial resolution of up to 20 cm. This accuracy is verified by using RTK control points and high-performance LiDAR point cloud data, as shown in

Figure 7.

In order to match HD map lane lines with the visual lane lines recognized by our smart camera, it is essential to transform them into the same coordinate system. In this paper, the HD map lane lines are transformed into the image coordinate system of the on-board camera. This projection involves several transformations between different coordinate systems. The detailed process is described as follows.

As the HD maps are represented in a world coordinate system, firstly, we need to transform the world coordinates

into camera body coordinates

through a rigid transformation using a rotation matrix

and a translation vector

:

This equation can be further written in a homogeneous coordinate form:

where

is called the extrinsics of the camera, which are calibrated using the classical vanishing method [

45], in this paper.

Then, the camera body coordinates are transformed into to image coordinates through:

where

f is the focal length of the camera.

The image coordinates are further transformed into pixel coordinates via:

where

and

are the pixel length in the

x and

y direction, and

is the principal point.

In summary, the transformation from the world coordinates

to pixel coordinates

is achieved through:

where

and

is called the intrinsics of the camera.

Once the HD map lane lines are projected to the pixel coordinate system, three deviation variables—vertical deviation

, horizontal deviation

, and yaw deviation

—are applied to match the lane lines of HD maps with those recognized by the camera. The matching process, illustrated in

Figure 8, is a typical nonlinear optimization problem. We seek the optimal vector, as expressed in Equation (

8), to minimize the differences between the projected HD map lane lines and the visually recognized lane lines at specified positions

.

Therefore, the following object function is designed:

where

is the

y coordinate data of map lane lines in visual space at position

, and

is the cubic polynomial function of a visual lane line that is recognized by the on-board camera.

We employ the Levenberg–Marquardt (LM) method [

46] to solve this object function, using feature points at

in this paper.

In order to match the visual lane line with the HD map lane line, we firstly calculate the deviated positions via applying the deviation vector

to the visual lane line that is described by Equation (

1):

At feature position

, the residual distance

between the map lane line and the visual lane line can be calculated through:

Substituting

into Equation (

10), we can calculate the residual distance at the first feature point:

and for

, the residual distance at the second feature point is calculated through:

These two residual distances

and

, functions of the deviation vector

, are used to feature the distance between the visual lane line and HD map lane line. To minimize the sum of these residual distances, matching these lane lines, the following object function is constructed:

This function can be solved through the LM algorithm. Firstly, the Jacobian of Equation (

14) is calculated through:

where

Then, according to the LM algorithm, the optimal

can be found using the following iteration equation:

where

is the damping parameter.

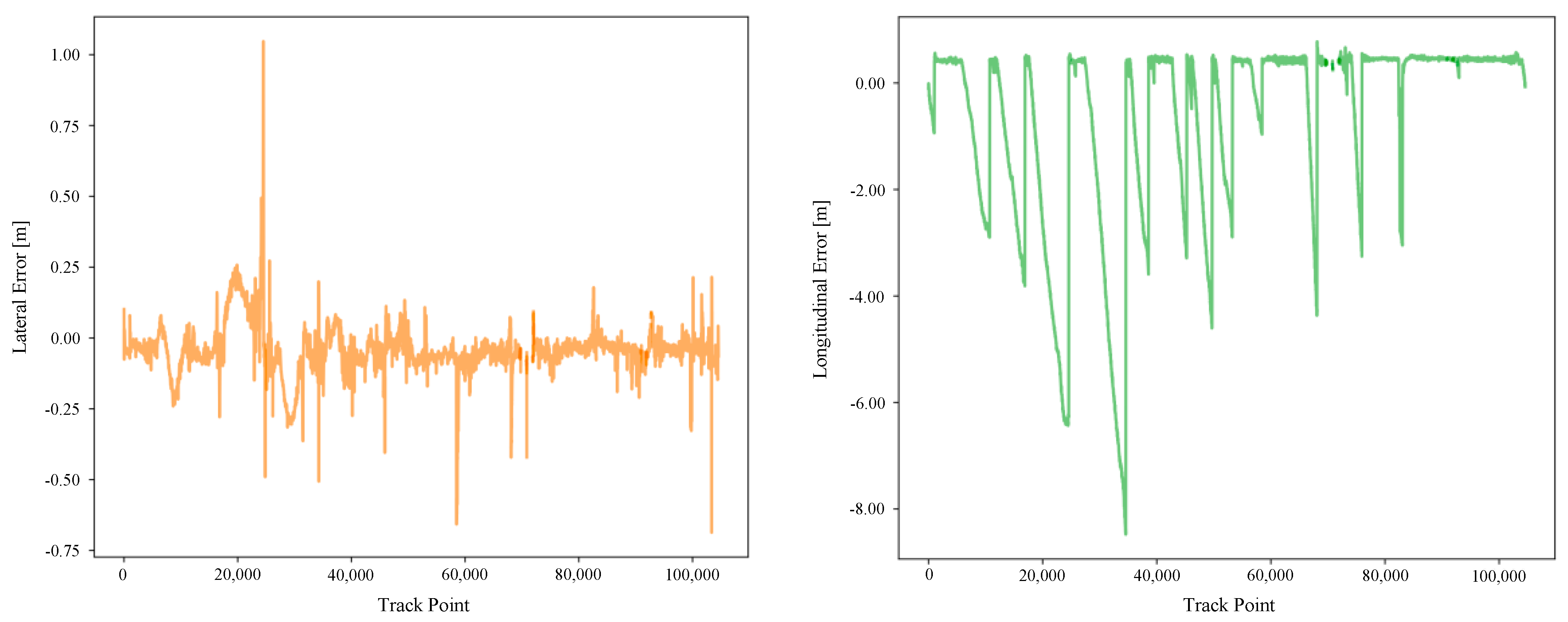

As the visual lane line and HD map lane line are matched, consequently, we are able to acquire accurate geometry data from HD maps to correct our vehicle pose even when GNSS data are unavailable.

4.4. Multi-Source Information Fusion for Vehicular Localization Using EKF

The EKF and unscented KF (UKF) are standard solutions for nonlinear state estimation. In EKF, the nonlinearities are approximated analytically through first-order Taylor expansion. In contrast, UKF uses a set of deterministically sampled sigma points to capture the true mean and covariance of the Gaussian random variables and, when propagated through the true nonlinearity, captures the posterior mean and covariance accurate to the third order (Taylor expansion) for any nonlinearity. This feature allows UKF to achieve accurate estimations in highly nonlinear and dynamic motion scenarios [

47].

However, in vehicle state estimation, the vehicle’s motion is approximately linear in a short time interval. Our previous study [

48] shows that the accuracy performance of EKF and UKF is close, whereas EKF demonstrates higher speed than UKF. Even in more complex vehicle motion prediction tasks [

49], EKF is primarily used. To ensure the system’s high efficiency, an EKF that cooperates with a constant turn rate and acceleration (

CTRA) model is employed in this paper. The system state is:

where

is the position coordinates,

the yaw angle,

the velocity,

the acceleration, and

the yaw rate at instant

k. The system is described by the

CTRA model as follows:

where

and

In our EKF, the vehicle state prediction is performed by the above

CTRA model:

and the covariance is predicted through:

where

is the known system covariance matrix, and

is the Jacobain of the

CTRA model.

As soon as the on-board sensor/visual matching measurements are available, the EKF’s prediction is corrected through:

where

is the measurement function and

is the Kalman gain matrix, which is as follows:

where

is the measurement covariance, and

is the Jacobian function of measurement model

. In this paper,

, due to the following measurement models that are applied in different scenarios being linear:

If a vehicle is outside a tunnel, the position measurements are directly derived from the GNSS at 10 Hz:

If the vehicle is driving in a tunnel, where the GNSS signals are unavailable, the vehicle’s pose measurements are obtained through vision-and-HD-map matching at 30 Hz:

The IMU and wheel speed measurements, which remain obtainable, are directly used to update the vehicle’s acceleration, yaw rate, and velocity at 100 Hz:

Finally, the covariance matrix is updated through:

The details of the EKF’s implementation, including initialization, parameter configurations, Jacobains, and time synchronization, can be found in [

48].