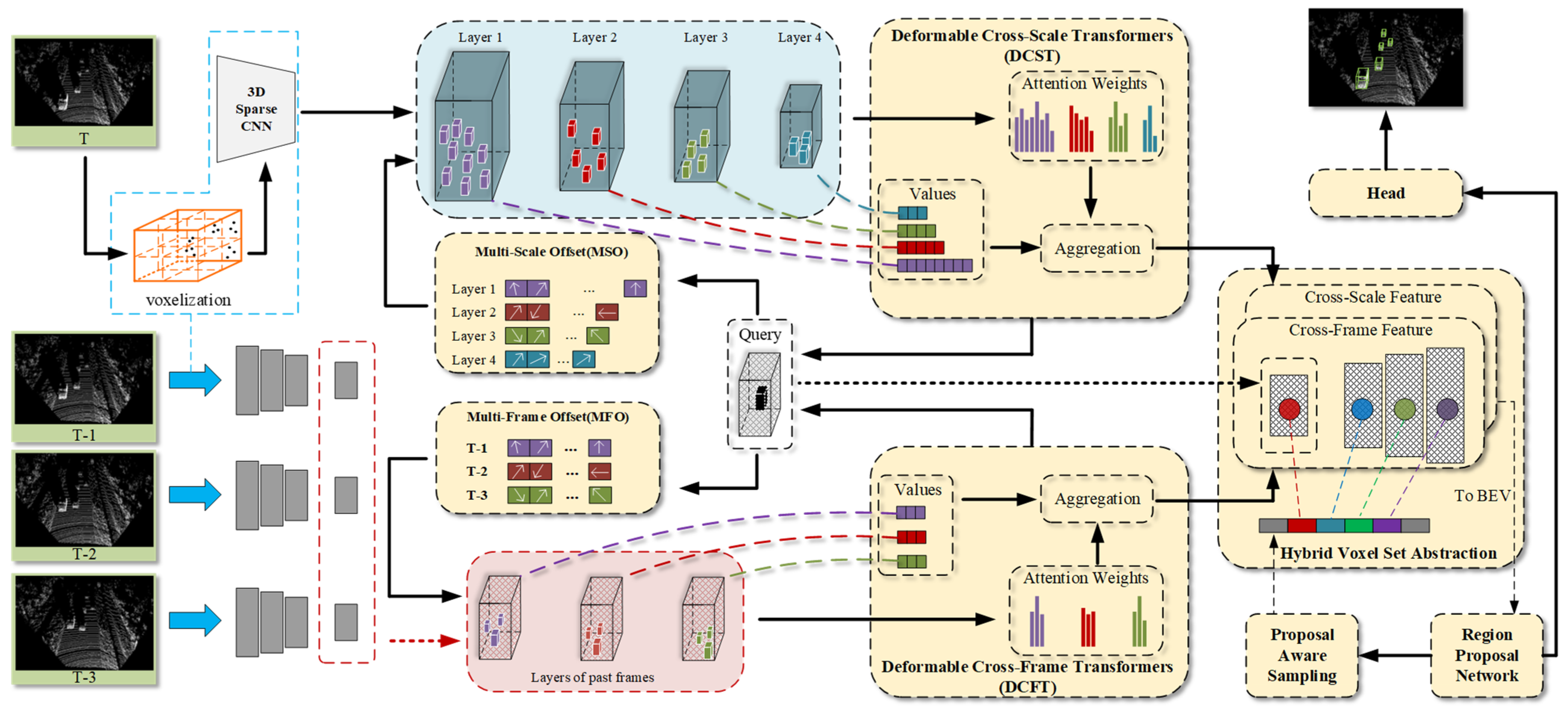

DS-Trans: A 3D Object Detection Method Based on a Deformable Spatiotemporal Transformer for Autonomous Vehicles

Abstract

1. Introduction

- (1)

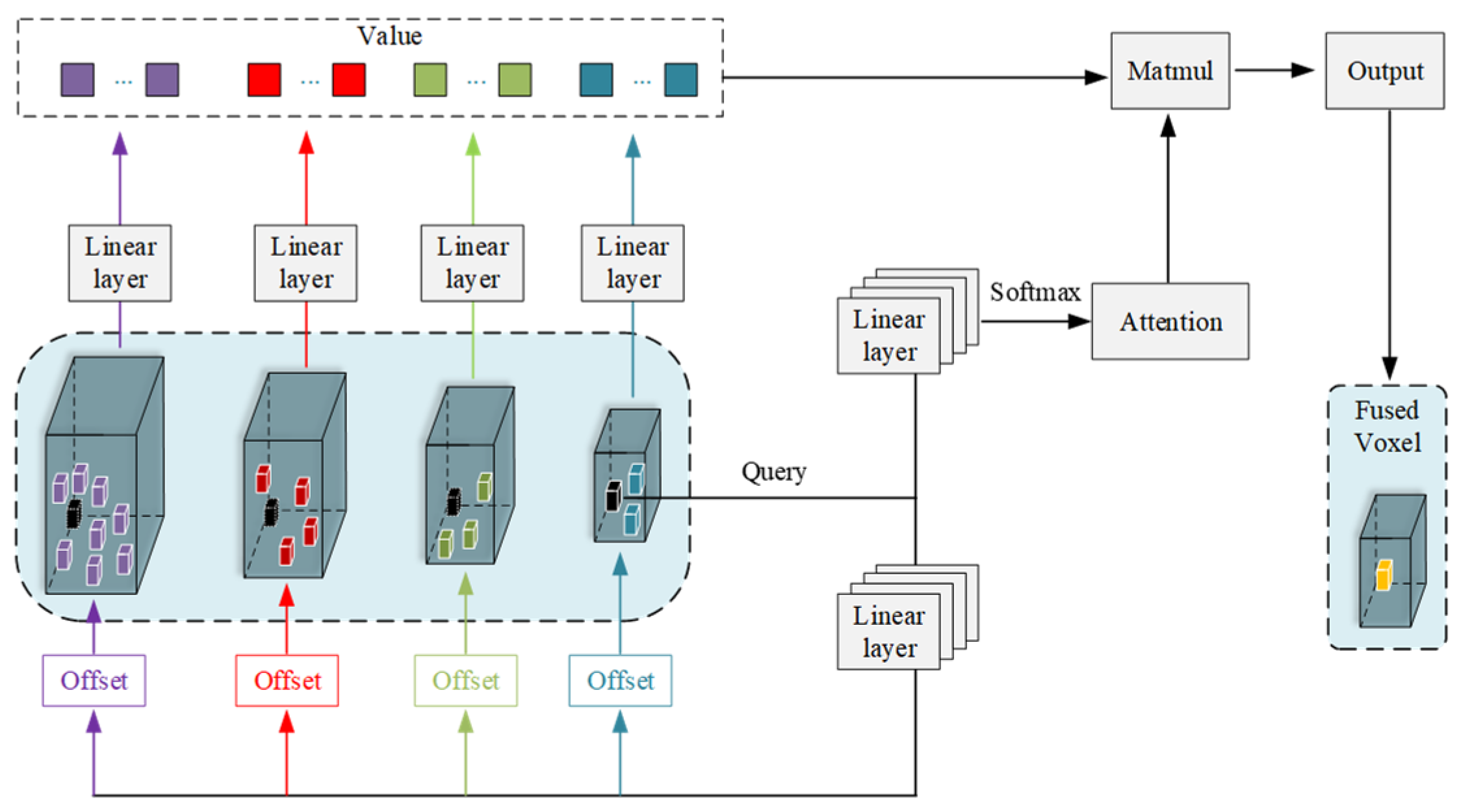

- Addressing the issue of fine-grained feature misalignment in deformable attention mechanisms, we propose a Deformable Cross-Scale Transformer (DCST) module. This module employs a multi-scale offset mechanism, enabling non-uniform sampling of features across different scales. This enhances the spatial information aggregation capability of the output features.

- (2)

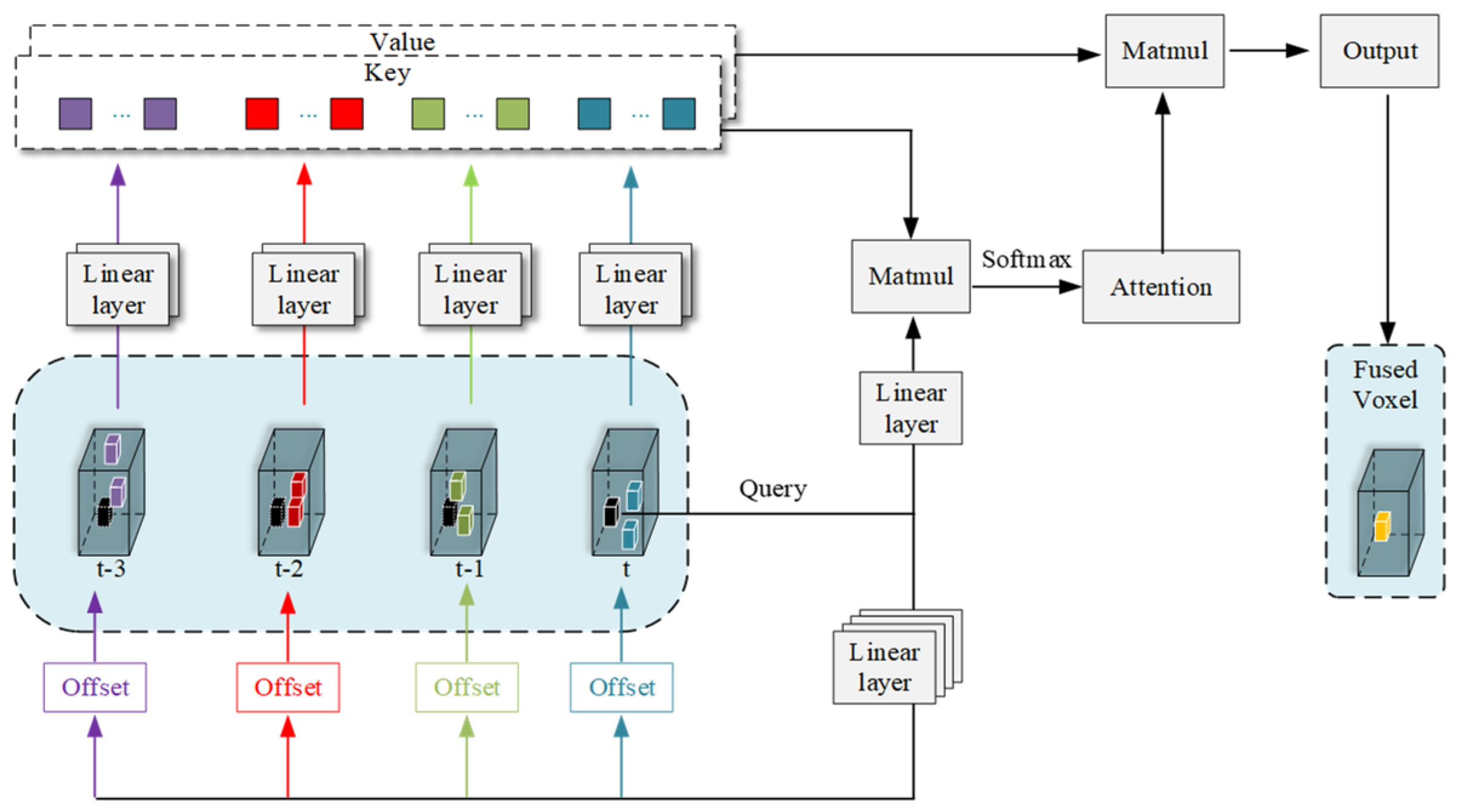

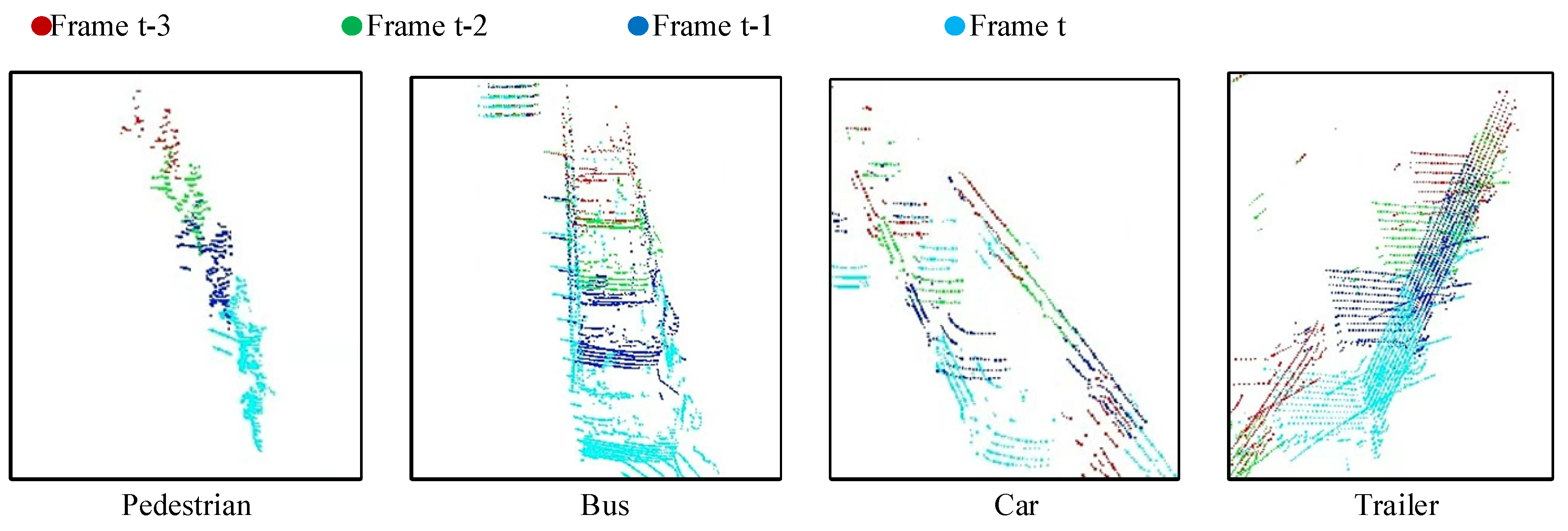

- Tackling the problem of feature misalignment caused by object motion in multi-frame feature fusion, we introduce a Deformable Cross-Frame Transformer (DCFT) module. Independent learnable offset parameters are assigned to different frames, allowing the model to adaptively associate dynamic features across multiple frames, thereby improving the model’s utilization of temporal information.

- (3)

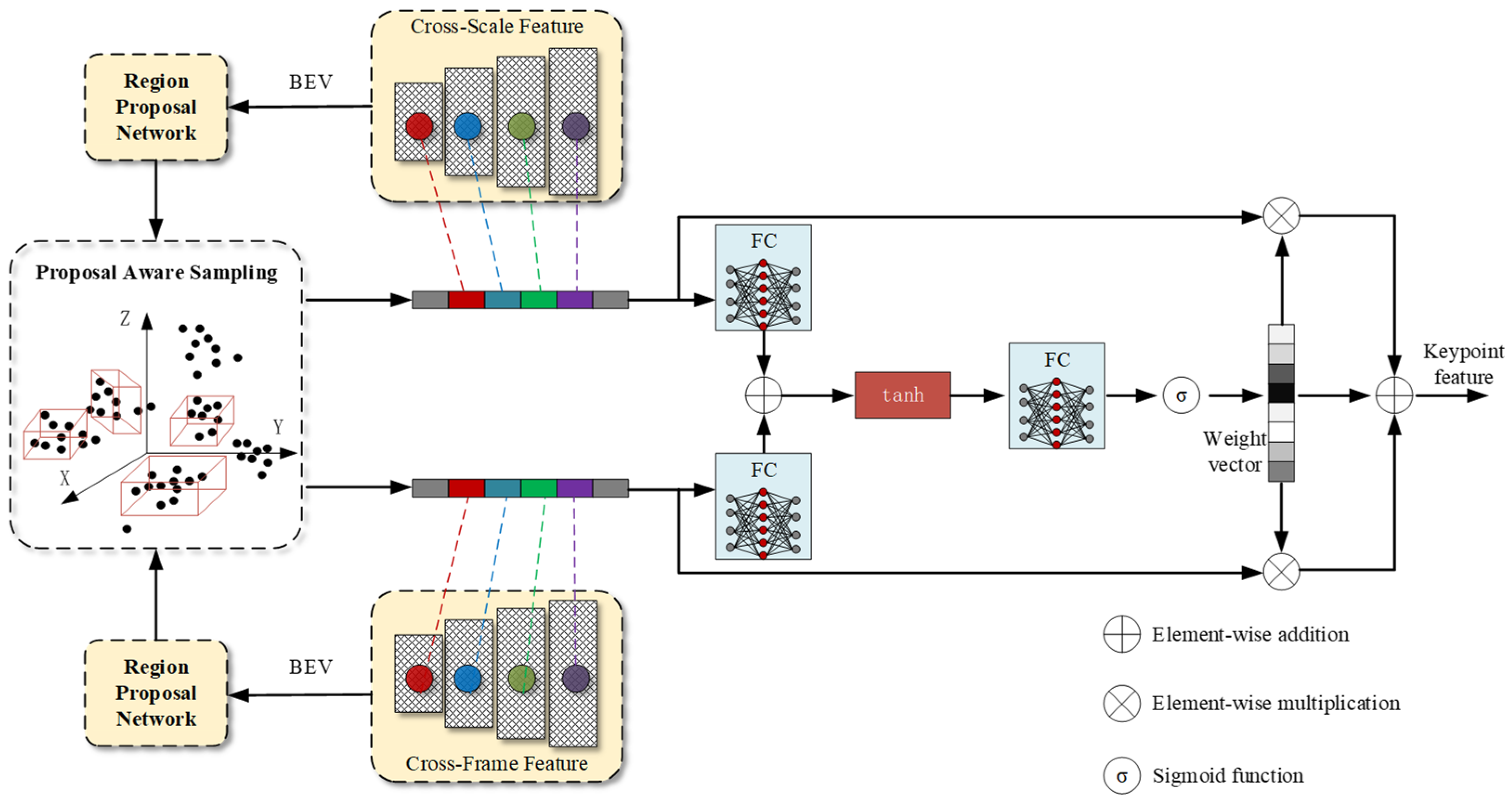

- To fully exploit information from cross-scale and cross-frame features, we present a Hybrid Voxel Set Extraction (HVSE) module. By predicting fusion weights for two types of feature vectors, the model adaptively obtains hybrid features containing both spatial and temporal information. We also devise a Proposal-Aware Sampling (PAS) algorithm to enhance foreground point recall rates, further optimizing the model’s feature extraction efficiency.
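Contributions (1) and (2) both rest on deformable attention in which each sampling "level" learns its own offsets: one set per feature scale in DCST, one set per frame in DCFT. The sketch below is a minimal NumPy version of generic multi-level deformable attention for a single query, in the style of Deformable DETR [43]; it is not the authors' DCST or DCFT implementation. The function names, the weight shapes (`W_off`, `W_attn`, `W_val`), random weights, and the simplified mapping of the normalised reference point onto each level's pixel grid are illustrative assumptions.

```python
import numpy as np


def bilinear_sample(feat, x, y):
    """Bilinearly sample an (H, W, C) map at continuous pixel coords (x, y).

    Neighbours that fall outside the map contribute zero (zero padding).
    """
    H, W, C = feat.shape
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    out = np.zeros(C)
    for xi in (x0, x0 + 1):
        for yi in (y0, y0 + 1):
            w = (1.0 - abs(x - xi)) * (1.0 - abs(y - yi))
            if 0 <= xi < W and 0 <= yi < H:
                out += w * feat[yi, xi]
    return out


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def multi_level_deformable_attention(query, ref_xy, levels, W_off, W_attn, W_val):
    """Deformable attention for one query over L levels (hypothetical helper).

    query:  (C,) query feature
    ref_xy: (2,) reference point normalised to [0, 1] as (x, y)
    levels: list of L maps (H_l, W_l, C); a level may be a BEV scale
            (cross-scale case) or a past frame (cross-frame case)
    W_off:  (L, K, 2, C) a separate offset projection per level, so each
            scale/frame predicts its own K pixel offsets
    W_attn: (L * K, C) attention logits, normalised jointly over all samples
    W_val:  (C, C) value projection
    """
    L, K = W_off.shape[:2]
    attn = softmax(W_attn @ query).reshape(L, K)
    out = np.zeros(W_val.shape[0])
    for l, feat in enumerate(levels):
        H, W, _ = feat.shape
        # Map the shared reference point into this level's pixel grid.
        base_x, base_y = ref_xy[0] * (W - 1), ref_xy[1] * (H - 1)
        offsets = W_off[l] @ query  # (K, 2): level-specific offsets
        for k in range(K):
            v = bilinear_sample(feat, base_x + offsets[k, 0], base_y + offsets[k, 1])
            out += attn[l, k] * (W_val @ v)
    return out


# Usage: two levels of different resolution (e.g. two BEV scales or two frames).
rng = np.random.default_rng(0)
C, K = 4, 3
levels = [rng.normal(size=(16, 16, C)), rng.normal(size=(8, 8, C))]
query = rng.normal(size=C)
W_off = 0.5 * rng.normal(size=(2, K, 2, C))
W_attn = rng.normal(size=(2 * K, C))
out = multi_level_deformable_attention(query, np.array([0.4, 0.6]), levels, W_off, W_attn, np.eye(C))
print(out.shape)  # (4,)
```

Because `W_off` is indexed by level, pushing the offsets of one level (say, an older frame) somewhere else leaves the sampling positions of the other levels unchanged; that independence is the property contributions (1) and (2) describe.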

2. Related Work

2.1. 3D Object Detection Based on Multi-Frame Data

2.2. 3D Object Detection Based on Attention Mechanism

3. The Framework of the Deformable Spatiotemporal Transformer

3.1. 3D Feature Extraction

3.2. Deformable Cross-Scale Transformer Module

3.3. Deformable Cross-Frame Transformer Module

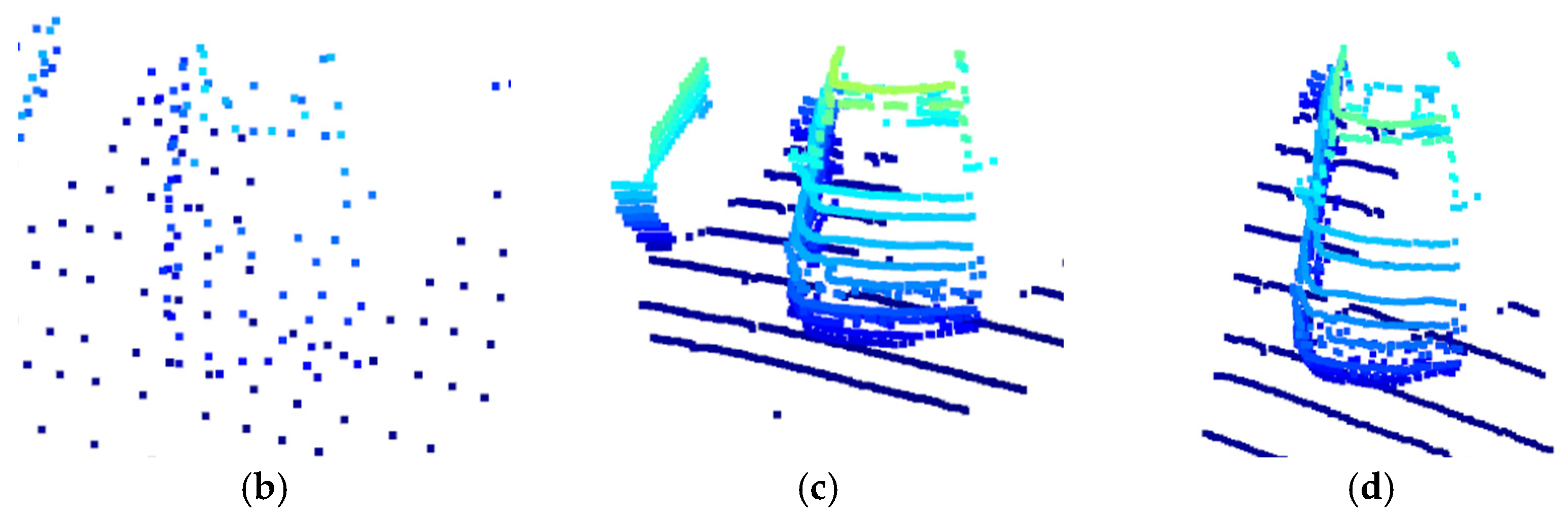

3.4. Proposal-Aware Sampling Module

3.5. Hybrid Voxel Set Extraction Module

4. Experiments

4.1. Experiment Setup

4.1.1. Voxelization

4.1.2. Public Dataset and Training Setup

- (a)

- nuScenes dataset

- (b)

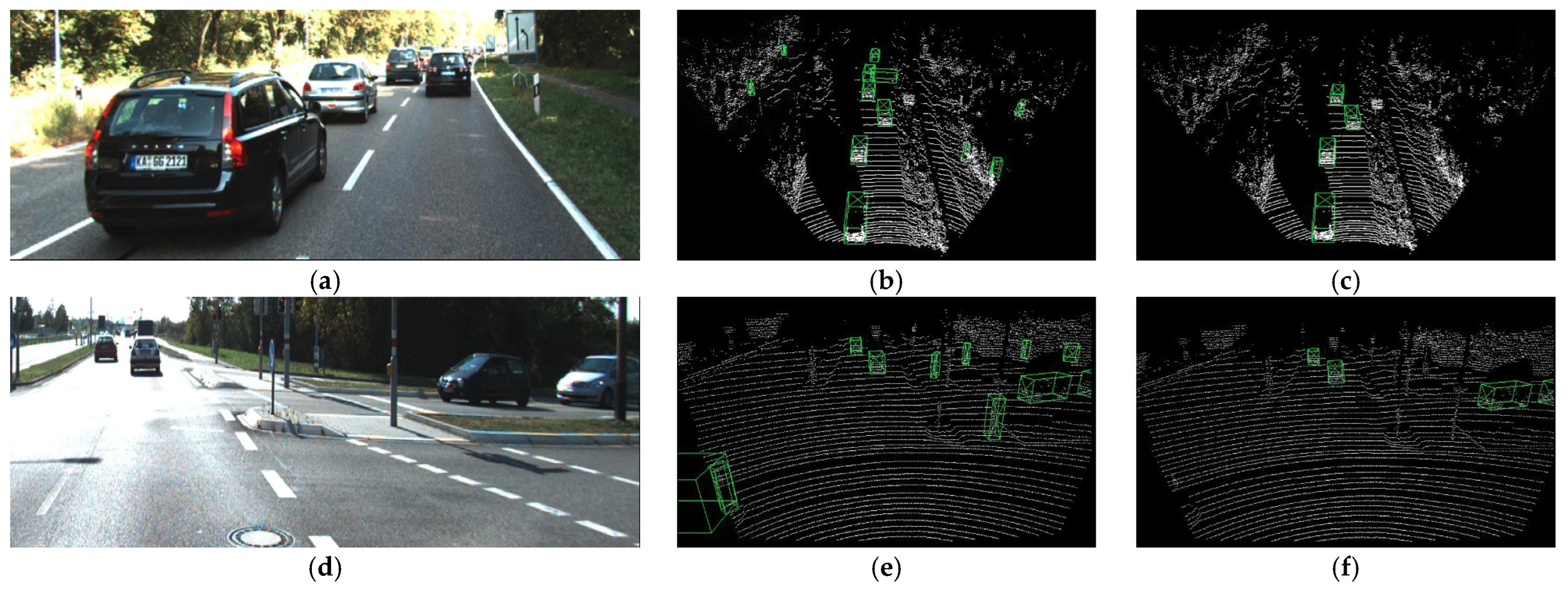

- KITTI dataset

- (c)

- Training setup

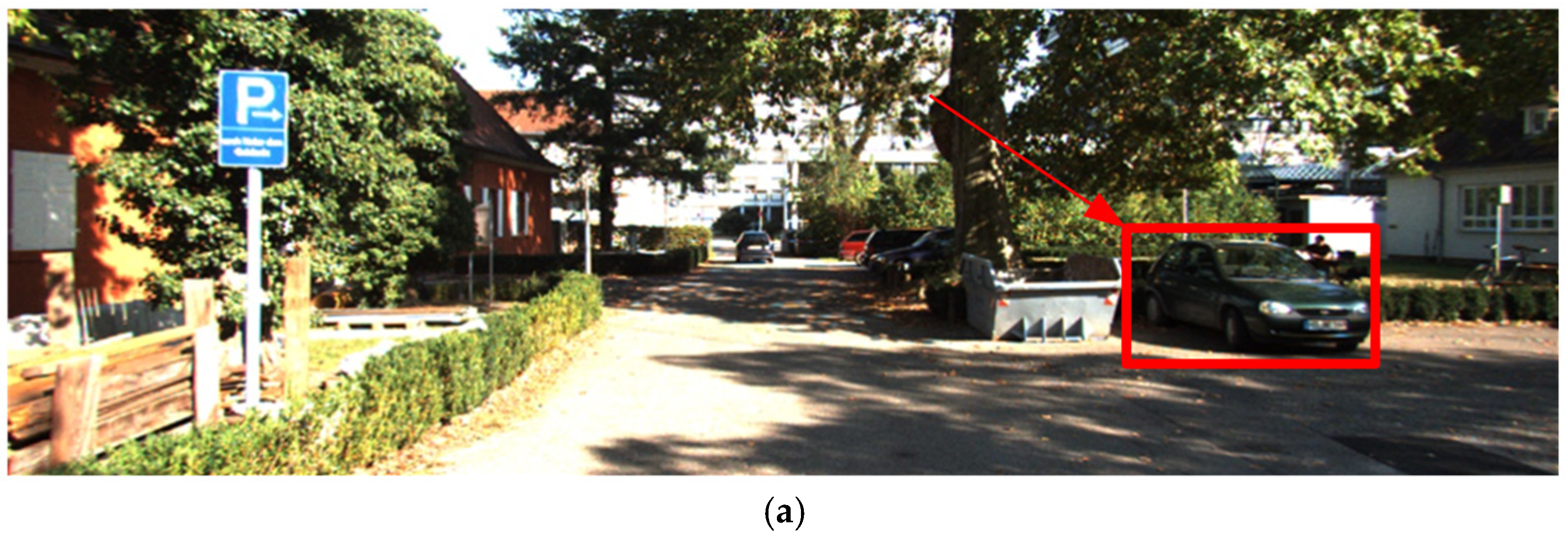

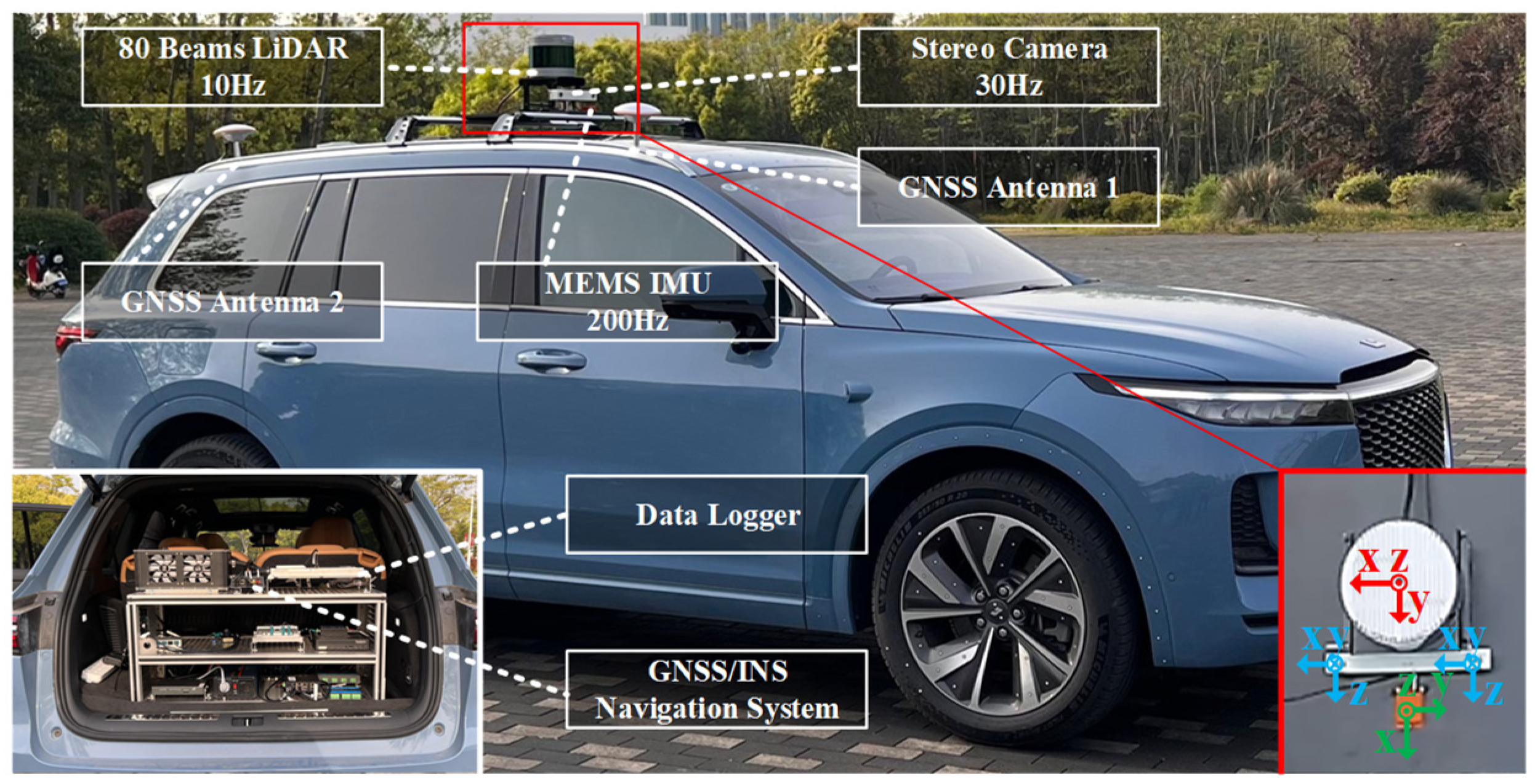

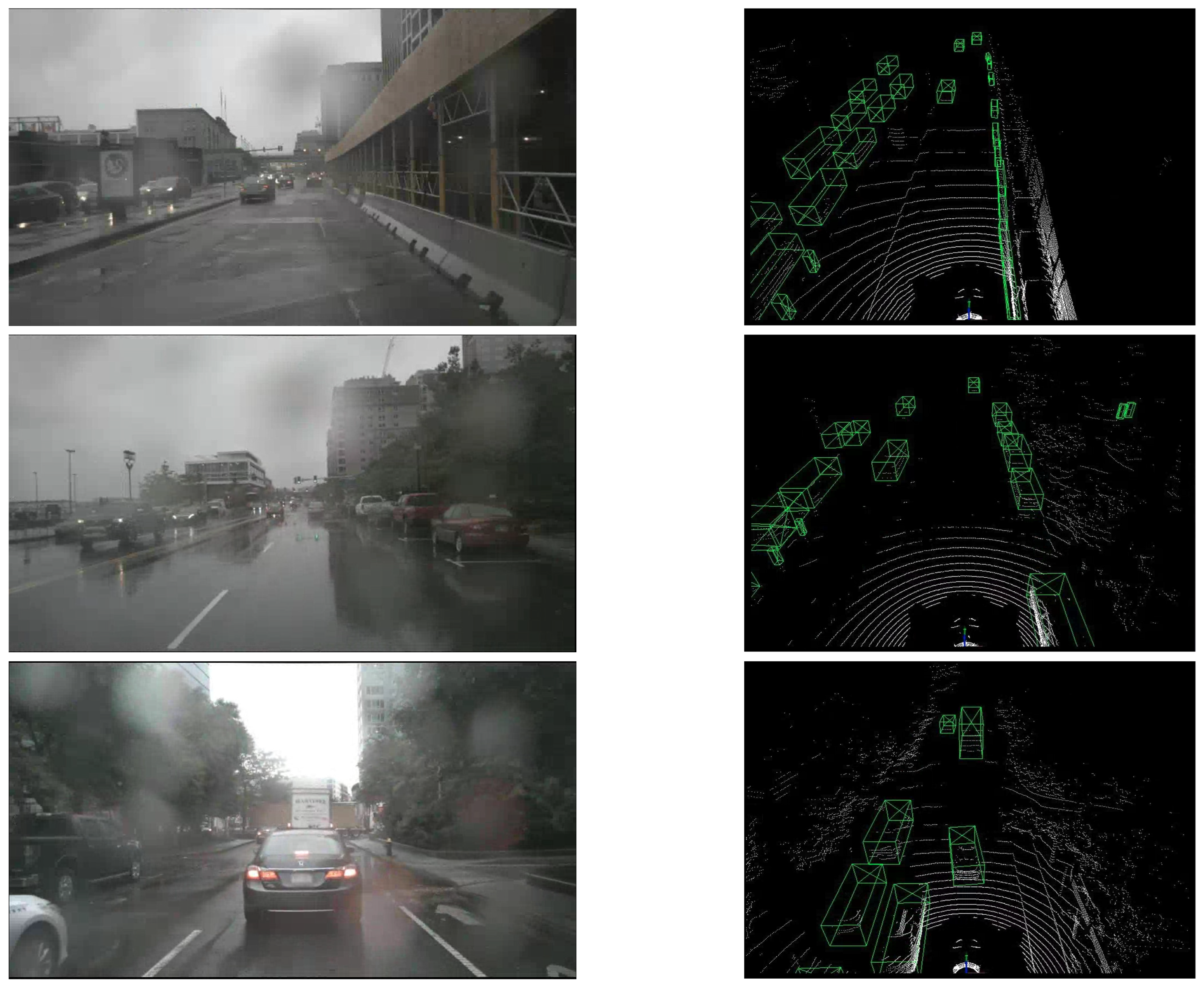

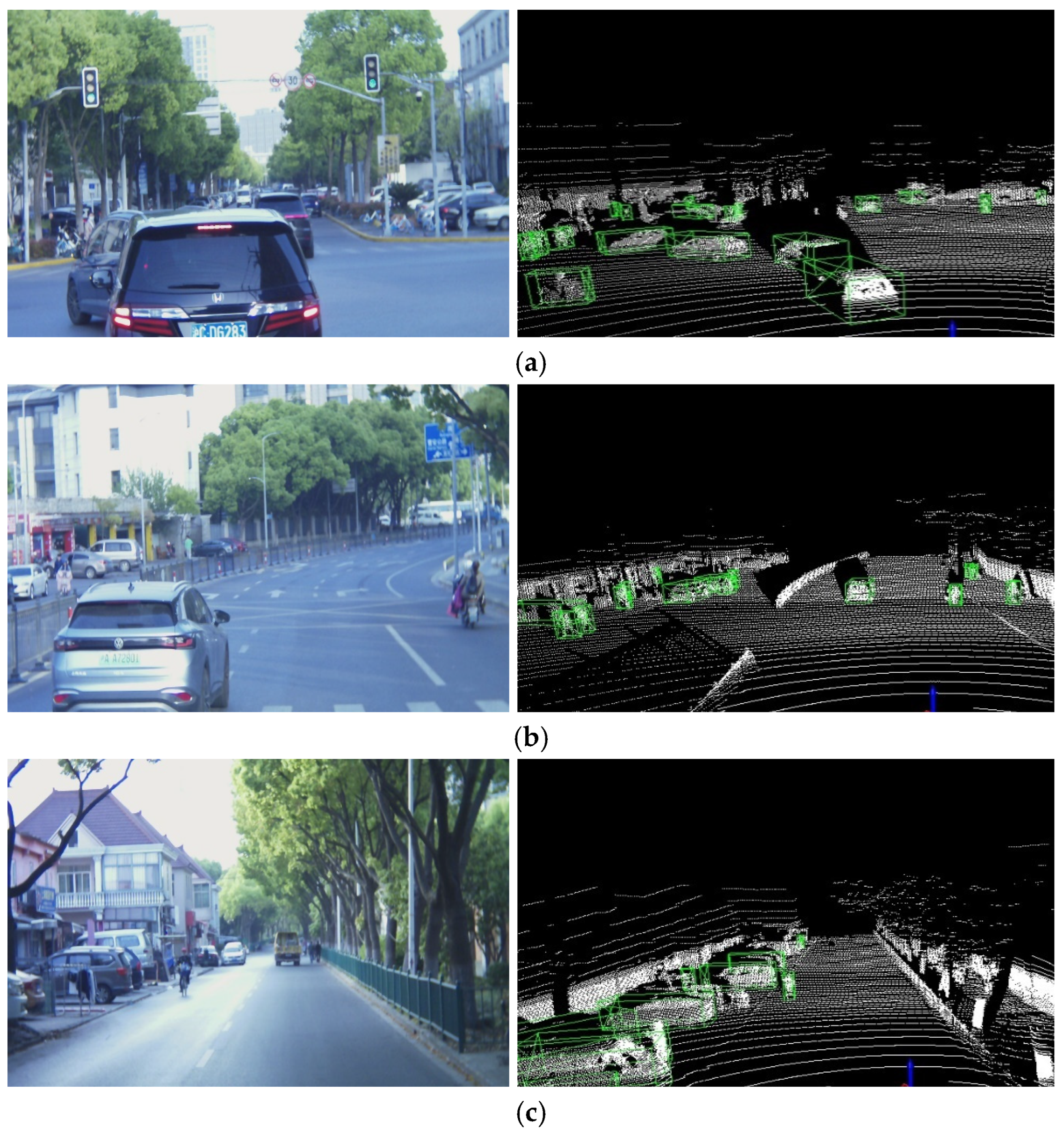

4.1.3. Self-Collected Dataset

4.2. Results of the nuScenes Dataset

4.3. Results of the KITTI Dataset

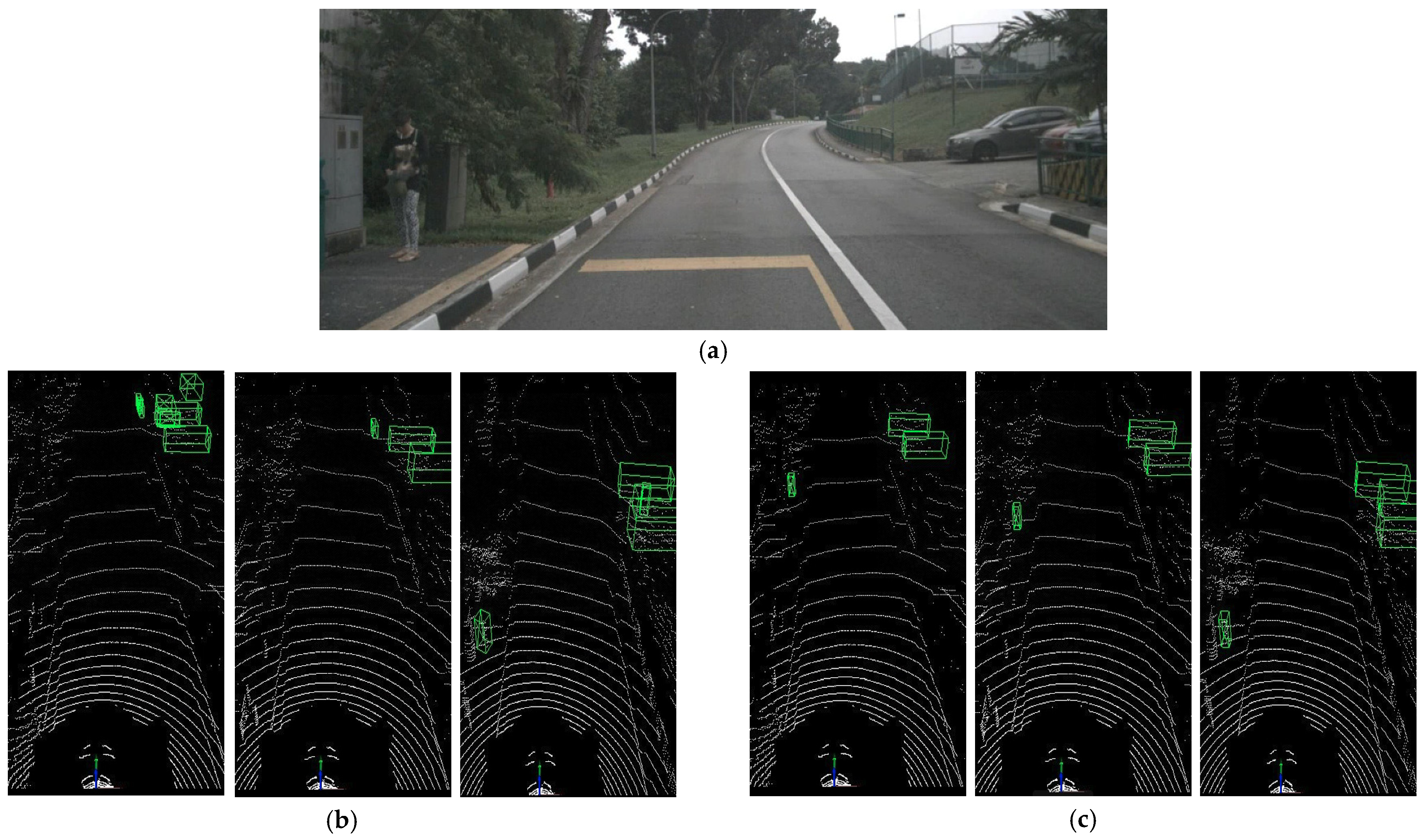

4.4. Results of the Self-Collected Dataset

4.5. Ablation Studies

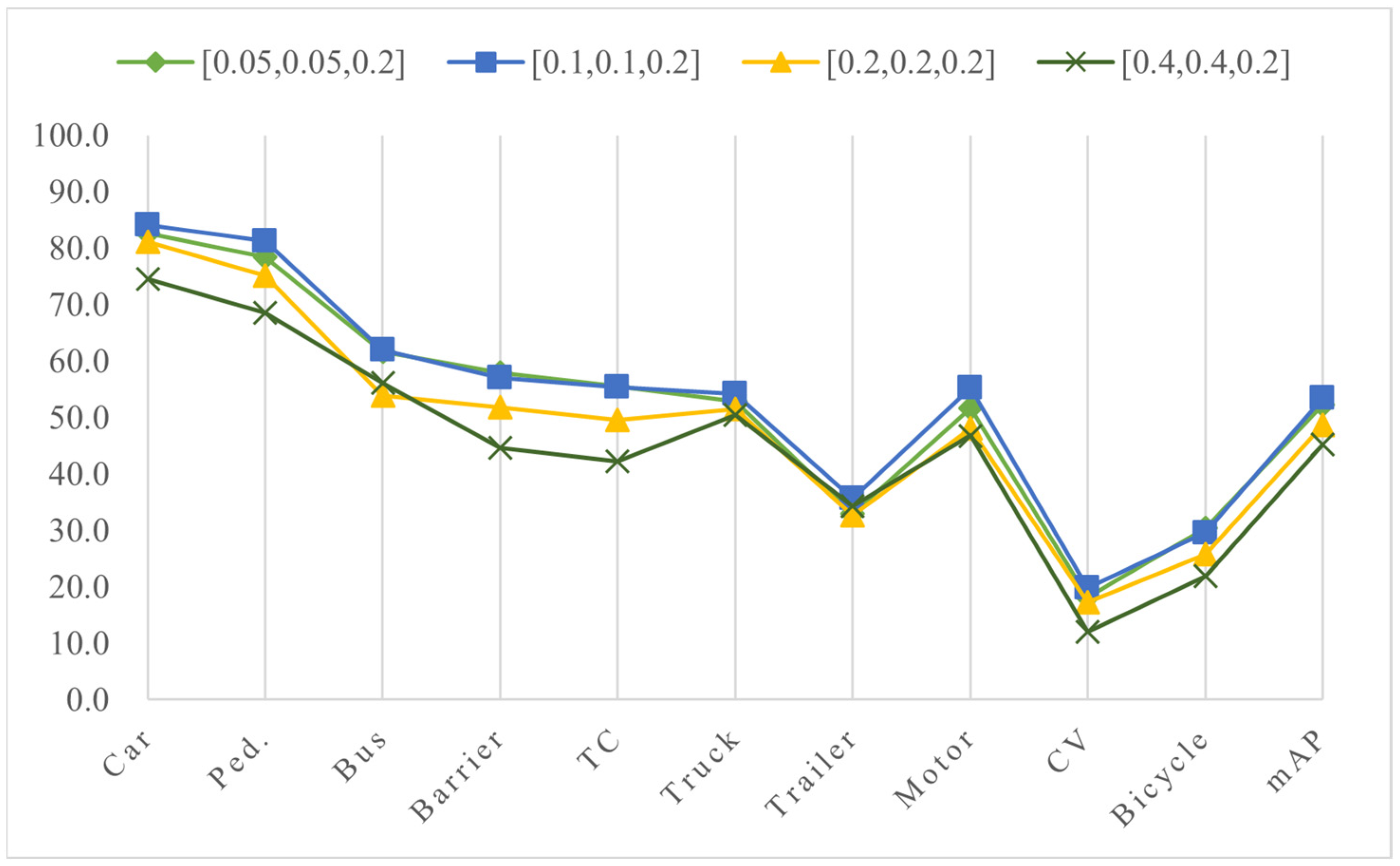

4.5.1. Ablation Experiments of the DCST Module

4.5.2. Ablation Experiments of the DCFT Module

4.5.3. Ablation Experiments of the PAS Module

4.5.4. Ablation Experiments of the HVSE Module

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Tao, C.; He, H.; Xu, F.; Cao, J. Stereo Priori RCNN Based Car Detection on Point Level for Autonomous Driving. Knowl.-Based Syst. 2021, 229, 107346.

- Wang, T.; Zhu, X.; Pang, J.; Lin, D. FCOS3D: Fully Convolutional One-Stage Monocular 3D Object Detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, QC, Canada, 11–17 October 2021; pp. 913–922.

- Sun, J.; Chen, L.; Xie, Y.; Zhang, S.; Jiang, Q.; Zhou, X.; Bao, H. Disp R-CNN: Stereo 3D Object Detection via Shape Prior Guided Instance Disparity Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10548–10557.

- You, Y.; Wang, Y.; Chao, W.-L.; Garg, D.; Pleiss, G.; Hariharan, B.; Campbell, M.; Weinberger, K.Q. Pseudo-LiDAR++: Accurate Depth for 3D Object Detection in Autonomous Driving. In Proceedings of the Eighth International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020.

- Wang, Y.; Guizilini, V.C.; Zhang, T.; Wang, Y.; Zhao, H.; Solomon, J. DETR3D: 3D Object Detection from Multi-View Images via 3D-to-2D Queries. In Proceedings of the 5th Conference on Robot Learning, Baltimore, MD, USA, 11 January 2022; pp. 180–191.

- Chen, X.; Kundu, K.; Zhang, Z.; Ma, H.; Fidler, S.; Urtasun, R. Monocular 3D Object Detection for Autonomous Driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2147–2156.

- Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. PV-RCNN: Point-Voxel Feature Set Abstraction for 3D Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 10526–10535.

- Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely Embedded Convolutional Detection. Sensors 2018, 18, 3337.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast Encoders for Object Detection from Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

- Pan, X.; Xia, Z.; Song, S.; Li, L.E.; Huang, G. 3D Object Detection with Pointformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 7463–7472.

- Yang, Z.; Sun, Y.; Liu, S.; Jia, J. 3DSSD: Point-Based 3D Single Stage Object Detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11040–11048.

- Deng, J.; Shi, S.; Li, P.; Zhou, W.; Zhang, Y.; Li, H. Voxel R-CNN: Towards High Performance Voxel-Based 3D Object Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2021; Volume 35, pp. 1201–1209.

- Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum PointNets for 3D Object Detection From RGB-D Data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 918–927.

- Huang, T.; Liu, Z.; Chen, X.; Bai, X. EPNet: Enhancing Point Features with Image Semantics for 3D Object Detection. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 35–52.

- Pang, S.; Morris, D.; Radha, H. CLOCs: Camera-LiDAR Object Candidates Fusion for 3D Object Detection. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 10386–10393.

- Sindagi, V.A.; Zhou, Y.; Tuzel, O. MVX-Net: Multimodal VoxelNet for 3D Object Detection. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 7276–7282.

- Wang, C.; Ma, C.; Zhu, M.; Yang, X. PointAugmenting: Cross-Modal Augmentation for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 11794–11803.

- Wen, L.-H.; Jo, K.-H. Three-Attention Mechanisms for One-Stage 3-D Object Detection Based on LiDAR and Camera. IEEE Trans. Ind. Inform. 2021, 17, 6655–6663.

- Zhu, Y.; Xu, R.; An, H.; Tao, C.; Lu, K. Anti-Noise 3D Object Detection of Multimodal Feature Attention Fusion Based on PV-RCNN. Sensors 2023, 23, 233.

- Li, J.; Hong, D.; Gao, L.; Yao, J.; Zheng, K.; Zhang, B.; Chanussot, J. Deep Learning in Multimodal Remote Sensing Data Fusion: A Comprehensive Review. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102926.

- Geiger, A.; Lenz, P.; Urtasun, R. Are We Ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A Multimodal Dataset for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11621–11631.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Gao, L.; Li, J.; Zheng, K.; Jia, X. Enhanced Autoencoders with Attention-Embedded Degradation Learning for Unsupervised Hyperspectral Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5509417.

- Li, J.; Zheng, K.; Liu, W.; Li, Z.; Yu, H.; Ni, L. Model-Guided Coarse-to-Fine Fusion Network for Unsupervised Hyperspectral Image Super-Resolution. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Li, J.; Zheng, K.; Li, Z.; Gao, L.; Jia, X. X-Shaped Interactive Autoencoders with Cross-Modality Mutual Learning for Unsupervised Hyperspectral Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5518317.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

- Zhao, L.; Xu, S.; Liu, L.; Ming, D.; Tao, W. SVASeg: Sparse Voxel-Based Attention for 3D LiDAR Point Cloud Semantic Segmentation. Remote Sens. 2022, 14, 4471.

- Yang, B.; Liang, M.; Urtasun, R. HDNET: Exploiting HD Maps for 3D Object Detection. In Proceedings of the 2nd Conference on Robot Learning, PMLR, Zürich, Switzerland, 23 October 2018; pp. 146–155.

- Yang, B.; Luo, W.; Urtasun, R. PIXOR: Real-Time 3D Object Detection from Point Clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7652–7660.

- Luo, W.; Yang, B.; Urtasun, R. Fast and Furious: Real Time End-to-End 3D Detection, Tracking and Motion Forecasting with a Single Convolutional Net. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 3569–3577.

- Yang, Z.; Zhou, Y.; Chen, Z.; Ngiam, J. 3D-MAN: 3D Multi-Frame Attention Network for Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 1863–1872.

- Yuan, Z.; Song, X.; Bai, L.; Wang, Z.; Ouyang, W. Temporal-Channel Transformer for 3D Lidar-Based Video Object Detection for Autonomous Driving. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 2068–2078.

- Huang, R.; Zhang, W.; Kundu, A.; Pantofaru, C.; Ross, D.A.; Funkhouser, T.; Fathi, A. An LSTM Approach to Temporal 3D Object Detection in LiDAR Point Clouds. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 266–282.

- Zhou, Z.; Zhao, X.; Wang, Y.; Wang, P.; Foroosh, H. CenterFormer: Center-Based Transformer for 3D Object Detection. In Proceedings of the Computer Vision—ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 496–513.

- Yin, J.; Shen, J.; Guan, C.; Zhou, D.; Yang, R. LiDAR-Based Online 3D Video Object Detection with Graph-Based Message Passing and Spatiotemporal Transformer Attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11495–11504.

- Chen, X.; Shi, S.; Zhu, B.; Cheung, K.C.; Xu, H.; Li, H. MPPNet: Multi-Frame Feature Intertwining with Proxy Points for 3D Temporal Object Detection. In Proceedings of the Computer Vision—ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2022; pp. 680–697.

- Venkatesh, G.M.; O’Connor, N.E.; Little, S. Incorporating Spatio-Temporal Information in Frustum-ConvNet for Improved 3D Object Detection in Instrumented Vehicles. In Proceedings of the 2022 10th European Workshop on Visual Information Processing (EUVIP), Lisbon, Portugal, 11–14 September 2022; pp. 1–6.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates, Inc.: San Francisco, CA, USA, 2017; Volume 30.

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable Convolutional Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 764–773.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 213–229.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. In Proceedings of the International Conference on Learning Representations, New York, NY, USA, 10 February 2022.

- Graham, B.; Engelcke, M.; van der Maaten, L. 3D Semantic Segmentation with Submanifold Sparse Convolutional Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9224–9232.

- Luo, X.; Zhou, F.; Tao, C.; Yang, A.; Zhang, P.; Chen, Y. Dynamic Multitarget Detection Algorithm of Voxel Point Cloud Fusion Based on PointRCNN. IEEE Trans. Intell. Transp. Syst. 2022, 23, 20707–20720.

- Qi, C.R.; Zhou, Y.; Najibi, M.; Sun, P.; Vo, K.; Deng, B.; Anguelov, D. Offboard 3D Object Detection from Point Cloud Sequences. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 6134–6144.

- Zhao, L.; Guo, J.; Xu, D.; Sheng, L. Transformer3D-Det: Improving 3D Object Detection by Vote Refinement. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 4735–4746.

- Liu, Z.; Zhang, Z.; Cao, Y.; Hu, H.; Tong, X. Group-Free 3D Object Detection via Transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2949–2958.

- Misra, I.; Girdhar, R.; Joulin, A. An End-to-End Transformer Model for 3D Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2906–2917.

- Sheng, H.; Cai, S.; Liu, Y.; Deng, B.; Huang, J.; Hua, X.-S.; Zhao, M.-J. Improving 3D Object Detection with Channel-Wise Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2743–2752.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Shi, S.; Wang, Z.; Shi, J.; Wang, X.; Li, H. From Points to Parts: 3D Object Detection from Point Cloud with Part-Aware and Part-Aggregation Network. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 2647–2664.

- Shi, S.; Jiang, L.; Deng, J.; Wang, Z.; Guo, C.; Shi, J.; Wang, X.; Li, H. PV-RCNN++: Point-Voxel Feature Set Abstraction with Local Vector Representation for 3D Object Detection. Int. J. Comput. Vis. 2023, 131, 531–551.

- Ye, Y.; Chen, H.; Zhang, C.; Hao, X.; Zhang, Z. SARPNET: Shape Attention Regional Proposal Network for LiDAR-Based 3D Object Detection. Neurocomputing 2020, 379, 53–63.

- Wang, J.; Lan, S.; Gao, M.; Davis, L.S. InfoFocus: 3D Object Detection for Autonomous Driving with Dynamic Information Modeling. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 405–420.

- Simonelli, A.; Bulo, S.R.; Porzi, L.; Lopez-Antequera, M.; Kontschieder, P. Disentangling Monocular 3D Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1991–1999.

- Vora, S.; Lang, A.H.; Helou, B.; Beijbom, O. PointPainting: Sequential Fusion for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4604–4612.

- Hu, P.; Ziglar, J.; Held, D.; Ramanan, D. What You See Is What You Get: Exploiting Visibility for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11001–11009.

- Luo, Z.; Zhang, G.; Zhou, C.; Liu, T.; Lu, S.; Pan, L. TransPillars: Coarse-To-Fine Aggregation for Multi-Frame 3D Object Detection. In Proceedings of the 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2023; pp. 4230–4239.

- Reading, C.; Harakeh, A.; Chae, J.; Waslander, S.L. Categorical Depth Distribution Network for Monocular 3D Object Detection. arXiv 2021, arXiv:2103.01100.

- Shi, S.; Wang, X.; Li, H. PointRCNN: 3D Object Proposal Generation and Detection from Point Cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 770–779.

| Method | Data | Car | Pedestrian | Bus | Barrier | Traffic cone | Truck | Trailer | Motorcycle | Constr. vehicle | Bicycle | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SARPNET [54] | Single-frame | 59.9 | 69.4 | 19.4 | 38.3 | 44.6 | 18.7 | 18.0 | 29.8 | 11.6 | 14.2 | 32.4 |
| InfoFocus [55] | Single-frame | 77.9 | 63.4 | 44.8 | 47.8 | 46.5 | 31.4 | 37.3 | 29.0 | 10.7 | 6.1 | 39.5 |
| MAIR [56] | Single-frame | 47.8 | 37.0 | 18.8 | 51.1 | 48.7 | 22.0 | 17.6 | 29.0 | 7.4 | 24.5 | 30.4 |
| PointPillars [9] | Single-frame | 68.4 | 59.7 | 28.2 | 38.9 | 30.8 | 23.0 | 23.4 | 27.4 | 4.1 | 1.1 | 30.5 |
| PointPainting [57] | Single-frame | 77.9 | 73.3 | 36.1 | 60.2 | 62.4 | 35.8 | 37.3 | 41.5 | 15.8 | 24.1 | 46.4 |
| WYSIWYG [58] | Single-frame | 79.1 | 65.0 | 46.6 | 34.7 | 28.8 | 30.4 | 40.1 | 18.2 | 7.1 | 0.1 | 35.0 |
| 3DVID [37] | Multi-frame | 79.7 | 76.5 | 47.1 | 48.8 | 58.8 | 33.6 | 43.0 | 40.7 | 18.1 | 7.9 | 45.4 |
| TCTR [34] | Multi-frame | 83.2 | 74.9 | 63.7 | 53.8 | 52.5 | 51.5 | 33.0 | 54.0 | 15.6 | 22.6 | 50.5 |
| TransPillars [59] | Multi-frame | 84.0 | 77.9 | 62.0 | 55.1 | 55.4 | 52.4 | 34.3 | 55.2 | 18.9 | 27.6 | 52.3 |
| DS-Trans (Ours) | Multi-frame | 86.2 | 78.3 | 60.9 | 58.2 | 54.9 | 54.2 | 35.1 | 57.4 | 19.8 | 28.7 | 53.4 |

| Method | Car | Pedestrian | Bicycle | mAP | Params | Runtime |
|---|---|---|---|---|---|---|
| CaDDN [60] | 21.4 | 13.0 | 9.8 | 14.7 | 67.55 M | 0.57 s |
| PointPillars [9] | 77.3 | 52.3 | 62.7 | 64.1 | 4.83 M | 0.05 s |
| SECOND [8] | 78.6 | 53.0 | 67.2 | 66.3 | 5.32 M | 0.06 s |
| PointRCNN [61] | 78.7 | 54.4 | 72.1 | 68.4 | 4.09 M | 0.10 s |
| Part-A2 [52] | 78.7 | 66.0 | 74.3 | 73.0 | 59.23 M | 0.14 s |
| PV-RCNN [7] | 83.6 | 57.9 | 70.5 | 70.7 | 13.12 M | 0.16 s |
| Voxel R-CNN [12] | 84.5 | - | - | - | 7.59 M | 0.05 s |
| DS-Trans (w/o DCFT) | 84.8 | 62.7 | 73.1 | 73.5 | 15.23 M | 0.18 s |

| Car | Pedestrian | Bicycle | mAP | ||||

|---|---|---|---|---|---|---|---|

| √ | 81.7 | 58.2 | 69.7 | 69.9 | |||

| √ | 82.9 | 57.3 | 70.2 | 70.1 | |||

| √ | 82.3 | 59.6 | 71.3 | 71.1 | |||

| √ | 83.2 | 58.7 | 68.9 | 70.3 | |||

| √ | √ | √ | √ | 84.8 | 62.7 | 73.1 | 73.5 |

| Method | FFN | Car | Pedestrian | Bus | Barrier | Traffic cone | Truck | Trailer | Motorcycle | Constr. vehicle | Bicycle | mAP | Δ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PointPillars [9] | 1 | 68.4 | 59.7 | 28.2 | 38.9 | 30.8 | 23.0 | 23.4 | 27.4 | 4.1 | 1.1 | 30.5 | - |
| | 2 | 69.2 | 60.9 | 30.0 | 39.3 | 32.1 | 24.8 | 24.6 | 29.2 | 5.2 | 2.3 | 31.8 | 1.3 |
| | 3 | 70.1 | 60.4 | 30.7 | 38.7 | 31.4 | 22.1 | 23.8 | 30.5 | 5.7 | 2.9 | 31.6 | −0.1 |
| | 4 | 70.8 | 59.1 | 29.6 | 35.2 | 27.3 | 25.6 | 22.9 | 28.2 | 4.8 | 2.5 | 30.6 | −1.0 |
| DS-Trans | 1 | 79.4 | 69.2 | 58.6 | 47.1 | 45.3 | 48.4 | 28.9 | 50.2 | 15.4 | 18.9 | 46.1 | - |
| | 2 | 81.5 | 72.7 | 59.1 | 50.4 | 48.7 | 51.3 | 29.6 | 53.4 | 17.2 | 23.4 | 48.7 | 2.6 |
| | 3 | 83.1 | 74.8 | 60.4 | 51.9 | 52.3 | 53.8 | 31.4 | 54.7 | 19.2 | 26.3 | 50.8 | 2.1 |
| | 4 | 84.2 | 77.3 | 60.9 | 52.7 | 52.9 | 54.2 | 32.6 | 55.2 | 19.8 | 27.2 | 51.7 | 0.9 |
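The mAP column in the frame-number ablation is consistent with an unweighted mean of the ten per-class APs, and Δ appears to be the change from the row with one frame fewer, computed before rounding. The short check below reproduces both columns from the per-class values; the helper names `mean_ap` and `frame_gains` are illustrative, not part of the paper.

```python
# Per-class AP values (%) copied from the frame-number ablation table,
# in column order Car ... Bicycle.
POINTPILLARS = {
    1: [68.4, 59.7, 28.2, 38.9, 30.8, 23.0, 23.4, 27.4, 4.1, 1.1],
    2: [69.2, 60.9, 30.0, 39.3, 32.1, 24.8, 24.6, 29.2, 5.2, 2.3],
    3: [70.1, 60.4, 30.7, 38.7, 31.4, 22.1, 23.8, 30.5, 5.7, 2.9],
    4: [70.8, 59.1, 29.6, 35.2, 27.3, 25.6, 22.9, 28.2, 4.8, 2.5],
}
DS_TRANS = {
    1: [79.4, 69.2, 58.6, 47.1, 45.3, 48.4, 28.9, 50.2, 15.4, 18.9],
    2: [81.5, 72.7, 59.1, 50.4, 48.7, 51.3, 29.6, 53.4, 17.2, 23.4],
    3: [83.1, 74.8, 60.4, 51.9, 52.3, 53.8, 31.4, 54.7, 19.2, 26.3],
    4: [84.2, 77.3, 60.9, 52.7, 52.9, 54.2, 32.6, 55.2, 19.8, 27.2],
}


def mean_ap(per_class_ap):
    """nuScenes-style mAP: unweighted mean over the detection classes."""
    return sum(per_class_ap) / len(per_class_ap)


def frame_gains(rows):
    """Unrounded mAP per frame count, and its change vs. one frame fewer."""
    maps = {n: mean_ap(aps) for n, aps in sorted(rows.items())}
    deltas = {n: maps[n] - maps[n - 1] for n in maps if n - 1 in maps}
    return maps, deltas


for name, rows in [("PointPillars", POINTPILLARS), ("DS-Trans", DS_TRANS)]:
    maps, deltas = frame_gains(rows)
    for n, m in maps.items():
        d = f"{deltas[n]:+.1f}" if n in deltas else "-"
        print(f"{name:12s} frames={n}  mAP={m:.1f}  delta={d}")
```

Differencing the already-rounded mAPs would give −0.2 for the three-frame PointPillars row, whereas the unrounded difference is about −0.13, which matches the −0.1 reported in the table.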

| Method | Car | Pedestrian | Bus | Barrier | Traffic cone | Truck | Trailer | Motorcycle | Constr. vehicle | Bicycle | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|
| FPS | 81.7 | 72.1 | 58.6 | 45.4 | 44.6 | 48.3 | 30.2 | 49.8 | 14.7 | 23.9 | 46.9 |
| SPC | 83.6 | 76.4 | 58.9 | 51.3 | 50.7 | 53.8 | 33.7 | 53.9 | 16.5 | 25.3 | 50.4 |
| PAS | 84.2 | 77.3 | 60.9 | 52.7 | 52.9 | 54.2 | 32.6 | 55.2 | 19.8 | 27.2 | 51.7 |
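The sampling ablation compares farthest point sampling (FPS) with SPC and the proposed PAS. The PAS procedure itself is not reproduced in this section, so the sketch below only illustrates the stated goal of raising foreground recall by letting proposals steer sampling. It assumes one simple, hypothetical strategy: run FPS on points inside enlarged, axis-aligned BEV proposals first, then fill the remaining budget with FPS on the other points. Real proposals are rotated 3D boxes, and `proposal_aware_sampling`, `in_boxes`, and `margin` are illustrative names, not the authors' API.

```python
import numpy as np


def farthest_point_sampling(points, m, start=0):
    """Greedy FPS: repeatedly pick the point farthest from those already chosen."""
    n = len(points)
    m = min(m, n)
    if m <= 0:
        return np.empty(0, dtype=int)
    chosen = [start]
    dist = np.linalg.norm(points - points[start], axis=1)
    for _ in range(m - 1):
        idx = int(np.argmax(dist))
        chosen.append(idx)
        dist = np.minimum(dist, np.linalg.norm(points - points[idx], axis=1))
    return np.array(chosen, dtype=int)


def in_boxes(points, boxes, margin=0.0):
    """Mask of points whose (x, y) lies in any axis-aligned BEV box
    (x_min, y_min, x_max, y_max), each box grown by `margin` metres."""
    b = np.asarray(boxes, dtype=float).reshape(-1, 4)
    x, y = points[:, :1], points[:, 1:2]  # (N, 1) for broadcasting against (B,)
    inside = ((x >= b[:, 0] - margin) & (x <= b[:, 2] + margin) &
              (y >= b[:, 1] - margin) & (y <= b[:, 3] + margin))  # (N, B)
    return inside.any(axis=1)


def proposal_aware_sampling(points, boxes, m, margin=0.5):
    """Hypothetical sampler: FPS inside the (enlarged) proposals first,
    then FPS on the remaining points to fill the budget of m samples."""
    mask = in_boxes(points, boxes, margin)
    fg, bg = np.flatnonzero(mask), np.flatnonzero(~mask)
    picked_fg = fg[farthest_point_sampling(points[fg], m)]
    picked_bg = bg[farthest_point_sampling(points[bg], m - len(picked_fg))]
    return np.concatenate([picked_fg, picked_bg])


# Synthetic scene: sparse background plus 50 points on one object inside a proposal.
rng = np.random.default_rng(0)
box = [10.0, 10.0, 12.0, 14.0]
background = rng.uniform([0, 0, -2], [50, 50, 1], size=(2000, 3))
background = background[~in_boxes(background, [box], margin=1.0)]
car = rng.uniform([10, 10, -1], [12, 14, 0.5], size=(50, 3))
points = np.vstack([background, car])

m = 64
true_fg = in_boxes(points, [box])
fps_idx = farthest_point_sampling(points, m)
pas_idx = proposal_aware_sampling(points, [box], m)
print("object points kept by FPS:", int(true_fg[fps_idx].sum()))
print("object points kept by PAS:", int(true_fg[pas_idx].sum()))
```

Plain FPS spreads its 64 samples over the whole 50 m × 50 m area and so keeps few of the 50 object points, while the proposal-aware variant keeps all of them and spends the rest of the budget on context.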

| Method | Car | Pedestrian | Bus | Barrier | Traffic cone | Truck | Trailer | Motorcycle | Constr. vehicle | Bicycle | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Concat | 82.6 | 75.8 | 59.3 | 50.4 | 49.3 | 52.8 | 31.2 | 53.9 | 18.2 | 26.1 | 50.0 |
| HVSE | 84.2 | 77.3 | 60.9 | 52.7 | 52.9 | 54.2 | 32.6 | 55.2 | 19.8 | 27.2 | 51.7 |
| Increment | 1.6 | 1.5 | 1.6 | 2.3 | 3.6 | 1.4 | 1.4 | 1.3 | 1.6 | 1.1 | 1.7 |
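This ablation contrasts plain concatenation with learned fusion. The Introduction describes HVSE as predicting fusion weights for the cross-scale and cross-frame feature vectors; one plausible reading, sketched here under that assumption, is a per-voxel softmax gate over the two branches. The gate parameters `W_gate` and `b_gate` and the softmax-over-two-branches design are hypothetical, not taken from the paper.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def concat_fusion(f_scale, f_frame):
    """Baseline: stack both feature vectors, doubling the channel width."""
    return np.concatenate([f_scale, f_frame], axis=-1)  # (N, 2C)


def weighted_fusion(f_scale, f_frame, W_gate, b_gate):
    """Predict one weight per branch from both features and blend them.

    f_scale, f_frame: (N, C) cross-scale / cross-frame features per voxel
    W_gate: (2C, 2), b_gate: (2,) hypothetical gating parameters
    """
    logits = concat_fusion(f_scale, f_frame) @ W_gate + b_gate  # (N, 2)
    w = softmax(logits, axis=-1)  # per-voxel weights, rows sum to 1
    fused = w[:, :1] * f_scale + w[:, 1:] * f_frame  # (N, C)
    return fused, w


rng = np.random.default_rng(0)
N, C = 5, 8
f_scale, f_frame = rng.normal(size=(N, C)), rng.normal(size=(N, C))
fused, w = weighted_fusion(f_scale, f_frame, 0.1 * rng.normal(size=(2 * C, 2)), np.zeros(2))
print(concat_fusion(f_scale, f_frame).shape, fused.shape)  # (5, 16) (5, 8)
```

With a zero gate the two branches are simply averaged, and a strongly biased gate selects one branch; in between, the weighting can differ from voxel to voxel, and the fused feature keeps width C rather than the 2C produced by concatenation.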

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, Y.; Xu, R.; Tao, C.; An, H.; Wang, H.; Sun, Z.; Lu, K. DS-Trans: A 3D Object Detection Method Based on a Deformable Spatiotemporal Transformer for Autonomous Vehicles. Remote Sens. 2024, 16, 1621. https://doi.org/10.3390/rs16091621
