A Quantile-Conserving Ensemble Filter Based on Kernel-Density Estimation

Abstract

1. Introduction

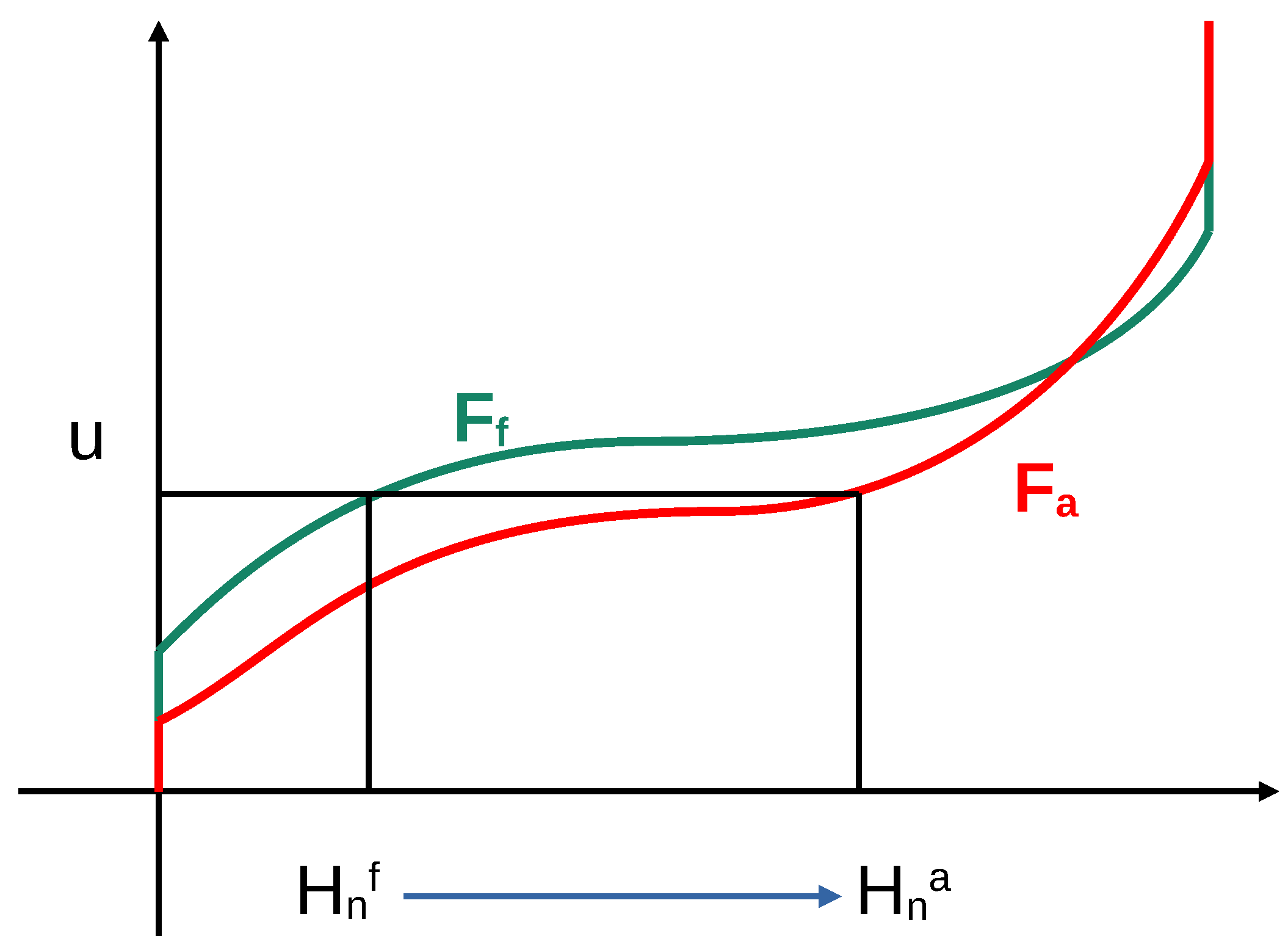

2. Mixed Delta-Kernel Distributions

2.1. Class Weights

2.2. Interior Probability Density Using Kernel-Density Estimation
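The following minimal Python sketch illustrates the kernel-density step. It evaluates a one-dimensional kernel-density estimate with the Epanechnikov kernel [10]. The function names and the bandwidth rule are assumptions for illustration and are not necessarily the choices made in this paper. The rule used here is the normal-reference rule of thumb h = (40√π)^(1/5) σ n^(-1/5) ≈ 2.34 σ n^(-1/5), which is the normal-reference bandwidth for this kernel (see Silverman [9]).

```python
import math
from statistics import stdev


def epanechnikov(u: float) -> float:
    """Epanechnikov kernel K(u) = 3/4 (1 - u^2) on [-1, 1]; integrates to one."""
    return 0.75 * (1.0 - u * u) if abs(u) <= 1.0 else 0.0


def normal_reference_bandwidth(samples: list[float]) -> float:
    """Assumed rule of thumb: h = (40 sqrt(pi))^(1/5) * sigma * n^(-1/5),
    i.e. about 2.34 * sigma * n^(-1/5) for the Epanechnikov kernel."""
    n = len(samples)
    return (40.0 * math.sqrt(math.pi)) ** 0.2 * stdev(samples) * n ** -0.2


def kde(x: float, samples: list[float], h: float) -> float:
    """Kernel-density estimate p(x) = 1/(n h) * sum_i K((x - x_i) / h)."""
    return sum(epanechnikov((x - xi) / h) for xi in samples) / (len(samples) * h)


# Usage with a small, made-up ensemble (illustrative values only).
ensemble = [0.12, 0.35, 0.40, 0.58, 0.81]
h = normal_reference_bandwidth(ensemble)
print(kde(0.4, ensemble, h))
```

The Epanechnikov kernel has compact support. As a result, each member influences the estimate only within one bandwidth of itself.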

2.3. Boundary Corrections on the Interior Probability Density
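Near a bound such as zero sea-ice concentration, an uncorrected kernel estimate places some probability mass outside the physical domain. The sketch below uses the simple reflection method as a stand-in. It adds a mirror image of each member about both the lower and upper bounds, so that no mass is lost when h ≤ upper − lower and all members lie inside the domain. This technique was chosen for brevity. It is not necessarily the correction of Jones [11] or Jones and Foster [12] used in this paper, and the function names are hypothetical.

```python
def epanechnikov(u: float) -> float:
    """Epanechnikov kernel on [-1, 1]."""
    return 0.75 * (1.0 - u * u) if abs(u) <= 1.0 else 0.0


def reflected_kde(x: float, samples: list[float], h: float,
                  lower: float, upper: float) -> float:
    """Reflection-corrected KDE on [lower, upper] (stand-in boundary correction).

    Each member x_i contributes its own kernel plus mirror images centred at
    2*lower - x_i and 2*upper - x_i, so kernel mass that would spill past a
    bound is folded back inside. Assumes every x_i lies in [lower, upper].
    """
    if h > upper - lower:
        raise ValueError("bandwidth must not exceed the domain width")
    if x < lower or x > upper:
        return 0.0  # no interior density outside the physical domain
    total = 0.0
    for xi in samples:
        total += epanechnikov((x - xi) / h)                  # original kernel
        total += epanechnikov((x - (2.0 * lower - xi)) / h)  # mirror about lower bound
        total += epanechnikov((x - (2.0 * upper - xi)) / h)  # mirror about upper bound
    return total / (len(samples) * h)


# Made-up members inside [0, 1], two of them close to a bound.
ensemble = [0.02, 0.10, 0.50, 0.97]
print(reflected_kde(0.0, ensemble, 0.2, 0.0, 1.0))
```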

2.4. Cumulative Distribution Functions Using Quadrature and Sampling

2.4.1. Boundary Sampling

2.4.2. Quadrature
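Consider a mixed distribution with point masses at the lower and upper bounds and a continuous interior part. Its CDF jumps at the bounds and, in between, accumulates the interior density: F(x) = w_lower + w_interior ∫_lower^x p(t) dt for lower ≤ x < upper. The minimal sketch below evaluates this CDF under two assumptions. The class weights w_lower and w_interior are hypothetical inputs, with the upper-bound weight implied as 1 − w_lower − w_interior. The integral uses composite Simpson quadrature, a standard rule covered in texts such as Atkinson [13]; the rule used in the paper may differ.

```python
from typing import Callable


def simpson(f: Callable[[float], float], a: float, b: float, m: int = 64) -> float:
    """Composite Simpson's rule for the integral of f over [a, b] with m subintervals."""
    if b <= a:
        return 0.0
    if m % 2:
        m += 1  # Simpson's rule needs an even number of subintervals
    step = (b - a) / m
    total = f(a) + f(b)
    for k in range(1, m):
        total += (4.0 if k % 2 else 2.0) * f(a + k * step)
    return total * step / 3.0


def mixed_cdf(x: float, density: Callable[[float], float], lower: float,
              upper: float, w_lower: float, w_interior: float, m: int = 64) -> float:
    """CDF of point masses at the bounds plus an interior density.

    Hypothetical weights: w_lower at `lower`, w_interior for the interior part,
    and the remainder 1 - w_lower - w_interior at `upper`. `density` is assumed
    to integrate to one over [lower, upper].
    """
    if x < lower:
        return 0.0
    if x >= upper:
        return 1.0  # includes the point mass at the upper bound
    return w_lower + w_interior * simpson(density, lower, x, m)


# Uniform interior density on [0, 1] with made-up class weights.
print(mixed_cdf(0.5, lambda t: 1.0, 0.0, 1.0, w_lower=0.2, w_interior=0.6))
```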

2.5. Application of the Quantile Function Using Root-finding
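In the quantile-conserving framework of Anderson [5], each prior member x_i is moved to the posterior value with the same quantile, x_i ↦ F_a^{-1}(F_f(x_i)). Here F_f and F_a denote the prior and posterior CDFs; this notation is assumed for the sketch. The sketch below inverts F_a by plain bisection. Oliveira and Takahashi [14] describe an enhancement of bisection that improves its average performance while preserving its worst-case guarantee. Plain bisection is used here only for brevity. Both CDFs are supplied by the caller, and the function names are hypothetical. Members sitting exactly on a bound, where a mixed CDF jumps, are not treated specially.

```python
from typing import Callable


def quantile(cdf: Callable[[float], float], q: float, lower: float, upper: float,
             tol: float = 1e-10, max_iter: int = 200) -> float:
    """Approximate the smallest x in [lower, upper] with cdf(x) >= q by bisection.

    Assumes cdf is nondecreasing and cdf(upper) >= q.
    """
    lo, hi = lower, upper
    for _ in range(max_iter):
        if hi - lo < tol:
            break
        mid = 0.5 * (lo + hi)
        if cdf(mid) < q:
            lo = mid  # the target quantile lies to the right of mid
        else:
            hi = mid  # mid already reaches probability q
    return hi


def qcef_update(members: list[float], prior_cdf: Callable[[float], float],
                posterior_cdf: Callable[[float], float],
                lower: float, upper: float) -> list[float]:
    """Move each member to the posterior value with the same quantile."""
    return [quantile(posterior_cdf, prior_cdf(x), lower, upper) for x in members]


# Uniform prior on [0, 1] and posterior density 2x (CDF x**2): members map to sqrt(x).
print(qcef_update([0.25, 0.64], lambda x: x, lambda x: x * x, 0.0, 1.0))
```

Because the mapping is monotone, the rank order of the ensemble members is preserved by the update.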

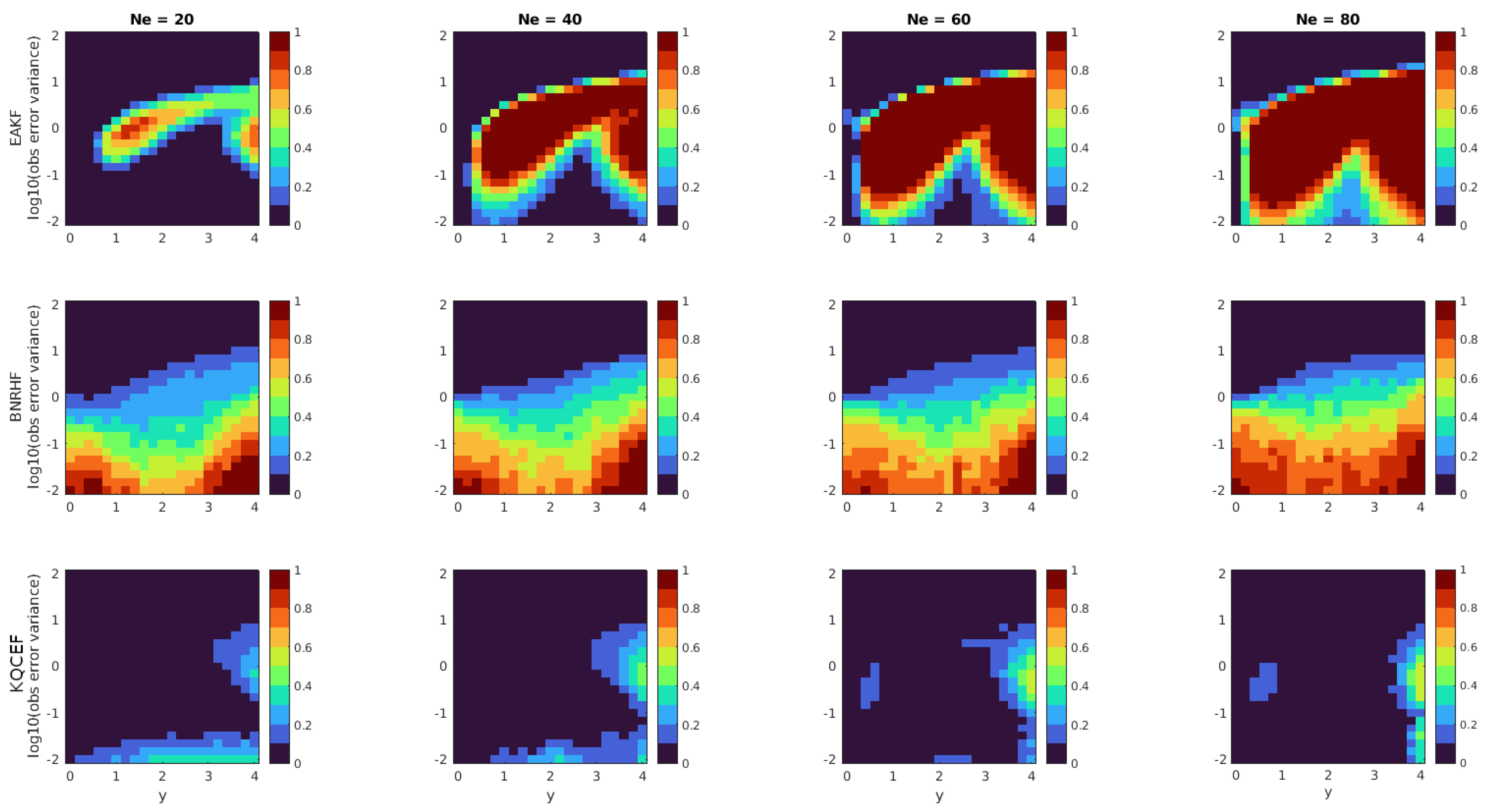

3. Non-Cycling Tests

- The Ensemble Adjustment Kalman Filter (EAKF; [15]),

- The Kernel-based QCEF (KQCEF) developed in the preceding section.

3.1. Normal Prior

3.2. Bi-Normal Prior

3.3. Mixed Prior

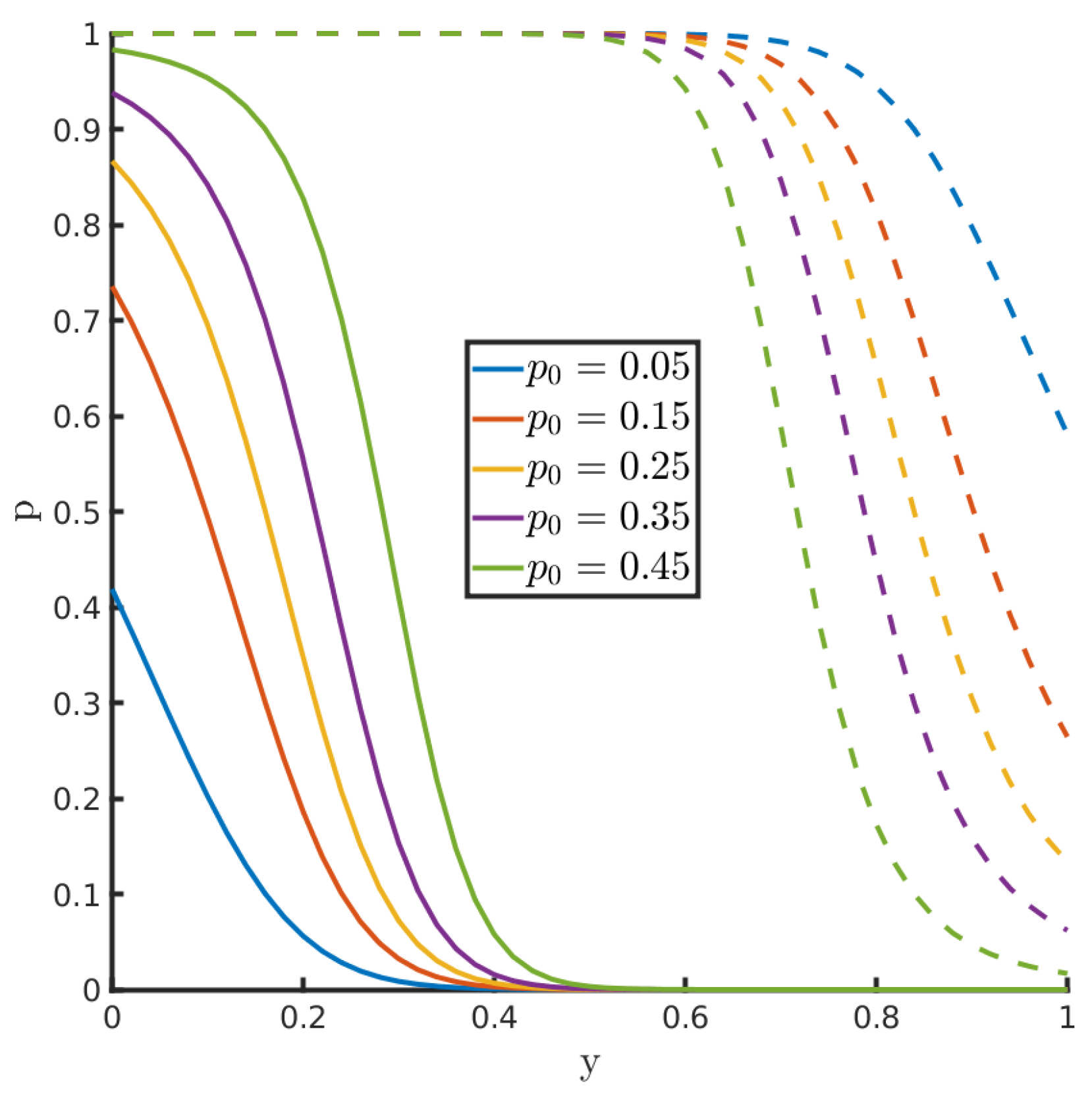

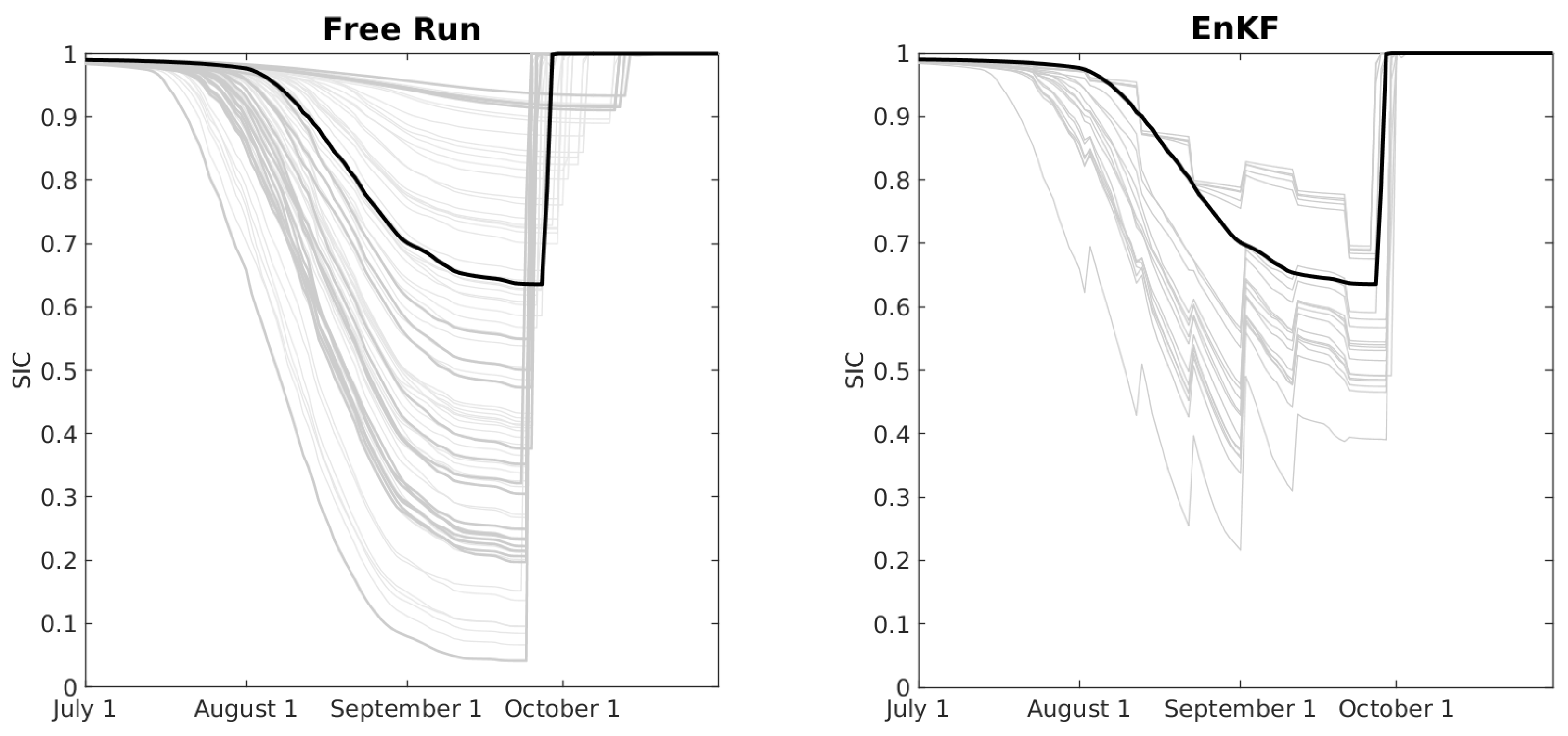

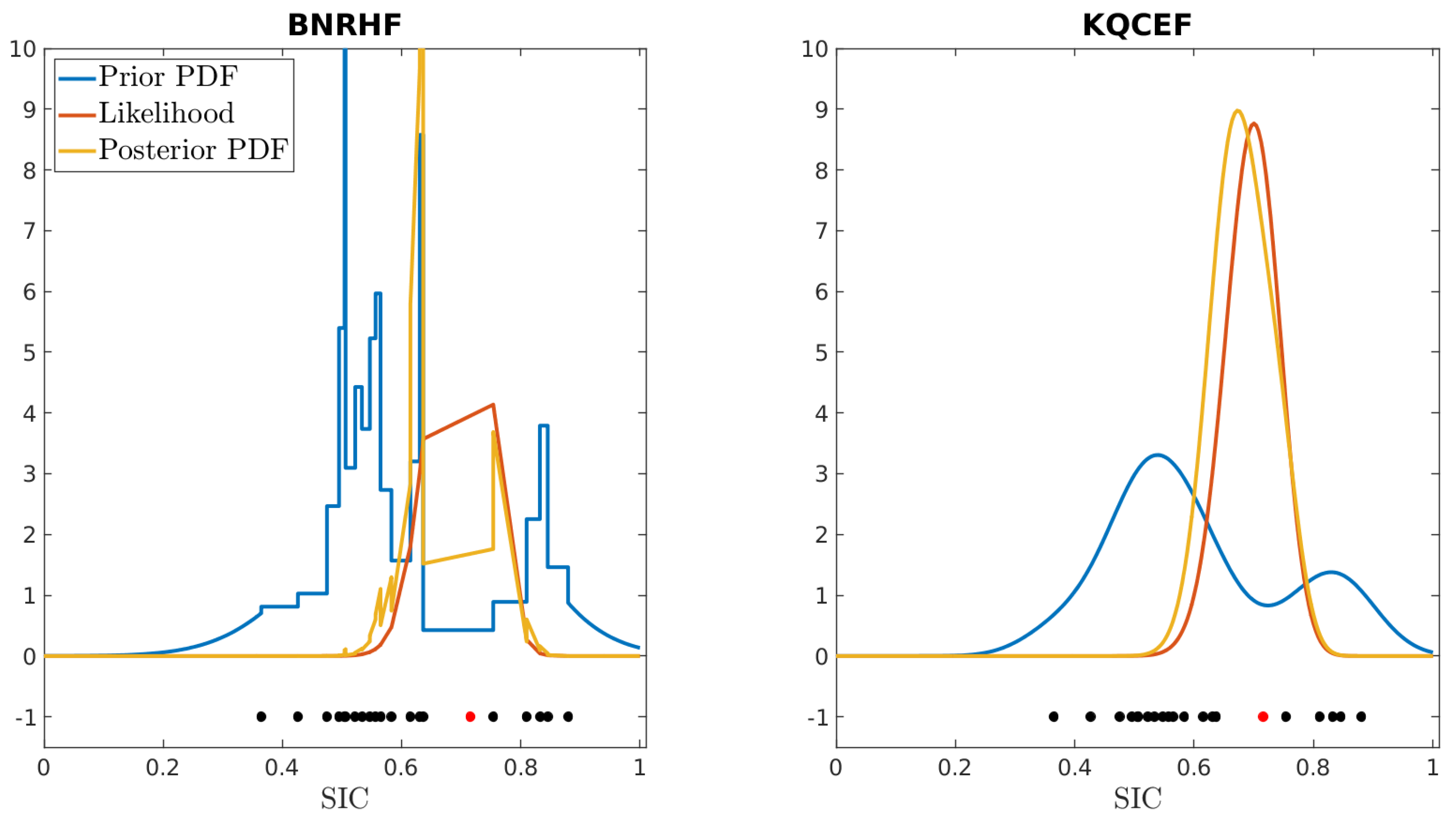

4. Application to Sea-Ice Concentration

4.1. SIC Likelihood

4.2. Experimental Design

- The R_snw nondimensional snow-albedo parameter is varied between and 0

- The rsnw_mlt melting snow-grain radius parameter is varied between and m

- The ksno parameter, the thermal conductivity of snow, is varied between 0.2 and 0.35 W/m/K.

Experimental Configurations

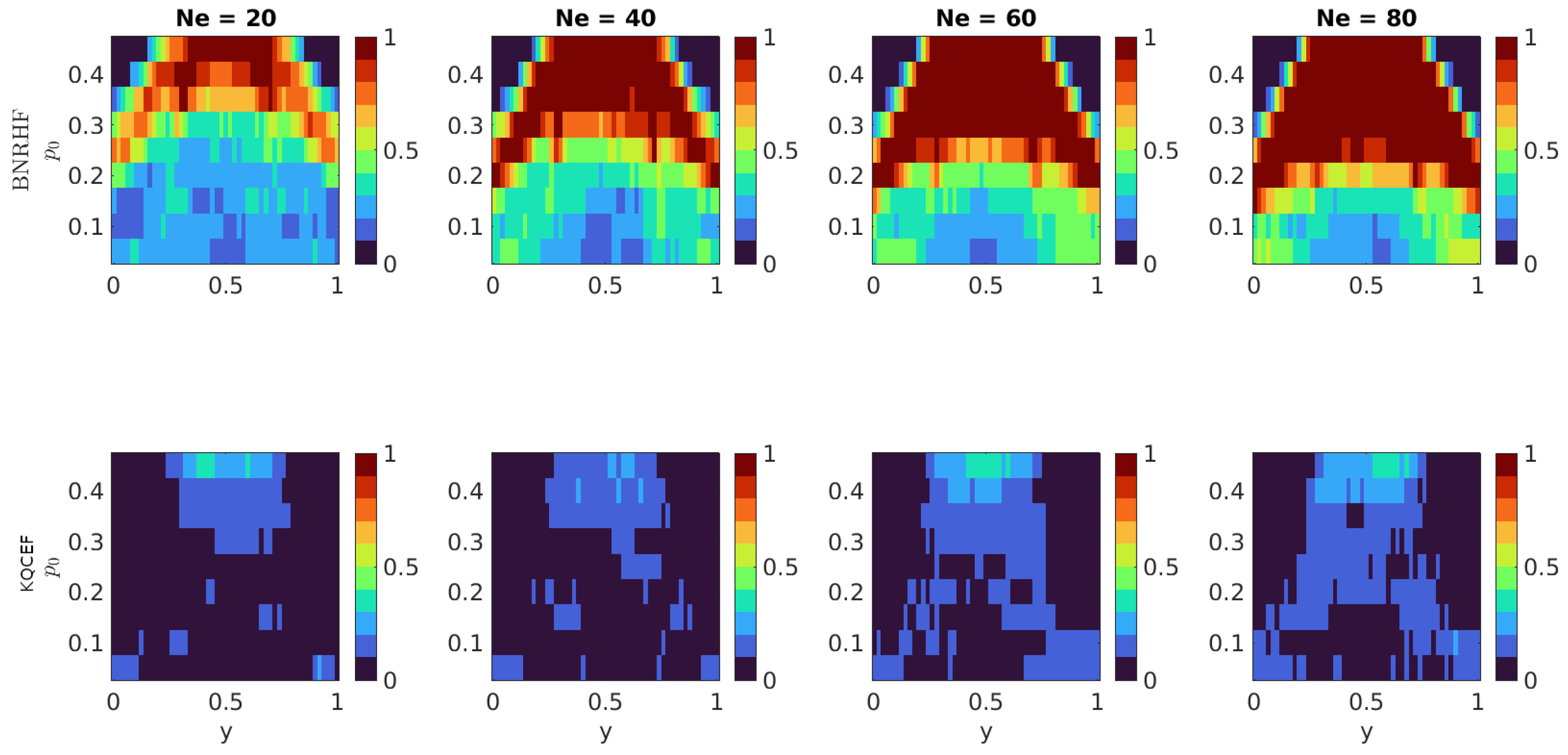

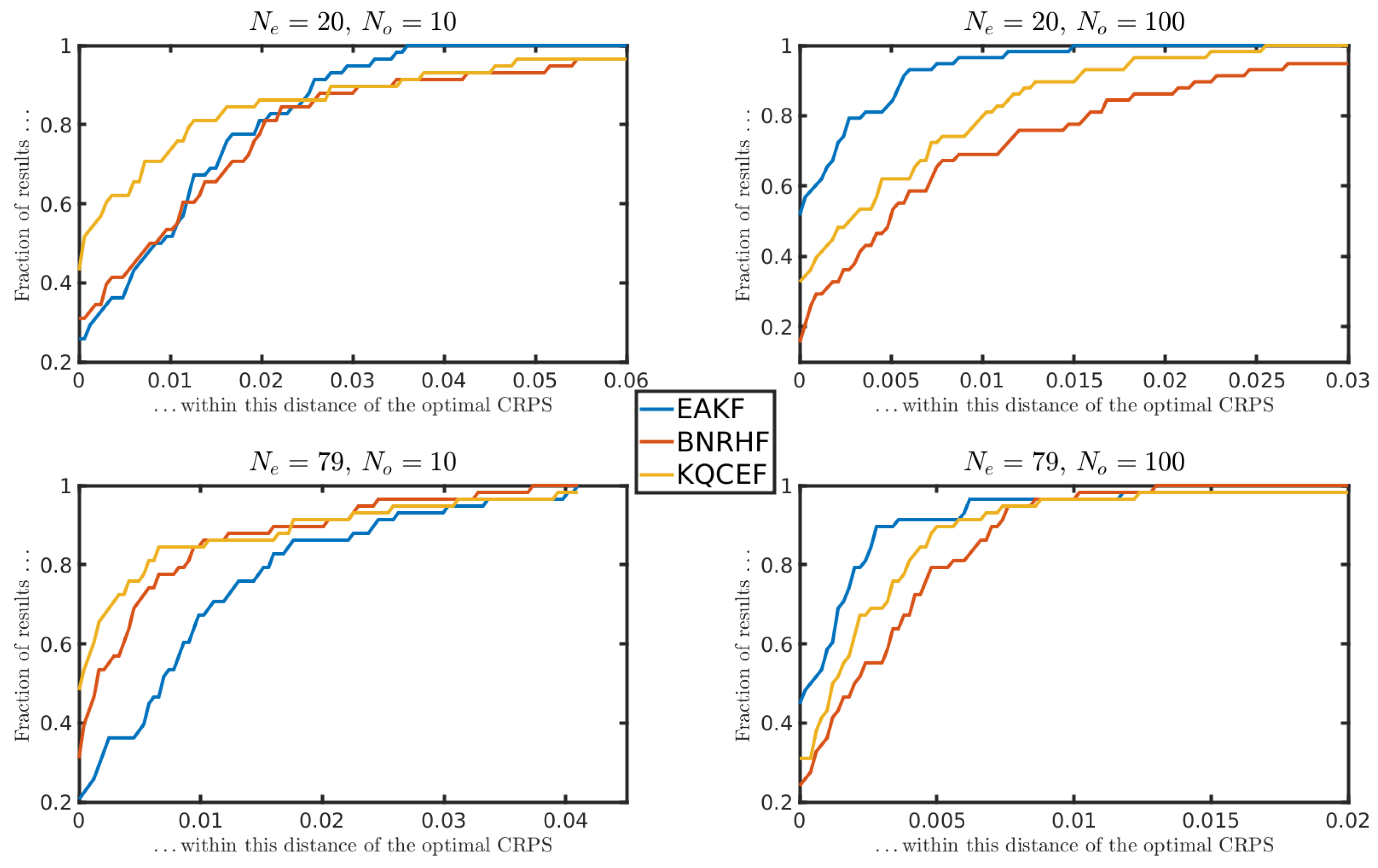

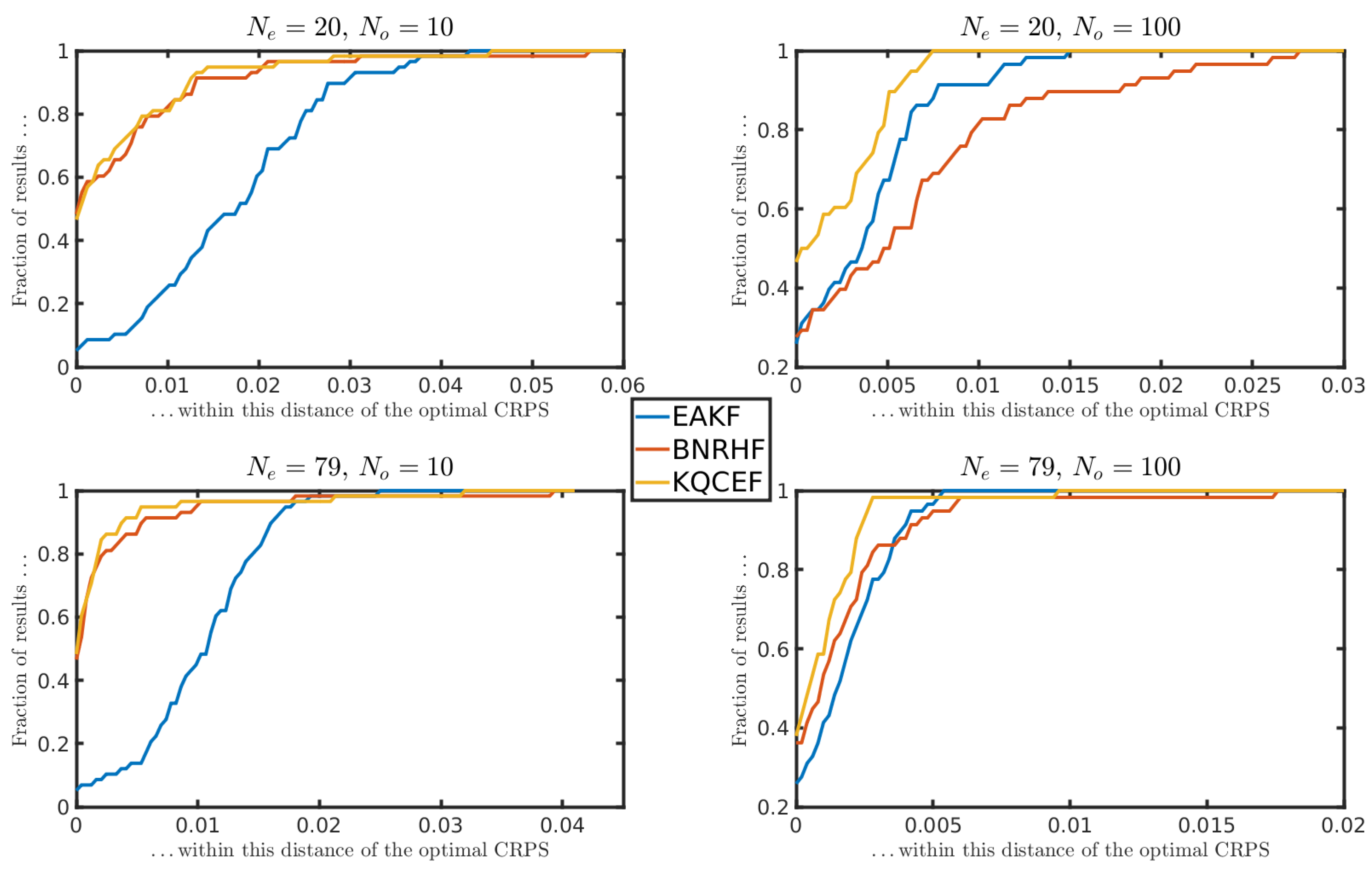

4.3. Results

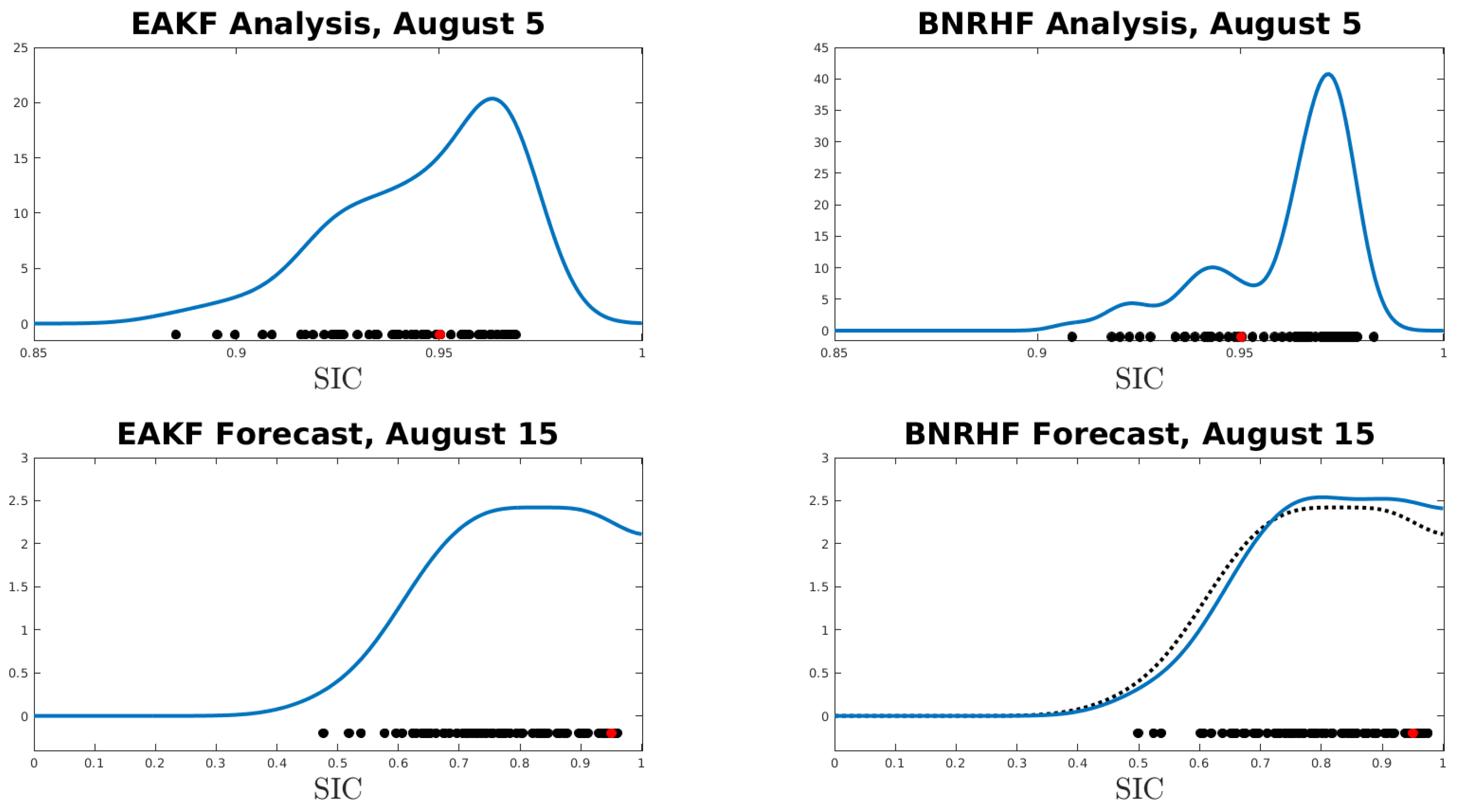

4.3.1. Analysis

4.3.2. Forecast

5. Discussion

- Both QCEF methods (BNRHF and KQCEF) outperform EAKF when the problem is non-Gaussian,

- KQCEF and BNRHF produce similar results when the ensemble size is large, but KQCEF tends to produce better results than BNRHF when the ensemble size is small, and

- The gap between KQCEF and BNRHF is largest when the ensemble size is small and the observation error variance is also small compared to the prior variance.

5.1. Small Ensembles and Small Observation Errors

5.2. Forecast vs. Analysis Performance with Icepack

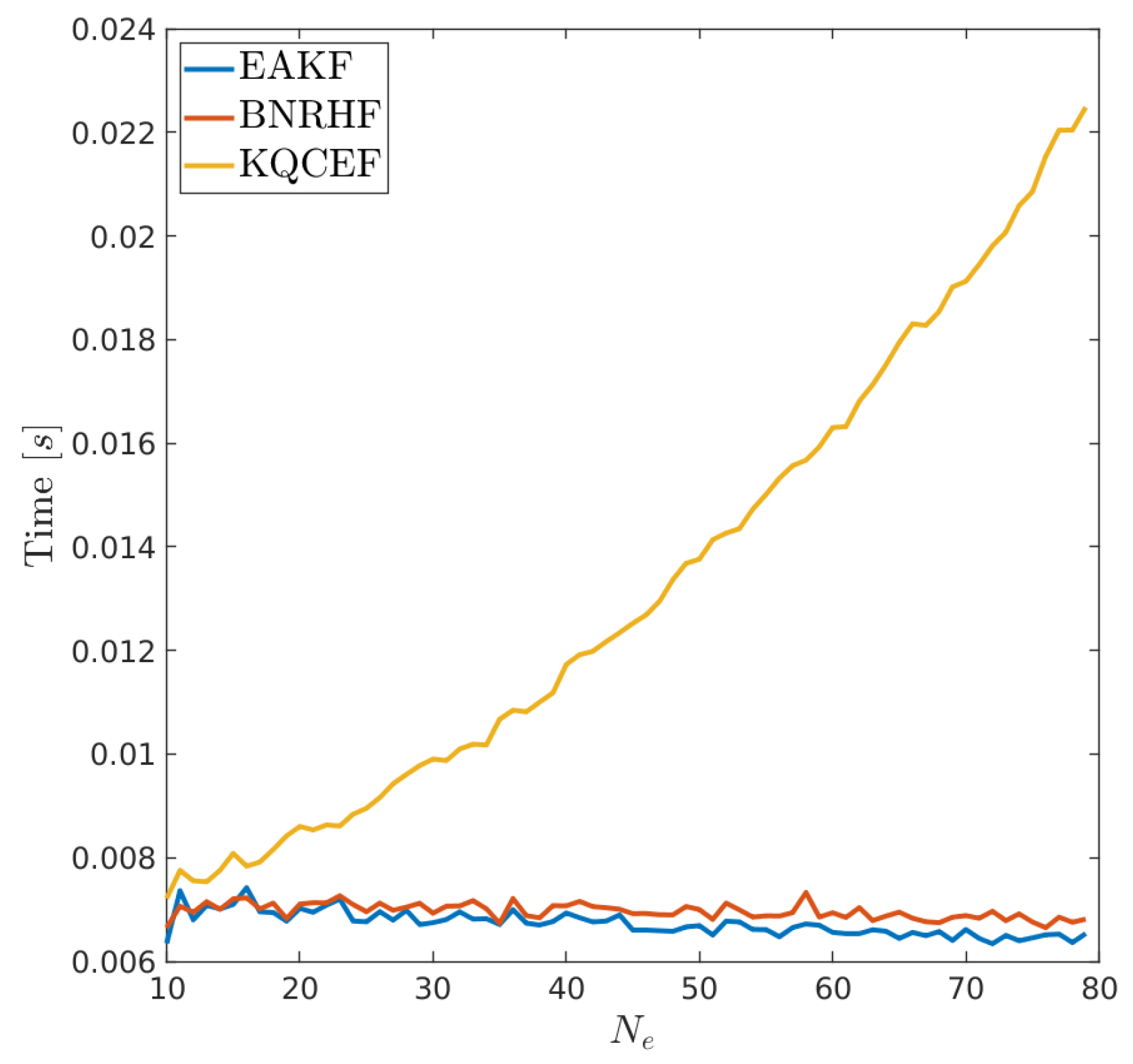

5.3. Computational Cost

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| BNRHF | Bounded Normal Rank Histogram Filter |
| EAKF | Ensemble Adjustment Kalman Filter |
| EnKF | Ensemble Kalman Filter |
| KDE | Kernel-Density Estimation |
| KS | Kolmogorov–Smirnov |
| KL | Kullback–Leibler |
| KQCEF | Kernel-based Quantile-Conserving Ensemble Filter |
| QCEF | Quantile-Conserving Ensemble Filter |

Appendix A

References

1. Evensen, G. Sequential data assimilation with a nonlinear quasi-geostrophic model using Monte Carlo methods to forecast error statistics. J. Geophys. Res.-Ocean. 1994, 99, 10143–10162.

2. Evensen, G. The ensemble Kalman filter: Theoretical formulation and practical implementation. Ocean Dyn. 2003, 53, 343–367.

3. Anderson, J.L. A local least squares framework for ensemble filtering. Mon. Weather Rev. 2003, 131, 634–642.

4. Grooms, I. A comparison of nonlinear extensions to the ensemble Kalman filter. Comput. Geosci. 2022, 26, 633–650.

5. Anderson, J.L. A Quantile-Conserving Ensemble Filter Framework. Part I: Updating an Observed Variable. Mon. Weather Rev. 2022, 150, 1061–1074.

6. Anderson, J.L. A non-Gaussian ensemble filter update for data assimilation. Mon. Weather Rev. 2010, 138, 4186–4198.

7. Anderson, J.L. A Quantile-Conserving Ensemble Filter Framework. Part II: Regression of Observation Increments in a Probit and Probability Integral Transformed Space. Mon. Weather Rev. 2023, 151, 2759–2777.

8. Anderson, J.; Riedel, C.; Wieringa, M.; Ishraque, F.; Smith, M.; Kershaw, H. A Quantile-Conserving Ensemble Filter Framework. Part III: Data Assimilation for Mixed Distributions with Application to a Low-Order Tracer Advection Model. Mon. Weather Rev. 2024.

9. Silverman, B. Density Estimation for Statistics and Data Analysis; CRC Press: Boca Raton, FL, USA, 1998.

10. Epanechnikov, V.A. Non-parametric estimation of a multivariate probability density. Theory Probab. Its Appl. 1969, 14, 153–158.

11. Jones, M.C. Simple boundary correction for kernel density estimation. Stat. Comput. 1993, 3, 135–146.

12. Jones, M.; Foster, P. A simple nonnegative boundary correction method for kernel density estimation. Stat. Sin. 1996, 6, 1005–1013.

13. Atkinson, K. An Introduction to Numerical Analysis; John Wiley & Sons: Hoboken, NJ, USA, 1991.

14. Oliveira, I.F.; Takahashi, R.H. An enhancement of the bisection method average performance preserving minmax optimality. ACM Trans. Math. Softw. 2020, 47, 1–24.

15. Anderson, J.L. An ensemble adjustment Kalman filter for data assimilation. Mon. Weather Rev. 2001, 129, 2884–2903.

16. Berger, V.W.; Zhou, Y. Kolmogorov–Smirnov test: Overview. In Wiley Statsref: Statistics Reference Online; John Wiley & Sons: Hoboken, NJ, USA, 2014.

17. Pérez-Cruz, F. Kullback-Leibler divergence estimation of continuous distributions. In Proceedings of the 2008 IEEE International Symposium on Information Theory, Toronto, ON, Canada, 6–11 July 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 1666–1670.

18. Bulinski, A.; Dimitrov, D. Statistical estimation of the Kullback–Leibler divergence. Mathematics 2021, 9, 544.

19. Bishop, C.H. The GIGG-EnKF: Ensemble Kalman filtering for highly skewed non-negative uncertainty distributions. Q. J. R. Meteorol. Soc. 2016, 142, 1395–1412.

20. Morzfeld, M.; Hodyss, D. Gaussian approximations in filters and smoothers for data assimilation. Tellus A Dyn. Meteorol. Oceanogr. 2019, 71, 1600344.

21. Grooms, I.; Robinson, G. A hybrid particle-ensemble Kalman filter for problems with medium nonlinearity. PLoS ONE 2021, 16, e0248266.

22. Wieringa, M.; Riedel, C.; Anderson, J.A.; Bitz, C. Bounded and categorized: Targeting data assimilation for sea ice fractional coverage and non-negative quantities in a single column multi-category sea ice model. EGUsphere 2023, 2023, 1–25.

23. Hunke, E.; Allard, R.; Bailey, D.A.; Blain, P.; Craig, A.; Dupont, F.; DuVivier, A.; Grumbine, R.; Hebert, D.; Holland, M.; et al. CICE-Consortium/Icepack: Icepack 1.4.0; CICE-Consortium: Los Alamos, NM, USA, 2023.

24. Delworth, T.L.; Broccoli, A.J.; Rosati, A.; Stouffer, R.J.; Balaji, V.; Beesley, J.A.; Cooke, W.F.; Dixon, K.W.; Dunne, J.; Dunne, K.; et al. GFDL’s CM2 global coupled climate models. Part I: Formulation and simulation characteristics. J. Clim. 2006, 19, 643–674.

25. Rampal, P.; Bouillon, S.; Ólason, E.; Morlighem, M. neXtSIM: A new Lagrangian sea ice model. Cryosphere 2016, 10, 1055–1073.

26. Vancoppenolle, M.; Rousset, C.; Blockley, E.; Aksenov, Y.; Feltham, D.; Fichefet, T.; Garric, G.; Guémas, V.; Iovino, D.; Keeley, S.; et al. SI3, the NEMO Sea Ice Engine. 2023. Available online: https://doi.org/10.5281/zenodo.7534900 (accessed on 10 June 2024).

27. Lisæter, K.A.; Rosanova, J.; Evensen, G. Assimilation of ice concentration in a coupled ice–ocean model, using the Ensemble Kalman filter. Ocean Dyn. 2003, 53, 368–388.

28. Lindsay, R.W.; Zhang, J. Assimilation of ice concentration in an ice–ocean model. J. Atmos. Ocean. Technol. 2006, 23, 742–749.

29. Stark, J.D.; Ridley, J.; Martin, M.; Hines, A. Sea ice concentration and motion assimilation in a sea ice-ocean model. J. Geophys. Res.-Ocean. 2008, 113, C05S91-1-19.

30. Metref, S.; Cosme, E.; Snyder, C.; Brasseur, P. A non-Gaussian analysis scheme using rank histograms for ensemble data assimilation. Nonlinear Proc. Geoph. 2014, 21, 869–885.

31. Barth, A.; Canter, M.; Van Schaeybroeck, B.; Vannitsem, S.; Massonnet, F.; Zunz, V.; Mathiot, P.; Alvera-Azcárate, A.; Beckers, J.M. Assimilation of sea surface temperature, sea ice concentration and sea ice drift in a model of the Southern Ocean. Ocean Model. 2015, 93, 22–39.

32. Massonnet, F.; Fichefet, T.; Goosse, H. Prospects for improved seasonal Arctic sea ice predictions from multivariate data assimilation. Ocean Model. 2015, 88, 16–25.

33. Chen, Z.; Liu, J.; Song, M.; Yang, Q.; Xu, S. Impacts of assimilating satellite sea ice concentration and thickness on Arctic sea ice prediction in the NCEP Climate Forecast System. J. Clim. 2017, 30, 8429–8446.

34. Zhang, Y.F.; Bitz, C.M.; Anderson, J.L.; Collins, N.; Hendricks, J.; Hoar, T.; Raeder, K.; Massonnet, F. Insights on sea ice data assimilation from perfect model observing system simulation experiments. J. Clim. 2018, 31, 5911–5926.

35. Riedel, C.; Anderson, J. Exploring Non-Gaussian Sea Ice Characteristics via Observing System Simulation Experiments. EGUsphere 2023, 2023, 1–30.

36. Abdalati, W.; Zwally, H.J.; Bindschadler, R.; Csatho, B.; Farrell, S.L.; Fricker, H.A.; Harding, D.; Kwok, R.; Lefsky, M.; Markus, T.; et al. The ICESat-2 laser altimetry mission. Proc. IEEE 2010, 98, 735–751.

37. Johnson, N.L.; Kotz, S.; Balakrishnan, N. Continuous Univariate Distributions, Volume 2; John Wiley & Sons: Hoboken, NJ, USA, 1995; Volume 289.

38. Raeder, K.; Hoar, T.J.; El Gharamti, M.; Johnson, B.K.; Collins, N.; Anderson, J.L.; Steward, J.; Coady, M. A new CAM6+ DART reanalysis with surface forcing from CAM6 to other CESM models. Sci. Rep. 2021, 11, 16384.

39. Danabasoglu, G.; Lamarque, J.F.; Bacmeister, J.; Bailey, D.; DuVivier, A.; Edwards, J.; Emmons, L.; Fasullo, J.; Garcia, R.; Gettelman, A.; et al. The community earth system model version 2 (CESM2). J. Adv. Model. Earth Syst. 2020, 12, e2019MS001916.

40. Urrego-Blanco, J.R.; Urban, N.M.; Hunke, E.C.; Turner, A.K.; Jeffery, N. Uncertainty quantification and global sensitivity analysis of the Los Alamos sea ice model. J. Geophys. Res.-Ocean. 2016, 121, 2709–2732.

41. Smith, G.C.; Allard, R.; Babin, M.; Bertino, L.; Chevallier, M.; Corlett, G.; Crout, J.; Davidson, F.; Delille, B.; Gille, S.T.; et al. Polar ocean observations: A critical gap in the observing system and its effect on environmental predictions from hours to a season. Front. Mar. Sci. 2019, 6, 434834.

42. Sakov, P.; Counillon, F.; Bertino, L.; Lisæter, K.; Oke, P.; Korablev, A. TOPAZ4: An ocean-sea ice data assimilation system for the North Atlantic and Arctic. Ocean Sci. 2012, 8, 633–656.

43. Kimmritz, M.; Counillon, F.; Bitz, C.; Massonnet, F.; Bethke, I.; Gao, Y. Optimising assimilation of sea ice concentration in an Earth system model with a multicategory sea ice model. Tellus A Dyn. Meteorol. Oceanogr. 2018, 70, 1–23.

44. Hersbach, H. Decomposition of the continuous ranked probability score for ensemble prediction systems. Weather Forecast 2000, 15, 559–570.

45. Gneiting, T.; Raftery, A.E. Strictly proper scoring rules, prediction, and estimation. J. Am. Stat. Assoc. 2007, 102, 359–378.

46. Anderson, J.L.; Collins, N. Scalable implementations of ensemble filter algorithms for data assimilation. J. Atmos. Ocean. Technol. 2007, 24, 1452–1463.

47. Anderson, J.; Hoar, T.; Raeder, K.; Liu, H.; Collins, N.; Torn, R.; Avellano, A. The data assimilation research testbed: A community facility. Bull. Am. Meteorol. Soc. 2009, 90, 1283–1296.

48. Grooms, I. Data for “A Quantile-Conserving Ensemble Filter Based on Kernel Density Estimation”; figshare: Iasi, Romania, 2024.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Grooms, I.; Riedel, C. A Quantile-Conserving Ensemble Filter Based on Kernel-Density Estimation. Remote Sens. 2024, 16, 2377. https://doi.org/10.3390/rs16132377
