Methods for Assessing the Effectiveness of Modern Counter Unmanned Aircraft Systems

Abstract

1. Introduction

2. Definitions in the C-UAS Framework

3. Technologies

3.1. Evaluation of Selected DTI Technologies and DTI Systems Based on Fundamental Technical Parameters

- Operating frequency (wavelength): this is a crucial parameter, especially when detecting small objects such as UASs. Higher frequencies allow smaller objects to be detected. When the object is smaller than the wavelength, Rayleigh scattering occurs, and the detection efficiency drops sharply as the size of the detected object diminishes. Note that at 10 GHz, the frequency most commonly used in radars for UAS detection, the wavelength is about 0.03 m (3 cm). Conversely, higher frequencies are attenuated more strongly in the atmosphere by rain, fog, and snow, which limits the effective range of radars by reducing the reflected signal power.

- Radar output power: a higher radar power allows for an increased range. According to the radar equation, the power of the signal received by the radar is inversely proportional to the fourth power of the distance to the object. At greater distances, the radar cross-section (RCS) (3 in Table 1), which is a measure of an object’s ability to reflect radar signals, becomes a critical parameter. The RCS depends, among other factors, on the object’s orientation relative to the radar beam. A smaller reflective surface results in a weaker reflected signal. Most radars are designed to be used without special permission. To achieve this, they must operate within legally specified frequencies and the corresponding maximum powers. Using radars outside these legal limits requires the user to obtain permission and pay for the use of the radio spectrum.

- Receiver sensitivity: a more sensitive receiver extends the radar’s range and allows more distant objects to be detected.
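The relationships in the three parameters above can be illustrated with a minimal Python sketch. It assumes the textbook free-space monostatic radar equation with no system or weather losses; the function names and all numeric values (transmit power, antenna gain, RCS, ranges) are hypothetical and chosen only to show the scaling, not taken from any evaluated system.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_m(frequency_hz: float) -> float:
    """Free-space wavelength in metres for a given frequency in hertz."""
    return SPEED_OF_LIGHT_M_S / frequency_hz


def received_power_w(tx_power_w, antenna_gain, wavelength, rcs_m2, range_m):
    """Monostatic radar equation in free space, ignoring system and weather losses."""
    numerator = tx_power_w * antenna_gain**2 * wavelength**2 * rcs_m2
    return numerator / ((4.0 * math.pi) ** 3 * range_m**4)


lam = wavelength_m(10e9)  # 10 GHz example from the text
print(f"wavelength at 10 GHz: {lam:.3f} m")

# Hypothetical radar and target values, used only to show the range scaling.
p_near = received_power_w(10.0, 1000.0, lam, 0.01, 1000.0)
p_far = received_power_w(10.0, 1000.0, lam, 0.01, 2000.0)
print(f"power ratio when range doubles: {p_near / p_far:.1f}")
```

Under these assumptions, doubling the range reduces the received power by a factor of 16, while the received power scales linearly with the RCS, which is why small targets become critical at long distances.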

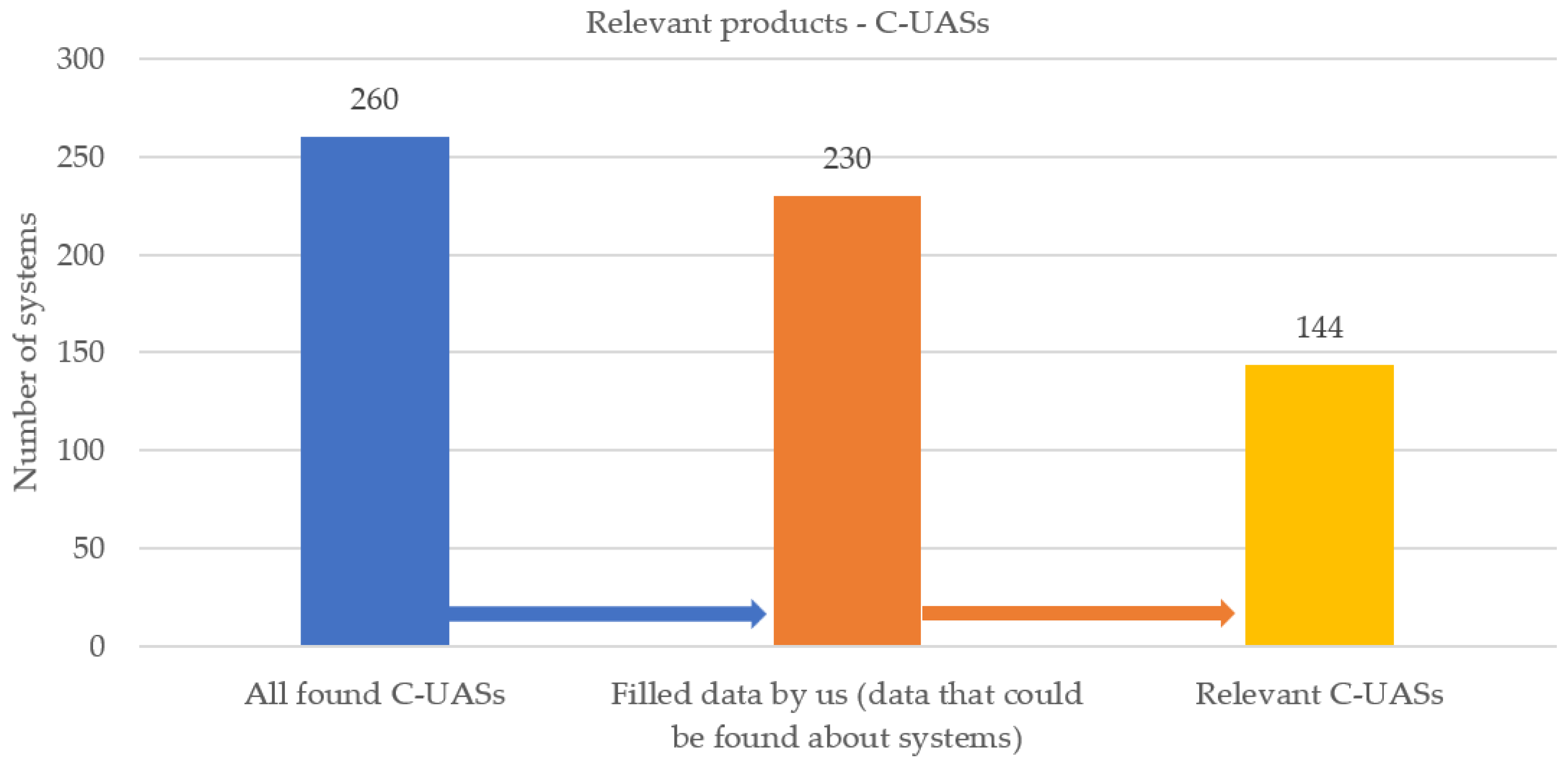

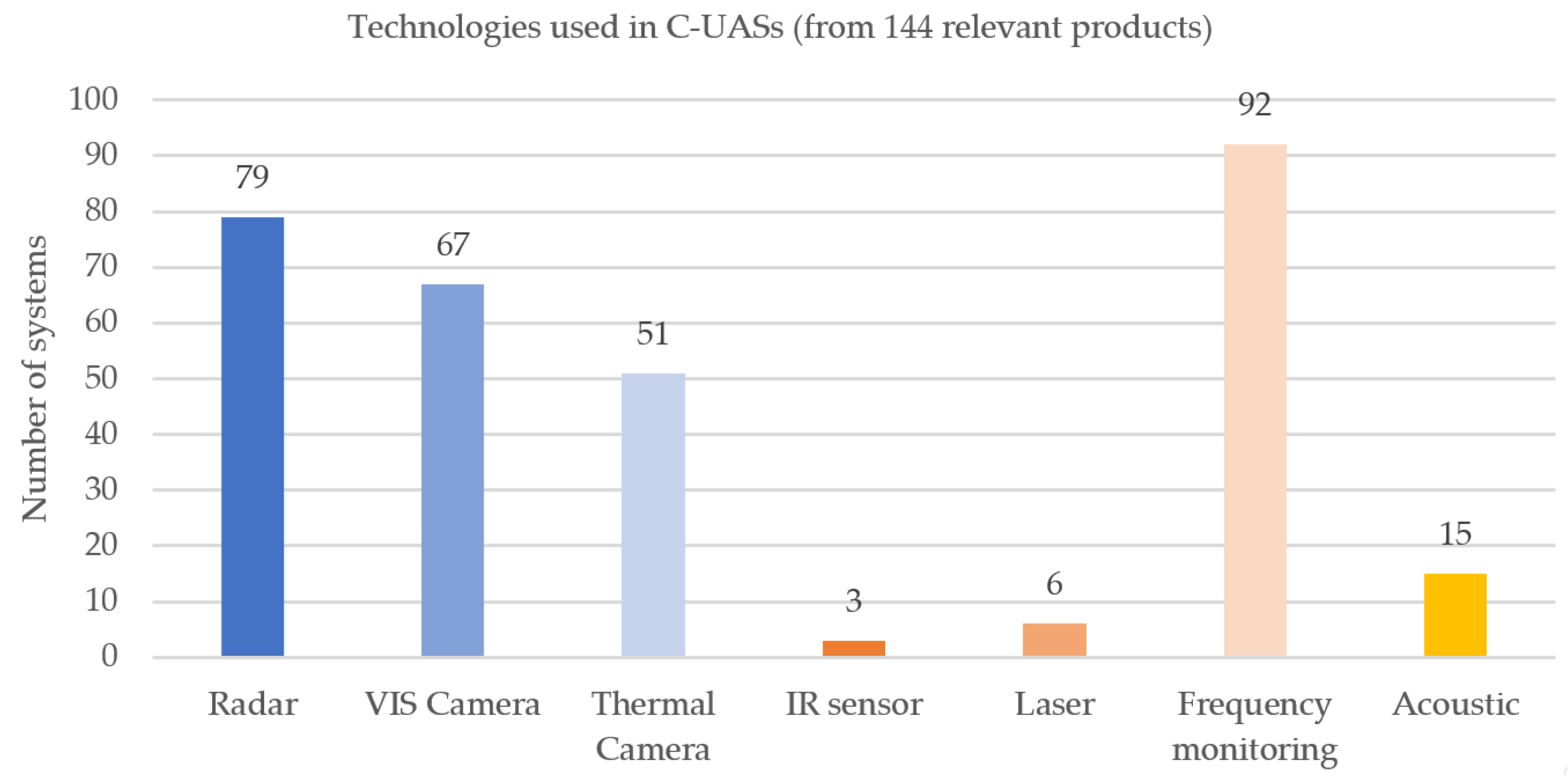

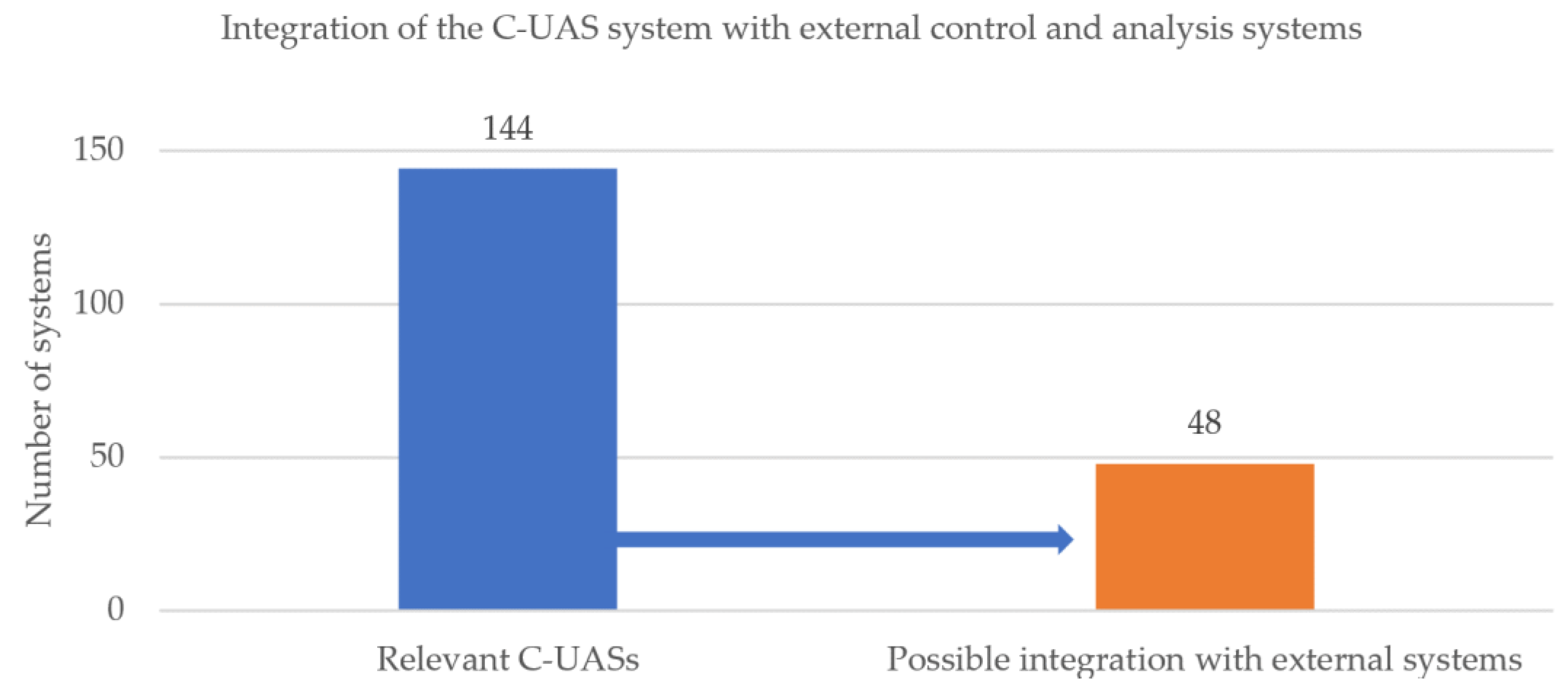

3.2. Relevant Products

- Technological changes in detection solutions;

- A termination of cooperation with technology suppliers in the case of multi-technology systems, as an economic and competitive factor in the market;

- Market verification of the offered solutions;

- The impact of the COVID-19 pandemic on the development of mainly small technology spin-offs.

- Lack of full-scale, detailed technical data.

- Several omissions regarding the detection characteristics of the system (maximum and minimum speed of the detected UAS, spectral range, radiation power for active detection, etc.).

- No reference to defined test samples that capture not so much the object’s size as its characteristics relevant to detection (including flight speed, weather conditions, and electromagnetic background levels).

- Ambiguity in defining the classes of objects, i.e., commercial drones vs. custom drones.

- General lack of descriptions of the operating system for multi-technology solutions.

3.3. Analysis of Current Technologies

- Not all detection methods enable 24/7 operation, especially at night.

- The operating principle of some sensors, particularly IR (infrared) and VIS (daylight) cameras, does not allow detection over satisfactory imaging zones with appropriate resolution. These technologies are mainly used to implement other functions: tracking and identification.

- Because radar is an active detection method based on microwave radiation, the possibility of using radar devices requires a thorough analysis in terms of the approval for use (power, frequency) in a given scenario. Moreover, given the properties of such radiation, the performance of a given device under different atmospheric conditions (rain, fog) should be clearly characterised.

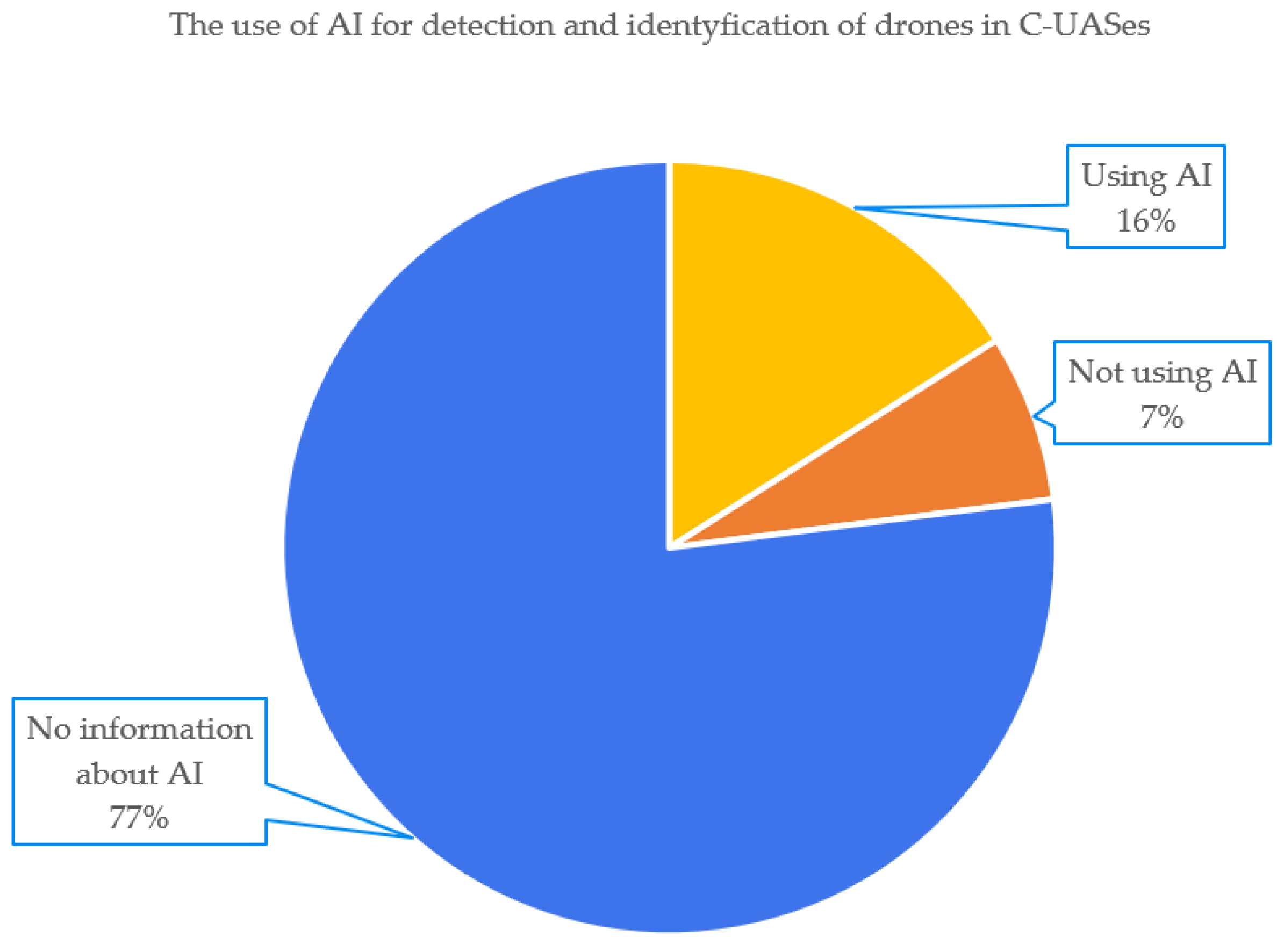

3.3.1. The Use of API

3.3.2. Other Parameters of C-UASs

3.4. Fusion of Technologies

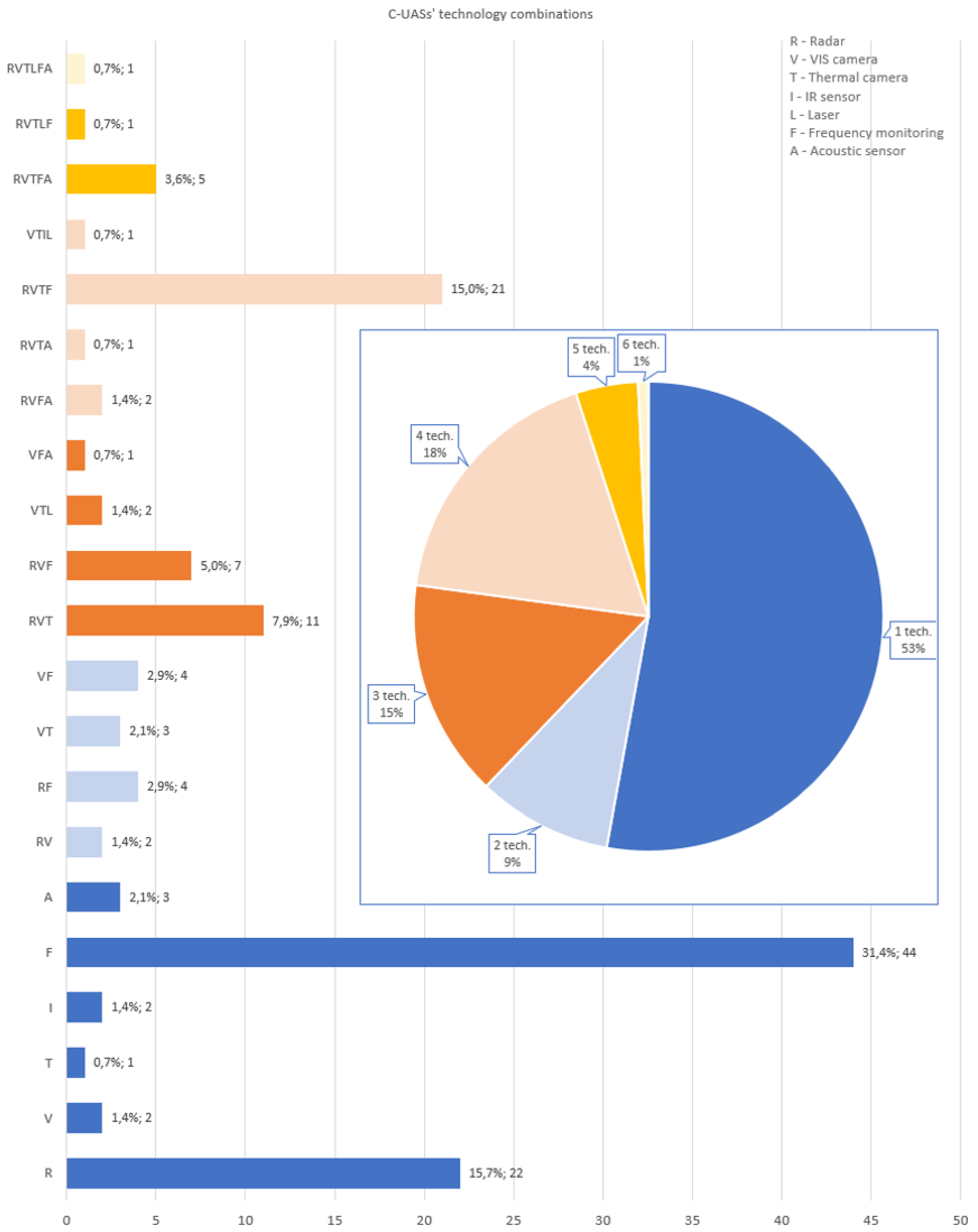

3.4.1. Combinations of Technologies Used in C-UASs

3.4.2. The Use of AI

3.5. Legal Aspects in C-UAS

4. Our Experiences

4.1. Limitations of Technologies

- Active detection method—possible interference with the radar signal.

- Active detection method—it is possible to detect the presence of a radar.

- The response characteristics of radars under different weather conditions depend strongly on the operating frequency. In general, the higher the frequency, the greater the attenuation by water (fog, rain).

- The lower the frequency of the radars, the more difficult it is to detect small objects.

- For continuous wave (CW) radars, movement of the object cross-wise to the radar is difficult to detect under certain conditions, especially for low-power radars [15].

- The disturbance may come from the multiplication of the real object in the case of echo reflection from the Earth’s surface or the atmosphere.

- In many cases, the interference signal reaching the radar receiver from longer distances exceeds the echo level of the useful target in its power. The ultimate performance characteristics of the detection system are solely contingent upon the quality of the firmware employed for analysis.

- Because the interference signal travels a shorter distance (the useful signal must cover the path twice, from the transmitter to the target and back again, whereas interference emitted from the target covers it only once), the power emitted by the interference transmitter may be much smaller and still effectively interfere with the working radar.

- The use of constant false alarm rate (CFAR, also abbreviated SPFA) systems, which adjust the detection level in the presence of environmental disturbances, can significantly reduce the detection range.

- These systems are designed for imaging under stable lighting conditions within the observation area, where radiation in the visible light spectrum is confined to a precisely defined level, and are not suitable for use at night;

- The limitations are strictly related to the choice of the lens and its parameters;

- The resolution of image detection relies on both the resolution of the sensor matrix and the focal length of the lens, which together determine the range of the observation field;

- The ability to indicate the distance of an object is limited only to predictions from the calculation of the potential size of the object;

- It is difficult (in terms of software) to indicate the coordinates of the object;

- The quality of object tracking depends on the adopted image analysis method;

- The dynamics of image lighting (clouds, sun, etc.) significantly affect the detection of the object in the image;

- Light reflections from dirt on the lens disqualify the solution from use.

- Poor image adjustment/sharpness; better quality available only in motor zoom systems.

- Thermal imaging cameras are passive, which means that they detect all infrared radiation coming from a target. This means that what the viewer sees through the camera is not limited to the heat emitted by the object but can also be the result of energy reflected from the surface they are looking at, coming from other sources.

- The identification ability of thermal imaging cameras is limited by weather phenomena. Fog, snow, and rain suppress infrared waves, which reduces the camera’s range of effective detection and imaging.

- Cloudy conditions strongly affect the interpretation of the image, especially of small objects over long distances.

- The key parameter of a thermal imaging camera is the minimum resolvable temperature difference (MRTD), which means that cooled matrices are better for the imaging of objects.

- The limitation in use is closely related to the choice of the lens and its parameters.

- The resolution of image detection depends on the matching of the matrix resolution and the focal length of the lens (field of view range).

- The ability to indicate the distance of an object is limited only to predictions from the calculation of the potential size of the object.

- It is difficult (in terms of software) to indicate the coordinates of the object.

- The quality of object tracking depends on the adopted image analysis method.

- Firmware is most often closed under one manufacturer and the possibility of integration is limited to the functions provided by the SDK.

- The detectability of an object depends strictly on the emissivity of the material from which it is made. The ambient temperature is required to compensate for the radiation reflected from the object. If the emissivity of the object is low, then the correct setting of the ambient temperature is of key importance.

- There may be a problem with detecting and/or interpreting detected shiny objects.

- The limitations are similar to the limitations of thermal imaging cameras when the IR sensor is a rotating thermal imager;

- The rotation frequency of the thermal imaging camera is limited to a single Hz and the image analysis depends on comparing the sequence of image changes every second;

- The ability to indicate the distance of an object is limited only to predictions from the calculation of the potential size of the object;

- The field of view (lens) and the size of the matrix as well as mounting on a rotating platform limit the resolution of the measurement over a long distance.

- The distance measurement may be disturbed by a drone other than the one intended;

- Drones cannot be tracked;

- The distance measurement may be distorted by unfavourable weather conditions such as a high extinction coefficient and insolation;

- The reflected power from the subject may be too low to filter out from the noise;

- The power radiated towards the object must be sufficiently high, but not too high, so as not to cause damage;

- The optical wavelength of the laser must be eye-safe (so as not to dazzle pilots/people, etc.);

- It is an active method, which means it is possible to detect the irradiation/lighting of the object;

- Scattering of the 1550 nm wavelength on water molecules (rainfall, fog, etc.).

- This type of system is prone to deliberate interference;

- An environment with a strong electromagnetic background significantly reduces the detection efficiency;

- Most solutions only define possible threats in terms of direction without specifying the correct object distance;

- The detection, tracking, and identification of objects is limited by the presence of natural and artificial terrain obstacles creating covered zones;

- The detection range drops significantly due to interference from other devices (in an urbanised area);

- Most frequency-monitoring systems only detect Wi-Fi frequencies (2.4 GHz and 5 GHz) and, therefore, cover only a narrow part of the spectrum.

- This type of sensor has very large range limitations;

- Detection can be easily disturbed by noise/wind (both urbanised noisy areas and open spaces with blowing winds are unfavourable for this type of sensor);

- The elimination of non-linear acoustic disturbances, which hinder the interpretation of the tested signals, is problematic.
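Two of the camera limitations listed above (resolution depending on the sensor matrix and the focal length, and distance being only a prediction from the assumed object size) can be sketched with a simple pinhole camera model. This is an illustrative assumption, not the method used in the study; the function names and the lens, sensor, and drone values are hypothetical.

```python
def pixels_on_target(object_size_m, distance_m, focal_length_mm, pixel_pitch_um):
    """Pixels spanned by an object under an ideal pinhole camera model."""
    # Size of the object's image on the sensor, in millimetres.
    image_size_mm = focal_length_mm * object_size_m / distance_m
    return image_size_mm * 1000.0 / pixel_pitch_um


def distance_from_apparent_size(assumed_size_m, pixels, focal_length_mm, pixel_pitch_um):
    """Distance predicted from the pixel extent and an assumed physical size."""
    image_size_mm = pixels * pixel_pitch_um / 1000.0
    return focal_length_mm * assumed_size_m / image_size_mm


# Hypothetical setup: 0.3 m drone at 500 m, 50 mm lens, 5 um pixel pitch.
px = pixels_on_target(0.3, 500.0, 50.0, 5.0)
print(f"pixels on target: {px:.1f}")

# If the software wrongly assumes a 0.6 m object, the predicted distance doubles.
d_wrong = distance_from_apparent_size(0.6, px, 50.0, 5.0)
print(f"predicted distance with wrong size assumption: {d_wrong:.0f} m")
```

In this sketch the target covers only a handful of pixels, and any error in the assumed object size carries over proportionally into the predicted distance, which is consistent with the limitation stated in the list.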

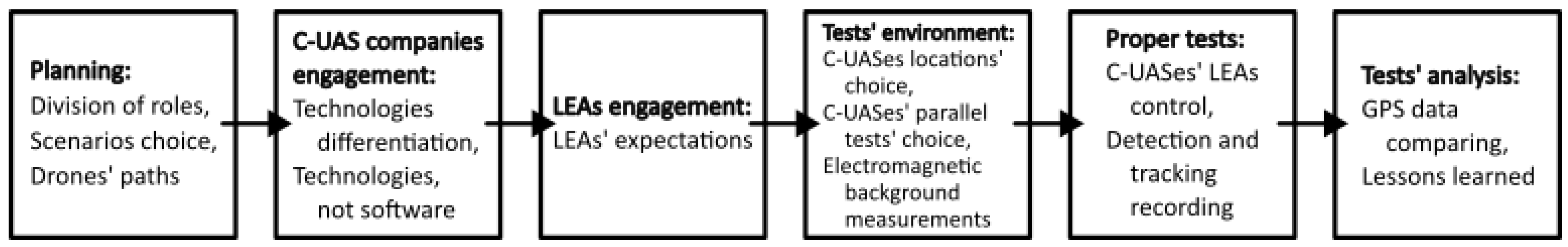

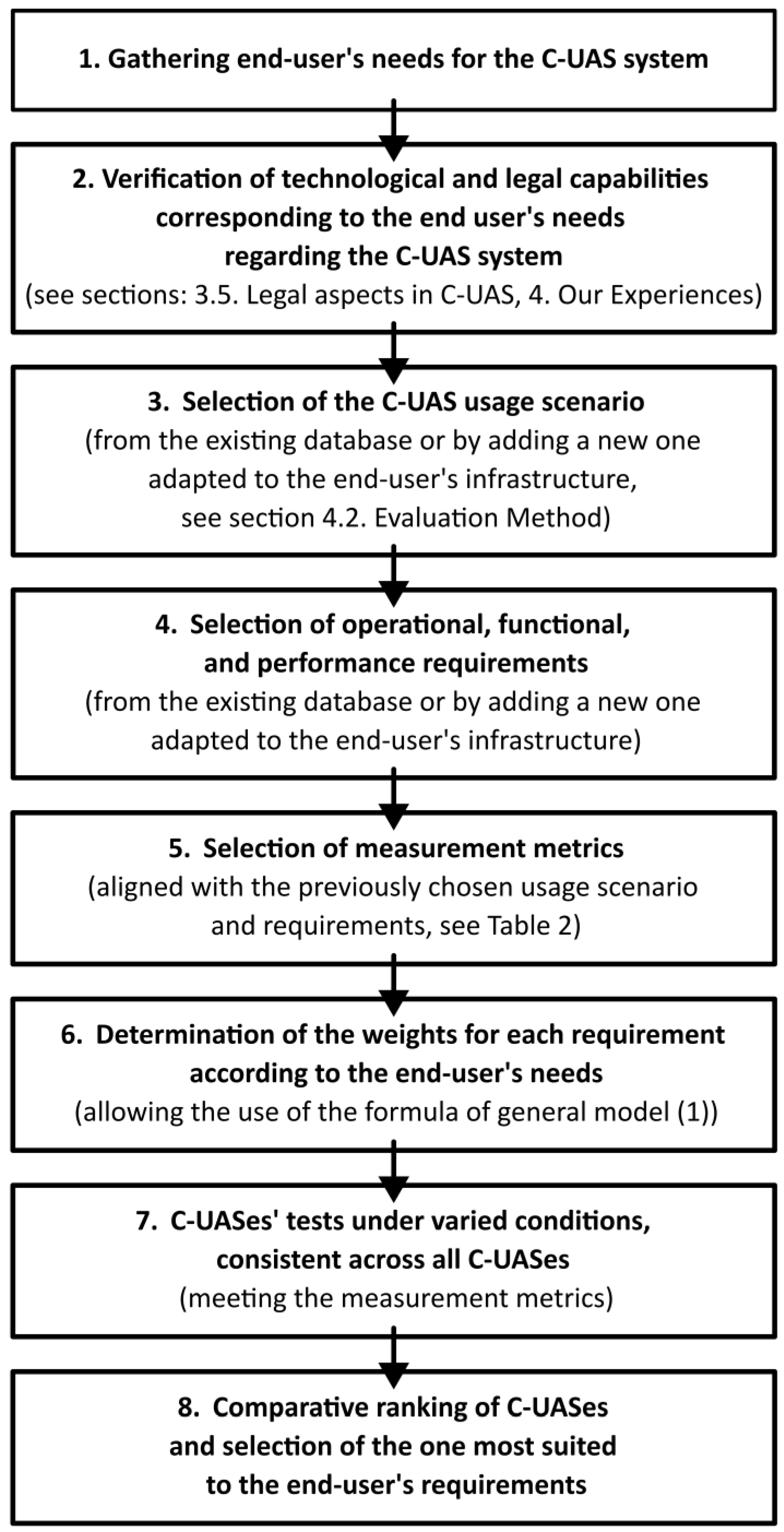

4.2. Evaluation Method

- Sensitive Sites/Critical National Infrastructure:
  1. Prison;
  2. Airport;
  3. Nuclear plant;
  4. Government building.

- Public Spaces Protection/Events:
  5. Stadium;
  6. Outdoor concert;
  7. Outdoor political rally;
  8. International summit.

- Border Protection:
  9. Land border;
  10. Maritime border.

- Specification of environmental conditions;

- Specification of the test object, i.e., UAS [16];

- Specification of the test site;

- Testable parameters for detection, tracking, and identification.

- $S_i$ is the aggregate evaluation of the $i$th C-UAS.

- $w_j$ is the weight of the $j$th functional or performance requirement.

- $a_{ij}$ is the evaluation of the $i$th C-UAS according to the $j$th functional or performance requirement.

- $n$ is the number of functional or performance requirements considered in the evaluation of the C-UAS.
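The symbol definitions above are consistent with a weighted-sum aggregate, in which the evaluation of the $i$th C-UAS is the sum over the $n$ requirements of each weight multiplied by the corresponding evaluation. The exact form is an assumption here, and the following Python sketch only illustrates that reading. The function name, the weights, and the per-requirement scores are hypothetical, and whether the weights must sum to one is not stated in the text.

```python
def aggregate_evaluation(weights, evaluations):
    """Weighted sum of per-requirement evaluations for one C-UAS."""
    if len(weights) != len(evaluations):
        raise ValueError("one evaluation is needed per weighted requirement")
    return sum(w * a for w, a in zip(weights, evaluations))


# Hypothetical weights for n = 3 requirements (e.g. detection, tracking, identification).
weights = [0.5, 0.3, 0.2]

# Hypothetical per-requirement evaluations for two systems.
systems = {
    "C-UAS A": [4, 3, 5],
    "C-UAS B": [5, 2, 2],
}

scores = {name: aggregate_evaluation(weights, a) for name, a in systems.items()}
for name, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {score:.2f}")
```

With these illustrative numbers, system A ranks above system B even though B scores higher on the most heavily weighted requirement, which shows how the choice of weights shapes the final ranking.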

5. A Simplified Algorithm for the Use of C-UAS Effectiveness Evaluation Methods

6. Conclusions

- Detection of drones moving within the test area at various angles relative to the ground;

- Determination of the minimum distances between two drones allowing for their distinguishability;

- Conducting dedicated tests for specific technologies and their various variants;

- Including flights of drones moving at speeds exceeding 120 km/h in the tests;

- Examining the impact of external electromagnetic interference on technologies used by C-UASs;

- Adapting test scenarios to potentially emerging new UAS and C-UAS technologies;

- Developing and integrating tests into an automatic/semi-automatic C-UAS testing system.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Vargas-Ramírez, N.; Paneque-Gálvez, J. The Global Emergence of Community Drones (2012–2017). Drones 2019, 3, 76.

2. Franke, U. Drone Proliferation: A Cause for Concern? International Relations and Security Network (ISN), ETH Zürich: Zürich, Switzerland, 2014.

3. Aksu, M.O.; Alwardt, C.; Berndsen, C.I.S.; Bollö, J.; Cochran, C.D.; Corum, J.; Dieckert, U.; Eckel, H.-A.; Grest, L.C.H.; Haider, L.C.A.; et al. A Comprehensive Approach to Countering Unmanned Aircraft Systems. Willis, C.M., Haider, L.C.A., Teletin, L.C.D.C., Wagner, L.C.D., Eds.; The Joint Air Power Competence Centre: Kalkar, Germany, 2021.

4. Doroftei, D.; De Cubber, G. Qualitative and Quantitative Validation of Drone Detection Systems. In Proceedings of the International Symposium on Measurement and Control in Robotics ISMCR2018, Mons, Belgium, 26–28 September 2018.

5. Buric, M.; De Cubber, G. Counter Remotely Piloted Aircraft Systems. MTA Rev. 2017, 27, 9–18.

6. Lee, C.H.; Thiessen, C.; Van Bossuyt, D.L.; Hale, B. A Systems Analysis of Energy Usage and Effectiveness of a Counter-Unmanned Aerial System Using a Cyber-Attack Approach. Drones 2022, 6, 198.

7. Skolnik, M.I. Radar Handbook; McGraw-Hill: New York, NY, USA, 1990; ISBN 007057913X.

8. Zyczkowski, M.; Szustakowski, M.; Ciurapinski, W.; Karol, M.; Markowski, P. Integrated Radar-Camera Security System: Range Test. In Proceedings of the SPIE Defense, Security, and Sensing, Baltimore, MD, USA, 23–27 April 2012; Volume 8361, pp. 493–502.

9. Rozantsev, A. Vision-Based Detection of Aircrafts and UAVs. Master’s Thesis, EPFL, Lausanne, Switzerland, 2017.

10. Andraši, P.; Radišić, T.; Muštra, M.; Ivošević, J. Night-Time Detection of UAVs Using Thermal Infrared Camera. Transp. Res. Procedia 2017, 28, 183–190.

11. De Cubber, G.; Shalom, R.; Coluccia, A.; Borcan, O.; Chamrad, R.; Radulesku, T.; Izquierdo, E.; Gagov, Z. The SafeShore System for the Detection of Threat Agents in a Maritime Border Environment. In Proceedings of the IARP Workshop on Risky Interventions and Environmental Surveillance, Les Bons Villers, Belgium, 18–19 May 2017; pp. 1–4.

12. Wang, J.; Liu, Y.; Song, H. Counter-Unmanned Aircraft System(s) (C-UAS) State of the Art, Challenges and Future Trends. IEEE Aerosp. Electron. Syst. Mag. 2021, 36, 4–29.

13. Mezei, J.; Molnar, A. Drone Sound Detection by Correlation. In Proceedings of the SACI 2016-11th IEEE International Symposium on Applied Computational Intelligence and Informatics, Timisoara, Romania, 12–14 May 2016; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2016; pp. 509–518.

14. Stöcker, C.; Bennett, R.; Nex, F.; Gerke, M.; Zevenbergen, J. Review of the Current State of UAV Regulations. Remote Sens. 2017, 9, 459.

15. Li, C.J.; Ling, H. An Investigation on the Radar Signatures of Small Consumer Drones. IEEE Antennas Wirel. Propag. Lett. 2017, 16, 649–652.

16. Farlik, J.; Kratky, M.; Casar, J.; Stary, V. Multispectral Detection of Commercial Unmanned Aerial Vehicles. Sensors 2019, 19, 1517.

Capabilities (defining or measuring) span the columns Detection to Identification; limitations span the columns Wind to Electromagnetic Disturbance. Superscript numbers are table note markers.

| Technology | Detection | Speed | Direction | Altitude | Size | Distance | Tracking | Identification | Wind | Rain | Fog | Insolation | Electromagnetic Disturbance |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Radar | ✓ | ✓ ¹ | ✓ | ✓/- ² | ✓/- ³ | ✓ | ✓ | - | - | ✓/- | ✓/- | - | ✓/- |
| VIS Cam. | ✓/- ⁴ | - | ✓ | ✓ | ✓ | - | ✓ | ✓ | - | ✓ | ✓ | ✓/- | - |
| Thermal Cam. | ✓/- ⁴ | - | ✓ | ✓ | ✓ | - | ✓ | ✓ | - | ✓/- ⁵ | ✓/- ⁶ | - | - |
| IR | ✓/- ⁴ | - | ✓ | ✓ | ✓ | - | ✓ | ✓ | - | ✓/- ⁵ | ✓/- ⁶ | - | - |
| Laser | - | ✓/- ⁷ | - | ✓/- ⁸ | - | ✓ | - | - | - | ✓/- ⁵ | ✓/- ⁶ | ✓ | - |
| Frequency | ✓ | ✓/- ⁹ | ✓/- ⁹ | ✓/- ⁹ | - | ✓ | ✓ | ✓/- ⁹ | - | - | - | - | ✓ |
| Acoustic | ✓ | - | ✓/- ¹⁰ | - | - | - | ✓/- ¹⁰ | ✓ ¹¹ | ✓ | ✓ | - | - | - |

| Type of Conditions | Parameter |
|---|---|
| Meteorological conditions | Medium surface wind |
| Meteorological conditions | Visibility |
| Meteorological conditions | Height of the cloud base |
| Meteorological conditions | Air temperature and dew point temperature |
| Meteorological conditions | Pressure value (QNH, QFE) |
| Meteorological conditions | Average wind measured |
| Meteorological conditions | Air temperature measured |
| Meteorological conditions | Water surface temperature, if the scenario assumes its occurrence |
| Meteorological conditions | Illuminance |
| Electromagnetic conditions | Average intensity of the electromagnetic field in the considered frequency range |
| Electromagnetic conditions | Peak intensity of the electromagnetic field in the considered frequency range |
| Acoustic conditions | Average sound level |
| Acoustic conditions | Peak sound level and frequency at which it occurs |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Brewczyński, K.D.; Życzkowski, M.; Cichulski, K.; Kamiński, K.A.; Petsioti, P.; De Cubber, G. Methods for Assessing the Effectiveness of Modern Counter Unmanned Aircraft Systems. Remote Sens. 2024, 16, 3714. https://doi.org/10.3390/rs16193714
