Joint Panchromatic and Multispectral Geometric Calibration Method for the DS-1 Satellite

Abstract

1. Introduction

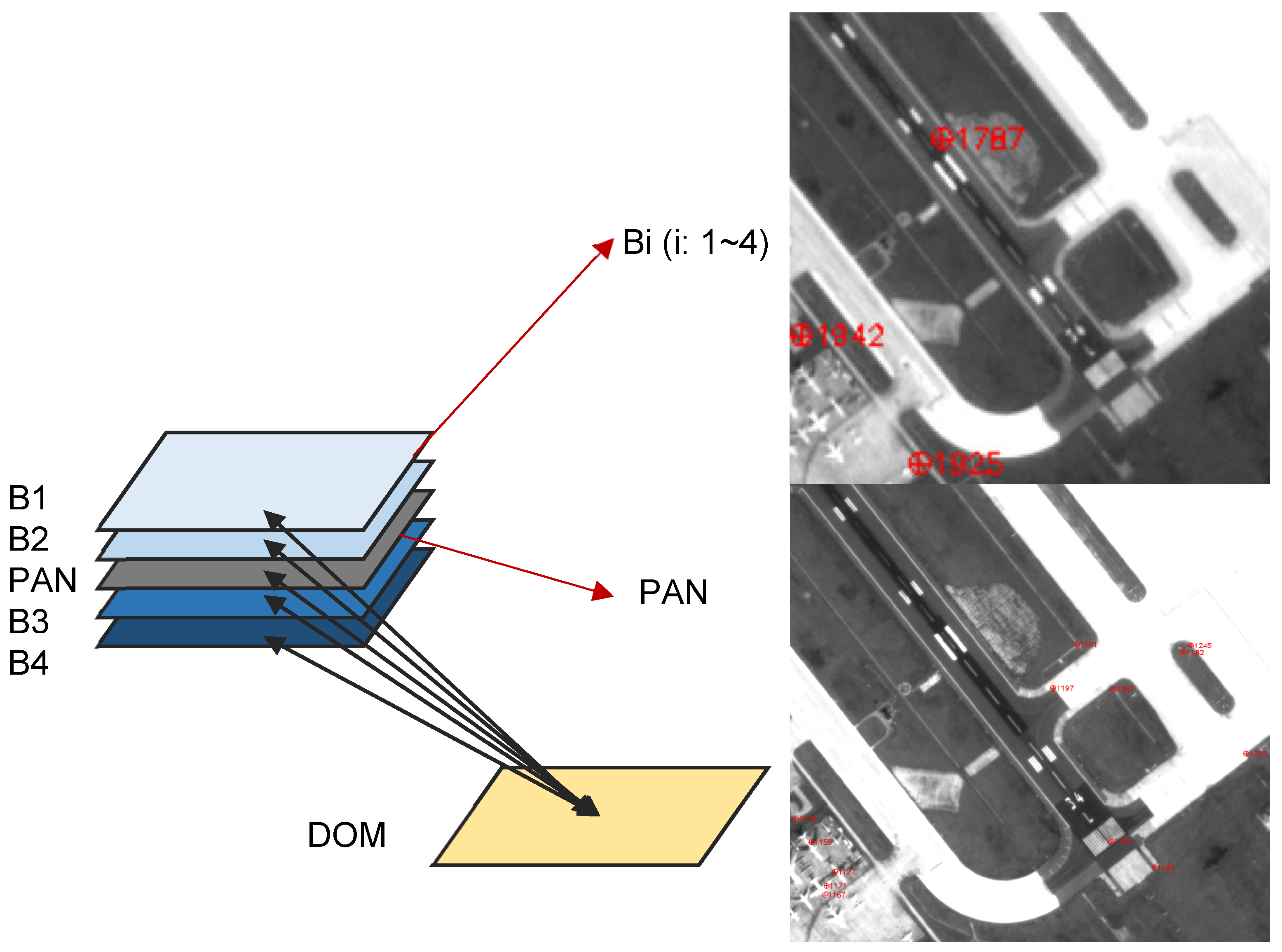

2. Proposed Method

2.1. Camera Model and Coordinate System Conversion

2.2. Multi-Class Corresponding Point Calibration Solving

3. Results and Discussion

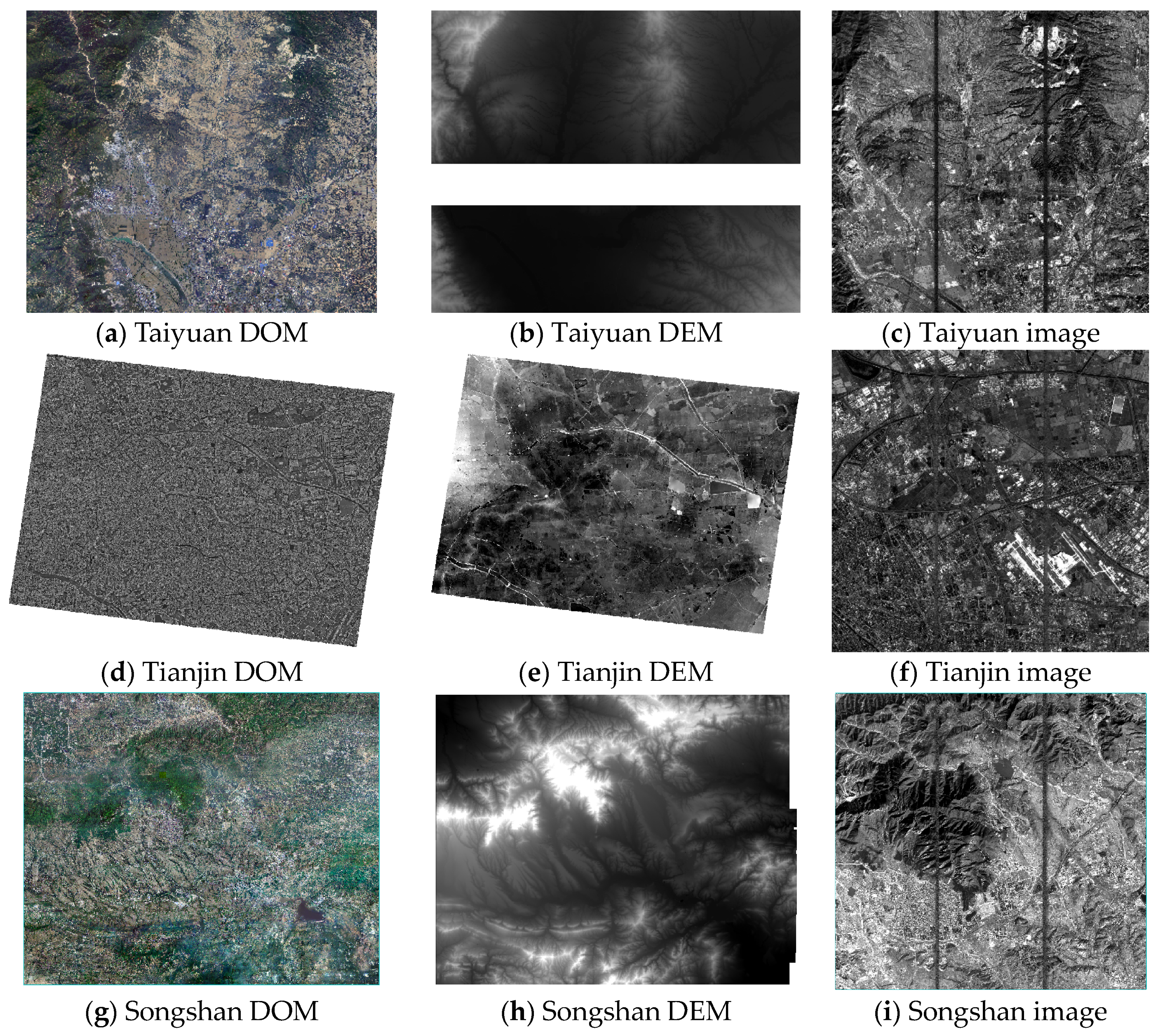

3.1. Data Sources

3.2. CCD Splicing Accuracy Verification

3.3. Geometric Positioning Accuracy Verification

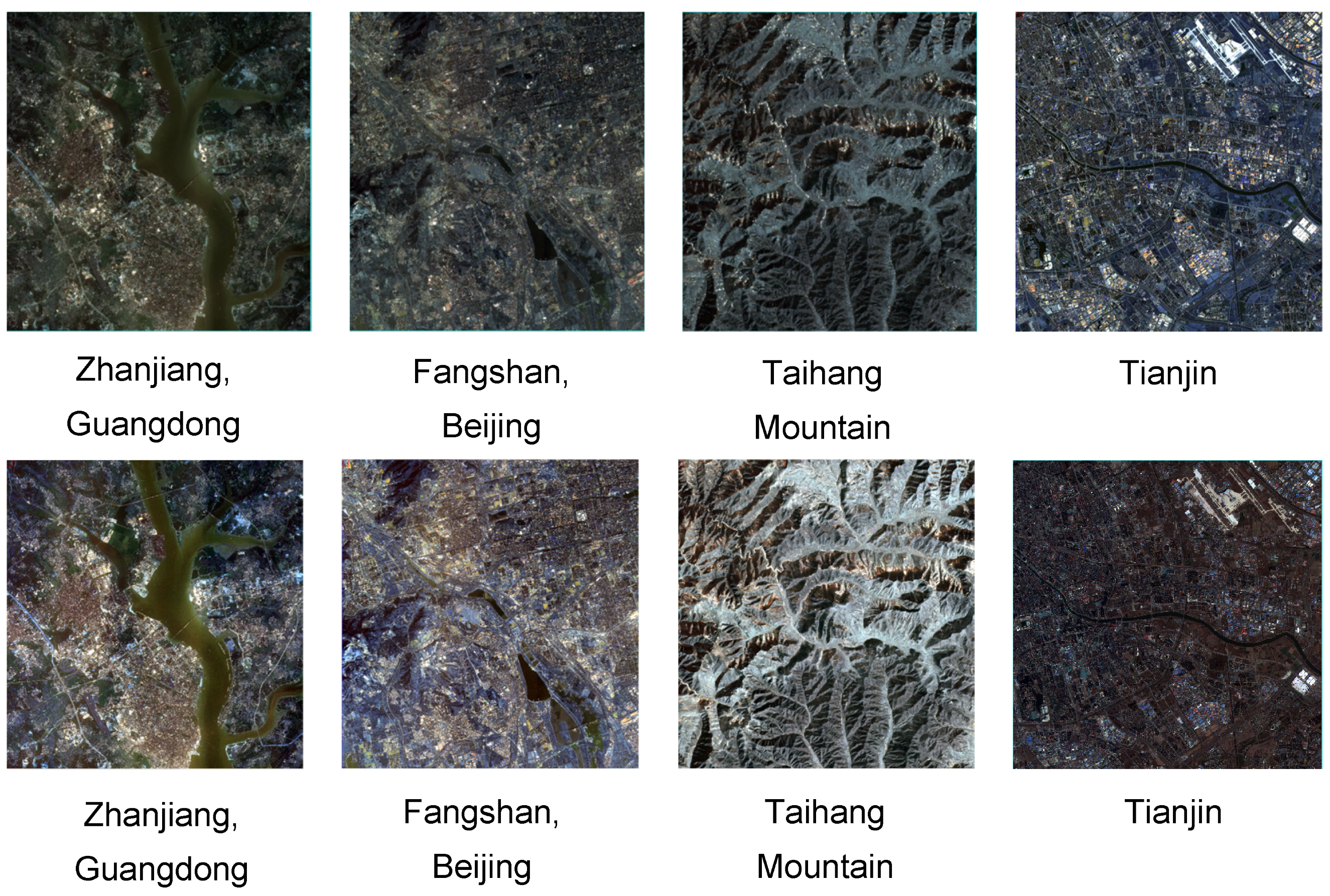

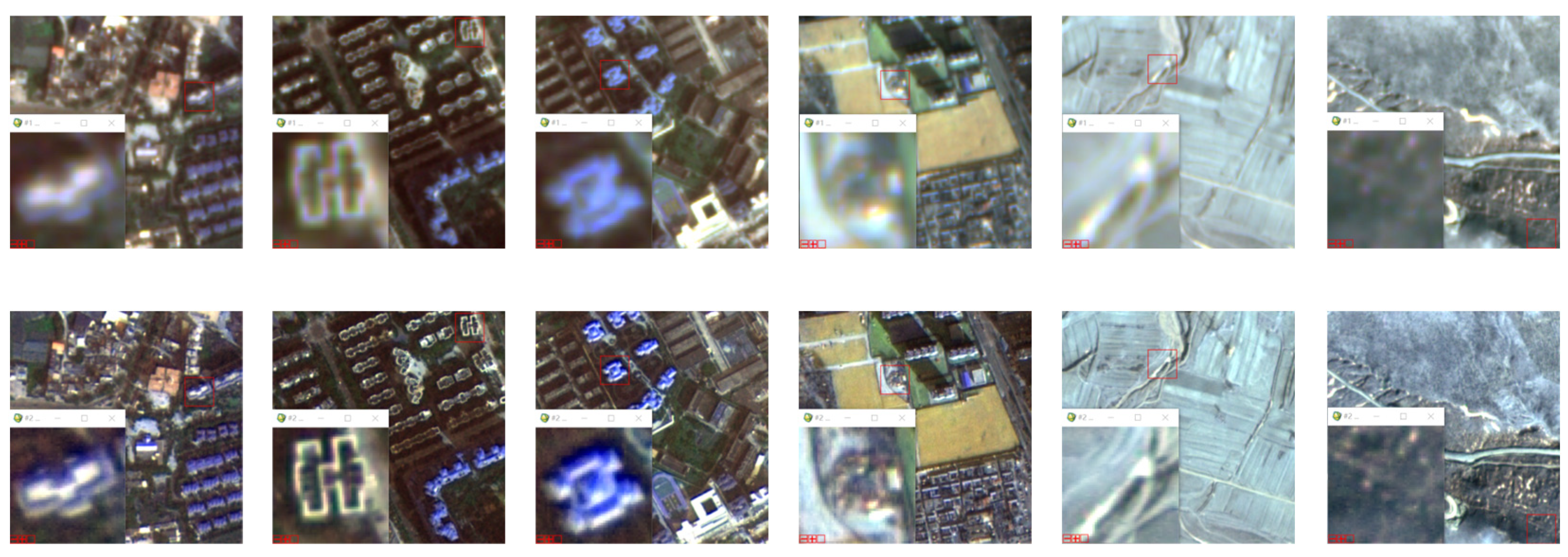

3.4. Consistent Registration Accuracy Validation for Different Spectral Bands

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Location | Date | Satellite Side Swing (°) | Average Elevation (m) |
|---|---|---|---|
| Tianjin (Calibrated images) | 23 November 2021 | 0.2 | −3 |
| Songshan in Henan (Calibrated images) | 2 December 2021 | 7.04 | 526 |
| Taiyuan in Shanxi (Calibrated images) | 21 November 2021 | 1.24 | 323 |

| Location | Date | Satellite Side Swing (°) | Average Elevation (m) |
|---|---|---|---|
| Tianjin Calibration Field | 21 November 2021 | 0.2 | −3 |
| Fangshan, Beijing | 4 January 2022 | −4.83 | 202 |
| Taihangshan | 3 January 2022 | 3.13 | 1076 |
| Zhanjiang, Guangdong | 13 December 2021 | 3.29 | 4 |
| Beichen, Tianjin | 15 December 2021 | −13.62 | −2 |

| Location | Type of Calibration | Neighboring CCD Connection Point Accuracy (Pixels) |
|---|---|---|
| Tianjin (Calibration Field) | Panchromatic individual calibration | 0.082 |
| | Multispectral individual calibration | 0.083 |
| | Joint panchromatic and multispectral calibration | 0.083 |
| Fangshan, Beijing | Panchromatic individual calibration | 0.12 |
| | Multispectral individual calibration | 0.14 |
| | Joint panchromatic and multispectral calibration | 0.15 |
| Taihangshan | Panchromatic individual calibration | 0.23 |
| | Multispectral individual calibration | 0.27 |
| | Joint panchromatic and multispectral calibration | 0.26 |
| Zhanjiang, Guangdong | Panchromatic individual calibration | 0.094 |
| | Multispectral individual calibration | 0.1 |
| | Joint panchromatic and multispectral calibration | 0.13 |

| Location | Type of Calibration | Positioning Accuracy Without Control (m) | Positioning Accuracy With Control (m) | Number of Checkpoints |
|---|---|---|---|---|
| Tianjin (Calibrated field) | Panchromatic individual calibration | 0.69 | N/A | N/A |
| | Multispectral individual calibration | 1.12 | N/A | N/A |
| | Joint panchromatic and multispectral calibration | 0.71 | N/A | N/A |
| Fangshan, Beijing | Panchromatic individual calibration | 18.73 | 0.96 | 18 |
| | Multispectral individual calibration | 21.63 | 1.16 | 18 |
| | Joint panchromatic and multispectral calibration | 18.82 | 0.98 | 18 |
| Taihangshan | Panchromatic individual calibration | 19.83 | 1.02 | 6 |
| | Multispectral individual calibration | 21.10 | 1.43 | 6 |
| | Joint panchromatic and multispectral calibration | 19.85 | 1.12 | 6 |
| Zhanjiang, Guangdong | Panchromatic individual calibration | 4.28 | 0.73 | 21 |
| | Multispectral individual calibration | 5.98 | 1.23 | 21 |
| | Joint panchromatic and multispectral calibration | 4.32 | 0.89 | 21 |
| Beichen, Tianjin | Panchromatic individual calibration | 34.14 | 0.92 | 11 |
| | Multispectral individual calibration | 37.03 | 1.46 | 11 |
| | Joint panchromatic and multispectral calibration | 35.12 | 0.97 | 11 |
| Songshan, Henan | Panchromatic individual calibration | 3.86 | 0.85 | 843 |
| | Multispectral individual calibration | 4.13 | 1.43 | 432 |
| | Joint panchromatic and multispectral calibration | 3.89 | 0.93 | 843 |
| Taiyuan, Shanxi | Panchromatic individual calibration | 3.63 | 0.65 | 876 |
| | Multispectral individual calibration | 3.74 | 1.06 | 412 |
| | Joint panchromatic and multispectral calibration | 3.68 | 0.79 | 876 |

| Location | Type of Band | Band-to-Band Registration Accuracy, Min (Pixels) | Band-to-Band Registration Accuracy, Max (Pixels) | Band-to-Band Registration Accuracy, RMSE (Pixels) |
|---|---|---|---|---|
| Tianjin | Pan–Multi | 0.001 | 0.35 | 0.14 |
| | Multi–Multi | 0.001 | 0.27 | 0.08 |
| Fangshan, Beijing | Pan–Multi | 0.004 | 0.32 | 0.21 |
| | Multi–Multi | 0.002 | 0.17 | 0.12 |
| Taihangshan | Pan–Multi | 0.004 | 0.56 | 0.23 |
| | Multi–Multi | 0.001 | 0.31 | 0.11 |
| Zhanjiang, Guangdong | Pan–Multi | 0.004 | 0.43 | 0.16 |
| | Multi–Multi | 0.002 | 0.53 | 0.10 |
| Songshan, Henan | Pan–Multi | 0.003 | 0.57 | 0.20 |
| | Multi–Multi | 0.002 | 0.46 | 0.13 |
| Taiyuan, Shanxi | Pan–Multi | 0.003 | 0.53 | 0.24 |
| | Multi–Multi | 0.002 | 0.24 | 0.12 |
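
The band-to-band registration table reports a minimum, maximum, and RMSE offset in pixels for each band pair. As a minimal sketch of how such statistics could be derived from matched points, the snippet below assumes each offset is the Euclidean distance between a point in the reference band and its match in the other band. That definition, the function name `registration_stats`, and the sample coordinates are all illustrative assumptions and are not taken from the article.

```python
import math


def registration_stats(ref_pts, tgt_pts):
    """Return (min, max, rmse) of per-point offsets in pixels.

    Assumption: the offset of a matched pair is the Euclidean distance
    between its (x, y) image coordinates in the two bands.
    """
    if not ref_pts or len(ref_pts) != len(tgt_pts):
        raise ValueError("point lists must be non-empty and of equal length")
    offsets = [
        math.hypot(xr - xt, yr - yt)
        for (xr, yr), (xt, yt) in zip(ref_pts, tgt_pts)
    ]
    # RMSE is the square root of the mean squared offset.
    rmse = math.sqrt(sum(d * d for d in offsets) / len(offsets))
    return min(offsets), max(offsets), rmse


# Hypothetical matched points (not data from the article).
pan = [(10.0, 20.0), (30.0, 40.0), (50.0, 60.0)]
ms = [(10.3, 20.4), (30.0, 40.0), (50.0, 60.0)]

lo, hi, rmse = registration_stats(pan, ms)
print(f"min={lo:.3f} max={hi:.3f} rmse={rmse:.3f}")
```

Only the first pair in the sample is displaced, so the minimum is zero and the RMSE falls below the maximum. The same pattern of a small minimum and an RMSE well under the maximum appears in the table rows.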

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, X.; Zhang, X.; Liu, M.; Tian, J. Joint Panchromatic and Multispectral Geometric Calibration Method for the DS-1 Satellite. Remote Sens. 2024, 16, 433. https://doi.org/10.3390/rs16020433
