Disparity Refinement for Stereo Matching of High-Resolution Remote Sensing Images Based on GIS Data

Abstract

1. Introduction

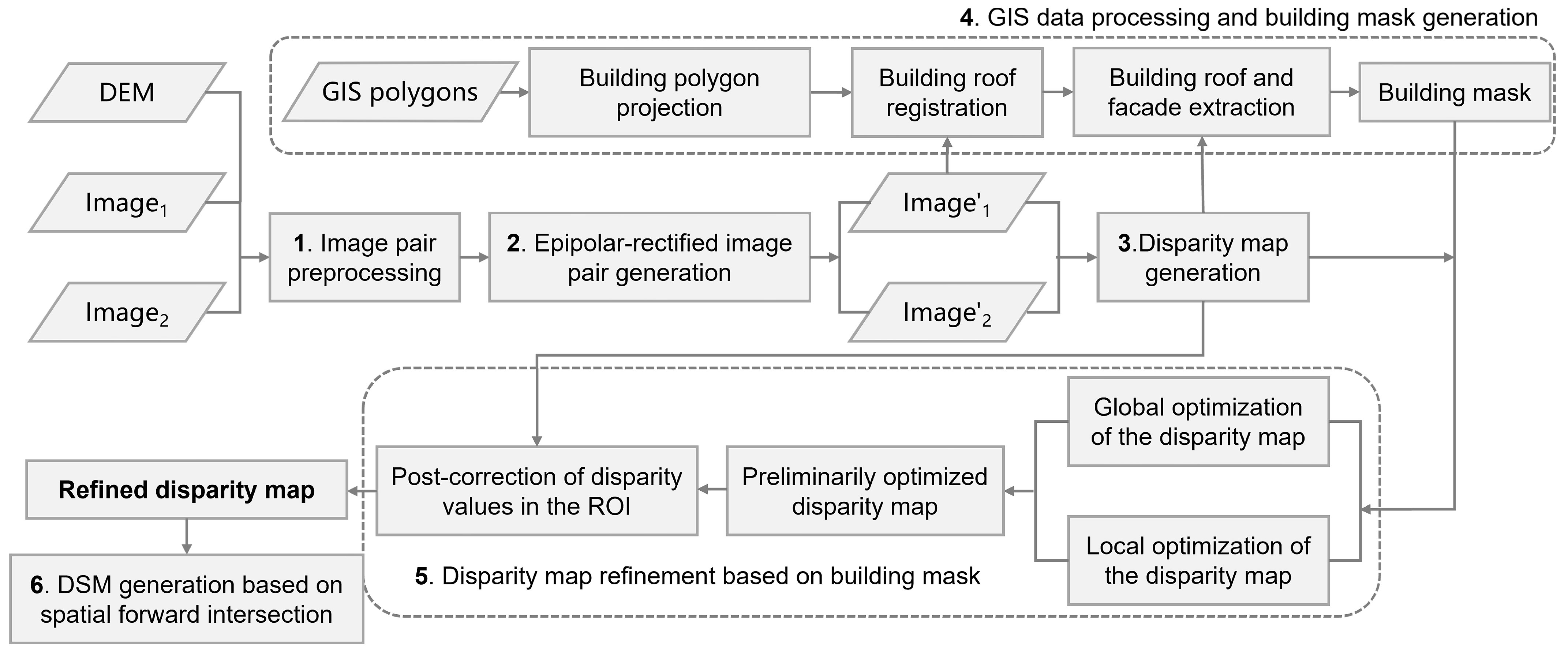

2. Materials and Methods

2.1. Image Pair Correction Processing and Initial Disparity Map Generation

2.2. GIS Vector Data Processing and Building Mask Generation

1. Building polygon projection. First, we extract every building vector that falls within the geographic extent of the processing area from the GIS data, including its vertex coordinates and building size. Then, using the DEM, the refined RPCs, and the epipolar rectification parameters, we project each building polygon into the image space of the epipolar-rectified image. This yields the initial building mask of the epipolar-rectified image.

- 2.

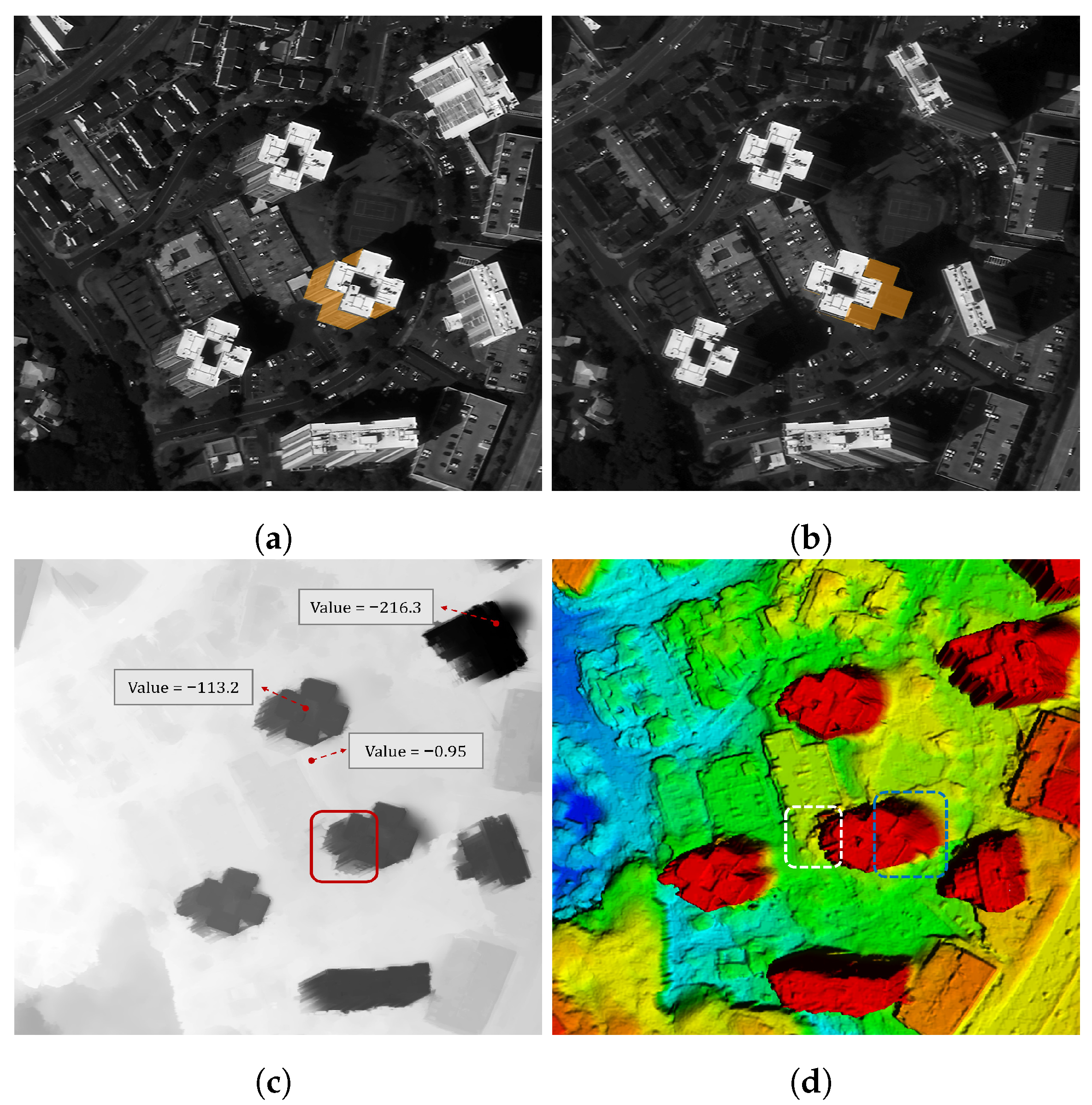

- Multi-modal and multi-building matching. For each building mask, we employ the multi-modal matching algorithm [29] to align the mask with the corresponding building roof in the epipolar-rectified image, and the registered building mask is denoted as . Moreover, to enhance the stability of the matching results, we crop the epipolar-rectified image based on the position of the building mask in , preserving solely the image content around the building target to mitigate the interference of external information in the image. Then, we check the registration accuracy after the building mask is rectified using the matching offset obtained from the multi-modal matching method. An example is illustrated in Figure 3. As shown in Figure 3a, noticeable offsets exist between the original building polygons and their corresponding building roofs in the image, and the offsets of each polygon are inconsistent. Moreover, these offsets may even exceed 100 pixels, posing significant challenges for the matching work. After registration using our method, the polygons are adjusted to align with the positions of the building roofs, as shown in Figure 3b.

3. Building facade extraction. Based on the registered building mask and the RPCs, we calculate the offset of each building footprint relative to its roof in the image, using the disparity values of the building roof and of the surrounding ground points in the initial disparity map. The process has three steps: (1) obtain the disparity values of the roof and of the ground; (2) use the RPCs and the disparity values from step (1) to estimate the roof height and the ground height; and (3) assign these two heights to the same point in object space and calculate the resulting offset of the building footprint relative to the roof. As a result, we obtain the building footprint mask in the epipolar-rectified image. By analyzing the offset between the registered roof mask and the footprint mask, we obtain the building facade mask in the image. Note that, to limit the adverse effect of mismatched points on the offset calculation, only pixels with high disparity confidence are used. The confidence of each pixel's disparity value therefore needs to be determined in advance.

- 4.

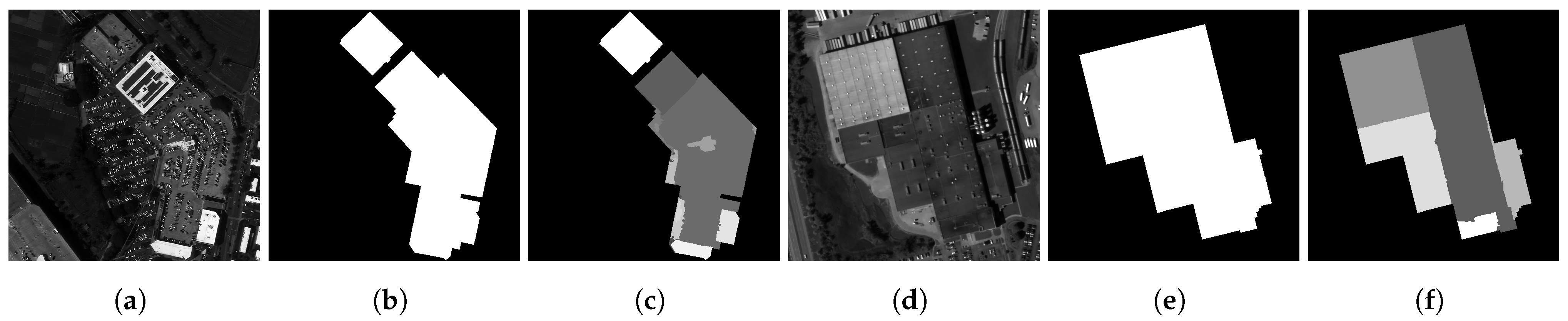

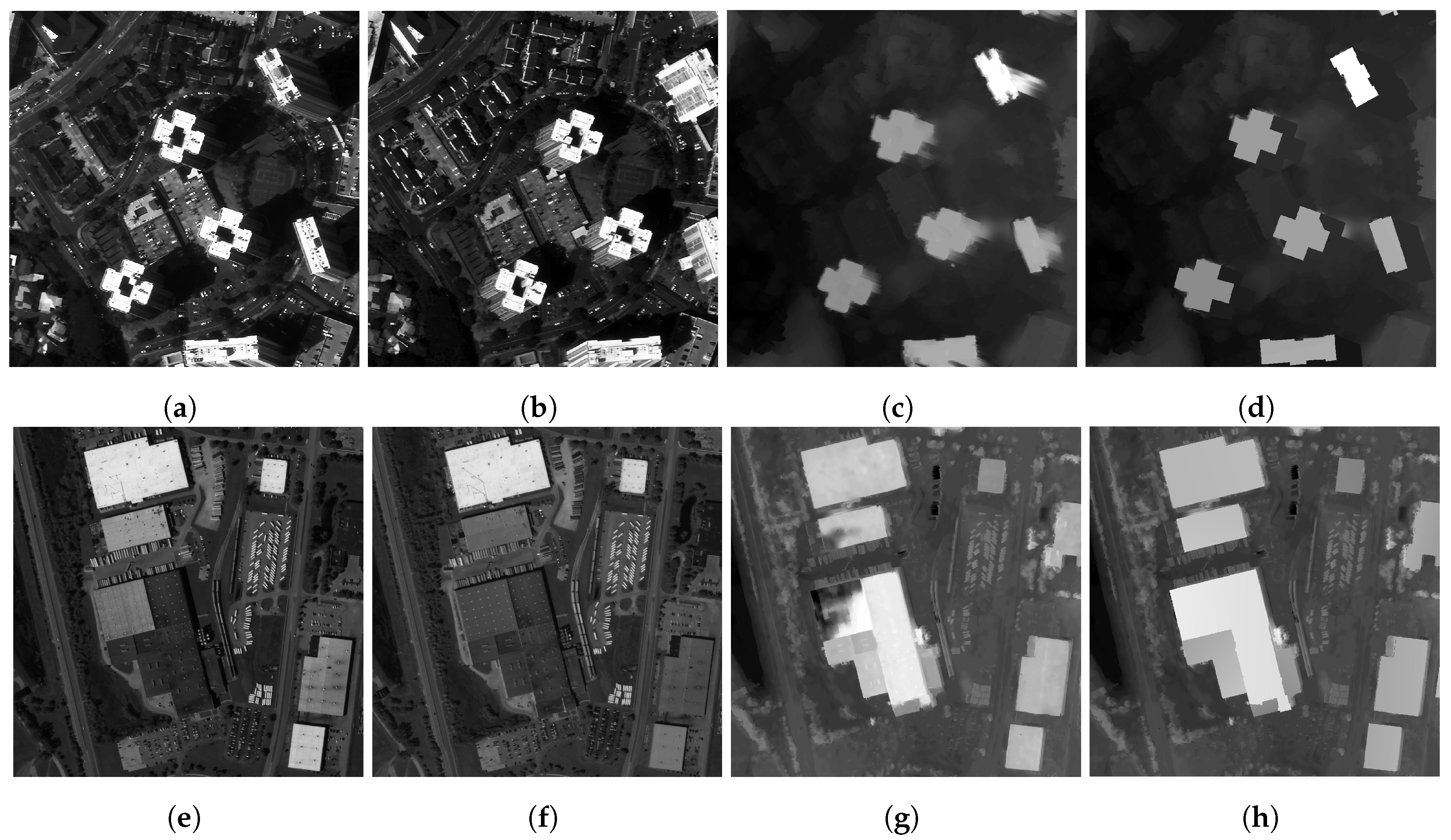

- Disparity-based building mask segmentation. Given that open-source building vectors provide only basic footprint shape information and are inadequate for the accurate reconstruction of buildings with complex roof structures, we perform a secondary segmentation on the building mask. We employ a statistical region merging-based segmentation method to extract multiple level height planes within each based on the disparity distribution within the roof superpixel [30]. For any two regions, and , the merging criterion is defined as follows:where . represents the number of pixels in the image area R. g denotes the gray level of the input data(usually set to 256). Q is employed to evaluate the possibility of merging two regions. This parameter plays a crucial role in controlling the number of regions in the segmentation result. Given that the data input in this paper is confined to a narrow range, primarily encompassing the roof, the scenario is relatively simple. In practical applications, we set Q to a smaller value, specifically 20. If P is true, the two regions will be merged, otherwise, they will remain separate. Figure 4 gives an example of disparity-based building mask segmentation. The building roofs illustrated in Figure 4a,d exhibit complex multi-layer structures. However, the existing building vector data merely label them into two polygons, as shown in Figure 4b,e. Through the secondary segmentation of the building roof vector based on disparity distribution, we obtain the roof mask that more accurately represents the building height, as shown in Figure 4c,f.

5. Building mask generation. Finally, by merging the facade mask of each building vector with its secondarily segmented roof mask, we derive the mask covering all buildings within the extent of the epipolar-rectified image.
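Steps 3 and 5 can be illustrated with a minimal sketch. It assumes the footprint offset relative to the roof is already known as an integer pixel shift, and it approximates the facade as the area swept between the roof and the footprint; that sweep rule, the function names `shift_mask` and `facade_and_building_masks`, and the parameters `dx` and `dy` are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def shift_mask(mask, dx, dy):
    """Shift a boolean mask by (dx, dy) pixels; vacated pixels become False."""
    h, w = mask.shape
    out = np.zeros_like(mask)
    if abs(dx) >= w or abs(dy) >= h:
        return out  # shifted completely out of the image
    src_rows = slice(max(0, -dy), min(h, h - dy))
    dst_rows = slice(max(0, dy), min(h, h + dy))
    src_cols = slice(max(0, -dx), min(w, w - dx))
    dst_cols = slice(max(0, dx), min(w, w + dx))
    out[dst_rows, dst_cols] = mask[src_rows, src_cols]
    return out


def facade_and_building_masks(roof, dx, dy):
    """Return (footprint, facade, building) masks for one building.

    roof   : boolean roof mask in the epipolar-rectified image
    dx, dy : assumed integer offset of the footprint relative to the roof
    """
    roof = np.asarray(roof, dtype=bool)
    footprint = shift_mask(roof, dx, dy)

    # Assumption: the facade is the area swept while sliding the roof
    # towards the footprint, excluding the roof itself.
    steps = max(abs(dx), abs(dy))
    swept = np.zeros_like(roof)
    for k in range(1, steps + 1):
        swept |= shift_mask(roof, round(dx * k / steps), round(dy * k / steps))
    facade = swept & ~roof

    # Step 5: the building mask merges the roof and facade masks.
    building = roof | facade
    return footprint, facade, building
```

In a full pipeline the roof part would carry the per-plane labels from the secondary segmentation rather than a single boolean value, so the merged result would be a label map instead of a binary mask.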

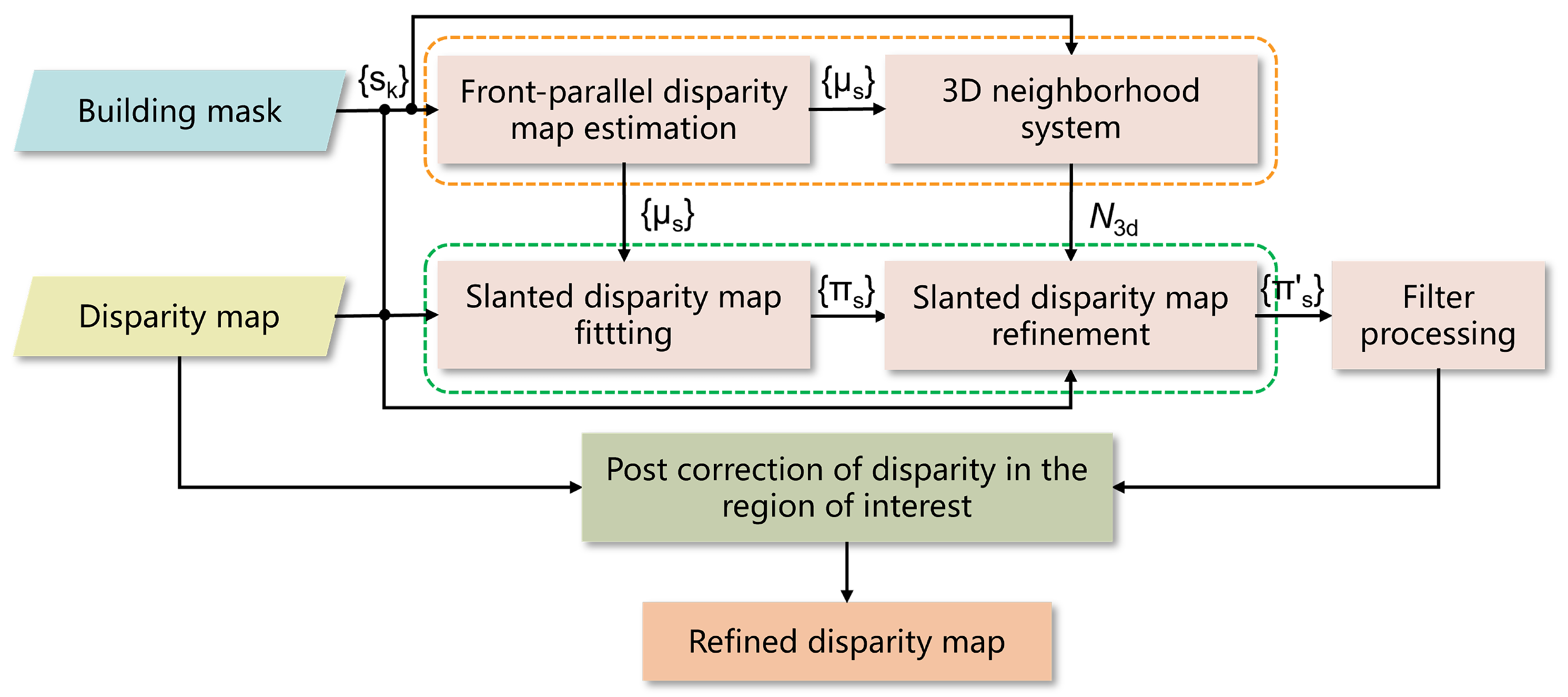
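The merging test used in the disparity-based segmentation (step 4 above) can be sketched as follows. The sketch follows the commonly used form of the statistical region merging predicate from [30], in which two regions are merged when the difference of their mean values does not exceed a size-dependent bound. The defaults g = 256 and Q = 20 come from the text; the choice of δ, the bound on the number of regions of a given size, and the names `srm_bound`, `should_merge`, and `n_image_pixels` are assumptions for illustration and may differ from the authors' implementation.

```python
import math


def srm_bound(n_pixels, n_image_pixels, g=256.0, q=20.0):
    """Deviation bound b(R) for a region R containing n_pixels pixels."""
    # Assumption: delta = 1 / (6 |I|^2), a common choice for SRM.
    delta = 1.0 / (6.0 * n_image_pixels ** 2)
    # Assumption: the log of the number of regions with |R| pixels is
    # bounded above by min(g, |R|) * ln(|R| + 1).
    log_cardinality = min(g, n_pixels) * math.log(n_pixels + 1.0)
    return g * math.sqrt(
        (log_cardinality - math.log(delta)) / (2.0 * q * n_pixels)
    )


def should_merge(mean_a, n_a, mean_b, n_b, n_image_pixels, g=256.0, q=20.0):
    """Predicate P: True if the two regions should be merged."""
    b_a = srm_bound(n_a, n_image_pixels, g, q)
    b_b = srm_bound(n_b, n_image_pixels, g, q)
    return abs(mean_a - mean_b) <= math.sqrt(b_a ** 2 + b_b ** 2)
```

Here `mean_a` and `mean_b` would be the mean disparity values of the two regions after scaling to the g gray levels. The bound shrinks as regions grow, so large roof planes with clearly different disparities stay separate while small noisy fragments are absorbed.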

2.3. Disparity Map Refinement Based on Building Mask

2.4. Study Area and Data Preparation

3. Results

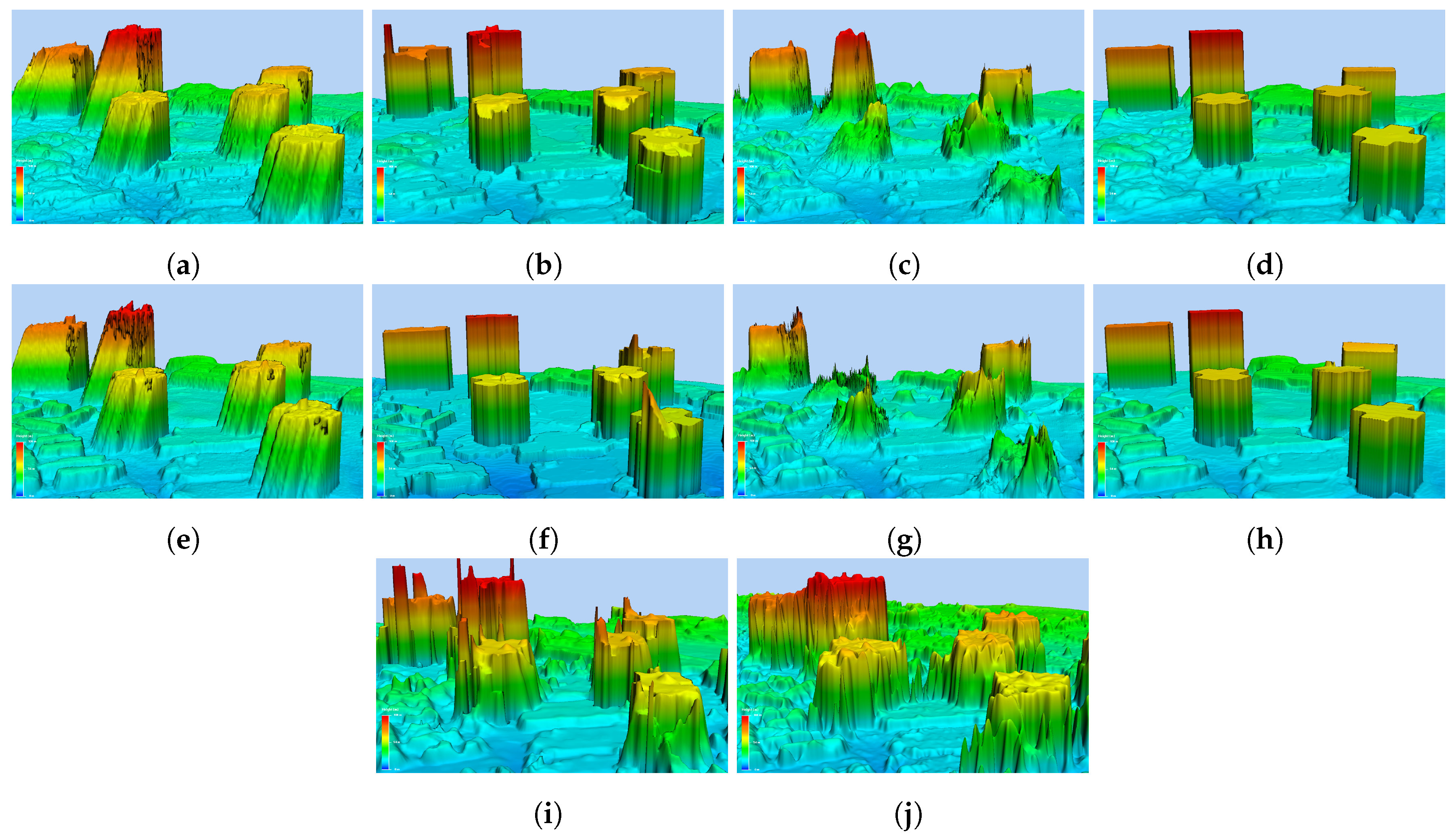

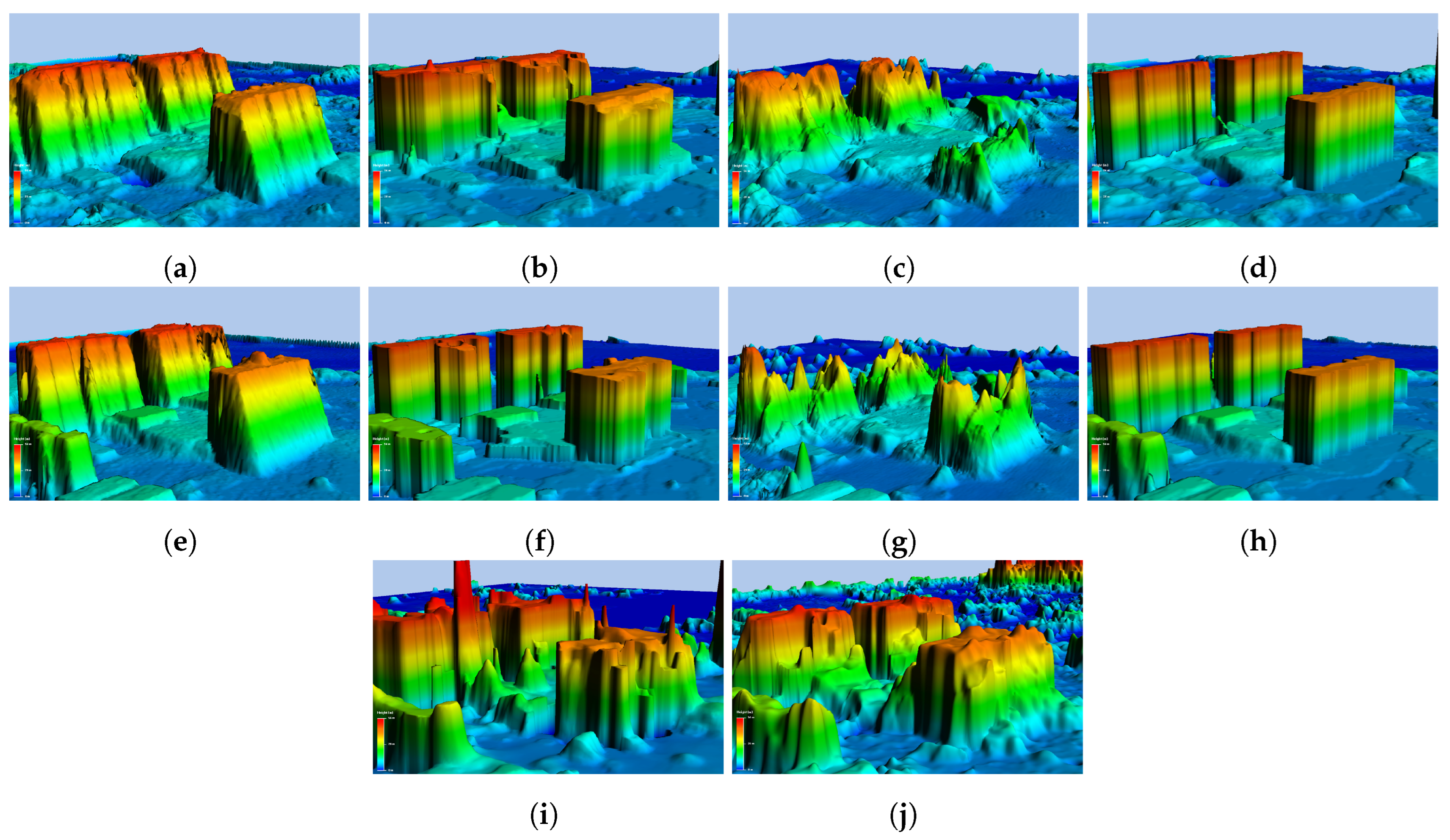

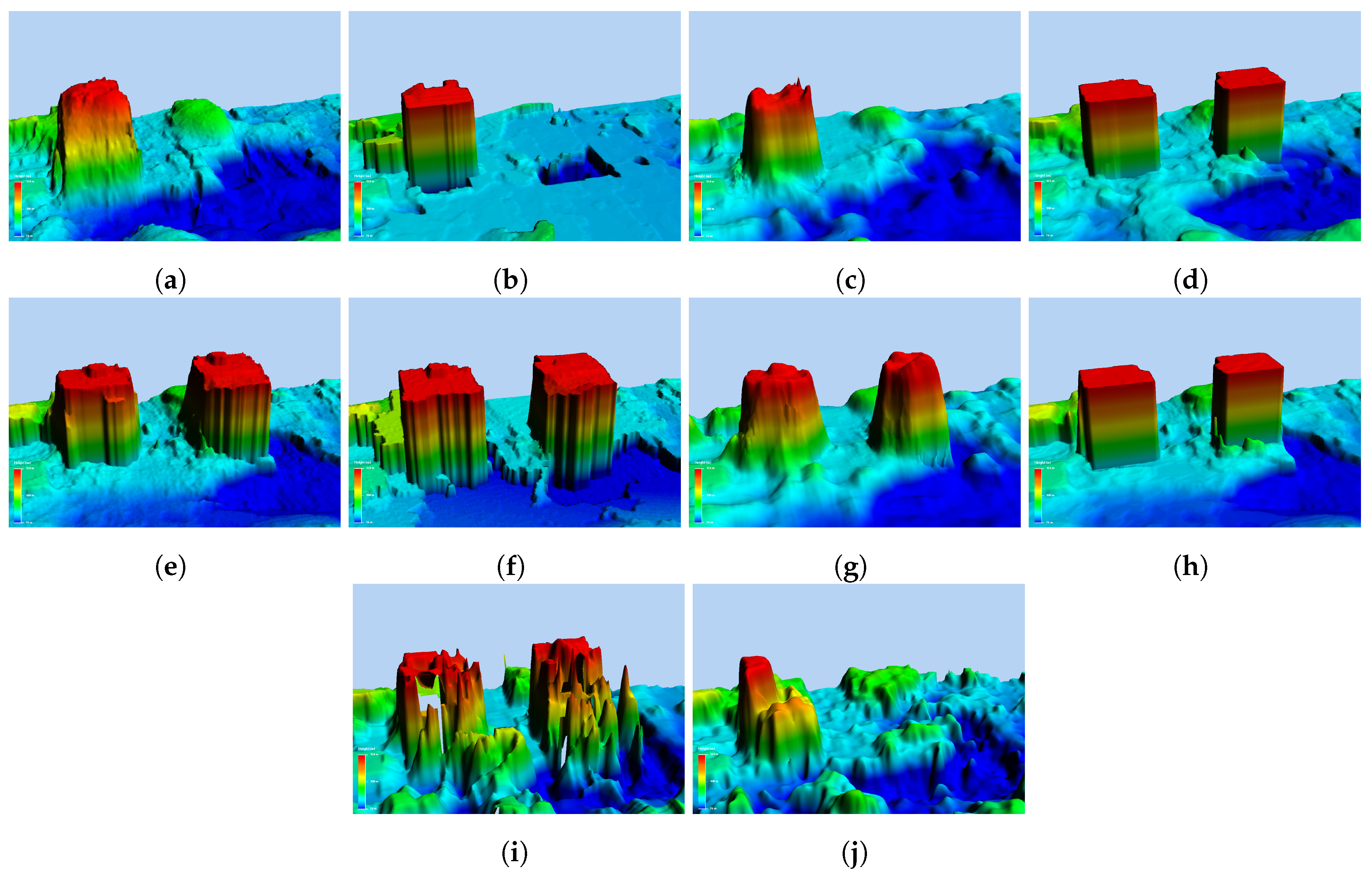

3.1. Comparison of Disparity Refinement Experimental Results

3.2. Comparison of the Accuracy of DSM Generated by Different Methods

4. Discussion

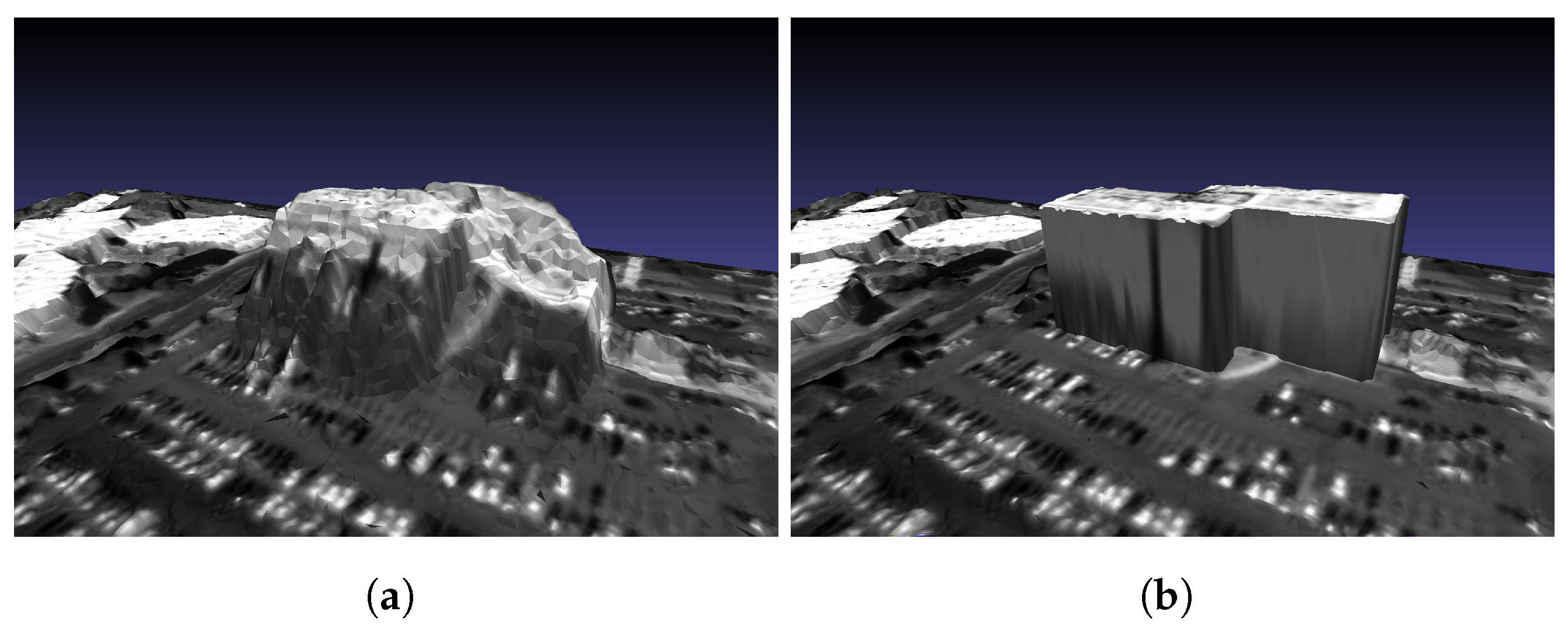

4.1. 3D Building Model Generation

4.2. Limitations

5. Conclusions

1. Replacing image segmentation information with open-source GIS data improves the precision and regularity of building shapes, which contributes to more accurate building models.

2. A method for matching GIS vectors with remote sensing images is proposed to address the offsets between building footprints and their imaged locations.

3. A preliminary two-layer optimization of the disparity map, combined with disparity post-correction of the building facades, refines the disparity values of building targets, particularly those within the building facades. This addresses the inaccurate disparity estimation for super high-rise buildings.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Hirschmüller, H. Accurate and Efficient Stereo Processing by Semi-Global Matching and Mutual Information. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005.

2. Shahbazi, M.; Sohn, G.; Theau, J. High-density stereo image matching using intrinsic curves. ISPRS J. Photogramm. Remote Sens. 2018, 146, 373–388.

3. Tan, X.; Sun, C.; Pham, T.D. Stereo matching based on multi-direction polynomial model. Signal Process. Image Commun. Publ. Eur. Assoc. Signal Process. 2016, 44, 44–56.

4. Zhan, Y.; Gu, Y.; Huang, K.; Zhang, C.; Hu, K. Accurate Image-Guided Stereo Matching With Efficient Matching Cost and Disparity Refinement. IEEE Trans. Circuits Syst. Video Technol. 2016, 26, 1632–1645.

5. Tulyakov, S.; Ivanov, A.; Fleuret, F. Practical Deep Stereo (PDS): Toward applications-friendly deep stereo matching. Adv. Neural Inf. Process. Syst. 2018, 31, 5871–5881.

6. Guo, X.; Yang, K.; Yang, W.; Wang, X.; Li, H. Group-Wise Correlation Stereo Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 15–20 June 2019; pp. 3273–3282.

7. Zbontar, J.; LeCun, Y. Stereo matching by training a convolutional neural network to compare image patches. J. Mach. Learn. Res. 2016, 17, 2287–2318.

8. Schuster, R.; Wasenmüller, O.; Unger, C.; Stricker, D. SDC-stacked dilated convolution: A unified descriptor network for dense matching tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 15–20 June 2019; pp. 2556–2565.

9. Tao, R.; Xiang, Y.; You, H. A Confidence-Aware Cascade Network for Multi-Scale Stereo Matching of Very-High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 1667.

10. Egnal, G.; Mintz, M.; Wildes, R.P. A stereo confidence metric using single view imagery with comparison to five alternative approaches. Image Vis. Comput. 2004, 22, 943–957.

11. Jang, W.S.; Ho, Y.S. Discontinuity preserving disparity estimation with occlusion handling. J. Vis. Commun. Image Represent. 2014, 25, 1595–1603.

12. Banno, A.; Ikeuchi, K. Disparity map refinement and 3D surface smoothing via Directed Anisotropic Diffusion. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision Workshops, ICCV Workshops, Kyoto, Japan, 27 September–4 October 2009; pp. 1870–1877.

13. Huang, X.; Zhang, Y.J. An O(1) disparity refinement method for stereo matching. Pattern Recognit. 2016, 55, 198–206.

14. Mei, X.; Sun, X.; Zhou, M.; Jiao, S.; Zhang, X. On building an accurate stereo matching system on graphics hardware. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops, Barcelona, Spain, 6–13 November 2011; pp. 467–474.

15. Ma, Z.; He, K.; Wei, Y.; Sun, J.; Wu, E. Constant Time Weighted Median Filtering for Stereo Matching and Beyond. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013.

16. Yan, T.; Gan, Y.; Xia, Z.; Zhao, Q. Segment-Based Disparity Refinement With Occlusion Handling for Stereo Matching. IEEE Trans. Image Process. 2019, 28, 3885–3897.

17. Zhang, Y.; Zhang, Z.; Gong, J. Generalized photogrammetry of spaceborne, airborne and terrestrial multisource remote sensing datasets. Acta Geod. Cartogr. Sin. 2021, 50, 11.

18. Cao, Y.; Huang, X. A deep learning method for building height estimation using high-resolution multi-view imagery over urban areas: A case study of 42 Chinese cities. Remote Sens. Environ. 2021, 264, 112590.

19. Qi, F.; Zhai, J.Z.; Dang, G. Building height estimation using Google Earth. Energy Build. 2016, 118, 123–132.

20. Liu, C.; Huang, X.; Wen, D.; Chen, H.; Gong, J. Assessing the quality of building height extraction from ZiYuan-3 multi-view imagery. Remote Sens. Lett. 2017, 8, 907–916.

21. Wang, J.; Hu, X.; Meng, Q.; Zhang, L.; Wang, C.; Liu, X.; Zhao, M. Developing a Method to Extract Building 3D Information from GF-7 Data. Remote Sens. 2021, 13, 4532.

22. Dong, Y.; Li, Q.; Dou, A.; Wang, X. Extracting damages caused by the 2008 Ms 8.0 Wenchuan earthquake from SAR remote sensing data. J. Asian Earth Sci. 2011, 40, 907–914.

23. Pan, H.B.; Zhang, G.; Chen, T. A general method of generating satellite epipolar images based on RPC model. In Proceedings of the 2011 IEEE International Geoscience and Remote Sensing Symposium, IGARSS 2011, Vancouver, BC, Canada, 24–29 July 2011.

24. Xiong, Z.; Zhang, Y. Bundle Adjustment With Rational Polynomial Camera Models Based on Generic Method. IEEE Trans. Geosci. Remote Sens. 2011, 49, 190–202.

25. Wang, X.; Wang, F.; Xiang, Y.; You, H. A General Framework of Remote Sensing Epipolar Image Generation. Remote Sens. 2021, 13, 4539.

26. Liao, P.; Chen, G.; Zhang, X.; Zhu, K.; Gong, Y.; Wang, T.; Li, X.; Yang, H. A linear pushbroom satellite image epipolar resampling method for digital surface model generation. ISPRS J. Photogramm. Remote Sens. 2022, 190, 56–68.

27. Brox, T.; Malik, J. Large Displacement Optical Flow: Descriptor Matching in Variational Motion Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 500–513.

28. Shen, Z.; Dai, Y.; Rao, Z. CFNet: Cascade and Fused Cost Volume for Robust Stereo Matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13906–13915.

29. Xiang, Y.; Tao, R.; Wan, L.; Wang, F.; You, H. OS-PC: Combining feature representation and 3-D phase correlation for subpixel optical and SAR image registration. IEEE Trans. Geosci. Remote Sens. 2020, 58, 6451–6466.

30. Nock, R.; Nielsen, F. Statistical region merging. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 1452.

31. Yamaguchi, K.; Hazan, T.; McAllester, D.; Urtasun, R. Continuous markov random fields for robust stereo estimation. In Proceedings of the Computer Vision–ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Proceedings, Part V 12. Springer: Berlin/Heidelberg, Germany, 2012; pp. 45–58.

32. Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

33. Ni, K.; Jin, H.; Dellaert, F. GroupSAC: Efficient consensus in the presence of groupings. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 27 September–4 October 2009; pp. 2193–2200.

34. Ramm, F.; Topf, J.; Chilton, S. OpenStreetMap: Using and Enhancing the Free Map of the World; UIT Cambridge: Cambridge, UK, 2010.

35. Snyder, G.I. 3D Elevation Program—Summary of Program Direction; Center for Integrated Data Analytics Wisconsin Science Center: Madison, WI, USA, 2012.

36. Stucker, C.; Schindler, K. ResDepth: A deep residual prior for 3D reconstruction from high-resolution satellite images. ISPRS J. Photogramm. Remote Sens. 2022, 183, 560–580.

37. Imagery, A. Quick Terrain Modeler and Quick Terrain Reader, 2011. Available online: https://sensorsandsystems.com/quick-terrain-modeler-and-quick-terrain-reader (accessed on 1 December 2023).

38. Franchis, C.D.; Meinhardt-Llopis, E.; Michel, J.; Morel, J.M.; Facciolo, G. An automatic and modular stereo pipeline for pushbroom images. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 2, 49–56.

39. Facciolo, G.; Franchis, C.D.; Meinhardt, E. MGM: A Significantly More Global Matching for Stereovision. In Proceedings of the British Machine Vision Conference, Swansea, UK, 7–10 September 2015.

40. ENVI-IDL Technology Hall. Extract DSM and Point Cloud Data Based on SuperView-1 Stereo Pair Data in ENVI, 2022. Available online: https://www.cnblogs.com/enviidl/p/16595635.html (accessed on 5 January 2024).

| Method | RoI-1 MAE (m) | RoI-2 MAE (m) | RoI-3 MAE (m) | RoI-4 MAE (m) |
|---|---|---|---|---|
| LDOF (Hand-Crafted) | 2.20 | 1.86 | 2.55 | 5.58 |
| LDOF + SDR | 2.23 | 1.82 | 2.37 | 5.53 |
| LDOF + ResDepth | 1.74 | 1.89 | 2.52 | 5.14 |
| LDOF + Our method | 1.61 | 1.43 | 2.18 | 3.85 |
| CFNet (Deep-Learning) | 1.99 | 1.62 | 2.40 | 4.47 |
| CFNet + SDR | 2.22 | 1.27 | 2.39 | 5.20 |
| CFNet + ResDepth | 2.14 | 2.02 | 2.56 | 4.28 |
| CFNet + Our method | 1.59 | 1.17 | 2.01 | 3.71 |

| Method | RoI-1 MAE (m) | RoI-2 MAE (m) | RoI-3 MAE (m) | RoI-4 MAE (m) |
|---|---|---|---|---|
| MGM-s2p | 2.99 | 1.73 | 3.36 | 4.81 |
| ENVI | 3.40 | 1.88 | 3.46 | 5.71 |
| LDOF + Our method | 1.61 | 1.43 | 2.18 | 3.85 |
| CFNet + Our method | 1.59 | 1.17 | 2.01 | 3.71 |
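The MAE values in the tables above can be read as a mean absolute height difference, in metres, between a generated DSM and a reference DSM. The sketch below shows one way to compute such a value. It assumes both DSMs are co-registered arrays of the same shape and that invalid pixels are marked with NaN; the function name `dsm_mae` and these conventions are illustrative assumptions, not details taken from the experiments.

```python
import numpy as np


def dsm_mae(dsm, reference):
    """Mean absolute error (same unit as the inputs) over valid pixels."""
    dsm = np.asarray(dsm, dtype=float)
    reference = np.asarray(reference, dtype=float)
    # Assumption: NaN or infinite values mark pixels without a height.
    valid = np.isfinite(dsm) & np.isfinite(reference)
    if not valid.any():
        raise ValueError("no valid pixels to compare")
    return float(np.mean(np.abs(dsm[valid] - reference[valid])))
```

Restricting the comparison to pixels that are valid in both rasters keeps holes in either DSM from biasing the score.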


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, X.; Jiang, L.; Wang, F.; You, H.; Xiang, Y. Disparity Refinement for Stereo Matching of High-Resolution Remote Sensing Images Based on GIS Data. Remote Sens. 2024, 16, 487. https://doi.org/10.3390/rs16030487
