Abstract

This article offers a comprehensive AI-centric review of deep learning in exploring landslides with remote-sensing techniques, breaking new ground beyond traditional methodologies. We categorize deep learning tasks into five key frameworks—classification, detection, segmentation, sequence, and the hybrid framework—and analyze their specific applications in landslide-related tasks. Following the presented frameworks, we review state-or-art studies and provide clear insights into the powerful capability of deep learning models for landslide detection, mapping, susceptibility mapping, and displacement prediction. We then discuss current challenges and future research directions, emphasizing areas like model generalizability and advanced network architectures. Aimed at serving both newcomers and experts on remote sensing and engineering geology, this review highlights the potential of deep learning in advancing landslide risk management and preservation.

1. Introduction

Landslides, a critical global geohazard, present substantial risks to both local communities and natural environments [1]. Triggered by a combination of natural phenomena like heavy rainfall, seismic activities, and human-induced factors such as deforestation and construction, they lead to significant soil and rock displacement [2]. Their unpredictable and widespread nature makes landslides a concern worldwide, with severe consequences including loss of life, infrastructural damage, and lasting environmental harm [3]. This situation underscores the urgent need for advanced detecting, monitoring, prediction and management methods to effectively tackle the complex challenges associated with landslides.

Traditional methods in landslide analysis, including empirical, statistical, and physical modeling, have provided foundational insights [4]. However, these approaches have notable limitations. Empirical methods, dependent on historical data, often fall short in predicting unexpected landslide events, particularly under changing climatic conditions [5,6]. Statistical models are useful for general trend analysis but face challenges in addressing the nonlinear aspects of landslide triggers [7,8]. Physical models, while informative, tend to oversimplify the complex real-world scenarios, which can hinder the accuracy of prediction [9,10]. Moreover, these traditional methods are limited in their capacity to process extensive datasets and adapt to the dynamic, changing nature of environmental conditions [11]. With the increasing frequency and severity of landslides, exacerbated by climate change and human activities, there is a growing necessity for more sophisticated, adapted, and precise predictive tools.

The recent advancements in remote sensing technologies have significantly impacted landslide analysis [12,13]. The availability of high-resolution satellite imagery allows for the detailed observation of Earth’s surface changes over time, which is crucial in detecting early signs of landslides. Synthetic Aperture Radar (SAR) data facilitate this process with their ability to assess surface textures and changes, regardless of weather conditions, providing crucial information about slope stability and erosion [14]. Synthetic Aperture Radar Interferometry (InSAR), with its ability to measure millimeter-level surface movements, significantly contributes to landslide analysis by providing precise measurements of ground deformation and early detection of potential landslide areas [15]. Additionally, multispectral imagery, which collects data across various wavelengths, is useful for analyzing soil composition and moisture content, both critical factors in determining landslide susceptibility [16]. However, traditional methods have inherent drawbacks in harnessing the full potential of these data sources. Their limited ability to process and interpret the vast and complex datasets generated by modern remote sensing technologies highlights the need for more sophisticated analytical tools [17].

Since 2010s, deep learning, a subset of artificial intelligence, is revolutionizing the landslide analysis [18]. Its ability to process and analyze vast amounts of complex, multi-dimensional data sets it apart from traditional methods. Deep learning algorithms can identify intricate patterns in data that are often imperceptible to human analysts or conventional analytical techniques [19]. This capability extends to various types of landslides, such as rockfalls, debris slides, or complex movements, as long as these activities are adequately represented in the observation data, which includes analyzing satellite imagery [20,21], weather patterns [22,23], and geological data to predict potential landslide occurrences [24,25]. Unlike traditional models, deep learning systems can continuously learn and improve their predictive capabilities, adapting to new data and changing environmental conditions. This ability is pivotal for applications such as landslide detection, mapping, susceptibility analysis, and displacement prediction. Comparative studies have consistently demonstrated the superiority of deep learning over traditional models, making it an indispensable tool in modern landslide risk assessment and mitigation strategies [26,27,28]. In this article, we explore the main frameworks of deep learning applied in landslide studies with remote sensing data, specifically in the tasks of landslide detection, mapping, susceptibility analysis, and displacement prediction. Through these case studies, we aim to highlight the potential of deep learning in landslide risk management, and indicate both challenges and opportunities for future research.

The key contributions of this review include the following:

- (1)

- We provide a detailed overview of deep learning, tracing its development from early concepts to the latest advancements. This detailed overview establishes a robust foundation in deep learning principles, crucial for understanding its applications in landslide studies with remote sensing.

- (2)

- We categorize deep learning tasks into five frameworks—classification, detection, segmentation, sequence, and hybrid. This structure not only provides a clear understanding of the application of these methods in earth and environment studies but also offers a novel perspective for readers, especially beneficial for remote-sensing experts who are, however, new to AI.

- (3)

- Our review stands out by the AI-centric approach in examining deep learning applications to landslides. Instead of a general task-based analysis, we scrutinize how specific deep learning frameworks are adeptly applied to various landslide-related tasks. This focused perspective provides insights into which frameworks are more suitable for overcoming particular challenges in studying landslides.

- (4)

- We discuss current challenges and highlight potential future research directions, contributing to the ongoing evolution of applying deep learning to landslides.

This review is organized as follows: Section 2 provides an in-depth overview of deep learning methodologies. Section 3 summarizes five prevalent deep learning frameworks used in various fields. Section 4 reviews the latest studies on deep learning approaches for studying landslides with remote sensing, illustrated corresponding to these frameworks. Section 5 discusses the challenges and potential future directions in the field, exploring both the limitations and opportunities of current methods. Finally, Section 6 concludes the paper, summarizing the main findings and insights.

2. Deep Learning: Methods, Models, Loss, Evaluation Metrics, Architectural Modules, and Implementing Strategies

2.1. Methods

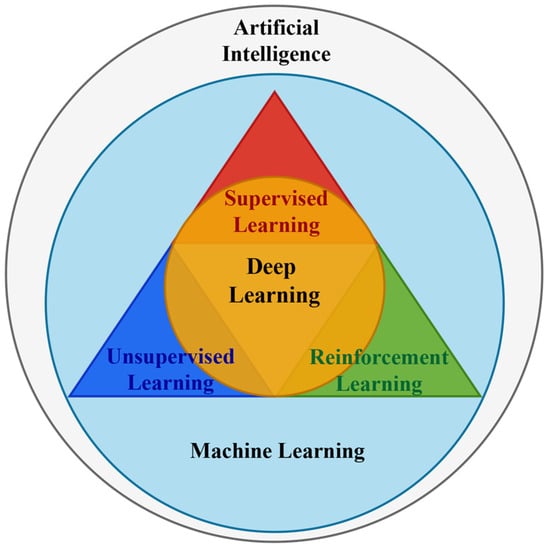

2.1.1. Framework of AI, ML, DL, and Learning Paradigms

In the evolution of artificial intelligence (AI), understanding the relationship between its key components—machine learning (ML), deep learning (DL), and fundamental learning paradigms—is crucial. AI, the overarching concept, encompasses the development of intelligent systems that mimic tasks typically requiring human intelligence. Within AI lies ML, which focuses on algorithms that learn from data to make predictions or decisions. Deep learning, a specialized branch of ML, employs deep neural networks for analyzing and learning from vast volumes of unstructured data, representing a more advanced stage of machine learning [19].

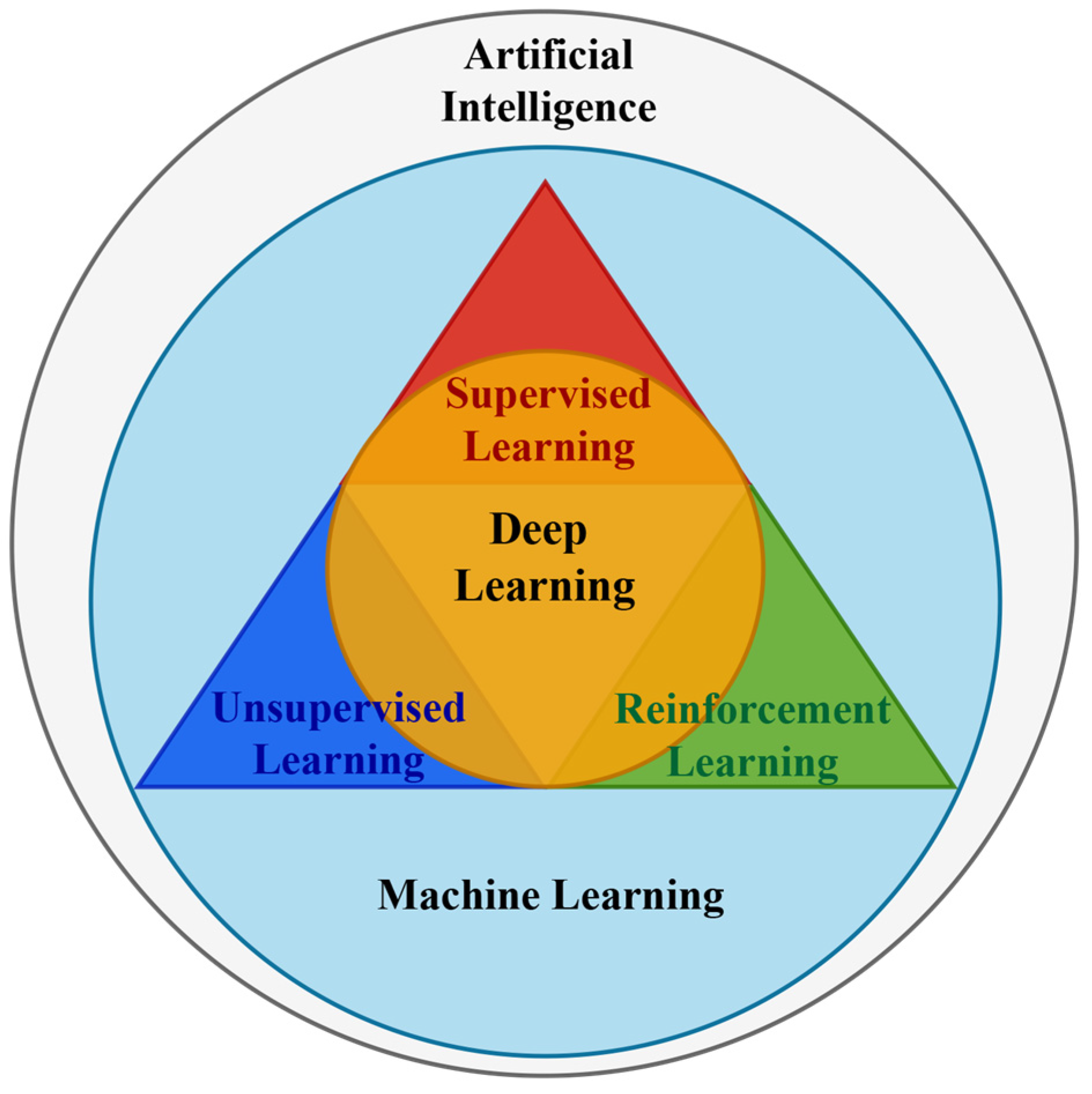

Crucially, both ML and DL encompass three key learning paradigms: supervised, unsupervised, and reinforcement learning, as shown in Figure 1. Each paradigm offers a distinct approach for training algorithms to analyze and learn from data. Therefore, while ML and DL primarily concentrate on the evolution of algorithms and data processing techniques, these learning paradigms provide the specific frameworks within which these technologies function and develop.

Figure 1.

Hierarchy of AI methods and learning paradigms.

2.1.2. Supervised Learning

Supervised learning, the most prevalent paradigm in ML, focuses on learning a latent function based on labeled input–output pairs. It infers a function from labeled training data, aiming to predict outcomes for unforeseen data. The typical supervised learning algorithm is represented as

where and represent the output and input, denotes the parameters of the model, and is the learned function. This paradigm is instrumental in fields like image and speech recognition, where the desired output is known, and the model is trained to emulate this mapping. In ML and DL, techniques such as linear regression, support vector machines (SVM), and convolutional neural networks (CNN) are classic examples. The strength of supervised learning is its effectiveness for specific, well-defined problems, but its reliance on extensive labeled datasets is a considerable limitation.

2.1.3. Unsupervised Learning

Different from supervised learning, unsupervised learning aims to identify patterns in data without pre-assigned labels. Its primary goal is to model the underlying structure or distribution of the data to gain deeper insights. Techniques in unsupervised learning vary widely. For example, clustering and dimensionality reduction methods like K-means and principal component analysis (PCA) are commonly used in ML for exploratory data analysis. In DL, approaches such as autoencoders are employed for more complex tasks like feature learning. Unsupervised learning is invaluable for discovering latent patterns in datasets but also faces challenges due to the subjective nature of its results and the difficulty in validating models without definitive labels.

2.1.4. Reinforcement Learning

Reinforcement learning is devised for training models to make decisions through trial-and-error interactions with an environment. This paradigm involves an agent learning to achieve a goal in a complex, uncertain environment by performing actions and observing the actions to verify their effectiveness. The learning process is guided by rewards and punishments, akin to how humans learn from real-world interactions [19]. Reinforcement learning is particularly effective in complex decision-making environments, such as gameplay, autonomous vehicles, and resource management, but it has limitations in balancing the trade-off between exploring new possibilities and exploiting known strategies, especially in scenarios with sparse or delayed rewards. The comparison of three learning paradigms is shown in Table 1.

Table 1.

Learning paradigm summarization.

2.2. Models

2.2.1. Introduction to Neural Network

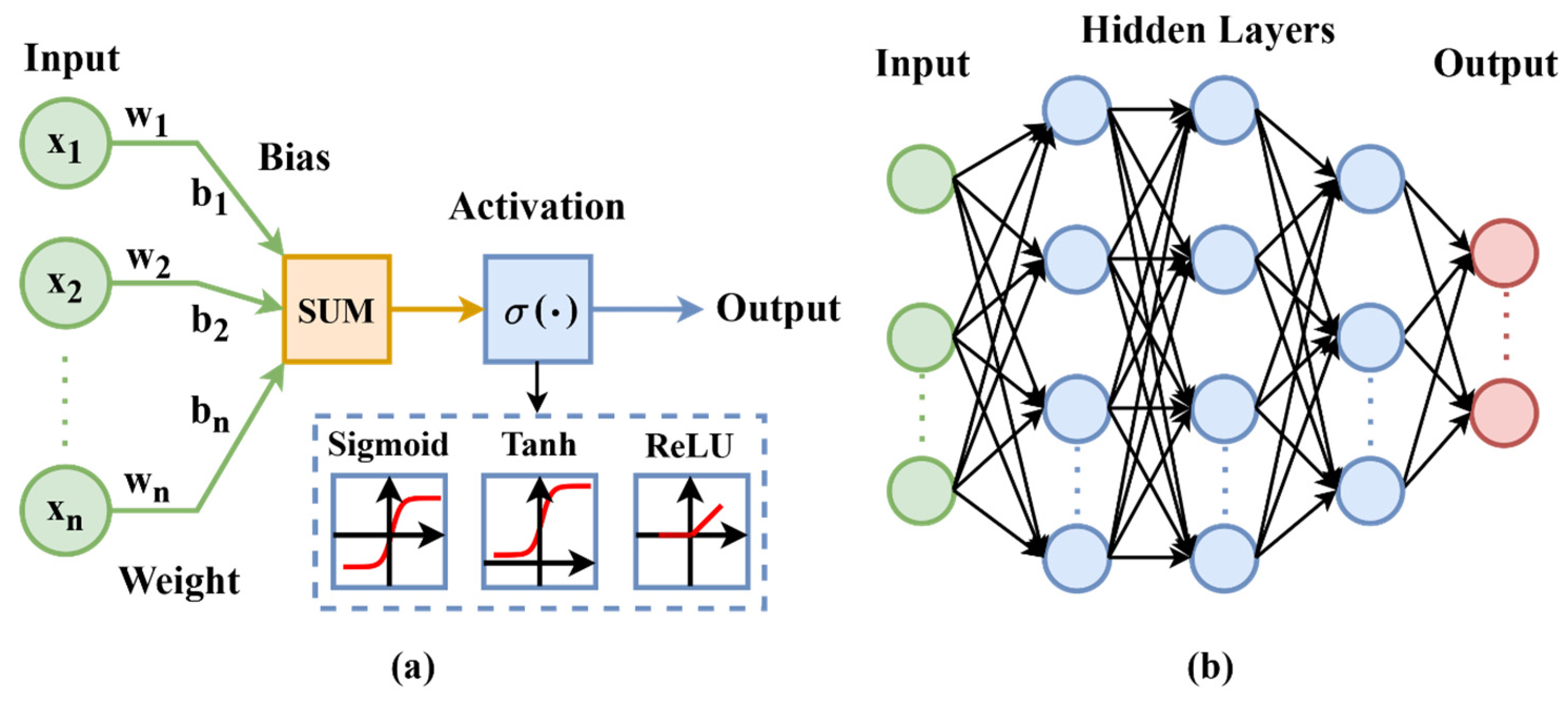

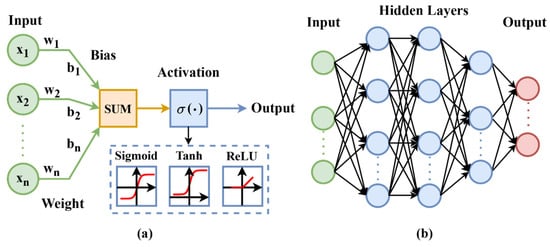

A neural network [29], pivotal in machine learning, draws inspiration from the complex biological neural networks in animal brains. At its most basic, a neural network, or Artificial Neural Network (ANN), consists of interconnected nodes or units that mirror the neurons in the brain. These are the simplest form of neural networks and are adapted for processing and analyzing complex data structures, as shown in Figure 2a. The core operation of an ANN is captured in the formula

where represents the output, signifies the input features, refers to the weights assigned to these features, is a bias term, and is the activation function. This formula is akin to how neurons in the brain process inputs to produce an output.

Figure 2.

Architectural diagrams of (a) neural network and (b) multi-layer perceptron.

ANNs typically consist of a single layer that directly connects the input to the output, which can limit their ability to handle highly complex tasks. To address this limitation, the field of neural network research has evolved to develop more complex structures such as the Multilayer Perceptron (MLP). An MLP expands upon the basic ANN structure by introducing one or more hidden layers between the input and output layers, allowing the network to learn more complex representations. Each layer in an MLP consists of nodes that function similarly to the basic ANN, but with the added capability to perform more sophisticated computations due to the deeper architecture, as illustrated in Figure 2b. Its architecture can be expressed as

where denotes the number of layers, is the input, and represent the weights and biases at layer , and is the activation function for that layer. MLPs demonstrate the capability to capture and represent complex, non-linear data patterns, marking a significant advancement in neural network development.

This formula is akin to how neurons in the brain process inputs to produce an output. Backpropagation, a key step in training neural networks, involves iteratively adjusting the weights of neural networks based on the computed gradients of the loss function. This process allows the network to learn and improve its performance with training. However, during the backpropagation process, the gradients of the networks may become extremely small, hindering the weight updates—particularly problematic in deeper networks, namely the “vanishing gradient problem”.

Activation functions are essential in neural networks, which introduces non-linearity and enables the network to represent complex data patterns beyond simple linear relationships. Widely used activation functions include the following.

Sigmoid: . It is suitable for binary classification, yet susceptible to the vanishing gradient problem, especially in deeper networks.

ReLU (Rectified Linear Unit): . It is known for accelerating convergence in deep networks and mitigating the vanishing gradient problem to some extent. However, it introduces the “dying ReLU problem”, where neurons can become inactive during training, leading to a complete loss of learning capability for those neurons.

Hyperbolic Tangent (tanh): . It projects outputs between −1 and 1, but is still subject to vanishing gradients.

2.2.2. Vision Models for Spatial Learning

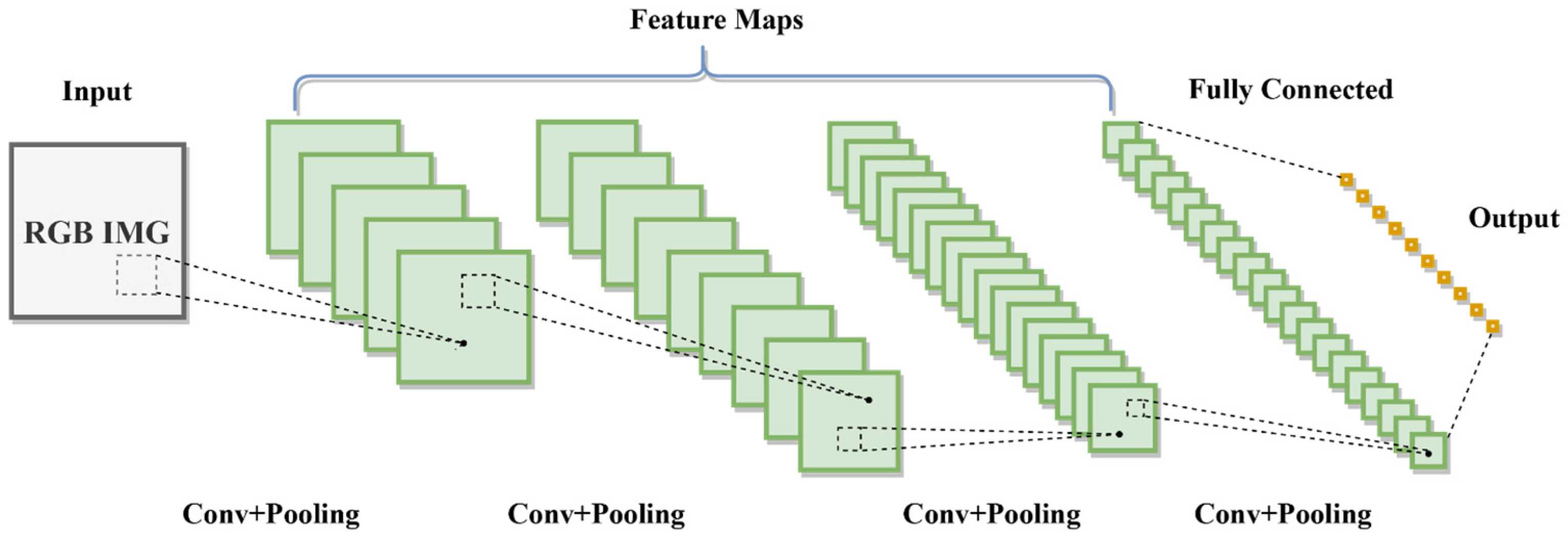

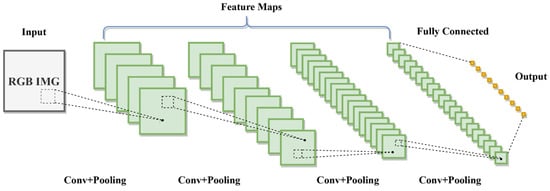

Spatial learning is focused on understanding the layout, context, and interrelationships within visual data, crucial for tasks like image recognition, object detection, and scene comprehension. CNNs have revolutionized spatial learning, significantly altering the way we interpret and analyze spatial patterns in images [30]. Their architecture consists of convolutional layers, followed by pooling and fully connected layers, enabling the efficient processing of grid-like data and extraction of vital features for various visual tasks, as shown in Figure 3. The convolution operation is defined as

where represents the input image, denotes the filters, and are the coordinates in the output feature map. This process involves sliding the filter kernel over the input signal , multiplying corresponding elements, and summing these products to generate new values in the output feature map.

Figure 3.

Convolutional neural network (CNN) architecture.

In the spatial feature learning, three tasks are predominant: image classification, object detection, and image segmentation. The evolution of these tasks reflects a parallel and interconnected development, influencing and progressing the others. Image classification, an early focus in computer vision, has been fundamental in neural networks’ advancement for image analysis. Initial models like LeNet [31] propelled the field, setting a benchmark for neural networks in image classification. This task established the basis for pattern recognition and feature extraction, influencing subsequent complex tasks. ResNet [32], a groundbreaking work, proposed skip connection and residual block, which enables the possibility of building deeper and more powerful networks. Advancements in image classification have also benefited other spatial learning tasks, contributing to the overall growth of the field.

Concurrently, object detection began to emerge as another significant area of focus. Although it built on the principles established in image classification, such as recognizing patterns and features, object detection introduced an additional challenge, i.e., locating and identifying multiple objects within a single image. This task necessitates a deeper understanding of spatial relationships, leading to innovative networks like the R-CNN [33,34,35] and the Yolo series [36,37,38]. Image segmentation, though seemingly more advanced, also developed alongside classification and detection. This task involves dividing an image into pixel-level segments, requiring detailed analysis and dense prediction. Representative networks like Fully Convolutional Networks (FCNs) [39] and U-Net [40], which introduced an encoder–decoder framework, paving a solid foundation in this field. The development of these segmentation networks was influenced by the ongoing progress in both image classification and object detection, showcasing the interdependency and parallel growth among these vision tasks.

Vision models are instrumental in automating the analysis of remote sensing imagery to detect, locate and map the key features of potential landslides. For instance, image classification models, proficient in identifying distinctive patterns associated with unstable terrain, play an important role in comprehensive landslide feature analysis. Object detection models, with their capability to swiftly pinpoint potential landslide features within large-scale satellite images, offer invaluable advantages in enhancing the efficiency of locating potential landslides. This rapid detection not only streamlines the mapping process but also ensures a focused assessment of the spatial distribution to potential landslides. Moreover, image segmentation models provide a detailed approach by accurately delineating the boundaries of landslide-affected areas. By outlining distinct regions within the imagery, these models enable quantitative measurements and change detection analysis, benefiting a nuanced understanding of the landslide characteristics.

2.2.3. Sequence Models for Temporal Learning

Sequence data analysis has long been a crucial aspect of machine learning, with applications ranging from natural language processing and financial forecasting to speech recognition. Over time, the field of sequence modeling has witnessed significant advancements in unraveling the complexities of temporal data.

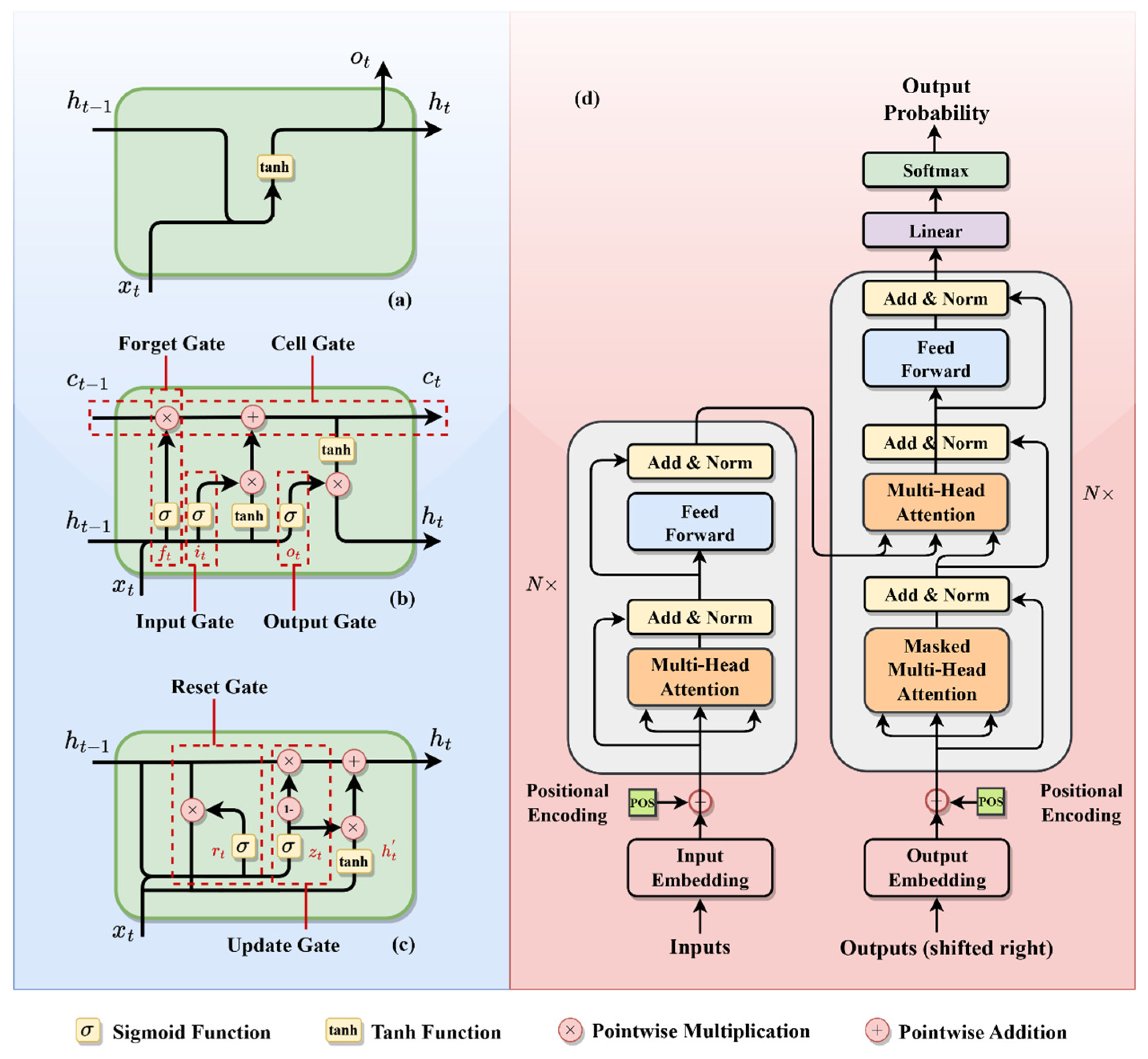

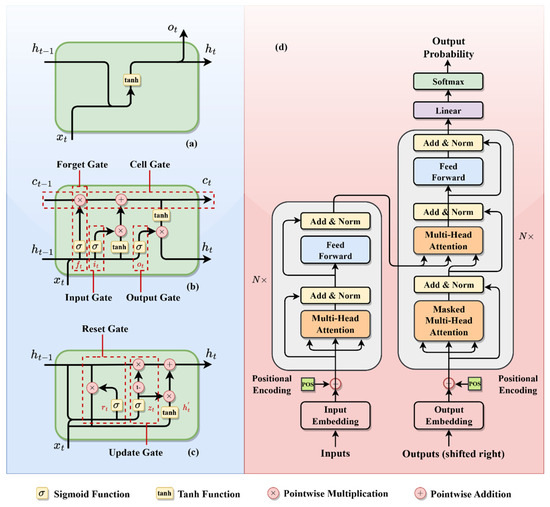

The initial breakthrough was the introduction of the Recurrent Neural Network (RNN) [41]. RNNs brought in the concept of self-loops, enabling the retention and processing of information over time steps, as shown in Figure 4a. They are expressed by the formula

where is the hidden state at time , is the input at time , and are weight matrices, is the bias, and is the activation function. While RNNs are effective at capturing short-term dependencies, they struggle with long-term sequences due to the similar vanishing gradient problem as in the spatial learning, making it hard to retain information over long periods.

Figure 4.

Architectural diagrams of sequence models: (a) RNN; (b) LSTM; (c) GRU; (d) transformer.

To overcome the RNN limitations, Long Short-Term Memory (LSTM) [42] networks were introduced. LSTMs featured a more intricate architecture, incorporating memory cells for selective information retention and forgetting, as shown in Figure 4b. LSTMs mitigated the vanishing gradient issue and significantly enhanced long-term dependency handling in sequence models. Another notable development is the Gated Recurrent Unit (GRU) [43], designed to simplify the LSTM structure while preserving its advantages. GRUs combine the forget and input gates into a single “update gate”, and merge the cell state and hidden state, shown in Figure 4c. GRUs present a more streamlined and less complex alternative to LSTMs.

Notably, the most significant paradigm shifts in sequence modeling emerged with the introduction of the transformer model, as shown in Figure 4d. Represented in the important paper “Attention Is All You Need” [44], transformers diverged from the traditional recurrence-based approaches entirely. Instead of relying on sequence recurrence, a transformer model applies the self-attention mechanisms to process sequences. This approach assigns varying degrees of significance to different parts of the input data, enabling the model to focus on relevant portions of the sequence for prediction. Transformers demonstrate exceptional performance in various tasks, particularly excelling in handling long sequences and parallelizing computations. However, they require substantial computational resources and large datasets.

In the context of landslide studies, sequence models are mainly used for the temporal data analysis. In applications such as landslide susceptibility mapping, these models leverage multi-source data, treating it as a sequential input that encompasses satellite imagery, geological data, and other relevant information. Assimilating data from diverse sources, they can capture intricate dependencies and patterns associated with landslides. For instance, the satellite imagery allows for mapping specific topographical changes that indicate potential landslide-prone areas. Geological data aid in discerning the ground composition, enhancing the models’ ability to predict susceptibility. For the landslide displacement prediction, sequence models excel their capabilities by training on historical displacement series. By scrutinizing past displacement patterns, these models could understand temporal evolutions of ground conditions. This learning process contributes to the accuracy and reliability of predictions related to potential displacements.

2.2.4. Generative Models

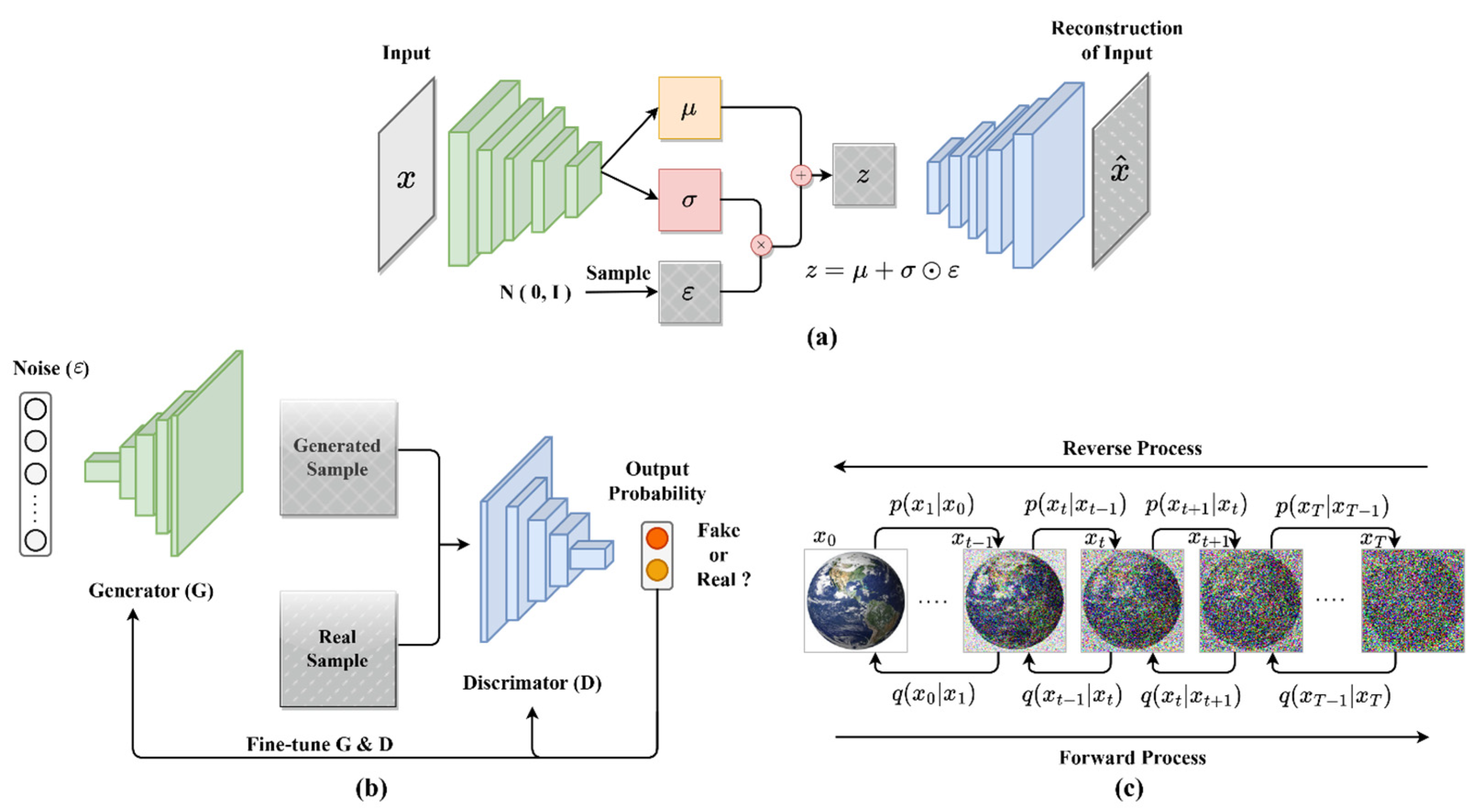

The evolution of generative models in machine learning exemplifies the pursuit to replicate and understand complex patterns in real-world data. This section introduces three seminal generative models: Generative Adversarial Networks (GANs) [45], Variational Autoencoders (VAEs) [46], and Diffusion Models [47], each representing a significant advancement in AI and addressing unique data generation challenges.

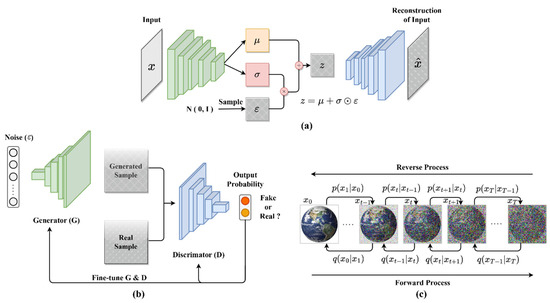

Introduced by Ian Goodfellow et al. in 2014, GANs emerged as a response to the need for sophisticated, high-quality data generation, particularly in image synthesis. They consist of two neural networks: the generator, which aims to produce data indistinguishable from real data, and the discriminator, tasked with differentiating between real and generated data, as shown in Figure 5a. GANs have revolutionized realistic image generation but face challenges like training instability and mode collapse, where the variety of outputs generated is limited.

Figure 5.

Architectural diagrams of generative models: (a) GAN; (b) VAE; (c) diffusion model.

Variational Autoencoders (VAEs), proposed by Kingma and Welling in 2013, address the challenge of understanding and encoding data distributions. Unlike GANs’ direct data generation approach, VAEs learn the data distribution and sample from it. They consist of an encoder, mapping input data to a latent space, and a decoder, reconstructing data from this latent representation, as shown in Figure 5b. VAEs are especially useful in applications that demand an understanding of latent data structures, such as semi-supervised learning, although they tend to produce slightly blurred outputs.

Diffusion Models, the latest innovation in generative modeling, focus on creating high-fidelity images through a unique, gradual process that transforms noise into a representation of the target data distribution. This process includes a forward phase of adding noise and a reverse phase of removing noise to regenerate the data, as shown in Figure 5c. While Diffusion Models excel in generating detailed and diverse outputs, their high computational demands and extended training and generation times pose limitations to their scalability and practical applications in some scenarios. A comparative analysis of three types of learning models is shown in Table 2.

Table 2.

Comparative analysis of spatial, sequence, and generative models in deep learning.

For landslide studies, generative models aim to addressing challenges related to data scarcity and imbalances. Specifically, these models are mainly used for data augmentation, where synthetic examples are generated to supplement existing datasets. This is particularly valuable for landslide studies where obtaining a large and diverse dataset is often challenging due to the infrequent occurrence of landslides and the diverse contributing factors. In this case, generative models not only enhance the quantity of available data but also improves the generalization capabilities of deep learning models, which is crucial for developing models that can effectively identify, classify, and predict landslides activities in regions with limited observations.

2.3. Loss and Optimizer

In machine learning, loss functions and optimizers are two essential components of the training process. The loss function measures a model’s performance by quantifying the error between its predictions and actual data, guiding the model towards accurate predictions. Optimizers are algorithms that aim to minimize this loss by adjusting the model’s parameters. This dynamic interplay between loss functions and optimizers is what drives the learning process of a model.

2.3.1. Loss

Loss functions vary based on different learning tasks. We will go over several commonly used loss functions in different scenarios. For classification tasks, which involve categorizing inputs into distinct classes, cross-entropy (CE) loss [48] is commonly used:

where is the actual label and is the predicted probability. CE loss is particularly effective in classification tasks as it penalizes incorrect classifications more significantly, driving the model towards precise classification. However, its sensitivity to dataset imbalances can be a challenge. In landslide studies, the CE loss is prevalent in landslide feature classification tasks, where its purpose is to discern features extracted from sources like satellite imagery or geological data, determining their association with landslides. It can be applied in both object-level tasks, like landslide detection, and in pixel-level tasks like precise landslide mapping. Moreover, it is also widely employed in determining the likelihood of the occurrence of a landslide event, which often refers to landslide susceptibility mapping.

In regression tasks, which predict continuous values, mean squared error (MSE) and Mean Absolute Error (MAE) are commonly used:

MSE highlights larger errors, while MAE provides a direct measure of error magnitude. Both are simple and interpretable, but may not fully capture complex real-world data distributions.

For segmentation tasks, particularly in medical imaging, Dice Loss [49] is always adopted for evaluating the overlap between predicted and true segmentation areas:

where and represent the predicted and actual segmentation areas, respectively. Dice Loss effectively addresses the problem of class imbalance and emphasizes spatial accuracy. In landslide studies, Dice Loss is often performed in the pixel-level classification task of landslide mapping. This task involves precisely delineating the boundaries of landslide-affected areas. Notably, Dice Loss is skilled of capturing fine-grained details at the pixel level, offering a distinct advantage over CE loss in spatially accurate delineation. This makes Dice Loss particularly suitable for tasks that demand intricate spatial analysis, such as landslide mapping in satellite imagery.

In image generation tasks, where the perceptual quality of the output is paramount, traditional regression losses may not be able to capture the nuanced aspects of image quality. These losses often focus on pixel-level accuracy, which does not necessarily translate to visually pleasing results. Therefore, structural similarity loss functions that better account for perceptual differences are introduced in such applications, for example, the Structural Similarity Index (SSIM) [50] and Peak Signal-to-Noise Ratio (PSNR) [51]. SSIM assesses perceptual differences between similar images, and PSNR evaluates reconstruction or compression quality. MSE is the mean-squared error between the reconstructed and original image. In landslide studies, generative loss functions, such as SSIM and PSNR, can be applied in tasks that involve the generation of synthetic remote-sensing images or maps. These loss functions are valuable when the perceptual quality of the generated outputs is highly considered. For instance, in scenarios where researchers seek to create realistic landslide occurrence maps or simulate potential landslide events, employing such loss functions allows for a nuanced evaluation of the visual fidelity of the generated content.

2.3.2. Optimizer

The evolution of optimizers in machine learning has been characterized by a series of innovations, each addressing specific challenges to enhance training efficiency and performance. Stochastic Gradient Descent (SGD) [19], one of the earliest optimization algorithms, forms the foundation of many subsequent developments. It updates model parameters using the gradient of the loss function calculated from individual samples. While effective in various scenarios, its simplicity can lead to slow convergence and oscillations.

To tackle the slow convergence and oscillations characteristic of SGD, the Momentum method [19] was introduced. This method accelerates SGD by moving it along relevant directions and reducing oscillations. Momentum achieves this by incorporating a velocity component, which is influenced by past gradients, thus ensuring more consistent reduction in the loss function.

Following Momentum, AdaGrad [52] was developed to address the uniform learning rate issue in SGD. Unlike its predecessors, AdaGrad adjusts the learning rate for each parameter individually, allowing for smaller updates for frequently occurring parameters and larger updates for infrequent ones. This adaptability made AdaGrad particularly suitable for datasets with sparse features.

RMSProp [53], an improvement on AdaGrad, was designed to perform better in non-convex optimization problems, which are common in deep learning. It modifies AdaGrad’s approach by using a moving average of squared gradients to normalize the gradient, thus helping the algorithm recover from steep areas and continue the learning process efficiently.

The most recent significant development is the Adam [54] optimizer, which combines the strengths of both Momentum and RMSProp. Adam maintains an exponentially decaying average of past squared gradients (like RMSProp) and an exponentially decaying average of past gradients (like Momentum). This hybrid approach allows Adam to adjust learning rates adaptively for each parameter, making it one of the most effective optimizers in various machine learning scenarios.

2.4. Evaluation Metrics

Model evaluation metrics are essential in the evaluation of models’ prediction, providing insights into how well a model performs. Their evolution aligns with the development of machine learning, transitioning from simple metrics like error rates to more sophisticated ones. Before delving into specific metrics, it is important to introduce the confusion matrix, particularly for classification tasks. The confusion matrix is a table used to describe the performance of a classification model on a set of test data for which the true values are known (see Table 3). For binary classification, it consists of four components:

Table 3.

Binary classification confusion matrix.

- TP (True Positives): correctly identified positive cases;

- TN (True Negatives): correctly identified negative cases;

- FP (False Positives): incorrectly identified positive cases;

- FN (False Negatives): incorrectly identified negative cases.

where, T (True) indicates correct predictions, F (False) incorrect predictions, P (Positive) the positive class, and N (Negative) the negative class.

In classification tasks in landslide applications, such as distinguishing loess landslides from their similar surrounding environments, the fundamental objective is to assess the model’s capability in accurately categorizing the data. Key metrics include Precision, Recall, Accuracy, and the F1-score [19]. Each of these metrics was developed to address specific aspects of a model’s performance in classification. For instance, precision measures the proportion of true positive predictions in the positive class predictions. Recall focuses on the model’s ability to identify all actual instance by measuring the proportion of true positive predictions in all actual positive instances. The F1-score, combining Precision and Recall, was introduced to provide a single metric that balances both. Accuracy calculates the proportion of correct predictions (both true positives and true negatives) out of all predictions.

In addition to these metrics, for dense classification tasks such as image segmentation, geometric metrics like the Intersection over Union (IoU) are widely used. This metric quantifies the accuracy of segmentation by measuring the area overlap between the predicted and true segments.

Regression tasks, which involve predicting continuous outcomes, necessitate different metrics. It is important to note that regression losses themselves can serve as metrics. These include but are not limited to metrics like the root-mean-squared error (RMSE), Mean Absolute Error (MAE), Full Reference Image Quality Assessment Metrics like the Structural Similarity Index (SSIM) [50] and Peak Signal-to-Noise Ratio (PSNR) [51], and Feature-based Image Quality Assessment Metrics like FID [55], each serving to quantify different aspects of the prediction accuracy.

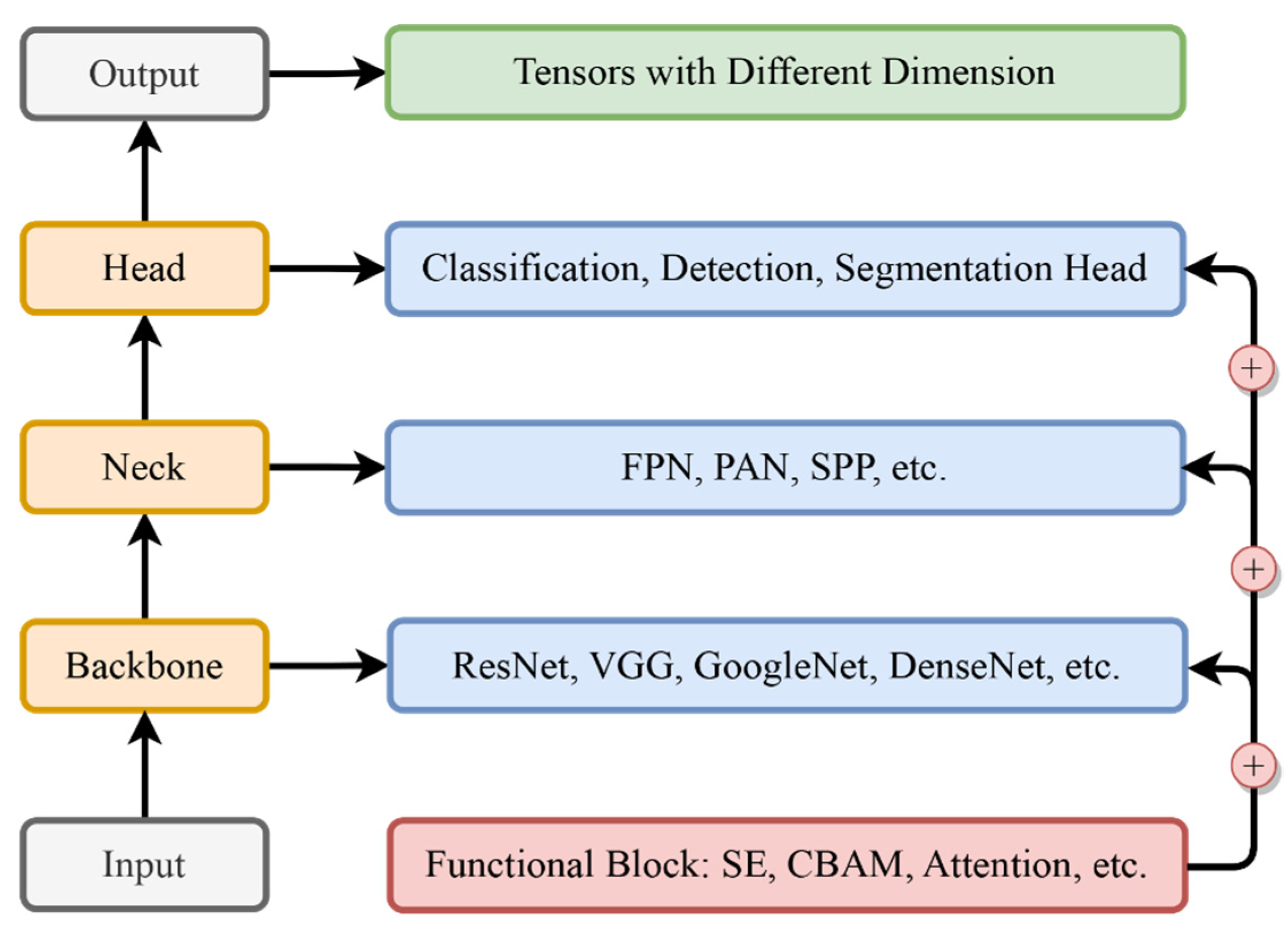

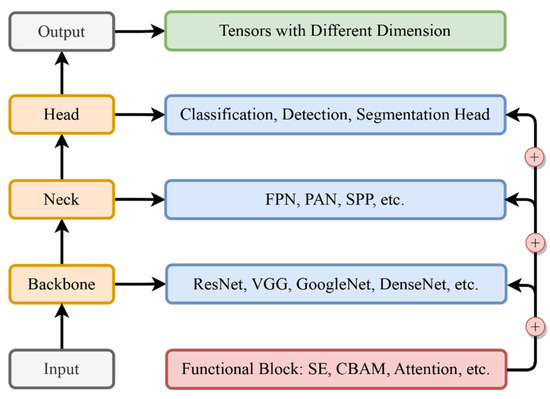

2.5. Architecture Modules in CNN

In CNN design, the architecture is not a singular entity but a complex assembly of interconnected modules, each integral to the network’s functionality and performance. At the core of this architectural framework are four primary components: the backbone, neck, head, and functional blocks. These elements, each with distinct roles and functionalities, contribute to the network’s capacity for processing and interpreting complex data, as shown in Figure 6. An examination of these modules provides a comprehensive understanding of the structure of contemporary CNNs. This analysis will focus on dissecting these internal modules, elucidating their importance in forming the foundation of CNN architecture.

Figure 6.

Architecture modules and interactions.

2.5.1. The Backbone: The Core of Feature Extraction

The backbone is the foundational module of a CNN architecture, primarily responsible for feature extraction. These features are essential for the subsequent layers and modules, which rely on them to make accurate predictions or classifications. The evolution of backbones reflects a journey towards deeper and more intricate networks, aiming to capture a wide range of features from the input data. Initially, the backbone module was relatively simple. Early designs like LeNet [31] were effective for less complex tasks, signifying the inception of feature extraction design. As the field developed, architectures evolved into more sophisticated structures. Networks like VGG [56], ResNet [32], and Inception [57] emerged, representing a significant leap in the backbone’s design. These advanced structures were capable of capturing a broader spectrum of features from input data, laying a robust foundation for subsequent processing stages.

2.5.2. The Neck: Bridging and Refining Features

The neck module in CNN serves as a crucial link between the backbone and head modules, playing a vital role in refining and reorganizing the extracted features. It is instrumental in tasks requiring a detailed understanding of spatial relationships and feature hierarchies, like object detection and segmentation, e.g., landslide detection and mapping. The primary function of the neck is to enhance the network’s spatial awareness by integrating and reorganizing features from various layers of the backbone, providing comprehensive and contextually relevant features to the head module. This process ensures the accuracy and reliability of the final output. Notable implementations of the neck module include the Feature Pyramid Network (FPN) [58], Path Aggregation Network (PAN) [59], and Spatial Pyramid Pooling (SPP) [60], known for their effectiveness and wide adoption.

2.5.3. The Head: Tailoring to Specific Tasks

The CNN’s head module is intricately designed to suit specific tasks like classification, detection, or segmentation, varying based on spatial resolution and channel dimension needs. For example, in classification tasks, the head module often includes fully connected layers thanks to their effectiveness in summarizing and interpreting the features extracted by previous layers, leading to a final prediction. This is essential for classifying images into distinct categories. In detection tasks, region proposal networks (RPNs) [35] are used in the head module for their proficiency in identifying and localizing various objects within an image, crucial for tasks that require pinpointing specific items. Similarly, in segmentation tasks, the head module might integrate convolutional layers designed to perform pixel-wise classification, essential for delineating the precise boundaries of different objects in the image. Such adaptability in design ensures that the module aligns with the unique requirements of each task.

2.5.4. Functional Blocks: The Vanguard of Enhancement

The development of functional blocks like attention, Atrous Spatial Pyramid Pooling (ASPP) [61], Squeeze-and-Excitation (SE) [62], and the Convolutional Block Attention Module (CBAM) [63] represents the forefront of network architecture innovation. These blocks can be integrated at various stages of the network to enhance overall performance. For example, attention blocks focus on relevant features while suppressing less useful ones. SPP blocks help the network achieve spatial invariance by pooling features at various scales. SE blocks adaptively recalibrate channel-wise feature responses. CBAM blocks combine channel and spatial attention mechanisms for a thorough feature refinement. The development of these blocks was driven by the need to address the limitations in CNNs regarding feature representation and adaptability.

Understanding architecture modules, including the backbone, neck, head, and functional blocks, is important for comprehending the design principles behind contemporary CNNs. In landslide studies, classification, detection, and segmentation are essential. The backbone, responsible for feature extraction, lays the groundwork by capturing essential information from remote-sensing imagery or geological data. Subsequently, the neck module refines and organizes these features, enhancing the spatial awareness, which is crucial for tasks like detecting and mapping the landslide-prone areas. Tailored to specific tasks, the head module aligns with the unique requirements from landslide classification, detection, or segmentation, offering adaptability to address the complexities of landslide features. The integration of functional blocks further enhances overall performance, providing a flexible approach to feature representation. As classical networks in deep learning may not always meet the specific requirements of real landslide tasks, a deep understanding of these modules empowers researchers to design networks tailored to practical landslide scenarios.

2.5.5. Implementing Strategies

In landslide research, effectively integrating deep learning requires a systematic approach tailored to the unique aspects of data. Initially, this involves choosing the right model architecture to suit the particular type of landslide data being analyzed. CNNs are typically preferred for analyzing spatial information in remote sensing images, thanks to their ability to handle complex spatial details. When dealing with time-series data that represent sequenced information associated with active landslides, models with recurrent layers, such as RNNs or LSTMs, are more apt given their strength in temporal data analysis. Researchers often employ robust frameworks like PyTorch, which offers extensive libraries for building and experimenting with models efficiently, making it a popular choice for developing and testing the networks.

Besides the model construction, effective management of the dataset is also important to the success of these models. It typically involves dividing the data into three segments, for example: 80% for training to establish learning patterns, 10% for validation to fine-tune the model, and the remaining 10% for testing to evaluate the network performance in an unbiased manner. Training deep learning models is an intricate process that goes beyond feeding data into algorithms. It requires thoughtful choices about loss functions and optimization algorithms, which are pivotal for guiding the learning process. Iterative hyperparameter tuning is also essential to refine and enhance model performance. Integral to avoiding overfitting are strategies like dropout and regularization; these not only prevent the model from memorizing the training data but also bolster its ability to generalize to new, unseen data.

The task of optimizing hyperparameters is particularly crucial and involves a careful balance to find the most effective model settings. Techniques such as grid search, random search, and Bayesian optimization are commonly used to explore the hyperparameter space. Regular validation is a key part of this process, ensuring that the model remains accurate and reliable. Early stopping is one such validation technique, which halts the training when improvement plateaus, preventing the potential of overfitting and helping to maintain the model’s ability to generalize [19]. By adhering to these outlined steps, researchers are equipped to develop and deploy robust deep learning models using platforms such as PyTorch. This systematic approach facilitates the navigation of the complexities involved in landslide studies, thereby enhancing the accuracy and reliability of research outcomes.

3. Overview of Deep Learning Frameworks

In this chapter, we provide an overview of five prevalent deep learning frameworks, each tailored for distinct tasks, including three spatial tasks—classification, detection, and segmentation—a temporal task—sequence framework—and a spatial–temporal task—hybrid framework. Each framework encompasses a range of representative models designed with specific architecture to meet unique requirements. Our focus is on reviewing their development, summarizing the essence of these networks within each framework, and highlighting their common structural and functional elements to offer a comprehensive understanding of their application in various contexts.

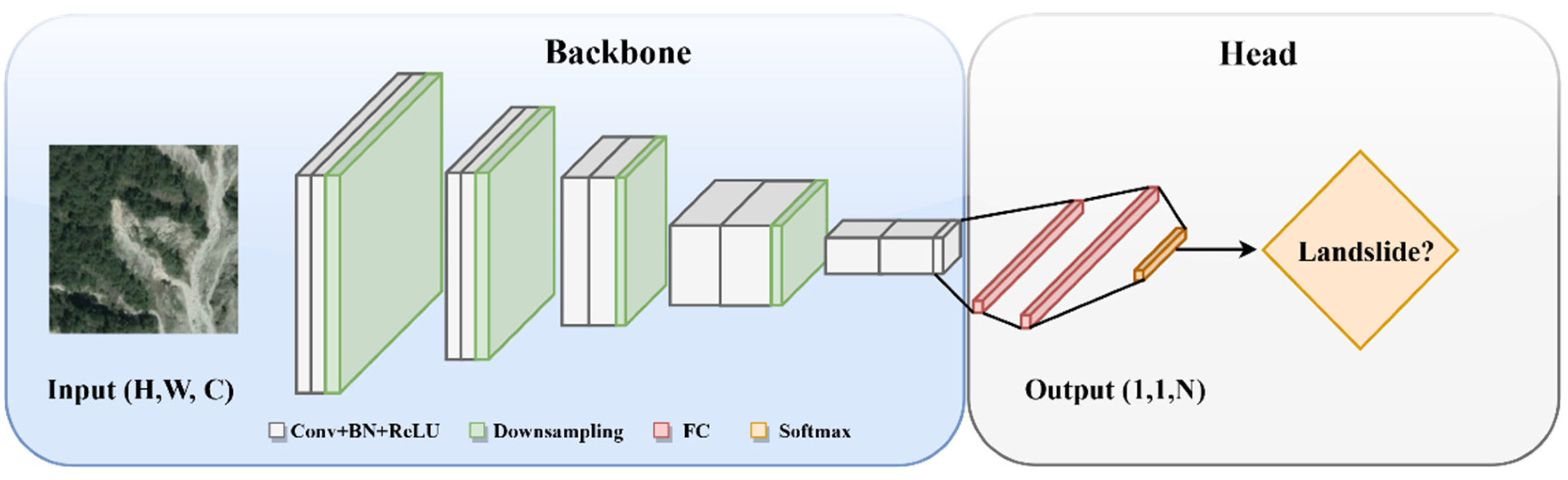

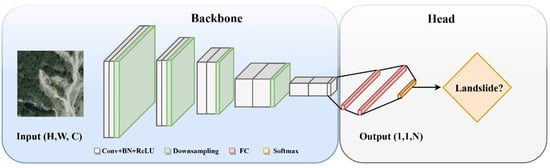

3.1. Deep Learning Classification Framework: The Bedrock of Feature Identification

Classification is a fundamental and essential task in computer vision. In landslide studies, classification tasks involve discerning features that are relevant to landslides, which encompasses activities like landslide susceptibility mapping. Classification networks typically encode the input into a 1 × 1 × N tensor, condensing spatial dimensions to a single point. This representation enables the network to make high-level predictions, such as identifying landslide presence or assessing the region susceptibility. Over years, these architectures have significantly evolved, influencing advancements in related vision tasks such as detection and segmentation, primarily through the shared utilization of backbone for effective feature extraction. The architectural diagram of the classification framework is shown in Figure 7.

Figure 7.

Architectural diagram of classification framework.

The evolution of classification networks in deep learning began with LeNet [31], a groundbreaking CNN model that set the stage for neural network applications in visual data processing. This evolution was propelled further by pivotal models like AlexNet, which showcased the efficacy of CNNs in image classification tasks. Subsequently, VGG [56] took a leap forward by exploring very deep architectures with small 3 × 3 convolutional filters, which further enhanced the network’s performance. ResNet [32], another milestone, innovatively addressed the vanishing gradient problem through its residual connections. These foundational networks not only solidified the core principles of CNNs but also markedly improved the capability to discern and categorize complex image patterns.

Simultaneously, exploration emerged to broaden network architectures. Models like ResNext [64] utilized grouped convolutions to expand the network’s structure, enhancing its capacity without a substantial increase in computational demands. In parallel, GoogleNet’s Inception modules [57] made strides in multi-scale feature extraction, showing improved results over previous architectures. Concurrently, lightweight networks like EfficientNet [65] and ShuffleNet [66] were proposed for working in resource-constrained settings, such as on mobile devices. More recently, the Vision Transformer (ViT) [67] gained huge attention, marking a transition in backbone architectures. Diverging from its predecessors, ViT adopts the transformer architecture, commonly used in Natural Language Processing (NLP), for image processing. This model has demonstrated notable results, particularly in data-rich environments, signifying a trend from traditional convolution-based methods to attention-based feature extraction.

For classification networks, the main focus is on devising the backbone architecture for effective feature extraction. This foundational work has facilitated their application in other spatial tasks, such as detection and segmentation. Generally, the neck component in these networks is integrated with the overall structure, smoothly transitioning from feature extraction to classification. The head of these networks consists of fully connected layers, often ending in a SoftMax layer (Figure 7), which is responsible for projecting the extracted features into a probability distribution across different classes. The output of these networks corresponds to the number of predefined classes. In such frameworks, cross-entropy is a commonly used loss function due to its efficacy in assessing classification accuracy.

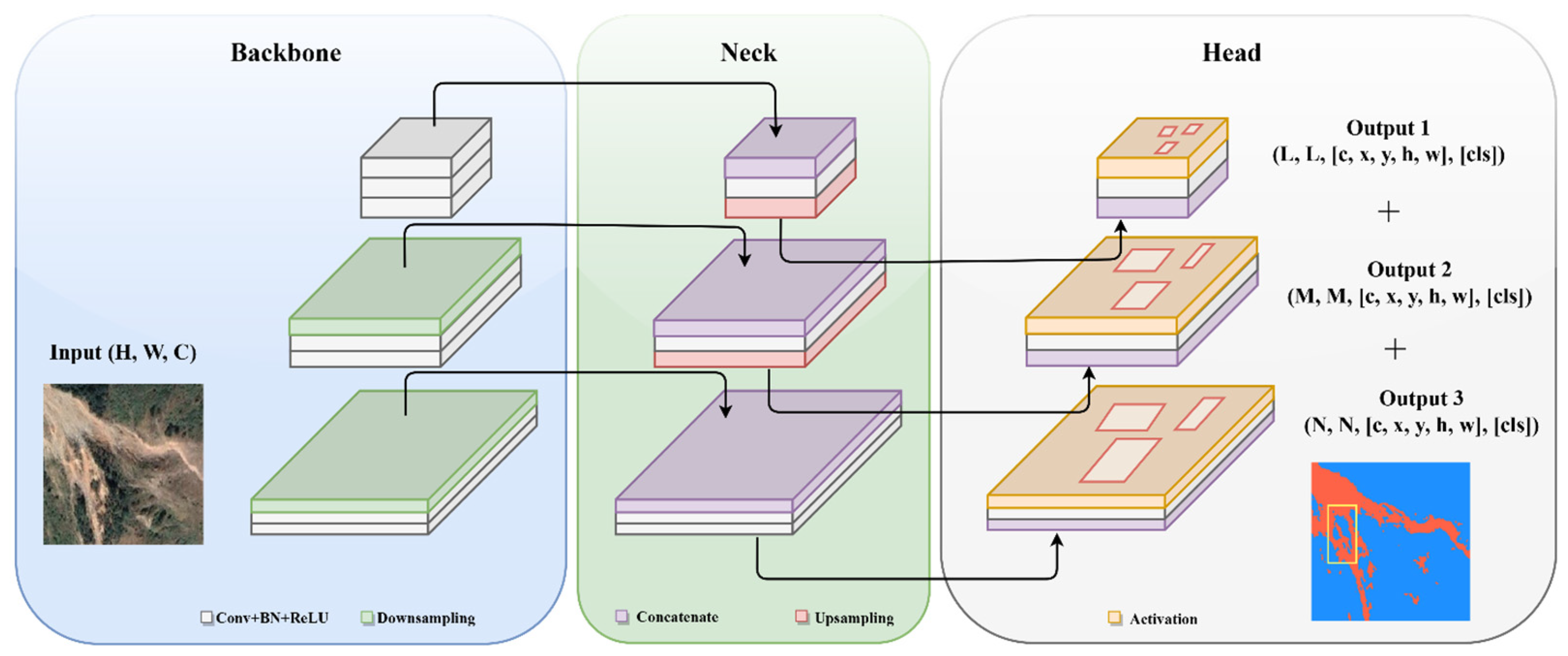

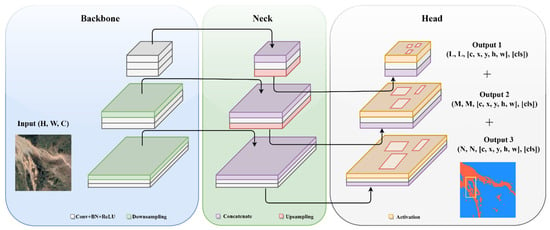

3.2. Deep Learning Detection Framework: Balancing Localization and Identification

Object detection is a crucial task in computer vision, distinct from simple image classification. In landslide studies, object detection is naturally involved in detecting and locating specific objects or features relevant to landslides. This encompasses the detection of potential landslides, precursor deformation, and other relevant elements within remote-sensing images or geological data. Object detection involves identifying and localizing multiple objects within an image, which requires a sophisticated design for its neck and head modules to handle the complexity. Consequently, a variety of architectural styles, such as two-stage, one-stage, anchor-free, and transformer-based detectors, have been developed. Each of these architectures has its unique approach, with varying strengths and methods to fulfill the requirements of object detection. The architectural diagram of the detection framework is shown in Figure 8.

Figure 8.

Architectural diagram of detection framework.

The development of object detection frameworks has undergone significant advancements, starting with the two-stage RCNN series [33,34,35], including RCNN, Fast RCNN, and Faster RCNN. These frameworks focus on generating region proposals for potential objects in an image and then classifying each region. While highly accurate, they are computationally intensive due to the two-step process. Seeking to balance speed and accuracy, one-stage detectors like the “Single Shot MultiBox Detector (SSD)” [68] and the “You Only Look Once (YOLO)” series [36,37,38] emerged. These models streamline the detection process, directly predicting object classes and locations in a single forward propagation of the network. While offering faster detection speeds, they sometimes compromise on accuracy compared to two-stage models, particularly in complex scenes or with small objects.

In parallel, efforts to transcend the constraints of traditional anchor-based approaches have led to the rise of anchor-free methods like CenterNet [69] and CornerNet [70]. These innovative techniques localize objects without depending on predefined anchor boxes, thus simplifying the model and reducing computational load. Alongside this, the involvement of transformer-based techniques, notably exemplified by DETR (Detection Transformer) [71], has significantly altered the landscape of object detection. The DETR leverages the power of transformers to improve object detection by effectively handling complex spatial relationships and long-range dependencies. This shift towards transformer-based methods represents a major evolution in object detection, offering a new perspective on handling spatial data complexities.

In the evolution of detection frameworks, two crucial components are the neck and head. The neck, often incorporating structures like Feature Pyramid Networks (FPN) [58] and Path Aggregation Network (PAN) [59], fuses multi-scale features from various layers of the backbone. This fusion is pivotal for detecting objects at different scales, merging vital information for both object localization and classification. The head has a dual function: localizing objects, typically via bounding box coordinates, and classifying them. To meet these dual requirements, a composite loss function, usually a combination of localization loss (such as Smooth L1) and classification loss (cross-entropy), is employed. This blended loss is fundamental for object detection frameworks, ensuring precise object classification and accurate spatial localization.

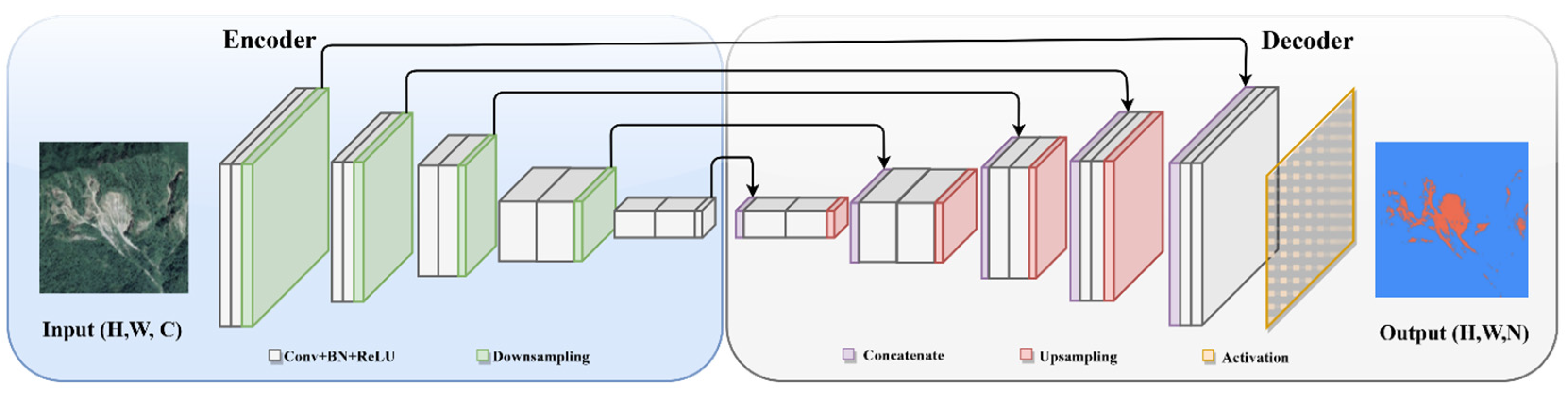

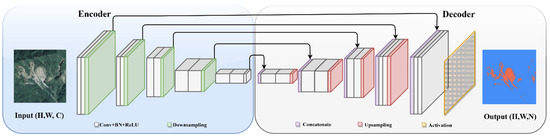

3.3. Deep Learning Segmentation Framework: The Intricacy of Pixel-Level Classification

The segmentation framework, characterized by pixel-wise classification, is inherently more complex than the classification framework due to its need for detailed spatial analysis at the pixel level. In landslide studies, segmentation is applied to delineate the precise boundaries of landslide-affected areas, aiding in a detailed and accurate spatial analysis. It is particularly useful for tasks such as landslide mapping, where the goal is to classify each pixel within an image to accurately identify and map areas impacted by landslides. The backbone in segmentation framework is also aimed at feature extraction, and commonly has similar choices like ResNet and VGG that are used in classification networks. Of course, these backbones might undergo slight modifications such as layer adjustments or block addition, to fit the specific requirements of the practical task. Unlike detection frameworks, the role of the neck in segmentation is not always distinct and it is often integrated within the backbone. Notable examples include the Atrous Spatial Pyramid Pooling (ASPP) [61], Pyramid Scene Parsing Network (PSPNet) [72] and Spatial Pyramid Pooling (SPP) [60], which are designed to enhance multi-scale feature awareness, enabling an accurate dense prediction. The architectural diagram of segmentation framework is shown in Figure 9.

Figure 9.

Architectural diagram of segmentation framework.

Distinguished from classification framework, segmentation networks aim for dense predictions, mostly necessitating output dimensions matching the input resolution, often represented as H × W × N, where H and W denote height and width, respectively. This structure is ideal for pixel-level tasks like landslide mapping, as it provides detailed spatial information. Therefore, after down-sampling via the backbone, it becomes crucial to up-sample the features back to their original resolution, requiring the use of a decoder. The evolution of decoders parallels the development of classic segmentation networks. This began with the Fully Convolutional Network (FCN) [39], which introduced transposed convolution layers for up-sampling, enabling end-to-end training and precise pixel-level predictions. Enhancements followed with FCN variants like FCN-8s, which integrated skip connections. These connections fused deep, coarse semantic information with shallow, fine appearance details, thereby augmenting spatial precision, particularly around object boundaries.

This progression continued with the advent of U-Net [40], which introduced a symmetric encoder–decoder architecture, where the decoder progressively up-samples feature maps while concatenating them with corresponding encoder feature maps, significantly improving localization capability. Following this, the DeepLab series (v1–v3+) [73,74,75] brought novel advancements. They introduced atrous (dilated) convolutions in both the encoder and decoder stages and incorporated Atrous Spatial Pyramid Pooling (ASPP) in the encoder, alongside a new decoder design focused on refining segmentation outcomes and edge clarity. Later developments, such as the Attention U-Net [76], expanded these innovations by embedding attention mechanisms within the decoder. This integration directed the model’s focus toward more relevant regions, and further enhanced precision in segmentations across diverse scenarios. Most lately, transformer-based models [77] have applied self-attention to capture long-range dependencies across the entire image, providing a global context for segmentation. These advancements indicate a trend towards networks that not only understand local features but also incorporate a wider image context, leading to more accurate and detailed segmentation results.

In segmentation frameworks, the choice of loss function can vary depending on the specific requirements of the task. Commonly used loss functions include pixel-wise cross-entropy, which is effective for classifying each pixel individually. Additionally, geometric loss functions like Dice Loss or IoU loss are employed to accurately capture the shapes and overlaps of segmented areas [49]. These loss functions can be used individually or in combination, depending on the need to balance pixel-level accuracy with the geometric representation of segmentation results.

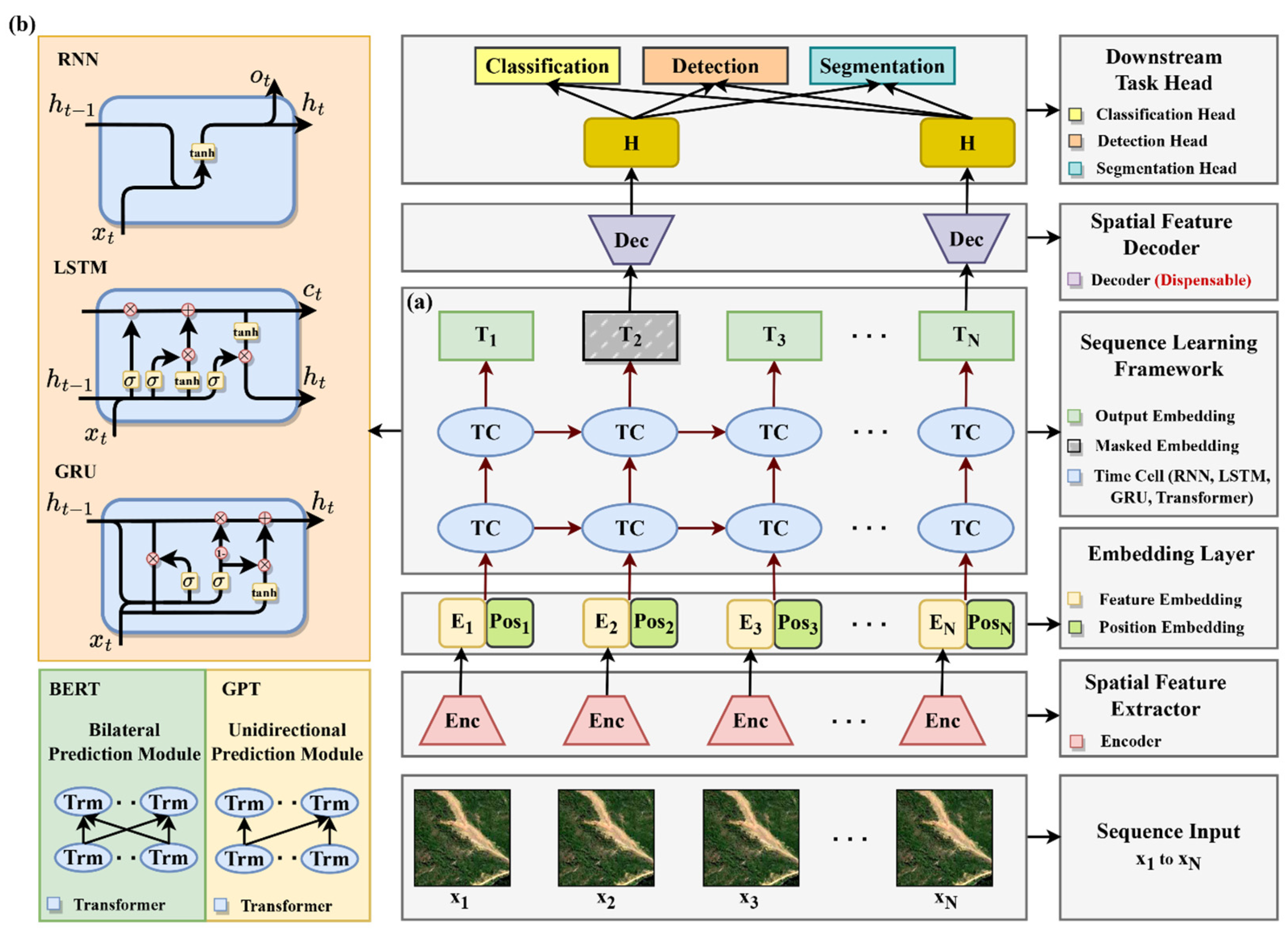

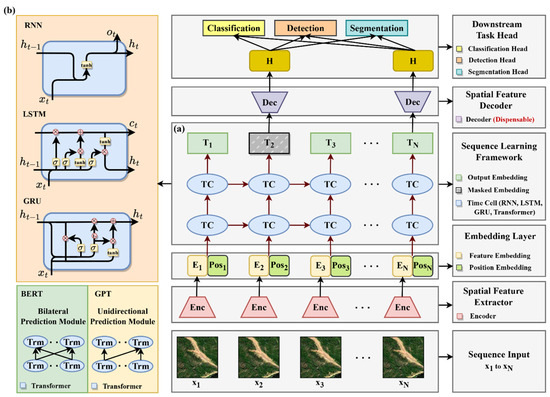

3.4. Deep Learning Sequence Framework: Contextual Data Modeling

Sequence data analyzing in deep learning represents a unique and challenging domain, distinct from the realms of classification, detection, and segmentation. It focuses on the temporal dynamics and dependencies within the contextual data, a critical aspect in a wide range of applications. In landslide studies, sequence data comprise a diverse input from multi-sources, including remote sensing, geo-environmental, meteorological, and topographical data, etc. Additionally, it also involves time-series data, such as remote-sensing images, and historical pointwise deformation measurements. This section delves into the evolution of deep learning sequence frameworks, tracing their development from initial models to the sophisticated networks we see today. The architectural diagram of sequence framework is shown in Figure 10a.

Figure 10.

Architectural diagram of hybrid framework: (a) sequence framework; (b) hybrid framework.

Being the initial representative work, Recurrent Neural Networks (RNNs) [41] provide a fundamental approach to sequence processing by integrating memory elements into neural networks. However, RNNs have encountered limitations in managing long-term dependencies, which led to the development of more advanced architectures. Long Short-Term Memory (LSTM) [42] networks overcame these challenges by incorporating gates that control information flow, thereby adeptly capturing extended dependencies in sequences. Subsequently, Gated Recurrent Units (GRUs) [43] were introduced as a more efficient variant, delivering similar functionalities to LSTMs but with a less complex architecture, which reduced computational requirements. A pivotal shift occurred with the involvement of the transformer model [44], which moved away from reliance on recurrent mechanisms. Leveraging self-attention mechanisms, transformers are capable of processing entire sequences in parallel, thus effectively capturing dependencies between sequence elements that are widely separated by many positions, surpassing the capabilities of their predecessors. This architecture has since become a foundational element, inspiring numerous subsequent innovations in sequence modeling.

The recent advances in deep learning for sequence data, especially in Natural Language Processing (NLP), have been characterized by employing transformer architecture and extensive data training. This transition began with the development of ELMo (Embeddings from Language Models) [78]. Although ELMo does not use transformer architecture, it laid the groundwork for creating deep, contextualized word representations. ELMo’s bidirectional LSTM structure was instrumental in generating word embeddings that comprehensively capture semantic attributes from surrounding text, paving the way for the later adoption of transformer-based models in NLP. This evolution was further propelled by the development of BERT (Bidirectional Encoder Representations from Transformers) [79] by Google. BERT, utilizing the transformer architecture, introduced an innovative bidirectional training method. This approach allows the model to contextualize information from both directions of a sentence, leading to a more nuanced understanding of language. BERT’s effectiveness in tasks such as question answering and language inference is largely due to this bidirectional processing, coupled with its training on extensive text datasets. Simultaneously, OpenAI’s GPT (Generative Pretrained Transformer) series [80,81,82,83], particularly GPT-4, showcased the extraordinary effectiveness of transformer-based models on a large-scale language dataset. Focusing primarily on unsupervised learning and trained on a diverse array of internet text, GPT models have demonstrated a remarkable ability to generate coherent and contextually relevant text sequences. The success of the GPT series has highlighted the capability of large transformer-based models to grasp and reproduce the intricacies of human language, marking a significant milestone in the field of NLP.

3.5. Deep Learning Hybrid Framework and Transfer Learning

As reviewed in the previous sections, the spatial frameworks are skilled at extracting and interpreting complex spatial patterns present in spatial data, adeptly handling tasks such as classification, detection, and segmentation. In contrast, sequence frameworks excel at processing and comprehending time-series data. Thus, combining these two frameworks to foster a hybrid framework, we can perform more complex and advanced monitoring and prediction tasks, which have the potential to enhance the accuracy and reliability of predictive results, and to improve the overall understanding in landslide dynamics. The architectural diagram of hybrid framework is shown in Figure 10b.

In such an integrated hybrid framework, the backbone of the spatial network primarily extracts spatial features from observational data collected at each time point. These features are then transformed into embeddings, configured to meet the sequence framework’s input requirements. This process ensures that the spatial characteristics of the data are captured comprehensively. Subsequently, these spatial embeddings are processed through a sequence framework to learn the contextual relationships. This step is crucial for uncovering latent connections and temporal patterns within the data. A variety of architectures, ranging from traditional models like RNN and LSTM to advanced models such as BERT and GPT, can be employed for this contextual learning. The choice of architecture depends on the complexity of the task and the nature of the data. Once the temporal relationships are established, the processed features are then fed into the corresponding head of the spatial framework. Here, the head can be selected corresponding to specific tasks at hand. For instance, we can install the classical detection heads, like the YOLO series, to conduct a landslide detection task, while for a landslide displacement prediction task, we typically adopt classification heads with regression loss to implement. This modular approach, separating the spatial framework into functional components like the backbone, neck, and head, and integrating them with sequence frameworks, provides high flexibility and convenience. It allows for the seamless assembly and reconfiguration of various network structures to meet the specific needs of diverse real-world tasks.

Notably, training such a model effectively requires a strategic approach, and transfer learning presents a viable solution. Initially, the spatial network can be trained using data acquired at a single time point. Once this training is complete, the parameters of the backbone and head will be frozen, and then they can be integrated with the chosen sequence framework to train the sequence framework individually. Finally, we can fine-tune the entire integrated system—both spatial and temporal components—to ensure the optimal training performance. This innovative integration of spatial and temporal frameworks offers an advanced tool for both real-time monitoring and predictive modeling. A comparative analysis of five prevalent deep learning frameworks is shown in Table 4.

Table 4.

Comparative analysis of deep learning frameworks.

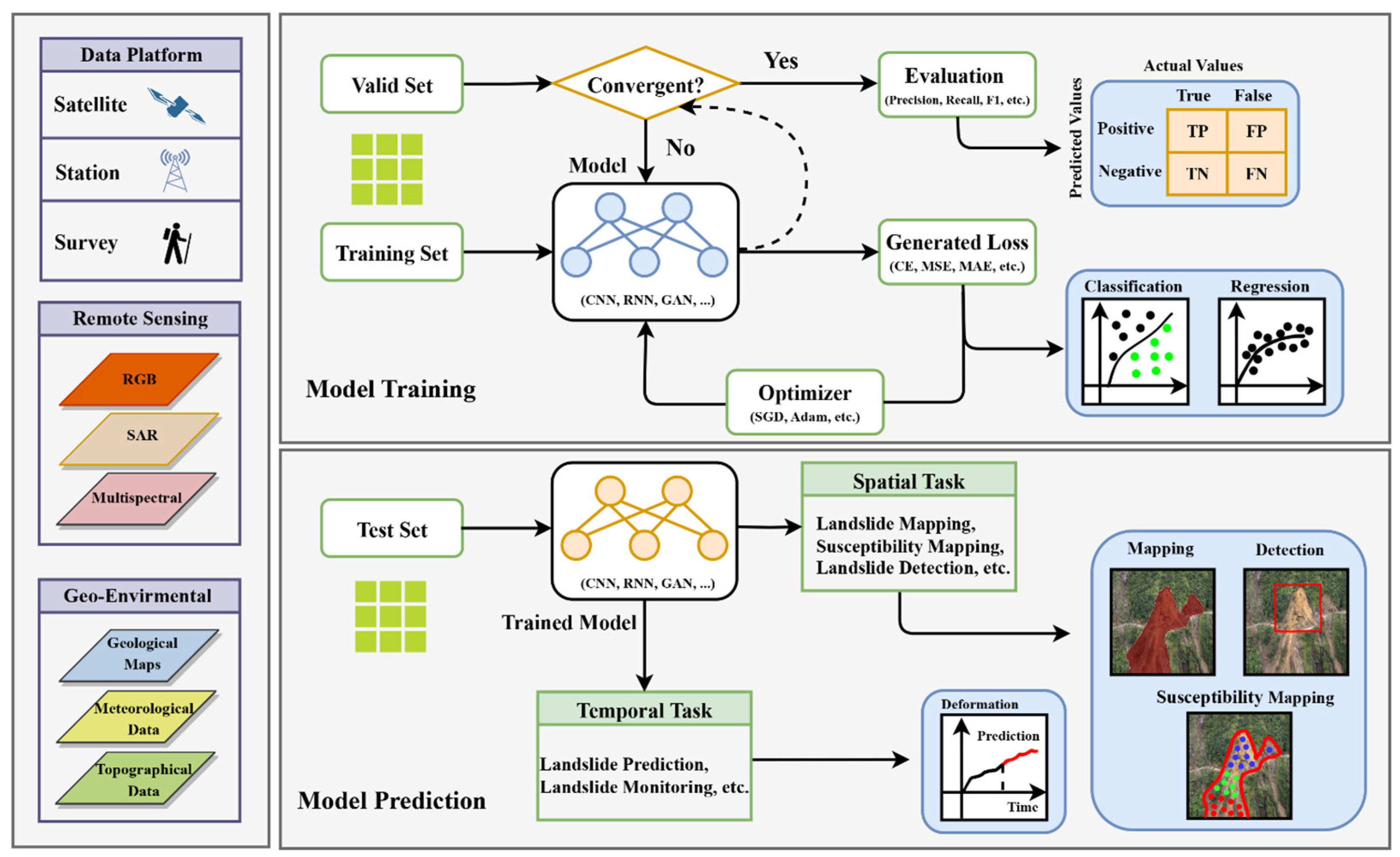

4. Deep Learning Frameworks Application for Landslides

4.1. Landslides

Landslides are among the most common and destructive natural disasters in mountainous areas, posing significant threats to human lives and properties. Alongside, they can cause damages to critical infrastructures like roads, bridges, and power lines [84]. They also lead to the destruction of vegetation, soil erosion, and land degradation. The frequency and impact of landslides depend on complex environmental and triggering factors [85]. Environmental factors such as soil composition and geological features play a crucial role in determining the possible locations of landslides. On the other hand, triggering factors like climatic changes and seismic events are pivotal in initiating these events. The intricate and nonlinear interplay of these factors challenges traditional methods for modeling their physical mechanisms and predicting their development, which has prompted researchers to pivot towards more effective, data-driven approaches [86]. Recent advancements in deep learning have shown significant capabilities in extracting landslide-related information from large datasets [87]. The amalgamation of deep learning with extensive remote sensing from satellites and in situ sensors has equipped researchers with a powerful tool for landslide research and prevention [88]. Efforts mainly can be divided into two aspects.

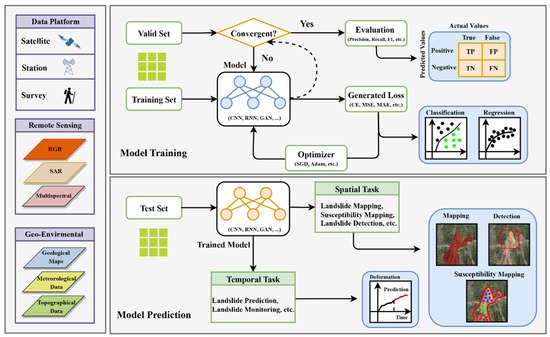

The first aspect focuses on known landslides, in which researchers employ multi-source data, including remote sensing data (SAR, optical, and multispectral sensors) and geo-environmental data (such as geological, hydrological, meteorological, and topographical data.) to predict potential landslides and assess their risk levels. This process is known as susceptibility mapping [89]. Another key effort is displacement prediction, which uses historical data on landslide movement and related environmental factors to forecast their future displacements [90]. Regarding the second aspect, scholars have aimed at addressing slow-moving landslides, and have developed algorithms for landslide detection and mapping from remote sensing images, each serving distinct yet complementary purposes. Landslide detection, taking an object perspective, uses algorithms to locate landslides and approximate their areas with bounding boxes [91]. This method is particularly effective for quickly identifying landslide locations in extensive surveys. In contrast, landslide mapping, adopting a pixel-based approach through segmentation techniques, offers a more granular analysis. It precisely defines the boundaries of each landslide at the pixel level, providing vital details for comprehensive risk assessments and mitigation strategies [92]. General data processing and the workflow using deep learning for landslide detection, mapping, susceptibility mapping, and displacement prediction is shown in Figure 11.

Figure 11.

Deep learning workflow for landslide studies.

In the following section, we will investigate the various deep learning frameworks suitable for each of four major tasks in landslide studies: landslide detection, mapping, susceptibility mapping, and displacement prediction. For each task, we highlight specific deep learning frameworks that are applicable and review related studies that have utilized these frameworks. We aim to introduce a clear perspective on the selection of frameworks for each task and provide insights into the latest achievements and performances of these techniques in the context of landslide risk management.

4.2. Landslide Detection (Object-Based)

Deep learning has revolutionized the field of remote sensing, particularly in challenging tasks such as landslide detection. This section delves into landslide detection using object-based deep learning approaches, e.g., a detection framework. Generally, a detection framework includes two-stage, one-stage, anchor free, and transformer-based detectors. A thorough review of the literature shows that most of its application in landslide detection is in the form of primary two-stage [33,34,35] and one-stage detectors [36,37,38], while anchor-free and transformer-based detectors are less common. This could be due to anchor-free detectors [69,70] and transformers [71] being new and not as widely applied. Here, we will explore the latest developments and applications in these technologies for landslide detection.

Two-stage detection networks typically involve a region proposal network (RPN) for generating candidate bounding boxes and then refine and classify these regions in a second stage, leading to potentially higher accuracy but slower inference times. Representative networks include RCNN, Fast RCNN, and Faster R-CNN [33,34,35]. Applied them into landslide detection, Guan et al. (2023) [93] used an improved Faster R-CNN to detect slope failures and landslides in the Huangdao District of Qingdao in China, where they introduced a multi-scale feature enhancement module into Faster R-CNN to enhance the network’s perception of different scales of landslides. The experiments showed that the improved model outperformed the traditional version, with an AP of 90.68%, F1-score of 0.94, recall of 90.68%, and precision of 98.17%. The authors applied it to detect geological hazards of slope failure in Huangdao District, and it only missed two landslides, demonstrating a high detection accuracy. To improve the accuracy of landslide detection in satellite images, Tanatipuknon et al. (2021) [94] combined two object detection models based on Faster R-CNN with a classification decision tree (DT). In detail, the first Faster R-CNN was trained on true color (RGB) images, and the other was trained with grayscale DEMs. The results from both models were employed by a DT to generate bounding boxes around landslide areas. Compared to those using either RGB or DEMs, this integrated approach showed improved performance in various evaluation metrics, demonstrating its effectiveness in landslide detection.

Due to the large difference in the landslide scale and the important characteristics of the landslide’s shallow layer, Zhang et al. (2022) [95] modified the original Faster R-CNN by incorporating the Feature Pyramid Network (FPN) with ResNet50. This integration aimed to address the challenges posed by the varying scales and shallow layer characteristics of landslides. Validated with a public landslide inventory from Bijie City, Guizhou, China, the modified model demonstrated a significant improvement in accuracy (87.10%) compared to that of VGG16 (70.20%), which indicates a promising method for future research. The InSAR technique has immense potential for detecting active landslides by its unique advantage in measuring subtle ground deformation. However, the operational application of InSAR for landslide detection in a wide area is still hindered by the high labor and time costs for the visual interpretation and manual editing of the InSAR-derived velocity maps. Aiming at this obstacle, Cai et al. (2023) [96] developed a method combining InSAR and a CNN, specifically using an improved Faster RCNN with attended ResNet-34 and FPN. Applied in Guizhou province, China, this approach successfully identified over 1600 active landslides from a substantial number of Sentinel-1 and PALSAR-2 images, demonstrating high precision and recall in various test areas. This method shows significant promise in efficiently updating landslide inventories and aiding in disaster prevention. To quickly detect landslide hazards for a timely emergency rescue, Yang et al. (2022) [97] proposed an enhanced Faster R-CNN for landslide detection. Specifically, this method has several aims, including image quality improvement, batch size elimination using group normalization, multiscale feature fusion with a Feature Pyramid Network, and employing a deep residual shrinkage network as the backbone to extract complex spatial features. Experimental results indicate a notable improvement in accuracy and average precision compared to the standard Faster R-CNN and other one-stage models, such as YOLOv4 and SSD, which proves the model’s effectiveness in landslide detection.

Different from two-stage detectors, one-stage detection networks directly predict bounding boxes and class scores in a single step using predefined anchor boxes, which makes them faster, simpler, and suitable for real-time applications. Representative networks include the YOLO series [36,37,38], SSD [68], and RetinaNet [98]. For their application in landslide detection, Fu et al. (2022) [99] presented a novel method for detecting slow-moving landslides from stacked phase-gradient maps, aiming at overcoming the limitations of phase unwrapping errors and atmospheric effects. In detail, they developed a burst-based, phase-gradient stacking algorithm to sum up phase gradients in short-temporal-baseline interferograms, then trained an Attention-YOLOv3 network with manually labeled landslides on the stacked phase gradient maps to achieve a quick and automatic detection. Applying this method to an area of approximately 180,000 km2 in southwestern China, they identified 3366 slow-moving landslides. By comparing these results with optical imagery and previously published landslide data for the region, their method proved to be precise and efficient in automatic detection across large areas. Notably, it unveiled about 10 additional counties with high landslide density, beyond the known high-risk areas, emphasizing the need for increased geohazard attention in these locations. This finding demonstrates the potential of their method for nation-wide slow-moving landslide detection, offering a crucial tool for improved geohazard monitoring and risk management. Regarding the need of an open, large, and widely recognized landslide dataset, Wang et al. (2021) [100] created a landslide dataset through open satellite image data, in which the landslide boundary was marked by professional engineering geologists. Building upon the foundational YOLOv5, they innovatively integrated Adaptively Spatial Feature Fusion (ASFF) and the Convolutional Block Attention Module (CBAM) to enrich the model’s capability to assimilate multi-scale feature information. This enhancement yielded a 1.64% improvement in model performance. Traditional field survey, while reliable for small-scale investigations, proves inadequate for large areas due to its cost and labor intensity. To cope with this limitation, an innovative approach [101] was proposed to detect and monitor these hazards in China’s high-mountain areas. The researchers firstly tested the feasibility of using SBAS-InSAR with Sentinel-1A data for landslide detection in the Yunnan–Myanmar border region. Subsequently, they employed YOLOv3 with Gaofen-2 images for further analysis. Applied in Fugong County, Yunnan Province, results showed that most landslides identified by manual interpretation were detected by SBAS-InSAR as true positives, accounting for 68.75% of the total references. This indicates that the majority of these were active during the study period and posed potential threats to the surrounding areas, underscoring the importance of such advanced detection methods for public and local authority awareness.

Two-stage detectors, though accurate, tend to be slower, whereas one-stage detectors offer faster detection but at a cost to accuracy. To concur this, an improved one-stage detection model [102], YOLO-SA, for use in emergency rescue and evaluation decision-making with high-spatial-resolution remote sensing images on mobile and embedded equipment, was proposed. In this study, two enhancements were presented, including adopting group convolution, ghost bottleneck modules to reduce parameters, and integrating an attention mechanism for improving accuracy. Tested in Qiaojia and Ludian counties, Yunnan, China, YOLO-SA outperformed various advanced models in terms of parameter efficiency, accuracy, and speed, which confirms its effectiveness in potential landslide detection in near-real time. Another challenge poses itself that in some scenarios, remote-sensing satellites may not be able to timeously obtain the image from the disaster areas due to the orbital cycle and weather impacts. Addressing this limitation, Yang et al. (2022) [103] utilized UAV images from the Nepal earthquake-affected area of Zhangmu Port and the transfer learning strategy to use their detection model, which aims to overcome the shortage of sufficient training data. Comparative analysis revealed that this approach surpassed the detection performance of the SSD model, offering an effective solution for quick and accurate landslide detection.

No matter whether a two-stage or one-stage detector, each actually possess unique strengths and limitations in the context of landslide detection. Researchers have established a comparative analysis of multiple classical detectors, providing valuable insights into their efficacy in the same task. For example, Wu et al. (2022) [104] tested representative algorithms like Faster R-CNN, YOLOv3, and SSD on a substantial landslide remote sensing dataset. Technical advantages and functional characteristics of each algorithm were thoroughly compared and analyzed. Their results demonstrated that while the Faster R-CNN algorithm offers higher accuracy, YOLOv3 and SSD are more suited for timely monitoring and practical applications due to their faster detection speeds. Similarly, Zhang et al. (2022) [105] constructed a comprehensive dataset using Google Earth imagery. They applied and evaluated the performance of various detectors including YOLOV5, Faster RCNN, EfficientNet, SSD, and a modified YOLOV5 embedded with CBAM and Ghost modules. The findings indicated that SSD was highly effective in detecting scenarios where only one landslide existed within an image. On the other hand, the modified YOLOV5 demonstrated proficiency in identifying scenarios with multiple landslide events in an image, which strikes a balance between detection capabilities and model complexity. Detecting loess landslides has always been challenging due to their similarity to the surrounding environment in optical images. To tackle this, Ju et al. (2022) [106] employed different deep learning methods for detecting loess landslides from Google Earth images. They established a database with 6111 interpreted landslides from three areas in Gansu Province, China. They evaluated three detection networks, including RetinaNet, YOLO v3, and Mask R-CNN. Their results showed that the detection accuracy is positively correlated to landslide areas; namely, larger landslides can be identified more accurately. Among the tested models, the Mask R-CNN demonstrated the highest accuracy, with an F1-score of 55.31%, which proves the viability of object detection methods in automated loess landslide detection from satellite imagery. A summary of case studies of landslide detection is shown in Table 5.

Table 5.

Summary of case studies of landslide detection.

4.3. Landslide Mapping (Pixel-Level)

Landslide mapping is a critical task for disaster management and mitigation. The complexity and variability of landslides make this task challenging for traditional methods. By treating landslide mapping as a pixel-level segmentation task, deep learning, with its advanced segmentation frameworks, offers a powerful solution. These frameworks can precisely and quickly delineate landslide-prone areas, improving the capability of emergent responses [107]. Here, we explore the latest achievement of landslide mapping using deep learning segmentation frameworks, highlighting how they transform our ability to identify and manage these natural hazards.