A Hybrid Index for Monitoring Burned Vegetation by Combining Image Texture Features with Vegetation Indices

Abstract

1. Introduction

2. Dataset and Preprocessing

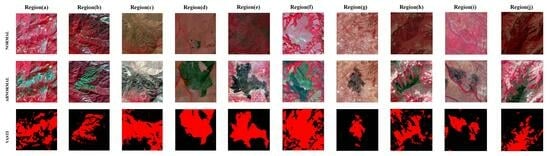

2.1. Abnormal Vegetation Satellite Dataset

2.2. Data Processing

3. Methodology

3.1. Selection of Texture Features

3.1.1. GLCM and Parameter Settings

3.1.2. Separability Analysis of Texture Features

3.2. Vegetation Indices Selection

3.2.1. Vegetation Indices Analysis

3.2.2. Separability Analysis of VIs

3.3. Vegetation Anomaly Spectral Texture Index

3.4. Validation Methods

4. Evaluation of the VASTI

4.1. Mapping of the VASTI and Other Indices

4.2. Validation of the VASTI

4.2.1. Comparison with the Components of VASTI

4.2.2. Comparison with Other Spectral and Textural Features

5. Discussion

5.1. Improvement of Combining VIs and Texture Features

5.2. Uncertainties of the VASTI

5.3. Merits and Limitations of the VASTI

6. Conclusions

- (1) We systematically investigated how different spatial texture features and spectral features respond to vegetation fires, tested how well each separates anomalous vegetation from its surroundings, and introduced a novel explicit index (VASTI) that incorporates both texture and spectral information. Unlike conventional spectral VIs, the VASTI accounts for the spatial relationship between anomalous pixels and their surrounding areas.

- (2) In most of the study areas, the user's accuracy (UA), producer's accuracy (PA), and kappa coefficient of the VASTI were 5–10% higher than those of the GEMI and EVI. In some regions, the recognition accuracy of the VASTI was 13–25% higher than that of a single texture feature.
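The UA, PA, and kappa coefficient cited in the conclusions are all derived from a confusion matrix. The following is a minimal sketch of that arithmetic, not the authors' validation code. The function name `accuracy_metrics`, the matrix layout (rows are mapped classes, columns are reference classes), and the pixel counts are assumptions chosen for illustration.

```python
import numpy as np

def accuracy_metrics(confusion, cls=1):
    """Return (UA, PA, kappa) for class `cls`.

    Assumed layout: rows are mapped (predicted) classes,
    columns are reference (ground-truth) classes.
    """
    cm = np.asarray(confusion, dtype=float)
    total = cm.sum()
    row_tot = cm.sum(axis=1)   # pixels mapped to each class
    col_tot = cm.sum(axis=0)   # reference pixels in each class

    ua = cm[cls, cls] / row_tot[cls]   # user's accuracy (1 - commission error)
    pa = cm[cls, cls] / col_tot[cls]   # producer's accuracy (1 - omission error)

    p_observed = np.trace(cm) / total                 # overall agreement
    p_chance = (row_tot * col_tot).sum() / total**2   # agreement expected by chance
    kappa = (p_observed - p_chance) / (1.0 - p_chance)
    return ua, pa, kappa

# Hypothetical counts: rows = mapped [unburned, burned], cols = reference.
cm = np.array([[80, 10],
               [5, 105]])
ua, pa, kappa = accuracy_metrics(cm, cls=1)
print(f"UA={ua:.3f}  PA={pa:.3f}  kappa={kappa:.3f}")
```

Because kappa discounts agreement expected by chance, it is lower than the overall accuracy whenever the classes are unevenly distributed, which is why it is usually reported alongside UA and PA rather than instead of them.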

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Site Name | Longitude | Latitude | Land Cover Type | Number of Samples | Sample Size |
|---|---|---|---|---|---|
| El Dorado | 120.8235°W | 38.9682°N | Mixed forest | 163 | 200 × 200 |
| Los Gatos | 121.8273°W | 37.1111°N | Evergreen needleleaf forest | 52 | 200 × 200 |
| Inyo | 117.9242°W | 36.0431°N | Evergreen needleleaf forest | 147 | 200 × 200 |
| Apure | 69.2507°W | 7.2310°N | Grasslands | 186 | 200 × 200 |
| Barh Azoum | 21.3424°E | 11.5677°N | Savannas | 223 | 200 × 200 |
| Kahemba | 18.8686°E | 7.2313°S | Woody savannas | 217 | 200 × 200 |
| Dirico | 21.2766°E | 17.3446°S | Savannas | 267 | 200 × 200 |
| Mpika | 31.8310°E | 11.5675°S | Woody savannas | 236 | 200 × 200 |
| Kusti | 32.0963°E | 11.5679°N | Croplands | 252 | 200 × 200 |
| Liangshan | 100.7287°E | 27.4312°N | Evergreen needleleaf forest | 29 | 200 × 200 |

| Texture | Formula | Meaning |
|---|---|---|
| Mean | | Reflects the regularity of the texture. The more regular the texture, the larger the mean; the more cluttered the texture, the smaller the mean. |
| Standard Deviation | | Reflects the deviation between the mean and the value of each pixel in the image. |
| Contrast | | Reflects the depth of the textural grooves and the sharpness of the image. The deeper the grooves, the more distinct the visual effect. |
| Dissimilarity | | Similar to contrast: a higher local contrast indicates a higher dissimilarity. |
| Homogeneity | | Reflects the uniformity of the local gray levels of the image. The more uniform the gray levels, the larger the homogeneity value. |
| Energy | | Reflects the uniformity of the gray-level distribution of the image. The more uniform the distribution, the larger the energy value. |
| Correlation | | Reflects the correlation of local gray levels in the image. |
| Autocorrelation | | Reflects the consistency of the image texture. |
| Entropy | | Reflects the randomness of the image texture. Entropy reaches its maximum when all values in the co-occurrence matrix are equal and is smaller when the values are unequal. |
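The formulas for these textures did not survive in the table, so the following sketch uses the commonly cited gray-level co-occurrence matrix (GLCM) definitions rather than the authors' exact expressions. The function names `glcm` and `glcm_features`, the single pixel offset, and the toy images are assumptions for illustration. Energy is computed here as the sum of squared matrix entries (some packages report its square root instead), and autocorrelation as the sum of `i * j * p(i, j)`.

```python
import numpy as np

def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalised co-occurrence matrix for one pixel offset (dx, dy)."""
    img = np.asarray(image).astype(int)
    rows, cols = img.shape
    counts = np.zeros((levels, levels), dtype=float)
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[img[r, c], img[r2, c2]] += 1
    counts += counts.T            # count each pair in both directions
    return counts / counts.sum()  # convert counts to probabilities

def glcm_features(p):
    """Common GLCM statistics (assumed textbook definitions)."""
    i, j = np.indices(p.shape)
    mean = (i * p).sum()                   # equal for rows and columns (symmetric p)
    var = ((i - mean) ** 2 * p).sum()
    nonzero = p[p > 0]                     # skip zeros so log() is defined
    if var > 0:
        correlation = ((i - mean) * (j - mean) * p).sum() / var
    else:
        correlation = 1.0                  # convention for a constant image
    return {
        "mean": mean,
        "std": np.sqrt(var),
        "contrast": ((i - j) ** 2 * p).sum(),
        "dissimilarity": (np.abs(i - j) * p).sum(),
        "homogeneity": (p / (1.0 + (i - j) ** 2)).sum(),
        "energy": (p ** 2).sum(),          # angular second moment
        "correlation": correlation,
        "autocorrelation": (i * j * p).sum(),
        "entropy": -(nonzero * np.log(nonzero)).sum(),
    }

# Toy 2-level images: a constant patch and a checkerboard patch.
flat = glcm_features(glcm([[0, 0], [0, 0]], levels=2))
checker = glcm_features(glcm([[0, 1], [1, 0]], levels=2))
print(flat["contrast"], flat["energy"], flat["entropy"])
print(checker["contrast"], checker["homogeneity"], checker["entropy"])
```

The two toy patches illustrate the trends described in the table: the constant patch has zero contrast and entropy with maximal energy and homogeneity, while the checkerboard has higher contrast and entropy and lower energy and homogeneity.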

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fan, J.; Yao, Y.; Tang, Q.; Zhang, X.; Xu, J.; Yu, R.; Liu, L.; Xie, Z.; Ning, J.; Zhang, L. A Hybrid Index for Monitoring Burned Vegetation by Combining Image Texture Features with Vegetation Indices. Remote Sens. 2024, 16, 1539. https://doi.org/10.3390/rs16091539
