Unmanned Aerial Vehicles for Real-Time Vegetation Monitoring in Antarctica: A Review

Abstract

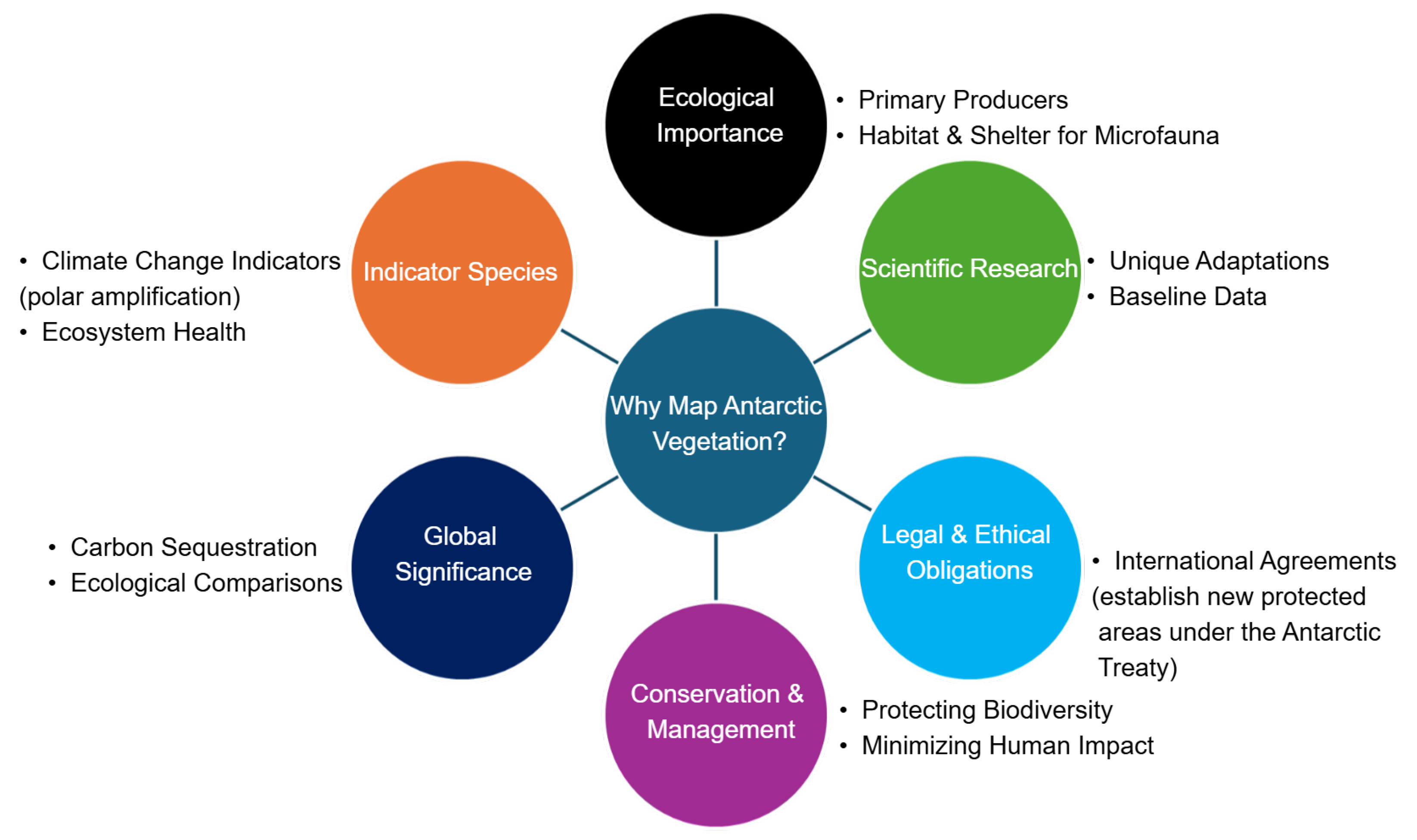

1. Introduction

Relevant Review Papers and Review Objectives

2. Bibliographic Analysis

3. Results and Discussion

- Section 3.1 focuses on UAV mapping of vegetation in polar regions and is subdivided as follows:
  - Section 3.1.1: Analysis of study locations for polar vegetation mapping.
  - Section 3.1.2: Examination of Antarctic UAV flight guidelines, including key documents.
  - Section 3.1.3: Review of the UAV models employed in polar research.
  - Section 3.1.4: Discussion of the sensors used in these studies.
  - Section 3.1.5: Comparison of image processing algorithms for detecting and segmenting vegetation.
  - Section 3.1.6: Compilation of the software used across these studies.
- Section 3.2 covers semantic segmentation and is structured as follows:
  - Section 3.2.1: Exploration of real-time semantic segmentation, focusing on its applications in vegetation mapping.
  - Section 3.2.2: Analysis of the vegetation indices used in Antarctic studies.
- Section 3.3 discusses the role of adaptive path planning in UAV mapping studies.
- Section 3.4 considers potential simulation software for modelling a scouting Antarctic UAV.
- Section 3.5 addresses the limitations of the reviewed UAV studies of Antarctic vegetation mapping and provides recommendations based on them.

3.1. Mapping of Vegetation in Polar Regions Using UAVs

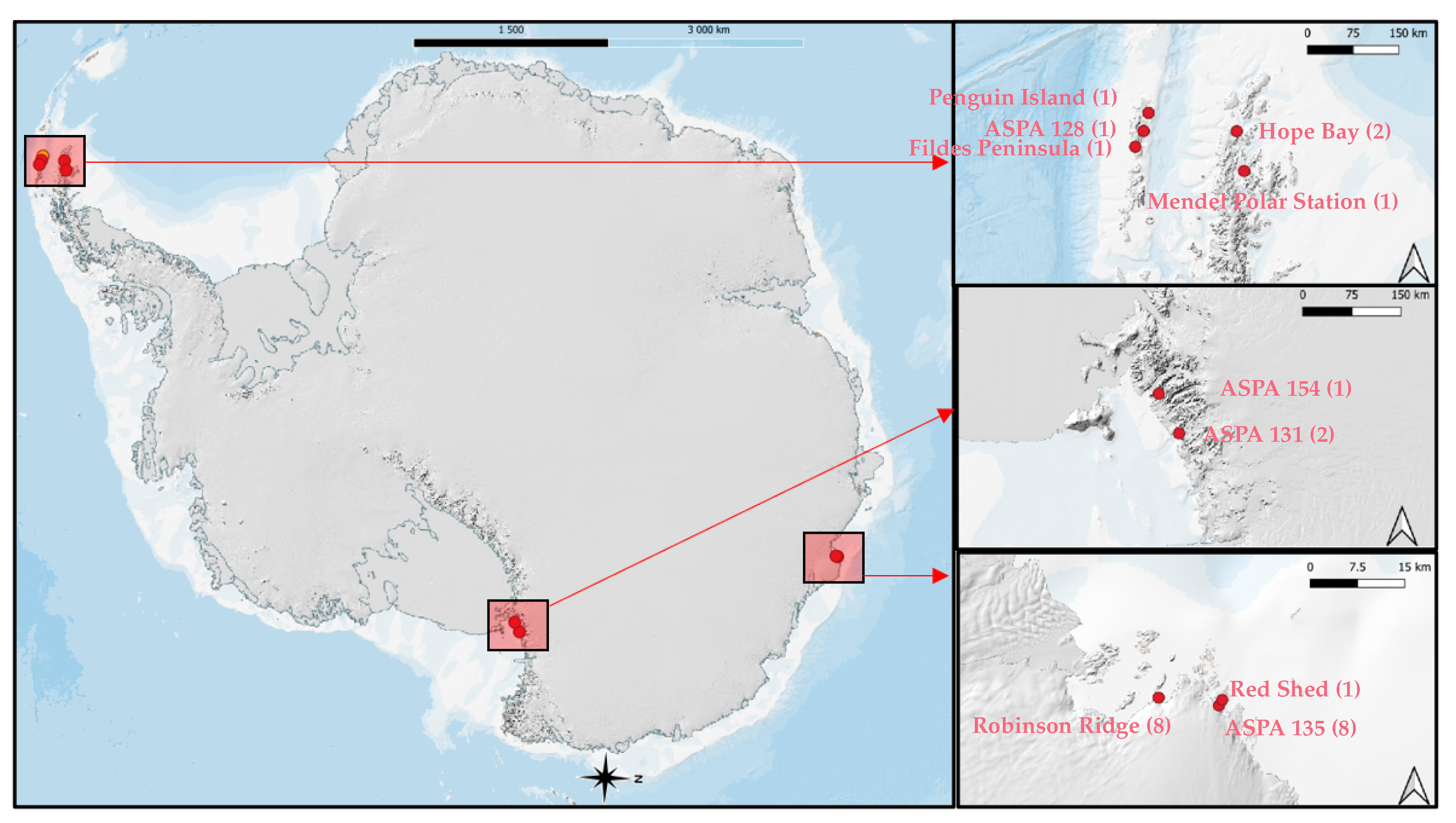

3.1.1. Study Locations

3.1.2. Flight Guidelines

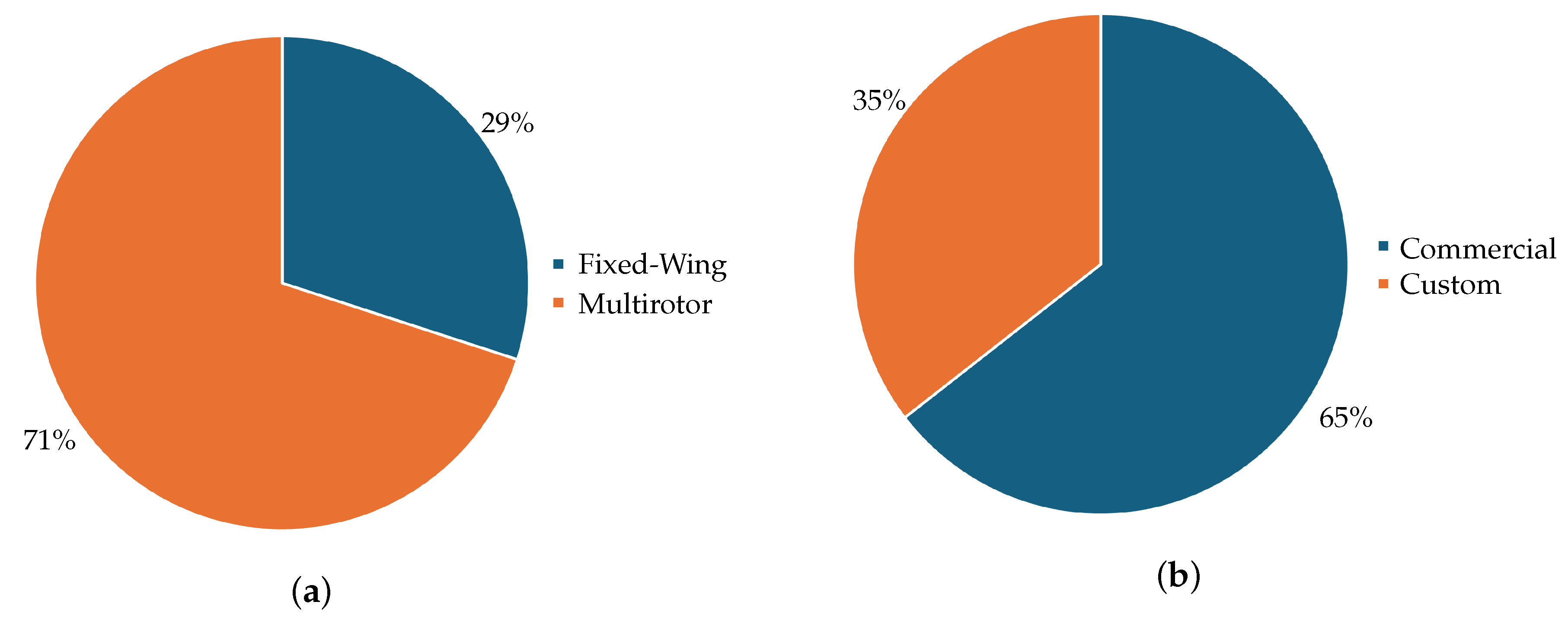

3.1.3. UAV Types

3.1.4. Sensors

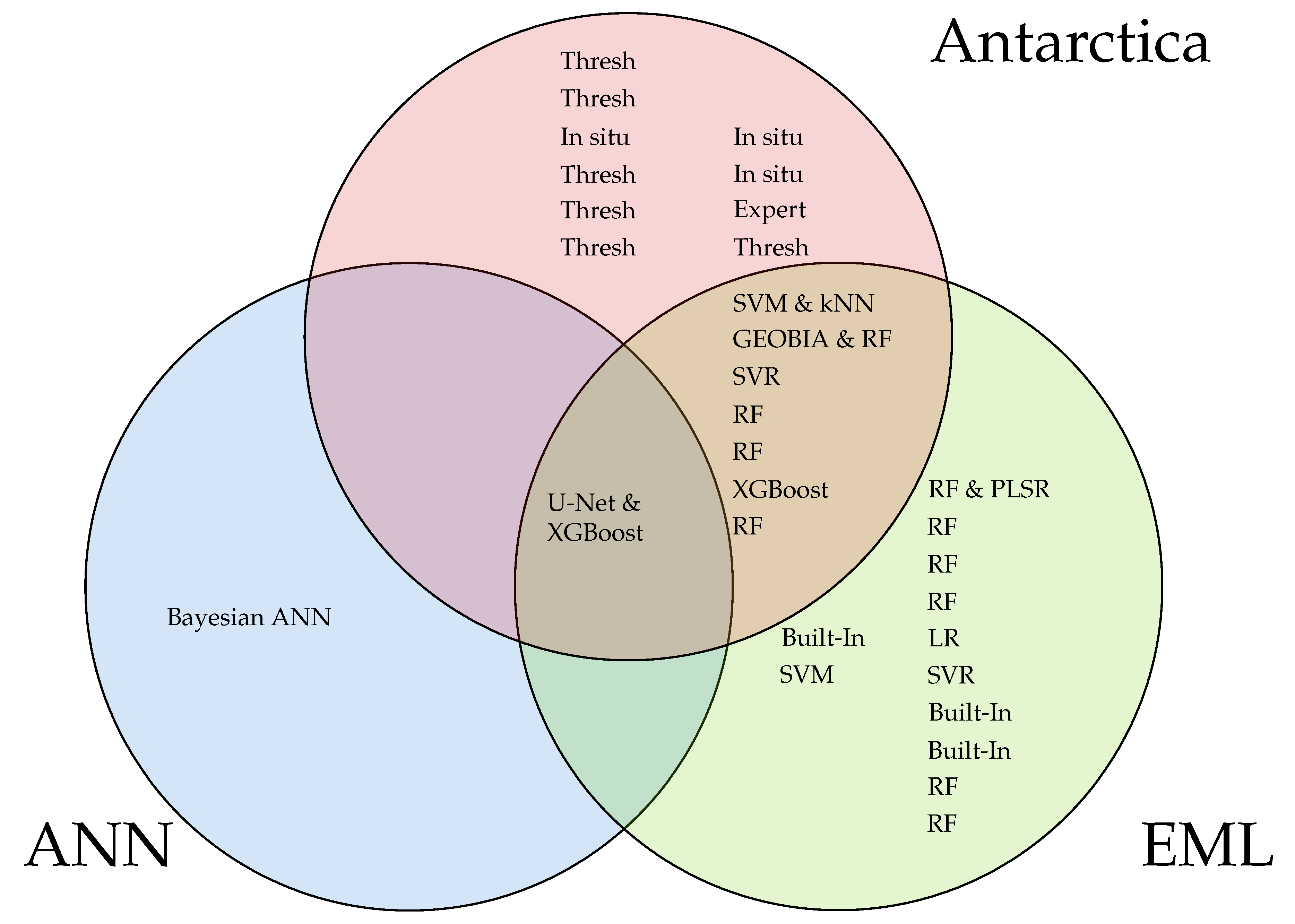

3.1.5. Mapping Methods

3.1.6. Software Used in Polar Vegetation Studies

3.2. Semantic Segmentation

3.2.1. Real-Time Semantic Segmentation

3.2.2. Vegetation Indices for Enhancing Vegetation Segmentation in Antarctica
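Several of the indices listed under Abbreviations have simple per-pixel closed forms. The sketch below implements a subset (NDVI, GNDVI, NDRE, NGRDI, ExG, GLI, RGBVI and MSAVI) using their standard published definitions. It assumes co-registered, calibrated reflectance bands and is not taken from any of the reviewed studies; the function names and the `EPS` guard constant are illustrative choices. Because only arithmetic operators are used, the same functions accept single values or whole NumPy band arrays.

```python
# Per-pixel vegetation indices using their standard published formulas.
# Inputs are assumed to be calibrated reflectances (0-1). Only arithmetic
# operators are used, so plain floats and NumPy arrays both work.

EPS = 1e-9  # small constant that avoids division by zero on all-dark pixels


def normalised_difference(a, b):
    """Generic (a - b) / (a + b), the form shared by NDVI, GNDVI, NDRE and NGRDI."""
    return (a - b) / (a + b + EPS)


def ndvi(nir, red):
    return normalised_difference(nir, red)


def gndvi(nir, green):
    return normalised_difference(nir, green)


def ndre(nir, red_edge):
    return normalised_difference(nir, red_edge)


def ngrdi(green, red):
    return normalised_difference(green, red)


def exg(red, green, blue):
    """Excess Green, 2g - r - b, on chromatic (sum-normalised) coordinates."""
    return (2 * green - red - blue) / (red + green + blue + EPS)


def gli(red, green, blue):
    """Green Leaf Index: (2G - R - B) / (2G + R + B)."""
    return (2 * green - red - blue) / (2 * green + red + blue + EPS)


def rgbvi(red, green, blue):
    """RGB Vegetation Index: (G^2 - B*R) / (G^2 + B*R)."""
    return (green ** 2 - blue * red) / (green ** 2 + blue * red + EPS)


def msavi(nir, red):
    """Modified Soil Adjusted Vegetation Index (closed-form MSAVI2)."""
    # The radicand equals (2*NIR - 1)^2 + 8*RED, so it is non-negative for RED >= 0.
    return (2 * nir + 1 - ((2 * nir + 1) ** 2 - 8 * (nir - red)) ** 0.5) / 2


if __name__ == "__main__":
    # Hypothetical moss-like pixel: high NIR, low red reflectance.
    print(round(ndvi(0.45, 0.05), 3))  # 0.8
```

The NIR-based indices (NDVI, GNDVI, NDRE, MSAVI) require a multispectral sensor, whereas ExG, GLI, NGRDI and RGBVI can be computed from RGB imagery alone.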

3.3. Adaptive Path Planning

3.4. Simulation

3.5. Challenges and Possible Solutions for Antarctic Vegetation Mapping

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACBR | Antarctic Conservation Biogeographic Regions |
| AGL | Above Ground Level |
| AIoT | Artificial Intelligence of Things |
| ANN | Artificial Neural Network |
| ASPA | Antarctic Specially Protected Area |
| AUD | Australian Dollar |
| CNN | Convolutional Neural Network |
| DEM | Digital Elevation Model |
| DL | Deep Learning |
| DSM | Digital Surface Model |
| EML | Established Machine Learning |
| EVI | Enhanced Vegetation Index |
| ExG | Excess Green Index |
| FCN | Fully Convolutional Network |
| FW | Fixed-Wing |
| GEOBIA | Geographic Object-Based Image Analysis |
| GLI | Green Leaf Index |
| GNDVI | Green Normalised Difference Vegetation Index |
| GNSS | Global Navigation Satellite System |
| GP | Gaussian Process |
| GSD | Ground Sampling Distance |
| GT | Ground Truth |
| HSI | Hyperspectral Imagery |
| KNN | k-Nearest Neighbours |
| LR | Logistic Regression |
| mIoU | Mean Intersection over Union |
| MLC | Maximum Likelihood Classification |
| MSAVI | Modified Soil Adjusted Vegetation Index |
| MSI | Multispectral Imagery |
| NDLI | Normalised Difference Lichen Index |
| NDRE | Normalised Difference Red Edge Index |
| NDVI | Normalised Difference Vegetation Index |
| NGRDI | Normalised Green–Red Difference Index |
| NIR | Near-Infrared |
| PFT | Plant Functional Type |
| PLSR | Partial Least-Squares Regression |
| POMDP | Partially Observable Markov Decision Process |
| RE | Red Edge |
| RF | Random Forest |
| RGB | Red, Green, and Blue |
| RGBVI | Red Green Blue Vegetation Index |
| RIO | Research Integrity Online |
| ROC AUC | Receiver Operating Characteristic Area Under the Curve |
| ROI | Region of Interest |
| ROS | Robot Operating System |
| RPAS | Remotely Piloted Aircraft System |
| RTK | Real-Time Kinematic |
| SAEF | Securing Antarctica’s Environmental Future |
| SAR | Search and Rescue |
| SCAR | Scientific Committee on Antarctic Research |
| SMMI | Soil Moisture Monitoring Index |
| SRI | Simple Ratio Index |
| SVM | Support Vector Machine |
| SVR | Support Vector Regression |
| TIR | Thermal Infrared |
| UAV | Unmanned Aerial Vehicle |
| VARI | Visible Atmospherically Resistant Index |
| VI | Vegetation Index |
| ViT | Vision Transformer |
| VNIR | Visible and Near-Infrared |
| VTOL | Vertical Take-Off and Landing |
| XGBoost | Extreme Gradient Boosting |

References

- Secretariat of the Antarctic Treaty. Final Acts of the Antarctic Treaty Consultative Meeting (ATCM) and the Committee for Environmental Protection (CEP). Available online: https://www.ats.aq/e/faflo.html (accessed on 1 October 2024).

- Hopkins, D.W.; Newsham, K.K.; Dungait, J.A. Primary production and links to carbon cycling in Antarctic soils. In Antarctic Terrestrial Microbiology: Physical and Biological Properties of Antarctic Soils; Springer: Berlin/Heidelberg, Germany, 2014; pp. 233–248.

- Jawak, S.D.; Luis, A.J.; Fretwell, P.T.; Convey, P.; Durairajan, U.A. Semiautomated detection and mapping of vegetation distribution in the Antarctic environment using spatial-spectral characteristics of WorldView-2 imagery. Remote Sens. 2019, 11, 1909.

- Folgar-Cameán, Y.; Barták, M. Evaluation of photosynthetic processes in Antarctic mosses and lichens exposed to controlled rate cooling: Species-specific responses. Czech Polar Rep. 2019, 9, 114–124.

- Xie, A.; Zhu, J.; Kang, S.; Qin, X.; Xu, B.; Wang, Y. Polar amplification comparison among Earth’s three poles under different socioeconomic scenarios from CMIP6 surface air temperature. Sci. Rep. 2022, 12, 16548.

- Bollard, B.; Doshi, A.; Gilbert, N.; Poirot, C.; Gillman, L. Drone Technology for Monitoring Protected Areas in Remote and Fragile Environments. Drones 2022, 6, 42.

- Ren, G.; Wang, J.; Lu, Y.; Wu, P.; Lu, X.; Chen, C.; Ma, Y. Monitoring changes to Arctic vegetation and glaciers at Ny-Ålesund, Svalbard, based on time series remote sensing. Remote Sens. 2021, 13, 3845.

- Tong, X.Y.; Lu, Q.; Xia, G.S.; Zhang, L. Large-scale land cover classification in Gaofen-2 satellite imagery. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 3599–3602.

- Boon, M.; Drijfhout, A.; Tesfamichael, S. Comparison of a fixed-wing and multi-rotor UAV for environmental mapping applications: A case study. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 47–54.

- Hann, R.; Altstädter, B.; Betlem, P.; Deja, K.; Dragańska-Deja, K.; Ewertowski, M.; Hartvich, F.; Jonassen, M.; Lampert, A.; Laska, M.; et al. Scientific Applications of Unmanned Vehicles in Svalbard (UAV Svalbard); Technical Report; SIOS: Longyearbyen, Svalbard and Jan Mayen, 2021.

- Hann, R.; Betlem, P.; Deja, K.; Hartvich, F.; Jonassen, M.; Lampert, A.; Laska, M.; Sobota, I.; Storvold, R.; Zagórski, P. Update to scientific applications of unmanned vehicles in Svalbard (UAV Svalbard Update). In SESS Report 2021—The State of Environmental Science in Svalbard—An Annual Report; Svalbard Integrated Arctic Earth Observing System: Longyearbyen, Svalbard, 2022; Volume 4, pp. 74–86.

- Sun, X.; Wu, W.; Li, X.; Xu, X.; Li, J. Vegetation Abundance and Health Mapping Over Southwestern Antarctica Based on WorldView-2 Data and a Modified Spectral Mixture Analysis. Remote Sens. 2021, 13, 166.

- Sotille, M.E.; Bremer, U.F.; Vieira, G.; Velho, L.F.; Petsch, C.; Simões, J.C. Evaluation of UAV and satellite-derived NDVI to map maritime Antarctic vegetation. Appl. Geogr. 2020, 125, 102322.

- Casanovas, P.; Black, M.; Fretwell, P.; Convey, P. Mapping lichen distribution on the Antarctic Peninsula using remote sensing, lichen spectra and photographic documentation by citizen scientists. Polar Res. 2015, 34, 25633.

- Raniga, D.; Amarasingam, N.; Sandino, J.; Doshi, A.; Barthelemy, J.; Randall, K.; Robinson, S.A.; Gonzalez, F.; Bollard, B. Monitoring of Antarctica’s Fragile Vegetation Using Drone-Based Remote Sensing, Multispectral Imagery and AI. Sensors 2024, 24, 1063.

- Sandino, J.; Caccetta, P.A.; Sanderson, C.; Maire, F.; Gonzalez, F. Reducing object detection uncertainty from RGB and thermal data for UAV outdoor surveillance. In Proceedings of the 2022 IEEE Aerospace Conference (AERO), Big Sky, MT, USA, 5–12 March 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–16.

- Mora, J.D.S. Autonomous Decision-Making for UAVs Operating Under Environmental and Object Detection Uncertainty. Ph.D. Thesis, Queensland University of Technology, Brisbane City, Australia, 2022.

- Galvez-Serna, J.; Mandel, N.; Sandino, J.; Vanegas, F.; Ly, N.; Flannery, D.T.; Gonzalez, F. Real-time Segmentation of Desiccation Cracks onboard UAVs for Planetary Exploration. In Proceedings of the 2022 IEEE Aerospace Conference (AERO), Big Sky, MT, USA, 5–12 March 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–12.

- Li, Y.; Qiao, G.; Popov, S.; Cui, X.; Florinsky, I.V.; Yuan, X.; Wang, L. Unmanned Aerial Vehicle Remote Sensing for Antarctic Research: A review of progress, current applications, and future use cases. IEEE Geosci. Remote Sens. Mag. 2023, 11, 73–93.

- Pina, P.; Vieira, G. UAVs for science in Antarctica. Remote Sens. 2022, 14, 1610.

- Holder, C.J.; Shafique, M. On efficient real-time semantic segmentation: A survey. arXiv 2022, arXiv:2206.08605.

- Broni-Bediako, C.; Xia, J.; Yokoya, N. Real-Time Semantic Segmentation: A brief survey and comparative study in remote sensing. IEEE Geosci. Remote Sens. Mag. 2023, 11, 94–124.

- Xia, J.; Yokoya, N.; Adriano, B.; Broni-Bediako, C. OpenEarthMap: A Benchmark Dataset for Global High-Resolution Land Cover Mapping. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 6254–6264.

- Cheng, J.; Deng, C.; Su, Y.; An, Z.; Wang, Q. Methods and datasets on semantic segmentation for Unmanned Aerial Vehicle remote sensing images: A review. ISPRS J. Photogramm. Remote Sens. 2024, 211, 1–34.

- Yang, M.; Wang, Z.; Liang, L.; An, Q. Performance evaluation of semantic segmentation models: A cross meta-frontier DEA approach. J. Oper. Res. Soc. 2024, 75, 2283–2297.

- Arafat, M.Y.; Alam, M.M.; Moh, S. Vision-based navigation techniques for unmanned aerial vehicles: Review and challenges. Drones 2023, 7, 89.

- Jones, M.R.; Djahel, S.; Welsh, K. Path-planning for unmanned aerial vehicles with environment complexity considerations: A survey. ACM Comput. Surv. 2023, 55, 1–39.

- Lucieer, A.; Robinson, S.A.; Turner, D. Unmanned aerial vehicle (UAV) remote sensing for hyperspatial terrain mapping of Antarctic moss beds based on structure from motion (SfM) point clouds. In Proceedings of the 34th International Symposium on Remote Sensing of Environment, Sydney, Australia, 10–15 April 2011; p. 1.

- Lucieer, A.; Robinson, S.; Turner, D.; Harwin, S.; Kelcey, J. Using a Micro-UAV for Ultra-High Resolution Multi-Sensor Observations of Antarctic Moss Beds. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, XXII ISPRS Congress, Melbourne, Australia, 25 August–1 September 2012; Volume XXXIX-B1, pp. 429–433.

- Lucieer, A.; Turner, D.; King, D.H.; Robinson, S.A. Using an Unmanned Aerial Vehicle (UAV) to capture micro-topography of Antarctic moss beds. Int. J. Appl. Earth Obs. Geoinf. 2014, 27, 53–62.

- Turner, D.; Lucieer, A.; Malenovský, Z.; King, D.H.; Robinson, S.A. Spatial Co-Registration of Ultra-High Resolution Visible, Multispectral and Thermal Images Acquired with a Micro-UAV over Antarctic Moss Beds. Remote Sens. 2014, 6, 4003–4024.

- Bollard-Breen, B.; Brooks, J.D.; Jones, M.R.L.; Robertson, J.; Betschart, S.; Kung, O.; Craig Cary, S.; Lee, C.K.; Pointing, S.B. Application of an unmanned aerial vehicle in spatial mapping of terrestrial biology and human disturbance in the McMurdo Dry Valleys, East Antarctica. Polar Biol. 2015, 38, 573–578.

- Malenovský, Z.; Lucieer, A.; King, D.H.; Turnbull, J.D.; Robinson, S.A. Unmanned aircraft system advances health mapping of fragile polar vegetation. Methods Ecol. Evol. 2017, 8, 1842–1857.

- Turner, D.; Lucieer, A.; Malenovský, Z.; King, D.; Robinson, S.A. Assessment of Antarctic moss health from multi-sensor UAS imagery with Random Forest Modelling. Int. J. Appl. Earth Obs. Geoinf. 2018, 68, 168–179.

- Zmarz, A.; Rodzewicz, M.; Dąbski, M.; Karsznia, I.; Korczak-Abshire, M.; Chwedorzewska, K.J. Application of UAV BVLOS remote sensing data for multi-faceted analysis of Antarctic ecosystem. Remote Sens. Environ. 2018, 217, 375–388.

- Miranda, V.; Pina, P.; Heleno, S.; Vieira, G.; Mora, C.; Schaefer, C.E.G.R. Monitoring recent changes of vegetation in Fildes Peninsula (King George Island, Antarctica) through satellite imagery guided by UAV surveys. Sci. Total Environ. 2020, 704, 135295.

- Turner, D.J.; Malenovský, Z.; Lucieer, A.; Turnbull, J.D.; Robinson, S.A. Optimizing spectral and spatial resolutions of unmanned aerial system imaging sensors for monitoring Antarctic vegetation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3813–3825.

- Sotille, M.E.; Bremer, U.F.; Vieira, G.; Velho, L.F.; Petsch, C.; Auger, J.D.; Simões, J.C. UAV-based classification of maritime Antarctic vegetation types using GEOBIA and random forest. Ecol. Inform. 2022, 71, 101768.

- Váczi, P.; Barták, M. Multispectral aerial monitoring of a patchy vegetation oasis composed of different vegetation classes. UAV-based study exploiting spectral reflectance indices. Czech Polar Rep. 2022, 12, 131–142.

- Sandino, J.; Bollard, B.; Doshi, A.; Randall, K.; Barthelemy, J.; Robinson, S.A.; Gonzalez, F. A green fingerprint of Antarctica: Drones, hyperspectral imaging, and machine learning for moss and lichen classification. Remote Sens. 2023, 15, 5658.

- Zmarz, A.; Karlsen, S.R.; Kycko, M.; Korczak-Abshire, M.; Gołębiowska, I.; Karsznia, I.; Chwedorzewska, K. BVLOS UAV missions for vegetation mapping in maritime Antarctic. Front. Environ. Sci. 2023, 11, 1154115.

- Turner, D.; Cimoli, E.; Lucieer, A.; Haynes, R.S.; Randall, K.; Waterman, M.J.; Lucieer, V.; Robinson, S.A. Mapping water content in drying Antarctic moss communities using UAS-borne SWIR imaging spectroscopy. Remote Sens. Ecol. Conserv. 2024, 10, 296–311.

- Fraser, R.H.; Olthof, I.; Lantz, T.C.; Schmitt, C. UAV photogrammetry for mapping vegetation in the low-Arctic. Arct. Sci. 2016, 2, 79–102.

- Palace, M.; Herrick, C.; DelGreco, J.; Finnell, D.; Garnello, A.J.; McCalley, C.; McArthur, K.; Sullivan, F.; Varner, R.K. Determining Subarctic Peatland Vegetation Using an Unmanned Aerial System (UAS). Remote Sens. 2018, 10, 1498.

- Meng, R.; Yang, D.; McMahon, A.; Hantson, W.; Hayes, D.; Breen, A.; Serbin, S. A UAS Platform for Assessing Spectral, Structural, and Thermal Patterns of Arctic Tundra Vegetation. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 9113–9116.

- Yang, D.; Meng, R.; Morrison, B.D.; McMahon, A.; Hantson, W.; Hayes, D.J.; Breen, A.L.; Salmon, V.G.; Serbin, S.P. A Multi-Sensor Unoccupied Aerial System Improves Characterization of Vegetation Composition and Canopy Properties in the Arctic Tundra. Remote Sens. 2020, 12, 2638.

- Thomson, E.R.; Spiegel, M.P.; Althuizen, I.H.J.; Bass, P.; Chen, S.; Chmurzynski, A.; Halbritter, A.H.; Henn, J.J.; Jónsdóttir, I.S.; Klanderud, K.; et al. Multiscale mapping of plant functional groups and plant traits in the High Arctic using field spectroscopy, UAV imagery and Sentinel-2A data. Environ. Res. Lett. 2021, 16, 055006.

- Siewert, M.B.; Olofsson, J. UAV reveals substantial but heterogeneous effects of herbivores on Arctic vegetation. Sci. Rep. 2021, 11, 19468.

- Eischeid, I.; Soininen, E.M.; Assmann, J.J.; Ims, R.A.; Madsen, J.; Pedersen, A.O.; Pirotti, F.; Yoccoz, N.G.; Ravolainen, V.T. Disturbance Mapping in Arctic Tundra Improved by a Planning Workflow for Drone Studies: Advancing Tools for Future Ecosystem Monitoring. Remote Sens. 2021, 13, 4466.

- Orndahl, K.M.; Ehlers, L.P.W.; Herriges, J.D.; Pernick, R.E.; Hebblewhite, M.; Goetz, S.J. Mapping tundra ecosystem plant functional type cover, height and aboveground biomass in Alaska and northwest Canada using unmanned aerial vehicles. Arct. Sci. 2022, 8, 1165–1180.

- Yang, D.; Morrison, B.D.; Hantson, W.; McMahon, A.; Baskaran, L.; Hayes, D.J.; Miller, C.E.; Serbin, S.P. Integrating very-high-resolution UAS data and airborne imaging spectroscopy to map the fractional composition of Arctic plant functional types in Western Alaska. Remote Sens. Environ. 2023, 286, 113430.

- Riihimäki, H.; Luoto, M.; Heiskanen, J. Estimating fractional cover of tundra vegetation at multiple scales using unmanned aerial systems and optical satellite data. Remote Sens. Environ. 2019, 224, 119–132.

- Vélez, S.; Martínez-Peña, R.; Castrillo, D. Beyond vegetation: A review unveiling additional insights into agriculture and forestry through the application of vegetation indices. J 2023, 6, 421–436.

- Walshaw, C.V.; Gray, A.; Fretwell, P.T.; Convey, P.; Davey, M.P.; Johnson, J.S.; Colesie, C. A satellite-derived baseline of photosynthetic life across Antarctica. Nat. Geosci. 2024, 17, 755–762.

- Harris, C.M.; Herata, H.; Hertel, F. Environmental guidelines for operation of Remotely Piloted Aircraft Systems (RPAS): Experience from Antarctica. Biol. Conserv. 2019, 236, 521–531.

- SCAR’s Environmental Code of Conduct for Terrestrial Scientific Field Research in Antarctica. Resolution 5 (2018), Annex to ATCM XLI Final Report. 2018. Available online: https://scar.org/~documents/policy/antarctic-treaty/atcm-xl-and-cep-xx-2017-beijing-china/atcm40-att053?layout=default (accessed on 20 October 2024).

- Antarctic Remotely Piloted Aircraft Systems (RPAS) Operator’s Handbook; Prepared by the COMNAP RPAS Working Group, Version 27 November 2017; Secretariat of the Antarctic Treaty: Buenos Aires, Argentina, 2017.

- Garg, P.K. Characterisation of Fixed-Wing Versus Multirotors UAVs/Drones. J. Geomat. 2022, 16, 152–159.

- Shi, W.; Wang, S.; Yue, H.; Wang, D.; Ye, H.; Sun, L.; Sun, J.; Liu, J.; Deng, Z.; Rao, Y.; et al. Identifying tree species in a warm-temperate deciduous forest by combining multi-rotor and fixed-wing unmanned aerial vehicles. Drones 2023, 7, 353.

- Ricaud, P.; Medina, P.; Durand, P.; Attié, J.L.; Bazile, E.; Grigioni, P.; Guasta, M.D.; Pauly, B. In situ VTOL drone-borne observations of temperature and relative humidity over Dome C, Antarctica. Drones 2023, 7, 532.

- Jouvet, G.; Weidmann, Y.; Kneib, M.; Detert, M.; Seguinot, J.; Sakakibara, D.; Sugiyama, S. Short-lived ice speed-up and plume water flow captured by a VTOL UAV give insights into subglacial hydrological system of Bowdoin Glacier. Remote Sens. Environ. 2018, 217, 389–399.

- Sunitha Devi, S.; Maheskumar, R.S. Antarctic weather and climate patterns. In Climate Variability of Southern High Latitude Regions, 1st ed.; CRC Press: Boca Raton, FL, USA, 2022; pp. 47–76.

- Zhou, Y.; Zhao, H.; Liu, Y. An evaluative review of the VTOL technologies for unmanned and manned aerial vehicles. Comput. Commun. 2020, 149, 356–369.

- Neinavaz, E.; Schlerf, M.; Darvishzadeh, R.; Gerhards, M.; Skidmore, A.K. Thermal infrared remote sensing of vegetation: Current status and perspectives. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102415.

- White, M. Monitoring Vegetation Biomass in Continental Antarctica: A Comparison of Hyper- and Multispectral Imagery. Master’s Thesis, The University of Edinburgh, Edinburgh, UK, 2020. Available online: https://era.ed.ac.uk/handle/1842/37639 (accessed on 15 October 2024).

- Podoprigorova, N.S.; Safonov, F.A.; Podoprigorova, S.S.; Tarasov, A.V.; Shikhov, A.N. Recognition of Forest Damage from Sentinel-2 Satellite Images Using U-Net, RandomForest and XGBoost. In Proceedings of the 2024 6th International Youth Conference on Radio Electronics, Electrical and Power Engineering (REEPE), Moscow, Russia, 29 February–2 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Garg, R.; Kumar, A.; Bansal, N.; Prateek, M.; Kumar, S. Semantic segmentation of PolSAR image data using advanced deep learning model. Sci. Rep. 2021, 11, 15365.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like transformer for efficient semantic segmentation of remote sensing urban scene imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 20 September 2018; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; Volume 11045, pp. 3–11.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

- Yuan, W.; Wang, J.; Xu, W. Shift Pooling PSPNet: Rethinking PSPNet for Building Extraction in Remote Sensing Images from Entire Local Feature Pooling. Remote Sens. 2022, 14, 4889.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Wang, Y.; Yang, L.; Liu, X.; Yan, P. An improved semantic segmentation algorithm for high-resolution remote sensing images based on DeepLabv3+. Sci. Rep. 2024, 14, 1–15.

- Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.S.; et al. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6877–6886.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Álvarez, J.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with Transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

- Ball, J.E.; Anderson, D.T.; Chan, C.S., Sr. Comprehensive survey of deep learning in remote sensing: Theories, tools, and challenges for the community. J. Appl. Remote Sens. 2017, 11, 042609.

- Sandino, J.; Galvez–Serna, J.; Mandel, N.; Vanegas, F.; Gonzalez, F. Autonomous Mapping of Desiccation Cracks via a Probabilistic-based Motion Planner Onboard UAVs. In Proceedings of the 2022 IEEE Aerospace Conference (AERO), Big Sky, MT, USA, 5–12 March 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–14.

- Sandino, J.; Maire, F.; Caccetta, P.; Sanderson, C.; Gonzalez, F. Drone-Based Autonomous Motion Planning System for Outdoor Environments under Object Detection Uncertainty. Remote Sens. 2021, 13, 4481.

- Cao, M.; Tang, F.; Ji, P.; Ma, F. Improved Real-Time Semantic Segmentation Network Model for Crop Vision Navigation Line Detection. Front. Plant Sci. 2022, 13, 898131.

- Deng, J.; Zhong, Z.; Huang, H.; Lan, Y.; Han, Y.; Zhang, Y. Lightweight Semantic Segmentation Network for Real-Time Weed Mapping Using Unmanned Aerial Vehicles. Appl. Sci. 2020, 10, 7132.

- Revanasiddappa, B.; Arvind, C.S.; Swamy, S. Real-time early detection of weed plants in pulse crop field using drone with IoT. Int. J. Agric. Technol. 2020, 16, 1227–1242.

- Lan, Y.; Huang, K.; Yang, C.; Lei, L.; Ye, J.; Zhang, J.; Zeng, W.; Zhang, Y.; Deng, J. Real-Time Identification of Rice Weeds by UAV Low-Altitude Remote Sensing Based on Improved Semantic Segmentation Model. Remote Sens. 2021, 13, 4370.

- Menshchikov, A.; Shadrin, D.; Prutyanov, V.; Lopatkin, D.; Sosnin, S.; Tsykunov, E.; Iakovlev, E.; Somov, A. Real-Time Detection of Hogweed: UAV Platform Empowered by Deep Learning. IEEE Trans. Comput. 2021, 70, 1175–1188.

- Bo, W.; Liu, J.; Fan, X.; Tjahjadi, T.; Ye, Q.; Fu, L. BASNet: Burned Area Segmentation Network for Real-Time Detection of Damage Maps in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

- Behera, T.K.; Bakshi, S.; Sa, P.K. A Lightweight Deep Learning Architecture for Vegetation Segmentation using UAV-captured Aerial Images. Sustain. Comput. Inform. Syst. 2023, 37, 100841.

- Sa, I.; Chen, Z.; Popović, M.; Khanna, R.; Liebisch, F.; Nieto, J.; Siegwart, R. weedNet: Dense Semantic Weed Classification Using Multispectral Images and MAV for Smart Farming. IEEE Robot. Autom. Lett. 2018, 3, 588–595.

- Fernando, T.; Fookes, C.; Gammulle, H.; Denman, S.; Sridharan, S. Toward On-Board Panoptic Segmentation of Multispectral Satellite Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5402312.

- Radočaj, D.; Šiljeg, A.; Marinović, R.; Jurišić, M. State of major vegetation indices in precision agriculture studies indexed in Web of science: A review. Agriculture 2023, 13, 707.

- Yang, M.D.; Tseng, H.H.; Hsu, Y.C.; Tsai, H.P. Semantic Segmentation Using Deep Learning with Vegetation Indices for Rice Lodging Identification in Multi-date UAV Visible Images. Remote Sens. 2020, 12, 633.

- Saddik, A.; Latif, R.; El Ouardi, A.; Alghamdi, M.I.; Elhoseny, M. Improving Sustainable Vegetation Indices Processing on Low-Cost Architectures. Sustainability 2022, 14, 2521.

- Kahoush, M.; Yajima, Y.; Kim, S.; Chen, J.; Park, J.; Kangisser, S.; Irizarry, J.; Cho Yong, K. Analysis of Flight Parameters on UAV Semantic Segmentation Performance for Highway Infrastructure Monitoring. J. Comput. Civ. Eng. 2022, 885–893.

- Popović, M.; Vidal-Calleja, T.; Hitz, G.; Chung, J.J.; Sa, I.; Siegwart, R.; Nieto, J. An informative path planning framework for UAV-based terrain monitoring. Auton. Robots 2020, 44, 889–911.

- Stache, F.; Westheider, J.; Magistri, F.; Stachniss, C.; Popović, M. Adaptive path planning for UAVs for multi-resolution semantic segmentation. Rob. Auton. Syst. 2023, 159, 104288.

- Krestenitis, M.; Raptis, E.K.; Kapoutsis, A.C.; Ioannidis, K.; Kosmatopoulos, E.B.; Vrochidis, S. Overcome the Fear Of Missing Out: Active sensing UAV scanning for precision agriculture. Rob. Auton. Syst. 2024, 172, 104581.

- Willis, A.R.; Brink, K.; Dipple, K. ROS georegistration: Aerial Multi-spectral Image Simulator for the Robot Operating System. arXiv 2022, arXiv:2201.07863.

- Singh, A.D.; Vanegas Alvarez, F. Simulating GPS-denied autonomous UAV navigation for detection of surface water bodies. In Proceedings of the 2020 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 1–4 September 2020; pp. 1792–1800.

- Phadke, A.; Medrano, F.A.; Sekharan, C.N.; Chu, T. Designing UAV swarm experiments: A simulator selection and experiment design process. Sensors 2023, 23, 7359.

- Kelcey, J.; Lucieer, A. Sensor correction and radiometric calibration of a 6-band multispectral imaging sensor for UAV remote sensing. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, 393–398.

- Quinn, A. Monitoring Moss in a Sea of Ice. SAEF. Available online: https://arcsaef.com/story/monitoring-moss-in-a-sea-of-ice/ (accessed on 20 October 2024).

| Topic | Number of Sources Analysed | Year Span | Selection of Key Search Words |
|---|---|---|---|
| UAV vegetation mapping in Antarctica | 17 | 2011–2024 | Antarctica, Antarctic |
| UAV vegetation mapping in the Arctic | 11 | 2016–2023 | Arctic |
| Real-time semantic segmentation for vegetation mapping | 9 | 2018–2023 | Real-time, semantic segmentation |
| Adaptive path planning for vegetation mapping | 4 | 2020–2024 | Adaptive, online, autonomous, path planning |
| All | 101 | All | vegetation, mapping, monitoring, survey, health, unmanned aerial vehicle, UAV, UAS, RPAS, drone, remote sensing |
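The table lists key search words per topic but not how they were combined. As a hedged illustration, the sketch below assumes each topic's words form one OR group, joined by AND to two groups drawn from the "All" row. The split of that row into subject and sensing groups, the quoting rule and the dictionary keys are all hypothetical, and bibliographic databases such as Scopus or Web of Science each have their own query syntax.

```python
# Illustrative only: the AND/OR structure and the split of the "All" row into
# two groups are assumptions, not the documented search protocol.

TOPIC_TERMS = {
    "antarctic": ["Antarctica", "Antarctic"],
    "arctic": ["Arctic"],
    "realtime_segmentation": ["Real-time", "semantic segmentation"],
    "adaptive_path_planning": ["Adaptive", "online", "autonomous", "path planning"],
}

# Terms from the "All" row, split (hypothetically) into subject and sensing groups.
SUBJECT_TERMS = ["vegetation", "mapping", "monitoring", "survey", "health"]
SENSING_TERMS = ["unmanned aerial vehicle", "UAV", "UAS", "RPAS", "drone", "remote sensing"]


def or_group(terms):
    """Join terms with OR, quoting multi-word phrases so they match as phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(topic):
    """Combine one topic group with the shared subject and sensing groups using AND."""
    groups = [TOPIC_TERMS[topic], SUBJECT_TERMS, SENSING_TERMS]
    return " AND ".join(or_group(g) for g in groups)


if __name__ == "__main__":
    for topic in TOPIC_TERMS:
        print(f"{topic}: {build_query(topic)}")
```

Keeping the shared groups separate from the topic groups means each topic query differs only in its first clause, which makes the per-topic source counts easier to compare.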

| Year Range | Target | Mapping Objectives | UAV Platform | Sensors | Methodologies |
|---|---|---|---|---|---|
| 2011–2015 | Moss [28,29,30,31], cyanobacterial mats [32] | DEM creation [28], mapping [29,30,31,32], health assessment [29,30,31] | Multirotor [28,29,30,31], Fixed-wing [32] | RGB [28,30], MSI [29,31,32], TIR [31] | In situ [28,30], VI thresholding [29,31], expert annotation [32] |
| 2017–2020 | Moss [13,33,34,35,36,37], lichen [13,36], algae [13], tundra groups [35] | Mapping [13,33,34,35,36], health assessment [33,34], satellite & UAV synergy [13,36], spectral studies [37] | Multirotor [33,34,36,37], Fixed-wing [13,35] | RGB [35,36], MSI [13,34], HSI [33,37], TIR [34] | In situ [35], VI thresholding [13], RF [34,37], SVR & built-in [36], SVM & KNN [36] |
| 2022–2024 | Moss [6,15,38,39,40,41,42], lichen [6,15,38,40,41], cyanobacteria [6], algae [38], tundra groups [41] | Mapping [6,15,38,39,40,41], health assessment [15,40], water content mapping [42] | Multirotor [15,39,40,42], Fixed-wing [6,38,41] | RGB [6], MSI [6,38,39], TIR [15,40], HSI [40,42] | GEOBIA & RF [38], VI thresholding [6,39,41], XGBoost [15,40], U-Net [15], RF [42] |
| 2016–2023 (Arctic) | Tundra groups [7,43,44,45,46,47,48,49,50,51], moss [7,43,45,46,47,49,50,51], lichen [43,45,50,51], vegetation [52] | Mapping [7,43,44,45,46,47,48,49,50,51,52], satellite & UAV synergy [47,52], mapping height & biomass [50], damage assessment [49] | Multirotor [7,43,45,46,47,50,51,52], Fixed-wing [44,48,49] | RGB [43,44,51,52], MSI [47,48,49,50], HSI [45,46], TIR [45,46] | Built-in [43,45,46,48], Bayesian ANN [44], LR [52], RF [47,48,49,50,51], SVM [7] |

| Drone Type | Altitude Range (m) | Altitude Std. Dev. (m) | Speed Range (m/s) | Speed Std. Dev. (m/s) |
|---|---|---|---|---|
| Multirotor | 89.0 (12) | 24.5 (12) | 1.1 (3) | 0.6 (3) |
| Fixed-wing | 80.0 (3) | 40.7 (3) | 11.5 (2) | 8.1 (2) |

| Sensor | Resolution (MP) | Number of Studies |
|---|---|---|
| 1 | 20 (3:2, 4:3, 16:9) | 4 [36,50,51,52] |
| 2 | 18 (3:2) | 4 [29,30,31,34] |
| 3 | 18 (3:2) | 2 [35,41] |

| Sensor | Bands | MSI Bandwidth (nm) | GSD (cm/pixel at 120 m AGL) | Number of Studies |
|---|---|---|---|---|
| 1 | B, G, R, RE, NIR | 32, 27, 14, 12, 57 | 7.7 | 5 [6,7,39,47,50] |
| 2 | 6 user-selectable bands: 450–1000 | 10 | 6.6 | 3 [29,31,34] |
| 3 | B, G, R, RE, NIR, Thermal | 32, 27, 14, 12, 57 | 5.2 (MSI) | 2 [15,40] |

| Sensor | Spectral Range (nm) | Spectral Resolution (nm) | Number of Studies |
|---|---|---|---|
| 1 | 400 to 1000 | 5.8 | 2 [33,37] |
| 2 | 350 to 1000 | 1.5 | 2 [45,46] |
| 3 | 900 to 1700 | 8 | 1 [42] |

| Sensor | Temperature Range (°C) | Sensitivity (mK) | Spectral Range (μm) | Operation Range (25 °C) | GSD (cm/pixel at 120 m AGL) | Number of Studies |
|---|---|---|---|---|---|---|
| 1 | −40 to 80 | 85 | 7.5 to 13.5 | −50 to 85 | 30.8 | 3 [29,31,34] |
| 2 | −40 to 140 | 20 | 7 to 14 | −40 to 80 | 6.58 | 2 [45,46] |
| 3 | N/A | <50 | 5 to 17 | N/A | 81 | 2 [15,40] |
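
The GSD values in the sensor tables are quoted at 120 m AGL, whereas the mean flight altitudes reported for multirotor and fixed-wing studies are lower (89 m and 80 m). The Python sketch below relates these numbers, assuming a nadir-pointing pinhole camera over flat terrain, so that GSD = pixel pitch × altitude / focal length and therefore scales linearly with altitude. The function names, and the pixel pitch and focal length in the usage lines, are hypothetical illustration values rather than specifications of any sensor reviewed here.

```python
def gsd_at_altitude(gsd_ref_cm: float, alt_ref_m: float, alt_m: float) -> float:
    """Rescale a ground sampling distance (cm/pixel) from a reference altitude.

    Assumes a nadir-pointing pinhole camera over flat terrain, where GSD is
    proportional to altitude above ground level.
    """
    if alt_ref_m <= 0 or alt_m <= 0:
        raise ValueError("altitudes must be positive")
    return gsd_ref_cm * alt_m / alt_ref_m


def gsd_from_optics(pixel_pitch_um: float, focal_length_mm: float, alt_m: float) -> float:
    """GSD in cm/pixel from pixel pitch (micrometres), focal length (mm) and altitude (m)."""
    pixel_pitch_m = pixel_pitch_um * 1e-6
    focal_length_m = focal_length_mm * 1e-3
    return pixel_pitch_m * alt_m / focal_length_m * 100.0  # metres -> centimetres


if __name__ == "__main__":
    # MSI sensor 1 (7.7 cm/pixel at 120 m) flown at the multirotor mean altitude of 89 m
    print(f"{gsd_at_altitude(7.7, 120.0, 89.0):.2f} cm/pixel")  # -> 5.71 cm/pixel
    # Hypothetical optics: 3.75 um pixels behind a 5.5 mm lens at 120 m AGL
    print(f"{gsd_from_optics(3.75, 5.5, 120.0):.2f} cm/pixel")  # -> 8.18 cm/pixel
```

Under this flat-terrain assumption, halving the altitude halves the GSD; terrain relief and off-nadir viewing will change the effective value in the field.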

| Classes | Precision (XGBoost) | Precision (U-Net) | Recall (XGBoost) | Recall (U-Net) | F1-Score (XGBoost) | F1-Score (U-Net) |
|---|---|---|---|---|---|---|
| Healthy Moss | 0.86 | 0.94 | 0.71 | 0.71 | 0.78 | 0.81 |
| Stressed Moss | 0.86 | 0.86 | 0.83 | 0.86 | 0.85 | 0.86 |
| Moribund Moss | 0.88 | 0.87 | 0.92 | 0.94 | 0.90 | 0.90 |
| Lichen | 0.94 | 0.95 | 0.91 | 0.85 | 0.93 | 0.90 |
| Non-Vegetation | 1.00 | 0.94 | 1.00 | 0.97 | 1.00 | 0.96 |
| Average | 0.91 | 0.91 | 0.87 | 0.87 | 0.89 | 0.89 |
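
F1 is the harmonic mean of precision and recall, F1 = 2PR/(P + R), and the Average row is consistent with an unweighted (macro) mean over the five classes. The sketch below recomputes the XGBoost column from the tabulated precision and recall. Because those inputs are already rounded to two decimals, a recomputed per-class F1 can differ from the reported value by about 0.01 (Stressed Moss gives 0.84 against the reported 0.85), while the macro averages still agree.

```python
from statistics import mean


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Per-class (precision, recall) for the XGBoost model, copied from the table above
xgboost = {
    "Healthy Moss": (0.86, 0.71),
    "Stressed Moss": (0.86, 0.83),
    "Moribund Moss": (0.88, 0.92),
    "Lichen": (0.94, 0.91),
    "Non-Vegetation": (1.00, 1.00),
}

per_class_f1 = {cls: f1_score(p, r) for cls, (p, r) in xgboost.items()}
# Macro averages: unweighted mean over classes
macro_precision = mean(p for p, _ in xgboost.values())
macro_recall = mean(r for _, r in xgboost.values())
macro_f1 = mean(per_class_f1.values())

if __name__ == "__main__":
    for cls, f1 in per_class_f1.items():
        print(f"{cls}: F1 = {f1:.2f}")
    print(f"Macro P = {macro_precision:.2f}, R = {macro_recall:.2f}, F1 = {macro_f1:.2f}")
```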

| Seminal Work | Network Class | Number of Parameters | Strengths | Weaknesses |
|---|---|---|---|---|
| FCN (2015) [68] | FCN | 57 M (with AlexNet) [68] | – First CNN for semantic segmentation [69] | – Over-simplified decoder leads to coarse-resolution segmentation, limiting fidelity and accuracy [69] |
| U-Net (2015) [70] | FCN with Encoder-Decoder | 7.76 M [71] | – Contracting path: extracts hierarchical features [69] – Expanding path: learns more contextual information [69] – Relatively small model => reduces overfitting => suitable for smaller datasets | – Extracts local semantic features but does not model global information from the whole image [69] – Computationally expensive |
| PSPNet (2017) [72] | Pyramid Network (can use transformer- or CNN-based backbones [73]) | 42.57 M [73] | – Pyramid Pooling Module aggregates context information at multiple scales => good at capturing local and global context | – Computationally expensive – Difficulty capturing local details [73] |
| DeepLab (2017) [74] | FCN with Dilated Convolutions | 20.5 M (DeepLab-LargeFOV) [74] | – Atrous convolutions => full-resolution feature maps [74] – Atrous spatial pyramid pooling => effective segmentation of objects at multiple scales [74] – Localisation accuracy increased with a fully-connected Conditional Random Field [74] | – Struggles with small objects and often misjudges similar objects (DeepLabv3+) [75] |
| SETR [76] | Transformer-based | 97.64 M [76] | – Global context modelling superior to other methods due to sequence-to-sequence modelling ability [69] | – Transformer-based encoder has a much higher computational complexity than CNN-based encoders [69] – ViT outputs single-scale low-resolution features instead of multi-scale ones [77] |

| Reference | Seg. Target | Seg. Model | UAV Type | Accuracy (%) | Inference Speed (fps) |
|---|---|---|---|---|---|
| [82] | Rice-field weeds | FCN-AlexNet | Multirotor | 62.80 (mIoU) | 4.50 |
| [83] | Parthenium weed plant in pulse crop fields | LinkNet-34 | Multirotor | 59.80 (mIoU) | 4.61 * |
| [84] | Rice-field weeds | MobileNetV2-Unet | Multirotor | 78.77 (mIoU) | 45.05 |
| [84] | Rice-field weeds | FFB-BiSeNetV2 | Multirotor | 80.28 (mIoU) | 40.16 |
| [85] | Hogweed | U-Net | Multirotor | 95.80 (ROC AUC) | 0.46 |
| [86] | Burned area | BASNet | N/A | 77.20 () | 5.00 * |
| [81] | Crop rows | Modified ENet | Ground-based | 90.90 (mIoU) | 17.00 * |
| [87] | Vegetation and road | LW-AerialSegNet | Multirotor | 82.00 (mIoU) | 75.20 * |
| [88] | Weed mapping | SegNet | Multirotor | 80.00 (F1-score) | 1.80 |
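
Whether a reported inference speed counts as "real-time" for a survey depends on how often new images arrive, which in turn depends on altitude, speed and image overlap. The rough Python check below assumes frames are captured just often enough to reach a chosen forward overlap and that one full frame is segmented per capture: the time between frames is footprint × (1 − overlap) / speed, and a model keeps up if 1/fps does not exceed that interval. The 960-pixel along-track image height and 80% forward overlap are hypothetical; GSD is rescaled from the 7.7 cm/pixel at 120 m of MSI sensor 1, and the altitudes and speeds are the mean values in the flight-parameter table. The fps figures above were measured on different hardware, so the result is only indicative.

```python
def frame_interval_s(gsd_cm: float, image_height_px: int,
                     forward_overlap: float, speed_mps: float) -> float:
    """Seconds between captures needed to achieve the given forward overlap."""
    if not 0.0 <= forward_overlap < 1.0:
        raise ValueError("forward_overlap must be in [0, 1)")
    if speed_mps <= 0:
        raise ValueError("speed must be positive")
    footprint_m = gsd_cm / 100.0 * image_height_px     # along-track ground footprint
    spacing_m = footprint_m * (1.0 - forward_overlap)  # distance flown between captures
    return spacing_m / speed_mps


def keeps_up(model_fps: float, interval_s: float) -> bool:
    """True if segmenting one frame finishes before the next frame is captured."""
    return 1.0 / model_fps <= interval_s


IMAGE_HEIGHT_PX = 960  # hypothetical along-track image height
OVERLAP = 0.8          # hypothetical forward overlap

# Mean flight parameters per platform: (altitude in m, speed in m/s)
platforms = {"Multirotor": (89.0, 1.1), "Fixed-wing": (80.0, 11.5)}
# Inference speeds (fps) from the real-time segmentation table
models = {"U-Net [85]": 0.46, "SegNet [88]": 1.80, "MobileNetV2-Unet [84]": 45.05}

if __name__ == "__main__":
    for name, (alt_m, speed) in platforms.items():
        gsd = 7.7 * alt_m / 120.0  # rescale MSI sensor 1 GSD from 120 m (flat terrain)
        interval = frame_interval_s(gsd, IMAGE_HEIGHT_PX, OVERLAP, speed)
        for model, fps in models.items():
            status = "keeps up" if keeps_up(fps, interval) else "falls behind"
            print(f"{name}: {interval:.2f} s between frames, {model} {status}")
```

With these assumptions the multirotor allows roughly 10 s per frame, so even the slowest model keeps up, whereas the fixed-wing allows under 1 s and the 0.46 fps U-Net falls behind.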

| VI | Times Cited | Formula | Applications |
|---|---|---|---|
| NDVI | 9 | (NIR − R)/(NIR + R) | A measure of greenness/photosynthetic activity [90] |
| GNDVI | 4 | (NIR − G)/(NIR + G) | More sensitive than NDVI in detecting chlorophyll content; used to detect early signs of stress in plants |
| MSAVI | 4 | [2NIR + 1 − √((2NIR + 1)² − 8(NIR − R))]/2 | Minimises the influence of soil brightness in areas of sparse vegetation |
| EVI | 3 | 2.5(NIR − R)/(NIR + 6R − 7.5B + 1) | Used in areas of high biomass; also reduces the influence of atmospheric conditions (via the Blue band) |
| ExG | 3 | 2g − r − b (r, g, b: band values normalised by R + G + B) | Distinguishes green vegetation from non-vegetation such as soil and rocks, especially in high-resolution images |
| MTVI2 | 3 | 1.5[1.2(NIR − G) − 2.5(R − G)]/√((2NIR + 1)² − (6NIR − 5√R) − 0.5) | Useful in environments with varying soil and vegetation conditions, as it aims to account for soil interference |
| NDRE | 3 | (NIR − RE)/(NIR + RE) | Sensitive to chlorophyll content, so used for assessing plant health |
| SRI | 3 | NIR/R | Used to account for soil background reflectance |
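
The indices above can be sketched in Python for scalar band reflectances. These are the commonly published definitions (ExG uses band values normalised by R + G + B); individual reviewed studies may use variants, and the sample reflectances in the usage lines are hypothetical.

```python
import math


def _nd(a: float, b: float) -> float:
    """Generic normalised difference (a - b) / (a + b), 0 when the sum is 0."""
    return (a - b) / (a + b) if (a + b) != 0 else 0.0


def ndvi(nir: float, red: float) -> float:
    return _nd(nir, red)


def gndvi(nir: float, green: float) -> float:
    return _nd(nir, green)


def ndre(nir: float, red_edge: float) -> float:
    return _nd(nir, red_edge)


def sri(nir: float, red: float) -> float:
    return nir / red  # simple ratio


def msavi(nir: float, red: float) -> float:
    return (2 * nir + 1 - math.sqrt((2 * nir + 1) ** 2 - 8 * (nir - red))) / 2


def evi(nir: float, red: float, blue: float) -> float:
    return 2.5 * (nir - red) / (nir + 6 * red - 7.5 * blue + 1)


def exg(red: float, green: float, blue: float) -> float:
    # Excess green on chromatic coordinates (each band divided by R + G + B)
    total = red + green + blue
    r, g, b = red / total, green / total, blue / total
    return 2 * g - r - b


def mtvi2(nir: float, red: float, green: float) -> float:
    num = 1.5 * (1.2 * (nir - green) - 2.5 * (red - green))
    den = math.sqrt((2 * nir + 1) ** 2 - (6 * nir - 5 * math.sqrt(red)) - 0.5)
    return num / den


if __name__ == "__main__":
    # Hypothetical reflectances for a green vegetation pixel
    nir, red, green, blue, red_edge = 0.45, 0.05, 0.10, 0.04, 0.25
    print(f"NDVI  {ndvi(nir, red):.3f}")
    print(f"GNDVI {gndvi(nir, green):.3f}")
    print(f"NDRE  {ndre(nir, red_edge):.3f}")
    print(f"SRI   {sri(nir, red):.3f}")
    print(f"MSAVI {msavi(nir, red):.3f}")
    print(f"EVI   {evi(nir, red, blue):.3f}")
    print(f"ExG   {exg(red, green, blue):.3f}")
    print(f"MTVI2 {mtvi2(nir, red, green):.3f}")
```

The same expressions apply element-wise to whole rasters when written with array operations instead of `math.sqrt`.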

| Top Three VIs for Multispectral Data [15] | Feature Importance | Top Three VIs for Hyperspectral Data [40] | Feature Importance |
|---|---|---|---|
| MSAVI | 2 | SMMI (new) | 8 |
| GNDVI | 7 | MSAVI | 13 |
| LCI | 9 | NDLI (new) | 14 |

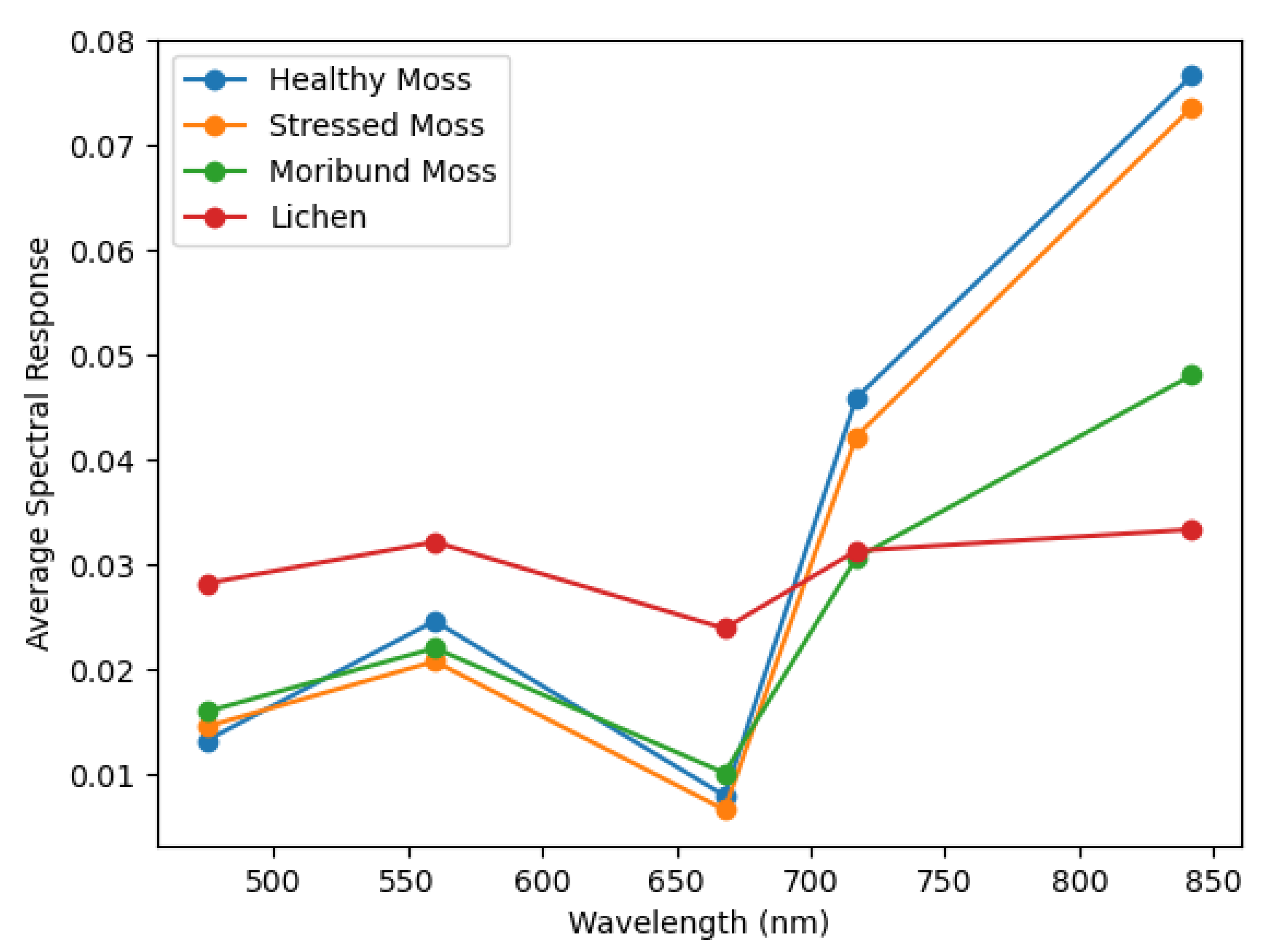

| Class | NDVI | Modified NDVI |
|---|---|---|
| Moss (healthy) | 0.811 | 0.448 |
| Moss (stressed) | 0.834 | 0.443 |
| Moss (moribund) | 0.651 | 0.352 |
| Lichen | 0.165 | 0.129 |
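
VI thresholding, used by several of the reviewed studies, labels a pixel as vegetation when an index exceeds a cut-off. The minimal Python sketch below uses the class-mean NDVI values above, with a hypothetical threshold placed midway between the lichen and moribund-moss means (0.408); in practice thresholds are tuned per scene. The same table also shows the limit of the approach: stressed moss (0.834) has a slightly higher mean NDVI than healthy moss (0.811), so no single NDVI threshold separates those two health classes.

```python
from typing import List

# Mean NDVI per class, copied from the table above
CLASS_MEAN_NDVI = {
    "Moss (healthy)": 0.811,
    "Moss (stressed)": 0.834,
    "Moss (moribund)": 0.651,
    "Lichen": 0.165,
}


def midpoint_threshold(low_mean: float, high_mean: float) -> float:
    """Hypothetical cut-off halfway between two class means."""
    return (low_mean + high_mean) / 2.0


def threshold_mask(ndvi_raster: List[List[float]], threshold: float) -> List[List[bool]]:
    """Binary mask: True where NDVI is at or above the threshold (moss-like pixels)."""
    return [[value >= threshold for value in row] for row in ndvi_raster]


threshold = midpoint_threshold(CLASS_MEAN_NDVI["Lichen"], CLASS_MEAN_NDVI["Moss (moribund)"])

if __name__ == "__main__":
    # Tiny hypothetical NDVI raster (2 x 3 pixels)
    raster = [[0.82, 0.17, 0.66],
              [0.30, 0.45, 0.05]]
    print(f"threshold = {threshold:.3f}")
    for row in threshold_mask(raster, threshold):
        print(row)
```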

| Reference | Application | Segmentation Model | Data Type | Path Planning |
|---|---|---|---|---|
| [94] | RIT-18 (Gazebo) & mapping a sugar beet field (real-world) | Modified SegNet | MSI & RGB | Evolutionary path planner that optimises trajectories by trading off objectives (e.g., segmentation of vegetation) against cost (e.g., energy, time, or distance) |
| [79] | Mapping of desiccation cracks (real-world) | Modified ResNet18 | RGB, monochrome | POMDP with augmented belief tree solver |
| [95] | WeedMap & RIT-18 datasets (sim) | ERFNet | RGB & MSI | Gaussian process path planner that adjusts UAV altitude to capture finer details |
| [96] | WeedMap (AirSim) | U-Net with EfficientNetB1 backbone | RGB | Adjusts UAV speed based on the quantity and confidence of target-class detections; built upon the Spanning-Tree-Coverage algorithm |
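
Reference [96] adjusts UAV speed according to the quantity and confidence of target-class detections. Its exact rule is not reproduced in this table, so the following is a hypothetical Python sketch of the idea only, not the method of [96]: per-pixel target-class probabilities from the latest segmented frame are reduced to a confidence-weighted coverage score, and speed is interpolated linearly from a maximum (nothing detected) down to a minimum (coverage at or above a saturation level). The speed limits, confidence threshold and saturation value are illustrative assumptions.

```python
from typing import Sequence


def adaptive_speed(confidences: Sequence[float], v_min: float = 1.0, v_max: float = 5.0,
                   conf_threshold: float = 0.5, saturation: float = 0.2) -> float:
    """Hypothetical rule: fly slower when more of the frame is confidently the target class.

    confidences: per-pixel probabilities of the target class in the latest frame.
    """
    if not confidences:
        return v_max
    # Confidence-weighted fraction of the frame covered by confident detections
    score = sum(c for c in confidences if c >= conf_threshold) / len(confidences)
    ratio = min(score / saturation, 1.0)  # 0 = nothing found, 1 = saturated
    return v_max - (v_max - v_min) * ratio


if __name__ == "__main__":
    empty_frame = [0.1] * 100
    sparse_frame = [0.9] * 10 + [0.1] * 90
    dense_frame = [0.95] * 50 + [0.2] * 50
    for name, frame in [("empty", empty_frame), ("sparse", sparse_frame), ("dense", dense_frame)]:
        print(f"{name}: {adaptive_speed(frame):.2f} m/s")
```

A linear ramp keeps the rule monotonic and easy to tune; a real planner would also need to respect the overlap and inference-time constraints of the survey.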

| Name | OS Support [99] | Open-Source | MSI Support | Weather Effect [99] |
|---|---|---|---|---|
| Gazebo | W, M, L | Yes | Requires modelling | Supports weather effects through community plugins |
| AirSim | W, M, L | Yes | Requires modelling | Large number of built-in functions for weather variations |
| MATLAB Simulink (UAV Toolbox) | W, M, L | No | Can create custom sensors | Sun angle, time of day, fog, and rain by default |
| CoppeliaSim | W, M, L | Mostly | Provides an API for custom plugins | No built-in support for weather effects |

| Study | Year | Limitations/Challenges | Recommendations/Future Studies |
|---|---|---|---|
| [28] | 2011 | N/A | (1) Use detailed terrain features from a DEM to explore the relationship between moss die-back caused by water stress and terrain characteristics |
| [31] | 2014 | (1) Mini-MCA camera limitations: rolling shutter and sensor noise (2) Erroneous pixels due to high sensor noise and low light in shadows | N/A |
| [32] | 2015 | N/A | (1) Use MSI or HSI to yield estimates of vegetation activity and stress |
| [33] | 2017 | (1) Autopilot instruments did not operate reliably, so manual operation was required (2) SVR algorithms were trained with 2013 data but validated with measurements from 1999 | (1) Further laboratory and/or field studies to train SVR algorithms to monitor other ground-hugging vegetation communities such as Arctic tundra (2) Couple the approach with other sensing techniques, such as thermal mapping and/or spectral sensing of photosynthesis-related chlorophyll fluorescence emissions, to monitor vegetation processes such as carbon assimilation and gross primary production (3) Further investigation of the unique spectral characteristics of different species |
| [35] | 2018 | (1) Lichen-covered surfaces could not be mapped from the RGB UAV imagery | N/A |
| [34] | 2018 | (1) MSI data suffered from sensor noise (2) Limited number of GTs | (1) NIR bands are essential for the health assessment of Antarctic moss beds (2) Need to demonstrate the transferability of the RF model to other Antarctic moss sites |
| [36] | 2019 | N/A | (1) Collect more GT in different terrestrial regions to allow extrapolation of model predictions to other sites (2) Use a multispectral camera for more accurate vegetation detection and RTK navigation to accelerate fieldwork (3) Apply the segmentation model at Sentinel-2 and Landsat resolutions, whose data span larger terrestrial regions and timescales |
| [37] | 2019 | N/A | (1) Validate the recommended hyperspectral sensor (Photonfocus) in the field |
| [13] | 2020 | (1) Camera had low spectral resolution due to overlap of relatively wide spectral bands | (1) Use a true multispectral sensor with illumination compensation, rather than modified Bayer filters, for potentially better vegetation discrimination (2) The number of undetected vegetation sites increases as the spatial resolution of the sensor decreases (3) Adjust class ranges for each scene and emphasise the importance of adjusted statistical parameters for selection |
| [39] | 2022 | N/A | (1) Future studies should target the spectral signatures of particular species |
| [38] | 2022 | (1) S110 camera had radiometric and spectral limitations (high overlap between wide spectral bands) (2) Difficult remote sensing application | N/A |
| [42] | 2023 | (1) Spectra are often noisy around 1300–1500 nm due to absorption by atmospheric water vapour | (1) Collect new datasets to validate the model’s ability to generalise (2) Use a combination of sensors to compare water content results with other moss health proxies |
| [40] | 2023 | (1) Hyperspectral data: limited spatial coverage and high computational demand | (1) Use MSI to scale the methodology to larger areas and study the trade-off with HSI (2) Assess the applicability of the proposed workflow for broader ASPA mapping (3) Expand the labelled dataset to include more varied and less distinct vegetation classes |
| [41] | 2023 | (1) Unusable flight conditions (too much wind and snow) for a month (2) NDVI not useful for mapping brightly coloured lichens (likely due to high reflectance in visible and NIR bands) (3) Ordinary GPS caused positional errors in GTs | (1) Use cameras with more spectral information |
| [15] | 2024 | (1) Limited endurance of UAV flights (limited coverage, may require multiple missions) (2) Payload limits the types of sensors and equipment that can be deployed (3) Limited UAV operating windows due to extreme weather | (1) Explore and refine ML techniques tailored to polar environments (2) Capture more high-resolution RGB data to enhance labelling and CNN classifier performance (3) Capture higher-resolution multispectral data to better distinguish plant species (4) Conduct a detailed analysis of how VIs vary between classes (5) Evaluate other ML algorithms |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lockhart, K.; Sandino, J.; Amarasingam, N.; Hann, R.; Bollard, B.; Gonzalez, F. Unmanned Aerial Vehicles for Real-Time Vegetation Monitoring in Antarctica: A Review. Remote Sens. 2025, 17, 304. https://doi.org/10.3390/rs17020304
