Abstract

Accurate information on crop planting and spatial distribution is critical for understanding and tracking long-term land use changes. Deep learning (DL) methods for extracting crop information have been applied to large-scale datasets and plain areas. However, crop classification in complex scenes still faces challenges such as poor temporal continuity of imagery, difficult data acquisition, rugged terrain, fragmented plots, and diverse planting conditions. In this study, we propose the Complex Scene Crop Classification U-Net (CSCCU), which aims to improve the mapping accuracy of staple crops in complex scenes by combining multispectral bands with spectral features. CSCCU has a dual-branch structure: the main branch concentrates on image feature extraction, while the auxiliary branch focuses on spectral features. CSCCU uses a hierarchical feature-level fusion mechanism in which a shallow feature fusion (SFF) module and a deep feature fusion (DFF) module fuse the two branches at different depths, which optimizes feature learning and improves model performance. We conducted experiments using GaoFen-2 (GF-2) images of Xiuwen County, Guizhou Province, China, and established a dataset of 1000 image patches of 256 × 256 pixels covering seven categories. With our method, the classification accuracies for corn and rice are 89.72% and 88.61%, respectively, and the mean intersection over union (mIoU) is 85.61%, higher than that of the compared models (U-Net, SegNet, and DeepLabv3+). Our method provides a novel solution for classifying staple crops in complex scenes from high-resolution images and can help obtain accurate staple crop information over larger regions in the future.

1. Introduction

Cultivated land is a crucial resource for human survival and development [1]. The rapid and accurate acquisition of cultivated land area is fundamental for real-time dynamic monitoring of crop areas, yield estimation, and the sustainable development of land resources [2,3,4]. Changes in the spatial distribution of agricultural land and crops directly impact food security [5]. Wheat, corn, and rice are the three most widely cultivated staple crops. Different countries and regions, based on local conditions, have developed unique agricultural products that leverage their strengths while mitigating their weaknesses, allowing them to build a strong reputation in the international market. Accurate information on cultivated land and its spatial distribution is essential for understanding and monitoring changes in land use over time.

Remote sensing technology emerged in the 1960s as an innovative earth science discipline featuring advanced and comprehensive detection capabilities. Over the years, the spatial, temporal, and spectral resolutions of remote sensing imagery have steadily improved [6,7]. A space–ground–air integrated remote sensing satellite observation system has been developed, enabling the processing, analysis, and application of diverse information. Remote sensing offers several advantages, including the ability to acquire vast amounts of data across multiple platforms and resolutions, along with extensive image coverage. It allows for timely and accurate acquisition of information on agricultural resources and disasters. Recently, a variety of remote sensing satellite data have been widely used in crop classification tasks [8,9,10,11,12].

Some researchers have used low-resolution remote sensing data for regional or global mapping of cultivated land and crops, with promising results [13,14,15,16]. Moreover, many comprehensive land use products derived from remote sensing include cultivated land as one or more key categories [17,18,19,20,21]. Classifying coarse-resolution imagery is challenging because it relies solely on spectral information, as objects are often much smaller than the pixel size. Consequently, most historical efforts in global cultivated land and crop mapping have used per-pixel classification methods [22,23,24,25]. In contrast, very high spatial resolution (VHR) multispectral images allow more detailed spatial information to be incorporated.

Deep learning, as an emerging technology, has found extensive applications in various classification tasks, such as crop disease detection [26,27], plant classification [28], and crop identification [29]. Numerous studies have been conducted in the field of staple crop classification. For example, some researchers have utilized 3D CNNs to classify corn, rice, soybean, and wheat [30], while some researchers have employed UNet++ to identify winter wheat and garlic [31].

Although there have been many studies on cultivated land information extraction and crop classification, the current research mainly focuses on large-scale regions or plain areas, and there are relatively few studies on complex scenes. The challenges of crop classification using remote sensing images in complex scenes can be broadly divided into two areas: domain-specific challenges and data-related challenges.

Domain-specific challenges mainly include differences and noise introduced by factors such as topography, weather conditions, crop health, cloud cover, and artificial buildings, so the appearance of land cover types can be highly variable. In addition, the acquisition time of satellite images relative to the crop growth cycle strongly affects how crops appear: images of the same crop taken at different growth stages may look very different, while images of different crops may look very similar. This leads to high interclass similarity and low intraclass similarity, which complicates classification.

Data-related challenges fall into two main areas. The first is the lack of large labeled datasets. Unlike natural images, which benefit from large labeled datasets such as ImageNet [32], the remote sensing field has limited labeled data even though petabytes (PB) of data are regularly collected from various sensors, and obtaining labels in remote sensing is itself difficult. The second is the high variability of the data sources, for example temporal variability: depending on when the images are acquired, weather conditions such as cloud cover may introduce noise.

The dual-branch structure means that there are two branches running in parallel in the network framework. This structure can realize the combination of various advantageous strategies through the separate operation of the two branches, such as the combination of different backbones, the combination of different scales, the combination of different modal data, etc. Currently, some scholars have applied the dual-branch structure to the field of semantic segmentation [33,34], but the idea of a dual-branch network is still rare in the fine classification of crops in complex scenes with GaoFen-2 (GF-2) images.

Motivated by the discussion above, we propose a multi-level fusion method for staple crop classification in complex scenes, referred to as the Complex Scene Crop Classification U-Net (CSCCU), to address these challenges. Specifically, we develop a dual-branch model in which a shallow feature fusion (SFF) module fuses the shallow-level features extracted by each convolution layer; the SFF module comprises two squeeze-and-excitation (SE) modules [35]. A deep feature fusion (DFF) module based on the attention module of [36] then fuses the deep-level contextual features extracted at the bottom of the encoder. This multi-level hierarchical fusion helps extract objects of different sizes and shapes.

The contributions of this study are threefold:

- (1) We propose CSCCU, a novel multi-level hierarchical fusion method for complex scenes. It uses a dual-branch structure and strengthens the representative features of each branch through feature fusion modules. Fusing the branches at multiple levels promotes the learning of semantic features at all levels, thus improving the semantic segmentation performance on remote sensing images.

- (2) We demonstrate that introducing spectral features into staple crop classification improves accuracy, providing valuable support for crop cultivation and research in complex, mountainous regions.

- (3) We produce a fine classification map of staple crops in Xiuwen County using the proposed method.

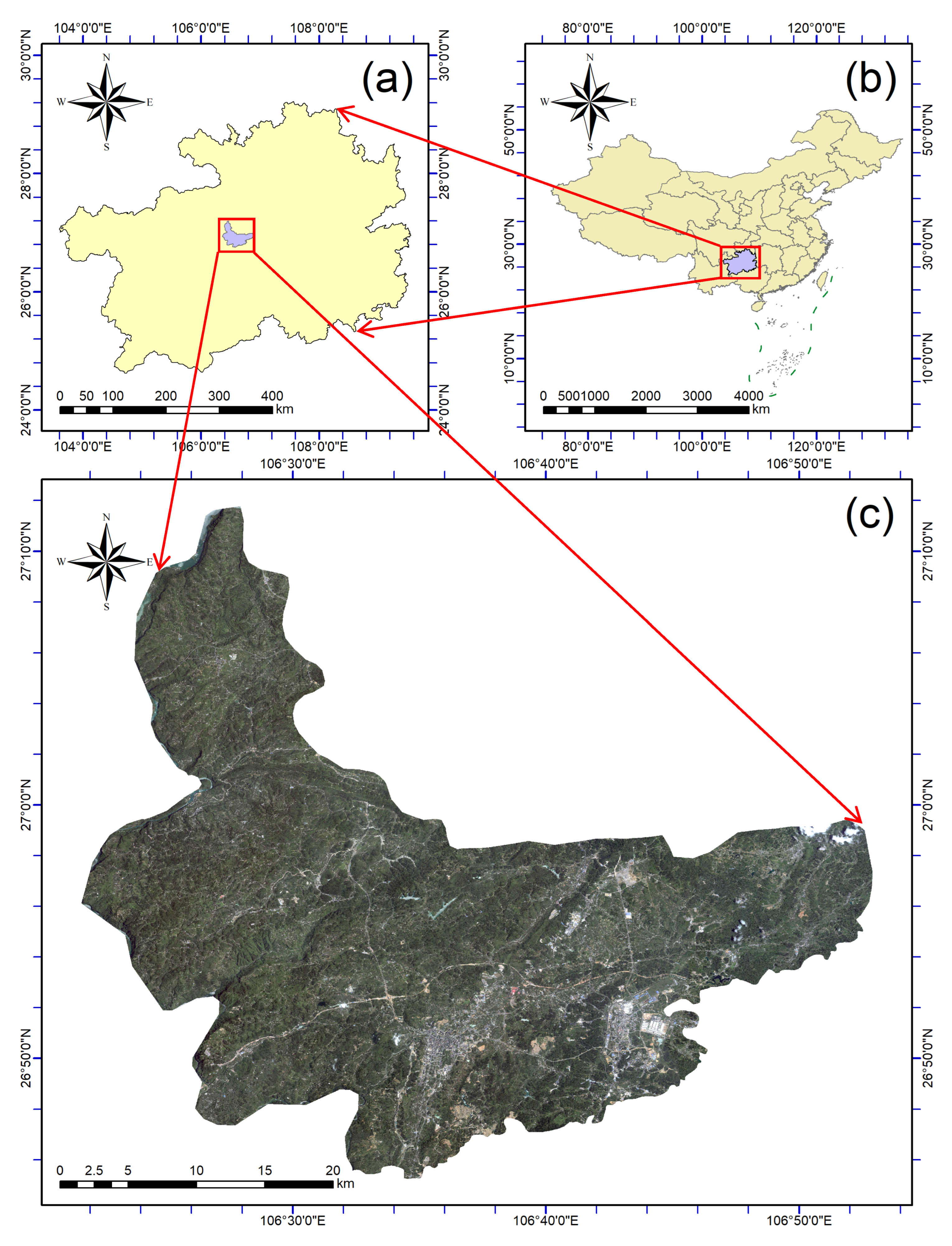

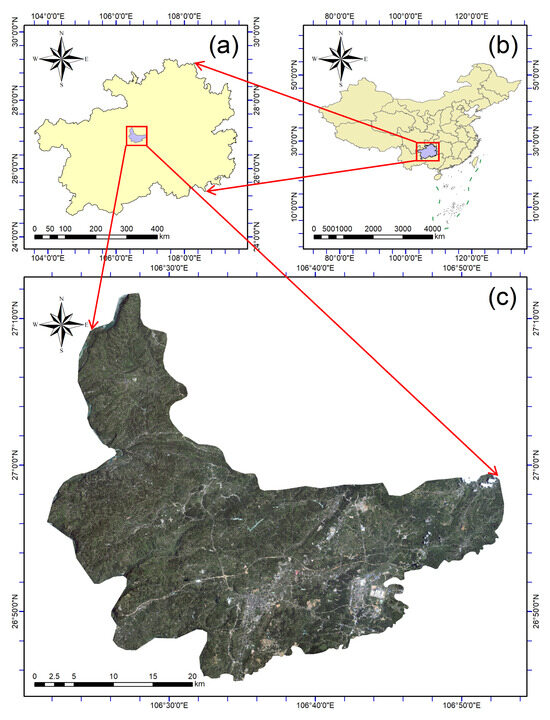

2. Study Area

Mountains account for about 65% of China’s land area. Guizhou Province, in southwestern China, has typical mountainous terrain that makes crop classification challenging. Our study area is Xiuwen County, Guiyang City, Guizhou Province (Figure 1). The terrain of Xiuwen County slopes from east to northwest, with the highest altitude reaching 1610 m and the lowest 666 m; most areas lie between 1200 and 1300 m. Xiuwen County has a subtropical humid monsoon climate that is mild year-round, with no hot summers or cold winters, and abundant rainfall. The annual average temperature is 13–16 °C, the annual average precipitation is about 1000–1100 mm, and the annual runoff is 500–600 mm. The staple crops in Xiuwen County are mainly rice, corn, wheat, and potatoes.

Figure 1.

The geographical location and image of Xiuwen County. (a) The location of Guizhou Province in China. (b) The location of Xiuwen County in Guizhou Province. (c) High-resolution images of Xiuwen County.

3. Methods

3.1. Dataset

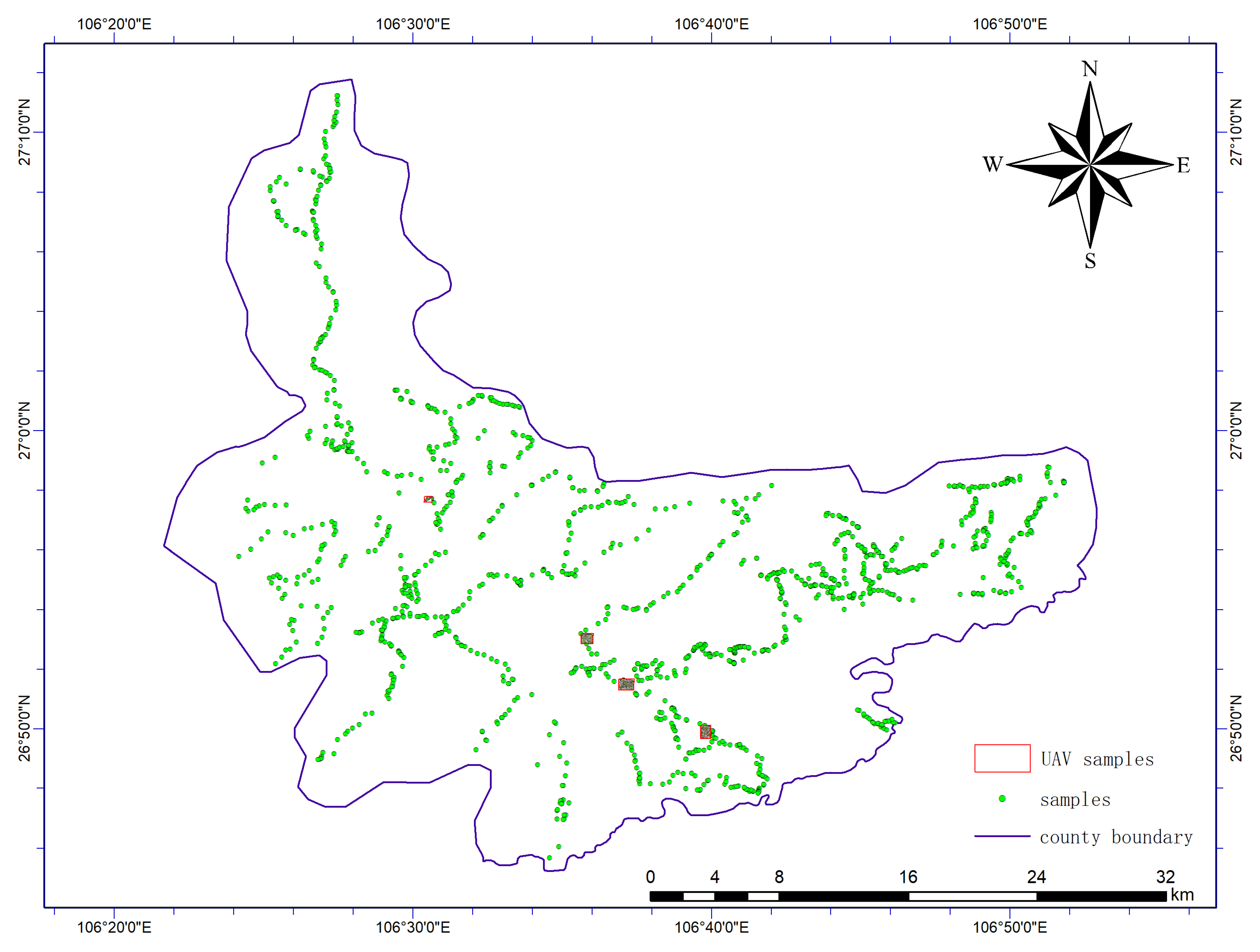

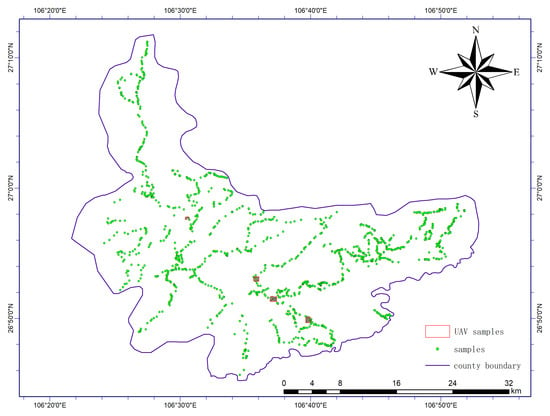

In this study, a classification dataset for Xiuwen County was established to train and validate the model. The dataset includes sample data, optical bands, and spectral features from various locations within Xiuwen County. Specifically, samples on the high-resolution remote sensing images (0.8 m) were labeled with reference to ultrahigh-resolution unmanned aerial vehicle (UAV) images (<0.1 m) and ground sampling data (Figure 2).

Figure 2.

Sample distribution in the study area.

The red, green, and blue bands (RGB) from GF-2 data with a spatial resolution of 0.8 m were used as the optical bands, corresponding to wavelengths of 630–690 nm, 520–590 nm, and 450–520 nm, respectively.

The near-infrared (NIR) band was also obtained from the GF-2 data. The normalized difference vegetation index (NDVI) was calculated from the NIR and red bands and used as the spectral feature (SF).

RGB was used as the main branch input, and NIR and SF were used as auxiliary branch input.
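As a minimal sketch of this input preparation, the NumPy code below computes NDVI with its standard definition, NDVI = (NIR − Red) / (NIR + Red), and stacks the two branch inputs. The channel-first (bands, H, W) layout, the band order [blue, green, red, NIR] of the 4-band patch, and the small `eps` term are assumptions for illustration, not details taken from the original pipeline.

```python
import numpy as np


def ndvi(nir: np.ndarray, red: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """NDVI = (NIR - Red) / (NIR + Red); eps avoids division by zero."""
    nir = nir.astype(np.float32)
    red = red.astype(np.float32)
    return (nir - red) / (nir + red + eps)


def build_branch_inputs(patch: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Split a (4, H, W) patch into main-branch and auxiliary-branch inputs.

    Assumed band order: [blue, green, red, NIR].
    """
    blue, green, red, nir = patch.astype(np.float32)
    main = np.stack([red, green, blue])     # (3, H, W): RGB for the main branch
    aux = np.stack([nir, ndvi(nir, red)])   # (2, H, W): NIR + SF for the auxiliary branch
    return main, aux


# Usage on a synthetic 256 x 256 patch (values are made up)
patch = np.zeros((4, 256, 256), dtype=np.uint16)
patch[2] = 100   # red
patch[3] = 300   # NIR
main, aux = build_branch_inputs(patch)
```

Here every pixel has NDVI = (300 − 100) / (300 + 100) = 0.5; casting to floating point before subtracting avoids unsigned-integer underflow when red exceeds NIR.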

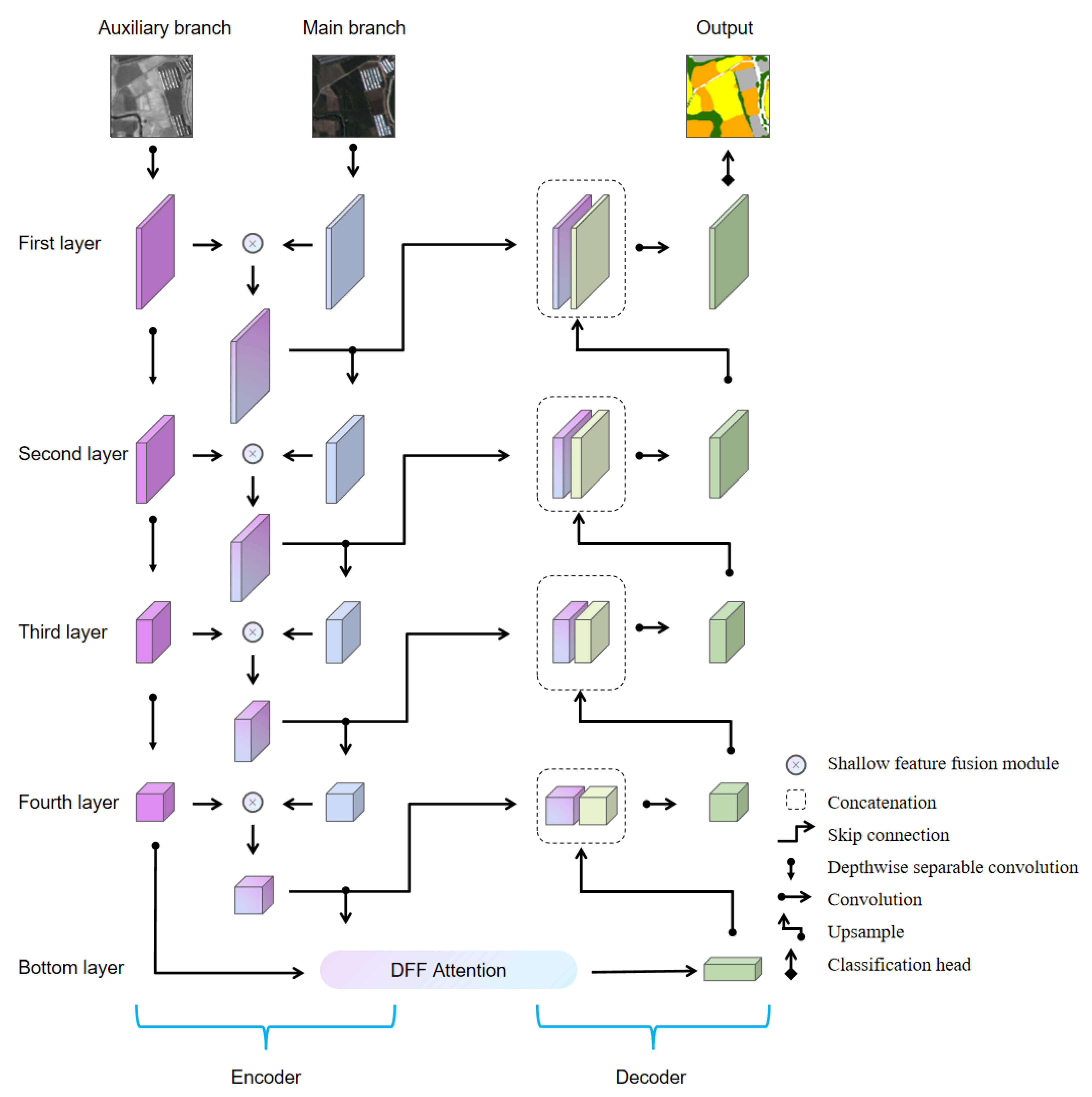

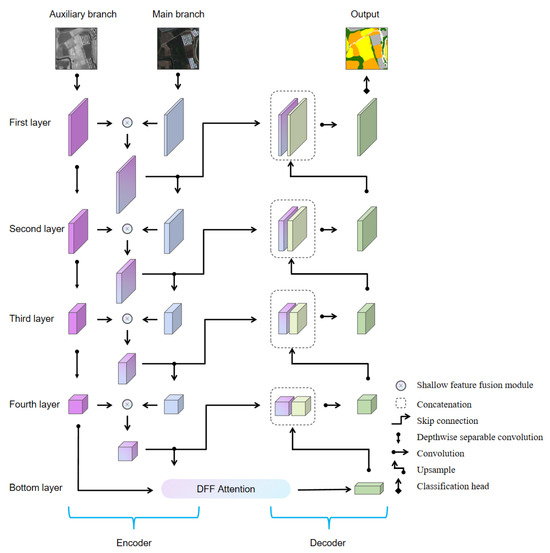

3.2. The Architecture of Complex Scene Crop Classification U-Net (CSCCU)

The network structure of CSCCU is shown in Figure 3. Its U-Net-style encoder learns both shallow and deep features through successive convolution layers. CSCCU consists of two branches: the main branch takes the RGB bands as input, and the auxiliary branch takes the NIR band and the spectral feature data. By incorporating an attention module into U-Net, CSCCU achieves better plot identification and boundary capture.

Figure 3.

Overview of the proposed Complex Scene Crop Classification U-Net (CSCCU). The encoders of both branches are built from CNN blocks, each consisting of two depthwise separable convolutions. In the first four layers, the CNN blocks of the two branches are fused by SFF modules, and at the bottom layer the DFF module allocates attention. The decoder uses cascaded upsampling to generate the segmentation map from the fused features.

In the encoder, SFF modules perform shallow-level feature fusion and the bottom DFF module performs deep-level feature fusion. In the first four layers, the features extracted by both branches are enhanced and fused by SFF modules, and the fused features are used as the output of the main branch and passed to the next layer. At the bottom of the encoder, a DFF attention layer fuses the two branch features; through attention allocation, it further fuses the deep features to strengthen the contextual representation. Finally, the output of the DFF attention layer is passed to the cascaded upsampling decoder to recover spatial information. Notably, the hierarchical feature fusion scheme in CSCCU can also be used for multi-modal data feature fusion.

In the following sections, we will introduce the key feature fusion modules of CSCCU in detail.

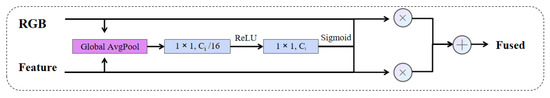

3.2.1. SFF Module

We use $X \in \mathbb{R}^{H \times W \times C_1}$ and $Y \in \mathbb{R}^{H \times W \times C_2}$ to denote the inputs of the main branch and the auxiliary branch, where H and W are the height and width of the inputs and $C_1$ and $C_2$ are their channel counts. In this study, the main branch has three channels ($C_1 = 3$) and the auxiliary branch has two ($C_2 = 2$) (Figure 3). The dual-branch encoder of CSCCU extracts features from each input, and its first four convolutional layers use SFF modules for hierarchical feature fusion. After each encoder layer, the feature map is downsampled by half; that is, H and W are divided by 2 relative to the previous layer. The fused output of each layer is used as the input to the next layer of the main branch, so the features extracted by the auxiliary branch are fused into the main branch to enhance it, whereas the next layer of the auxiliary branch receives only the features extracted by the previous auxiliary layer. In addition, the SFF output of each layer serves as the encoder feature map in the corresponding skip connection to the decoder, whose layers recover spatial and contextual information.
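The data flow described above can be traced with the shape-level NumPy sketch below. It is not the CSCCU implementation: `conv_stub` (a random 1 × 1 projection with ReLU), `sff_stub` (plain addition), the 2 × 2 average pooling, the channel-first (C, H, W) layout, and the channel widths (64, 128, 256, 512) are all hypothetical placeholders, chosen only to show which tensor feeds which branch and how H and W halve at each level, assuming U-Net-style pooling after each fusion.

```python
import numpy as np

RNG = np.random.default_rng(0)


def conv_stub(x: np.ndarray, out_ch: int) -> np.ndarray:
    """Hypothetical stand-in for a CNN block: random 1 x 1 projection + ReLU."""
    w = RNG.standard_normal((out_ch, x.shape[0])) / np.sqrt(x.shape[0])
    return np.maximum(np.einsum("oc,chw->ohw", w, x), 0.0)


def pool2(x: np.ndarray) -> np.ndarray:
    """2 x 2 average pooling: halves H and W of a (C, H, W) array."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def sff_stub(f_main: np.ndarray, f_aux: np.ndarray) -> np.ndarray:
    """Placeholder for the SFF module (plain element-wise addition here)."""
    return f_main + f_aux


def dual_branch_encoder(x, y, widths=(64, 128, 256, 512)):
    """x: main-branch input (3, H, W); y: auxiliary input (2, H, W)."""
    skips = []
    for ch in widths:
        f_main, f_aux = conv_stub(x, ch), conv_stub(y, ch)
        fused = sff_stub(f_main, f_aux)
        skips.append(fused)   # fused map also feeds the skip connection
        x = pool2(fused)      # main branch continues from the fused features
        y = pool2(f_aux)      # auxiliary branch continues from its own features only
    return x, y, skips        # x and y then meet in the DFF module at the bottom
```

With 256 × 256 inputs, the skip maps in this sketch come out at 256, 128, 64, and 32 pixels per side and the two bottom feature maps at 16 × 16, which is where the DFF module would operate.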

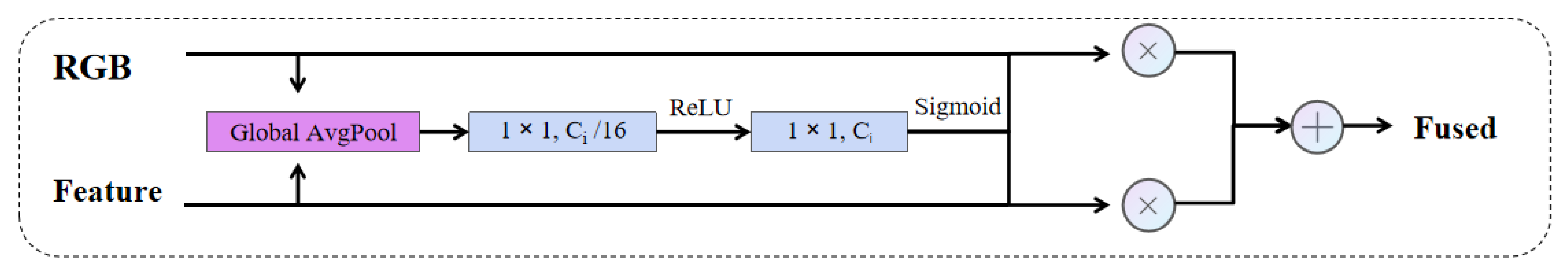

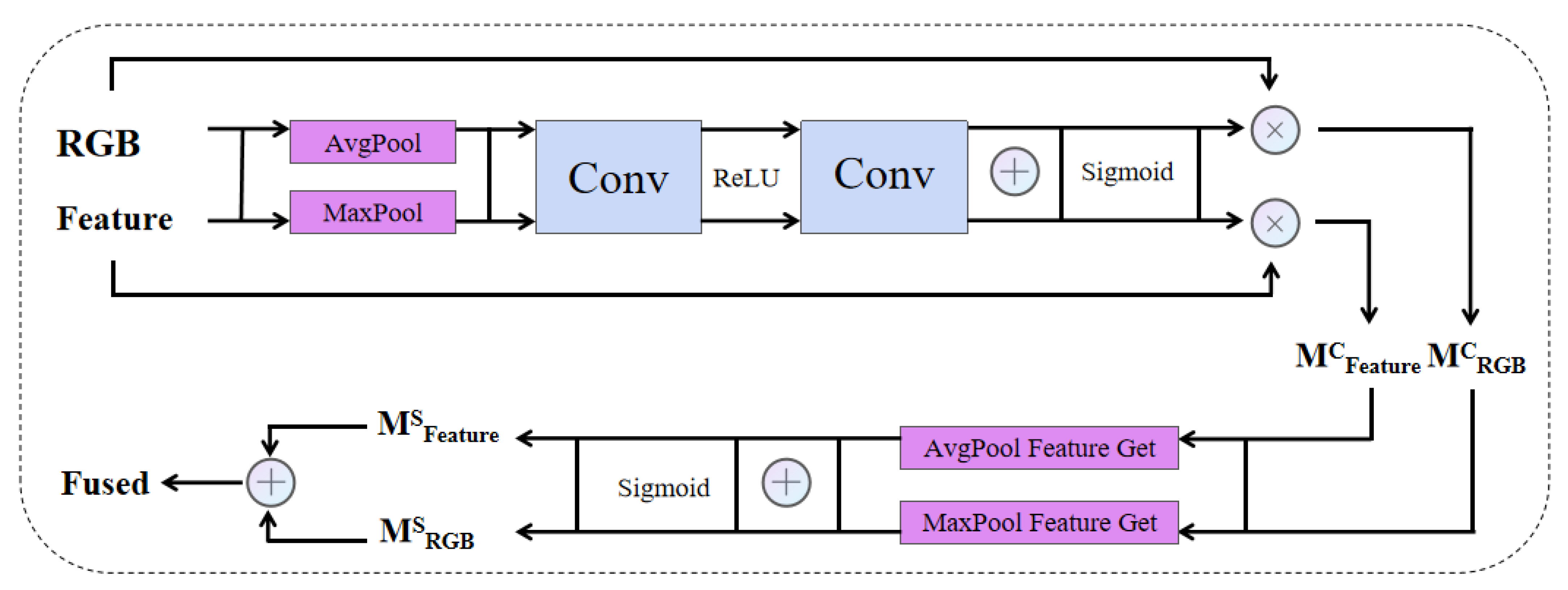

Figure 4 shows the structure of the SFF module. The SFF module first performs global average pooling on the input data of the two branches to aggregate global information and then performs the SE process using depthwise separable convolution twice and uses ReLU and Sigmoid activation functions, respectively. Finally, the features of the main branch and the auxiliary branch are weighted and combined element by element to realize the shallow feature fusion.
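A minimal NumPy sketch of this SFF computation follows, assuming channel-first (C, H, W) features with the same channel count in both branches and one SE module per branch. Because the SE step operates on a globally pooled 1 × 1 map, each depthwise separable convolution is modeled here as a single dense matrix without bias; the reduction ratio r and the weight shapes are assumptions, not values from the paper.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def se_weights(feat: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Channel weights for one branch.

    feat: (C, H, W); w1: (C // r, C); w2: (C, C // r).
    On a 1 x 1 pooled map, each depthwise separable conv is modeled as one matrix.
    """
    squeezed = feat.mean(axis=(1, 2))         # global average pooling -> (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)   # first conv + ReLU
    return sigmoid(w2 @ hidden)               # second conv + Sigmoid -> (C,)


def sff(main, aux, params_main, params_aux):
    """Re-weight each branch channel-wise, then fuse by element-wise addition."""
    a_main = se_weights(main, *params_main)[:, None, None]
    a_aux = se_weights(aux, *params_aux)[:, None, None]
    return main * a_main + aux * a_aux
```

With all weights set to zero, every channel weight becomes sigmoid(0) = 0.5, so the module reduces to averaging the two branches; learned weights let it emphasize whichever branch is more informative per channel.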

Figure 4.

The composition of the SFF module. It takes the features of two branches as input, enhances the features in a channel-level manner, and then fuses the information between branches through element-level addition.

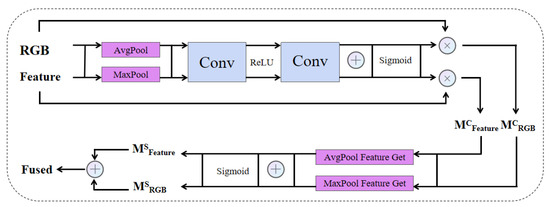

3.2.2. DFF Module

The attention mechanism has been widely recognized in deep learning. Many scholars have studied the performance of attention mechanisms in deep learning and proposed improved methods such as CBAM [36], DA [37], and MSCA [38]. Inspired by the above research, our DFF module is composed of two parts: channel attention and spatial attention (Figure 5).

Figure 5.

The proposed DFF module performs deep-level feature fusion at the bottom of the model. It uses both average pooling and max pooling to fuse features along the channel and spatial dimensions and finally merges the results through element-wise addition.

The channel attention unit generates a channel attention map by analyzing the interactions between different channels, where each channel acts as a feature detector. To efficiently capture channel attention, we reduce the spatial dimensions of the input. While average pooling (Avgpool) is commonly used for this purpose, maximum pooling (Maxpool) can provide additional information to help refine the attention across different channels; therefore, we utilize Avgpool and Maxpool at the same time.

In the channel attention part, we first apply Avgpool and Maxpool to each branch to aggregate the spatial information of the feature map, obtaining two spatial context descriptors, $F_{avg}$ and $F_{max}$. Both descriptors are then passed through a shared network, a multi-layer perceptron (MLP) with one hidden layer, to generate a channel attention map $M_c \in \mathbb{R}^{C \times 1 \times 1}$. A reduction ratio r sets the size of the hidden layer to $C/r \times 1 \times 1$. The two output vectors of the shared network are combined by element-wise summation.
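The channel attention step can be sketched in NumPy as below, following the CBAM formulation [36] that the DFF module draws on. The final Sigmoid, the ReLU in the hidden layer, and the absence of biases follow CBAM conventions and are assumptions here, since the text does not specify them; the resulting map would rescale the branch features as `feat * M_c`.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(feat: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """CBAM-style channel attention map M_c of shape (C, 1, 1).

    feat: (C, H, W); w1: (C // r, C) and w2: (C, C // r) are the shared MLP weights.
    """
    f_avg = feat.mean(axis=(1, 2))   # F_avg: average-pooled descriptor, (C,)
    f_max = feat.max(axis=(1, 2))    # F_max: max-pooled descriptor, (C,)

    def shared_mlp(v: np.ndarray) -> np.ndarray:
        return w2 @ np.maximum(w1 @ v, 0.0)   # hidden layer of size C / r with ReLU

    # Same MLP weights for both descriptors, outputs summed element-wise
    return sigmoid(shared_mlp(f_avg) + shared_mlp(f_max)).reshape(-1, 1, 1)
```

Sharing one MLP between the two descriptors keeps the parameter count at about 2C²/r regardless of how many pooled descriptors are used.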

All convolution blocks in the DFF module are depthwise separable convolutions. This widely used operation decomposes a conventional convolution into two steps: a depthwise convolution, which filters each input channel separately, and a pointwise (1 × 1) convolution, which mixes the channels. It reduces the number of parameters and the computational cost while largely maintaining model accuracy, and well-known networks such as Xception [34] and MobileNet [39] use it.
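To make the savings concrete, the short Python calculation below counts weights for a 3 × 3 convolution mapping 64 to 128 channels; these layer sizes are illustrative and are not taken from CSCCU. Ignoring biases, the separable version needs about 12% of the parameters, and in general the ratio is 1/C_out + 1/k².

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k filter per input channel plus a 1 x 1 pointwise conv (bias ignored)."""
    return k * k * c_in + c_in * c_out


std = standard_conv_params(3, 64, 128)         # 73,728
sep = depthwise_separable_params(3, 64, 128)   # 576 + 8,192 = 8,768
ratio = sep / std                              # equals 1/c_out + 1/k**2, about 0.119
```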

In the spatial attention part, the spatial attention map is generated from the spatial relationships between input features. Whereas channel attention focuses on what information is important, spatial attention focuses on where that information is located.

To compute spatial attention, we apply Avgpool and Maxpool along the channel axis of each branch and concatenate the two pooled maps into a single feature. Pooling along the channel axis has been shown to effectively highlight informative regions [36]. A convolution is then applied to the concatenated feature to generate the final spatial attention map $M_s \in \mathbb{R}^{H \times W}$, which emphasizes or suppresses each spatial location.
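A minimal NumPy sketch of the spatial attention step is shown below. The 7 × 7 kernel size (the CBAM default), the zero padding that keeps the map at H × W, the single-output convolution without bias, and the final Sigmoid are assumptions; the convolution is written as an explicit loop for clarity rather than speed.

```python
import numpy as np


def conv2d_same(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-output 2D cross-correlation with zero padding.

    x: (C_in, H, W); kernel: (C_in, k, k) with odd k. Returns (H, W).
    """
    _, h, w = x.shape
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))   # zero padding
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return out


def spatial_attention(feat: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Spatial attention map M_s of shape (H, W) for a (C, H, W) feature."""
    avg_map = feat.mean(axis=0)                 # average pooling along the channel axis
    max_map = feat.max(axis=0)                  # max pooling along the channel axis
    stacked = np.stack([avg_map, max_map])      # concatenate -> (2, H, W)
    return 1.0 / (1.0 + np.exp(-conv2d_same(stacked, kernel)))
```

Multiplying `feat * spatial_attention(feat, kernel)[None]` then rescales every location of every channel by the same spatial weight.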

3.2.3. The Cascaded Decoder

In the cascaded decoder (Figure 3), multiple upsampling modules are applied in turn to decode the fused features. Specifically, the decoder first upsamples the output of the DFF module at the bottom of the network to the size of the corresponding encoder layer and then concatenates it with the fused features from that layer, which implements the skip connection. This process repeats until the spatial resolution is restored to the original input size H × W. Each decoder block includes upsampling and convolution operations. Finally, the classification head produces the prediction.
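The cascaded decoding loop can be sketched as follows, assuming channel-first (C, H, W) arrays, nearest-neighbour 2× upsampling, and U-Net-style skip maps at 2×, 4×, 8×, and 16× the bottom resolution. `project` is a hypothetical stand-in for a decoder convolution block (a random 1 × 1 projection with ReLU), and the random classification head only illustrates how a per-pixel class map is produced.

```python
import numpy as np


def upsample2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of a (C, H, W) array."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def project(z: np.ndarray, out_ch: int, rng: np.random.Generator) -> np.ndarray:
    """Hypothetical decoder conv block: random 1 x 1 projection + ReLU."""
    w = rng.standard_normal((out_ch, z.shape[0])) / np.sqrt(z.shape[0])
    return np.maximum(np.einsum("oc,chw->ohw", w, z), 0.0)


def cascaded_decoder(bottom, skips, num_classes, rng):
    """bottom: fused (DFF) features; skips: fused encoder maps, shallowest first."""
    x = bottom
    for skip in reversed(skips):                   # deepest skip connection first
        up = upsample2x(x)                         # restore the size of this encoder level
        x = project(np.concatenate([up, skip]), skip.shape[0], rng)   # concat along channels
    head = rng.standard_normal((num_classes, x.shape[0]))   # stand-in 1 x 1 classification head
    logits = np.einsum("kc,chw->khw", head, x)
    return logits.argmax(axis=0)                   # (H, W) map of class indices
```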

3.3. Evaluation Metrics

In this paper, we segment and classify various land objects, including cultivated land (corn, rice, and other crops), vegetation, buildings, impervious surfaces, and water. To evaluate model performance, we use mean intersection over union (mIoU) and overall accuracy (OA) as the key indicators.

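OA is the proportion of correctly classified pixels across all classes, while mIoU is the ratio of the intersection to the union of the predicted and true regions, averaged over all classes. With K classes and $p_{ij}$ denoting the number of pixels of true class i predicted as class j, the formulas are as follows:

$$\mathrm{OA} = \frac{\sum_{i=1}^{K} p_{ii}}{\sum_{i=1}^{K} \sum_{j=1}^{K} p_{ij}}$$

$$\mathrm{mIoU} = \frac{1}{K} \sum_{i=1}^{K} \frac{p_{ii}}{\sum_{j=1}^{K} p_{ij} + \sum_{j=1}^{K} p_{ji} - p_{ii}}$$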
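Both metrics follow from a K × K confusion matrix whose entry (i, j) counts pixels of true class i predicted as class j: OA is the trace divided by the total, and each class IoU is TP / (TP + FP + FN). The NumPy sketch below computes them; excluding classes absent from both labels and predictions from the mIoU average is our assumption, since the text does not say how such classes are handled.

```python
import numpy as np


def confusion_matrix(y_true: np.ndarray, y_pred: np.ndarray, num_classes: int) -> np.ndarray:
    """Entry (i, j) counts pixels of true class i predicted as class j."""
    idx = num_classes * y_true.ravel() + y_pred.ravel()
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def overall_accuracy(cm: np.ndarray) -> float:
    return float(np.trace(cm) / cm.sum())


def mean_iou(cm: np.ndarray) -> float:
    tp = np.diag(cm).astype(float)
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp   # TP + FP + FN per class
    iou = np.divide(tp, union, out=np.zeros_like(tp), where=union > 0)
    return float(iou[union > 0].mean())            # skip classes absent everywhere


# Tiny 2 x 2 example with two classes
y_true = np.array([[0, 0], [1, 1]])
y_pred = np.array([[0, 1], [1, 1]])
cm = confusion_matrix(y_true, y_pred, num_classes=2)
```

In this example one class-0 pixel is mislabeled as class 1, so OA = 3/4, IoU is 1/2 for class 0 and 2/3 for class 1, and mIoU = 7/12.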

4. Results

4.1. The Experimental Setting

The input patches are 256 × 256 pixels. The 1000 patches were divided into training and test sets at a ratio of 8:2, that is, 800 patches for training and 200 for testing. The experimental settings are listed in Table 1.
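A reproducible 8:2 split of the 1000 patches can be sketched with the standard library as follows. Shuffling patch IDs uniformly at random with a fixed seed is an assumption: the text does not state whether the split was random or spatially stratified, and because neighbouring patches can be correlated, a spatially separated split would give a stricter estimate of generalization.

```python
import random


def split_patches(patch_ids, train_ratio=0.8, seed=42):
    """Shuffle patch IDs with a fixed seed and split them into train/test lists."""
    ids = list(patch_ids)
    random.Random(seed).shuffle(ids)
    n_train = round(len(ids) * train_ratio)
    return ids[:n_train], ids[n_train:]


train_ids, test_ids = split_patches(range(1000))
```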

Table 1.

The experimental setting.

No pretrained weights were used: all models, including U-Net and DeeplabV3+, were trained from scratch so that the comparisons are fair.

Our classification system divides ground objects into seven categories: corn, rice, other crops, vegetation, buildings, water, and impervious surfaces.

4.2. Comparisons of CSCCU Among Different Methods

In this section, we compare the proposed method with several well-known semantic segmentation models: U-Net [40], SegNet [41], and DeeplabV3+ [42]. The results are presented in Table 2. U-Net has a U-shaped encoder–decoder structure with skip connections that pass encoder features to the decoder, which improves segmentation accuracy; however, it may still blur objects with complex edge details. SegNet has a relatively simple architecture and performs well on basic image segmentation tasks. However, its decoder upsamples with a fixed, non-learned operation (reusing the encoder's max-pooling indices), which limits its ability to learn task-specific upsampling patterns and may lose finer details during segmentation. DeeplabV3+ builds a spatial pyramid pooling module from dilated convolutions, yet it does not fully exploit dilated convolution to enlarge the receptive field effectively, which leads to unsatisfactory performance on multi-scale objects.

Table 2.

Comparison of results between different methods.

The proposed method achieves an mIoU of 85.61%, while U-Net, SegNet, and DeepLabv3+ achieve 82.21%, 78.16%, and 81.93%, respectively; CSCCU therefore leads by 3.40, 7.45, and 3.68 percentage points. We attribute this improvement mainly to the dual (channel and spatial) attention of the DFF module, which compensates for the limited receptive field of conventional convolutions and enables more comprehensive feature extraction for irregularly shaped objects. For the staple crop categories, CSCCU outperforms the compared models by 5.56–10.20 percentage points for corn, 7.66–14.92 percentage points for rice, and 0.56–5.04 percentage points for other crops (Table 2).

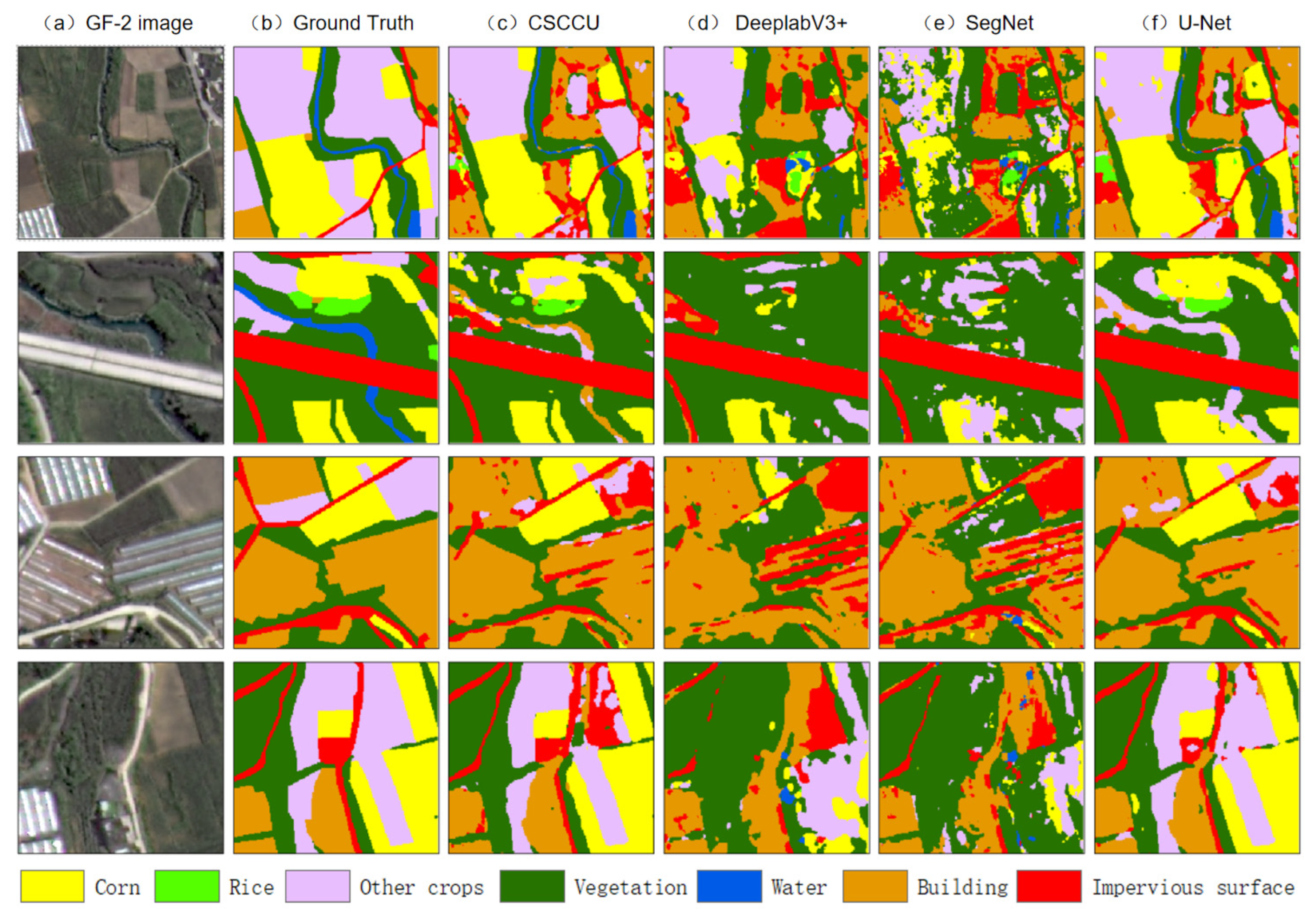

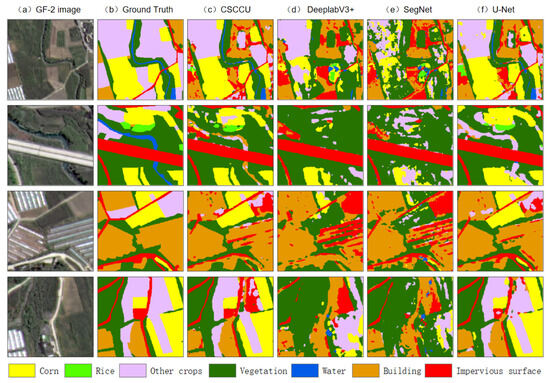

Specifically, we compared the prediction results under different crop distributions, farmland plot areas, and terrain conditions to further evaluate the performance of CSCCU in staple crop classification.

As shown in Figure 6, CSCCU classifies staple crops in complex scenes more reliably than the other methods. In the first row, objects are small and unevenly distributed; SegNet and DeeplabV3+ fail to identify the crops next to the building on the left of the image and the partially silted rivers in the middle. In the second row, rice and other crops are interlaced; the other models (U-Net, SegNet, and DeepLabv3+) confuse rice with corn plots, SegNet and DeeplabV3+ do not identify rice at all, and only U-Net and our model identify most of the rice. In the third row, weeds adjacent to the corn cause the other models to identify corn poorly and to blur the corn plot boundary. In the fourth row, corn and other crops are interlaced, and our model identifies the corn and other-crop plots more completely.

Figure 6.

Identification of major features by various methods in four example scenes.

Compared with the other methods, our model better captures the correlation between the auxiliary features and the visible bands through hierarchical feature fusion, which enables accurate identification of staple crops; for example, it can exploit the proximity between water and rice to strengthen rice identification.

Therefore, the results across multiple example scenes indicate that the proposed method is effective for classifying staple crop plots in complex scenes, and that the DFF module enhances the features of remote sensing images, yielding better results than the conventional classification methods.

4.3. Results Under Different Input Data

Table 3 presents the classification accuracy of various models using different input data. The results reveal the following insights:

- (1) Overall, the proposed CSCCU model achieved the highest classification accuracy for staple crops across the different input combinations. Specifically, with RGB, NIR, and SF as input, CSCCU reached an mIoU of 85.61%, which is 3.40, 7.45, and 3.68 percentage points higher than that of U-Net, SegNet, and DeeplabV3+, respectively. Its OA of 89.51% is 6.34, 12.41, and 2.91 percentage points higher than that of U-Net, SegNet, and DeeplabV3+, respectively. CSCCU also recorded the highest IoU among all models.

- (2) Adding NIR and SF to the input improved performance: the models in Table 3 that use multiple input data outperformed those that rely solely on RGB. With RGB only, the OA is 76.39% (U-Net), 72.33% (SegNet), 80.43% (DeeplabV3+), and 79.58% (CSCCU); with RGB, NIR, and SF, it rises to 83.17% (U-Net), 77.10% (SegNet), 86.60% (DeeplabV3+), and 89.51% (CSCCU), gains of 6.78, 4.77, 6.17, and 9.93 percentage points, respectively. Thus, all four models achieve higher accuracy with RGB, NIR, and SF than with RGB alone, and CSCCU with RGB, NIR, and SF obtained the highest values in all categories (Table 3).
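The OA gains discussed in point (2) can be recomputed directly from the Table 3 values with the short Python snippet below; it subtracts the RGB-only OA from the RGB + NIR + SF OA for each model and reports the difference in percentage points. For U-Net, for instance, this is 83.17 − 76.39 = 6.78.

```python
# OA (%) from Table 3 as (RGB only, RGB + NIR + SF)
OA_TABLE3 = {
    "U-Net": (76.39, 83.17),
    "SegNet": (72.33, 77.10),
    "DeeplabV3+": (80.43, 86.60),
    "CSCCU": (79.58, 89.51),
}


def oa_gain_pp(table):
    """Absolute OA gain in percentage points from adding NIR and SF."""
    return {model: round(full - rgb, 2) for model, (rgb, full) in table.items()}


gains = oa_gain_pp(OA_TABLE3)
```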

Table 3.

Results under different input features between different methods.


| Method | Input Data | Corn Accuracy (%) | Rice Accuracy (%) | OA (%) | mIoU (%) |
|---|---|---|---|---|---|
| U-Net | RGB, NIR, SF | 83.44 | 80.95 | 83.17 | 82.21 |
| | RGB, NIR | 82.87 | 79.64 | 81.35 | 81.02 |
| | RGB | 78.38 | 72.15 | 76.39 | 74.14 |
| SegNet | RGB, NIR, SF | 79.52 | 73.69 | 77.10 | 78.16 |
| | RGB, NIR | 78.83 | 71.72 | 76.25 | 75.36 |
| | RGB | 70.03 | 67.52 | 72.33 | 69.14 |
| DeeplabV3+ | RGB, NIR, SF | 84.16 | 78.28 | 86.60 | 81.93 |
| | RGB | 80.80 | 74.46 | 80.43 | 79.50 |
| CSCCU | RGB, NIR, SF | **89.72** | **88.61** | **89.51** | **85.61** |
| | RGB, NIR | 87.74 | 85.28 | 86.33 | 83.60 |
| | RGB | 79.18 | 75.96 | 79.58 | 76.47 |

Bolded values represent the maximum values in the table.

4.4. Ablation Experiment

In this section, we conducted two sets of ablation experiments to evaluate the effectiveness of the dual-branch network design of CSCCU and its feature fusion module.

In the first experiment, to investigate the effectiveness of the dual-branch structure, we input all data through the main branch without feeding any data into the auxiliary branch, thereby simulating a single-branch structure. The results were compared with those obtained using the dual-branch input, as shown in Table 4, demonstrating the significant advantage of the dual-branch structure. Specifically, compared to the single-branch input, the dual-branch structure improved the classification accuracy of corn by 6.57%, rice by 10.28%, and overall accuracy by 9.31%. This indicates that the dual-branch structure is highly effective in improving model accuracy.

Table 4.

The effectiveness of the dual branch structure.

In the second experiment, based on the dual-branch structure, we divided the experiment into three parts to evaluate the effectiveness of the SFF and DFF modules (Table 5).

Table 5.

The effectiveness of SFF and DFF.

- (a) The first part examined the effectiveness of the feature fusion module at the bottom of the network. Because the output of the bottom layer feeds directly into the decoder, we did not remove the bottom fusion module but replaced DFF with an SFF module. This replacement led to an overall decline in accuracy: compared with SFF, the DFF module improved the classification accuracy of corn by 2.23%, rice by 4.39%, and overall accuracy by 3.45%. This indicates that using DFF for feature fusion at the bottom layer is effective.

- (b) The second part investigated the effectiveness of the SFF module by suppressing it in individual shallow layers, meaning that no feature fusion was performed at the suppressed layer. Suppressing the SFF module at any layer led to an overall decline in accuracy, indicating that the SFF module plays an important role at every layer.

- (c) The third part studied how reducing the number of SFF layers affects performance by progressively suppressing SFF in the shallow layers: in the first two, the first three, and the first four layers, respectively. Accuracy declined with each additional suppressed SFF layer. When SFF was suppressed in all four shallow layers, overall accuracy decreased by 7.77% compared with the model using all SFF modules. This demonstrates the effectiveness of the SFF module in the shallow layers.

Overall, the results of the ablation experiments further confirm that the proposed dual-branch network based on the hierarchical feature fusion strategy can more effectively extract and integrate multimodal data, thereby achieving better performance and enabling fine-grained classification of staple crops in complex scenes.

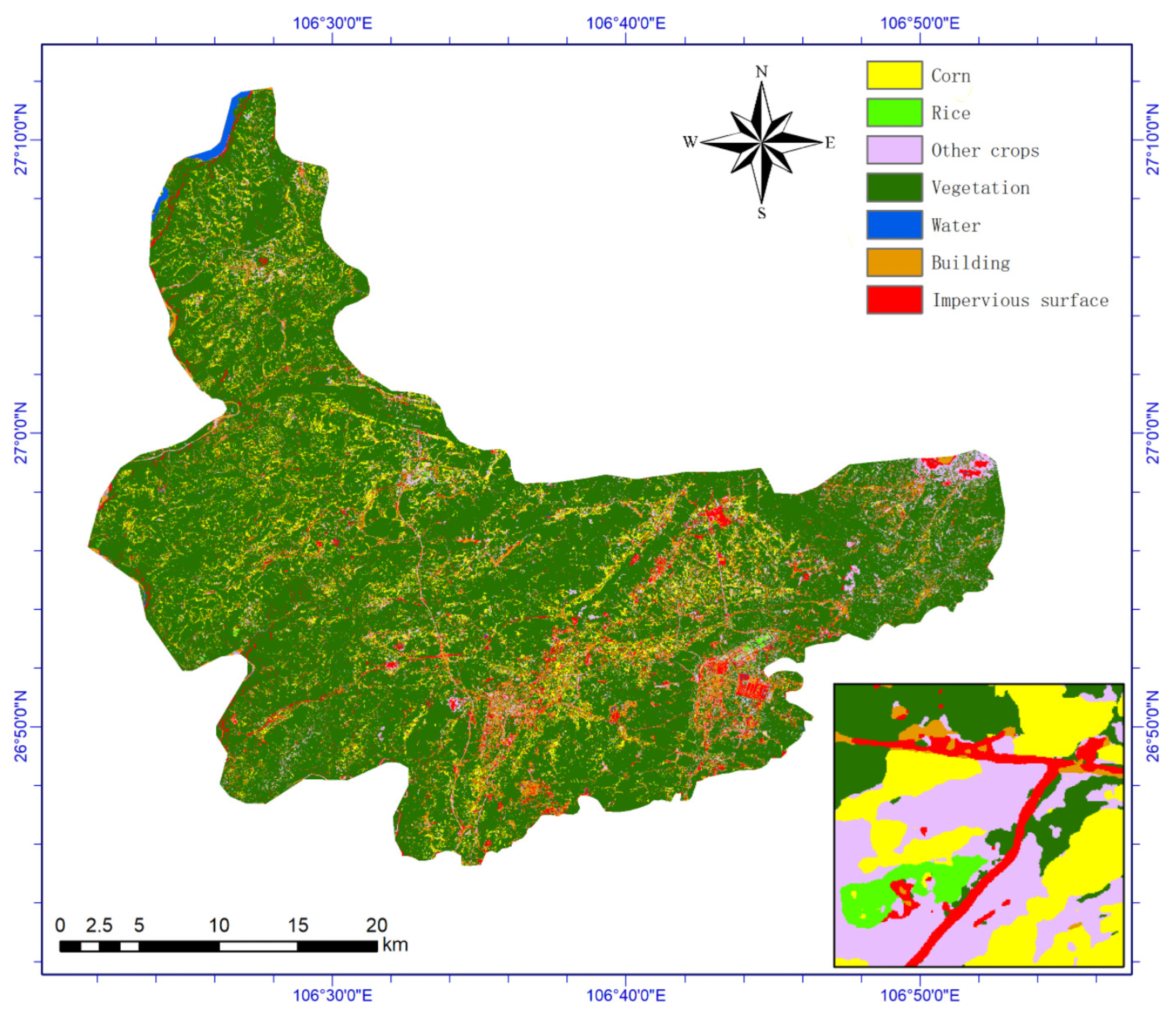

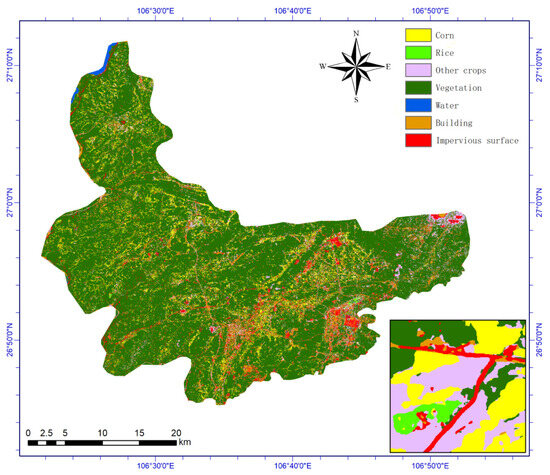

4.5. Mapping Results in Xiuwen County

As shown in Figure 7, we used the proposed CSCCU method to complete the classification of staple crops at a resolution of 0.8 m in Xiuwen County, Guizhou Province, in 2023, including corn, rice, and other categories.

Figure 7.

Mapping results from GF-2 satellite images in Xiuwen County, Guizhou Province, China, obtained by the proposed CSCCU method.

5. Discussion

5.1. Structural Advantages for Complex Scene Applications

In this study, we proposed a neural network for complex scene classification, named CSCCU, aimed at the accurate mapping of staple crops in challenging environments. Xiuwen County, Guiyang City, Guizhou Province, in southwestern China, is characterized by typical mountainous terrain, and its complex topography, climatic conditions, and variable crop planting practices make staple crop classification difficult. Previous studies have applied remote sensing technology to crop mapping [30,43,44,45,46,47] with varying focuses. Some researchers improved mapping accuracy by increasing the amount and modalities of data, for example by using large-scale training datasets or time series images as input [30,43]. In complex scenes, however, acquiring large-scale data is often difficult, and maintaining temporal continuity can be hard, especially for high-resolution imagery. Other studies focus on regions with relatively simple planting conditions, such as flat areas or large, regular plots [48]. Because complex scenes are characterized by land fragmentation and variable planting conditions, these methods may not achieve accurate results there.

To address the challenge of staple crop classification in complex scenes, CSCCU uses a dual-branch design that takes NIR and SF as additional input. Integrating these spectral features into the deep learning model, together with attention allocation in the DFF module, improves classification accuracy. Compared with mainstream models such as U-Net, SegNet, and DeepLabV3+, CSCCU achieved better results in the classification and mapping tasks for Xiuwen County (Table 2), particularly for staple crops such as corn and rice. Compared with other crop classification studies [30,31,49,50] (Table 6), our model achieves similar or higher accuracy in complex scenes with less input data.

Table 6. Comparison with other crop classification studies.

5.2. The Performance Advantages of CSCCU

The proposed CSCCU has a dual-branch structure, and shallow and deep hierarchical feature-level fusion combines the information from the two branches more effectively, which improves classification accuracy. As shown in Table 2 and Table 3, the dual-branch design lets the model learn discriminative features from both the main branch and the auxiliary branch, as confirmed by the comparison with U-Net, SegNet, and DeepLabV3+. The hierarchical feature-level fusion scheme also improves the accuracy of staple crop classification in complex scenes. Specifically, when RGB, NIR, and SF are used together, the overall accuracy of CSCCU is 2.91% to 12.41% higher than that of U-Net, SegNet, and DeepLabV3+. We attribute this advantage to the structural design of CSCCU, which adopts hierarchical feature-level fusion. First, the SFF module performs shallow feature fusion at each layer, combining the texture and edge information captured by the two branches and passing the result to the next layer. Then, the DFF module performs deep feature fusion at the bottom layer, further integrating abstract information through attention allocation. In this way, the model makes full use of the rich input information.
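To make the hierarchical shallow fusion more concrete, the following NumPy sketch shows how a fused map can be produced at every encoder level and passed down to the next one. It is an illustration under stated assumptions, not the authors’ SFF module: the fusion rule (channel concatenation, a 1×1 projection, and ReLU), the 2×2 average pooling, the random projection weights, and the names `sff` and `hierarchical_encoder` are all hypothetical, and the 3×3 (depthwise separable) convolutions and channel growth of a real U-Net encoder are omitted. The deep fusion step at the bottleneck is not modeled here.

```python
import numpy as np


def conv1x1(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Pointwise (1x1) convolution: x has shape (C_in, H, W), w has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def avg_pool2(x: np.ndarray) -> np.ndarray:
    """2x2 average pooling with stride 2 on a (C, H, W) array; H and W must be even."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def sff(main: np.ndarray, aux: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Hypothetical shallow fusion: concatenate the branches along the channel axis,
    project back to the main-branch width with a 1x1 convolution, then apply ReLU."""
    fused = conv1x1(np.concatenate([main, aux], axis=0), w)
    return np.maximum(fused, 0.0)


def hierarchical_encoder(main: np.ndarray, aux: np.ndarray, levels: int = 3, seed: int = 0):
    """Fuse the two branches at every level and pass the fused map down to the next level.

    Returns the per-level fused maps (usable as skip connections) and the
    bottleneck inputs of both branches, which a deep fusion step would consume.
    """
    rng = np.random.default_rng(seed)
    skips = []
    for _ in range(levels):
        c_main, c_aux = main.shape[0], aux.shape[0]
        # Random weights stand in for learned parameters.
        w = rng.standard_normal((c_main, c_main + c_aux)) * 0.1
        fused = sff(main, aux, w)
        skips.append(fused)
        # The fused map continues down the main branch; the auxiliary branch keeps its own features.
        main, aux = avg_pool2(fused), avg_pool2(aux)
    return skips, main, aux
```

With a 4-channel main input (RGB + NIR) and a 1-channel auxiliary input (for example NDVI) of 16×16 pixels and three levels, the skip connections in this sketch have shapes (4, 16, 16), (4, 8, 8), and (4, 4, 4). Fusing at every level, rather than only once at the input, lets edge and texture cues from both branches reach the decoder through each skip connection.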

According to Table 2, the proposed CSCCU achieves recognition accuracies of 89.72% for corn and 88.61% for rice, outperforming the comparison models. However, it shows no advantage for buildings and water, and even lags slightly behind. This is expected, because the auxiliary branch uses NDVI as its spectral feature, and NDVI primarily characterizes vegetation. Because plants absorb red light and strongly reflect near-infrared light, NDVI effectively separates vegetated from non-vegetated areas. The dual-branch design of CSCCU captures this information from the input data, which improves accuracy in vegetation-related categories. Incorporating auxiliary data sensitive to other categories in future work could therefore further improve CSCCU’s accuracy in those categories.
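NDVI is computed per pixel as NDVI = (NIR − Red) / (NIR + Red), so it approaches +1 over dense green vegetation and stays low or negative over water and built surfaces, which is consistent with the category-dependent behavior described above. The sketch below computes NDVI and assembles the two branch inputs. It assumes a 4-band GF-2 multispectral array in the order blue, green, red, NIR; the helper names `ndvi` and `build_branch_inputs` are hypothetical, and the paper’s actual preprocessing (radiometric correction, pansharpening, normalization) is not shown.

```python
import numpy as np


def ndvi(red, nir) -> np.ndarray:
    """Normalized difference vegetation index, (NIR - Red) / (NIR + Red)."""
    red = np.asarray(red, dtype=np.float64)
    nir = np.asarray(nir, dtype=np.float64)
    num = nir - red
    den = nir + red
    # Pixels where both bands are zero (e.g. no-data) get NDVI = 0 instead of NaN.
    return np.divide(num, den, out=np.zeros_like(num), where=den != 0)


def build_branch_inputs(ms: np.ndarray):
    """Split an assumed (4, H, W) blue, green, red, NIR array into branch inputs."""
    blue, green, red, nir = ms
    main = np.stack([red, green, blue, nir])  # main branch: RGB + NIR
    aux = ndvi(red, nir)[np.newaxis]          # auxiliary branch: NDVI as a 1-channel map
    return main, aux
```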

5.3. Limitations of CSCCU

Given the research focus on staple crop classification in complex scenes, this article uses the NIR band and NDVI as auxiliary data. However, many remote sensing indices beyond NDVI could aid staple crop classification and complex scene recognition, and texture features and spatial structure are also important for effective classification. Some researchers also use SAR data [49], which are less affected by illumination, weather, and other factors and offer good temporal continuity, and combine them with time series methods to improve the model’s recognition ability.

Furthermore, the model does not exhibit a significant advantage in classification accuracy for other categories. We believe that this is because only feature data sensitive to vegetation were used as input, while data that are sensitive to other categories were lacking. In the future, introducing feature data tailored to other categories may help enhance classification accuracy for those classes.
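As an illustration of the kind of category-specific feature data mentioned above, the sketch below stacks NDVI with McFeeters’ normalized difference water index, NDWI = (Green − NIR) / (Green + NIR), which is positive over open water, to form a two-channel auxiliary input. This is a hypothetical extension that was not evaluated in this study; it assumes the same blue, green, red, NIR band order, and `normalized_difference` and `auxiliary_stack` are illustrative names.

```python
import numpy as np


def normalized_difference(a, b) -> np.ndarray:
    """Generic (a - b) / (a + b), returning 0 where a + b == 0."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    num, den = a - b, a + b
    return np.divide(num, den, out=np.zeros_like(num), where=den != 0)


def auxiliary_stack(ms: np.ndarray) -> np.ndarray:
    """Build a (2, H, W) auxiliary input from an assumed (4, H, W) blue, green, red, NIR array."""
    blue, green, red, nir = ms
    ndvi = normalized_difference(nir, red)    # vegetation-sensitive
    ndwi = normalized_difference(green, nir)  # water-sensitive (McFeeters NDWI)
    return np.stack([ndvi, ndwi])
```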

Additionally, although the model uses depthwise separable convolutions to reduce the number of parameters, we did not quantitatively analyze its computational efficiency, because efficiency depends strongly on the experimental platform. This study was run on a laptop, which differs from the platforms used in other studies and makes direct comparison difficult. Future work could quantify the model’s computational efficiency across different platforms to assess its potential for deployment on lightweight devices.
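Parameter counts, unlike runtime, do not depend on the hardware, so the saving from depthwise separable convolutions can be quantified without a benchmark platform. For a k×k kernel with C_in input and C_out output channels (bias terms ignored by default), a standard convolution has k²·C_in·C_out weights, whereas a depthwise separable one has k²·C_in (depthwise) plus C_in·C_out (pointwise), a ratio of 1/C_out + 1/k². The layer sizes below are hypothetical, not taken from CSCCU.

```python
def standard_conv_params(k: int, c_in: int, c_out: int, bias: bool = False) -> int:
    """Weights in a standard k x k convolution."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def depthwise_separable_params(k: int, c_in: int, c_out: int, bias: bool = False) -> int:
    """Weights in a depthwise k x k convolution followed by a pointwise 1 x 1 convolution."""
    depthwise = k * k * c_in + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise


# Hypothetical layer: 3x3 kernel, 64 -> 128 channels.
std = standard_conv_params(3, 64, 128)
sep = depthwise_separable_params(3, 64, 128)
ratio = sep / std  # equals 1/128 + 1/9 when bias terms are ignored
```

For this 3×3 layer mapping 64 to 128 channels, the count drops from 73,728 to 8,768 weights, roughly an 8.4× reduction.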

Finally, the study area of this paper is located in Guizhou Province, China. Although this region meets the requirements of a complex scene, from a broader perspective it consists predominantly of plateaus and mountains and has a subtropical monsoon climate. Future research could establish study areas and sampling points on a global scale to explore the model’s adaptability to diverse geographic and climatic conditions.

In the future, as the model’s accuracy and its applicability to diverse data continue to improve, it is expected to possess practical value in agricultural information extraction, thereby supporting agricultural monitoring efforts and informing policy decisions related to agriculture.

6. Conclusions

In this study, we propose a dual-branch network, Complex Scene Crop Classification U-Net (CSCCU), for fine classification of staple crops in complex scenes. CSCCU integrates an attention mechanism into U-Net. Its dual-branch structure captures the intrinsic image features and the spectral characteristics of each branch’s input, and a hierarchical feature-level fusion method connects the two branches layer by layer, improving the overall performance of the model. The DFF module uses the attention mechanism to regulate deep feature extraction.
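The attention step in the DFF module can be illustrated with squeeze-and-excitation (SE) style channel attention (Hu et al.), swapped in here as a stand-in because the exact DFF design is not restated in this section. In the sketch, the bottleneck features of both branches are concatenated, globally average-pooled to one value per channel, passed through a two-layer bottleneck (ReLU, then sigmoid), and the resulting per-channel weights in (0, 1) rescale the fused map. The function names, the reduction ratio, and the random weights are assumptions for illustration.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def se_channel_attention(x: np.ndarray, w1: np.ndarray, w2: np.ndarray):
    """SE-style attention on x of shape (C, H, W); w1 is (C // r, C), w2 is (C, C // r)."""
    squeeze = x.mean(axis=(1, 2))                          # global average pooling -> (C,)
    excite = sigmoid(w2 @ np.maximum(w1 @ squeeze, 0.0))   # per-channel weights in (0, 1)
    return x * excite[:, None, None], excite


def dff(main_deep: np.ndarray, aux_deep: np.ndarray, w1: np.ndarray, w2: np.ndarray):
    """Hypothetical deep fusion: concatenate both bottlenecks, then reweight channels."""
    fused = np.concatenate([main_deep, aux_deep], axis=0)
    return se_channel_attention(fused, w1, w2)
```

The attention weights let the network emphasize whichever channels (from the image branch or the spectral branch) are most informative for a given patch, instead of weighting both branches equally.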

The classification results from Xiuwen County, Guizhou Province, China, demonstrate that CSCCU achieves the highest overall accuracy (OA) and mean intersection over union (mIoU) values, surpassing U-Net, SegNet, and DeepLabV3+. The integration of spectral features with deep learning (DL) models significantly enhances the accuracy of staple crop mapping in complex scenes. This model serves as a valuable technical reference for the precise mapping of staple crops in challenging environments and contributes to enriching the existing land cover and land use classification schemes.
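For reference, OA and mIoU are both derived from the pixel-level confusion matrix: OA is the trace divided by the total number of pixels, and the IoU of class c is TP_c / (TP_c + FP_c + FN_c), averaged over classes to give mIoU. The NumPy sketch below follows these standard definitions, with rows as reference labels and columns as predictions. The helper names are hypothetical, and classes absent from both reference and prediction are skipped in the mean, which is one common convention but not necessarily the one used in this study.

```python
import numpy as np


def confusion_matrix(y_true: np.ndarray, y_pred: np.ndarray, n_classes: int) -> np.ndarray:
    """Rows are reference classes, columns are predicted classes."""
    idx = n_classes * y_true.ravel() + y_pred.ravel()
    return np.bincount(idx, minlength=n_classes ** 2).reshape(n_classes, n_classes)


def overall_accuracy(cm: np.ndarray) -> float:
    """Correctly classified pixels divided by all pixels."""
    return np.trace(cm) / cm.sum()


def mean_iou(cm: np.ndarray):
    """Per-class IoU = TP / (TP + FP + FN) and its mean over classes present."""
    tp = np.diag(cm).astype(np.float64)
    fp = cm.sum(axis=0) - tp   # predicted as the class but belonging to another
    fn = cm.sum(axis=1) - tp   # belonging to the class but predicted as another
    denom = tp + fp + fn
    iou = np.divide(tp, denom, out=np.full_like(tp, np.nan), where=denom > 0)
    return np.nanmean(iou), iou
```

With `y_true = [0, 0, 1, 1, 2, 2]` and `y_pred = [0, 1, 1, 1, 2, 0]`, OA is 4/6 ≈ 0.667 and the per-class IoUs are 1/3, 2/3, and 1/2, so mIoU is 0.5. For the seven-category dataset, `n_classes` would be 7.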

Author Contributions

J.Z. conceptualized the methodology, performed the analyses, and wrote the manuscript. L.Z. and H.Y. supervised the research, improved the methodology and analyses, and edited the final manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program of China (Grant No. 2021YFB3900505).

Data Availability Statement

The remote sensing datasets containing GF-2 images were downloaded from https://data.cresda.cn (accessed on 30 September 2023).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Monfreda, C.; Ramankutty, N.; Foley, J.A. Farming the Planet: 2. Geographic Distribution of Crop Areas, Yields, Physiological Types, and Net Primary Production in the Year 2000. Glob. Biogeochem. Cycles 2008, 22, 2007GB002947.

2. Lyu, X.; Wang, Y.; Niu, S.; Peng, W. Spatio-Temporal Pattern and Influence Mechanism of Cultivated Land System Resilience: Case from China. Land 2021, 11, 11.

3. Vicente-Guijalba, F.; Martinez-Marin, T.; Lopez-Sanchez, J.M. Dynamical Approach for Real-Time Monitoring of Agricultural Crops. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3278–3293.

4. Kuenzer, C.; Knauer, K. Remote Sensing of Rice Crop Areas. Int. J. Remote Sens. 2013, 34, 2101–2139.

5. Karthikeyan, L.; Chawla, I.; Mishra, A.K. A Review of Remote Sensing Applications in Agriculture for Food Security: Crop Growth and Yield, Irrigation, and Crop Losses. J. Hydrol. 2020, 586, 124905.

6. Macdonald, R.B. A Summary of the History of the Development of Automated Remote Sensing for Agricultural Applications. IEEE Trans. Geosci. Remote Sens. 1984, GE-22, 473–482.

7. Zhang, B.; Chen, Z.; Peng, D.; Benediktsson, J.A.; Liu, B.; Zou, L.; Li, J.; Plaza, A. Remotely Sensed Big Data: Evolution in Model Development for Information Extraction [Point of View]. Proc. IEEE 2019, 107, 2294–2301.

8. Wulder, M.A.; Loveland, T.R.; Roy, D.P.; Crawford, C.J.; Masek, J.G.; Woodcock, C.E.; Allen, R.G.; Anderson, M.C.; Belward, A.S.; Cohen, W.B.; et al. Current Status of Landsat Program, Science, and Applications. Remote Sens. Environ. 2019, 225, 127–147.

9. Dial, G.; Bowen, H.; Gerlach, F.; Grodecki, J.; Oleszczuk, R. IKONOS Satellite, Imagery, and Products. Remote Sens. Environ. 2003, 88, 23–36.

10. Belward, A.S.; Skøien, J.O. Who Launched What, When and Why; Trends in Global Land-Cover Observation Capacity from Civilian Earth Observation Satellites. ISPRS J. Photogramm. Remote Sens. 2015, 103, 115–128.

11. Aguilar, M.A.; Saldaña, M.D.M.; Aguilar, F.J. Assessing Geometric Accuracy of the Orthorectification Process from GeoEye-1 and WorldView-2 Panchromatic Images. Int. J. Appl. Earth Obs. Geoinf. 2013, 21, 427–435.

12. Li, D.; Wang, M.; Jiang, J. China’s High-Resolution Optical Remote Sensing Satellites and Their Mapping Applications. Geo-Spat. Inf. Sci. 2021, 24, 85–94.

13. Biradar, C.M.; Thenkabail, P.S.; Noojipady, P.; Li, Y.; Dheeravath, V.; Turral, H.; Velpuri, M.; Gumma, M.K.; Gangalakunta, O.R.P.; Cai, X.L.; et al. A Global Map of Rainfed Cropland Areas (GMRCA) at the End of Last Millennium Using Remote Sensing. Int. J. Appl. Earth Obs. Geoinf. 2009, 11, 114–129.

14. Salmon, J.M.; Friedl, M.A.; Frolking, S.; Wisser, D.; Douglas, E.M. Global Rain-Fed, Irrigated, and Paddy Croplands: A New High Resolution Map Derived from Remote Sensing, Crop Inventories and Climate Data. Int. J. Appl. Earth Obs. Geoinf. 2015, 38, 321–334.

15. Waldner, F.; Canto, G.S.; Defourny, P. Automated Annual Cropland Mapping Using Knowledge-Based Temporal Features. ISPRS J. Photogramm. Remote Sens. 2015, 110, 1–13.

16. Thenkabail, P.S.; Wu, Z. An Automated Cropland Classification Algorithm (ACCA) for Tajikistan by Combining Landsat, MODIS, and Secondary Data. Remote Sens. 2012, 4, 2890–2918.

17. Liang, D.; Zuo, Y.; Huang, L.; Zhao, J.; Teng, L.; Yang, F. Evaluation of the Consistency of MODIS Land Cover Product (MCD12Q1) Based on Chinese 30 m GlobeLand30 Datasets: A Case Study in Anhui Province, China. ISPRS Int. J. Geo-Inf. 2015, 4, 2519–2541.

18. Yu, L.; Wang, J.; Clinton, N.; Xin, Q.; Zhong, L.; Chen, Y.; Gong, P. FROM-GC: 30 m Global Cropland Extent Derived through Multisource Data Integration. Int. J. Digit. Earth 2013, 6, 521–533.

19. Chen, J.; Chen, J.; Liao, A.; Cao, X.; Chen, L.; Chen, X.; He, C.; Han, G.; Peng, S.; Lu, M.; et al. Global Land Cover Mapping at 30m Resolution: A POK-Based Operational Approach. ISPRS J. Photogramm. Remote Sens. 2015, 103, 7–27.

20. Teluguntla, P.; Thenkabail, P.S.; Oliphant, A.; Xiong, J.; Gumma, M.K.; Congalton, R.G.; Yadav, K.; Huete, A. A 30-m Landsat-Derived Cropland Extent Product of Australia and China Using Random Forest Machine Learning Algorithm on Google Earth Engine Cloud Computing Platform. ISPRS J. Photogramm. Remote Sens. 2018, 144, 325–340.

21. Gong, P.; Wang, J.; Yu, L.; Zhao, Y.; Zhao, Y.; Liang, L.; Niu, Z.; Huang, X.; Fu, H.; Liu, S.; et al. Finer Resolution Observation and Monitoring of Global Land Cover: First Mapping Results with Landsat TM and ETM+ Data. Int. J. Remote Sens. 2013, 34, 2607–2654.

22. Bartholomé, E.; Belward, A.S. GLC2000: A New Approach to Global Land Cover Mapping from Earth Observation Data. Int. J. Remote Sens. 2005, 26, 1959–1977.

23. Arino, O.; Gross, D.; Ranera, F.; Leroy, M.; Bicheron, P.; Brockman, C.; Defourny, P.; Vancutsem, C.; Achard, F.; Durieux, L.; et al. GlobCover: ESA Service for Global Land Cover from MERIS. In Proceedings of the 2007 IEEE International Geoscience and Remote Sensing Symposium, Barcelona, Spain, 23–27 July 2007; IEEE: Barcelona, Spain, 2007; pp. 2412–2415.

24. Friedl, M.A.; Sulla-Menashe, D.; Tan, B.; Schneider, A.; Ramankutty, N.; Sibley, A.; Huang, X. MODIS Collection 5 Global Land Cover: Algorithm Refinements and Characterization of New Datasets. Remote Sens. Environ. 2010, 114, 168–182.

25. Boryan, C.; Yang, Z.; Mueller, R.; Craig, M. Monitoring US Agriculture: The US Department of Agriculture, National Agricultural Statistics Service, Cropland Data Layer Program. Geocarto Int. 2011, 26, 341–358.

26. Alkanan, M.; Gulzar, Y. Enhanced Corn Seed Disease Classification: Leveraging MobileNetV2 with Feature Augmentation and Transfer Learning. Front. Appl. Math. Stat. 2024, 9, 1320177.

27. Seelwal, P.; Dhiman, P.; Gulzar, Y.; Kaur, A.; Wadhwa, S.; Onn, C.W. A Systematic Review of Deep Learning Applications for Rice Disease Diagnosis: Current Trends and Future Directions. Front. Comput. Sci. 2024, 6, 1452961.

28. Amri, E.; Gulzar, Y.; Yeafi, A.; Jendoubi, S.; Dhawi, F.; Mir, M.S. Advancing Automatic Plant Classification System in Saudi Arabia: Introducing a Novel Dataset and Ensemble Deep Learning Approach. Model. Earth Syst. Environ. 2024, 10, 2693–2709.

29. Gulzar, Y. Enhancing Soybean Classification with Modified Inception Model: A Transfer Learning Approach. Emir. J. Food Agric. 2024, 36, 1–9.

30. Ji, S.; Zhang, C.; Xu, A.; Shi, Y.; Duan, Y. 3D Convolutional Neural Networks for Crop Classification with Multi-Temporal Remote Sensing Images. Remote Sens. 2018, 10, 75.

31. Wang, L.; Wang, J.; Liu, Z.; Zhu, J.; Qin, F. Evaluation of a Deep-Learning Model for Multispectral Remote Sensing of Land Use and Crop Classification. Crop J. 2022, 10, 1435–1451.

32. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

33. Lu, W.; Hu, Y.; Peng, F.; Feng, Z.; Yang, Y. A Geoscience-Aware Network (GASlumNet) Combining UNet and ConvNeXt for Slum Mapping. Remote Sens. 2024, 16, 260.

34. Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. arXiv 2017, arXiv:1610.02357.

35. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. arXiv 2017, arXiv:1709.01507.

36. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521.

37. Chen, Y.; Kalantidis, Y.; Li, J.; Yan, S.; Feng, J. A2-Nets: Double Attention Networks. arXiv 2018, arXiv:1810.11579.

38. Guo, M.-H.; Lu, C.-Z.; Hou, Q.; Liu, Z.; Cheng, M.-M.; Hu, S.-M. SegNeXt: Rethinking Convolutional Attention Design for Semantic Segmentation. arXiv 2022, arXiv:2209.08575.

39. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

40. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv 2015, arXiv:1505.04597.

41. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. arXiv 2016, arXiv:1511.00561.

42. Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. arXiv 2018, arXiv:1802.02611.

43. Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep Learning Classification of Land Cover and Crop Types Using Remote Sensing Data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782.

44. Liu, W.; Cui, N.; Guo, L.; Du, S.; Wang, W. DESformer: A Dual-Branch Encoding Strategy for Semantic Segmentation of Very-High-Resolution Remote Sensing Images Based on Feature Interaction and Multiscale Context Fusion. IEEE Trans. Geosci. Remote Sens. 2024, 62, 3000820.

45. Wang, H.; Yao, Y.; Ye, Z.; Chang, W.; Liu, J.; Zhao, Y.; Li, S.; Liu, Z.; Zhang, X. Solution for Crop Classification in Regions with Limited Labeled Samples: Deep Learning and Transfer Learning. GISci. Remote Sens. 2024, 61, 2387393.

46. Li, X.; Zhai, M.; Zheng, L.; Zhou, L.; Xie, X.; Zhao, W.; Zhang, W. Efficient Residual Network Using Hyperspectral Images for Corn Variety Identification. Front. Plant Sci. 2024, 15, 1376915.

47. Alotaibi, Y.; Rajendran, B.; Rani, K.G.; Rajendran, S. Dipper Throated Optimization with Deep Convolutional Neural Network-Based Crop Classification for Remote Sensing Image Analysis. PeerJ Comput. Sci. 2024, 10, e1828.

48. Lu, T.; Wan, L.; Wang, L. Fine Crop Classification in High Resolution Remote Sensing Based on Deep Learning. Front. Environ. Sci. 2022, 10, 991173.

49. Tian, X.; Chen, Z.; Li, Y.; Bai, Y. Crop Classification in Mountainous Areas Using Object-Oriented Methods and Multi-Source Data: A Case Study of Xishui County, China. Agronomy 2023, 13, 3037.

50. Ren, T.; Xu, H.; Cai, X.; Yu, S.; Qi, J. Smallholder Crop Type Mapping and Rotation Monitoring in Mountainous Areas with Sentinel-1/2 Imagery. Remote Sens. 2022, 14, 566.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).