Real-Time Compensation for Unknown Image Displacement and Rotation in Infrared Multispectral Camera Push-Broom Imaging

Abstract

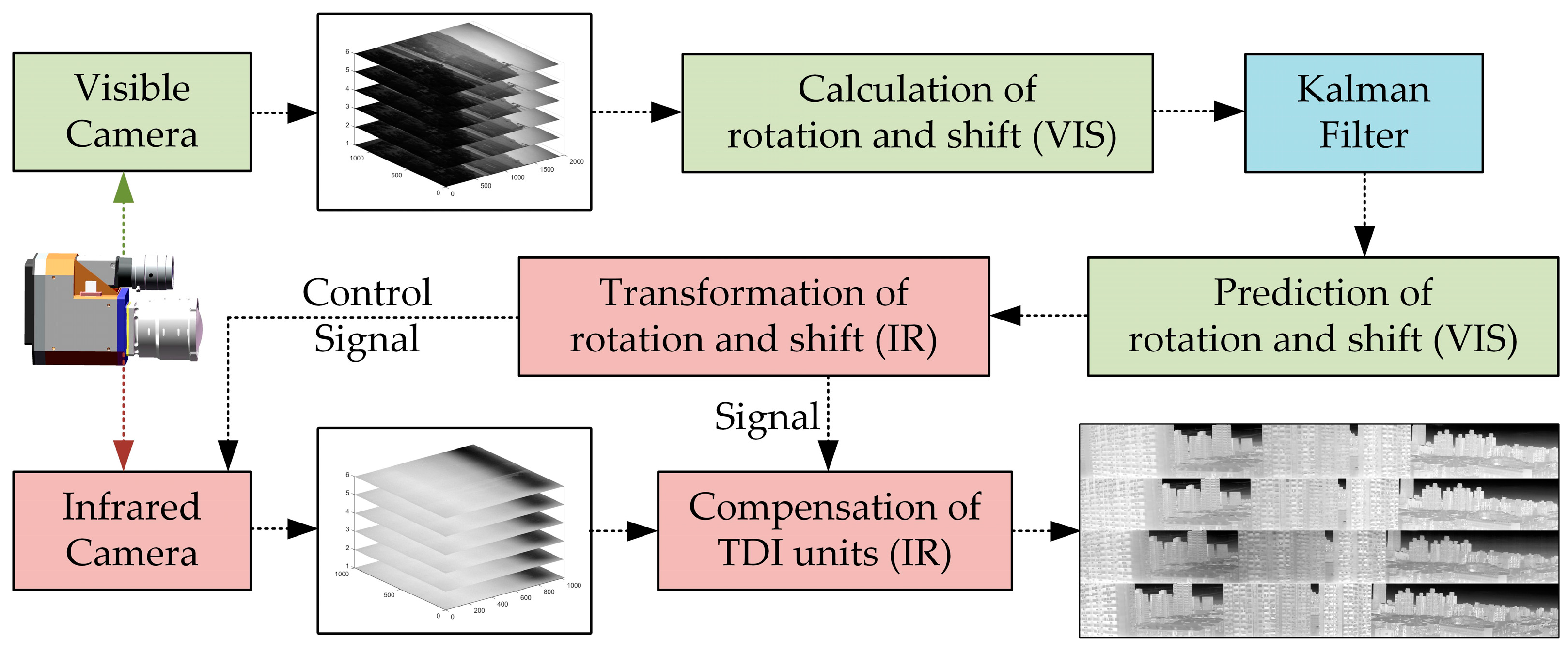

1. Introduction

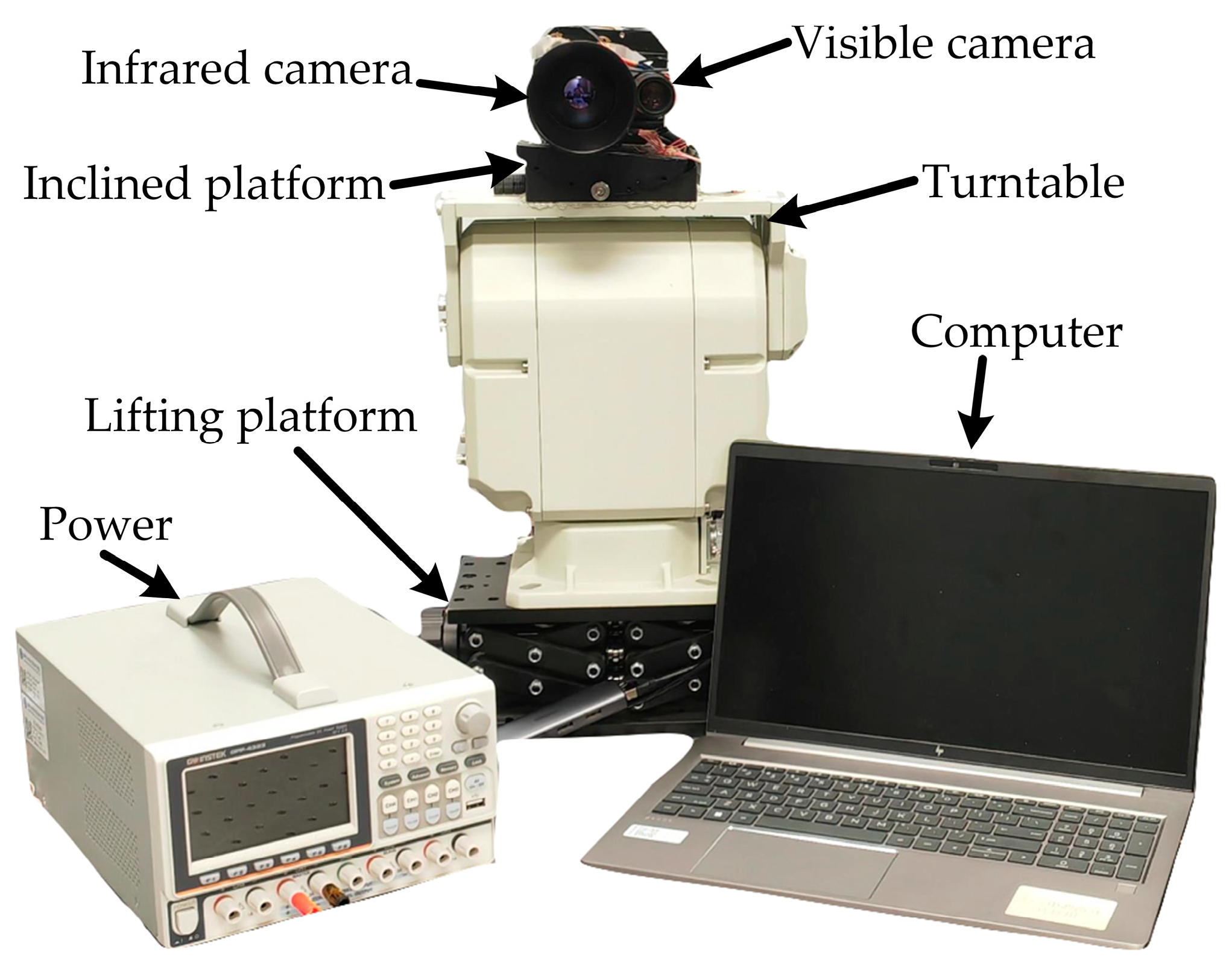

2. Materials and Methods

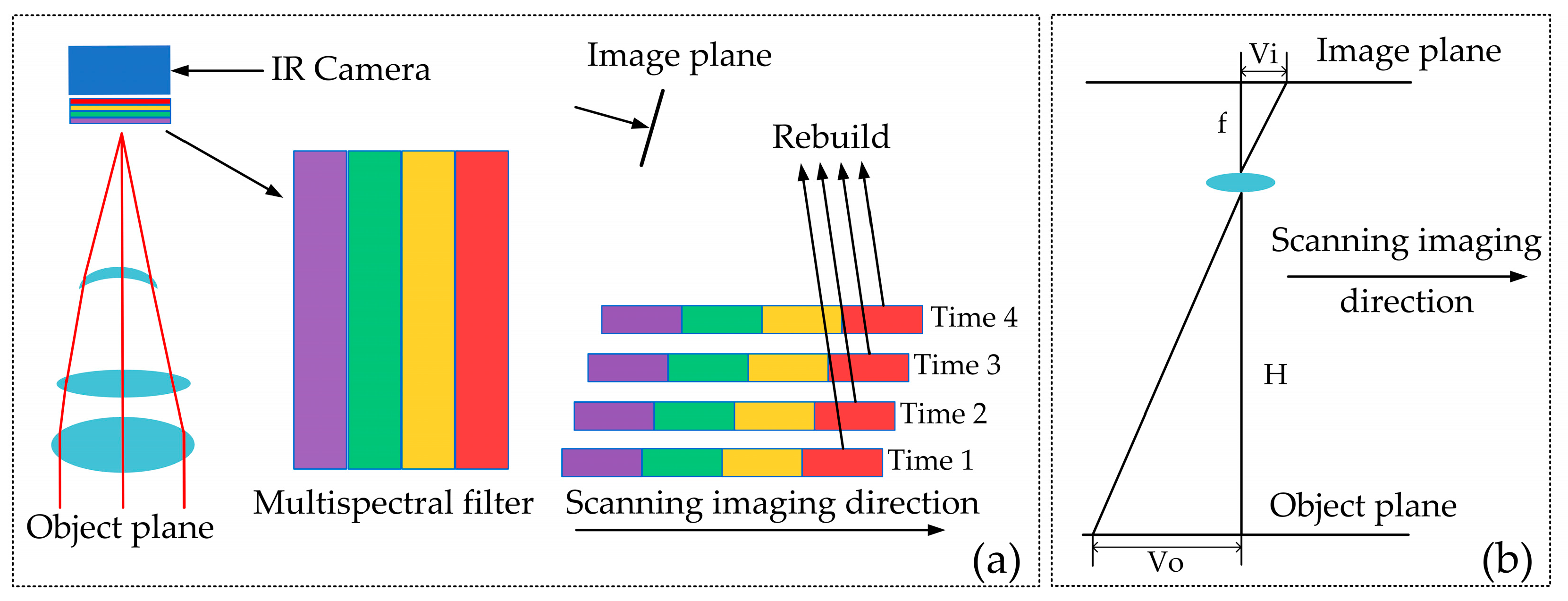

2.1. Velocity-Height Ratio Matching Model

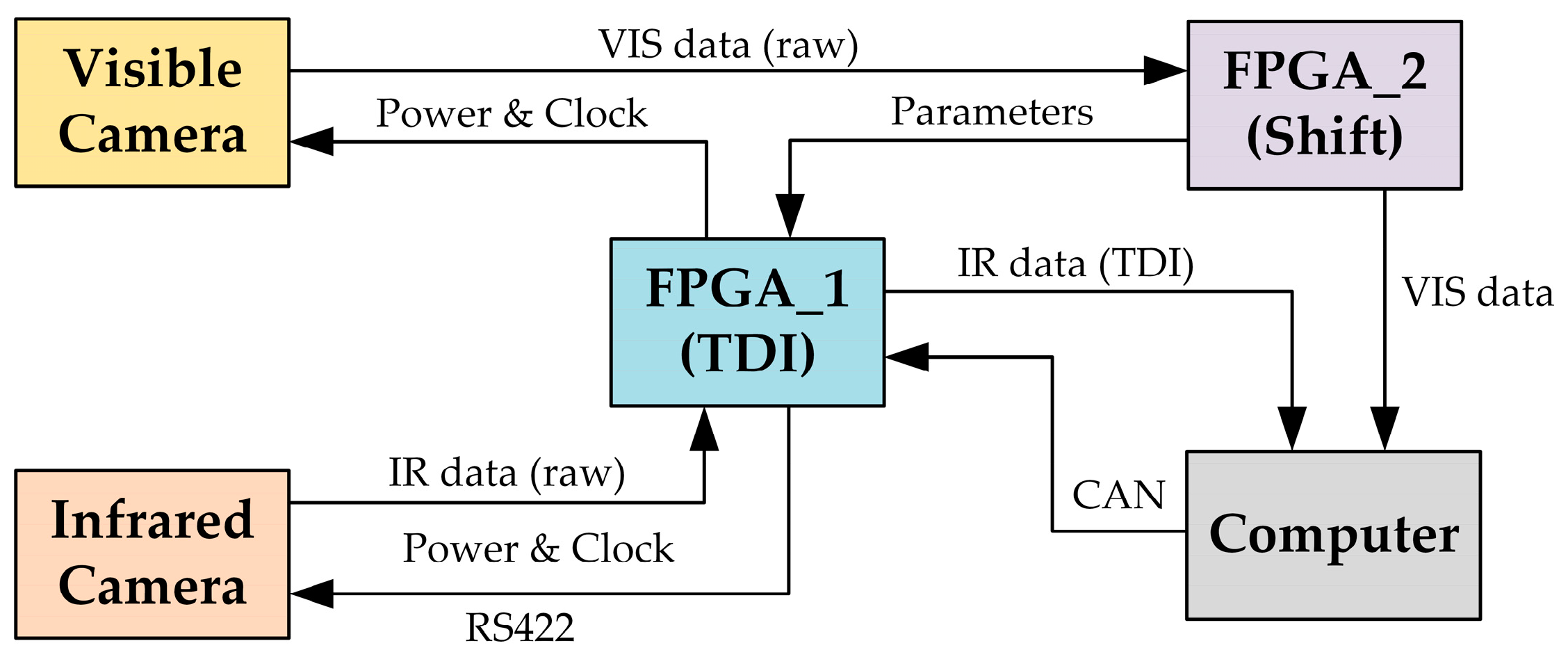

2.2. Off-Chip Digital Domain TDI

2.3. Registration Relationship Between the IR and Visible Cameras

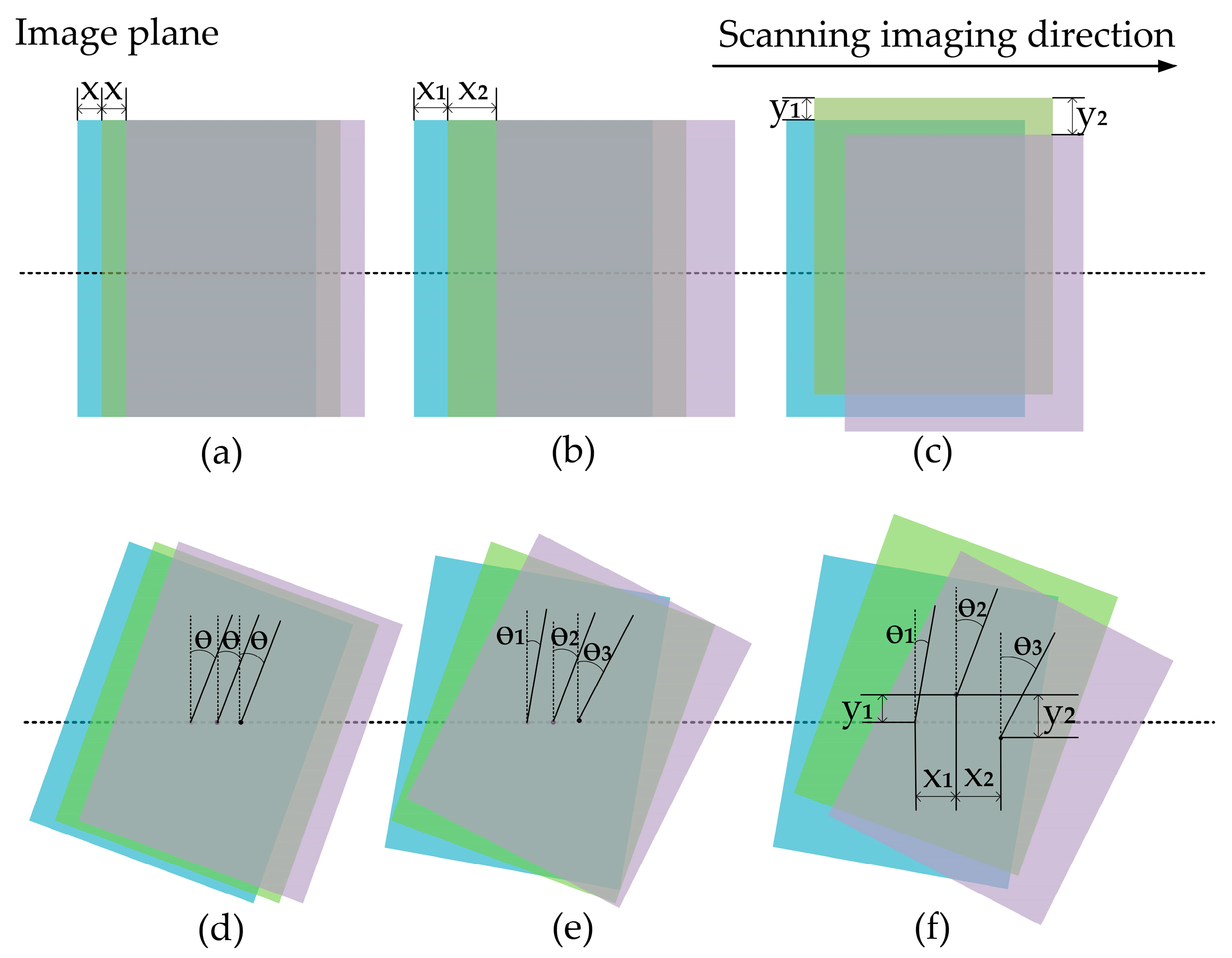

2.4. Image Rotation and Shift Calculation

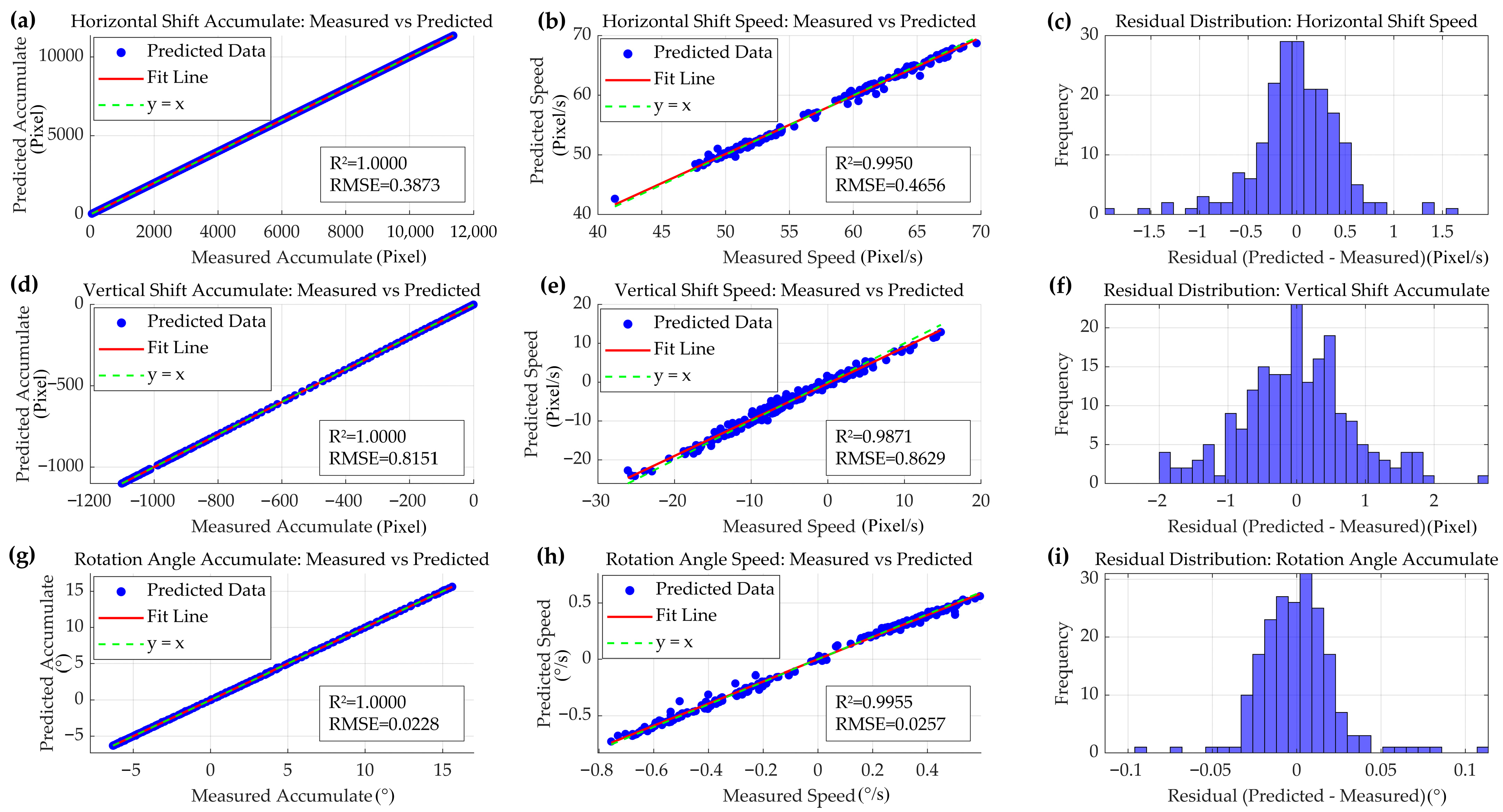

2.5. Prediction and Affine Transformation

2.6. Compensation Model

3. Results

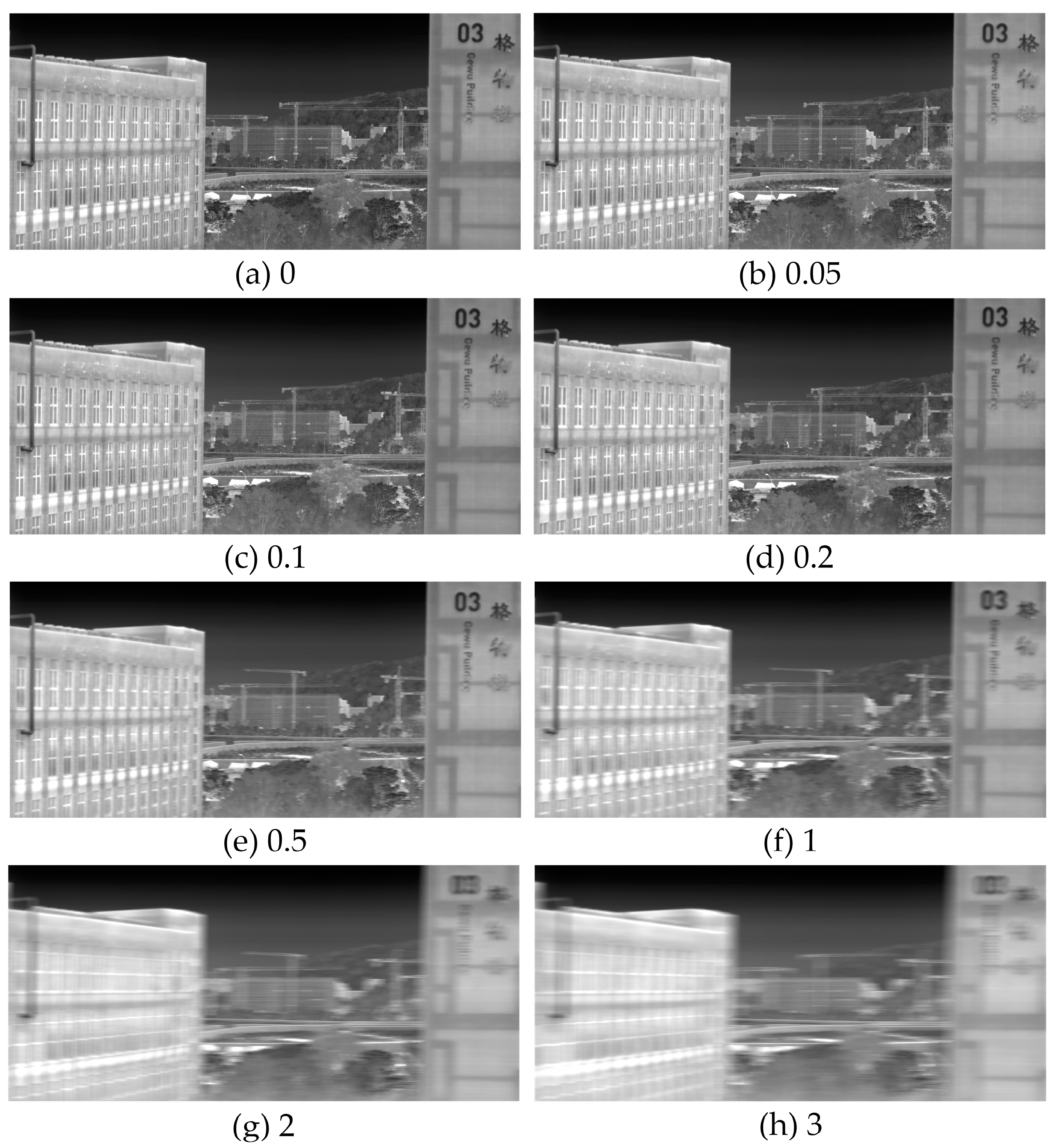

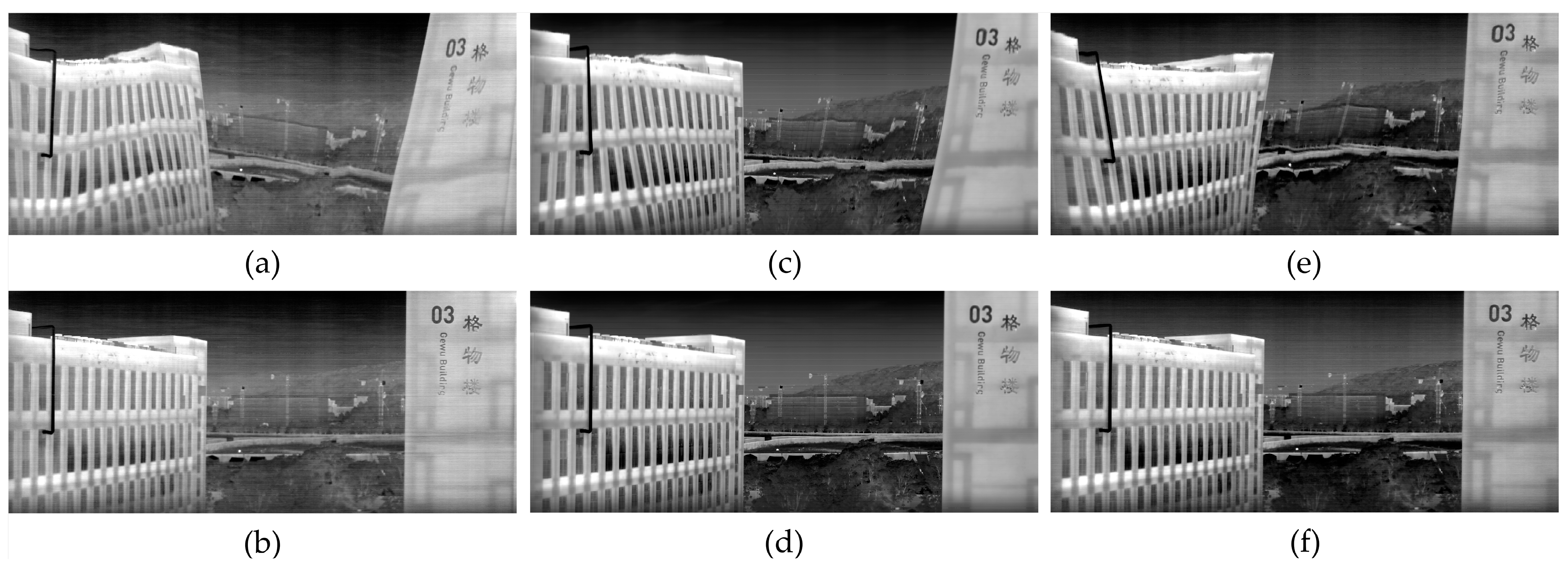

3.1. Image Shift and Rotation Effects

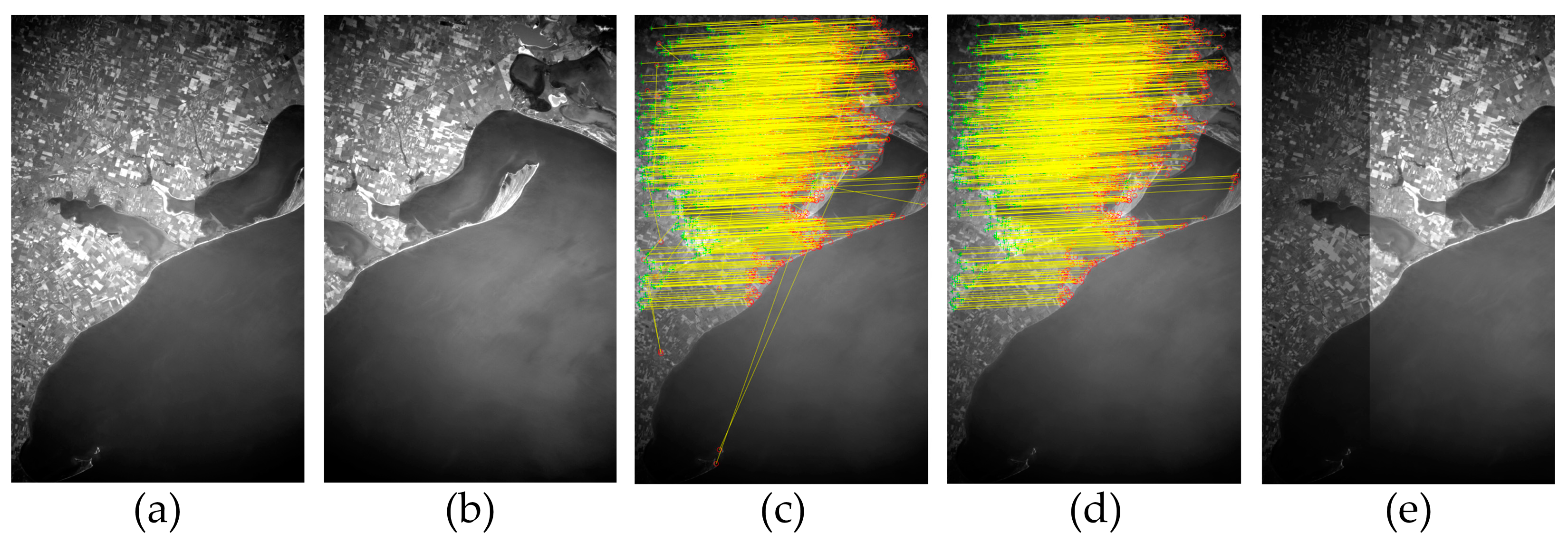

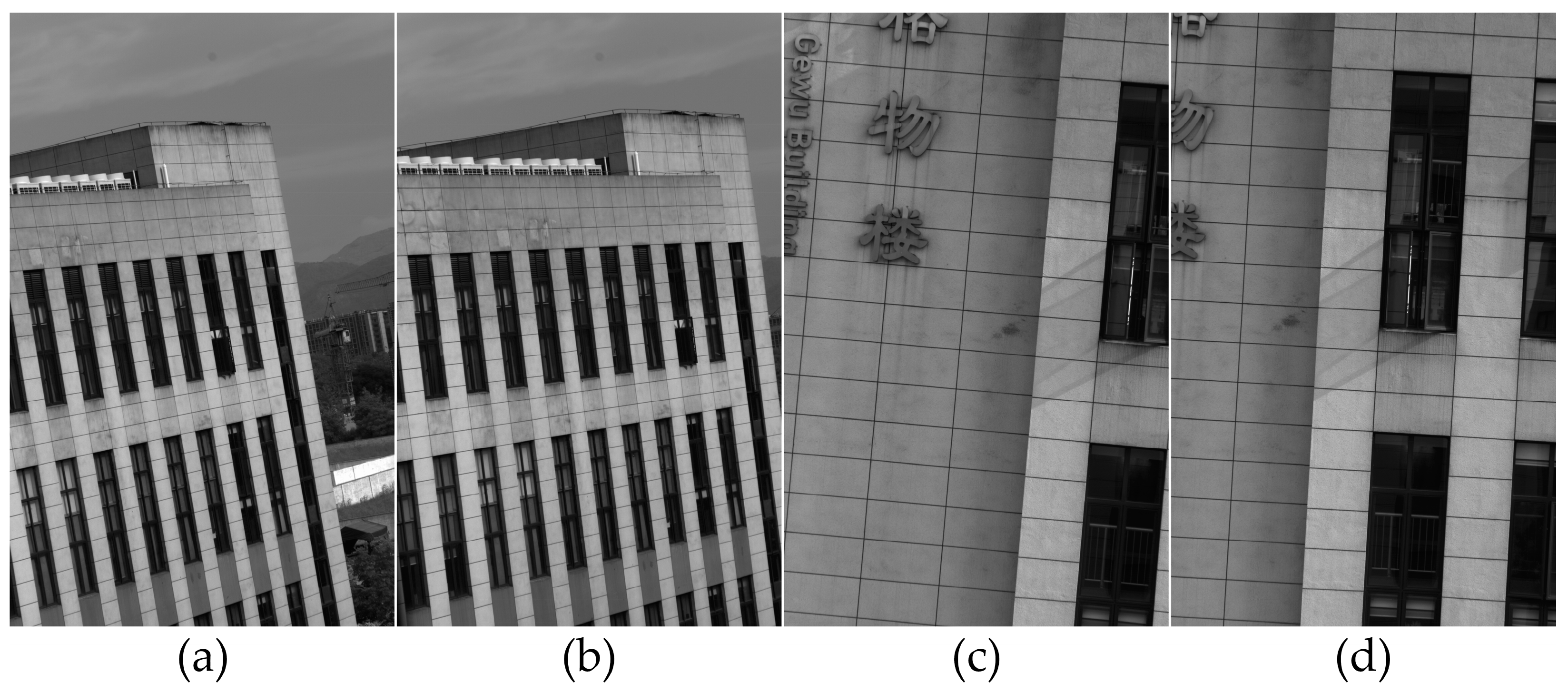

3.2. Real-Time Compensation Effects

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Evaluation Metric | Proposed Method | Traditional Method | Optimization Ratio |
|---|---|---|---|
| BRISQUE | 40.4325 | 51.3926 | 21.37% |
| NIQE | 2.8087 | 3.3550 | 16.28% |
| PIQE | 64.8023 | 75.1163 | 13.73% |
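
The optimization ratios in the table are consistent with a relative reduction of each no-reference score with respect to the traditional method (lower BRISQUE, NIQE, and PIQE scores indicate better perceived quality). The following is a minimal sketch under that assumption; the formula and the function name `optimization_ratio` are not stated in the text and are introduced here for illustration only.

```python
# Assumed definition (not stated in the source): relative reduction of a
# lower-is-better score, expressed as a percentage of the traditional score.
def optimization_ratio(proposed: float, traditional: float) -> float:
    return (traditional - proposed) / traditional * 100.0


# Scores copied from the table: (proposed method, traditional method).
scores = {
    "BRISQUE": (40.4325, 51.3926),
    "NIQE": (2.8087, 3.3550),
    "PIQE": (64.8023, 75.1163),
}

# Round to two decimals to match the precision used in the table.
ratios = {
    name: round(optimization_ratio(proposed, traditional), 2)
    for name, (proposed, traditional) in scores.items()
}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}%")
```

Under this assumed definition the NIQE and PIQE rows are reproduced to two decimals (16.28% and 13.73%), while BRISQUE evaluates to about 21.33%, slightly below the tabulated 21.37%; the published value may have been derived from unrounded scores.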

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, T.; Tang, G.; Zhu, S.; Ding, F.; Wu, W.; Bai, J.; Li, C.; Wang, J. Real-Time Compensation for Unknown Image Displacement and Rotation in Infrared Multispectral Camera Push-Broom Imaging. Remote Sens. 2025, 17, 1113. https://doi.org/10.3390/rs17071113