BathyFormer: A Transformer-Based Deep Learning Method to Map Nearshore Bathymetry with High-Resolution Multispectral Satellite Imagery

Abstract

1. Introduction

- (1) The first paper to use a vision transformer-based architecture to derive bathymetry from satellite data.

- (2) The bathymetry output is a dense pixel-wise regression layer with 3 m spatial resolution covering the nearshore of Chesapeake Bay.

Related Works: Satellite-Derived Bathymetry

2. Materials and Methods

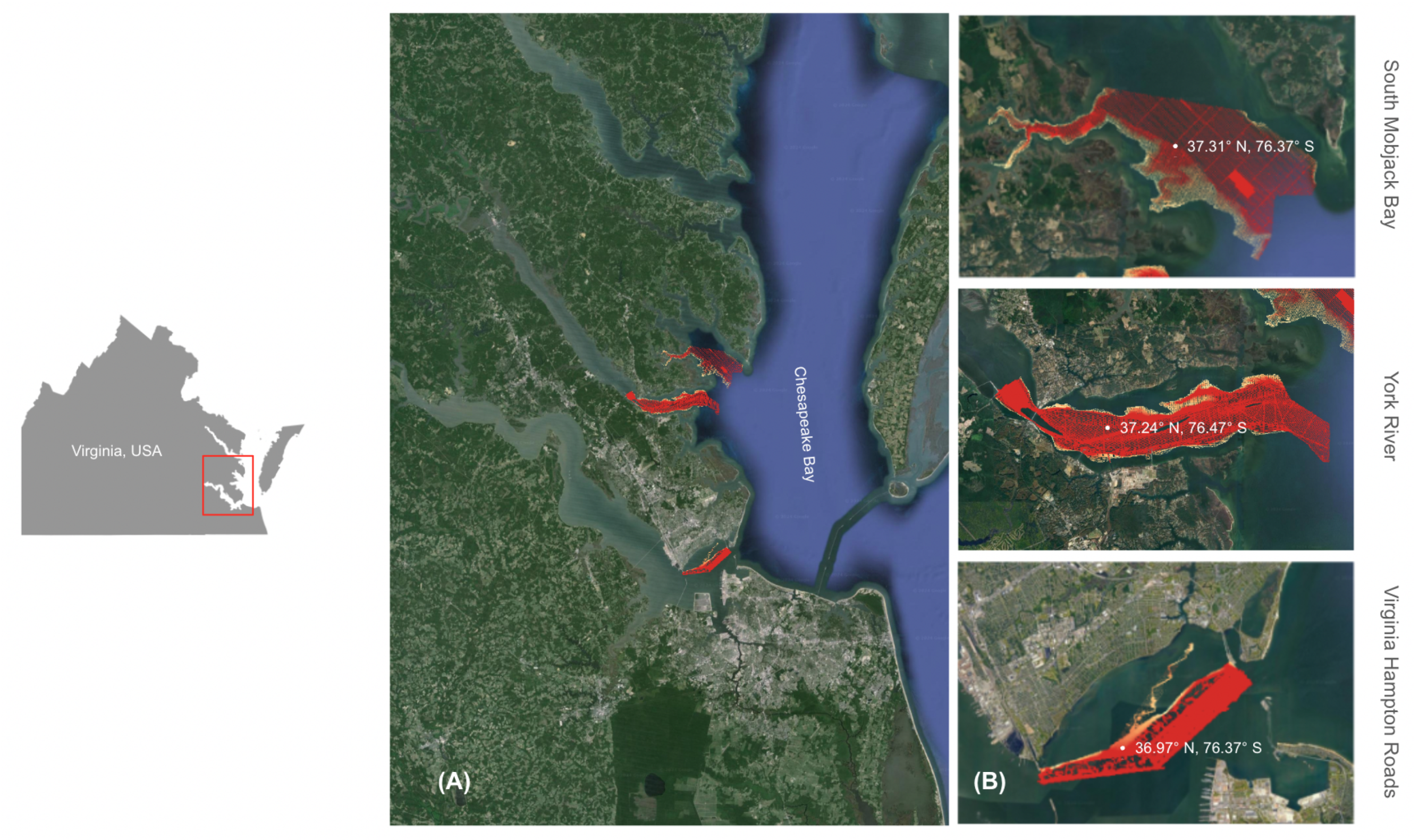

2.1. Study Area

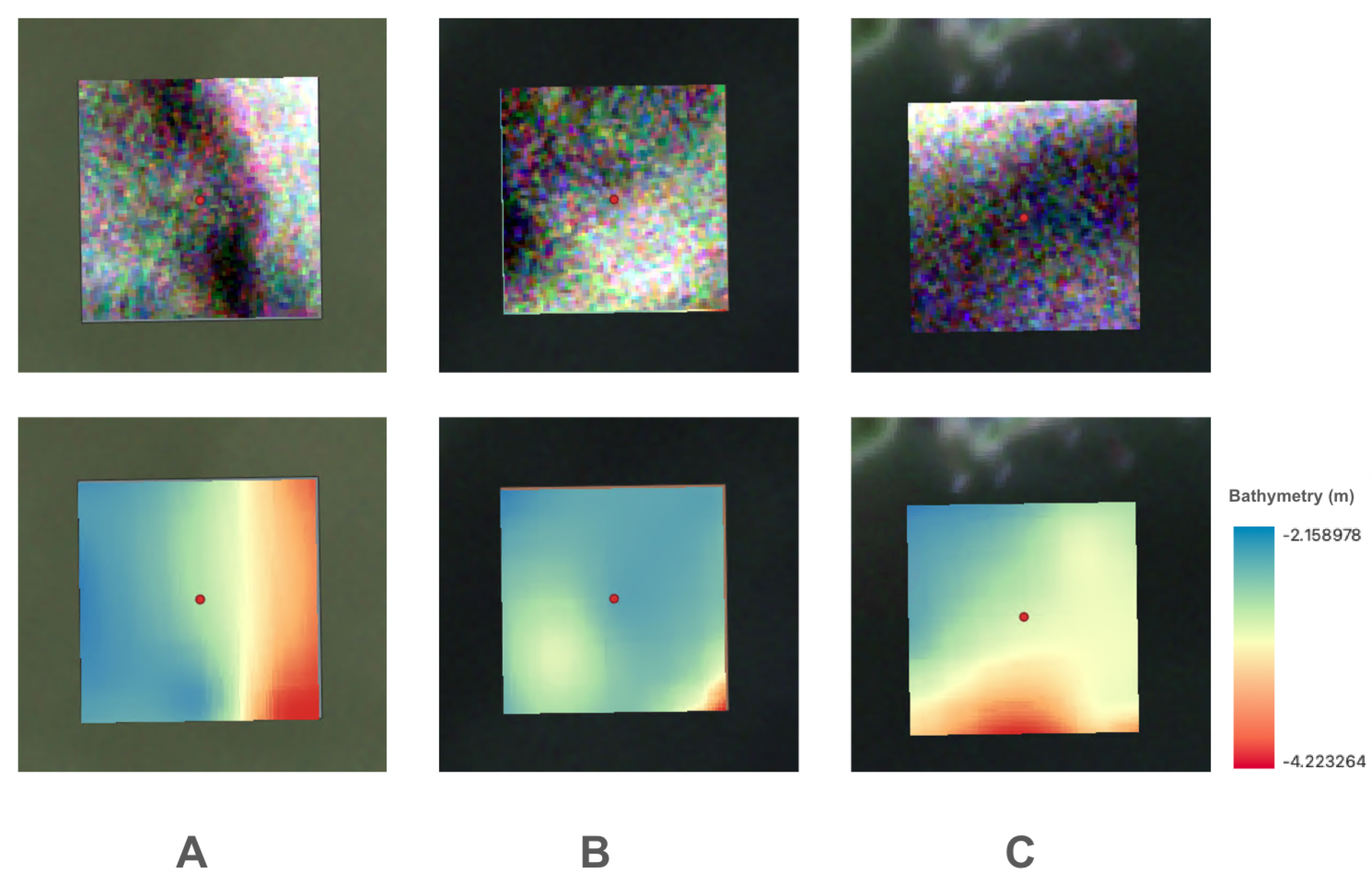

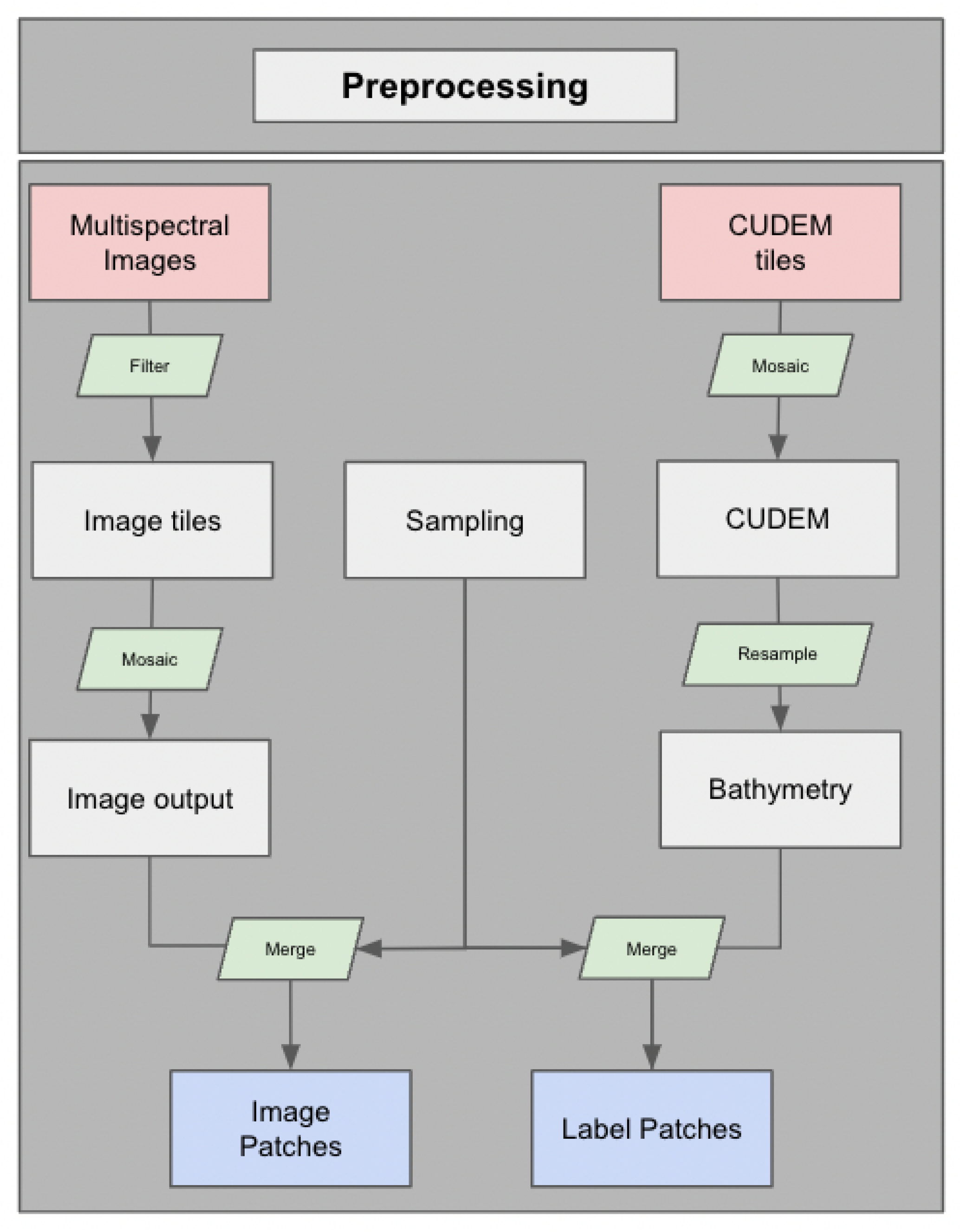

2.2. Data and Preprocessing

2.2.1. High-Resolution Satellite Imagery

2.2.2. CUDEM—Bathymetry

2.2.3. Hydrographic Survey Data

2.2.4. Sampling Strategy

2.3. Methodology

2.3.1. Random Forest

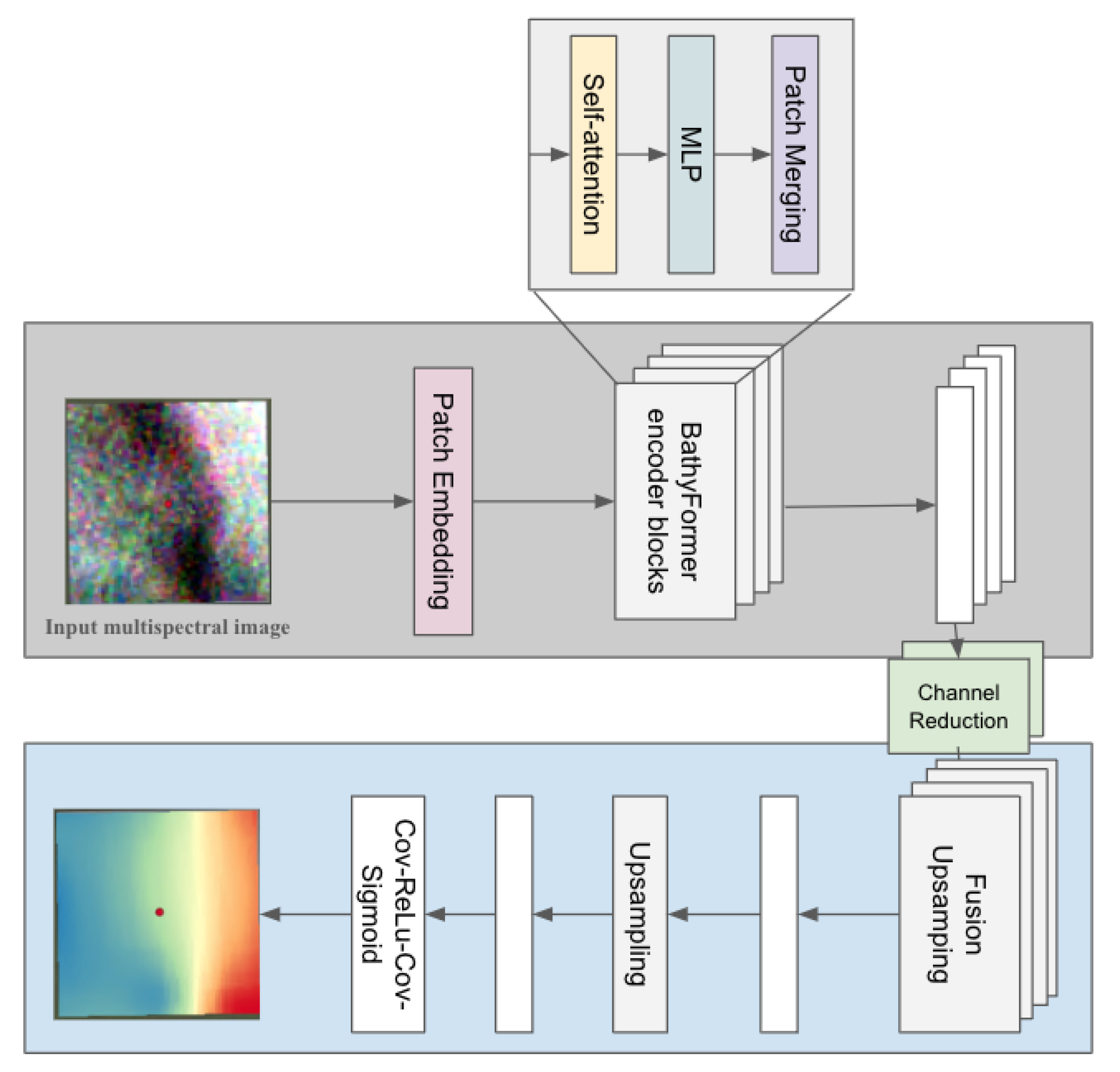

2.3.2. BathyFormer

2.3.3. Loss Function, Optimization, and Evaluation

3. Results

3.1. Comparative Analysis of RF and BathyFormer

3.2. Comparison of Prediction with Hydrographic Survey

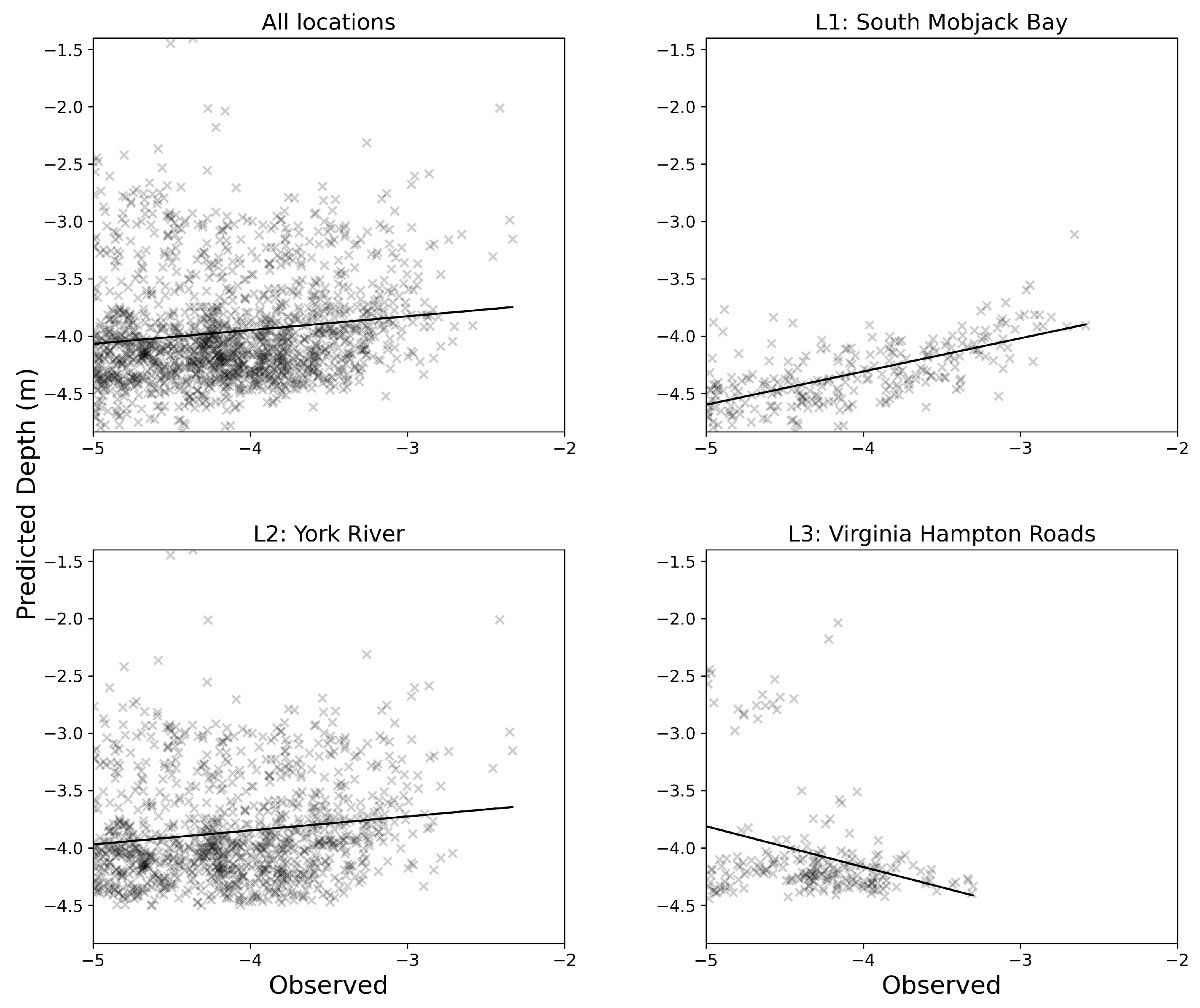

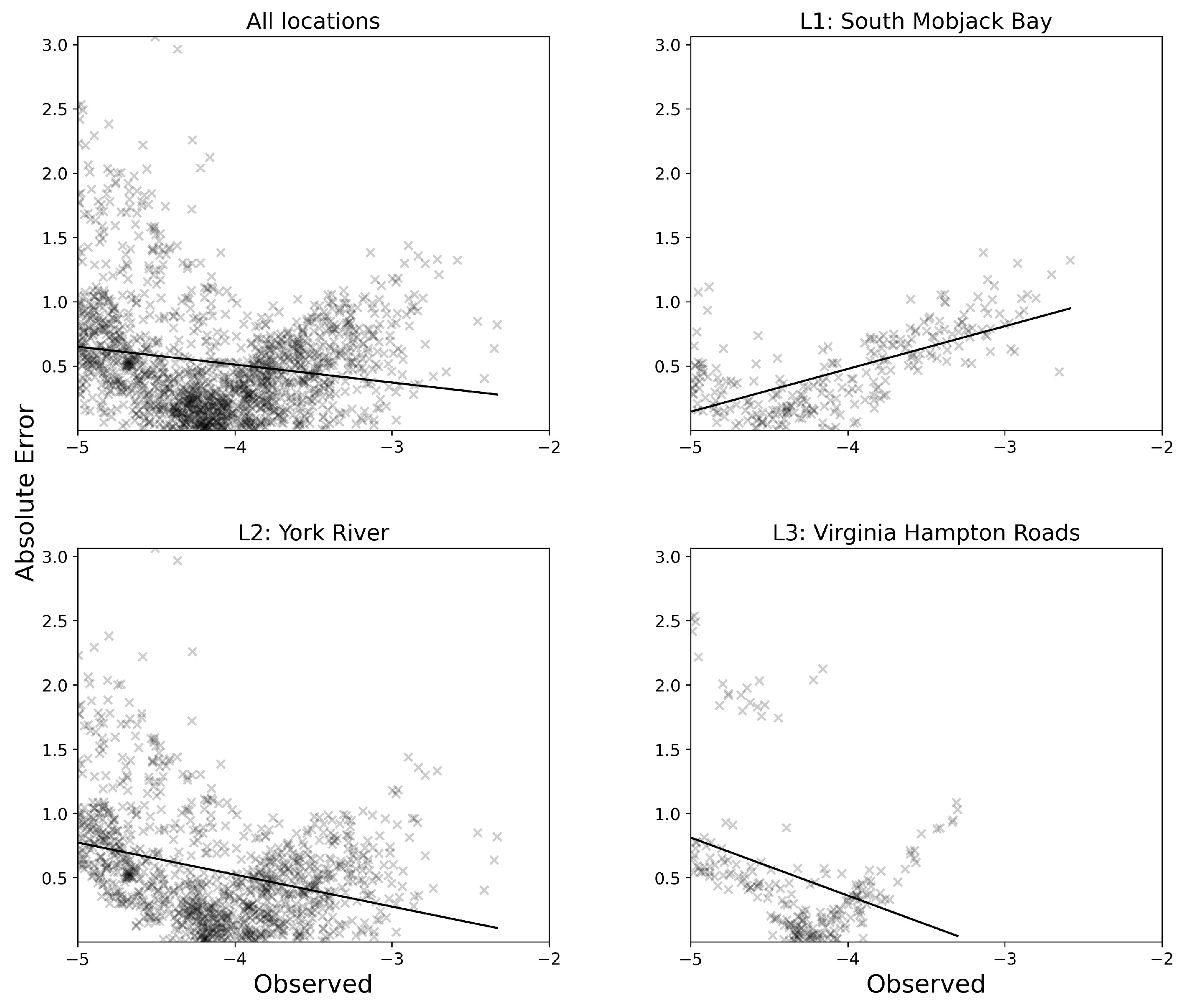

4. Discussion

4.1. Impact of Water Turbidity and Seabed Sediments

4.2. Accuracy Discrepancy Among Different Water Depths

4.3. Data Limitations in Shallow Water

4.4. Limitations Caused by Discrepancies Between Labeled and Ground-Truth Bathymetry

4.5. Future Directions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Spectral Band | Wavelength (nm) |
|---|---|
| Coastal Blue | 431–452 |
| Blue | 465–515 |
| Green I | 513–549 |
| Green | 547–583 |
| Yellow | 600–620 |
| Red | 650–680 |
| RedEdge | 697–713 |
| NIR | 845–885 |

| Model | Location | MAE (m) | RMSE (m) | MAPE (%) | N |
|---|---|---|---|---|---|
| BathyFormer | L1 | 0.46 | 0.55 | 12.5 | 271 |
| BathyFormer | L2 | 0.55 | 0.69 | 13.2 | 1139 |
| BathyFormer | L3 | 0.55 | 0.73 | 11.3 | 222 |

| Model | Location | MAE (m) | RMSE (m) | MAPE (%) | N |
|---|---|---|---|---|---|
| Random Forest | L1 | 0.83 | 0.97 | 19.6 | 271 |
| Random Forest | L2 | 1.08 | 1.24 | 25.4 | 1139 |
| Random Forest | L3 | 1.13 | 1.31 | 25.5 | 222 |
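The tables above report three error metrics for each survey location. As a point of reference, the following is a minimal sketch of how MAE, RMSE, and MAPE are conventionally computed from predicted and surveyed depths, assuming the standard definitions (MAPE taken relative to the surveyed depth and expressed in percent). The helper names `mae`, `rmse`, and `mape` are hypothetical, and the paper's own handling of edge cases such as zero or near-zero reference depths is not described in this section.

```python
import numpy as np


def mae(pred, ref):
    """Mean absolute error, in the same units as the inputs (m)."""
    pred, ref = np.asarray(pred, dtype=float), np.asarray(ref, dtype=float)
    return float(np.mean(np.abs(pred - ref)))


def rmse(pred, ref):
    """Root-mean-square error, in the same units as the inputs (m)."""
    pred, ref = np.asarray(pred, dtype=float), np.asarray(ref, dtype=float)
    return float(np.sqrt(np.mean((pred - ref) ** 2)))


def mape(pred, ref):
    """Mean absolute percentage error relative to the reference depth (%).

    Assumption: every reference (survey) depth is non-zero.
    """
    pred, ref = np.asarray(pred, dtype=float), np.asarray(ref, dtype=float)
    return float(100.0 * np.mean(np.abs(pred - ref) / np.abs(ref)))


# Toy example with made-up depths in metres; not data from the study.
survey = [2.0, 3.0, 4.0, 5.0]
predicted = [2.5, 2.8, 4.4, 4.6]
print(mae(predicted, survey), rmse(predicted, survey), mape(predicted, survey))
```

Because RMSE squares each error before averaging, it weights large errors more heavily and is always at least as large as MAE; every row in the two tables above satisfies this.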

| Error Metric | Depth 2–3 m | Depth 3–4 m | Depth 4–5 m |
|---|---|---|---|
| MAE (m) | 0.82 | 0.46 | 0.56 |
| RMSE (m) | 0.89 | 0.52 | 0.75 |
| MAPE (%) | 29 | 12.9 | 12 |
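The depth-stratified table above groups errors by water depth. A minimal sketch of that stratification follows, assuming depths are positive downward in metres, that points are binned by their surveyed (reference) depth, and that bins are half-open intervals [lo, hi). The function name `metrics_by_depth_bin` and the bin edges are illustrative assumptions, not the authors' code.

```python
import numpy as np


def metrics_by_depth_bin(pred, ref, edges=(2.0, 3.0, 4.0, 5.0)):
    """Return {(lo, hi): (mae, rmse, mape_pct, n)} for each half-open bin [lo, hi).

    Assumptions: depths are positive downward in metres, and the default
    edges mirror the 2-3, 3-4 and 4-5 m columns of the table.
    """
    pred = np.asarray(pred, dtype=float)
    ref = np.asarray(ref, dtype=float)
    results = {}
    for lo, hi in zip(edges[:-1], edges[1:]):
        mask = (ref >= lo) & (ref < hi)  # bin by the reference (survey) depth
        n = int(mask.sum())
        if n == 0:
            continue  # skip empty bins instead of averaging over nothing
        err = pred[mask] - ref[mask]
        mae = float(np.mean(np.abs(err)))
        rmse = float(np.sqrt(np.mean(err ** 2)))
        mape = float(100.0 * np.mean(np.abs(err) / ref[mask]))
        results[(lo, hi)] = (mae, rmse, mape, n)
    return results


# Toy example with made-up depths in metres; not data from the study.
survey = [2.2, 2.8, 3.5, 4.1, 4.9]
predicted = [2.9, 3.4, 3.3, 4.5, 4.4]
for (lo, hi), (mae, rmse, mape, n) in metrics_by_depth_bin(predicted, survey).items():
    print(f"{lo:.0f}-{hi:.0f} m: MAE={mae:.2f} m, RMSE={rmse:.2f} m, MAPE={mape:.1f}%, N={n}")
```

Because MAPE divides each error by the reference depth, the same absolute error weighs more in shallow water: 0.82 m over a mid-bin depth of about 2.5 m is roughly 33%, of the same order as the 29% reported for the 2–3 m bin.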

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lv, Z.; Herman, J.; Brewer, E.; Nunez, K.; Runfola, D. BathyFormer: A Transformer-Based Deep Learning Method to Map Nearshore Bathymetry with High-Resolution Multispectral Satellite Imagery. Remote Sens. 2025, 17, 1195. https://doi.org/10.3390/rs17071195

Lv Z, Herman J, Brewer E, Nunez K, Runfola D. BathyFormer: A Transformer-Based Deep Learning Method to Map Nearshore Bathymetry with High-Resolution Multispectral Satellite Imagery. Remote Sensing. 2025; 17(7):1195. https://doi.org/10.3390/rs17071195

Chicago/Turabian Style: Lv, Zhonghui, Julie Herman, Ethan Brewer, Karinna Nunez, and Dan Runfola. 2025. "BathyFormer: A Transformer-Based Deep Learning Method to Map Nearshore Bathymetry with High-Resolution Multispectral Satellite Imagery" Remote Sensing 17, no. 7: 1195. https://doi.org/10.3390/rs17071195

APA Style: Lv, Z., Herman, J., Brewer, E., Nunez, K., & Runfola, D. (2025). BathyFormer: A Transformer-Based Deep Learning Method to Map Nearshore Bathymetry with High-Resolution Multispectral Satellite Imagery. Remote Sensing, 17(7), 1195. https://doi.org/10.3390/rs17071195