Data Integration Based on UAV Multispectra and Proximal Hyperspectra Sensing for Maize Canopy Nitrogen Estimation

Abstract

1. Introduction

2. Materials and Methods

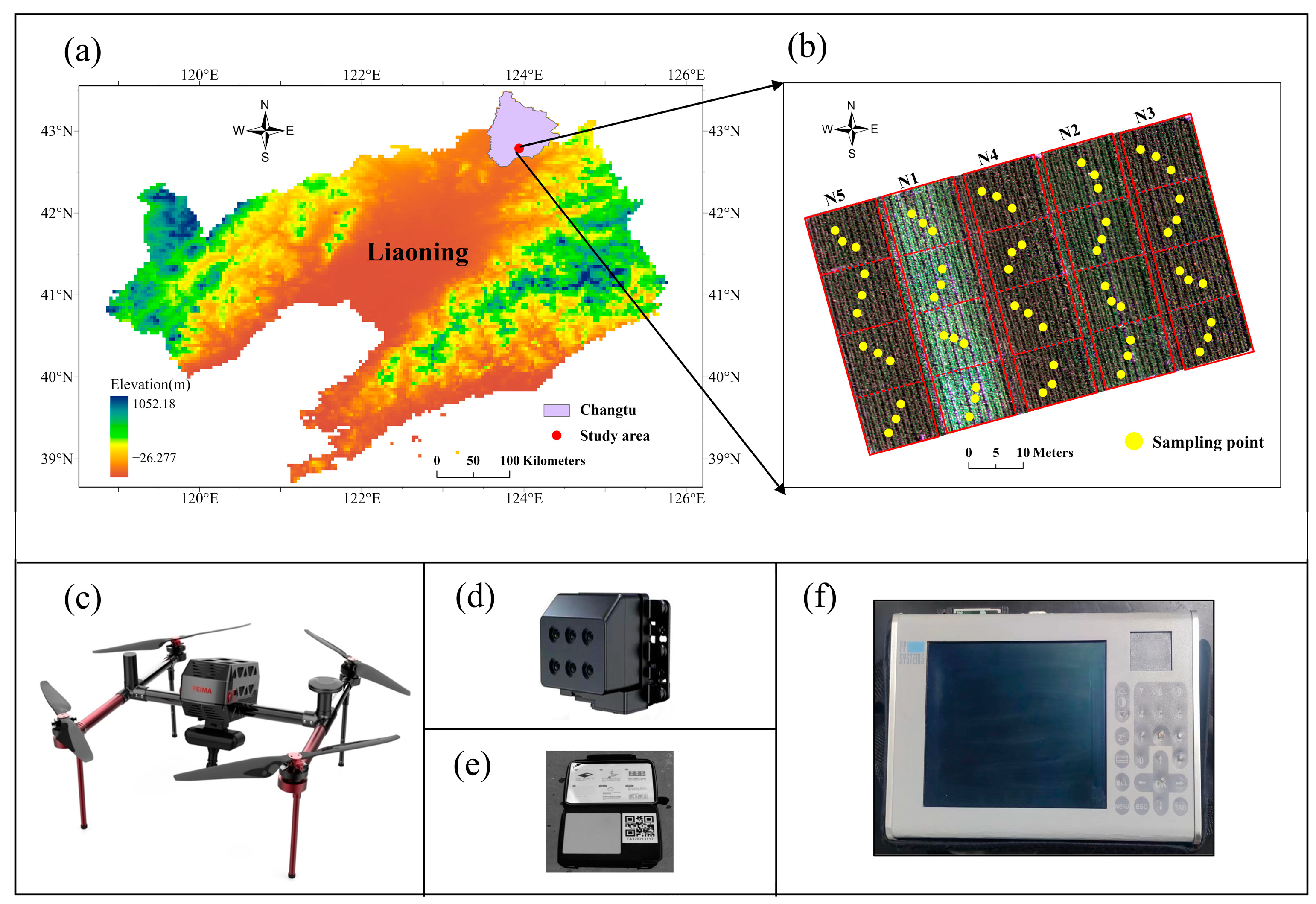

2.1. Study Area and Field Sampling

2.2. UAV-Based Multispectral Data Acquisition and Pre-Processing

- Radiometric calibration: the raw digital number (DN) values of the grayscale images were converted to surface reflectance using Yusense Ref V3.0 software (Changguang Yuchen Information Technology and Equipment Co., Ltd.; Qingdao, China).

- Image stitching: the radiometrically calibrated data were imported into Pix4D 4.5.6 software (Pix4D SA, Lausanne, Switzerland) and stitched into a six-band mosaic referenced to the WGS 84 coordinate system.

- Preprocessing and cropping: the stitched imagery underwent preprocessing and spatial cropping using ArcGIS 10.8 (ESRI, Redlands, CA, USA) and ENVI 5.2 (Exelis Visual Information Solutions, Boulder, CO, USA) software to isolate the study area.

- Vector file creation and texture analysis: a vector shapefile of the sampled corn plants was created in ArcGIS 10.8. Texture analysis was then performed in ENVI 5.2 using the gray-level co-occurrence matrix (GLCM) with a 3 × 3 window, extracting 8 texture indices (Mean, Variance, Contrast, Entropy, Correlation, Homogeneity, Dissimilarity, and Second Moment) for each of the 6 spectral bands.

- Feature extraction: using Python 3.6.1, spectral and textural indices were batch-extracted from the regions of interest (ROIs). These included the mean reflectance of the 6 spectral bands, along with 24 multispectral vegetation indices (MVIs; Table S1) and 48 multispectral texture indices (MTIs). The MTIs were derived by combining the 8 GLCM-based texture parameters with the 6 spectral bands (8 × 6 = 48), yielding indices such as Bm (mean texture of the blue band), R1c (contrast texture of the red edge 1 band), and Nco (correlation texture of the near-infrared band).
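
Calibration itself was done in Yusense Ref V3.0, whose internal algorithm is not described here. For illustration only, the sketch below assumes the common single-point reference-panel correction: after subtracting a dark offset, each DN is scaled by the ratio of the panel's known reflectance to the panel's measured DN. The helper `dn_to_reflectance`, the dark offset, and the panel values are hypothetical.

```python
def dn_to_reflectance(dn_values, panel_dn, panel_reflectance, dark_dn=0.0):
    """Convert raw DN values of one band to reflectance using one calibrated panel.

    Assumptions (not taken from the study): the sensor response is linear in
    radiance, and the panel was imaged under the same illumination as the field.
    """
    if panel_dn <= dark_dn:
        raise ValueError("panel DN must exceed the dark offset")
    gain = panel_reflectance / (panel_dn - dark_dn)  # reflectance per DN
    # Clip to [0, 1] so sensor noise cannot yield physically impossible values
    return [min(max((dn - dark_dn) * gain, 0.0), 1.0) for dn in dn_values]


# Hypothetical red-band example: a 50% reflectance panel recorded as 30000 DN
print(dn_to_reflectance([3000, 6000, 12000], panel_dn=30000, panel_reflectance=0.5))
```

A single panel fixes only the gain; an empirical-line fit over several panels would also estimate an offset, at the cost of more field targets.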
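
The eight GLCM measures listed above have standard Haralick-style definitions. The sketch computes them for a single 3 × 3 window of already-quantized gray levels, assuming a symmetric, normalized co-occurrence matrix with a one-pixel horizontal offset. ENVI's own quantization and default offset may differ, and `glcm_features` is a hypothetical helper rather than an ENVI function.

```python
import math


def glcm_features(window, levels, dx=1, dy=0):
    """Compute eight GLCM texture measures for one window of integer gray levels.

    Assumptions: values are already quantized to range(levels); each pixel pair is
    counted in both directions (symmetric GLCM) and the matrix is normalized to
    sum to 1. The window must contain at least one pair at offset (dx, dy).
    """
    rows, cols = len(window), len(window[0])
    counts = [[0.0] * levels for _ in range(levels)]
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = window[r][c], window[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # symmetric counting
    total = sum(sum(row) for row in counts)
    # Keep only non-zero probabilities as (i, j, p) triples
    cells = [(i, j, counts[i][j] / total)
             for i in range(levels) for j in range(levels) if counts[i][j] > 0]

    mean = sum(i * p for i, _, p in cells)  # row and column means coincide (symmetric)
    var = sum((i - mean) ** 2 * p for i, _, p in cells)
    cov = sum((i - mean) * (j - mean) * p for i, j, p in cells)
    return {
        "Mean": mean,
        "Variance": var,
        "Contrast": sum((i - j) ** 2 * p for i, j, p in cells),
        "Dissimilarity": sum(abs(i - j) * p for i, j, p in cells),
        "Homogeneity": sum(p / (1 + (i - j) ** 2) for i, j, p in cells),
        "Entropy": -sum(p * math.log(p) for _, _, p in cells),
        "Second Moment": sum(p * p for _, _, p in cells),
        # sigma_i * sigma_j equals var for a symmetric GLCM; a constant window
        # is reported as correlation 1.0 by convention in this sketch
        "Correlation": cov / var if var > 0 else 1.0,
    }


window = [[0, 0, 1],
          [0, 1, 1],
          [2, 2, 3]]  # 3 x 3 window already quantized to 4 gray levels
for name, value in glcm_features(window, levels=4).items():
    print(f"{name}: {value:.4f}")
```

In a full texture image this calculation is repeated with the window centered on every pixel, and the result is written to one output layer per measure.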
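
The batch extraction step amounts to averaging each spectral or texture layer over the pixels inside each plant's ROI. The sketch assumes every layer is already loaded as a 2-D list and each ROI has been rasterized to a boolean mask (for example, from the shapefile); the layer names and the `roi_means` helper are hypothetical. It also confirms that pairing 8 GLCM measures with 6 bands gives 48 MTI layers.

```python
from itertools import product

BANDS = ["Blue", "Green", "Red", "RedEdge1", "RedEdge2", "NIR"]
GLCM_MEASURES = ["Mean", "Variance", "Contrast", "Entropy", "Correlation",
                 "Homogeneity", "Dissimilarity", "SecondMoment"]

# One MTI layer per (band, measure) pair; these names are illustrative only
MTI_NAMES = [f"{band}_{measure}" for band, measure in product(BANDS, GLCM_MEASURES)]


def roi_means(layers, mask):
    """Average each named 2-D layer over the pixels where the ROI mask is True."""
    pixels = [(r, c) for r, row in enumerate(mask)
              for c, inside in enumerate(row) if inside]
    if not pixels:
        raise ValueError("ROI mask contains no pixels")
    return {name: sum(grid[r][c] for r, c in pixels) / len(pixels)
            for name, grid in layers.items()}


print(len(MTI_NAMES))  # 6 bands x 8 measures = 48
layers = {"Red": [[0.04, 0.05], [0.06, 0.30]],
          "NIR": [[0.40, 0.42], [0.44, 0.10]]}
mask = [[True, True], [True, False]]  # bottom-right pixel (e.g. soil) lies outside the ROI
print(roi_means(layers, mask))
```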

2.3. Proximal Hyperspectral Data Acquisition and Pre-Processing

2.4. Workflow of This Study

3. Results

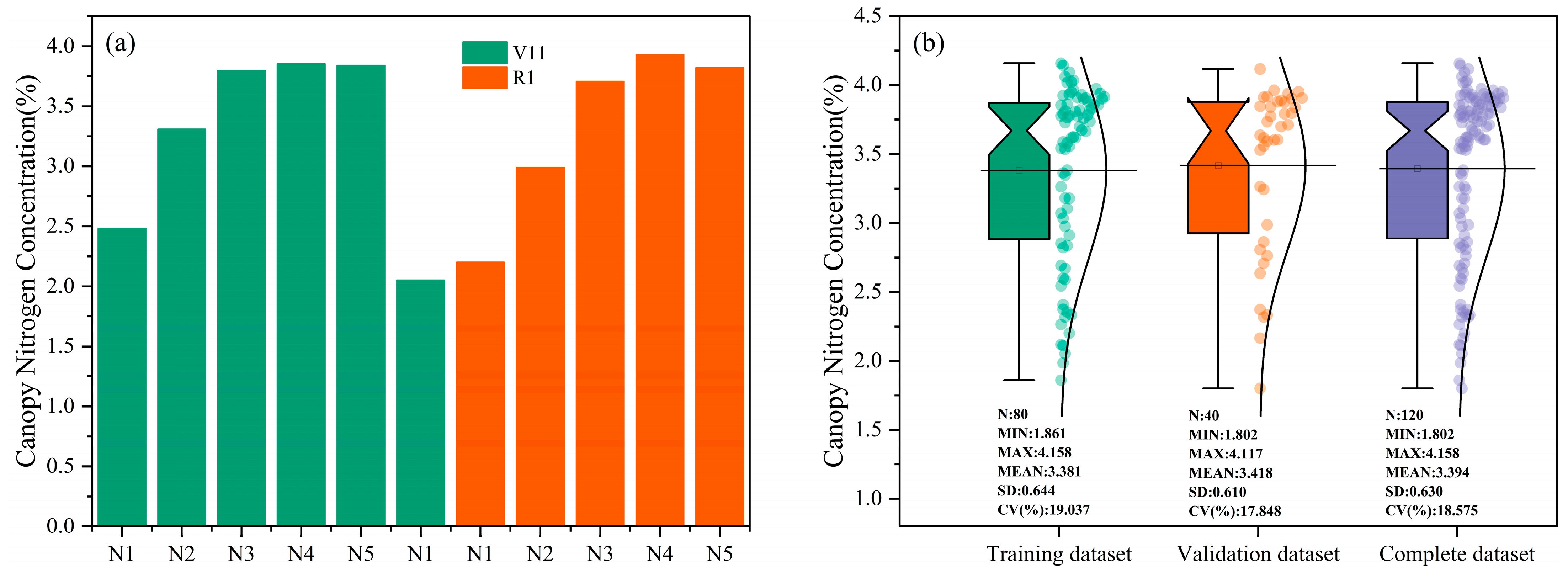

3.1. Descriptive Statistics of CNC

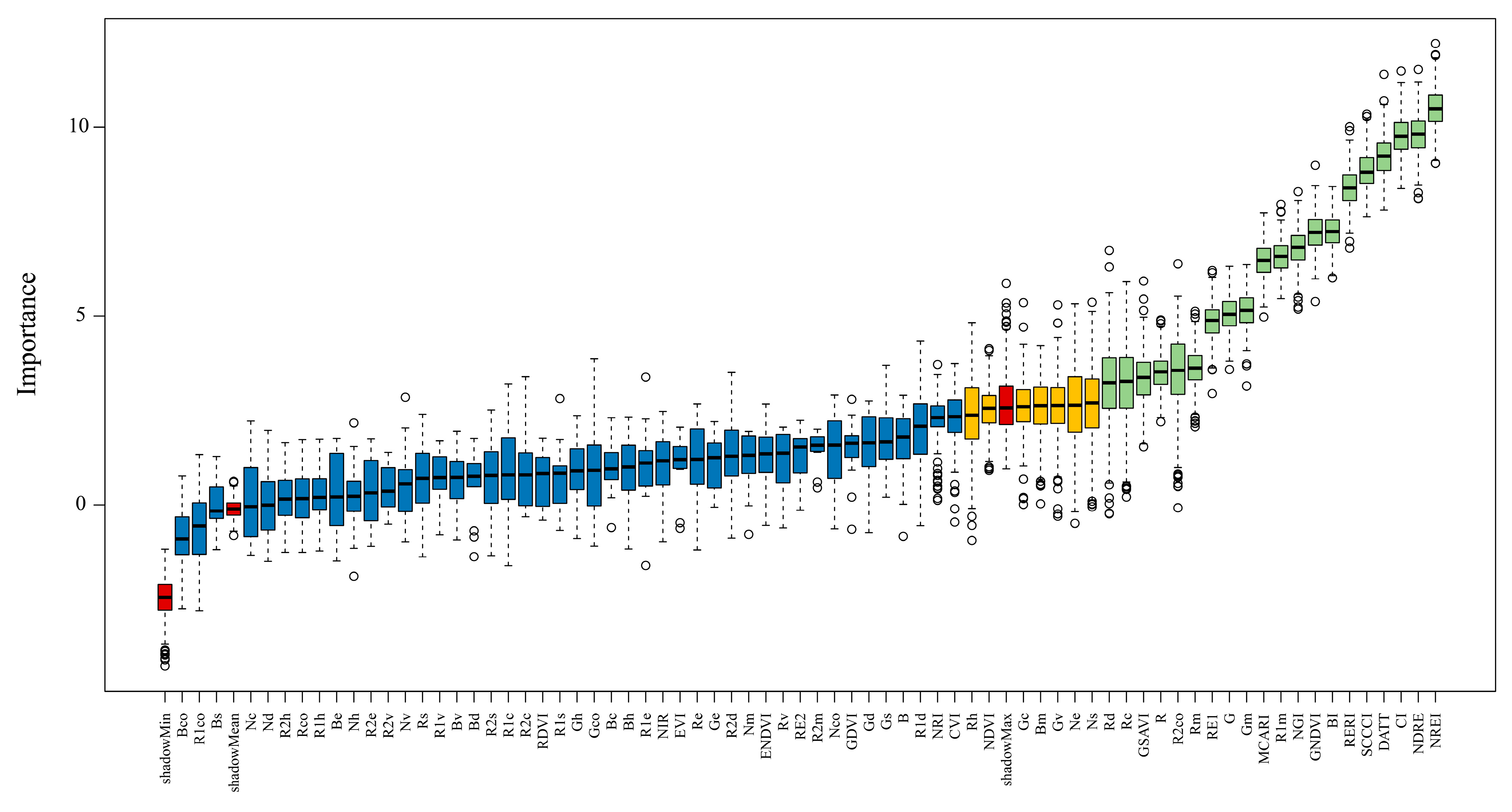

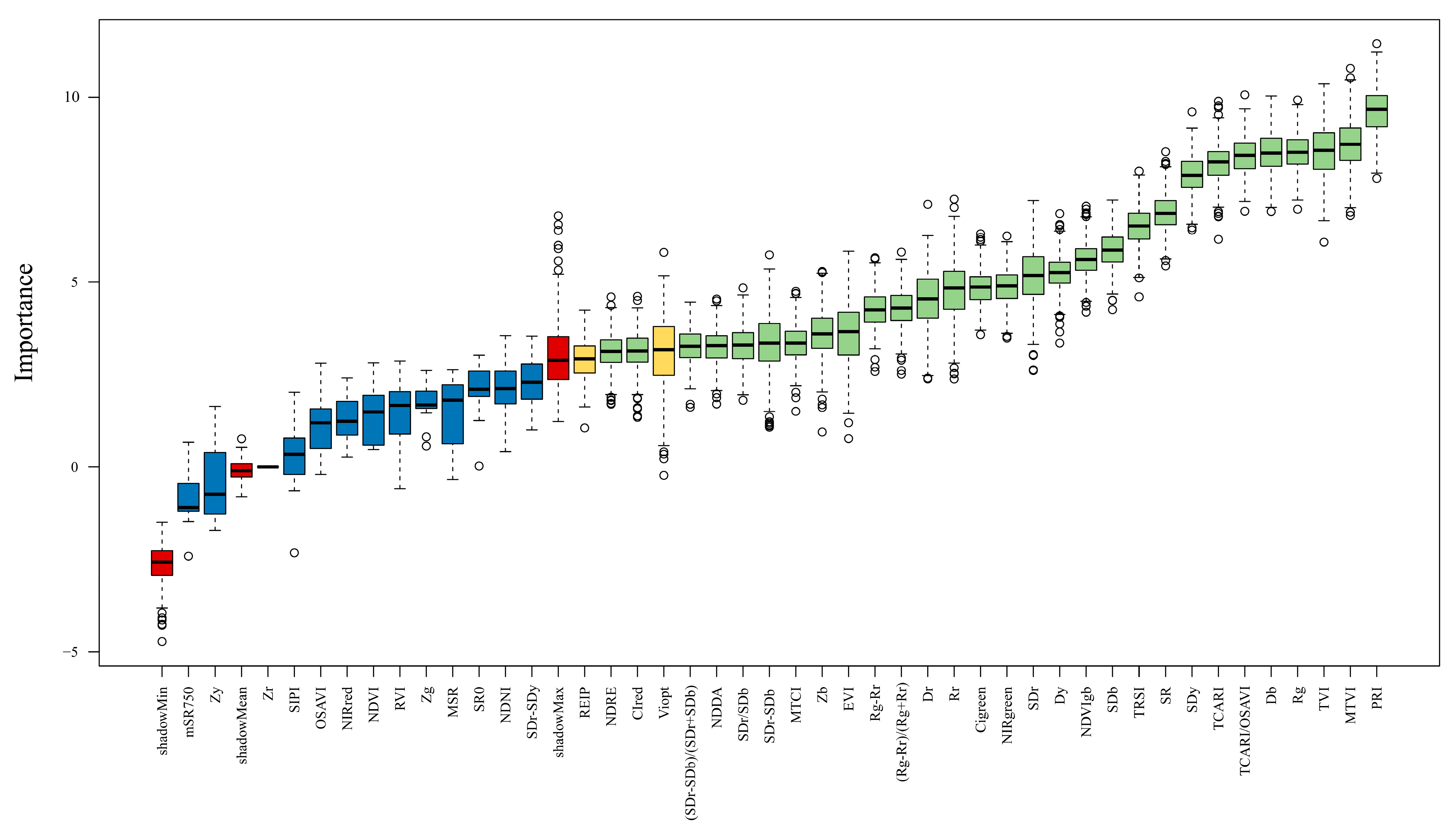

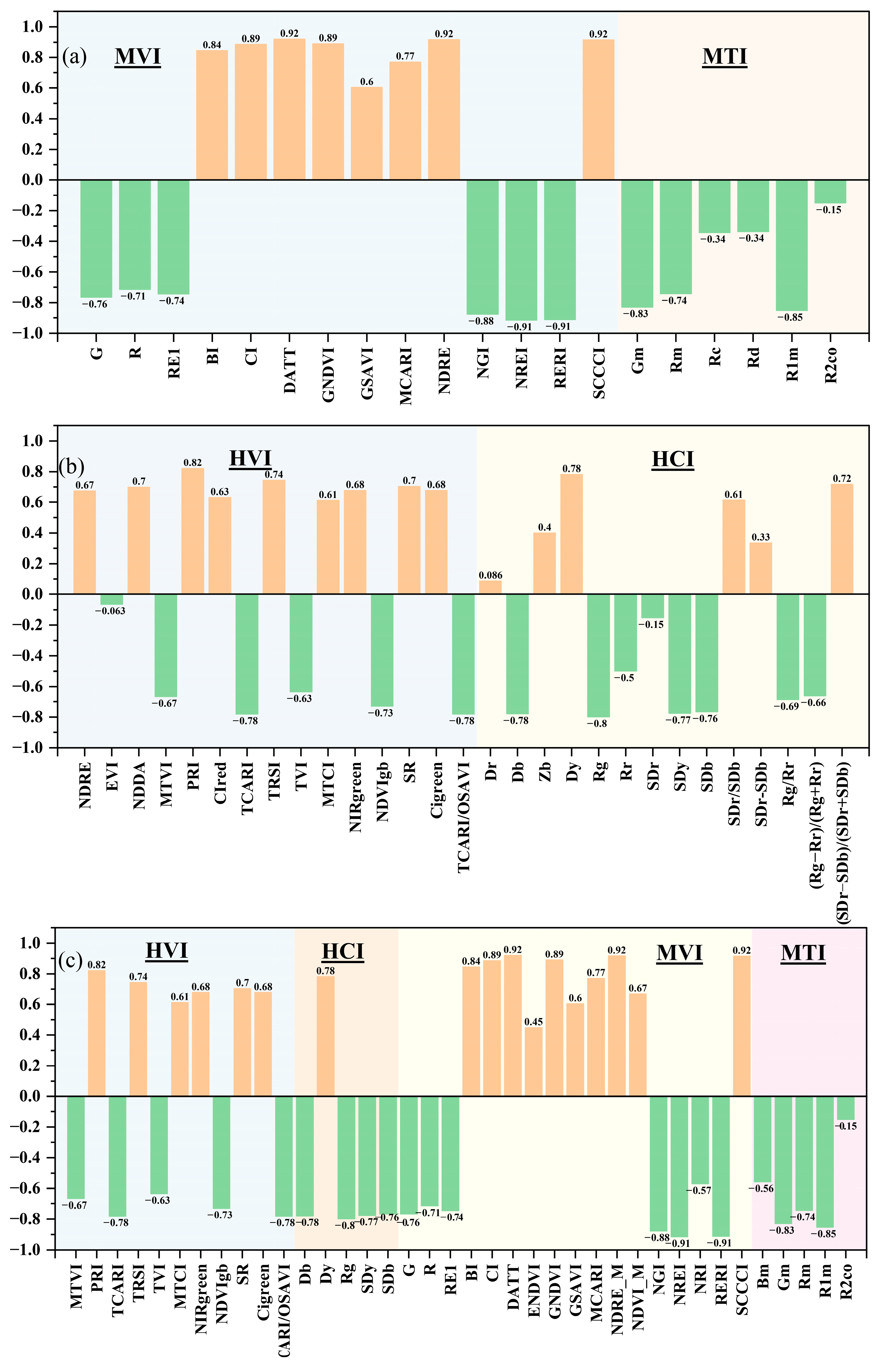

3.2. Optimal Feature Extraction and Correlation Analysis

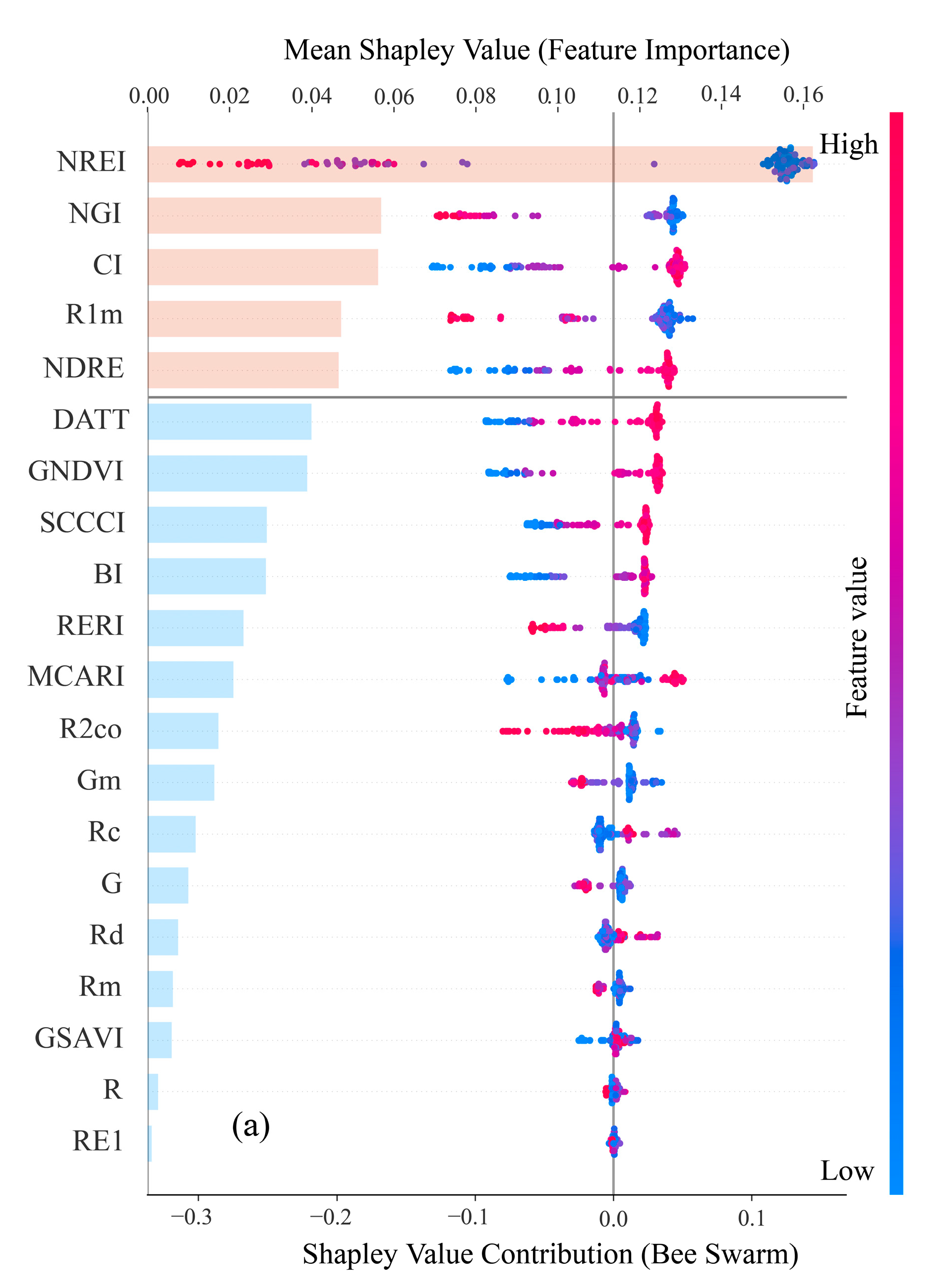

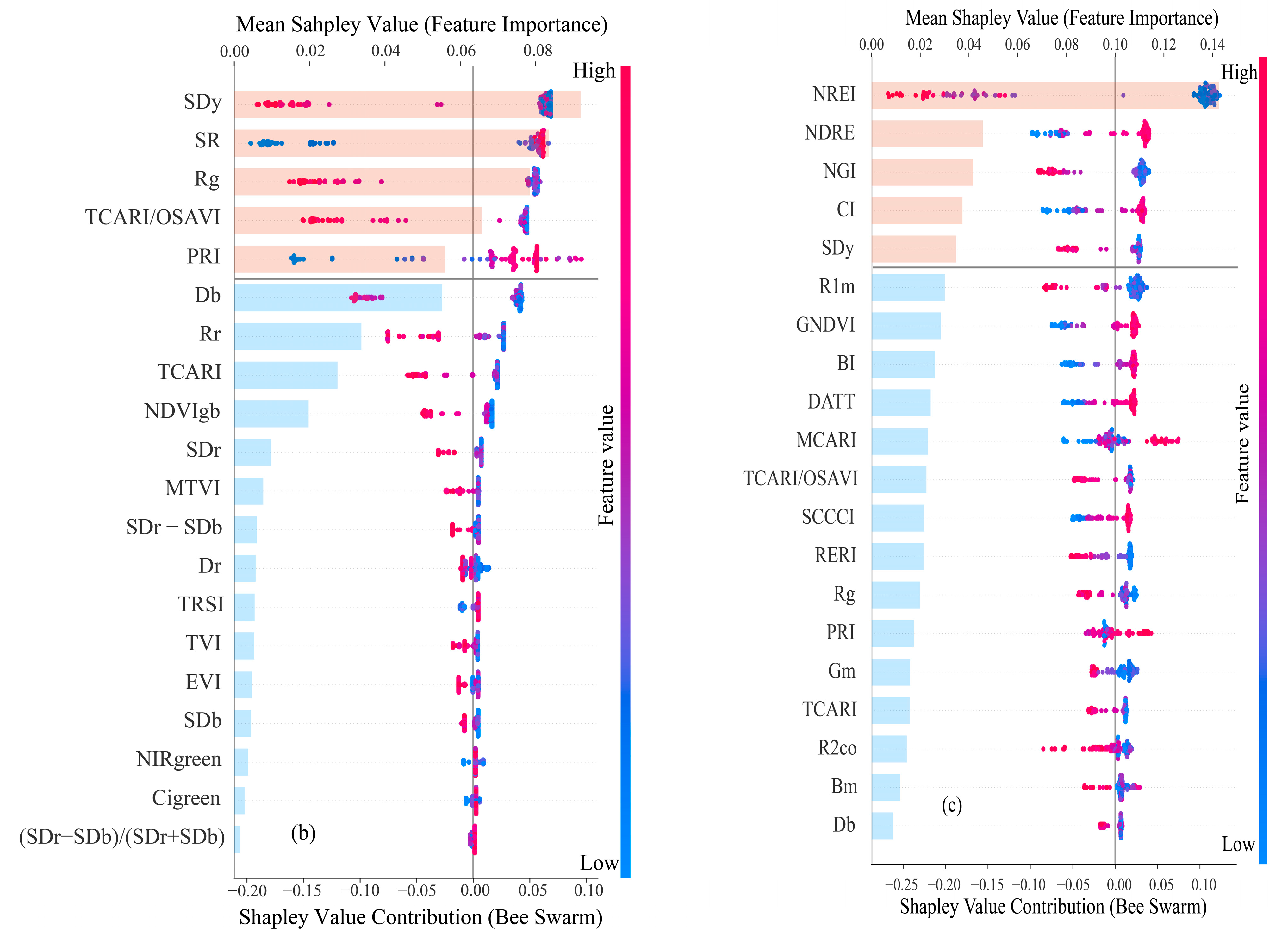

3.3. Construction and Interpretation of CNC Estimation Models

4. Discussion

4.1. Spectral Data Characteristics and Key Features for CNC Estimation

4.2. Comparison of CNC Estimation Using UAV Multispectral and Proximal Hyperspectral Data

4.3. The Performance of the Boruta–RF–SHAP Framework in Multi-Source Data Integration

4.4. Limitations and Future Work

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Band | Center Wavelength (nm) | Bandwidth (nm) |
|---|---|---|
| Blue | 450 | 35 |
| Green | 555 | 25 |
| Red | 650 | 22.5 |
| Red Edge 1 | 720 | 10 |
| Red Edge 2 | 750 | 10 |
| NIR | 840 | 30 |
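
The 24 MVIs actually used are listed in Table S1, which is not reproduced here. As an illustration only, two widely used indices can be formed from ROI-mean reflectances of the bands in the table above: NDVI from Red (650 nm) and NIR (840 nm), and a red-edge NDRE from Red Edge 1 (720 nm) and NIR. Whether these exact forms appear in Table S1 is an assumption, as are the dictionary keys.

```python
def normalized_difference(a, b):
    """Generic normalized difference (a - b) / (a + b)."""
    denom = a + b
    if denom == 0:
        raise ValueError("a + b must be non-zero")
    return (a - b) / denom


def example_indices(refl):
    """Compute NDVI and NDRE from band-mean reflectances.

    refl is assumed to use the hypothetical keys 'Red', 'RedEdge1' and 'NIR'.
    """
    return {
        "NDVI": normalized_difference(refl["NIR"], refl["Red"]),
        "NDRE": normalized_difference(refl["NIR"], refl["RedEdge1"]),
    }


print(example_indices({"Red": 0.05, "RedEdge1": 0.20, "NIR": 0.45}))
```

Red-edge indices such as NDRE are often preferred for nitrogen work because red reflectance tends to saturate over dense canopies, while the 720 nm band remains more responsive to chlorophyll.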

| Dataset | n_estimators | max_depth | min_samples_split | min_samples_leaf |
|---|---|---|---|---|
| Multispectral | 100 | 2 | 6 | 4 |
| Hyperspectral | 100 | 4 | 2 | 5 |
| Multispectral + Hyperspectral | 200 | 9 | 2 | 2 |
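
The column names in the table above match the keyword arguments of scikit-learn's `RandomForestRegressor`. This suggests, but does not confirm, that the models were built with that library. The sketch stores the tabulated values and builds one regressor per dataset; the dataset keys, the `random_state` value, and the `make_rf` helper are assumptions.

```python
RF_PARAMS = {
    "multispectral": {"n_estimators": 100, "max_depth": 2,
                      "min_samples_split": 6, "min_samples_leaf": 4},
    "hyperspectral": {"n_estimators": 100, "max_depth": 4,
                      "min_samples_split": 2, "min_samples_leaf": 5},
    "multispectral+hyperspectral": {"n_estimators": 200, "max_depth": 9,
                                    "min_samples_split": 2, "min_samples_leaf": 2},
}


def make_rf(dataset, random_state=0):
    """Build a RandomForestRegressor with the tabulated hyperparameters.

    scikit-learn is imported lazily so the parameter table is usable without it.
    random_state is added here for reproducibility; it is not a reported value.
    """
    from sklearn.ensemble import RandomForestRegressor
    return RandomForestRegressor(random_state=random_state, **RF_PARAMS[dataset])
```

Usage would be `make_rf("hyperspectral").fit(X_train, y_train)`, where `X_train` and `y_train` are hypothetical feature and CNC arrays. Note that for the hyperspectral model `min_samples_leaf=5` requires every child node to keep at least five samples, so the node-level limit `min_samples_split=2` is effectively superseded.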

| Dataset | Candidate Features (Count) | Selected Features (Count) |
|---|---|---|
| Multispectral | MVI (24) + MTI (48) | MVI (14) + MTI (6) |
| Hyperspectral | HVI (24) + HCI (20) | HVI (15) + HCI (14) |
| Multispectral + Hyperspectral | HVI (24) + HCI (20) + MVI (24) + MTI (48) | HVI (11) + HCI (5) + MVI (17) + MTI (5) |
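
Section 4.3 refers to a Boruta–RF–SHAP framework, and the reduced counts in the table above are consistent with a Boruta-type screen, although the exact settings are not given in this section. Boruta's core idea is to append a shuffled "shadow" copy of every feature and keep only features whose importance repeatedly beats the best shadow. The sketch keeps that logic but, to stay dependency-free, substitutes absolute Pearson correlation for random-forest importance and a simple hit-rate threshold for Boruta's statistical test. It is a simplified illustration, not a full Boruta implementation, and all names and defaults are hypothetical.

```python
import math
import random


def abs_pearson(x, y):
    """Absolute Pearson correlation |r|; returns 0.0 if either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return abs(sxy) / math.sqrt(sxx * syy)


def shadow_feature_screen(features, target, n_iter=20, min_hit_rate=0.8, seed=0):
    """Simplified Boruta-style screen (|r| stands in for random-forest importance).

    features: dict mapping feature name -> list of values; target: list of values.
    Each iteration builds a shuffled 'shadow' copy of every feature; a real feature
    scores a hit when its importance exceeds the best shadow importance. Features
    whose hit rate reaches min_hit_rate are returned, sorted by name.
    """
    rng = random.Random(seed)
    importance = {name: abs_pearson(v, target) for name, v in features.items()}
    hits = dict.fromkeys(features, 0)
    for _ in range(n_iter):
        shadow_max = 0.0
        for values in features.values():
            shadow = list(values)
            rng.shuffle(shadow)  # breaks any real link between feature and target
            shadow_max = max(shadow_max, abs_pearson(shadow, target))
        for name, imp in importance.items():
            if imp > shadow_max:
                hits[name] += 1
    return sorted(name for name, h in hits.items() if h / n_iter >= min_hit_rate)


x = [i / 10 for i in range(40)]
print(shadow_feature_screen({"signal": x, "constant": [1.0] * 40}, [2 * v for v in x]))
```

Comparing against the strongest shadow, rather than a fixed cutoff, makes the threshold adapt to how much importance pure noise can reach in a given dataset.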

| Data Source | Training Set R² (RMSE) | Validation Set R² (RMSE) | CE (h) |
|---|---|---|---|
| Multispectral | 0.973 (0.107) | 0.879 (0.212) | 2.075 |
| Hyperspectral | 0.838 (0.260) | 0.832 (0.250) | 2.080 |
| Multispectral + Hyperspectral | 0.983 (0.088) | 0.903 (0.190) | 2.115 |
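
Assuming R² in the table above is the coefficient of determination, the metrics follow the usual definitions: R² = 1 - SS_res/SS_tot and RMSE = sqrt(SS_res/n). From the table itself, the fused model raises validation R² by 0.903 - 0.879 = 0.024 over multispectral data alone and by 0.903 - 0.832 = 0.071 over hyperspectral data alone. The sketch computes both metrics for paired observed and predicted values; the sample numbers are made up and unrelated to the study data, and `r2_rmse` is a hypothetical helper.

```python
import math


def r2_rmse(observed, predicted):
    """Return (R^2, RMSE) with R^2 = 1 - SS_res / SS_tot (coefficient of determination)."""
    if len(observed) != len(predicted) or len(observed) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    if ss_tot == 0:
        raise ValueError("observed values are constant; R^2 is undefined")
    return 1 - ss_res / ss_tot, math.sqrt(ss_res / len(observed))


obs = [1.0, 2.0, 3.0, 4.0]   # made-up observed values
pred = [1.1, 1.9, 3.2, 3.8]  # made-up model predictions
print(r2_rmse(obs, pred))
```

Some studies instead report R² as the squared Pearson correlation between observed and predicted values; the two agree only for unbiased least-squares fits, so the definition matters when comparing results across papers.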


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lu, F.; Sun, H.; Tao, L.; Wang, P. Data Integration Based on UAV Multispectra and Proximal Hyperspectra Sensing for Maize Canopy Nitrogen Estimation. Remote Sens. 2025, 17, 1411. https://doi.org/10.3390/rs17081411

Lu F, Sun H, Tao L, Wang P. Data Integration Based on UAV Multispectra and Proximal Hyperspectra Sensing for Maize Canopy Nitrogen Estimation. Remote Sensing. 2025; 17(8):1411. https://doi.org/10.3390/rs17081411

Chicago/Turabian Style: Lu, Fuhao, Haiming Sun, Lei Tao, and Peng Wang. 2025. "Data Integration Based on UAV Multispectra and Proximal Hyperspectra Sensing for Maize Canopy Nitrogen Estimation" Remote Sensing 17, no. 8: 1411. https://doi.org/10.3390/rs17081411

APA Style: Lu, F., Sun, H., Tao, L., & Wang, P. (2025). Data Integration Based on UAV Multispectra and Proximal Hyperspectra Sensing for Maize Canopy Nitrogen Estimation. Remote Sensing, 17(8), 1411. https://doi.org/10.3390/rs17081411