Cover Crop Types Influence Biomass Estimation Using Unmanned Aerial Vehicle-Mounted Multispectral Sensors

Abstract

1. Introduction

2. Materials and Methods

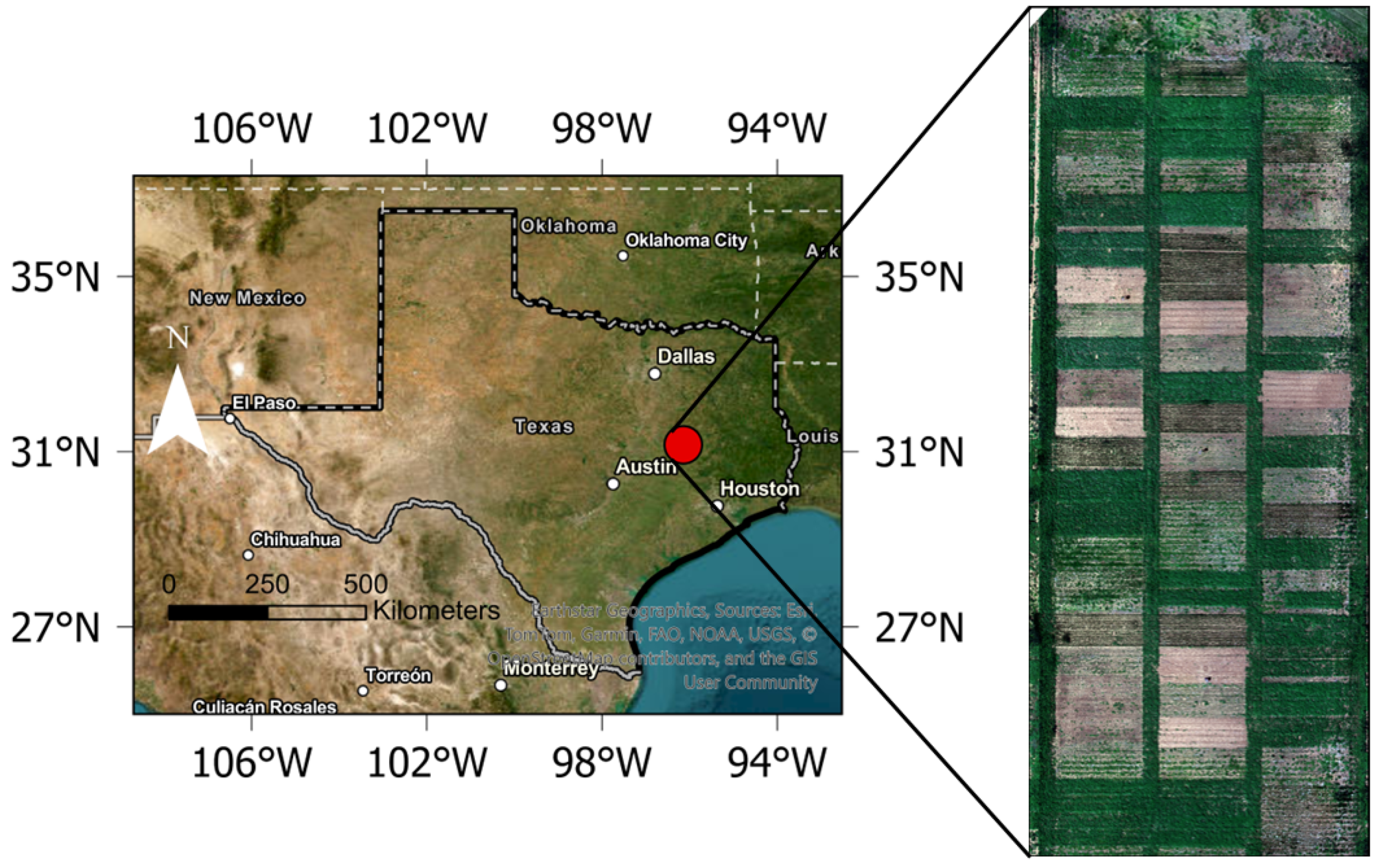

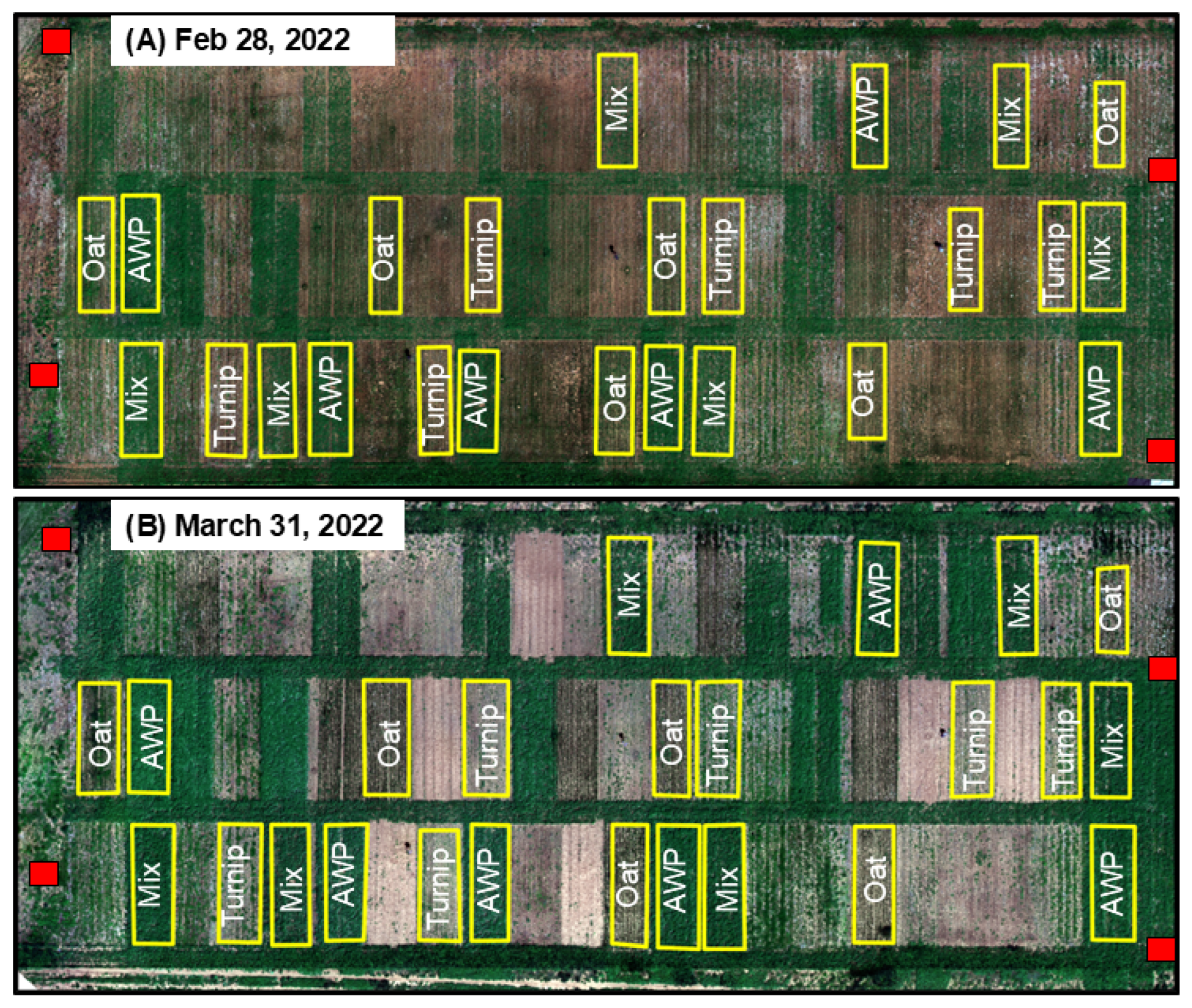

2.1. Study Site

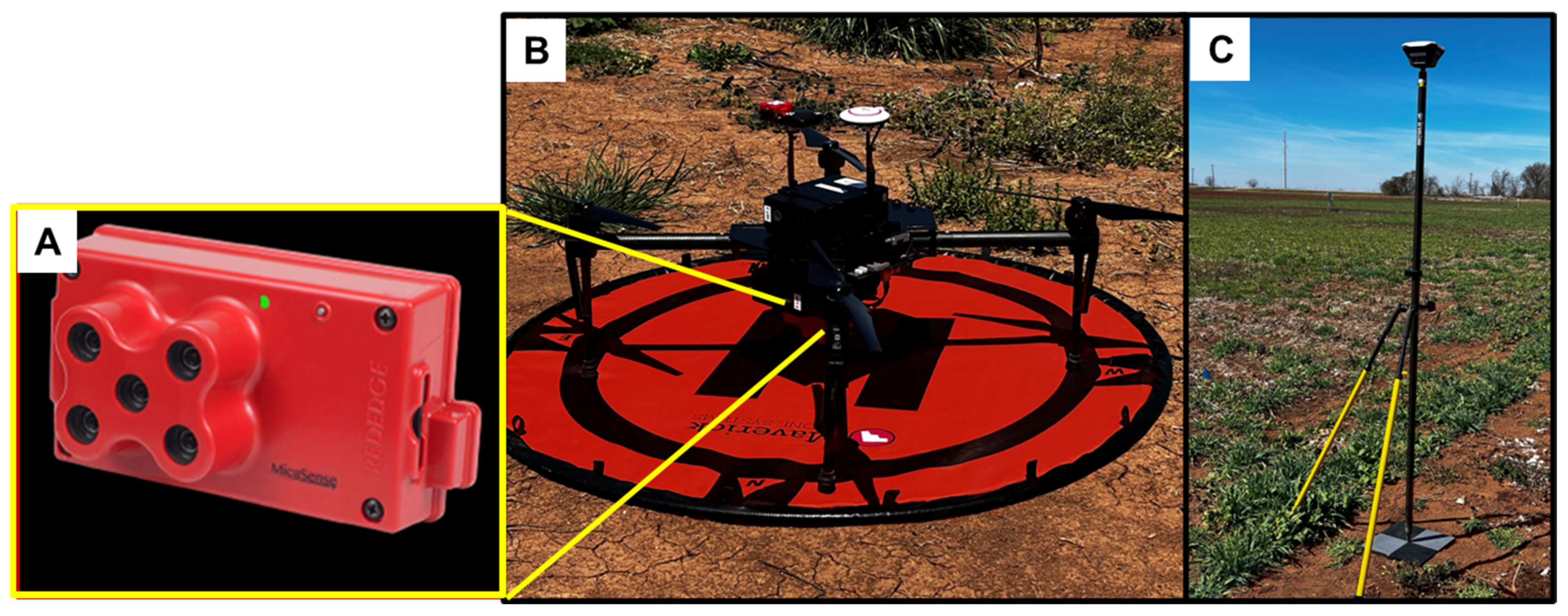

2.2. Image Acquisition

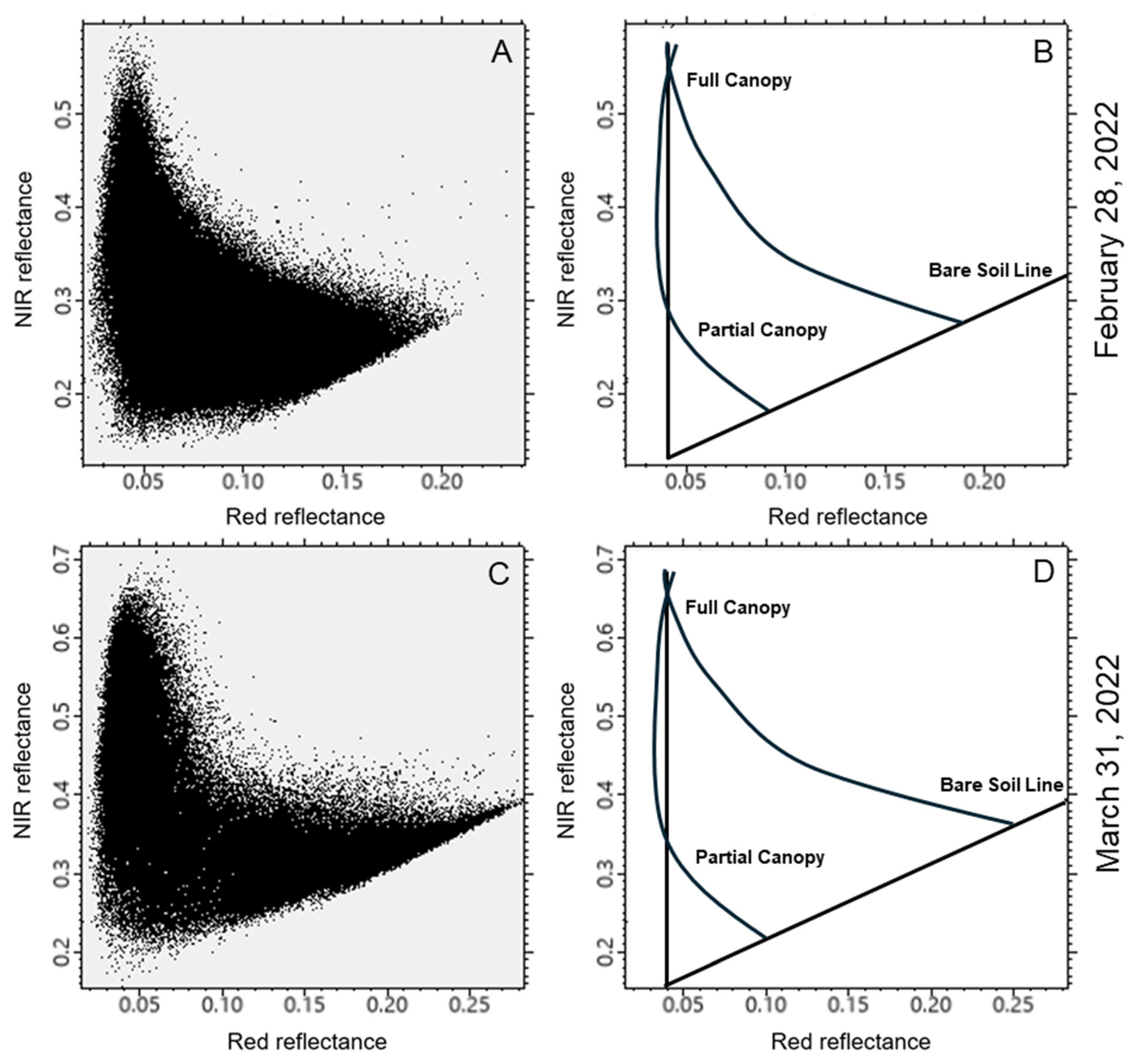

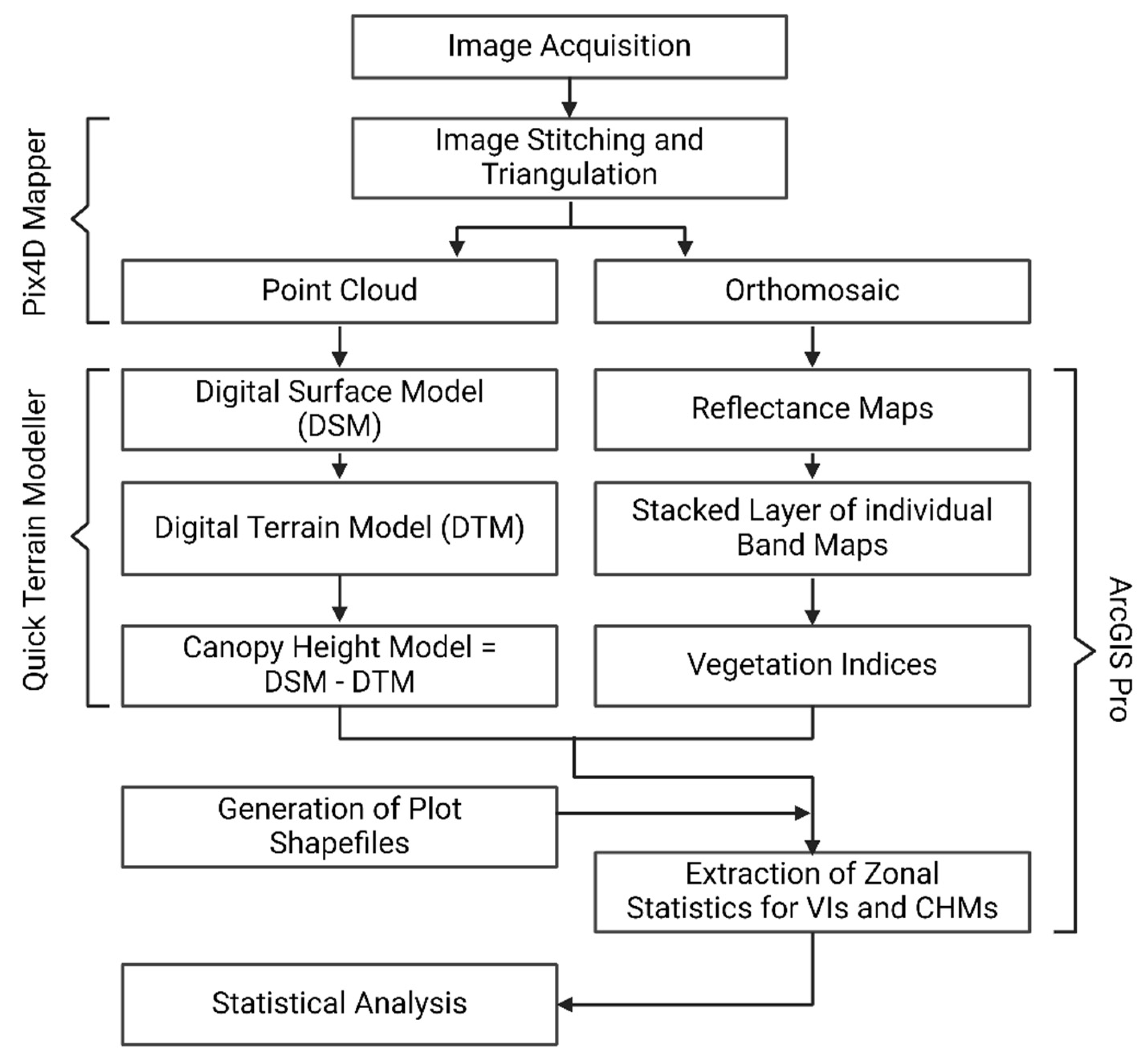

2.3. Image Processing Approach

2.4. Statistical Analyses

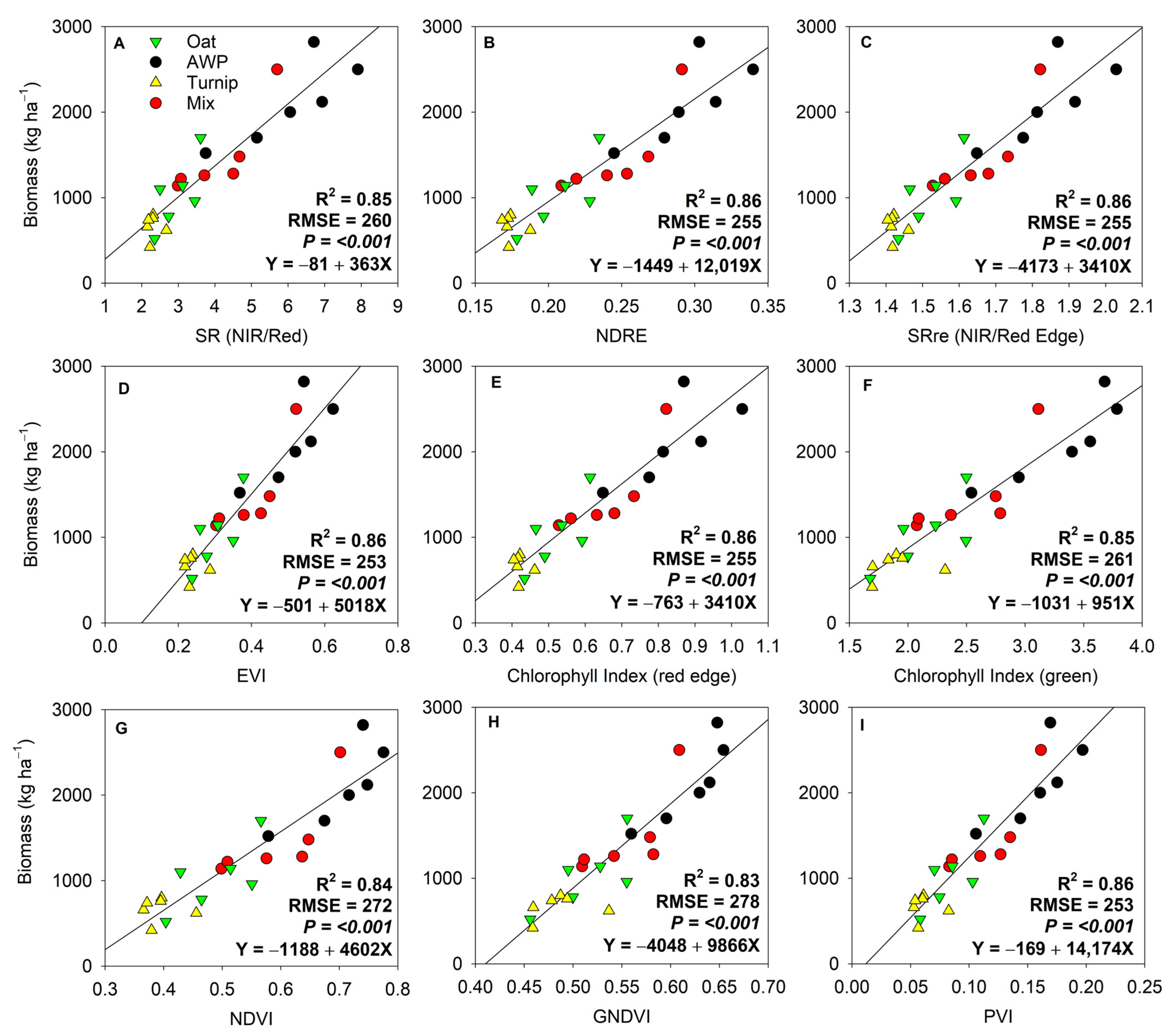

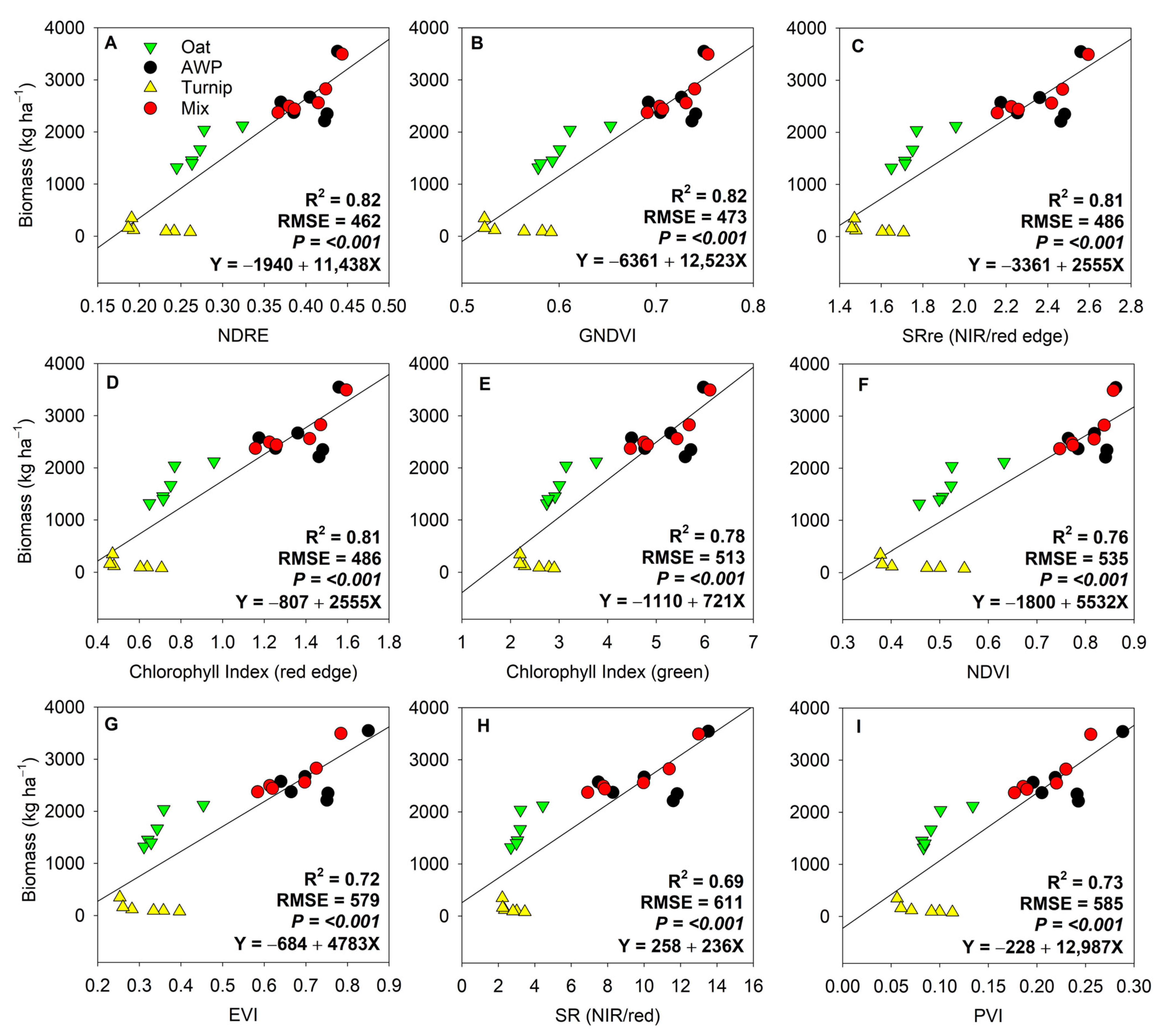

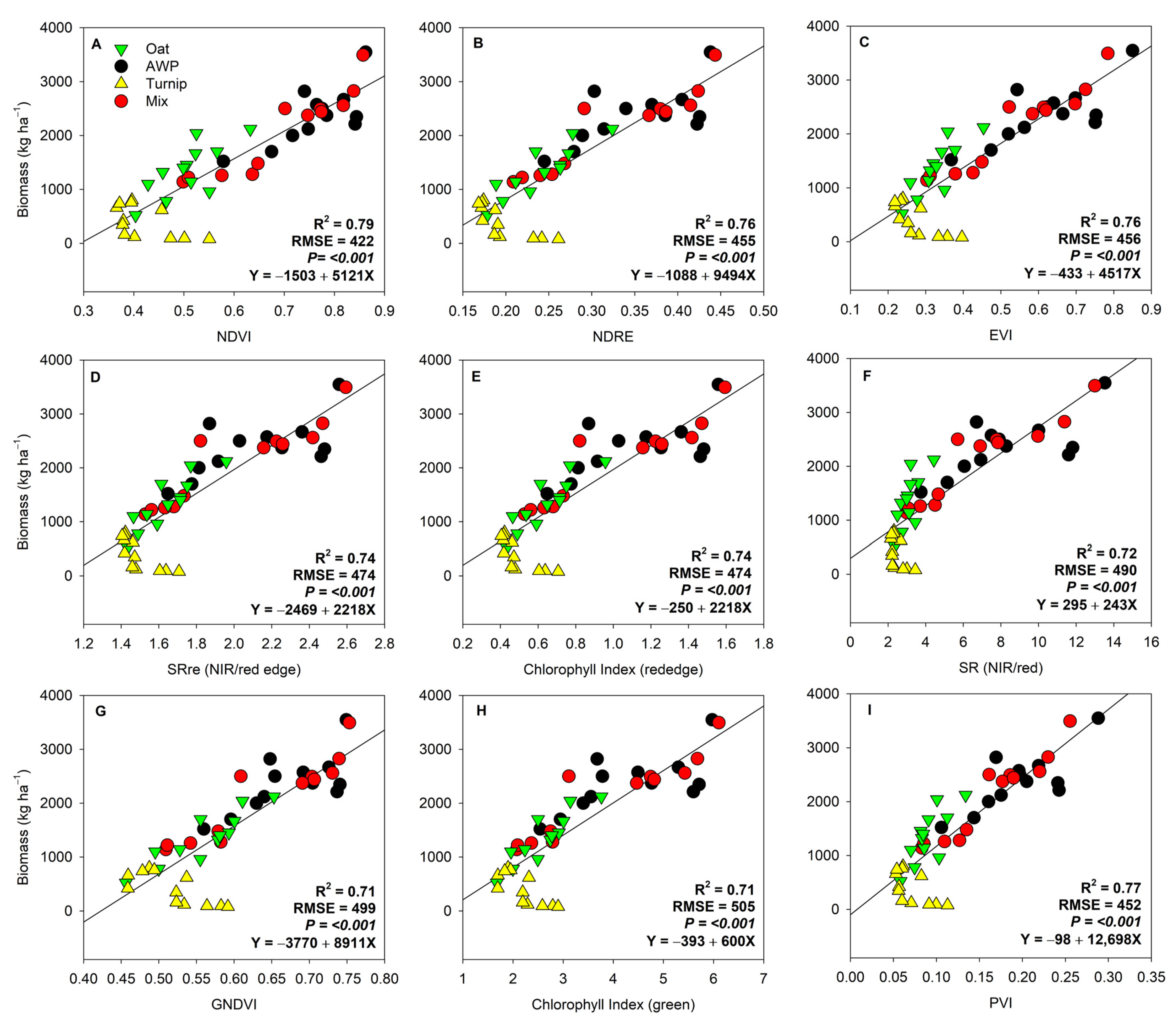

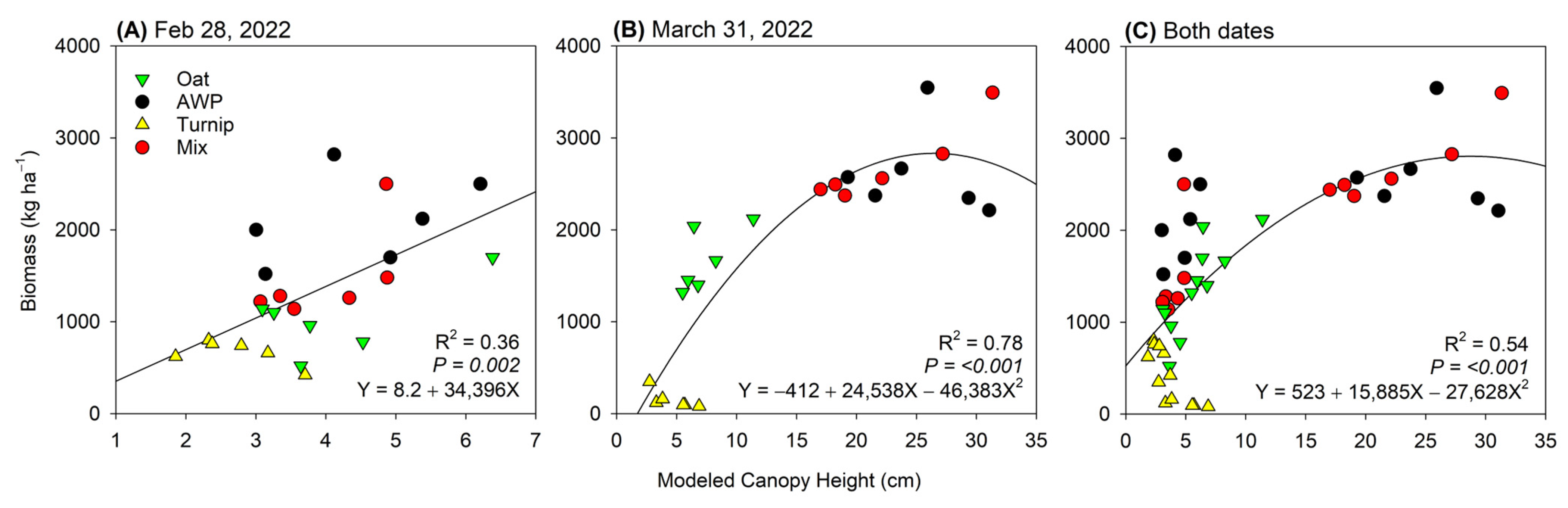

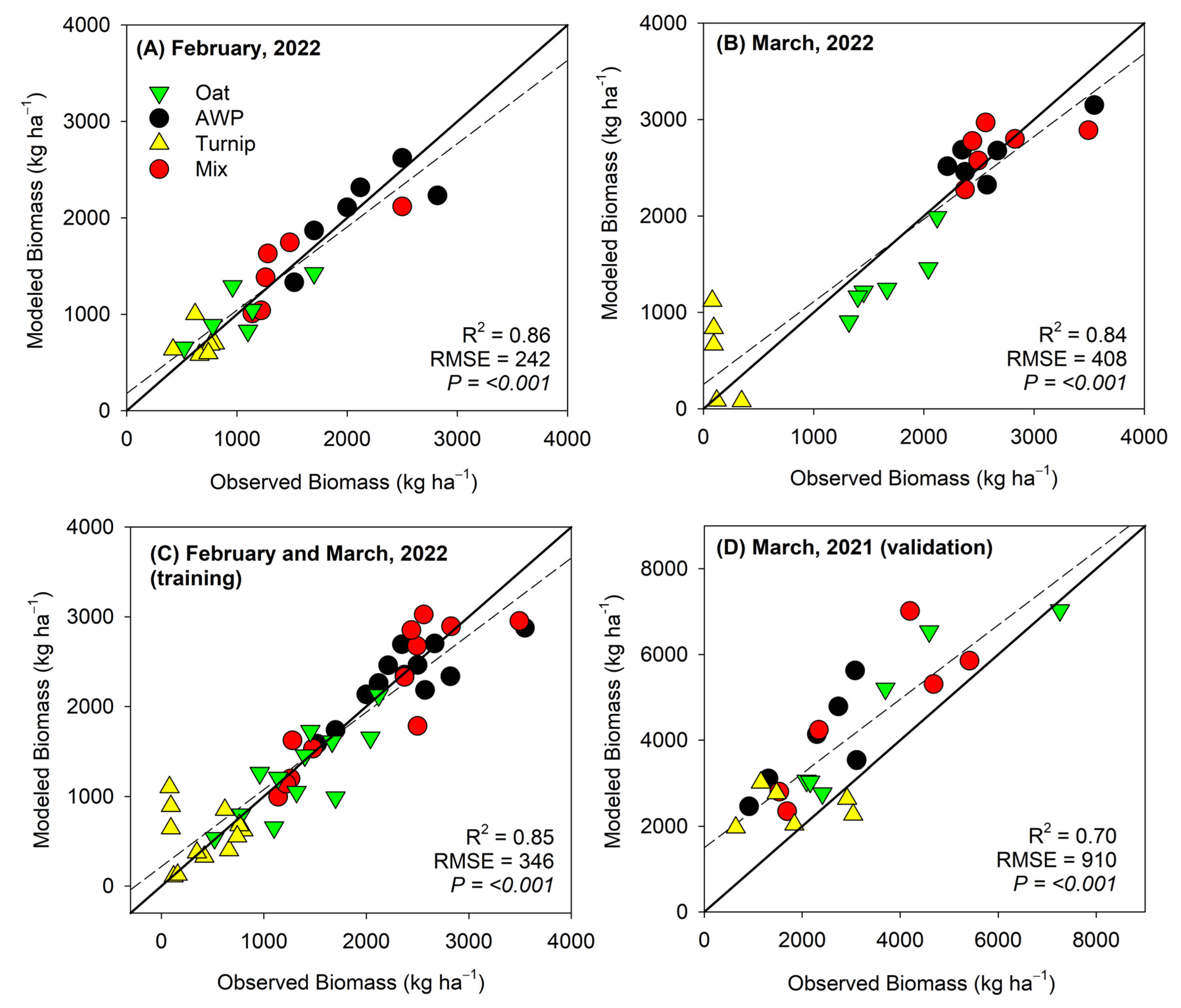

3. Results

4. Discussion

4.1. Temporal Differences in Biomass Estimation

4.2. Influence of Cover Crop Types on Biomass Estimation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| AWP | Austrian Winter Pea |
| NIR | Near-Infrared |
| NDVI | Normalized Difference Vegetation Index |
| NDRE | Normalized Difference Red Edge Index |
| CIg | Chlorophyll Index Green |
| CIre | Chlorophyll Index Red Edge |
| EVI | Enhanced Vegetation Index |
| GNDVI | Green Normalized Difference Vegetation Index |
| SR | Simple Ratio of Near-Infrared over Red |
| SRre | Simple Ratio of Near-Infrared over Red Edge |
| PVI | Perpendicular Vegetation Index |
| CHM | Canopy Height Model |
| GCP | Ground Control Point |

References

1. Blanco-Canqui, H.; Ruis, S. Cover crop impacts on soil physical properties: A review. Soil Sci. Soc. Am. J. 2020, 84, 1527–1576.
2. Daryanto, S.; Fu, B.J.; Wang, L.X.; Jacinthe, P.A.; Zhao, W.W. Quantitative synthesis on the ecosystem services of cover crops. Earth-Sci. Rev. 2018, 185, 357–373.
3. Jian, J.; Du, X.; Reiter, M.S.; Stewart, R.D. A meta-analysis of global cropland soil carbon changes due to cover cropping. Soil Biol. Biochem. 2020, 143, 107735.
4. Decker, H.L.; Gamble, A.V.; Balkcom, K.S.; Johnson, A.M.; Hull, N.R. Cover crop monocultures and mixtures affect soil health indicators and crop yield in the southeast United States. Soil Sci. Soc. Am. J. 2022, 86, 1312–1326.
5. MacLaren, C.; Swanepoel, P.; Bennett, J.; Wright, J.; Dehnen-Schmutz, K. Cover crop biomass production is more important than diversity for weed suppression. Crop Sci. 2019, 59, 733–748.
6. Osipitan, O.A.; Dille, A.; Assefa, Y.; Radicetti, E.; Ayeni, A.; Knezevic, S.Z. Impact of Cover Crop Management on Level of Weed Suppression: A Meta-Analysis. Crop Sci. 2019, 59, 833–842.
7. Darapuneni, M.K.; Idowu, O.J.; Sarihan, B.; DuBois, D.; Grover, K.; Sanogo, S.; Djaman, K.; Lauriault, L.; Omer, M.; Dodla, S. Growth characteristics of summer cover crop grasses and their relation to soil aggregate stability and wind erosion control in arid southwest. Appl. Eng. Agric. 2021, 37, 11–23.
8. Zuazo, V.H.D.; Pleguezuelo, C.R.R. Soil-Erosion and Runoff Prevention by Plant Covers: A Review. In Sustainable Agriculture; Lichtfouse, E., Navarrete, M., Debaeke, P., Véronique, S., Alberola, C., Eds.; Springer: Dordrecht, The Netherlands, 2009; pp. 785–811.
9. Bai, X.; Huang, Y.; Ren, W.; Coyne, M.; Jacinthe, P.-A.; Tao, B.; Hui, D.; Yang, J.; Matocha, C. Responses of soil carbon sequestration to climate-smart agriculture practices: A meta-analysis. Glob. Change Biol. 2019, 25, 2591–2606.
10. Blanco-Canqui, H. Cover crops and carbon sequestration: Lessons from US studies. Soil Sci. Soc. Am. J. 2022, 86, 501–519.
11. Kuyah, S.; Dietz, J.; Muthuri, C.; Jamnadass, R.; Mwangi, P.; Coe, R.; Neufeldt, H. Allometric equations for estimating biomass in agricultural landscapes: II. Belowground biomass. Agric. Ecosyst. Environ. 2012, 158, 225–234.
12. Qi, Y.L.; Wei, W.; Chen, C.G.; Chen, L.D. Plant root-shoot biomass allocation over diverse biomes: A global synthesis. Glob. Ecol. Conserv. 2019, 18, e00606.
13. Bai, G.; Koehler-Cole, K.; Scoby, D.; Thapa, V.R.; Basche, A.; Ge, Y.F. Enhancing estimation of cover crop biomass using field-based high-throughput phenotyping and machine learning models. Front. Plant Sci. 2024, 14, 1277672.
14. Xia, Y.S.; Guan, K.Y.; Copenhaver, K.; Wander, M. Estimating cover crop biomass nitrogen credits with Sentinel-2 imagery and sites covariates. Agron. J. 2021, 113, 1084–1101.
15. Xu, M.; Lacey, C.G.; Armstrong, S.D. The feasibility of satellite remote sensing and spatial interpolation to estimate cover crop biomass and nitrogen uptake in a small watershed. J. Soil Water Conserv. 2018, 73, 682–692.
16. Bendini, H.D.N.; Fieuzal, R.; Carrere, P.; Clenet, H.; Galvani, A.; Allies, A.; Ceschia, É. Estimating Winter Cover Crop Biomass in France Using Optical Sentinel-2 Dense Image Time Series and Machine Learning. Remote Sens. 2024, 16, 834.
17. Goffart, D.; Curnel, Y.; Planchon, V.; Goffart, J.P.; Defourny, P. Field-scale assessment of Belgian winter cover crops biomass based on Sentinel-2 data. Eur. J. Agron. 2021, 126, 126278.
18. Shi, Y.; Thomasson, J.A.; Murray, S.C.; Pugh, N.A.; Rooney, W.L.; Shafian, S.; Rajan, N.; Rouze, G.; Morgan, C.L.S.; Neely, H.L.; et al. Unmanned aerial vehicles for high-throughput phenotyping and agronomic research. PLoS ONE 2016, 11, e0159781.
19. Acorsi, M.G.; Miranda, F.D.A.; Martello, M.; Smaniotto, D.A.; Sartor, L.R. Estimating Biomass of Black Oat Using UAV-Based RGB Imaging. Agronomy 2019, 9, 344.
20. Kümmerer, R.; Noack, P.O.; Bauer, B. Using High-Resolution UAV Imaging to Measure Canopy Height of Diverse Cover Crops and Predict Biomass. Remote Sens. 2023, 15, 1520.
21. Roth, L.; Streit, B. Predicting cover crop biomass by lightweight UAS-based RGB and NIR photography: An applied photogrammetric approach. Precis. Agric. 2018, 19, 93–114.
22. Yuan, M.; Burjel, J.; Isermann, J.; Goeser, N.; Pittelkow, C. Unmanned aerial vehicle–based assessment of cover crop biomass and nitrogen uptake variability. J. Soil Water Conserv. 2019, 74, 350–359.
23. Kharel, T.P.; Bhandari, A.B.; Mubvumba, P.; Tyler, H.L.; Fletcher, R.S.; Reddy, K.N. Mixed-Species Cover Crop Biomass Estimation Using Planet Imagery. Sensors 2023, 23, 1541.
24. Prabhakara, K.; Hively, W.D.; McCarty, G.W. Evaluating the relationship between biomass, percent groundcover and remote sensing indices across six winter cover crop fields in Maryland, United States. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 88–102.
25. Shafian, S.; Rajan, N.; Schnell, R.; Bagavathiannan, M.; Valasek, J.; Shi, Y.; Olsenholler, J. Unmanned aerial systems-based remote sensing for monitoring sorghum growth and development. PLoS ONE 2018, 13, e0196605.
26. Roth, R.T.; Chen, K.; Scott, J.R.; Jung, J.; Yang, Y.; Camberato, J.J.; Armstrong, S.D. Prediction of Cereal Rye Cover Crop Biomass and Nutrient Accumulation Using Multi-Temporal Unmanned Aerial Vehicle Based Visible-Spectrum Vegetation Indices. Remote Sens. 2023, 15, 580.
27. Holzhauser, K.; Räbiger, T.; Rose, T.; Kage, H.; Kühling, I. Estimation of Biomass and N Uptake in Different Winter Cover Crops from UAV-Based Multispectral Canopy Reflectance Data. Remote Sens. 2022, 14, 4525.
28. Sangjan, W.; McGee, R.J.; Sankaran, S. Optimization of UAV-Based Imaging and Image Processing Orthomosaic and Point Cloud Approaches for Estimating Biomass in a Forage Crop. Remote Sens. 2022, 14, 2396.
29. Elhakeem, A.; Bastiaans, L.; Houben, S.; Couwenberg, T.; Makowski, D.; van der Werf, W. Do cover crop mixtures give higher and more stable yields than pure stands? Field Crops Res. 2021, 270, 108217.
30. Salehin, S.M.U.; Rajan, N.; Mowrer, J.; Casey, K.D.; Tomlinson, P.; Somenahally, A.; Bagavathiannan, M. Cover crops in organic cotton influence greenhouse gas emissions and soil microclimate. Agron. J. 2025, 117, e21735.
31. Dal Lago, P.; Vavlas, N.; Kooistra, L.; De Deyn, G.B. Estimation of nitrogen uptake, biomass, and nitrogen concentration, in cover crop monocultures and mixtures from optical UAV images. Smart Agric. Technol. 2024, 9, 100608.
32. van der Meij, B.; Kooistra, L.; Suomalainen, J.; Barel, J.M.; De Deyn, G.B. Remote sensing of plant trait responses to field-based plant-soil feedback using UAV-based optical sensors. Biogeosciences 2017, 14, 733–749.
33. National Oceanic and Atmospheric Administration. Climate Data Online (CDO). NOAA. 2023. Available online: https://www.ncei.noaa.gov/cdo-web (accessed on 25 May 2023).
34. Soil Survey Staff, Natural Resources Conservation Service, United States Department of Agriculture. Web Soil Survey. Available online: https://websoilsurvey.nrcs.usda.gov/app/WebSoilSurvey.aspx (accessed on 11 April 2021).
35. Hinzmann, T.; Schonberger, J.L.; Pollefeys, M.; Siegwart, R. Mapping on the fly: Real-time 3D dense reconstruction, digital surface map and incremental orthomosaic generation for unmanned aerial vehicles. In Field and Service Robotics; Hutter, M., Siegwart, R., Eds.; Springer Proceedings in Advanced Robotics; Springer: Cham, Switzerland, 2018; pp. 383–396.
36. Esri. ArcGIS Pro (Version 3.2). Environmental Systems Research Institute. 2024. Available online: https://www.esri.com/en-us/arcgis/products/arcgis-pro/overview (accessed on 15 April 2021).
37. Hunt, E.R.; Daughtry, C.S.T.; Eitel, J.U.H.; Long, D.S. Remote Sensing Leaf Chlorophyll Content Using a Visible Band Index. Agron. J. 2011, 103, 1090–1099.
38. Nagler, P.L.; Scott, R.L.; Westenburg, C.; Cleverly, J.R.; Glenn, E.P.; Huete, A.R. Evapotranspiration on western US rivers estimated using the Enhanced Vegetation Index from MODIS and data from eddy covariance and Bowen ratio flux towers. Remote Sens. Environ. 2005, 97, 337–351.
39. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.
40. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309.
41. Chen, J.M. Evaluation of vegetation indices and a modified simple ratio for boreal applications. Can. J. Remote Sens. 1996, 22, 229–242.
42. Xie, Q.; Dash, J.; Huang, W.; Peng, D.; Qin, Q.; Mortimer, H.; Casa, R.; Pignatti, S.; Laneve, G.; Pascucci, S.; et al. Vegetation indices combining the red and red-edge spectral information for leaf area index retrieval. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1482–1493.
43. Richardson, A.J.; Wiegand, C.L. Distinguishing vegetation from soil background information. Photogramm. Eng. Remote Sens. 1977, 43, 1541–1552.
44. Maas, S.J. Linear mixture modeling approach for estimating cotton canopy ground cover using satellite multispectral imagery. Remote Sens. Environ. 2000, 72, 304–308.
45. GeoCue Group Inc. Quick Terrain Modeller, Version 8.4; GeoCue Group Inc.: Madison, AL, USA, 2024.
46. R Core Team. R: A Language and Environment for Statistical Computing. 2024. Available online: https://www.R-project.org (accessed on 15 April 2021).
47. Wei, T.; Simko, V. CORRPLOT: Visualization of a Correlation Matrix (R Package Version 0.95). 2021. Available online: https://github.com/taiyun/corrplot (accessed on 15 April 2021).
48. Hebbali, A. OLSRR: Tools for Building OLS Regression Models (R Package Version 0.5.3). 2020. Available online: https://CRAN.R-project.org/package=olsrr (accessed on 15 April 2021).
49. Fox, J.; Weisberg, S. An R Companion to Applied Regression, 3rd ed.; Sage: Thousand Oaks, CA, USA, 2019.
50. Buchhart, C.; Schmidhalter, U. Daytime and seasonal reflectance of maize grown in varying compass directions. Front. Plant Sci. 2022, 13, 1029612.
51. Zeng, L.J.; Chen, C.C. Using remote sensing to estimate forage biomass and nutrient contents at different growth stages. Biomass Bioenergy 2018, 115, 74–81.
52. Hansen, P.M.; Schjoerring, J.K. Reflectance measurement of canopy biomass and nitrogen status in wheat crops using normalized difference vegetation indices and partial least squares regression. Remote Sens. Environ. 2003, 86, 542–553.
53. Kanke, Y.; Tubaña, B.; Dalen, M.; Harrell, D. Evaluation of red and red-edge reflectance-based vegetation indices for rice biomass and grain yield prediction models in paddy fields. Precis. Agric. 2016, 17, 507–530.
54. Hatfield, J.L.; Prueger, J.H. Value of Using Different Vegetative Indices to Quantify Agricultural Crop Characteristics at Different Growth Stages under Varying Management Practices. Remote Sens. 2010, 2, 562–578.
55. Martin, K.L.; Girma, K.; Freeman, K.W.; Teal, R.K.; Tubaña, B.; Arnall, D.B.; Chung, B.; Walsh, O.; Solie, J.B.; Stone, M.L.; et al. Expression of variability in corn as influenced by growth stage using optical sensor measurements. Agron. J. 2007, 99, 384–389.
56. Barboza, T.O.C.; Ardigueri, M.; Souza, G.F.C.; Ferraz, M.A.J.; Gaudencio, J.R.F.; Santos, A.F.D. Performance of Vegetation Indices to Estimate Green Biomass Accumulation in Common Bean. Agriengineering 2023, 5, 840–854.
57. Gitelson, A.A.; Viña, A.; Arkebauer, T.J.; Rundquist, D.C.; Keydan, G.; Leavitt, B. Remote estimation of leaf area index and green leaf biomass in maize canopies. Geophys. Res. Lett. 2003, 30, 1248.
58. Miller, J.O.; Shober, A.L.; Taraila, J. Assessing relationships of cover crop biomass and nitrogen content to multispectral imagery. Agron. J. 2024, 116, 1417–1427.
59. Kross, A.; McNairn, H.; Lapen, D.; Sunohara, M.; Champagne, C. Assessment of RapidEye vegetation indices for estimation of leaf area index and biomass in corn and soybean crops. Int. J. Appl. Earth Obs. Geoinf. 2015, 34, 235–248.
60. Lu, N.; Zhou, J.; Han, Z.; Li, D.; Cao, Q.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cheng, T. Improved estimation of aboveground biomass in wheat from RGB imagery and point cloud data acquired with a low-cost unmanned aerial vehicle system. Plant Methods 2019, 15, 17.
61. Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of Winter Wheat Above-Ground Biomass Using Unmanned Aerial Vehicle-Based Snapshot Hyperspectral Sensor and Crop Height Improved Models. Remote Sens. 2017, 9, 708.
62. Feng, H.; Pan, L.; Yan, F.; Pei, H.; Wang, H.; Yang, G. Height and biomass inversion of winter wheat based on canopy height model. In Proceedings of the 38th IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Valencia, Spain, 22–27 July 2018; IEEE: Piscataway, NJ, USA, 2018.
63. Zhang, H.; Sun, Y.; Chang, L.; Qin, Y.; Chen, J.; Qin, Y.; Du, J.; Yi, S.; Wang, Y. Estimation of Grassland Canopy Height and Aboveground Biomass at the Quadrat Scale Using Unmanned Aerial Vehicle. Remote Sens. 2018, 10, 851.
64. Jin, X.; Yang, G.; Xu, X.; Yang, H.; Feng, H.; Li, Z.; Shen, J.; Lan, Y.; Zhao, C. Combined Multi-Temporal Optical and Radar Parameters for Estimating LAI and Biomass in Winter Wheat Using HJ and RADARSAR-2 Data. Remote Sens. 2015, 7, 13251–13272.
65. Dube, T.; Shoko, C.; Gara, T.W. Remote sensing of aboveground grass biomass between protected and non-protected areas in savannah rangelands. Afr. J. Ecol. 2021, 59, 687–695.
66. Mutanga, O.; Skidmore, A.K. Narrow band vegetation indices overcome the saturation problem in biomass estimation. Int. J. Remote Sens. 2004, 25, 3999–4014.
67. Shi, H.; Li, L.; Eamus, D.; Huete, A.; Cleverly, J.; Tian, X.; Yu, Q.; Wang, S.; Montagnani, L.; Magliulo, V.; et al. Assessing the ability of MODIS EVI to estimate terrestrial ecosystem gross primary production of multiple land cover types. Ecol. Indic. 2017, 72, 153–164.
68. Jiang, J.; Johansen, K.; Stanschewski, C.S.; Wellman, G.; Mousa, M.A.A.; Fiene, G.M.; Asiry, K.A.; Tester, M.; McCabe, M.F. Phenotyping a diversity panel of quinoa using UAV-retrieved leaf area index, SPAD-based chlorophyll and a random forest approach. Precis. Agric. 2022, 23, 961–983.
69. Corti, M.; Cavalli, D.; Cabassi, G.; Bechini, L.; Pricca, N.; Paolo, D.; Marinoni, L.; Vigoni, A.; Degano, L.; Gallina, P.M. Improved estimation of herbaceous crop aboveground biomass using UAV-derived crop height combined with vegetation indices. Precis. Agric. 2023, 24, 587–606.
70. Biewer, S.; Fricke, T.; Wachendorf, M. Determination of Dry Matter Yield from Legume-Grass Swards by Field Spectroscopy. Crop Sci. 2009, 49, 1927–1936.
71. Pulina, A.; Rolo, V.; Hernández-Esteban, A.; Seddaiu, G.; Roggero, P.P.; Moreno, G. Long-term legacy of sowing legume-rich mixtures in Mediterranean wooded grasslands. Agric. Ecosyst. Environ. 2023, 348, 108397.

| Band Name | Center Wavelength (nm) | Bandwidth (nm) |
|---|---|---|
| Blue | 475 | 32 |
| Green | 560 | 27 |
| Red | 668 | 14 |
| Red Edge | 717 | 12 |
| Near-Infrared | 842 | 57 |

| Vegetation Index | Abbreviation | Band Formula | Reference |
|---|---|---|---|
| Chlorophyll Index Green | CIg | | [37] |
| Chlorophyll Index Red Edge | CIre | | [37] |
| Enhanced Vegetation Index | EVI | | [38] |
| Green Normalized Difference Vegetation Index | GNDVI | | [39] |
| Normalized Difference Vegetation Index | NDVI | | [40] |
| Normalized Difference Red Edge Index | NDRE | | [37] |
| Simple Ratio | SR | | [41] |
| Simple Ratio Red Edge | SRre | | [42] |
| Perpendicular Vegetation Index | PVI | | [43] |
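The Band Formula column did not survive in this copy of the table. As a hedged sketch only, the listed indices can be computed from the five band reflectances using their commonly published definitions; these may differ in detail from the exact forms the authors applied. The function name `vegetation_indices`, the EVI constants (2.5, 6, 7.5, 1), and the PVI soil-line parameters `soil_slope` and `soil_intercept` are assumptions introduced for illustration and are not taken from the article.

```python
import math


def vegetation_indices(blue, green, red, red_edge, nir,
                       soil_slope=1.0, soil_intercept=0.0):
    """Compute common vegetation indices from band reflectances (0-1 scale).

    soil_slope and soil_intercept describe a hypothetical soil line
    (NIR = slope * Red + intercept) needed by PVI; the defaults are
    placeholders, not values from the study.
    """
    return {
        # Chlorophyll indices: ratio of NIR to a reference band, minus one
        "CIg": nir / green - 1.0,
        "CIre": nir / red_edge - 1.0,
        # Enhanced Vegetation Index with the widely used MODIS constants
        "EVI": 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0),
        # Normalized difference family
        "GNDVI": (nir - green) / (nir + green),
        "NDVI": (nir - red) / (nir + red),
        "NDRE": (nir - red_edge) / (nir + red_edge),
        # Simple ratios
        "SR": nir / red,
        "SRre": nir / red_edge,
        # Perpendicular distance of the (Red, NIR) point from the soil line
        "PVI": (nir - soil_slope * red - soil_intercept)
               / math.sqrt(1.0 + soil_slope ** 2),
    }


if __name__ == "__main__":
    # Illustrative reflectances for a green canopy (not measured data)
    for name, value in vegetation_indices(0.04, 0.08, 0.05, 0.20, 0.45).items():
        print(f"{name}: {value:.3f}")
```

In practice the same arithmetic would be applied per pixel to calibrated reflectance rasters; the scalar version is kept here so the definitions stay easy to read.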

| Vegetation Indices | 28 February | 31 March |
|---|---|---|
| Correlation Coefficients | | |
| Blue band | −0.83 | −0.91 |
| Green band | −0.65 | −0.87 |
| Red band | −0.89 | −0.90 |
| Red edge band | 0.62 | −0.55 |
| NIR band | 0.91 | 0.76 |
| NDVI | 0.92 | 0.88 |
| GNDVI | 0.91 | 0.91 |
| NDRE | 0.93 | 0.91 |
| SR | 0.93 | 0.84 |
| SR red edge | 0.93 | 0.90 |
| CI green | 0.92 | 0.89 |
| CI red edge | 0.93 | 0.90 |
| EVI | 0.93 | 0.86 |
| PVI | 0.93 | 0.86 |
| CHM | 0.60 | 0.84 |
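Each entry in the table above is a correlation coefficient for one variable on one date. This excerpt does not state which coefficient was used; assuming Pearson's r, a minimal sketch of the calculation follows. The function name `pearson_r` is hypothetical, and any input arrays would be per-plot predictor values paired with the response variable.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sums of cross-products and squared deviations about the means
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)
```

A negative value, as reported for the visible bands, means the variable decreases as the response increases; the sign carries no information about how useful the variable is as a predictor.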

| Variable | Coefficient | p-Value | Adjusted R² | RMSE |
|---|---|---|---|---|
| 28 February 2022 | | | | |
| Intercept | −169.1 | <0.001 | 0.86 | 242.3 |
| PVI | 14,174 | | | |
| 31 March 2022 | | | | |
| Intercept | −3297.5 | <0.001 | 0.84 | 408.3 |
| NDRE | 18,671.1 | | | |
| CHM | −6679.7 | | | |
| Both dates | | | | |
| Intercept | −694.7 | <0.001 | 0.85 | 345.8 |
| Green | −33,464.3 | | | |
| CHM | −6760.3 | | | |
| SRre | 3160.1 | | | |
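Read block by block, the table above defines one linear model per date grouping. Written out as equations, using only the coefficients listed, the models are as follows. The symbol $\hat{B}$ for predicted biomass is introduced here for illustration, its units are those of the RMSE column (not stated in this excerpt), and the predictor scaling is assumed to match the source data.

$$
\begin{aligned}
\hat{B}_{\text{28 Feb}} &= -169.1 + 14174\,\mathrm{PVI} \\
\hat{B}_{\text{31 Mar}} &= -3297.5 + 18671.1\,\mathrm{NDRE} - 6679.7\,\mathrm{CHM} \\
\hat{B}_{\text{both}} &= -694.7 - 33464.3\,\mathrm{Green} - 6760.3\,\mathrm{CHM} + 3160.1\,\mathrm{SRre}
\end{aligned}
$$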

| Variable | Coefficient | p-Value | Adjusted R² | RMSE |
|---|---|---|---|---|
| Oat | | | | |
| Intercept | −500.9 | <0.001 | 0.86 | 242.4 |
| EVI | 5018.3 | | | |
| Austrian winter pea | | | | |
| Intercept | −5530.1 | <0.001 | 0.71 | 261.7 |
| Red edge | 39,904.1 | | | |
| Turnip | | | | |
| Intercept | 952.1 | <0.001 | 0.95 | 55.1 |
| NIR | −7690.8 | | | |
| GNDVI | 3910.9 | | | |
| CHM | −5773.6 | | | |
| Mixed species | | | | |
| Intercept | −6421.7 | <0.001 | 0.93 | 179.4 |
| NIR | 141,191.7 | | | |
| Blue | 81,897.9 | | | |
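As a hedged illustration of how the species-specific models in the table above could be applied, the sketch below stores the listed coefficients in a dictionary and evaluates the matching linear model for one plot. The coefficients are copied from the table as printed; the names `SPECIES_MODELS` and `predict_biomass`, the dictionary keys, and the assumption that predictors use the same scaling as in the original analysis are all introduced here and are not from the article.

```python
# Coefficients transcribed from the species-specific regression table.
# Key names are hypothetical labels chosen for this sketch.
SPECIES_MODELS = {
    "oat": {"intercept": -500.9, "EVI": 5018.3},
    "austrian_winter_pea": {"intercept": -5530.1, "red_edge": 39904.1},
    "turnip": {"intercept": 952.1, "NIR": -7690.8,
               "GNDVI": 3910.9, "CHM": -5773.6},
    "mixed_species": {"intercept": -6421.7, "NIR": 141191.7,
                      "blue": 81897.9},
}


def predict_biomass(species, predictors):
    """Evaluate the linear model for `species` on a dict of predictor values.

    Raises KeyError if the species is unknown or a required predictor
    is missing from `predictors`.
    """
    model = SPECIES_MODELS[species]
    total = model["intercept"]
    for name, coefficient in model.items():
        if name == "intercept":
            continue
        total += coefficient * predictors[name]
    return total


if __name__ == "__main__":
    # Illustrative EVI value only, not a measurement from the study
    print(predict_biomass("oat", {"EVI": 0.5}))
```

Keeping one model per cover crop type mirrors the structure of the table: each species selects different predictors, so a single shared equation cannot be substituted without refitting.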

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Salehin, S.M.U.; Poudyal, C.; Rajan, N.; Bagavathiannan, M. Cover Crop Types Influence Biomass Estimation Using Unmanned Aerial Vehicle-Mounted Multispectral Sensors. Remote Sens. 2025, 17, 1471. https://doi.org/10.3390/rs17081471

Salehin SMU, Poudyal C, Rajan N, Bagavathiannan M. Cover Crop Types Influence Biomass Estimation Using Unmanned Aerial Vehicle-Mounted Multispectral Sensors. Remote Sensing. 2025; 17(8):1471. https://doi.org/10.3390/rs17081471

Chicago/Turabian Style: Salehin, Sk Musfiq Us, Chiranjibi Poudyal, Nithya Rajan, and Muthukumar Bagavathiannan. 2025. "Cover Crop Types Influence Biomass Estimation Using Unmanned Aerial Vehicle-Mounted Multispectral Sensors" Remote Sensing 17, no. 8: 1471. https://doi.org/10.3390/rs17081471

APA Style: Salehin, S. M. U., Poudyal, C., Rajan, N., & Bagavathiannan, M. (2025). Cover Crop Types Influence Biomass Estimation Using Unmanned Aerial Vehicle-Mounted Multispectral Sensors. Remote Sensing, 17(8), 1471. https://doi.org/10.3390/rs17081471