Optimizing Unmanned Aerial Vehicle LiDAR Data Collection in Cotton Through Flight Settings and Data Processing

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Site Description

2.2. Aerial LiDAR Data Collection and Pre-Processing

2.3. Data Analysis and Validation

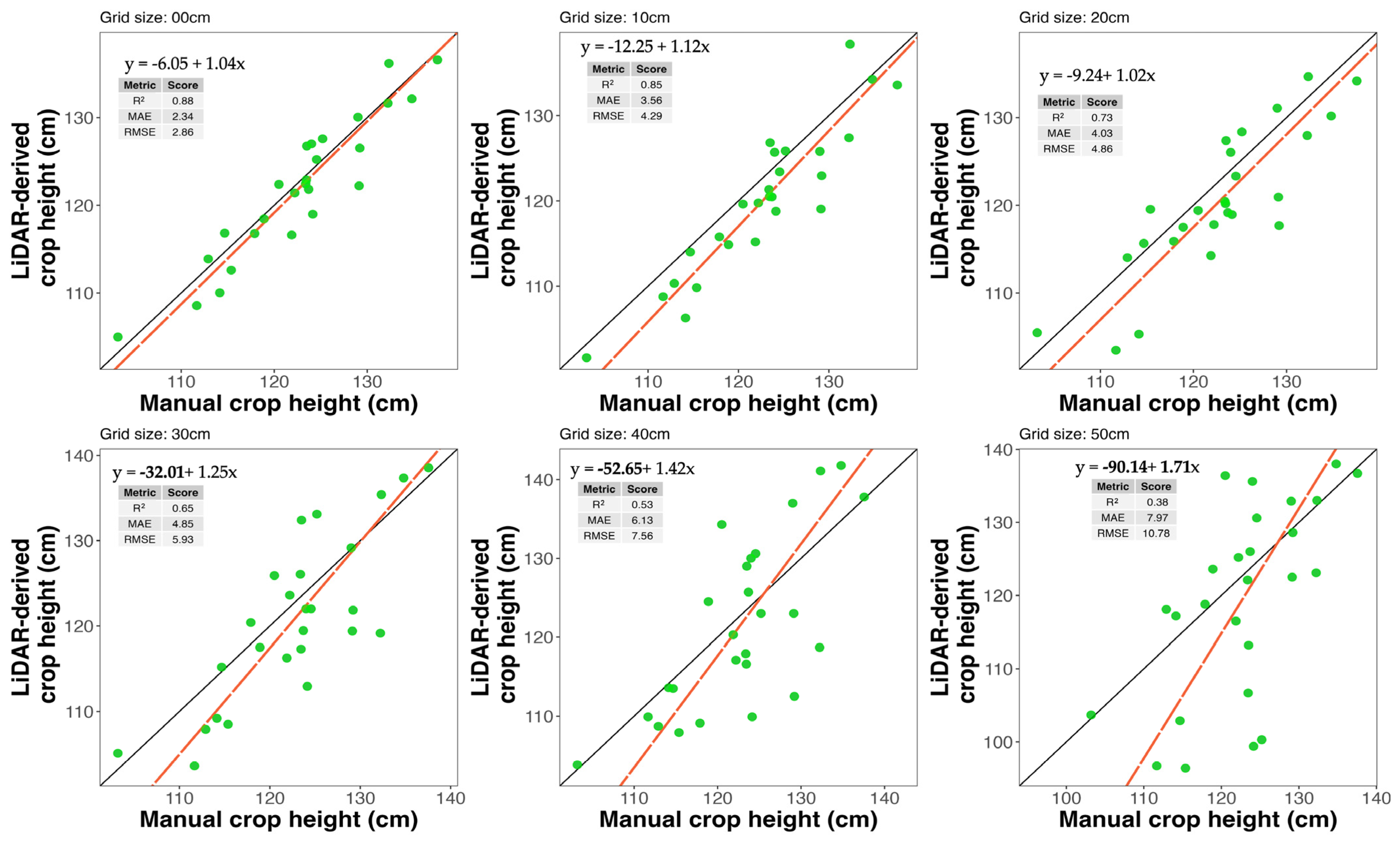

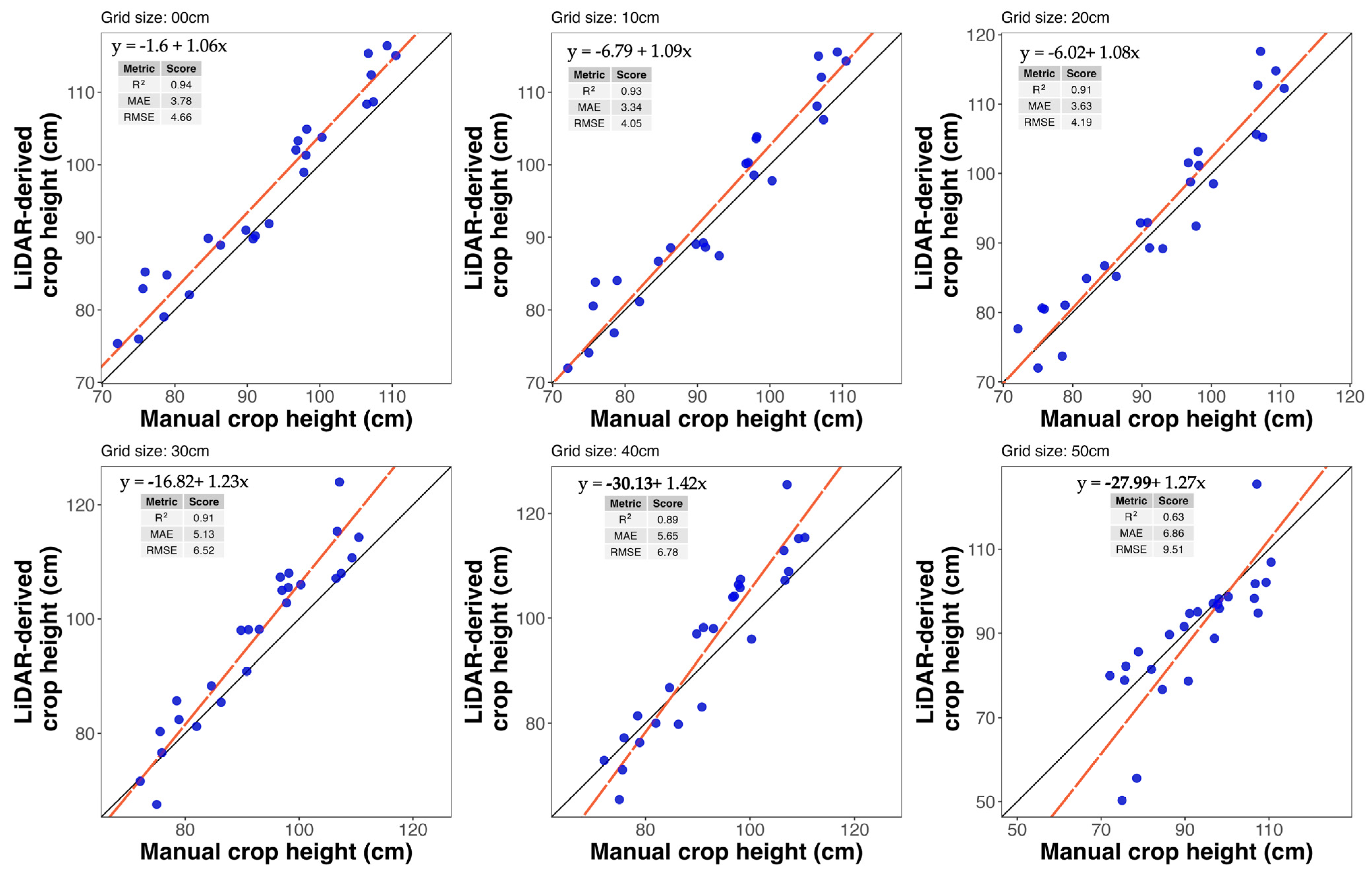

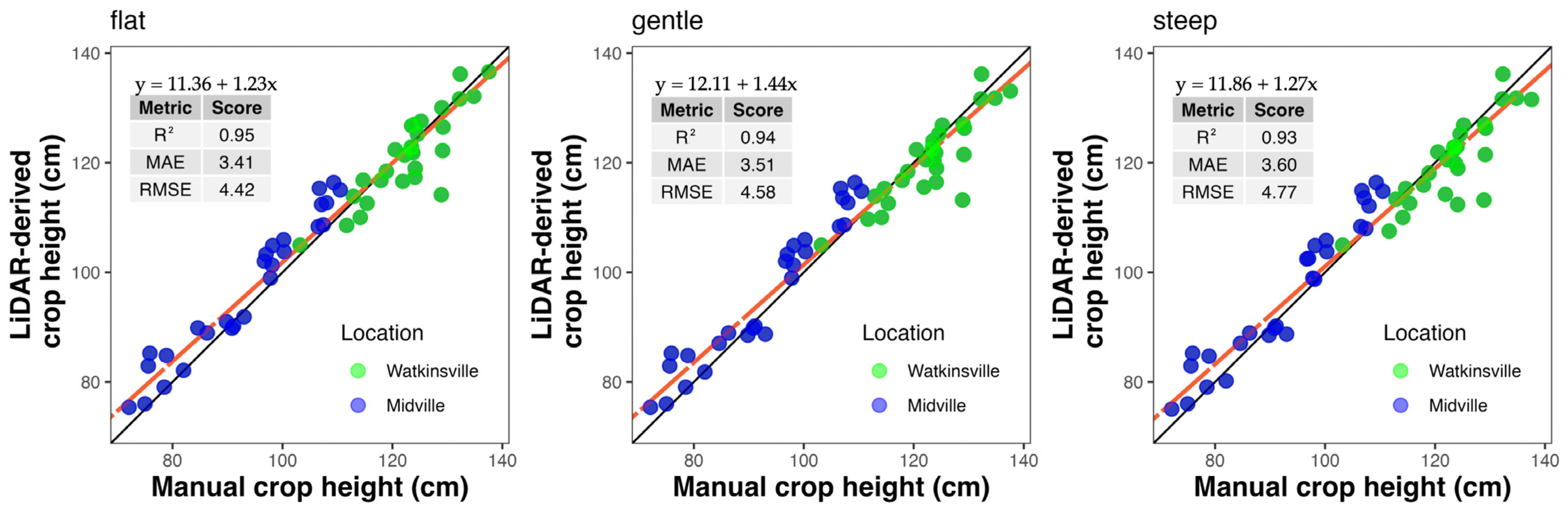

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Grid Sub-Sampling (cm) | Point Cloud Density (points/m²) | DJI Terra Time, 3D LiDAR Point Cloud Modeling (s) | R Time, Elevation Modeling (s) | Total Processing Time (s) | File Size (MB) |
|---|---|---|---|---|---|
| 0 | 5834 | 143 | 63 | 206 | 812 |
| 10 | 539 | 101 | 55 | 156 | 149 |
| 20 | 110 | 83 | 37 | 120 | 35 |
| 30 | 41 | 82 | 21 | 103 | 13 |
| 40 | 20 | 80 | 19 | 99 | 6 |
| 50 | 21 | 77 | 14 | 91 | 4 |
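
The "Grid Sub-Sampling (cm)" column is not defined in this section. One common reading is a voxel-grid filter that keeps a single point per cubic cell of the stated edge length, and the sketch below illustrates that idea in plain Python. The function names, the keep-first-point rule, and the synthetic point cloud are assumptions made for illustration; this is not the DJI Terra implementation or the authors' workflow.

```python
import math
import random


def grid_subsample(points, cell_m):
    """Keep the first point found in each cubic grid cell of side cell_m (metres).

    Hypothetical helper: a cell size of 0 is treated as "no sub-sampling",
    mirroring the 0 cm row of the table.
    """
    if cell_m <= 0:
        return list(points)
    kept = {}
    for x, y, z in points:
        # Integer cell index along each axis
        key = (
            math.floor(x / cell_m),
            math.floor(y / cell_m),
            math.floor(z / cell_m),
        )
        # setdefault stores only the first point that lands in a cell
        kept.setdefault(key, (x, y, z))
    return list(kept.values())


def density_per_m2(points, area_m2):
    """Point density expressed per square metre of ground area."""
    return len(points) / area_m2


# Synthetic 1 m x 1 m plot with heights between 0 and 1 m (assumed data)
random.seed(0)
cloud = [(random.random(), random.random(), random.random()) for _ in range(5000)]

for cell_cm in (0, 10, 20, 30, 40, 50):
    thinned = grid_subsample(cloud, cell_cm / 100.0)
    print(cell_cm, "cm ->", density_per_m2(thinned, 1.0), "points/m2")
```

Other voxel filters keep the cell centroid or the highest point rather than the first point encountered; that choice could matter when canopy height is the quantity of interest.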

| Grid Sub-Sampling (cm) | Point Cloud Density (points/m²) | DJI Terra Time, 3D LiDAR Point Cloud Modeling (s) | R Time, Elevation Modeling (s) | Total Processing Time (s) | File Size (MB) |
|---|---|---|---|---|---|
| 0 | 2637 | 127 | 56 | 183 | 718 |
| 10 | 362 | 77 | 40 | 117 | 99 |
| 20 | 87 | 70 | 26 | 96 | 24 |
| 30 | 34 | 70 | 17 | 87 | 9 |
| 40 | 17 | 67 | 13 | 80 | 5 |
| 50 | 9 | 67 | 11 | 78 | 3 |
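
To make the trade-off in the two tables easier to read, the following sketch computes the percentage reduction in total processing time and file size at each grid size, relative to the 0 cm (no sub-sampling) row. The values are copied from the tables; the names `TABLE_1`, `TABLE_2`, `percent_reduction`, and `summarize` are hypothetical and used only for this illustration.

```python
# Rows copied from the two tables: (grid size in cm, total time in s, file size in MB)
TABLE_1 = [(0, 206, 812), (10, 156, 149), (20, 120, 35),
           (30, 103, 13), (40, 99, 6), (50, 91, 4)]
TABLE_2 = [(0, 183, 718), (10, 117, 99), (20, 96, 24),
           (30, 87, 9), (40, 80, 5), (50, 78, 3)]


def percent_reduction(baseline, value):
    """Reduction of value relative to baseline, in percent."""
    return 100.0 * (baseline - value) / baseline


def summarize(rows):
    """Return (grid_cm, time_reduction_pct, size_reduction_pct) for each sub-sampled row.

    The first row is assumed to be the 0 cm baseline.
    """
    _, base_time, base_size = rows[0]
    return [
        (grid, percent_reduction(base_time, t), percent_reduction(base_size, s))
        for grid, t, s in rows[1:]
    ]


for name, rows in (("Table 1", TABLE_1), ("Table 2", TABLE_2)):
    print(name)
    for grid, time_pct, size_pct in summarize(rows):
        print(f"  {grid:>2} cm: time -{time_pct:.1f}%, file size -{size_pct:.1f}%")
```

Read this way, the first table shows total processing time falling by roughly 24% at 10 cm and by exactly 50% at 30 cm, while file size already drops by more than 80% at 10 cm. Most of the storage saving therefore arrives at the smallest grid size, and the time saving accumulates more gradually.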

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bhattarai, A.; Scarpin, G.J.; Jakhar, A.; Porter, W.; Hand, L.C.; Snider, J.L.; Bastos, L.M. Optimizing Unmanned Aerial Vehicle LiDAR Data Collection in Cotton Through Flight Settings and Data Processing. Remote Sens. 2025, 17, 1504. https://doi.org/10.3390/rs17091504
