Abstract

The Global Navigation Satellite System (GNSS)/Inertial Navigation System (INS) integrated navigation technology is widely utilized in vehicle positioning. However, in complex environments such as urban canyons or tunnels, GNSS signal outages due to obstructions lead to rapid error accumulation in INS-only operation, with error growth rates reaching 10–50 m per min. To enhance positioning accuracy during GNSS outages, this paper proposes an error compensation method based on CNN-BiLSTM-Attention. When GNSS signals are available, a mapping model is established between specific force, angular velocity, speed, heading angle, and GNSS position increments. During outages, this model, combined with an improved Kalman filter, predicts pseudo-GNSS positions and their covariances in real-time to compute an aided navigation solution. The improved Kalman filter integrates Sage–Husa adaptive filtering and strong tracking Kalman filtering, dynamically estimating noise covariances to enhance robustness and address the challenge of unknown pseudo-GNSS covariances. Real-vehicle experiments conducted in a city in Jiangsu Province simulated a 120 s GNSS outage, demonstrating that the proposed method delivers a stable navigation solution with a post-convergence positioning accuracy of 0.7275 m root mean square error (RMSE), representing a 93.66% improvement over pure INS. Moreover, compared to other deep learning models (e.g., LSTM), this approach exhibits faster convergence and higher precision, offering a reliable solution for vehicle positioning in GNSS-denied scenarios.

1. Introduction

The GNSS serves as a cornerstone of modern navigation and positioning technologies [1], providing high-precision position and velocity information with non-accumulating measurement errors, alongside all-weather, continuous, and global coverage capabilities. This makes the GNSS indispensable across applications ranging from mobile devices to aerospace. However, in highly dynamic and complex environments—such as urban canyons, tunnels, or adverse weather conditions—GNSS signals are susceptible to electromagnetic interference and multipath effects, posing significant challenges to real-time, accurate positioning [2]. These limitations underscore the need for robust complementary navigation systems to enhance performance under demanding conditions.

In vehicular navigation, the Micro-Electro-Mechanical System–Inertial Navigation System (MEMS-INS) has become widely adopted due to its low cost, compact size, and ease of integration. MEMS-INS plays a pivotal role in vehicle positioning, attitude control, and autonomous driving. Its core component, the Inertial Measurement Unit (IMU), comprises accelerometers and gyroscopes, with positioning accuracy reliant on key performance metrics such as scale factor errors, bias stability and random walk. Based on these metrics, IMUs are categorized into consumer-grade, navigation-grade, and tactical-grade levels. Compared to GNSS, the INS delivers high-frequency navigation data and can continuously compute a vehicle’s position, offering high-precision positioning in the short term [3]. However, the INS derives navigation information through integration, leading to error accumulation in position, velocity, and attitude over time, necessitating external calibration. Consequently, standalone low-cost INSs are unsuitable for long-term, high-precision positioning in applications like vehicle navigation. To address this, integrating the complementary strengths of GNSS and INS enables vehicular positioning systems with high accuracy, interference resistance, and frequent updates [4].
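The error accumulation described above can be illustrated with a minimal sketch. It assumes a single constant accelerometer bias and ignores attitude errors, gravity coupling, and noise; the function name `position_drift` and the bias value of 0.01 m/s² are illustrative assumptions, not values from this study.

```python
def position_drift(accel_bias, duration_s, rate_hz=100):
    """Integrate a constant accelerometer bias twice (Euler steps).

    Returns the accumulated position error in metres. Illustrative only:
    attitude errors, gravity coupling, and noise are ignored.
    """
    dt = 1.0 / rate_hz
    velocity_error = 0.0
    position_error = 0.0
    for _ in range(int(round(duration_s * rate_hz))):
        velocity_error += accel_bias * dt      # first integration: velocity error
        position_error += velocity_error * dt  # second integration: position error
    return position_error


# Assumed bias of 0.01 m/s^2, integrated over 60 s at 100 Hz.
drift_60s = position_drift(accel_bias=0.01, duration_s=60.0)
closed_form = 0.5 * 0.01 * 60.0 ** 2  # continuous-time result, 0.5 * b * t^2
```

The numerical result stays close to the closed-form value of 0.5·b·t², which shows that the position error grows quadratically with time when no external correction is available.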

Nevertheless, GNSS signals have limited penetration capabilities and are frequently disrupted in environments such as urban streets, tunnels, under bridges, or dense forests due to obstructions. When the number of visible satellites is insufficient, GNSS receivers fail to provide valid positioning solutions [5]. In such scenarios, GNSS/INS integrated navigation technology must rely entirely on INSs for independent operation. In loosely coupled architectures, the absence of GNSS data impedes effective state updates, resulting in rapid error divergence and a significant decline in accuracy. In contrast, tightly coupled systems, which directly fuse raw GNSS observations (e.g., pseudorange and pseudorange rate) with INS-derived information, can maintain functionality with partial satellite availability [6]. However, when GNSS signals are completely unavailable, even tightly coupled systems struggle to deliver accurate positioning [7]. This challenge, particularly critical in high-precision navigation scenarios like autonomous driving, highlights the urgent need for innovative error compensation strategies during GNSS outages.

To address the rapid divergence of positioning errors during GNSS outages, existing compensation methods can be broadly classified into three categories. The first approach incorporates additional sensors, such as wheel odometry, visual sensors (cameras), or LiDAR, into GNSS/INS systems to impose motion constraints, thereby enhancing positioning accuracy [8]. While effective, this method increases data fusion complexity and significantly raises hardware costs and computational demands. The second category leverages environmental perception and map-matching techniques [9], utilizing pre-built high-precision maps or real-time environmental features (e.g., road signs, lane markings) to aid positioning in GNSS-denied conditions. However, its applicability is limited by the quality of environmental data and the map update frequency, and it incurs substantial computational overhead. The third category focuses on advanced data processing techniques, encompassing traditional filtering methods and emerging deep learning-based algorithms. Among traditional methods, Kalman filtering (KF) [10] and its extensions (e.g., the extended Kalman filter (EKF) [11], robust Kalman filter (RKF) [12], robust adaptive Kalman filter (RAKF) [13], H-infinity filtering [14], and square-root unscented Kalman filtering [15]) are widely used for multi-sensor data fusion to improve interference resistance. These methods perform well during short-term GNSS outages but struggle to maintain accurate estimates during prolonged interruptions, because they rely on observation data and statistical modeling, which falters as non-Gaussian noise accumulates.

In recent years, the rapid advancement of artificial intelligence has positioned neural networks as a promising tool for error compensation in integrated navigation systems, owing to their superior nonlinear modeling and pattern recognition capabilities [16]. For instance, Sharaf and Noureldin [17] introduced a Radial Basis Function (RBF) neural network to predict filter outputs during GNSS outages, enabling effective feedback correction of INS-derived navigation parameters. Tan et al. [18] improved the RBF neural network using a genetic algorithm, while Gao et al. [19] enhanced the Backpropagation (BP) neural network for online state equation correction in GNSS/INS integration. Zhang and Wang [20] employed an improved Multilayer Perceptron (MLP) neural network to predict pseudo-GNSS positions, with experimental results showing superior performance over traditional models. Despite these achievements, such static models struggle to capture the dynamic characteristics of time-series data, leading to suboptimal performance during prolonged GNSS outages. To address this, Dai et al. [21] introduced a Recurrent Neural Network (RNN) to predict and correct INS errors, though RNNs are limited by vanishing gradients. Long Short-Term Memory (LSTM) networks, which correlate current and past information to address long-term dependencies, were employed by Zhang [22] to predict positioning during GNSS outages, demonstrating improved accuracy. However, LSTM models still fall short in feature extraction from extremely long sequences, temporal modeling, and attention allocation, and their lengthy training times hinder real-time applications [23].

To bridge GNSS outages in integrated navigation systems, this paper proposes an error compensation method based on CNN-BiLSTM-Attention. The primary contributions are as follows:

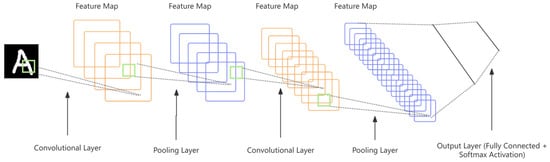

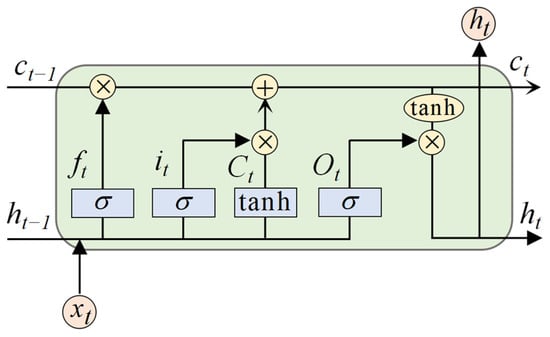

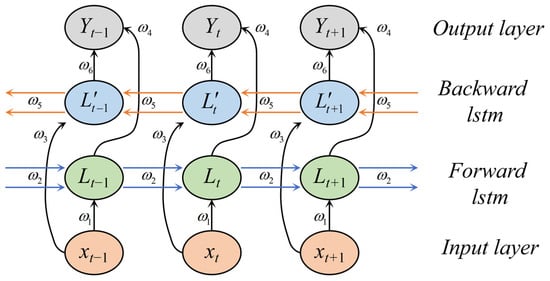

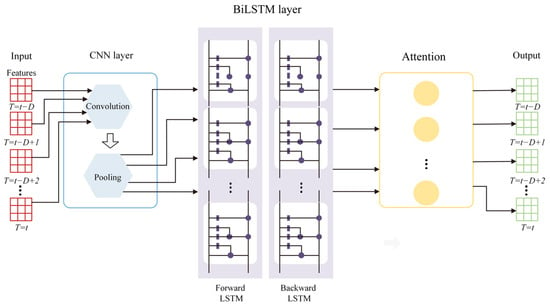

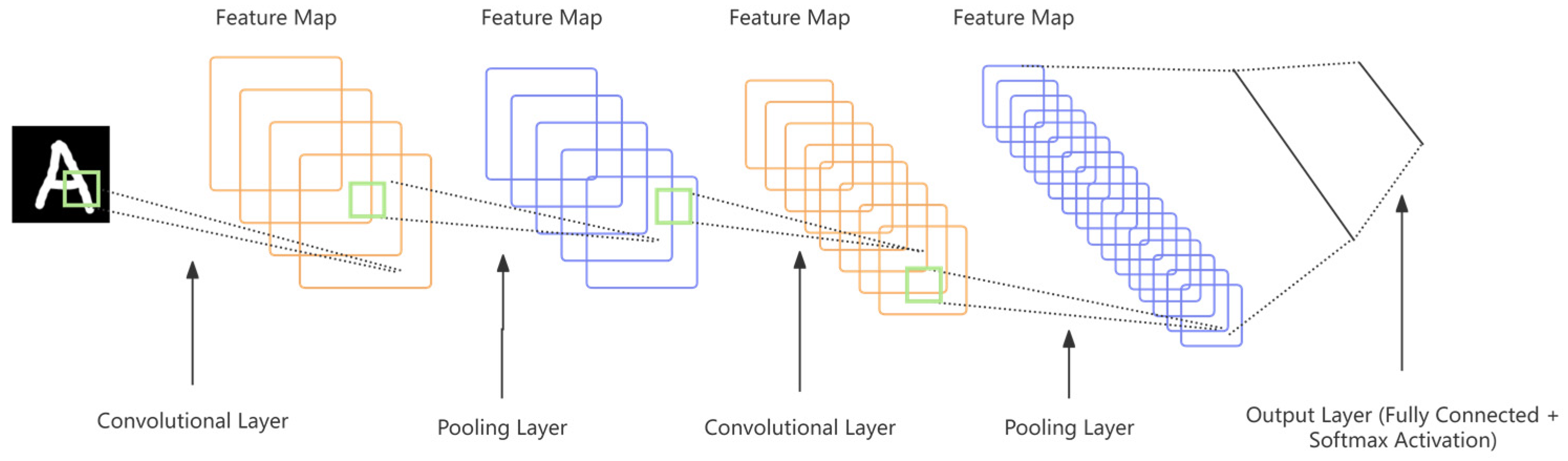

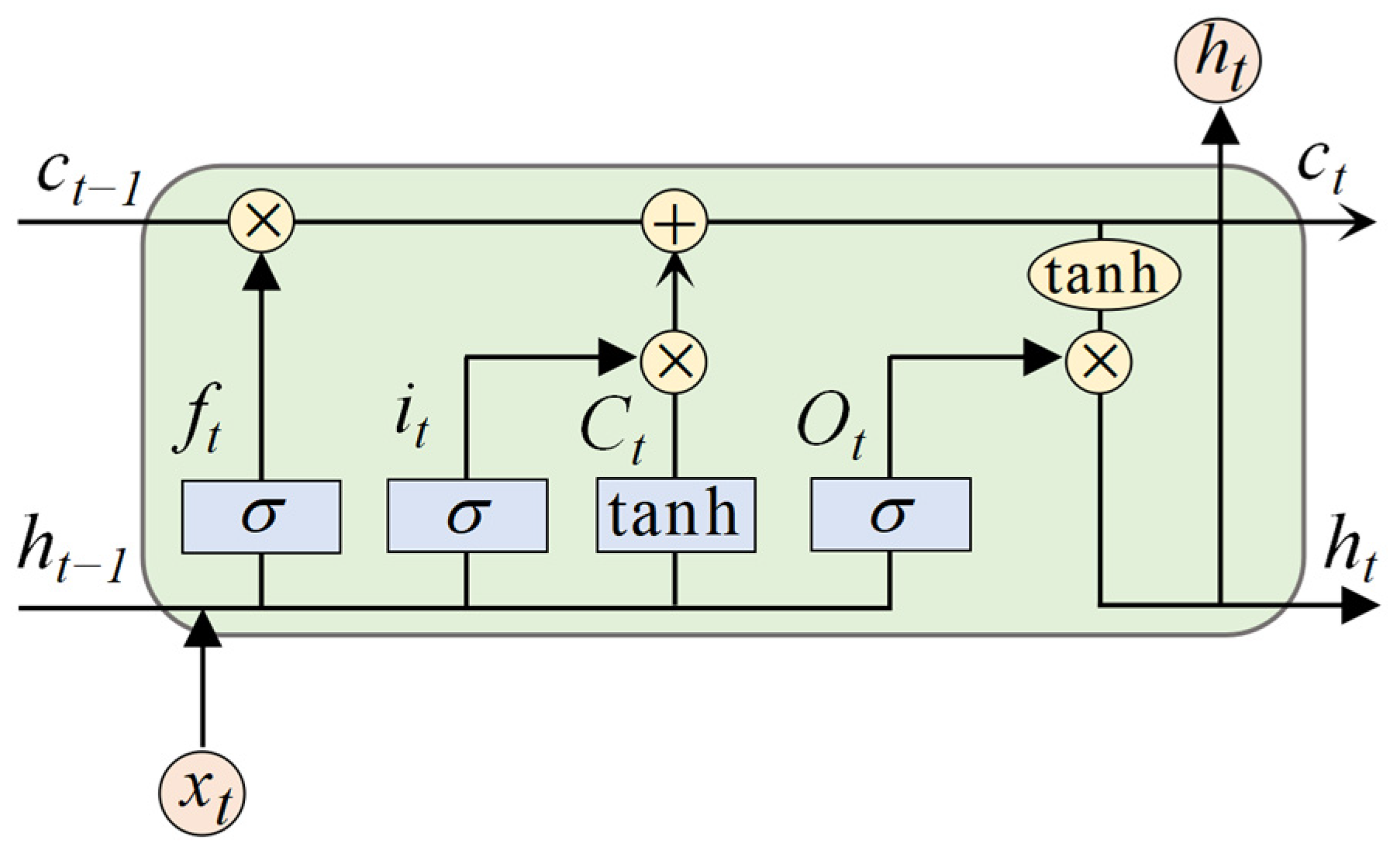

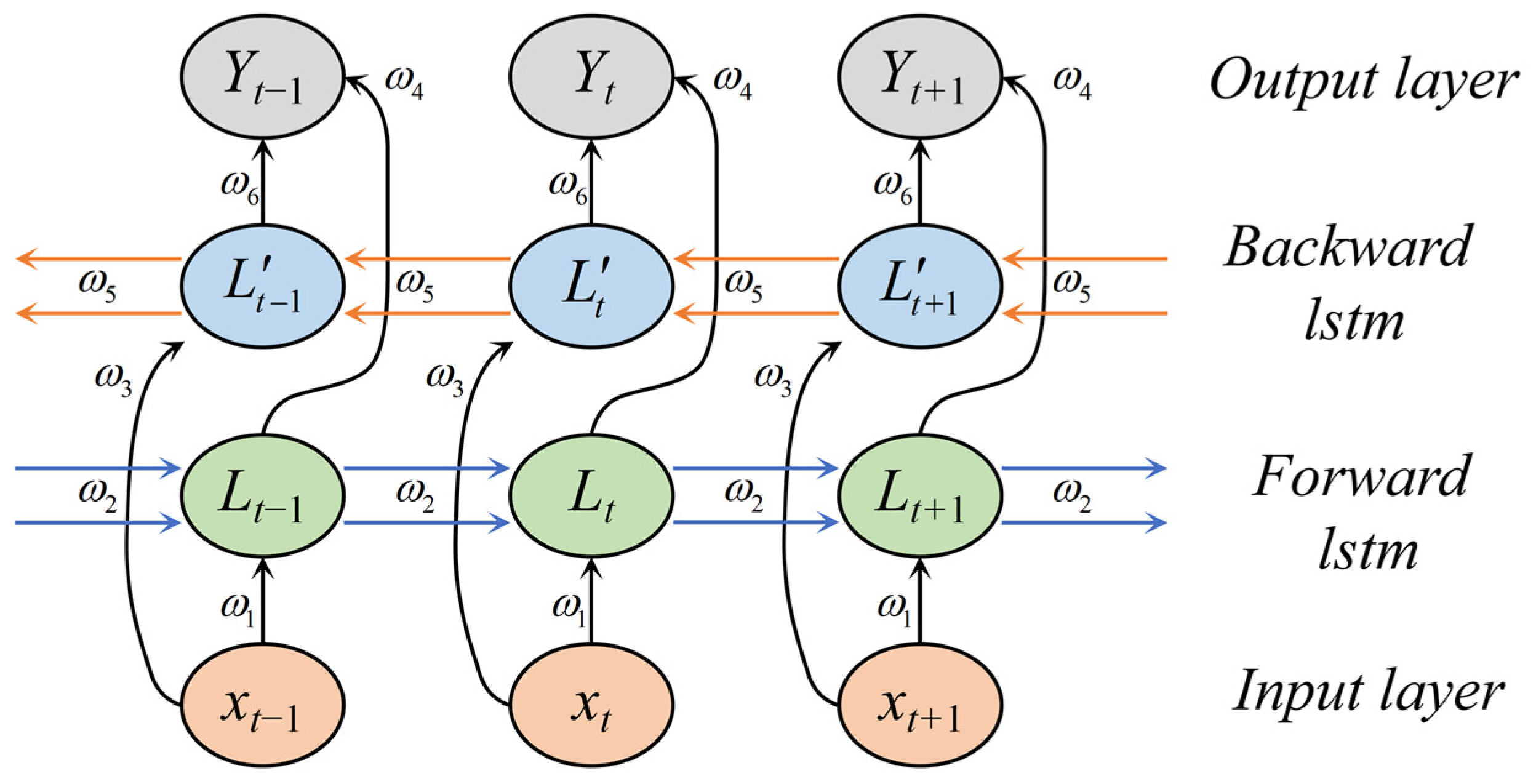

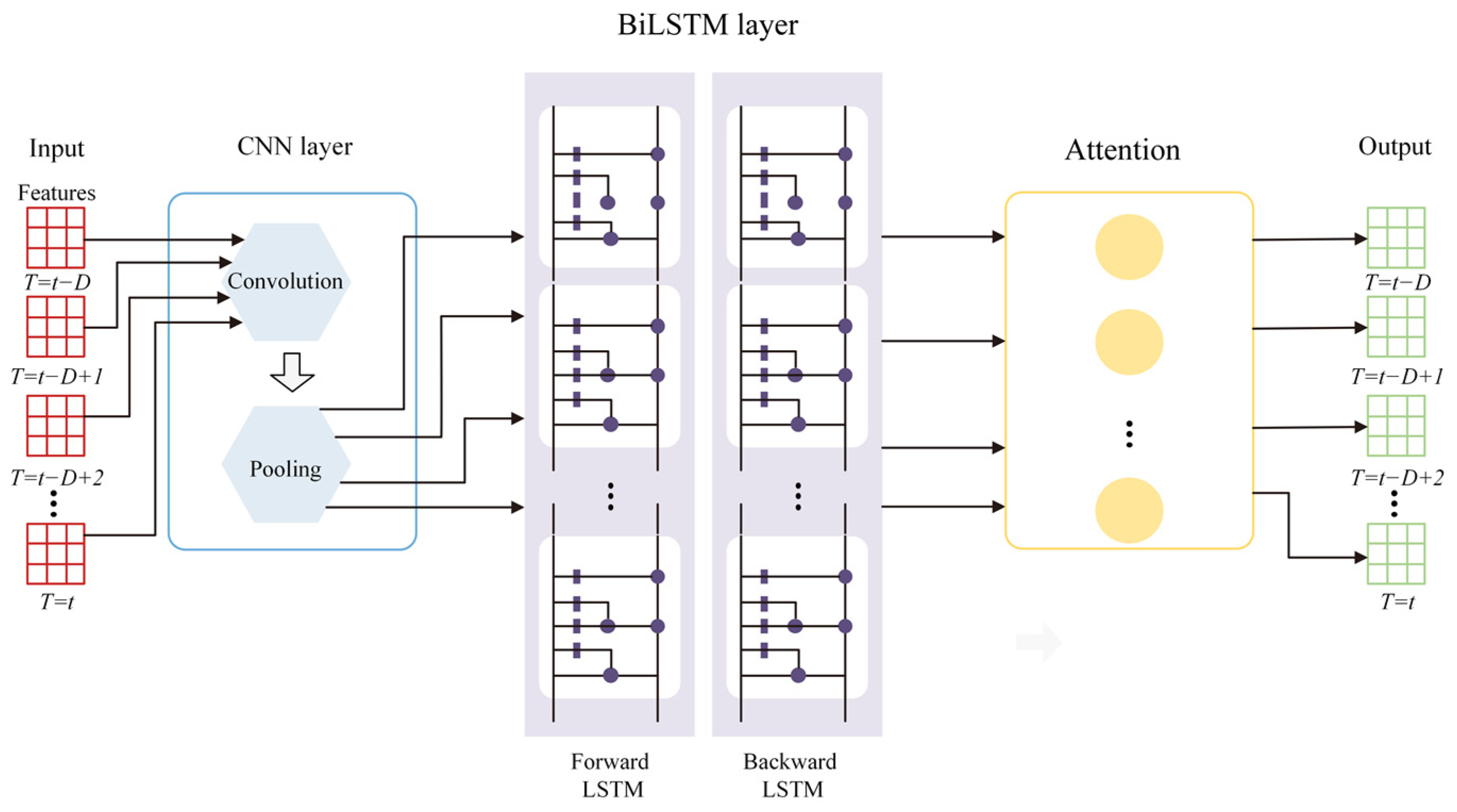

Model Design: A novel CNN-BiLSTM-Attention model is proposed, integrating Convolutional Neural Networks to extract spatial features from IMU data, Bidirectional Long Short-Term Memory to capture long-term dependencies in time-series data, and an Attention Mechanism to enhance focus on critical time steps, thereby improving the prediction accuracy of pseudo-GNSS position increments. Compared to traditional neural networks, this model more effectively handles long time-series data, reducing error accumulation and enhancing navigation continuity.
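The role of the attention stage can be sketched in isolation. The snippet below is a generic softmax attention pooling over a sequence of hidden states (such as BiLSTM outputs), written in plain Python; it is not the layer used in this paper, and the names `attention_pool`, `hidden_states`, and `score_weights` are hypothetical. In a trained model the scoring vector would be learned rather than fixed by hand.

```python
import math


def attention_pool(hidden_states, score_weights):
    """Softmax-weighted sum of per-time-step vectors.

    hidden_states: list of T vectors (e.g. BiLSTM outputs), each of length D.
    score_weights: length-D scoring vector (learned in practice, fixed here).
    Returns (attention weights over the T steps, pooled context vector).
    """
    scores = [
        math.tanh(sum(h_j * w_j for h_j, w_j in zip(h, score_weights)))
        for h in hidden_states
    ]
    peak = max(scores)                           # subtract max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]           # weights are positive and sum to 1
    dim = len(hidden_states[0])
    context = [
        sum(a * h[j] for a, h in zip(alphas, hidden_states)) for j in range(dim)
    ]
    return alphas, context


# Three time steps with two features each; the second step scores highest.
alphas, context = attention_pool(
    [[0.1, 0.0], [0.9, 0.2], [0.2, 0.1]], score_weights=[2.0, 0.0]
)
```

Time steps with larger scores receive larger weights, so the pooled vector is dominated by the steps the scoring function considers most informative.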

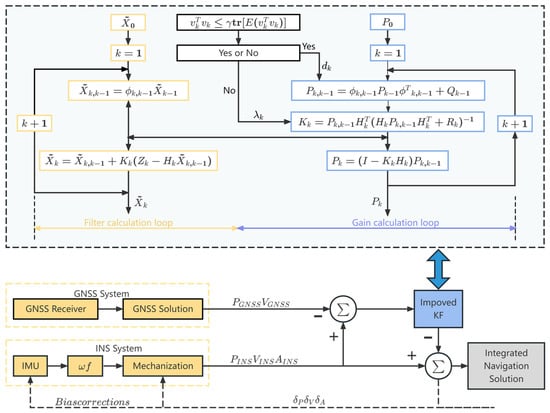

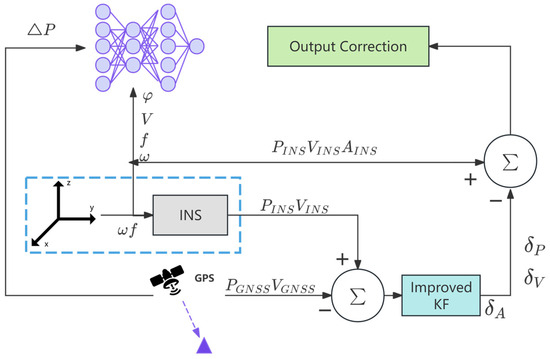

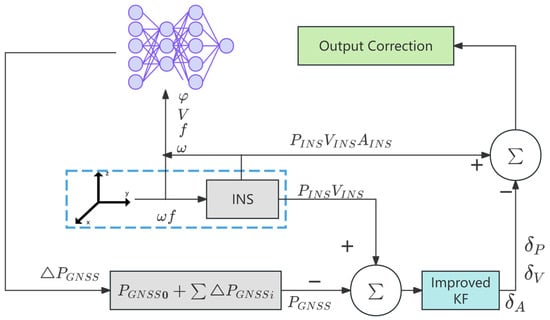

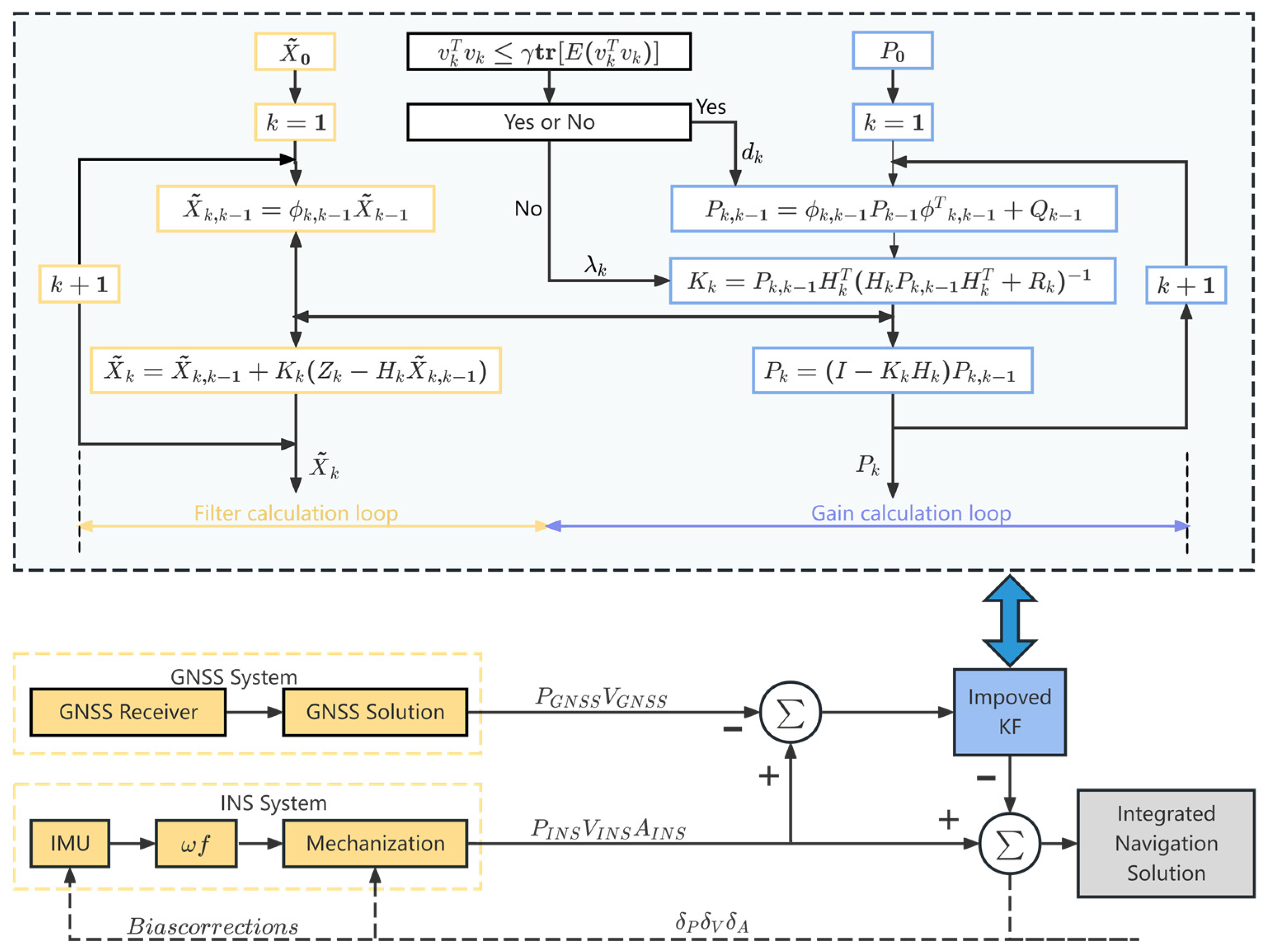

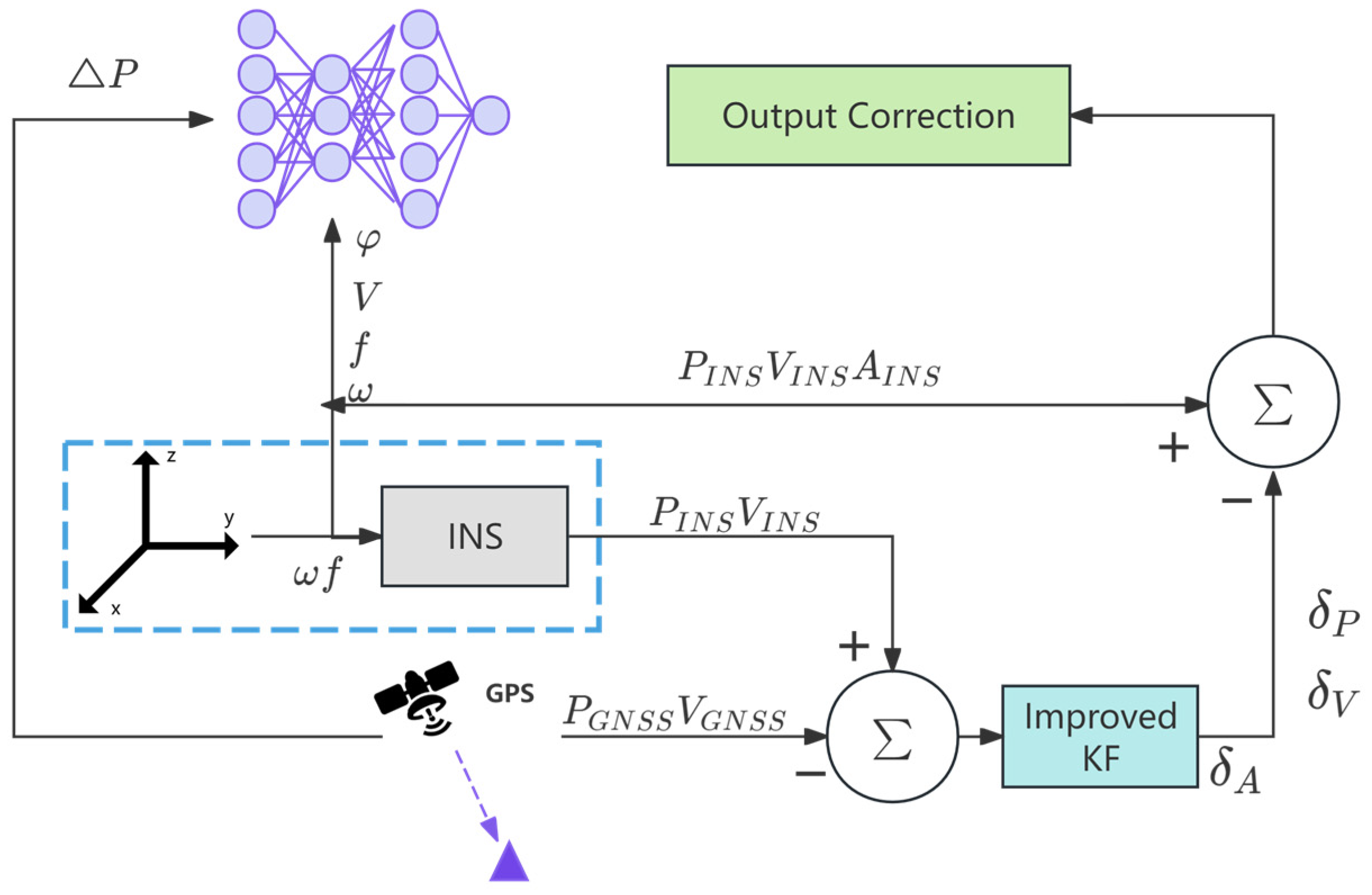

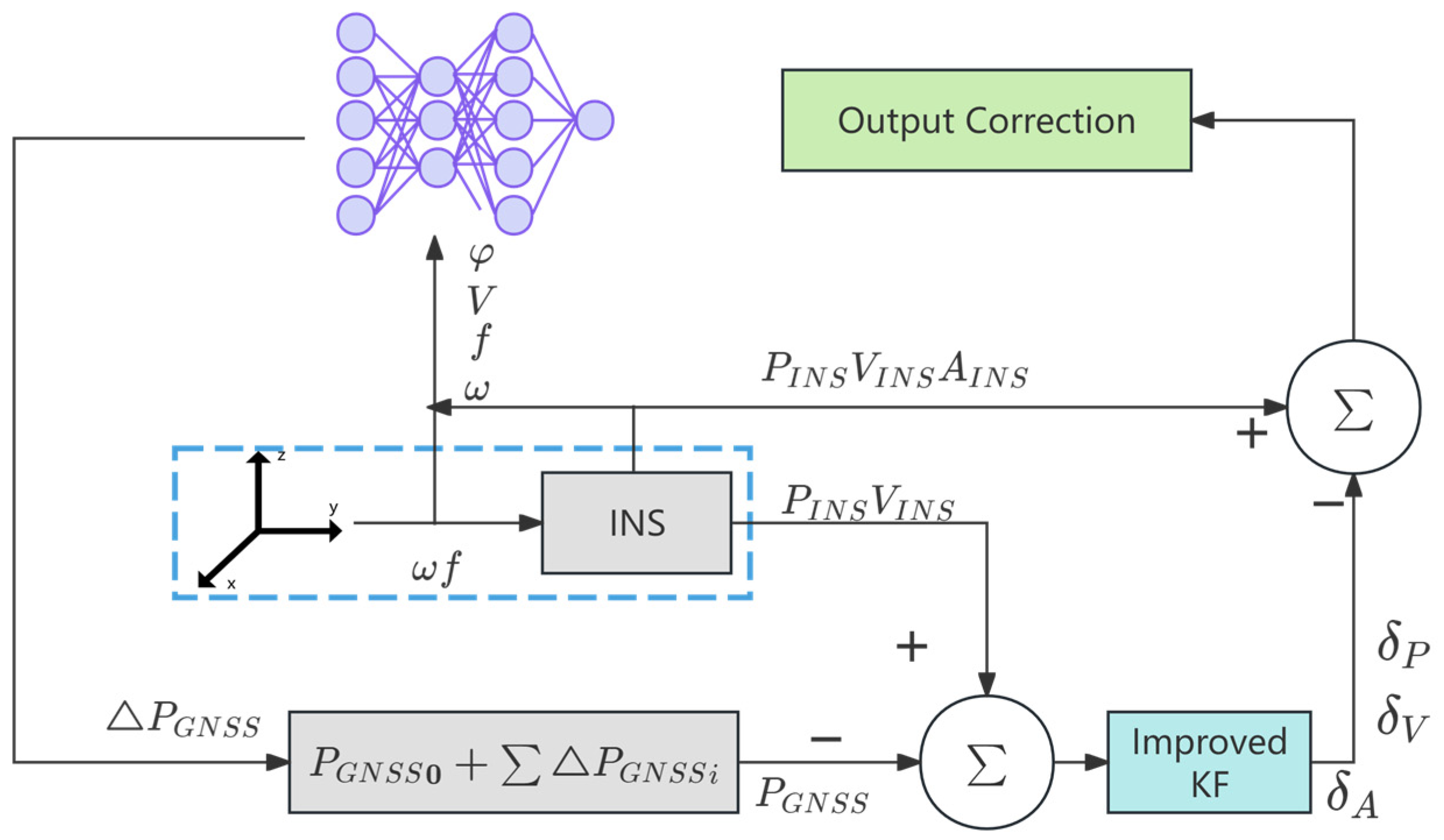

Data Fusion and Model Training: An adaptive strong tracking filter is designed, combining Sage–Husa adaptive filtering and strong tracking Kalman filtering to dynamically estimate noise covariances and adjust filtering strategies, addressing the challenge of unknown pseudo-GNSS position covariances while enhancing robustness and stability. When GNSS signals are available, an improved Kalman filter is employed to deeply fuse GNSS and INS data, generating high-precision navigation solutions that train the CNN-BiLSTM-Attention model to establish a nonlinear mapping between specific force, angular velocity, speed, heading angle, and GNSS position increments. During GNSS outages, the trained model generates pseudo-GNSS signals to aid the integrated navigation system, effectively mitigating rapid positioning error divergence.
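The two ingredients named above can be sketched for a scalar state. The snippet uses commonly cited textbook forms of the Sage–Husa measurement-noise recursion and of the strong-tracking fading factor, with a random-walk state model (state transition and observation both equal to 1). How this paper combines the two is specified in Section 2, so the function names, the parameters `b`, `rho`, `beta`, `q`, and the clamping floor here are all assumptions for illustration.

```python
def sage_husa_r(r_prev, innovation, p_pred, k, b=0.98, floor=1e-6):
    """Sage-Husa style recursive estimate of a scalar measurement-noise variance."""
    d = (1.0 - b) / (1.0 - b ** (k + 1))   # weight of the newest sample; 1 at k = 0
    r_new = (1.0 - d) * r_prev + d * (innovation ** 2 - p_pred)
    return max(r_new, floor)               # clamp: the raw estimate can go non-positive


def fading_factor(v, p_prior, q, r, beta=1.0):
    """Strong-tracking fading factor (>= 1) for a scalar state with Phi = H = 1."""
    return max(1.0, (v - q - beta * r) / p_prior)


def filter_step(x, p, r, v_prev, z, k, q=0.01, rho=0.95):
    """One predict/update cycle for a scalar random-walk state."""
    innovation = z - x                     # predicted state equals previous state
    # Smoothed innovation power used by the strong-tracking test.
    v = innovation ** 2 if k == 0 else (rho * v_prev + innovation ** 2) / (1.0 + rho)
    p_pred = fading_factor(v, p, q, r) * p + q   # inflate covariance on large innovations
    r = sage_husa_r(r, innovation, p_pred, k)    # adapt the measurement-noise variance
    gain = p_pred / (p_pred + r)
    return x + gain * innovation, (1.0 - gain) * p_pred, r, v


# Hypothetical measurement sequence with an abrupt jump at the fifth epoch.
x, p, r, v = 1.0, 0.5, 1.0, 0.0
for k, z in enumerate([1.0, 1.2, 0.9, 1.1, 5.0, 5.1, 4.9]):
    x, p, r, v = filter_step(x, p, r, v, z, k)
```

The fading factor enlarges the predicted covariance when innovations are larger than the current statistics explain, while the noise recursion updates the measurement variance from the same innovations. The floor on the variance is needed because the innovation-based estimate is not guaranteed to stay positive.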

Experimental Validation: The performance of the proposed method is rigorously evaluated through real-vehicle experiments. The results demonstrate that, during GNSS outages, the CNN-BiLSTM-Attention method provides stable, high-precision navigation solutions, significantly outperforming standalone INS and other deep learning approaches, while exhibiting faster convergence and higher prediction reliability.

The organization of this paper is outlined as follows: Section 2 presents conventional Kalman filtering techniques for GNSS/INS integrated navigation, along with the enhanced Kalman filter proposed in this study. Section 3 elaborates on the architecture and implementation details of the CNN-BiLSTM-Attention model. Section 4 provides a comprehensive evaluation of the proposed error compensation approach through real-vehicle experiments. Finally, Section 5 summarizes the key findings and concludes the study.

4. Experiments and Analysis

To further validate the compensation performance of the proposed method for GNSS/INS integrated navigation during GNSS signal outages, a real-vehicle experiment was conducted in Jiangsu Province, China. The experimental setup comprised a SPAN-CPT inertial navigation system (IMU sampling rate: 100 Hz) and a Leica 1200 RTK-GNSS receiver (GNSS update rate: 1 Hz). The detailed specifications of the IMU sensor are provided in Table 2. In this study, the high-precision RTK-GNSS measurements were used as the reference trajectory, and the proposed algorithm was evaluated on post-processed navigation data during simulated GNSS outages.

Table 2.

Specifications of the IMU sensor.

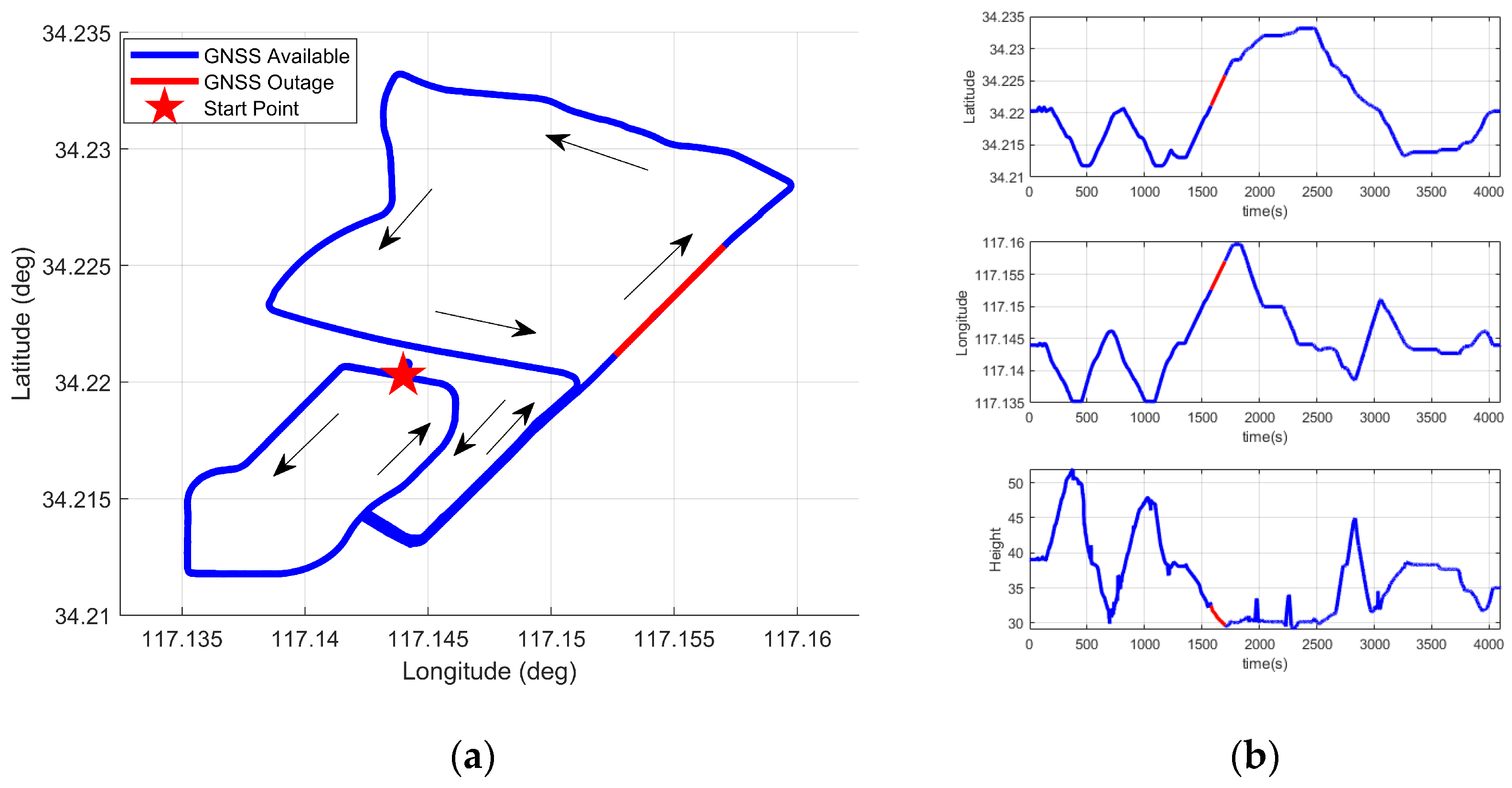

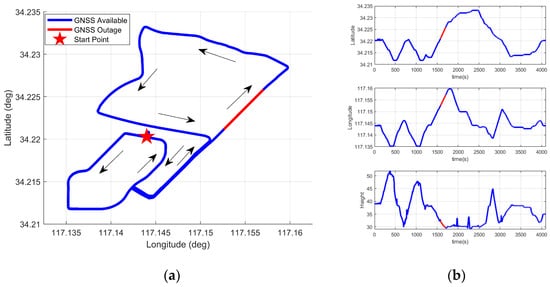

The vehicle traveled for one hour; the driving trajectory is shown in Figure 8. To ensure the integrity of the experimental data, the experiment was conducted in an open area, and GNSS signal interruptions were simulated in the later stage by manually removing GNSS observation data. In the figure, the blue solid line indicates the segments with available GNSS signals, the red solid line represents the prediction segment, and the red pentagram denotes the starting point of the vehicle. A 100 s data segment starting at the 23rd minute was used as training data. The GNSS signal was cut off at 26 min 20 s to simulate a 120 s GNSS outage. Figure 9 shows the positioning errors in the north, east, and down directions under different neural network algorithms.

Figure 8.

Vehicle test trajectory. (a) Vehicle reference trajectory. (b) Vehicle position trajectory.

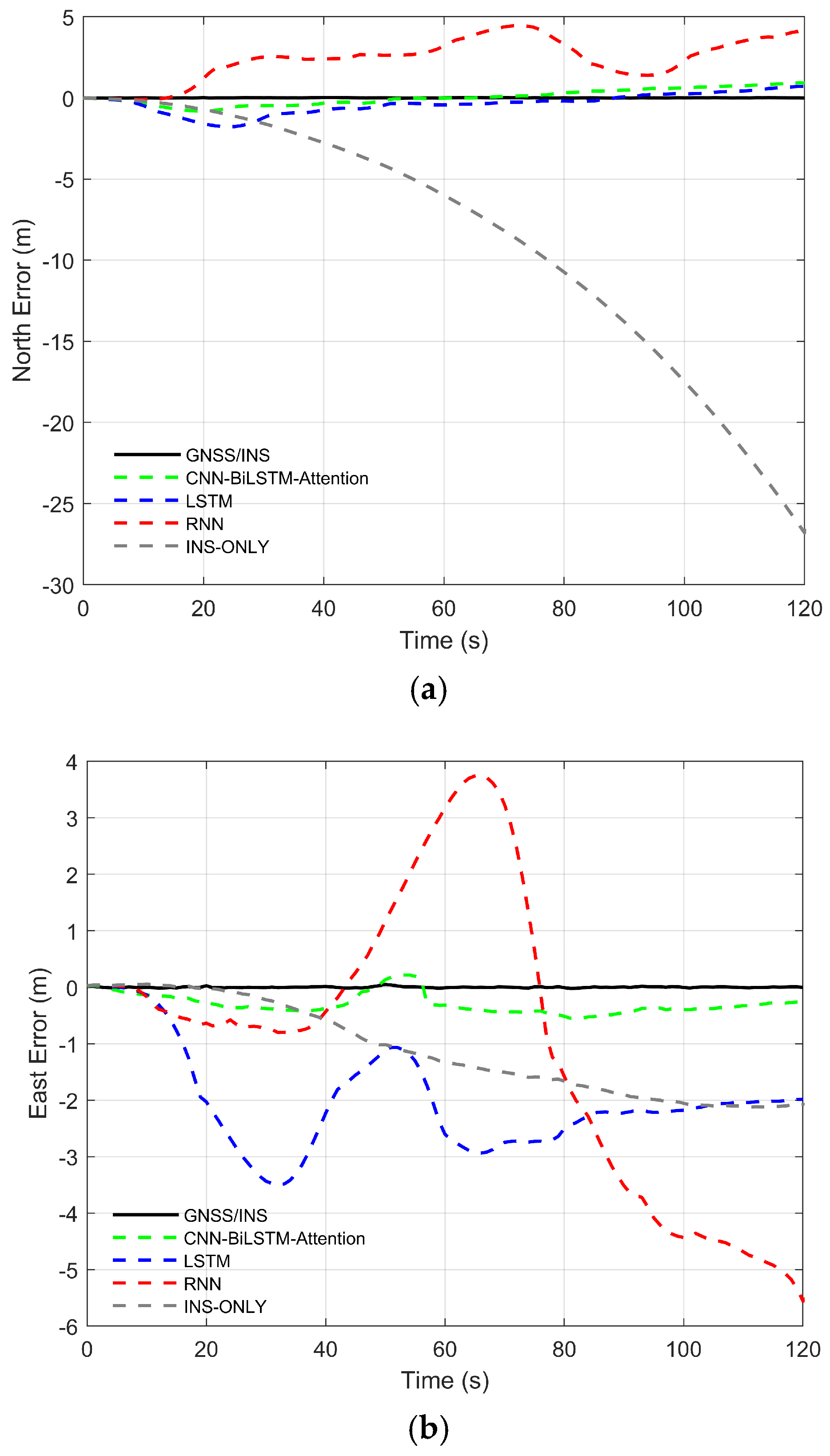

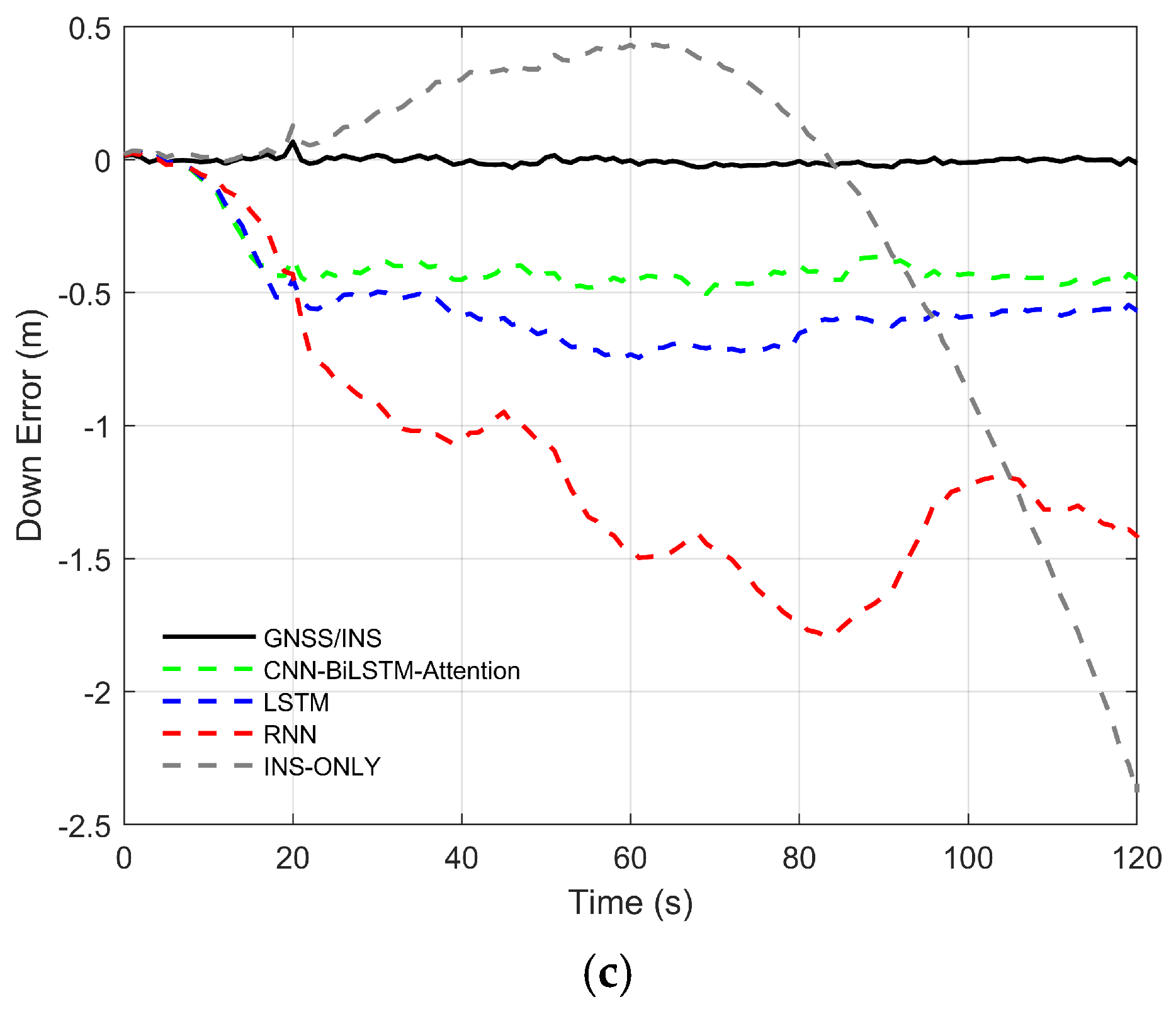

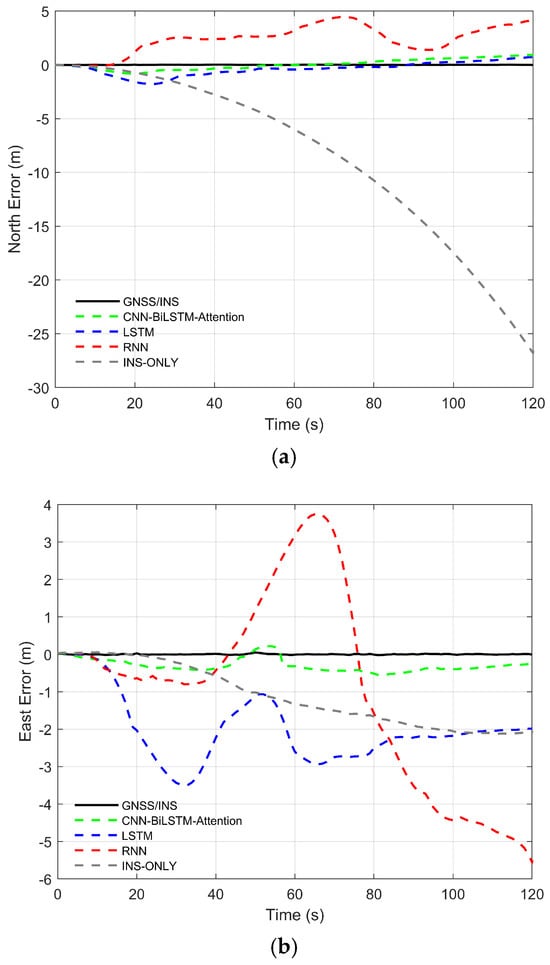

Figure 9.

Positioning errors under different algorithms. (a) North error. (b) East error. (c) Down error.

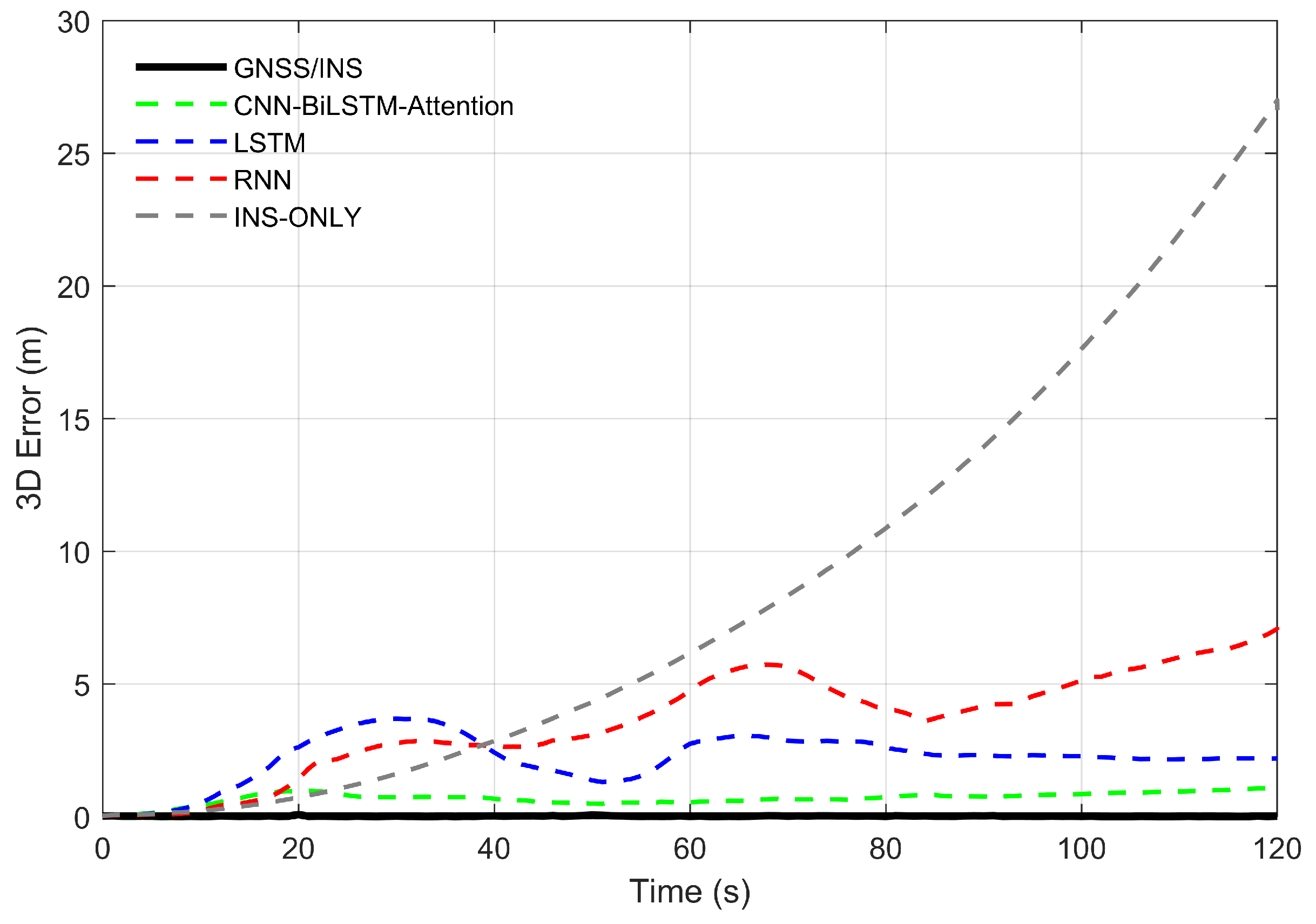

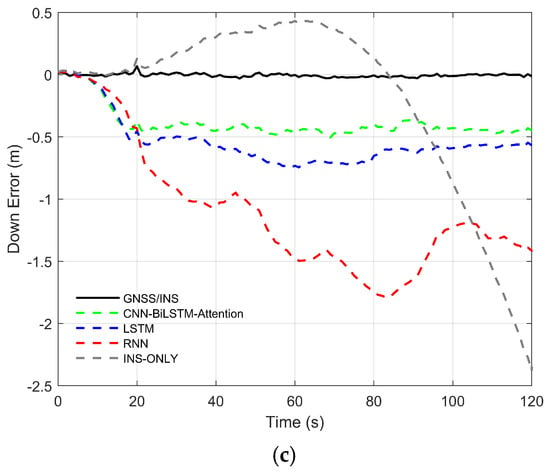

The three-dimensional positioning errors of the vehicle across various algorithms are presented in Figure 10. The corresponding computation formula is provided below:

$$\delta P = \sqrt{\delta P_N^2 + \delta P_E^2 + \delta P_D^2}$$

where $\delta P_N$, $\delta P_E$, $\delta P_D$, and $\delta P$ represent the north positioning error, east positioning error, altitude positioning error, and three-dimensional position error, respectively.
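As a small sketch, the three-dimensional error can be computed as the Euclidean norm of the north, east, and down errors. The function name `three_d_error` is illustrative. Because squared errors add per sample, the per-axis RMSE values also combine in quadrature, which allows a check against the CNN-BiLSTM-Attention RMSE values reported in this section (0.4953 m, 0.3423 m, and 0.4083 m).

```python
import math


def three_d_error(north_err, east_err, down_err):
    """Euclidean norm of the north, east, and down error components (metres)."""
    return math.sqrt(north_err ** 2 + east_err ** 2 + down_err ** 2)


# Per-axis RMSE values of CNN-BiLSTM-Attention reported in this section.
rmse_3d = three_d_error(0.4953, 0.3423, 0.4083)
```

The result agrees with the reported three-dimensional RMSE of 0.7275 m to within rounding.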

Figure 10.

Vehicle positioning three-dimensional error.

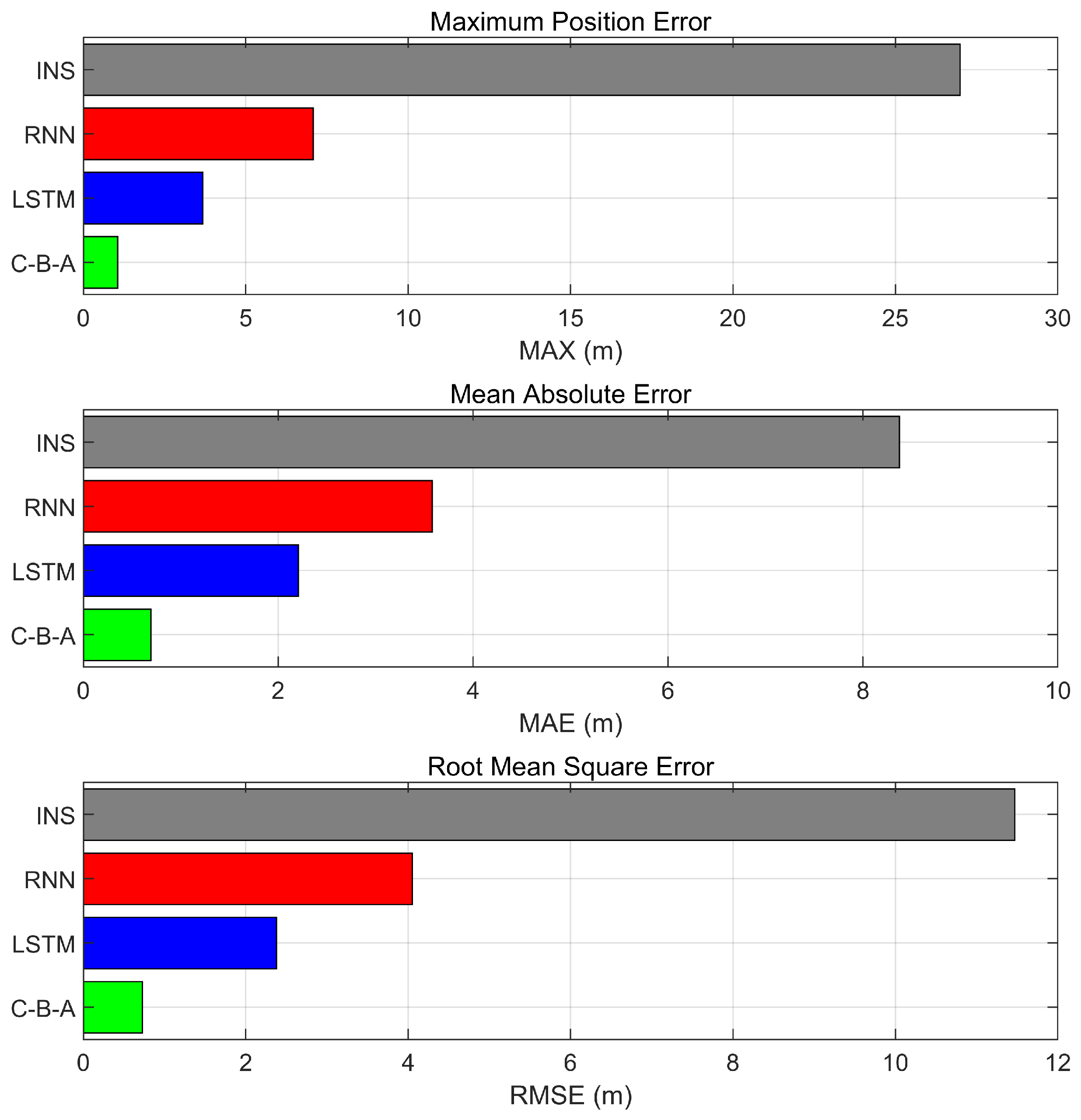

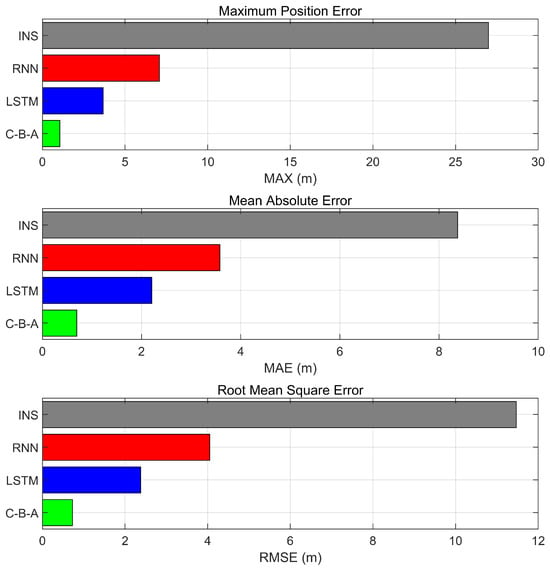

The maximum error (MAX), mean absolute error (MAE), and root mean square error (RMSE) are employed to evaluate the error compensation accuracy of different methods. The percentage error reduction of the proposed method relative to the standalone INS is also calculated. The statistical results are presented in Table 3, and the MAX, MAE, and RMSE in three-dimensional directions are shown in Figure 11. The calculation formulas are as follows:

$$\mathrm{MAX} = \max_{1 \le i \le n} \left| e_i \right|$$

$$\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| e_i \right|$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} e_i^2}$$

where $e_i$ represents the error of the i-th sample, and $n$ is the total number of samples.
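The three metrics can be sketched as a single helper. The function name `error_metrics` and the sample error values are illustrative and are not taken from the experiment.

```python
import math


def error_metrics(errors):
    """Return MAX, MAE, and RMSE for a sequence of signed errors (metres)."""
    n = len(errors)
    abs_errors = [abs(e) for e in errors]
    return {
        "MAX": max(abs_errors),                              # largest absolute error
        "MAE": sum(abs_errors) / n,                          # mean absolute error
        "RMSE": math.sqrt(sum(e * e for e in errors) / n),   # root mean square error
    }


# Made-up error samples, for illustration only.
metrics = error_metrics([3.0, -4.0])
```

RMSE weights large errors more heavily than MAE, so a widening gap between the two indicates a few large excursions rather than a uniform offset.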

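Table 3.

Statistical results of field experimental errors during GNSS outage (m).

| Direction | Metric | INS | RNN | LSTM | CNN-BiLSTM-Attention |
|---|---|---|---|---|---|
| North | MAX | 26.8112 | 4.4577 | 1.7820 | 0.9282 |
| North | MAE | 8.2734 | 2.4095 | 0.5577 | 0.4134 |
| North | RMSE | 11.3666 | 2.7288 | 0.7182 | 0.4953 |
| East | MAX | 2.1216 | 5.5597 | 3.5069 | 0.5624 |
| East | MAE | 1.1092 | 2.1219 | 2.0000 | 0.3152 |
| East | RMSE | 1.3669 | 2.7420 | 2.1932 | 0.3423 |
| Down | MAX | 2.3810 | 1.7906 | 0.7455 | 0.5046 |
| Down | MAE | 0.4680 | 1.0900 | 0.5381 | 0.3893 |
| Down | RMSE | 0.7323 | 1.2034 | 0.5713 | 0.4083 |
| 3D | MAX | 26.9968 | 7.0729 | 3.6818 | 1.0580 |
| 3D | MAE | 8.3736 | 3.5801 | 2.2055 | 0.6927 |
| 3D | RMSE | 11.4719 | 4.0513 | 2.3775 | 0.7275 |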

Figure 11.

Evaluation metrics for three-dimensional position and orientation.

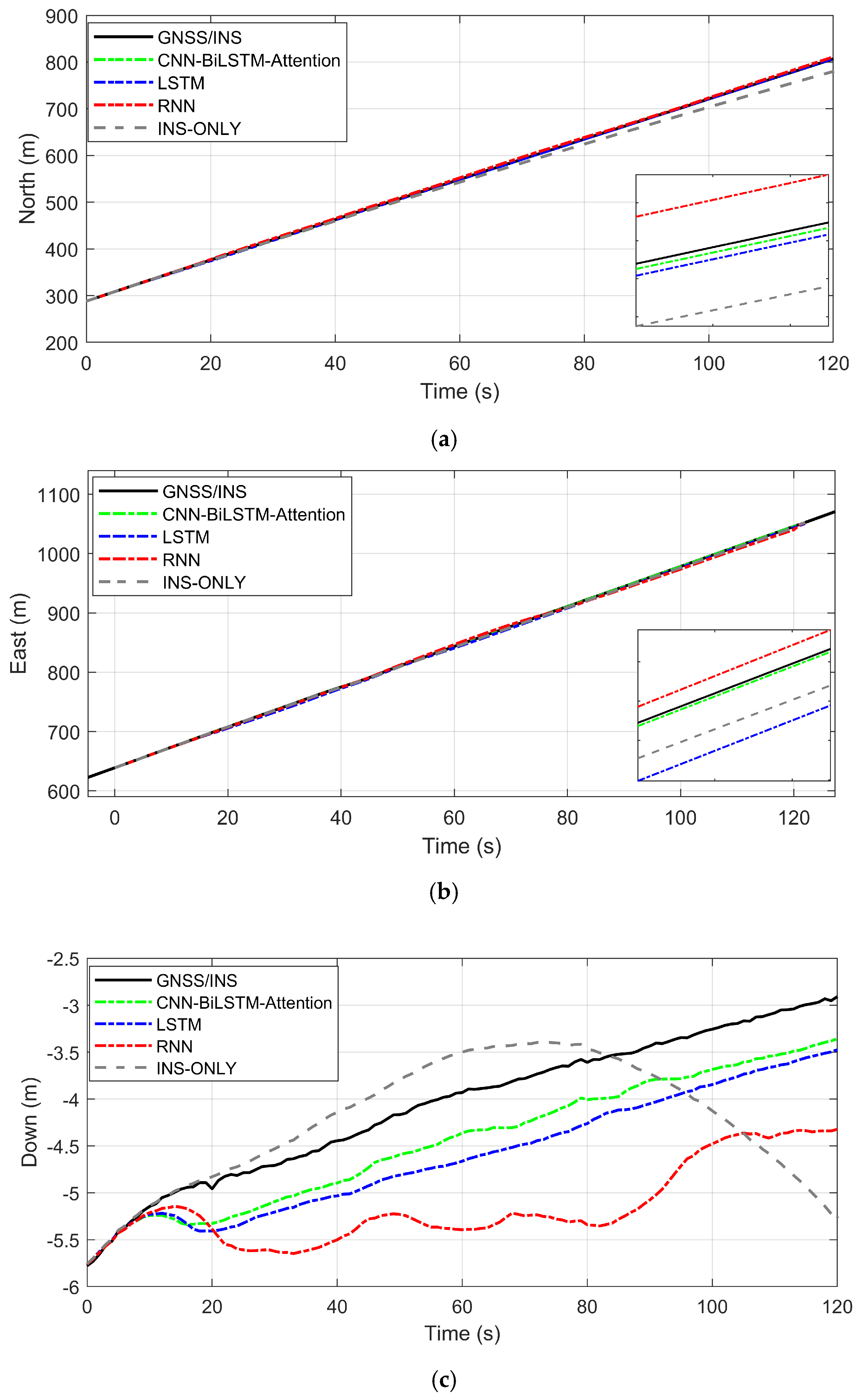

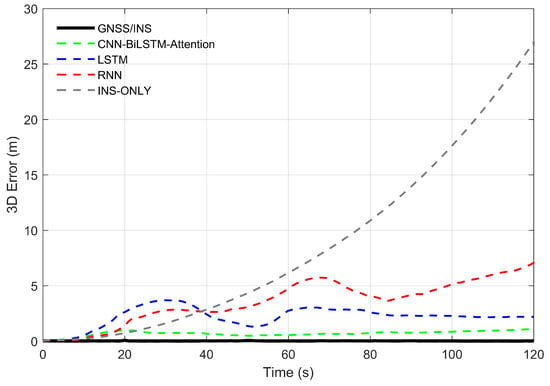

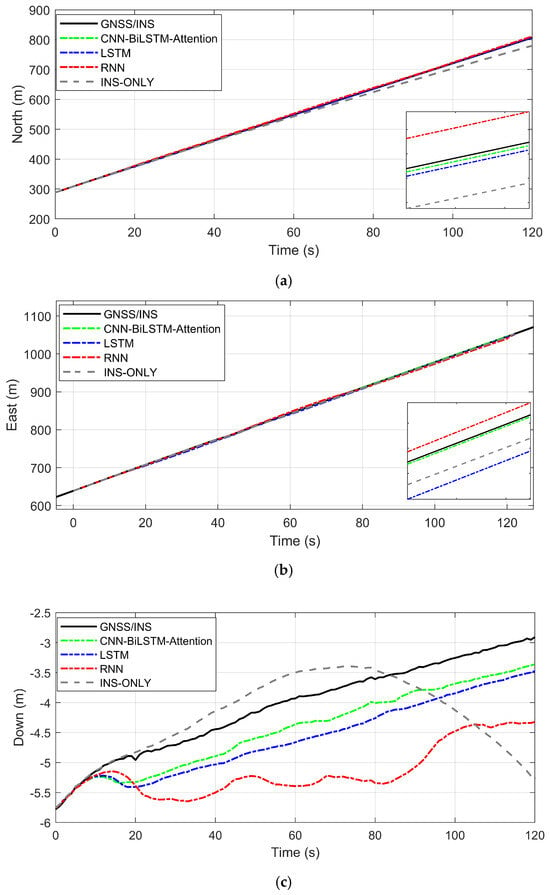

During GNSS outages, different neural network algorithms were used to predict the GNSS displacement increments, and the vehicle’s position was calculated using the improved Kalman filter. The position changes of the vehicle are shown in Figure 12, with the first epoch of the GNSS signal used as the reference point.
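The step from predicted increments to pseudo-GNSS positions can be sketched as a running sum from the last valid GNSS fix. This assumes one north/east/down increment per GNSS epoch; the function name `pseudo_gnss_positions` and the numbers are hypothetical, and the subsequent filtering stage is not shown.

```python
def pseudo_gnss_positions(last_fix, predicted_increments):
    """Accumulate per-epoch position increments from the last valid GNSS fix.

    last_fix: (north, east, down) in metres at the last epoch with GNSS.
    predicted_increments: iterable of (d_north, d_east, d_down), one per epoch.
    Returns the list of pseudo-GNSS positions, one per outage epoch.
    """
    north, east, down = last_fix
    track = []
    for d_north, d_east, d_down in predicted_increments:
        north += d_north
        east += d_east
        down += d_down
        track.append((north, east, down))
    return track


# Two hypothetical outage epochs starting from the reference point.
track = pseudo_gnss_positions((0.0, 0.0, 0.0), [(1.0, 0.5, 0.0), (1.0, 0.5, -0.1)])
```

Because each position is a cumulative sum, any bias in the predicted increments accumulates over the outage, which is one reason the increments are passed through a filter rather than used directly.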

Figure 12.

Position changes of vehicle under different algorithms during GNSS outages. (a) north direction; (b) east direction; (c) vertical direction.

From the above figures and tables, it can be observed that different methods exhibit varying compensation performance for the integrated navigation system during GNSS signal outages. The findings can be summarized as follows:

- During GNSS signal outages, the INS cannot integrate with the GNSS to correct system errors. This leads to rapid divergence of positioning errors in the standalone INS over time, with the divergence rate increasing progressively, failing to meet positioning requirements.

- Compared with the standalone INS, the introduction of RNN and LSTM for error compensation significantly improves the north and three-dimensional positioning accuracy of the integrated navigation system. Among them, LSTM outperforms RNN, demonstrating its advantage in extracting temporal feature information. However, as the duration of the GNSS signal outage increases, the accuracy of RNN and LSTM in east-direction error compensation gradually declines, and their east-direction MAX, MAE, and RMSE exceed those of the standalone INS. In contrast, the proposed CNN-BiLSTM-Attention model remains largely unaffected by the prolonged outage duration, benefiting from both the bidirectional processing capability of BiLSTM and the dynamic focus of the Attention Mechanism. The synergy of these two components enables the model to capture long-term dependencies and key information within the navigation data, thereby maintaining high positioning accuracy during GNSS signal outages.

- The CNN-BiLSTM-Attention method demonstrates the best compensation performance among the four methods. CNN extracts low-level spatial or temporal features from raw inputs, providing higher-quality input for the subsequent BiLSTM. BiLSTM combines forward and backward information flows, and the Attention Mechanism further optimizes the representation of sequential features by focusing on critical information. This model effectively suppresses the divergence of positioning errors, whether in the short or long term.

- In the north direction, the maximum error (MAX) of the INS reaches 26.8112 m, with MAE and RMSE being 8.2734 m and 11.3666 m, respectively. After introducing RNN, LSTM, and CNN-BiLSTM-Attention, the maximum errors are 4.4577 m, 1.7820 m, and 0.9282 m, respectively; the MAEs are 2.4095 m, 0.5577 m, and 0.4134 m; and the RMSEs are 2.7288 m, 0.7182 m, and 0.4953 m. Compared to INS, RNN, and LSTM, the positioning error of CNN-BiLSTM-Attention decreases by 95.00%, 82.84%, and 25.88% in MAE, and by 95.64%, 81.85%, and 31.04% in RMSE, respectively.

- In the east direction, the maximum error (MAX) of the INS reaches 2.1216 m, with MAE and RMSE being 1.1092 m and 1.3669 m, respectively. After introducing RNN, LSTM, and CNN-BiLSTM-Attention for error compensation, the maximum errors are 5.5597 m, 3.5069 m, and 0.5624 m, respectively; the MAEs are 2.1219 m, 2.0000 m, and 0.3152 m; and the RMSEs are 2.7420 m, 2.1932 m, and 0.3423 m. Compared to INS, RNN, and LSTM, the positioning error of CNN-BiLSTM-Attention decreases by 71.58%, 85.15%, and 84.24% in MAE, and by 74.96%, 87.52%, and 84.39% in RMSE, respectively.

- In the down direction, the maximum error (MAX) of INS reaches 2.3810 m, with MAE and RMSE being 0.4680 m and 0.7323 m, respectively. After introducing RNN, LSTM, and CNN-BiLSTM-Attention for error compensation, the maximum errors are 1.7906 m, 0.7455 m, and 0.5046 m, respectively; the MAEs are 1.0900 m, 0.5381 m, and 0.3893 m; and the RMSEs are 1.2034 m, 0.5713 m, and 0.4083 m. Compared to INS, RNN, and LSTM, the positioning error of CNN-BiLSTM-Attention decreases by 16.82%, 64.28%, and 27.65% in MAE, and by 44.24%, 66.07%, and 28.52% in RMSE, respectively.

- For the three-dimensional position error, the maximum error (MAX) of the INS is 26.9968 m, with MAE and RMSE being 8.3736 m and 11.4719 m, respectively. After introducing RNN, LSTM, and CNN-BiLSTM-Attention, the maximum errors are 7.0729 m, 3.6818 m, and 1.0580 m, respectively; the MAEs are 3.5801 m, 2.2055 m, and 0.6927 m; and the RMSEs are 4.0513 m, 2.3775 m, and 0.7275 m. Compared to INS, RNN, and LSTM, the positioning error of CNN-BiLSTM-Attention decreases by 91.73%, 80.65%, and 68.59% in MAE, and by 93.66%, 82.04%, and 69.40% in RMSE, respectively. Overall, CNN-BiLSTM-Attention achieves the highest error compensation accuracy among the compared methods.
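The percentage reductions quoted in the list above follow from the relative difference between a baseline error and the error of the proposed method. A short sketch using the three-dimensional RMSE values reported above (the function name `error_reduction_percent` is illustrative):

```python
def error_reduction_percent(baseline, proposed):
    """Relative error reduction of `proposed` with respect to `baseline`, in percent."""
    return (baseline - proposed) / baseline * 100.0


# Three-dimensional RMSE values (m) reported in this section.
baseline_rmse_3d = {"INS": 11.4719, "RNN": 4.0513, "LSTM": 2.3775}
proposed_rmse_3d = 0.7275

reductions = {
    name: round(error_reduction_percent(value, proposed_rmse_3d), 2)
    for name, value in baseline_rmse_3d.items()
}
```

Evaluating this reproduces the reported three-dimensional RMSE reductions of 93.66%, 82.04%, and 69.40% relative to INS, RNN, and LSTM.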

The experimental results show that, compared with the RNN and LSTM baselines, CNN-BiLSTM-Attention better suppresses the divergence of positioning errors and improves the vehicle's positioning accuracy in the absence of GNSS signals.

5. Conclusions

This paper proposes an error compensation method for GNSS/INS integrated navigation based on CNN-BiLSTM-Attention to address positioning errors during GNSS signal outages. The feasibility and effectiveness of the proposed method were validated through real-vehicle experiments. The CNN-BiLSTM-Attention model integrates the strengths of CNN, BiLSTM, and the Attention Mechanism. Specifically, CNN extracts low-level spatial and temporal features from raw inputs, providing high-quality data for the subsequent BiLSTM. BiLSTM effectively models long-term dependencies within sequential data by integrating both forward and backward information streams. The Attention Mechanism further refines the output of BiLSTM by optimizing the representation of sequential features, enabling the model to dynamically focus on the most relevant information for the prediction task. This integration significantly enhances global dependency capture, dynamic feature selection, and computational efficiency.

When GNSS signals are available, a mapping relationship between IMU data and GNSS position increments is established using the neural network model. During GNSS outages, the trained model generates pseudo-GNSS signals, which are combined with an improved Kalman filter to compensate for errors in the integrated navigation system, effectively suppressing the divergence of positioning errors. To evaluate the performance of the proposed method, real-vehicle experiments were conducted in Jiangsu Province, China. The experimental results demonstrate that, compared to standalone INS, RNN, and LSTM, the CNN-BiLSTM-Attention method reduces the three-dimensional RMSE during the GNSS outage by 93.66%, 82.04%, and 69.40%, respectively. These findings confirm that the proposed method can significantly mitigate the divergence of positioning errors in the absence of GNSS signals, thereby enhancing the positioning accuracy and robustness of the integrated navigation system. This approach provides reliable technical support for navigation tasks in challenging environments.

Author Contributions

Conceptualization, W.D. and J.W.; methodology, W.D.; software, W.D.; validation, W.D. and J.W.; formal analysis, W.D.; investigation, W.D.; resources, J.W. and H.H.; data curation, W.D.; writing—original draft preparation, W.D.; writing—review and editing, W.D., H.H., X.X., D.L., C.C. and L.W.; funding acquisition, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant No. 42374024 and No. 42274029), the Beijing Nova Program (Grant No. 20230484270), and the BUCEA Doctor Graduate Scientific Research Ability Improvement Project (DG2024033).

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Andrade, A.A.L. The Global Navigation Satellite System: Navigating into the New Millennium; Routledge: Oxfordshire, UK, 2017.

2. Zidan, J.; Adegoke, E.I.; Kampert, E.; Birrell, S.A.; Ford, C.R.; Higgins, M.D. GNSS vulnerabilities and existing solutions: A review of the literature. IEEE Access 2020, 9, 153960–153976.

3. Elsanhoury, M.; Mäkelä, P.; Koljonen, J.; Välisuo, P.; Shamsuzzoha, A.; Mantere, T.; Elmusrati, M.; Kuusniemi, H. Precision positioning for smart logistics using ultra-wideband technology-based indoor navigation: A review. IEEE Access 2022, 10, 44413–44445.

4. Noureldin, A.; Karamat, T.B.; Eberts, M.D.; El-Shafie, A. Performance enhancement of MEMS-based INS/GPS integration for low-cost navigation applications. IEEE Trans. Veh. Technol. 2008, 58, 1077–1096.

5. Li, X.; Ge, M.; Dai, X.; Ren, X.; Fritsche, M.; Wickert, J.; Schuh, H. Accuracy and reliability of multi-GNSS real-time precise positioning: GPS, GLONASS, BeiDou, and Galileo. J. Geod. 2015, 89, 607–635.

6. Falco, G.; Pini, M.; Marucco, G. Loose and tight GNSS/INS integrations: Comparison of performance assessed in real urban scenarios. Sensors 2017, 17, 255.

7. Jing, H.; Gao, Y.; Shahbeigi, S.; Dianati, M. Integrity monitoring of GNSS/INS based positioning systems for autonomous vehicles: State-of-the-art and open challenges. IEEE Trans. Intell. Transp. Syst. 2022, 23, 14166–14187.

8. Zhuang, Y.; Sun, X.; Li, Y.; Huai, J.; Hua, L.; Yang, X.; Cao, X.; Zhang, P.; Cao, Y.; Qi, L.; et al. Multi-sensor integrated navigation/positioning systems using data fusion: From analytics-based to learning-based approaches. Inf. Fusion 2023, 95, 62–90.

9. Lu, Y.; Ma, H.; Smart, E.; Yu, H. Real-time performance-focused localization techniques for autonomous vehicle: A review. IEEE Trans. Intell. Transp. Syst. 2021, 23, 6082–6100.

10. Li, Q.; Li, R.; Ji, K.; Dai, W. Kalman filter and its application. In Proceedings of the 2015 8th International Conference on Intelligent Networks and Intelligent Systems (ICINIS), Tianjin, China, 1–3 November 2015.

11. Li, M.; Mourikis, A.I. High-precision, consistent EKF-based visual-inertial odometry. Int. J. Robot. Res. 2013, 32, 690–711.

12. Soken, H.E.; Hajiyev, C.; Sakai, S.I. Robust Kalman filtering for small satellite attitude estimation in the presence of measurement faults. Eur. J. Control. 2014, 20, 64–72.

13. Hajiyev, C.; Soken, H.E. Robust adaptive Kalman filter for estimation of UAV dynamics in the presence of sensor/actuator faults. Aerosp. Sci. Technol. 2013, 28, 376–383.

14. Li, W.; Jia, Y. H-infinity filtering for a class of nonlinear discrete-time systems based on unscented transform. Signal Process. 2010, 90, 3301–3307.

15. Arasaratnam, I.; Haykin, S. Square-root quadrature Kalman filtering. IEEE Trans. Signal Process. 2008, 56, 2589–2593.

16. Jwo, D.J.; Biswal, A.; Mir, I.A. Artificial neural networks for navigation systems: A review of recent research. Appl. Sci. 2023, 13, 4475.

17. Sharaf, R.; Noureldin, A. Sensor integration for satellite-based vehicular navigation using neural networks. IEEE Trans. Neural Netw. 2007, 18, 589–594.

18. Tan, X.; Wang, J.; Han, H.; Yao, Y. Improved Neural Network-Assisted GPS/INS Integrated Navigation Algorithm. J. China Univ. Min. Technol. 2014, 43, 526–533.

19. Gao, W.; Feng, X.; Zhu, D. Neural Network-Assisted Adaptive GPS/INS Integrated Navigation Algorithm. Acta Geod. Cartogr. Sin. 2007, 26, 64–67.

20. Zhang, Y.; Wang, L. A hybrid intelligent algorithm DGP-MLP for GNSS/INS integration during GNSS outages. J. Navig. 2018, 72, 375–388.

21. Dai, H.F.; Bian, H.W.; Wang, R.Y.; Ma, H. An INS/GNSS integrated navigation in GNSS denied environment using recurrent neural network. Def. Technol. 2020, 16, 334–340.

22. Zhang, Y. A fusion methodology to bridge GPS outages for INS/GPS integrated navigation system. IEEE Access 2019, 7, 61296–61306.

23. Zhang, H.; Zhang, Q.; Shao, S.; Niu, T.; Yang, X. Attention-based LSTM network for rotatory machine remaining useful life prediction. IEEE Access 2020, 8, 132188–132199.

24. Simon, D. Kalman filtering. Embed. Syst. Program. 2001, 14, 72–79.

25. Khodarahmi, M.; Vafa, M. A review on Kalman filter models. Arch. Comput. Methods Eng. 2023, 30, 727–747.

26. Narasimhappa, M.; Mahindrakar, A.D.; Guizilini, V.C.; Terra, M.H.; Sabat, S.L. MEMS-based IMU drift minimization: Sage Husa adaptive robust Kalman filtering. IEEE Sens. J. 2019, 20, 250–260.

27. Jwo, D.-J.; Wang, S.-H. Adaptive fuzzy strong tracking extended Kalman filtering for GPS navigation. IEEE Sens. J. 2007, 7, 778–789.

28. Park, W.B.; Chung, J.; Jung, J.; Sohn, K.; Singh, S.P.; Pyo, M.; Shin, N.; Sohn, K.S. Classification of crystal structure using a convolutional neural network. IUCrJ 2017, 4, 486–494.

29. Yang, A.; Yang, X.; Wu, W.; Liu, H.; Zhuansun, Y. Research on feature extraction of tumor image based on convolutional neural network. IEEE Access 2019, 7, 24204–24213.

30. Cui, Z.; Chen, W.; Chen, Y. Multi-scale convolutional neural networks for time series classification. arXiv 2016, arXiv:1603.06995.

31. Salem, F.M. Recurrent Neural Networks; Springer International Publishing: Berlin/Heidelberg, Germany, 2022.

32. Wang, J.; Sun, L.; Li, H.; Ding, R.; Chen, N. Prediction model of fouling thickness of heat exchanger based on TA-LSTM structure. Processes 2023, 11, 2594.

33. Greff, K.; Srivastava, R.K.; Koutník, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A search space odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 2222–2232.

34. Siami-Namini, S.; Tavakoli, N.; Namin, A.S. The performance of LSTM and BiLSTM in forecasting time series. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019.

35. Guo, M.-H.; Xu, T.-X.; Liu, J.-J.; Liu, Z.N.; Jiang, P.T.; Mu, T.J.; Zhang, S.-H.; Martin, R.R.; Cheng, M.-M.; Hu, S.-M. Attention mechanisms in computer vision: A survey. Comput. Vis. Media 2022, 8, 331–368.

36. Liu, Y.; Pu, H.; Sun, D.-W. Efficient extraction of deep image features using convolutional neural network (CNN) for applications in detecting and analysing complex food matrices. Trends Food Sci. Technol. 2021, 113, 193–204.

37. Ye, X.; Song, F.; Zhang, Z.; Zeng, Q. A review of small UAV navigation system based on multisource sensor fusion. IEEE Sens. J. 2023, 23, 18926–18948.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).