Classification of Herbaceous Vegetation Using Airborne Hyperspectral Imagery

Abstract

1. Introduction

2. Methodology

2.1. Description of the Study Site

2.2. Airborne Data Collection

2.3. Field Data Collection

2.4. Vegetation Classes

| Abbreviation | Dominant Species | Subdominant Species | Canopy Height (cm) | Total Vegetation Cover (%) | Measured Area (m²) |
|---|---|---|---|---|---|
| CYN | Cynodon dactylon | Achillea collina | 21.2 | 96.2 | 211 |
| FAC | Festuca pseudovina | Achillea collina | 3.0 | 80.0 | 141 |
| FAR | Festuca pseudovina | Artemisia santonica | 28.3 | 80.8 | 96 |
| CAM | Camphorosma annua | - | 4.4 | 28.0 | 118 |
| PHO | Pholiurus pannonicus | - | 18.6 | 47.0 | 142 |
| ART | Artemisia santonica | Pholiurus pannonicus | 13.7 | 43.7 | 64 |
| ELY | Elymus repens | - | 96.0 | 64.0 | 402 |
| ALO | Alopecurus pratensis | Agrostis stolonifera | 48.3 | 93.3 | 531 |
| BEC | Beckmannia eruciformis | Agrostis stolonifera, Cirsium brachycephalum | 87.5 | 91.2 | 552 |
| ACI | Alopecurus pratensis | Cirsium arvense, Elymus repens | 140.0 | 85.0 | 82 |
| CAR | Carex spp. | - | 100.0 | 90.0 | 253 |
| GLY | Glyceria maxima | - | 40.0 | 90.0 | 229 |
| TYP | Typha angustifolia | Salvinia natans | 200.0 | 70.0 | 63 |
| SAL | Salvinia natans | Typha angustifolia, Utricularia vulgaris | 133.0 | 70.0 | 65 |
| BOL | Bolboschoenus maritimus | - | 76.2 | 78.8 | 179 |
| SCH | Schoenoplectus lacustris ssp. tabernaemontani | - | 166.0 | 87.0 | 121 |
| PHR | Phragmites communis | - | 250.0 | 100.0 | 297 |
| FMM * | Alopecurus pratensis | - | 10.0 | 80.0 | 351 |
| ARA * | Gypsophila muralis, Polygonum aviculare | - | 8.0 | 80.0 | 123 |
| MUD ** | not relevant | - | 10.0 | 8.0 | 158 |

2.5. Image Processing

2.6. Separating the Classes Using Narrow-Band NDVI
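A narrow-band NDVI is the usual normalized difference (NIR - Red) / (NIR + Red), computed from single narrow hyperspectral bands instead of broad multispectral ones. Which two bands the study used is not stated in this section, so the minimal sketch below accepts any NIR and red reflectance arrays; the band choice, the function name `narrow_band_ndvi` and the handling of zero denominators are assumptions of this example.

```python
import numpy as np


def narrow_band_ndvi(nir, red, eps=1e-12):
    """Per-pixel NDVI from one NIR and one red reflectance band (hypothetical helper).

    nir, red: arrays of identical shape. Pixels where NIR + Red is (near) zero,
    e.g. no-data pixels, are returned as NaN instead of raising a division error.
    """
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    denom = nir + red
    # Replace near-zero denominators by NaN so the result is NaN there.
    safe = np.where(np.abs(denom) < eps, np.nan, denom)
    return (nir - red) / safe
```

For example, a pixel with 0.5 NIR and 0.1 red reflectance gives an NDVI of about 0.67, while equal NIR and red reflectance gives 0.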

2.7. Image Classification

2.7.1. Applied Classification Methods
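Of the three classifiers compared in the accuracy summary (SVM, RF and MLC), the maximum likelihood classifier (MLC) is compact enough to sketch without external libraries. The NumPy version below assumes multivariate Gaussian class distributions and equal class priors; the class name `GaussianMLC` and the small ridge added to each covariance matrix are assumptions of this sketch, not settings taken from the study.

```python
import numpy as np


class GaussianMLC:
    """Minimal Gaussian maximum likelihood classifier with equal priors (hypothetical)."""

    def fit(self, X, y, ridge=1e-6):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        self.means_, self.inv_covs_, self.logdets_ = [], [], []
        for c in self.classes_:
            Xc = X[y == c]
            cov = np.cov(Xc, rowvar=False) + ridge * np.eye(X.shape[1])
            _, logdet = np.linalg.slogdet(cov)
            self.means_.append(Xc.mean(axis=0))
            self.inv_covs_.append(np.linalg.inv(cov))
            self.logdets_.append(logdet)
        return self

    def log_likelihood(self, X):
        """Gaussian log-likelihood per class; the constant -p/2*log(2*pi) is
        omitted because it is identical for every class."""
        X = np.asarray(X, dtype=float)
        scores = []
        for mu, icov, logdet in zip(self.means_, self.inv_covs_, self.logdets_):
            d = X - mu
            mahalanobis = np.einsum("ij,jk,ik->i", d, icov, d)
            scores.append(-0.5 * (logdet + mahalanobis))
        return np.column_stack(scores)

    def predict(self, X):
        # Assign each pixel to the class with the highest likelihood.
        return self.classes_[np.argmax(self.log_likelihood(X), axis=1)]
```

The ridge term matters for hyperspectral data: with many bands and few training pixels per class, the sample covariance matrix can be singular, and the plain classifier is then undefined.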

2.7.2. Image Classification Using Original Spectral Bands

| Field Samples (pixels) | Random Samples (pixels) |
|---|---|
| 60–80 | 30 |
| 81–100 | 40 |
| 101–200 | 50 |
| 201–600 | 100 |
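The table maps the number of field-sampled pixels of a class to the number of randomly drawn pixels; the column totals of the confusion matrices in Section 3 take exactly these values (30, 40, 50 or 100 per class). A minimal sketch of the lookup and the random draw follows. The function names are hypothetical, and classes with fewer than 60 or more than 600 field pixels are rejected because the table does not cover them.

```python
import numpy as np

# (lowest, highest, random sample size) for each row of the table; bounds inclusive.
SAMPLING_RULES = [(60, 80, 30), (81, 100, 40), (101, 200, 50), (201, 600, 100)]


def random_sample_size(field_pixels):
    """Number of pixels to draw at random for a class with `field_pixels` field pixels."""
    for low, high, n in SAMPLING_RULES:
        if low <= field_pixels <= high:
            return n
    raise ValueError(f"{field_pixels} field pixels is outside the tabulated 60-600 range")


def draw_random_pixels(pixel_ids, seed=None):
    """Draw the tabulated number of distinct pixels from one class's field pixels."""
    ids = np.asarray(pixel_ids)
    n = random_sample_size(len(ids))
    rng = np.random.default_rng(seed)
    return rng.choice(ids, size=n, replace=False)
```

For a class with 150 field pixels, `draw_random_pixels(range(150), seed=42)` returns 50 distinct pixel indices.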

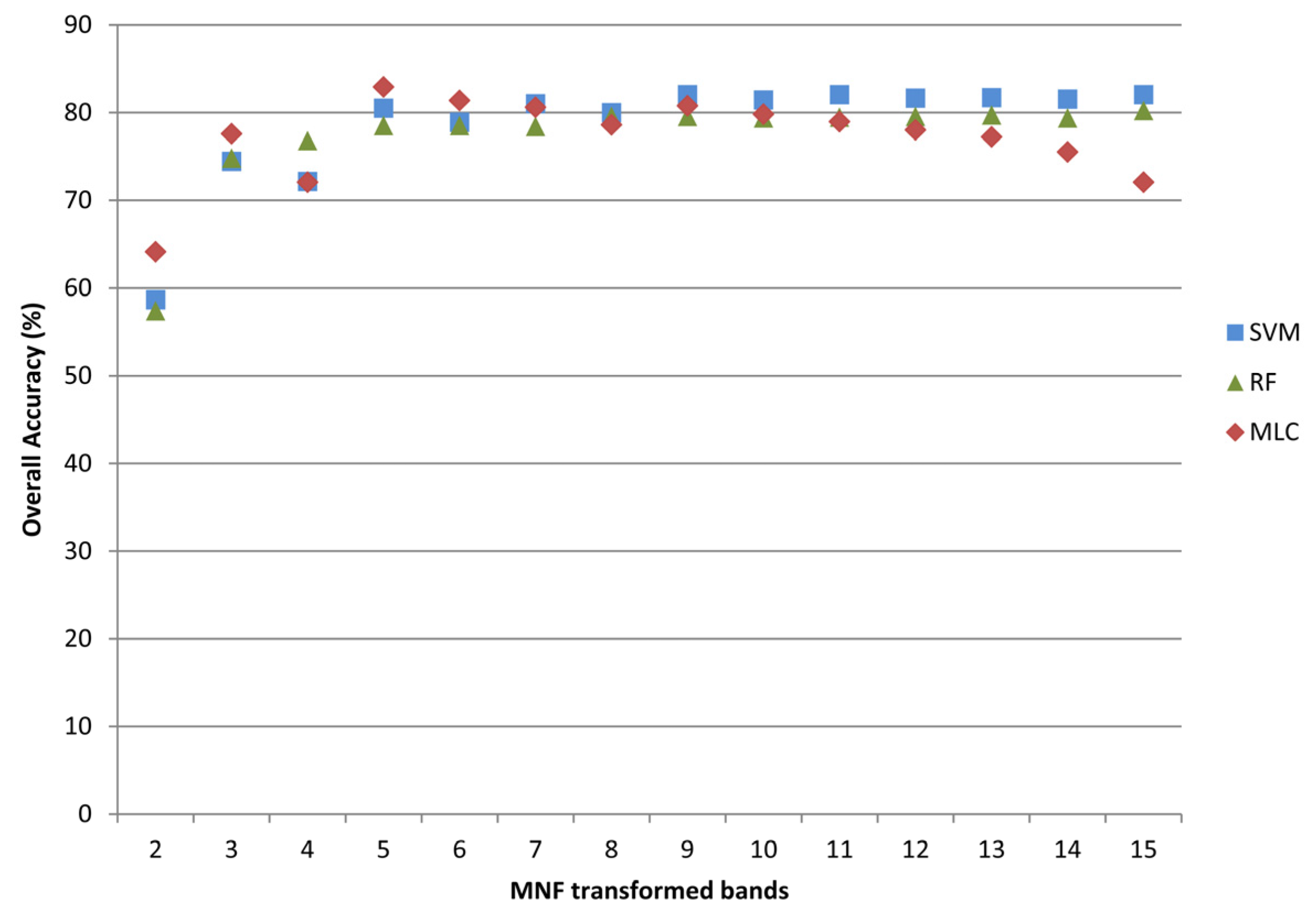

2.7.3. Image Classification Using MNF-Transformed Bands
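The minimum noise fraction (MNF) transform is a noise-adjusted principal component analysis: the noise covariance is estimated, the data are rotated and scaled so that the noise becomes white with unit variance, and ordinary PCA is then applied, so the components are ordered by signal-to-noise ratio rather than by raw variance. The NumPy sketch below estimates noise from differences between horizontally neighboring pixels; that shift-difference estimator and the name `mnf_transform` are assumptions, and the software used for the study's MNF step may estimate noise differently.

```python
import numpy as np


def mnf_transform(cube):
    """MNF transform of a (rows, cols, bands) image cube (illustrative sketch).

    Returns (components, eigenvalues, transform); components are ordered by
    decreasing eigenvalue, i.e. by decreasing signal-to-noise ratio.
    """
    cube = np.asarray(cube, dtype=float)
    rows, cols, bands = cube.shape
    X = cube.reshape(-1, bands)
    X = X - X.mean(axis=0)

    # Assumed shift-difference noise estimate: for i.i.d. noise,
    # var(pixel - right neighbor) = 2 * noise variance.
    diff = (cube[:, 1:, :] - cube[:, :-1, :]).reshape(-1, bands)
    noise_cov = np.cov(diff, rowvar=False) / 2.0
    data_cov = np.cov(X, rowvar=False)

    # Step 1: noise whitening. noise_cov = E diag(d) E^T, so W = E diag(d)^(-1/2)
    # satisfies W^T noise_cov W = I.
    d, E = np.linalg.eigh(noise_cov)
    W = E / np.sqrt(d)

    # Step 2: PCA of the noise-whitened data covariance.
    evals, V = np.linalg.eigh(W.T @ data_cov @ W)
    order = np.argsort(evals)[::-1]  # largest SNR first
    evals, V = evals[order], V[:, order]

    T = W @ V  # combined transform, one column per MNF component
    components = (X @ T).reshape(rows, cols, bands)
    return components, evals, T
```

Usually only the leading components are passed to a classifier, e.g. `components[..., :k]` for a chosen `k`; how many components the study kept is not stated in this section.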

3. Results

3.1. Separating the Classes Using Narrow-Band NDVI

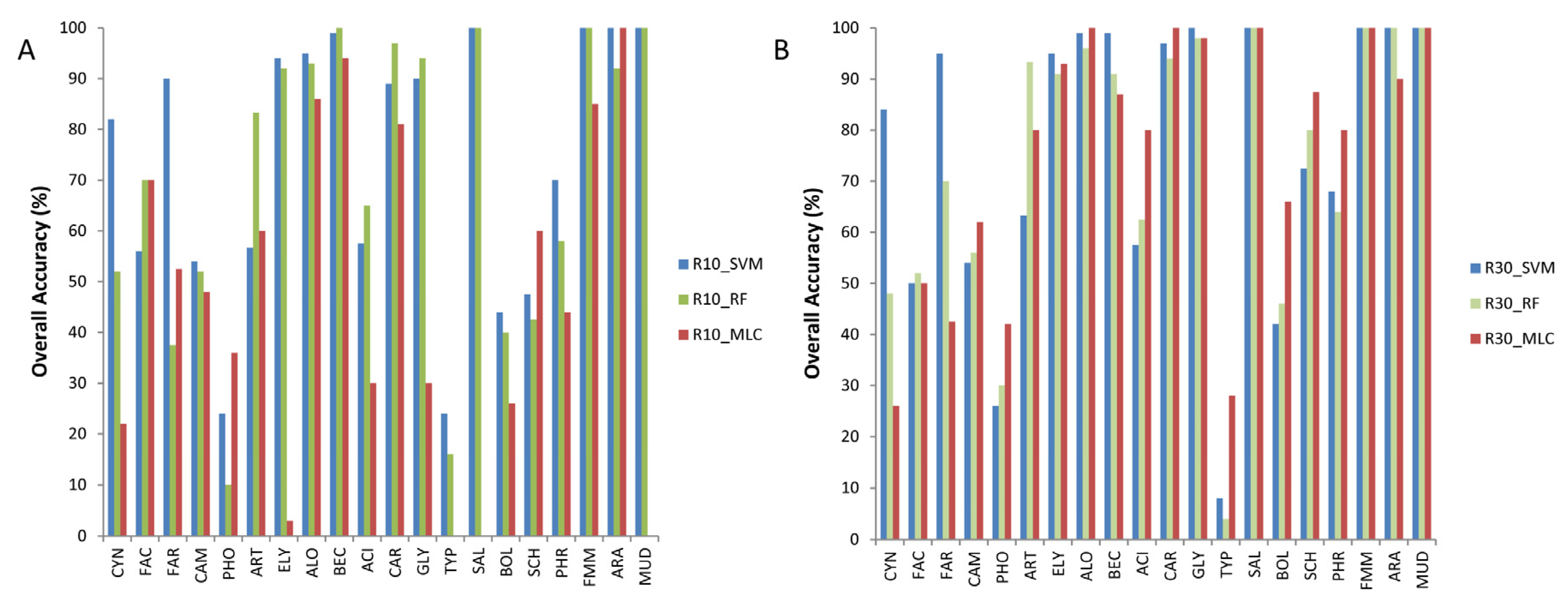

3.2. Image Classification Using Original Spectral Bands

| Classified \ Reference | CYN | FAC | FAR | CAM | PHO | ART | ELY | ALO | BEC | ACI | CAR | GLY | TYP | SAL | BOL | SCH | PHR | FMM | ARA | MUD | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CYN | 19 | 0 | 0 | 0 | 0 | 0 | 8 | 0 | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 34 |
| FAC | 0 | 29 | 5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 34 |
| FAR | 0 | 21 | 35 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 56 |
| CAM | 0 | 0 | 0 | 36 | 8 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 46 |
| PHO | 0 | 0 | 0 | 9 | 13 | 10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 32 |
| ART | 0 | 0 | 0 | 5 | 29 | 19 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 53 |
| ELY | 0 | 0 | 0 | 0 | 0 | 0 | 79 | 0 | 30 | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 116 |
| ALO | 0 | 0 | 0 | 0 | 0 | 0 | 4 | 89 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 95 |
| BEC | 3 | 0 | 0 | 0 | 0 | 0 | 7 | 0 | 63 | 9 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 85 |
| ACI | 28 | 0 | 0 | 0 | 0 | 0 | 1 | 11 | 0 | 23 | 5 | 5 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 74 |
| CAR | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 78 | 3 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 83 |
| GLY | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 12 | 40 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 54 |
| TYP | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 4 | 0 | 7 | 3 | 4 | 0 | 0 | 0 | 19 |
| SAL | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 30 | 0 | 0 | 0 | 0 | 0 | 0 | 30 |
| BOL | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 26 | 0 | 31 | 16 | 3 | 0 | 0 | 0 | 76 |
| SCH | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 9 | 21 | 3 | 0 | 0 | 0 | 33 |
| PHR | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 3 | 0 | 37 | 0 | 0 | 0 | 41 |
| FMM | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 100 | 0 | 0 | 100 |
| ARA | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 49 | 0 | 49 |
| MUD | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 50 | 50 |
| Total | 50 | 50 | 40 | 50 | 50 | 30 | 100 | 100 | 100 | 40 | 100 | 50 | 30 | 30 | 50 | 40 | 50 | 100 | 50 | 50 | 1160 |
| PA (%) | 38.0 | 58.0 | 87.5 | 72.0 | 26.0 | 63.3 | 79.0 | 89.0 | 63.0 | 57.5 | 78.0 | 80.0 | 13.3 | 100.0 | 62.0 | 52.5 | 74.0 | 100.0 | 98.0 | 100.0 | |
| UA (%) | 55.9 | 85.3 | 62.5 | 78.3 | 40.6 | 35.8 | 68.1 | 93.7 | 74.1 | 31.1 | 94.0 | 74.1 | 9.3 | 100.0 | 40.8 | 63.6 | 90.2 | 100.0 | 100.0 | 100.0 | |
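The PA and UA rows follow directly from the matrix: producer's accuracy divides each diagonal entry by its column (reference) total, user's accuracy divides it by its row (classified) total, and overall accuracy divides the diagonal sum by the grand total. A short sketch of these three measures (the function name `accuracy_report` is hypothetical):

```python
import numpy as np


def accuracy_report(cm):
    """Producer's, user's and overall accuracy (in %) from a confusion matrix.

    Rows are classified labels and columns are reference labels, as in the
    tables above.
    """
    cm = np.asarray(cm, dtype=float)
    correct = np.diag(cm)
    pa = 100 * correct / cm.sum(axis=0)  # per reference (column) total: omission errors
    ua = 100 * correct / cm.sum(axis=1)  # per classified (row) total: commission errors
    oa = 100 * correct.sum() / cm.sum()
    return pa, ua, oa
```

The FAC/FAR block of the original-band matrix is self-contained (no other class is confused with either), so `accuracy_report([[29, 5], [21, 35]])` reproduces the tabulated PA of 58.0 % and 87.5 % and UA of 85.3 % and 62.5 %. The overall accuracy it returns (about 71.1 %) refers to that block only, not to the whole map.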

3.3. Image Classification Using MNF-Transformed Bands

| Classified \ Reference | CYN | FAC | FAR | CAM | PHO | ART | ELY | ALO | BEC | ACI | CAR | GLY | TYP | SAL | BOL | SCH | PHR | FMM | ARA | MUD | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CYN | 42 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 43 |
| FAC | 0 | 25 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 27 |
| FAR | 0 | 25 | 38 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 63 |
| CAM | 0 | 0 | 0 | 36 | 8 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 45 |
| PHO | 0 | 0 | 0 | 9 | 13 | 10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 33 |
| ART | 0 | 0 | 0 | 5 | 29 | 19 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 53 |
| ELY | 0 | 0 | 0 | 0 | 0 | 0 | 95 | 0 | 1 | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 103 |
| ALO | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 99 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 99 |
| BEC | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 99 | 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 109 |
| ACI | 7 | 0 | 0 | 0 | 0 | 0 | 5 | 0 | 0 | 23 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 36 |
| CAR | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 97 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 97 |
| GLY | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 3 | 50 | 0 | 0 | 0 | 0 | 6 | 0 | 0 | 0 | 60 |
| TYP | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 | 0 | 23 | 2 | 5 | 0 | 0 | 0 | 33 |
| SAL | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 30 | 0 | 0 | 0 | 0 | 0 | 0 | 30 |
| BOL | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 27 | 0 | 21 | 9 | 4 | 0 | 0 | 0 | 61 |
| SCH | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 6 | 29 | 0 | 0 | 0 | 0 | 35 |
| PHR | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 34 | 0 | 0 | 0 | 34 |
| FMM | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 100 | 0 | 0 | 100 |
| ARA | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 49 | 0 | 49 |
| MUD | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 50 | 50 |
| Total | 50 | 50 | 40 | 50 | 50 | 30 | 100 | 100 | 100 | 40 | 100 | 50 | 30 | 30 | 50 | 40 | 50 | 100 | 50 | 50 | 1160 |
| PA (%) | 84.0 | 50.0 | 95.0 | 72.0 | 26.0 | 63.3 | 95.0 | 99.0 | 99.0 | 57.5 | 97.0 | 100.0 | 10.0 | 100.0 | 42.0 | 72.5 | 68.0 | 100.0 | 98.0 | 100.0 | |
| UA (%) | 97.0 | 92.6 | 60.3 | 80.0 | 39.4 | 35.8 | 92.2 | 100.0 | 90.8 | 63.9 | 100.0 | 83.3 | 9.1 | 100.0 | 34.4 | 82.9 | 100.0 | 100.0 | 100.0 | 100.0 | |

| Accuracy measure | SVM, Original Bands | SVM, MNF Bands | RF, Original Bands | RF, MNF Bands | MLC, Original Bands | MLC, MNF Bands |
|---|---|---|---|---|---|---|
| Overall accuracy of vegetation classes (%) | 72.85 | 82.06 | 72.89 | 79.14 | - | 80.78 |
| Overall accuracy of vegetation groups (%) | 93.30 | 98.70 | 90.70 | 95.77 | - | 95.77 |
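If the group-level accuracies were obtained by pooling the class-level results (this section does not say), they are necessarily at least as high as the class-level ones: once classes are merged into groups, confusion between two classes of the same group no longer counts as an error. The assignment of classes to groups is not given here, so the sketch below uses a hypothetical three-class toy matrix and a hypothetical mapping purely to show the merging step.

```python
import numpy as np


def merge_confusion(cm, labels, group_of):
    """Collapse a class-level confusion matrix into a group-level one.

    labels: class label for each row/column of cm (same order for both).
    group_of: dict mapping each class label to a group name (hypothetical here).
    """
    cm = np.asarray(cm, dtype=float)
    groups = sorted({group_of[c] for c in labels})
    idx = {g: i for i, g in enumerate(groups)}
    merged = np.zeros((len(groups), len(groups)))
    for i, ci in enumerate(labels):
        for j, cj in enumerate(labels):
            # Every cell is added to the cell of its (classified group, reference group).
            merged[idx[group_of[ci]], idx[group_of[cj]]] += cm[i, j]
    return groups, merged


def overall_accuracy(cm):
    """Diagonal sum over grand total, in %."""
    cm = np.asarray(cm, dtype=float)
    return 100 * np.trace(cm) / cm.sum()
```

With the toy matrix `[[8, 2, 0], [3, 7, 1], [0, 1, 9]]` for hypothetical classes A, B, C and the mapping A, B to "dry" and C to "wet", the class-level overall accuracy is 24/31 (about 77.4 %), while the merged 2 × 2 matrix `[[20, 1], [1, 9]]` gives 29/31 (about 93.5 %).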

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Burai, P.; Deák, B.; Valkó, O.; Tomor, T. Classification of Herbaceous Vegetation Using Airborne Hyperspectral Imagery. Remote Sens. 2015, 7, 2046-2066. https://doi.org/10.3390/rs70202046
