Figure 1.

Visualized examples of the effects of the terms “accuracy” and “precision” in mapping (the red polyline is the ground-truth building footprint): (a) neither accurate nor precise; (b) accurate only; (c) precise only; and (d) both accurate and precise.

Figure 2.

The overall workflow of the proposed framework.

Figure 3.

Sample road markings in the aerial images of the Hitotsubashi area in central Tokyo. Some of the problematic areas are highlighted by the red dotted rectangles.

Figure 4.

DSM reconstruction from ALS by 2.5D Delaunay triangulation: (a) input ALS point cloud (color denotes intensity); and (b) reconstructed DSM mesh (color denotes height).

Figure 5.

Generation of POM from the DSM: (a) overview of the buildings’ perspective occlusion map generation; (b) original aerial image; (c) generated POM; and (d) filtered result of the aerial image.

Figure 6.

Filtering moving vehicles from aerial image: (a) first aerial image; (b) enlarged view of some vehicles in the first image; (c) second aerial image; (d) enlarged view of the same areas in the second image; (e) vehicle filtering result; and (f) enlarged view of the same areas in the filtered result, showing both filtered vehicles and a non-filtered vehicle.

Figure 7.

Road marking extraction from an aerial image by adaptive thresholding: (a) a part of the original aerial image; and (b) result of the road marking extraction process.

Figure 8.

Road segmentation from an MMS point cloud: (a) original MMS point cloud consisting of buildings, trees, vehicles and road signs (RGB color is derived from the camera); and (b) the result of ground segmentation. The red points represent the ground, and the blue points are off-ground.

Figure 9.

The effect of range and incidence angle on the shape of the laser footprint and the power of the reflected signal. As the range increases and the incidence angle decreases, the area of the laser footprint increases, which degrades the sampling quality.

Figure 10.

Effect of increasing distance on the reflected intensity value of the asphalt surface. As the distance increases, the angle of incidence inherently decreases. The red curve is fitted to the relation between distance and intensity using a nonlinear least squares method.

Figure 11.

Intensity calibration of MMS point cloud: (a) original intensity of the MMS point cloud; and (b) calibrated intensity using the proposed method.

Figure 12.

Road marking extraction from MMS point cloud: (a) original MMS point cloud (the color represents the original intensity); and (b) the result of road marking extraction.

Figure 13.

Generation of the Gaussian distribution from the reference data: (a) estimated 1D Gaussian distribution of the sample points; (b) estimated 2D Gaussian distribution of the sample points; (c) extracted aerial road markings; and (d) generated NDT map with a 2 m grid size.
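
The construction of an NDT map like the one in this figure can be illustrated with a minimal sketch, assuming the extracted road markings are available as 2D points in metres. The function name `build_ndt_map` and the `min_points` cutoff are hypothetical and not taken from the paper; only the 2 m grid size comes from the caption.

```python
from collections import defaultdict
from math import floor


def build_ndt_map(points, grid_size=2.0, min_points=3):
    """Fit one 2D Gaussian (mean, covariance) per occupied grid cell.

    points: iterable of (x, y) tuples in metres.
    min_points: assumed cutoff; cells with fewer points are skipped
    because their covariance would be poorly defined.
    """
    # Group the points by the grid cell they fall into.
    cells = defaultdict(list)
    for x, y in points:
        cells[(floor(x / grid_size), floor(y / grid_size))].append((x, y))

    ndt_map = {}
    for key, pts in cells.items():
        n = len(pts)
        if n < min_points:
            continue
        # Sample mean of the cell.
        mx = sum(p[0] for p in pts) / n
        my = sum(p[1] for p in pts) / n
        # Unbiased sample covariance of the cell.
        sxx = sum((p[0] - mx) ** 2 for p in pts) / (n - 1)
        syy = sum((p[1] - my) ** 2 for p in pts) / (n - 1)
        sxy = sum((p[0] - mx) * (p[1] - my) for p in pts) / (n - 1)
        ndt_map[key] = ((mx, my), ((sxx, sxy), (sxy, syy)))
    return ndt_map
```

Each entry maps a cell index to a mean and a 2 × 2 covariance matrix, which is the per-cell information an NDT registration step evaluates.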

Figure 14.

Illustration of single laser scan and MMS survey.

Figure 15.

Concept of the dynamic sliding window.

Figure 16.

The procedure of defining the window length for the target patch. First, the initial window around the target patch is divided into a feature grid (left). Second, the occupied cells—those containing five or more points—are calculated (middle). The window is extended by adding new patches until the number of occupied cells meets or exceeds the required feature count (right).
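
The procedure in this caption can be sketched as follows, assuming each patch is a list of 2D road-marking points in metres. The function names are hypothetical, and growing the window by one patch on each side per step is an assumption, since the caption does not say on which side new patches are added.

```python
from math import floor


def count_occupied_cells(points, cell_size=1.0, min_points=5):
    """Count feature-grid cells that hold at least min_points points."""
    counts = {}
    for x, y in points:
        key = (floor(x / cell_size), floor(y / cell_size))
        counts[key] = counts.get(key, 0) + 1
    return sum(1 for n in counts.values() if n >= min_points)


def window_for_patch(patches, target, initial_len=60, required=400,
                     cell_size=1.0, min_points=5):
    """Return (start, end) patch indices, end exclusive, for the target patch."""
    # Initial window placed around the target patch.
    start = max(0, target - initial_len // 2)
    end = min(len(patches), start + initial_len)
    while True:
        pts = [p for patch in patches[start:end] for p in patch]
        if count_occupied_cells(pts, cell_size, min_points) >= required:
            break  # enough occupied cells
        if start == 0 and end == len(patches):
            break  # no patches left to add
        # Assumption: extend by one patch on each side.
        start = max(0, start - 1)
        end = min(len(patches), end + 1)
    return start, end
```

The defaults mirror the values listed in Table 2 (initial window of 60 patches, 1 m feature grid, 400 required features). Recounting the whole window at every step keeps the sketch short; an incremental count would be cheaper on long surveys.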

Figure 17.

MMS system description: Mitsubishi Electric’s MMS-K320 (bottom); and the configuration of two SICK LMS-511 laser scanners and RTK GPS receivers (top).

Figure 18.

Aerial surveillance system description: (a) the aircraft used for aerial data collection; and (b) the sensor setup for the aerial imagery.

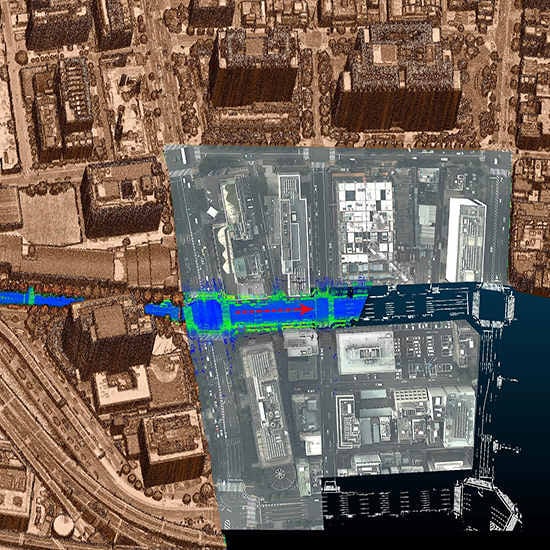

Figure 19.

The experimental area around Hitotsubashi intersection, a dense urban area in Chiyoda-ku, Tokyo, Japan.

Figure 20.

Routes of the nine surveys S01–S07 on the map. The colors show the estimated GPS error reported by MMS.

Figure 21.

Visual evaluation of the proposed method for survey No. 6: (a) survey route of the MMS on the aerial image (after registration); (b) enlarged view of the original MMS point cloud before registration (the red points are aerial road markings); and (c) enlarged view of the MMS point cloud after applying the proposed method (the red points are aerial road markings).

Figure 22.

Estimated GPS error by MMS-K320 for each of the nine surveys.

Figure 23.

Distribution of the GCPs collected in the experimental area utilizing the total station survey. The GCPs are from the corner points of road signs, which are clearly captured by both the MMS camera and the point cloud.

Figure 24.

The 2D error of survey No. 6 in the original data, after the landmark update, and after the proposed method.

Figure 25.

Generated Gaussian mixture models using different grid sizes.

Figure 26.

Length of the dynamic sliding window for survey No. 6.

Figure 27.

Effect of the patch length on the smoothness of the georeferenced MMS point cloud.

Figure 28.

Total 2D error of the nine surveys in the original data and by the proposed method.

Figure 29.

Total 2D error of the nine surveys by the landmark update method and by the proposed method.

Figure 30.

Areas where the road markings were repainted after 12 June 2014 (the date of the aerial image acquisition).

Table 1.

Details of the sensor platforms employed for the experiments.

| System | Component | Item | Value |
|---|---|---|---|
| MMS * | Laser scanner | Manufacturer (model) | SICK (LMS-511) |
| | | No. mounted | 2 single-layer lasers |
| | | Mounting direction | CH1: Front/Down (−25°), CH2: Front/Up (25°) |
| | | Intensity | Can be acquired |
| | | No. of points | 27,100 points/s (1 unit) |
| | | Range (max.) | 65 m |
| | | Viewing angle | 180° |
| | Camera | No. mounted | 3 |
| | | No. of pixels | 5 megapixels |
| | | Max. capture rate | 10 images/s |
| | | View angle | Wide viewing angle (h: 80°, v: 64°) |
| | Localization platform | Manufacturer | Mitsubishi Electric |
| | | Method | RTK-GPS/IMU/odometer |
| | | Self-positioning accuracy *1 | Within 6 cm (rms) *4,*5 |
| | | Relative accuracy data *2 | Within 1 cm (rms) |
| | | Absolute accuracy data *1,*3 | Within 10 cm (rms) |
| Aerial system | | Flying height | ~1700 m |
| | Laser scanner | Manufacturer (model) | Leica (ALS70) |
| | | Mounting direction | Direct-down |
| | | Intensity | Can be acquired |
| | | Max. measurement rate | 500 kHz |
| | | Point cloud density *6 | Less than 10 pts/m² |
| | | Field of view | ~75° |
| | Camera | Manufacturer (model) | Leica (RCD30) |
| | | Mounting direction | Direct-down |
| | | No. of pixels | 80 MP (10,320 × 7752 pixels) |
| | | Maximum frame rate | 0.8 fps |
| | | Forward overlap | 60% |
| | | GSD *7 | 12 cm/pixel |
| | Localization platform | Manufacturer (model) | Novatel (IMU-LN200) |
| | | Method | GNSS/IMU |

Table 2.

Summary of the parameters applied in our experiment.

| Stage | Parameter | Value | Description |
|---|---|---|---|
| POM generation | Height threshold | 5 m (above the ground) | Defined based on the maximum height of the vehicles |
| | Resolution | 12 cm/pixel | Equal to the GSD of the aerial image |
| MMS ground segmentation | Cloth resolution | 2 m | Larger grids do not cover the ground well (set empirically) |
| | Max. iterations | 1000 | More than 500 is suggested |
| | Classification threshold | 20 cm | If the cloth resolution is set correctly, small values give suitable results |
| Adaptive thresholding | Block size | 2.5 m (21 pixels) | Empirically defined |
| | Threshold | weighted mean − c | c = 17 (empirically defined) |
| Dynamic sliding window | Patch length | 0.5 m | Defined based on IMU performance to limit the error within 1 cm |
| | Window length | Dynamic | - |
| | Initial window length | 60 patches | Equal to 30 m (60 × 0.5 m) |
| | Feature grid size | 1 m | Empirically defined |
| | Required feature count | 400 | Empirically defined |
| NDT registration | NDT grid size | 1 m | Defined to be smaller than the distance between the lane markings and signs in the middle of the lanes |
| | NDT iterations | 30 | Should be high enough to let the NDT converge (set empirically) |

Table 3.

Evaluation result of survey No. 6.

| Method | Mean error (m) | Max. error (m) | Stdev (m) |
|---|---|---|---|
| Original data (GPS/IMU/Odometer) | 1.186 | 1.405 | 0.19 |
| Landmark updating | 0.208 | 0.664 | 0.11 |
| Proposed method | 0.102 | 0.206 | 0.05 |
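
The three statistics in this table can be reproduced from per-point errors with a short sketch, assuming each georeferenced point is paired with its ground control point in the same planar coordinate system. The function name `error_stats_2d` is hypothetical, and the use of the sample standard deviation is an assumption; the source does not state which estimator was used.

```python
from math import hypot
from statistics import mean, stdev


def error_stats_2d(estimated, reference):
    """Return (mean, max, stdev) of the horizontal error in metres.

    estimated, reference: equally long sequences of (x, y) tuples.
    Needs at least two pairs, because the sample standard deviation
    (an assumed choice) is undefined for a single value.
    """
    errors = [hypot(ex - rx, ey - ry)
              for (ex, ey), (rx, ry) in zip(estimated, reference)]
    return mean(errors), max(errors), stdev(errors)
```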

Table 4.

The proposed framework’s sensitivity to different NDT grid sizes.

| NDT grid size * (m) | 0.5 | 1 (proposed) | 2 | 4 |
|---|---|---|---|---|
| Mean error (m) | 5.01 | 0.11 | 1.20 | 1.22 |

Table 5.

Evaluation of the sensitivity of the framework to different initial window lengths.

| Initial window length * (patch) | 15 | 30 | 60 (proposed) | 120 |
|---|---|---|---|---|
| Min. window length (patch) | 39 | 39 | 60 | 120 |
| Mean window length (patch) | 59.22 | 59.16 | 64.67 | 120 |
| Max. window length (patch) | 95 | 95 | 95 | 120 |
| Mean error (m) | 0.80 | 0.52 | 0.11 | 3.34 |

Table 6.

Evaluation of the sensitivity of the framework to different numbers of required features.

| Required feature count * | 200 | 400 (proposed) | 600 | 800 |
|---|---|---|---|---|
| Min. window length (patch) | 60 | 60 | 69 | 90 |
| Mean window length (patch) | 60 | 64.67 | 90.13 | 119.68 |
| Max. window length (patch) | 60 | 95 | 130 | 165 |
| Mean error (m) | 2.37 | 0.11 | 0.72 | 3.58 |

Table 7.

Overall performance comparison.

| Method | Mean error (m) | Max. error (m) | Stdev (m) |
|---|---|---|---|
| Original data (GPS/IMU/Odometer) | 0.997 | 2.064 | 0.22 |
| Landmark updating | 0.208 | 0.72 | 0.16 |
| Proposed method | 0.116 | 0.277 | 0.07 |
| Original data for [35] (Graph SLAM) | 1.3 * | 1.93 * | - |
| Kümmerle et al. [35] | 0.85 * | 1.47 * | - |
| Original data for [38] (GNSS/IMU/Odometer) | 2.13 ** | 2.40 ** | 0.13 ** |
| Hussnain et al. [38] | 0.18 ** | 0.32 ** | - |