Predicting the Optimal Input Parameters for the Desired Print Quality Using Machine Learning

Abstract

1. Introduction

2. Neural Networks

2.1. Convolutional Neural Network (CNN)

2.2. MobileNet

2.3. InceptionV3

2.4. ResNet152

3. Methodology

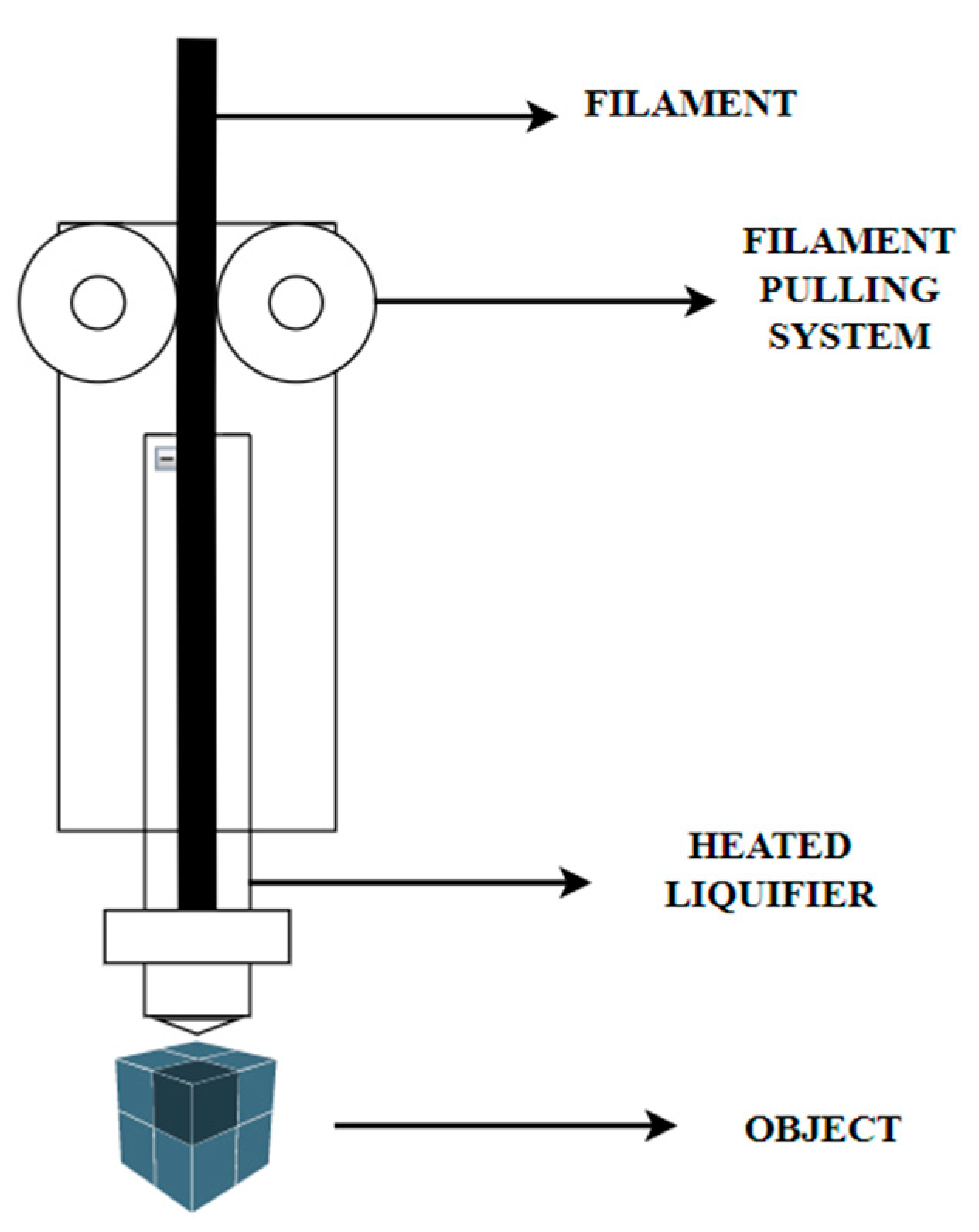

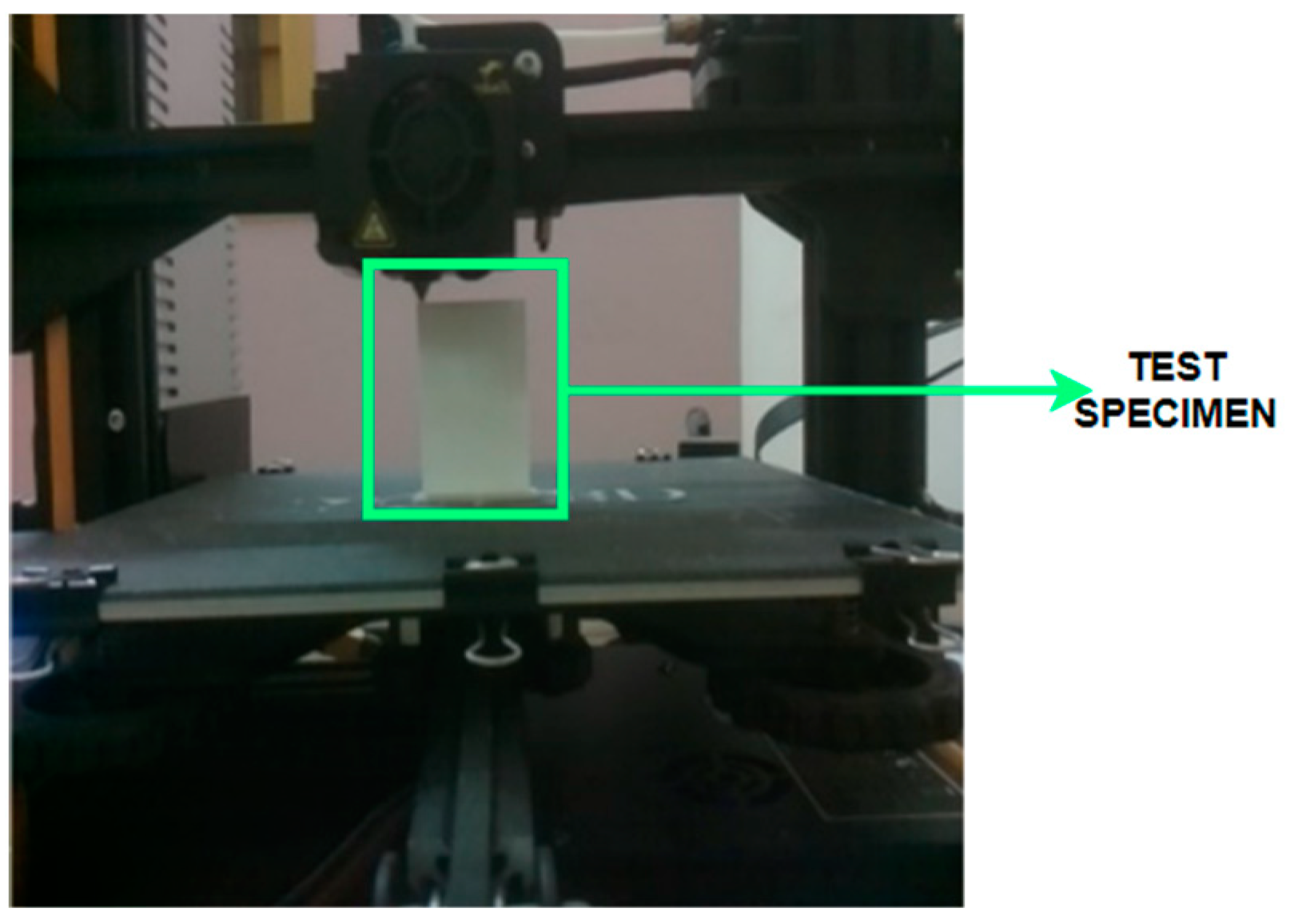

3.1. Fused Filament Fabrication (FFF)

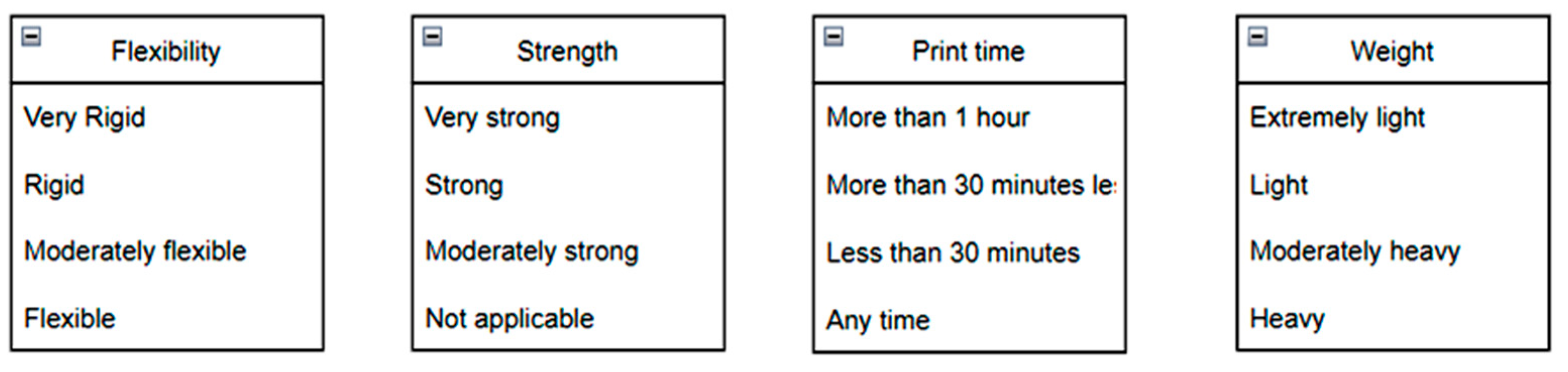

3.2. Design of Experiments—Taguchi

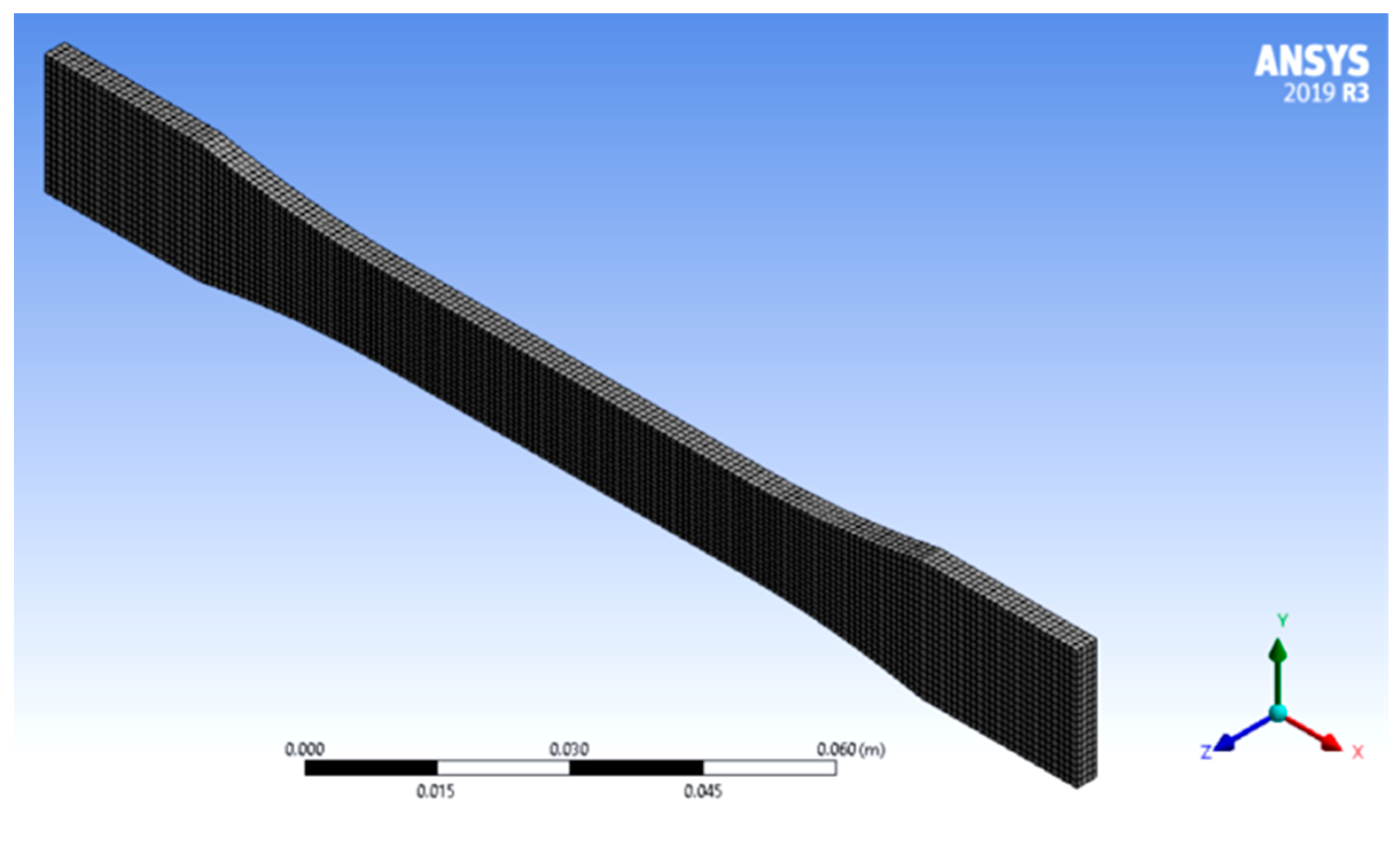

3.3. Modelling and Finite Element Analysis

3.4. System Design

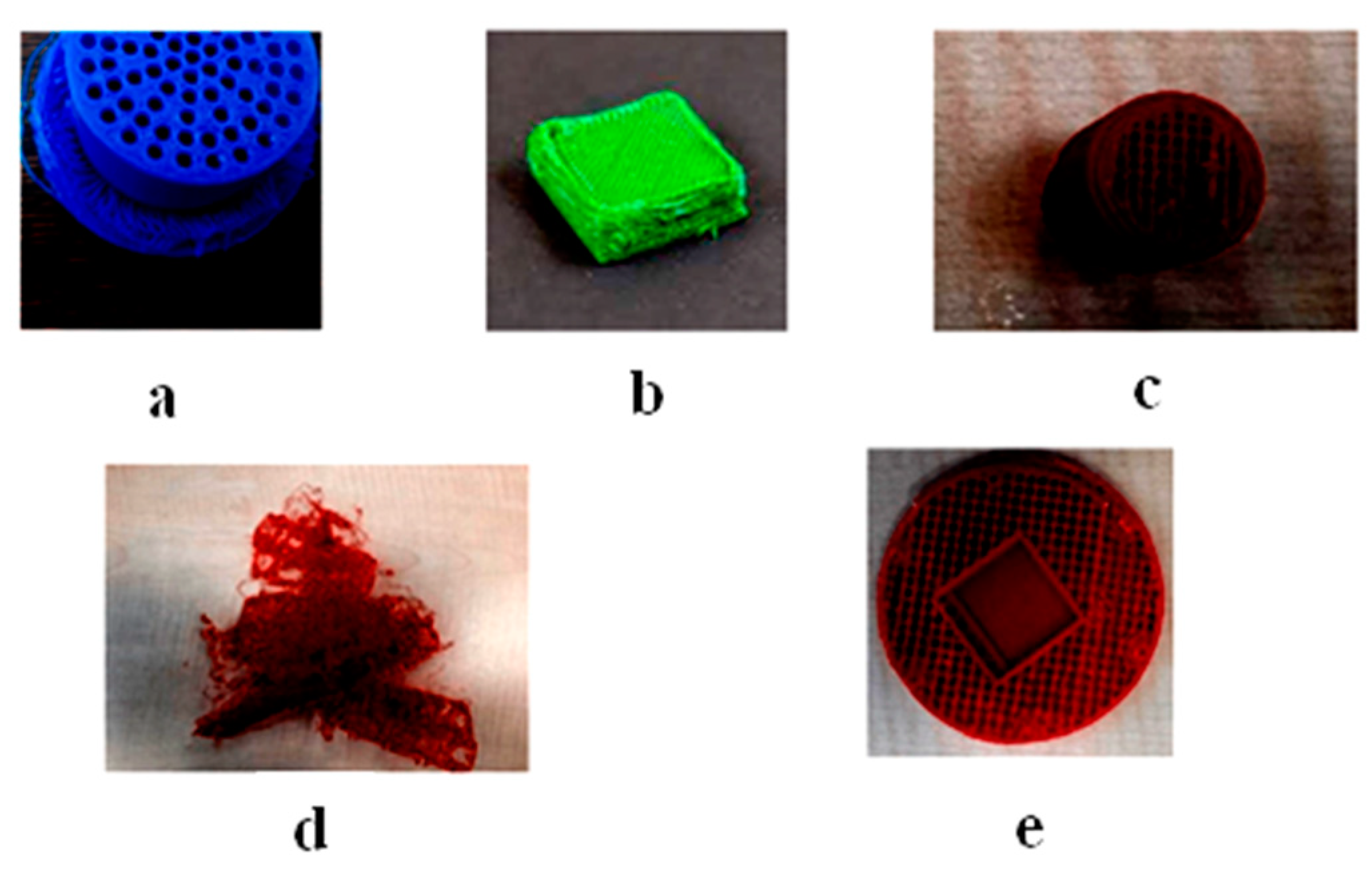

3.5. Training the Machine Learning Algorithm

3.6. Hyperparameters of Chosen Network Architectures

- Learning Rate: The learning rate scales the size of each weight update, i.e., how far the weights move each time they are updated (typically after every batch). It usually lies between 0.0 and 1.0. A lower learning rate generally means a longer training time, and a higher one a shorter time. However, extremely high values are undesirable because training may fail to converge, so the resulting accuracy may not be optimal.

- Optimizer: The optimizer is the algorithm (e.g., stochastic gradient descent or Adam) that uses the loss gradients to update the weights and other trainable parameters. The model's performance depends strongly on the choice of optimizer.

- Batch Size: The number of training examples processed before the model parameters are updated. It must be at least 1 and no larger than the number of training examples.

- Activation function: The function applied to a neuron's weighted input; it determines the neuron's output and maps it into a fixed range, e.g., (0, 1) for the sigmoid.

- Epochs: The number of complete passes the model makes through the entire training dataset during training.

- F1 Score: An evaluation metric (not a hyperparameter) equal to the harmonic mean of precision P and recall R, F1 = 2PR/(P + R); it summarizes a classifier's performance in a single number.
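To make these definitions concrete, here is a minimal plain-Python sketch showing where the learning rate, batch size, number of epochs and activation function enter a training loop, and how the F1 score is then computed. It trains a one-feature logistic-regression classifier, a deliberately tiny stand-in rather than the CNN, MobileNet, InceptionV3 or ResNet152 models compared in this study, and uses plain mini-batch gradient descent as the optimizer (an assumption; this excerpt does not name the optimizer). The toy data and the names `train_logistic` and `f1_score` are hypothetical.

```python
import math
import random


def sigmoid(z):
    # Activation function: squashes any real input into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs, ys, learning_rate=0.1, batch_size=4, epochs=100, seed=0):
    """Fit p = sigmoid(w * x + b) with plain mini-batch gradient descent.

    Sketch only: this optimizer is an assumed stand-in, not the study's setup.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):  # one epoch = one full pass over the training set
        rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            grad_w = grad_b = 0.0
            for i in batch:
                # For a sigmoid output with binary cross-entropy loss,
                # the gradient with respect to the logit is (p - y).
                err = sigmoid(w * xs[i] + b) - ys[i]
                grad_w += err * xs[i]
                grad_b += err
            # One parameter update per batch, scaled by the learning rate
            w -= learning_rate * grad_w / len(batch)
            b -= learning_rate * grad_b / len(batch)
    return w, b


def f1_score(y_true, y_pred):
    # Harmonic mean of precision and recall for binary labels (1 = positive)
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical toy data: one feature, two linearly separable classes
xs = [-3.0, -2.0, -1.0, -0.5, 0.5, 1.0, 2.0, 3.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = train_logistic(xs, ys)
preds = [1 if sigmoid(w * x + b) > 0.5 else 0 for x in xs]
print(f"w={w:.3f}, b={b:.3f}, F1={f1_score(ys, preds):.2f}")
```

Changing `learning_rate`, `batch_size` or `epochs` in the call is a quick way to see the trade-offs described in the list: a smaller batch gives more updates per epoch, and a much larger learning rate can make the updates overshoot.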

4. Results and Discussion

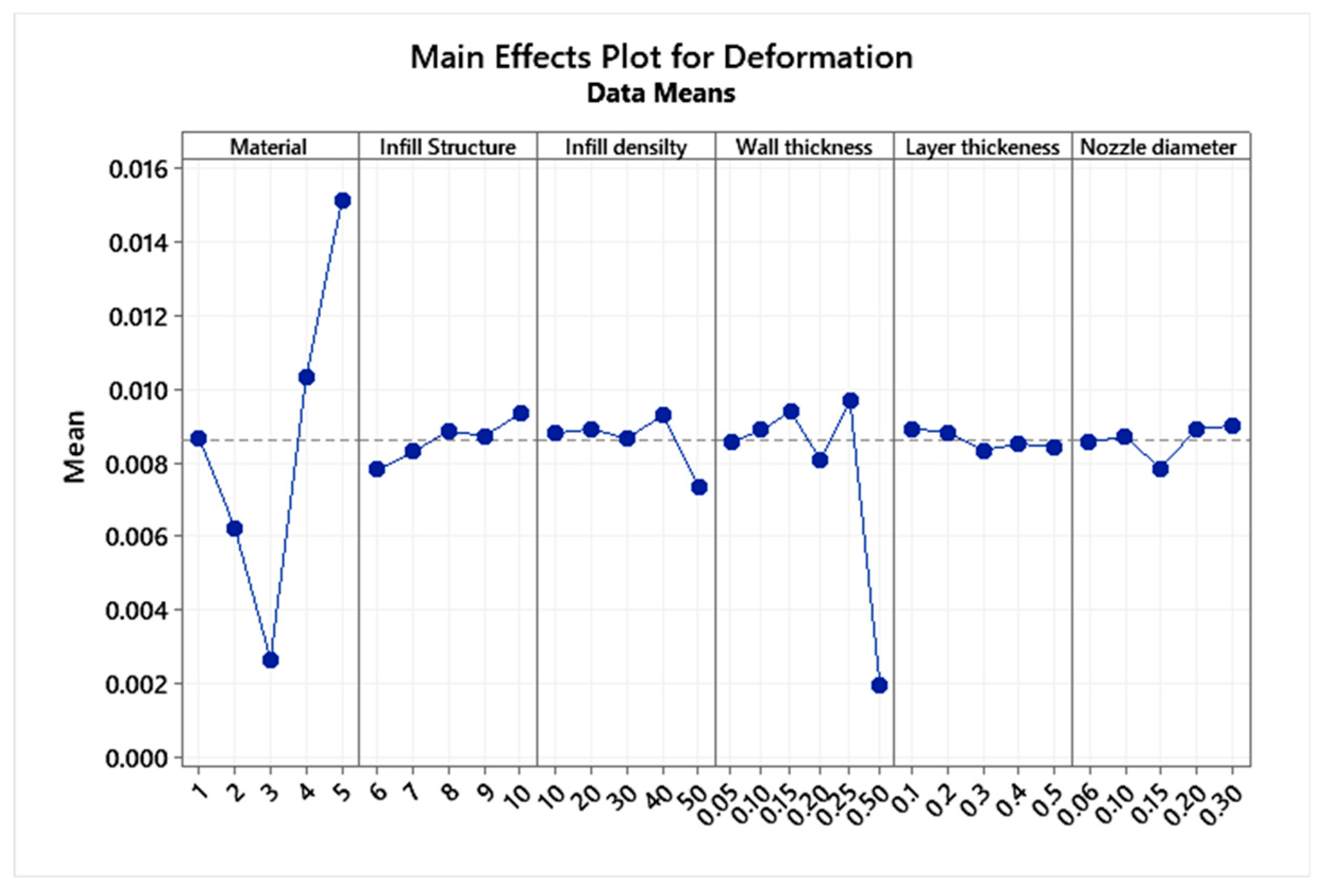

4.1. Regression Equation and Optimization

4.2. Algorithm Precision by a Confirmatory Test

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| RSM | Response Surface Methodology |
| FFF | Fused Filament Fabrication |
| PLA | Polylactic acid |
| ABS | Acrylonitrile Butadiene Styrene |
| PETG | Polyethylene terephthalate glycol |
| PVA | Polyvinyl alcohol |


| Thermoplastic | Melting Range (°C) |
|---|---|
| ABS | 180–230 |
| PLA | 210–250 |
| PETG | 220–250 |
| Nylon | 240–260 |

| Symbol | Parameter | Level 1 | Level 2 | Level 3 | Level 4 | Level 5 |
|---|---|---|---|---|---|---|
| X1 | Material | 1 | 2 | 3 | 4 | 5 |
| X2 | Infill Structure | 6 | 7 | 8 | 9 | 10 |
| X3 | Infill density (%) | 10 | 20 | 30 | 40 | 50 |
| X4 | Wall thickness (mm) | 0.05 | 0.10 | 0.15 | 0.20 | 0.25 |
| X5 | Layer thickness (mm) | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 |
| X6 | Nozzle diameter (mm) | 0.06 | 0.1 | 0.15 | 0.2 | 0.3 |

| Standard Order | Material | Infill Structure | Infill Density (%) | Wall Thickness (mm) | Layer Thickness (mm) | Nozzle Diameter (mm) |
|---|---|---|---|---|---|---|
| 1 | 1 | 6 | 10 | 0.050 | 0.100 | 0.060 |
| 2 | 1 | 7 | 20 | 0.100 | 0.200 | 0.100 |
| 3 | 1 | 8 | 30 | 0.150 | 0.300 | 0.150 |
| 4 | 1 | 9 | 40 | 0.200 | 0.400 | 0.200 |
| 5 | 1 | 10 | 50 | 0.250 | 0.500 | 0.300 |
| 6 | 2 | 6 | 20 | 0.150 | 0.400 | 0.300 |
| 7 | 2 | 7 | 30 | 0.200 | 0.500 | 0.060 |
| 8 | 2 | 8 | 40 | 0.250 | 0.100 | 0.100 |
| 9 | 2 | 9 | 50 | 0.050 | 0.200 | 0.150 |
| 10 | 2 | 10 | 10 | 0.100 | 0.300 | 0.200 |
| 11 | 3 | 6 | 30 | 0.250 | 0.200 | 0.200 |
| 12 | 3 | 7 | 40 | 0.050 | 0.300 | 0.300 |
| 13 | 3 | 8 | 50 | 0.100 | 0.400 | 0.060 |
| 14 | 3 | 9 | 10 | 0.150 | 0.500 | 0.100 |
| 15 | 3 | 10 | 20 | 0.200 | 0.100 | 0.150 |
| 16 | 4 | 6 | 40 | 0.100 | 0.500 | 0.150 |
| 17 | 4 | 7 | 50 | 0.150 | 0.100 | 0.200 |
| 18 | 4 | 8 | 10 | 0.200 | 0.200 | 0.300 |
| 19 | 4 | 9 | 20 | 0.250 | 0.300 | 0.060 |
| 20 | 4 | 10 | 30 | 0.050 | 0.400 | 0.100 |
| 21 | 5 | 6 | 50 | 0.200 | 0.300 | 0.100 |
| 22 | 5 | 7 | 10 | 0.250 | 0.400 | 0.150 |
| 23 | 5 | 8 | 20 | 0.050 | 0.500 | 0.200 |
| 24 | 5 | 9 | 30 | 0.100 | 0.100 | 0.300 |
| 25 | 5 | 10 | 40 | 0.150 | 0.200 | 0.060 |
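The 25-run table appears to follow the standard modular construction of a Taguchi L25 (5^6) orthogonal array: with i and j the 0-based level indices of Material and Infill Structure, the other four factors take level index (j + k·i) mod 5 for k = 1 to 4. This construction is our reading of the table, not something the text states. The minimal Python sketch below regenerates the runs from the level table and checks that every pair of factors is balanced; the names `LEVELS`, `l25_runs` and `is_orthogonal` are hypothetical.

```python
from itertools import combinations

# Factor levels X1-X6 from the level table (codes for Material and
# Infill Structure, % for infill density, mm for the other factors)
LEVELS = {
    "Material": [1, 2, 3, 4, 5],
    "Infill Structure": [6, 7, 8, 9, 10],
    "Infill Density": [10, 20, 30, 40, 50],
    "Wall Thickness": [0.05, 0.10, 0.15, 0.20, 0.25],
    "Layer Thickness": [0.1, 0.2, 0.3, 0.4, 0.5],
    "Nozzle Diameter": [0.06, 0.1, 0.15, 0.2, 0.3],
}


def l25_runs(levels):
    """Build the 25 runs of a 5-level, 6-factor orthogonal array (mod-5 construction).

    Assumed construction, inferred from the run table rather than stated in the text.
    """
    names = list(levels)
    runs = []
    for i in range(5):  # level index of column 1 (Material)
        for j in range(5):  # level index of column 2 (Infill Structure)
            # Columns 3-6 use level index (j + k*i) mod 5 for k = 1, 2, 3, 4
            idx = [i, j] + [(j + k * i) % 5 for k in range(1, 5)]
            runs.append({name: levels[name][n] for name, n in zip(names, idx)})
    return runs


def is_orthogonal(runs, levels):
    # Orthogonal: every pair of columns contains all 5 x 5 level combinations;
    # with 25 runs, 25 distinct pairs means each combination appears exactly once
    for a, b in combinations(levels, 2):
        if len({(run[a], run[b]) for run in runs}) != 25:
            return False
    return True


runs = l25_runs(LEVELS)
for order, run in enumerate(runs, start=1):
    print(order, *run.values())
print("orthogonal:", is_orthogonal(runs, LEVELS))
```

The pairwise balance is what lets a Taguchi design estimate each factor's main effect from only 25 prints instead of the 5^6 = 15,625 runs of a full factorial.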

| Model Parameters | CNN | ResNet152 | MobileNet | InceptionV3 |
|---|---|---|---|---|
| Epochs | 100 | 100 | 100 | 100 |
| Precision | 0.64 | 0.8 | 0.63 | 1.0 |
| Accuracy | 0.95 | 0.95 | 0.11 | 0.97 |
| F1 score | 0.78 | 0.64 | 0.08 | 1.0 |
| Time per epoch (s) | 75 | 341 | 49 | 35 |
| Validation split | 0.3 | 0.2 | 0.3 | 0.3 |
| Batch size | 64 | 32 | 64 | 32 |
| Loss / activation function | BCE | CCE | BCE | SM |
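The last row of the comparison table uses the abbreviations BCE, CCE and SM. Our reading, which the excerpt does not confirm, is binary cross-entropy, categorical cross-entropy and softmax; strictly, the first two are loss functions, while softmax is an output activation that is usually paired with categorical cross-entropy. The short plain-Python sketch below defines all three under that assumption; the function names are illustrative, not taken from any framework used in the study.

```python
import math

# Assumed meanings of the table's abbreviations:
# BCE = binary cross-entropy, CCE = categorical cross-entropy, SM = softmax


def binary_cross_entropy(y_true, p, eps=1e-12):
    # y_true is 0 or 1; p is the predicted probability of class 1 (e.g. a sigmoid output)
    p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))


def softmax(logits):
    # Turns a vector of raw scores into class probabilities that sum to 1
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot, probs, eps=1e-12):
    # one_hot marks the true class with 1; probs is typically a softmax output
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))


probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])
print(categorical_cross_entropy([1, 0, 0], probs))
print(binary_cross_entropy(1, 0.9))
```

Binary cross-entropy suits a single sigmoid output (two classes), whereas categorical cross-entropy compares a full probability vector, such as a softmax output, with a one-hot label.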

| Standard Order | Factor of Safety from FEA | Factor of Safety from Regression Equation | Error (%) | Max Equivalent Stress from FEA | Max Equivalent Stress from Regression Equation | Error (%) | Total Deformation from FEA | Total Deformation from Regression Equation (Equations (1)–(3)) | Error (%) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 5.300 | 5.000 | 5.600 | 14.700 | 15.000 | 2.000 | 0.008 | 0.008 | 3.600 |
| 2 | 4.500 | 4.600 | 2.200 | 12.100 | 12.000 | 0.800 | 0.009 | 0.009 | 3.000 |
| 3 | 5.100 | 5.000 | 1.900 | 14.300 | 15.000 | 4.600 | 0.009 | 0.009 | 2.900 |
| 4 | 4.800 | 5.000 | 4.100 | 13.600 | 14.000 | 2.800 | 0.009 | 0.009 | 1.700 |
| 5 | 5.700 | 5.800 | 1.700 | 15.200 | 16.000 | 5.000 | 0.008 | 0.008 | 1.200 |
| 6 | 6.600 | 6.900 | 4.500 | 19.800 | 20.000 | 1.000 | 0.007 | 0.007 | 2.400 |
| 7 | 7.000 | 7.000 | 0.000 | 20.400 | 20.000 | 2.000 | 0.005 | 0.005 | 1.700 |
| 8 | 6.400 | 6.000 | 6.200 | 17.300 | 17.000 | 1.700 | 0.007 | 0.007 | 1.600 |
| 9 | 8.900 | 9.000 | 1.100 | 22.500 | 22.000 | 2.200 | 0.005 | 0.005 | 1.900 |
| 10 | 6.100 | 6.000 | 1.600 | 15.900 | 15.500 | 2.500 | 0.007 | 0.008 | 0.500 |
| 11 | 9.700 | 10.000 | 3.000 | 30.000 | 29.000 | 3.400 | 0.002 | 0.002 | 2.000 |
| 12 | 9.100 | 9.000 | 1.000 | 26.300 | 27.000 | 2.500 | 0.003 | 0.003 | 4.100 |
| 13 | 10.000 | 10.000 | 0.000 | 30.200 | 30.000 | 0.600 | 0.002 | 0.002 | 5.500 |
| 14 | 8.900 | 9.000 | 1.100 | 24.600 | 25.000 | 1.600 | 0.004 | 0.004 | 2.700 |
| 15 | 9.300 | 9.000 | 3.200 | 27.100 | 27.000 | 0.300 | 0.003 | 0.003 | 2.900 |
| 16 | 4.400 | 4.500 | 2.200 | 11.000 | 11.500 | 4.300 | 0.010 | 0.010 | 4.100 |
| 17 | 4.100 | 4.000 | 2.400 | 10.300 | 10.000 | 3.000 | 0.010 | 0.010 | 1.900 |
| 18 | 3.800 | 4.000 | 5.200 | 9.900 | 10.000 | 1.000 | 0.011 | 0.011 | 0.900 |
| 19 | 4.400 | 4.500 | 2.200 | 10.900 | 11.000 | 0.900 | 0.010 | 0.010 | 0.300 |
| 20 | 4.000 | 4.000 | 0.000 | 10.100 | 10.000 | 1.000 | 0.011 | 0.011 | 0.900 |
| 21 | 3.300 | 3.400 | 3.000 | 9.700 | 10.000 | 3.000 | 0.012 | 0.013 | 4.800 |
| 22 | 2.000 | 2.000 | 0.000 | 8.200 | 8.000 | 2.500 | 0.014 | 0.014 | 2.100 |
| 23 | 2.400 | 2.500 | 4.100 | 9.100 | 9.000 | 1.100 | 0.016 | 0.016 | 1.200 |
| 24 | 2.300 | 2.400 | 4.300 | 8.600 | 9.000 | 4.400 | 0.016 | 0.016 | 1.800 |
| 25 | 3.000 | 3.000 | 0.000 | 9.600 | 10.000 | 4.000 | 0.018 | 0.018 | 2.800 |
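The Error columns appear to give the deviation of the regression value from the FEA value as a percentage of the FEA value: for run 2, |4.5 - 4.6| / 4.5 × 100 ≈ 2.2, matching the tabulated 2.200. This formula is inferred from the numbers rather than stated in the source. Because the tabulated values are rounded, recomputing from them does not reproduce every reported error (run 1 gives about 5.7 rather than 5.6, and several deformation errors are non-zero even where the rounded values coincide). A minimal Python sketch, with hypothetical function names:

```python
def percent_error(fea_value, predicted_value):
    """Deviation of a regression prediction from the FEA value, in % of the FEA value.

    Assumed definition, inferred from the tabulated errors.
    """
    return abs(fea_value - predicted_value) / abs(fea_value) * 100.0


def mean_percent_error(pairs):
    # pairs: (FEA value, regression value) tuples for one response
    return sum(percent_error(f, p) for f, p in pairs) / len(pairs)


# (FEA, regression) factor-of-safety pairs for runs 1-5, copied from the table
fos_pairs = [(5.3, 5.0), (4.5, 4.6), (5.1, 5.0), (4.8, 5.0), (5.7, 5.8)]
for run, (fea, reg) in enumerate(fos_pairs, start=1):
    print(run, round(percent_error(fea, reg), 1))
print("mean:", round(mean_percent_error(fos_pairs), 2))
```

Averaging the per-run errors in this way gives a single figure for how closely each regression equation tracks the FEA results across the design.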

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ratnavel, R.; Viswanath, S.; Subramanian, J.; Selvaraj, V.K.; Prahasam, V.; Siddharth, S. Predicting the Optimal Input Parameters for the Desired Print Quality Using Machine Learning. Micromachines 2022, 13, 2231. https://doi.org/10.3390/mi13122231
