Tactile Perception Object Recognition Based on an Improved Support Vector Machine

Abstract

1. Introduction

2. An SVM Optimization Method Based on Tactile Perception Target Recognition

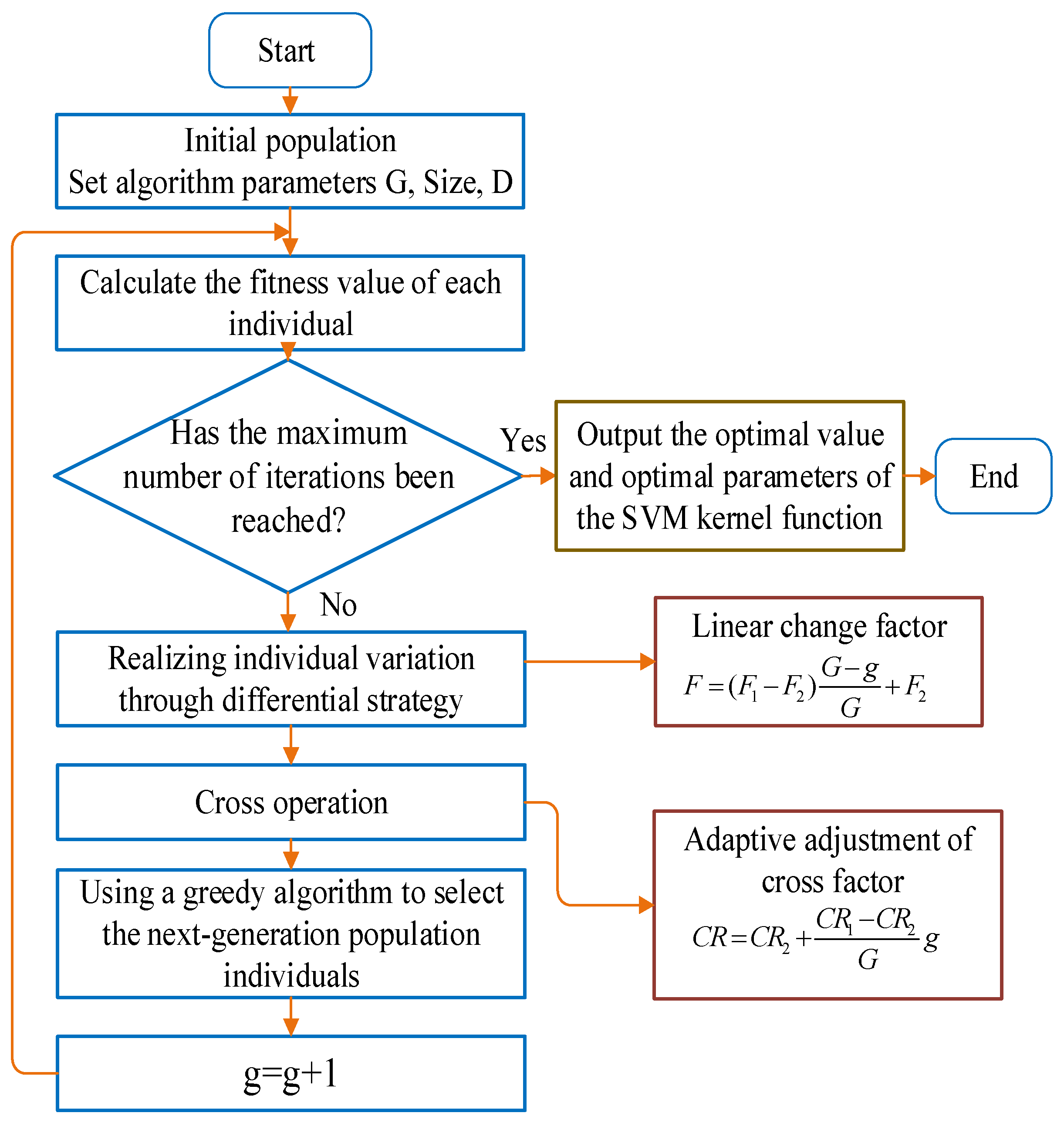

2.1. Improved Differential Evolution Algorithm

2.1.1. The Original Differential Evolution Algorithm
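
As a point of reference for this subsection, the classic differential evolution scheme (commonly written DE/rand/1/bin) can be sketched as below. This is a minimal illustration of the textbook algorithm, not the authors' improved variant; the function name `differential_evolution`, the parameter defaults (`F=0.5`, `CR=0.9`, `pop_size=20`), and the `sphere` test objective are all assumptions chosen for the example.

```python
import random


def differential_evolution(objective, bounds, pop_size=20, F=0.5, CR=0.9,
                           generations=100, seed=0):
    """Textbook DE/rand/1/bin minimiser (illustrative sketch only)."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Initialise the population uniformly inside the box constraints.
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    fitness = [objective(x) for x in pop]

    for _ in range(generations):
        new_pop, new_fit = list(pop), list(fitness)
        for i in range(pop_size):
            # Mutation: combine three distinct individuals other than i.
            candidates = [j for j in range(pop_size) if j != i]
            r1, r2, r3 = rng.sample(candidates, 3)
            mutant = [pop[r1][d] + F * (pop[r2][d] - pop[r3][d])
                      for d in range(dim)]
            # Clip the mutant back into the search box.
            mutant = [min(max(v, lo), hi)
                      for v, (lo, hi) in zip(mutant, bounds)]
            # Binomial crossover: j_rand guarantees at least one mutant gene.
            j_rand = rng.randrange(dim)
            trial = [mutant[d] if (rng.random() < CR or d == j_rand)
                     else pop[i][d]
                     for d in range(dim)]
            # Greedy selection: keep the trial only if it is no worse.
            f_trial = objective(trial)
            if f_trial <= fitness[i]:
                new_pop[i], new_fit[i] = trial, f_trial
        pop, fitness = new_pop, new_fit

    best = min(range(pop_size), key=fitness.__getitem__)
    return pop[best], fitness[best]


def sphere(x):
    """Hypothetical test objective with its minimum at the origin."""
    return sum(v * v for v in x)


best_x, best_f = differential_evolution(sphere, [(-5.0, 5.0)] * 2)
```

To tune a classifier with such a search, `objective` would typically map a candidate vector to a validation error; that wiring is left out here because it depends on details of the method that this sketch does not assume.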

2.1.2. Improved Differential Evolution Algorithm (DE)

2.2. Improved Differential Evolution Algorithm (DE) to Optimize Support Vector Machine (SVM)

2.2.1. The Original Support Vector Machine (SVM)
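
For orientation, the standard soft-margin SVM can be written as follows. The notation ($w$, $b$, $\xi_i$, $C$, $\phi$, $\gamma$, $\alpha_i$) is the commonly used one and is an assumption here, as is the choice of an RBF kernel; neither is taken from the paper.

$$
\min_{w,\,b,\,\xi}\ \frac{1}{2}\lVert w\rVert^{2} + C\sum_{i=1}^{n}\xi_i
\quad \text{s.t.}\quad y_i\left(w^{\top}\phi(x_i) + b\right) \ge 1 - \xi_i,\quad \xi_i \ge 0,\quad i = 1,\dots,n
$$

$$
K(x_i, x_j) = \exp\left(-\gamma\,\lVert x_i - x_j\rVert^{2}\right),
\qquad
f(x) = \operatorname{sign}\left(\sum_{i=1}^{n}\alpha_i\, y_i\, K(x_i, x) + b\right)
$$

Under these assumptions, the penalty $C$ and the kernel width $\gamma$ are the hyperparameters that a search procedure such as differential evolution would usually be asked to tune.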

2.2.2. Improved Differential Evolution Algorithm to Optimize Support Vector Machine (DESVM)

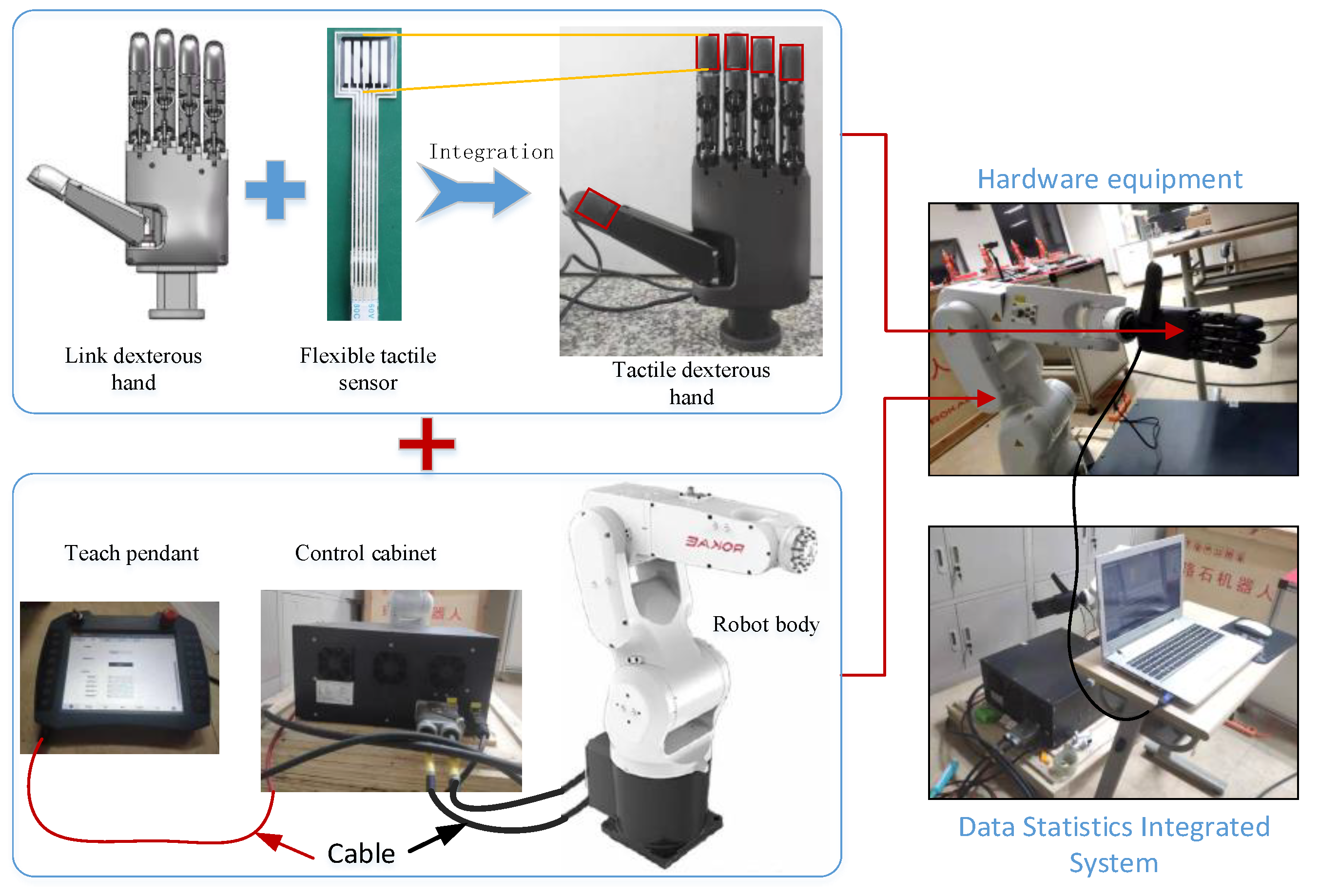

3. Tactile Data Collection Platform for the Dexterous Hand

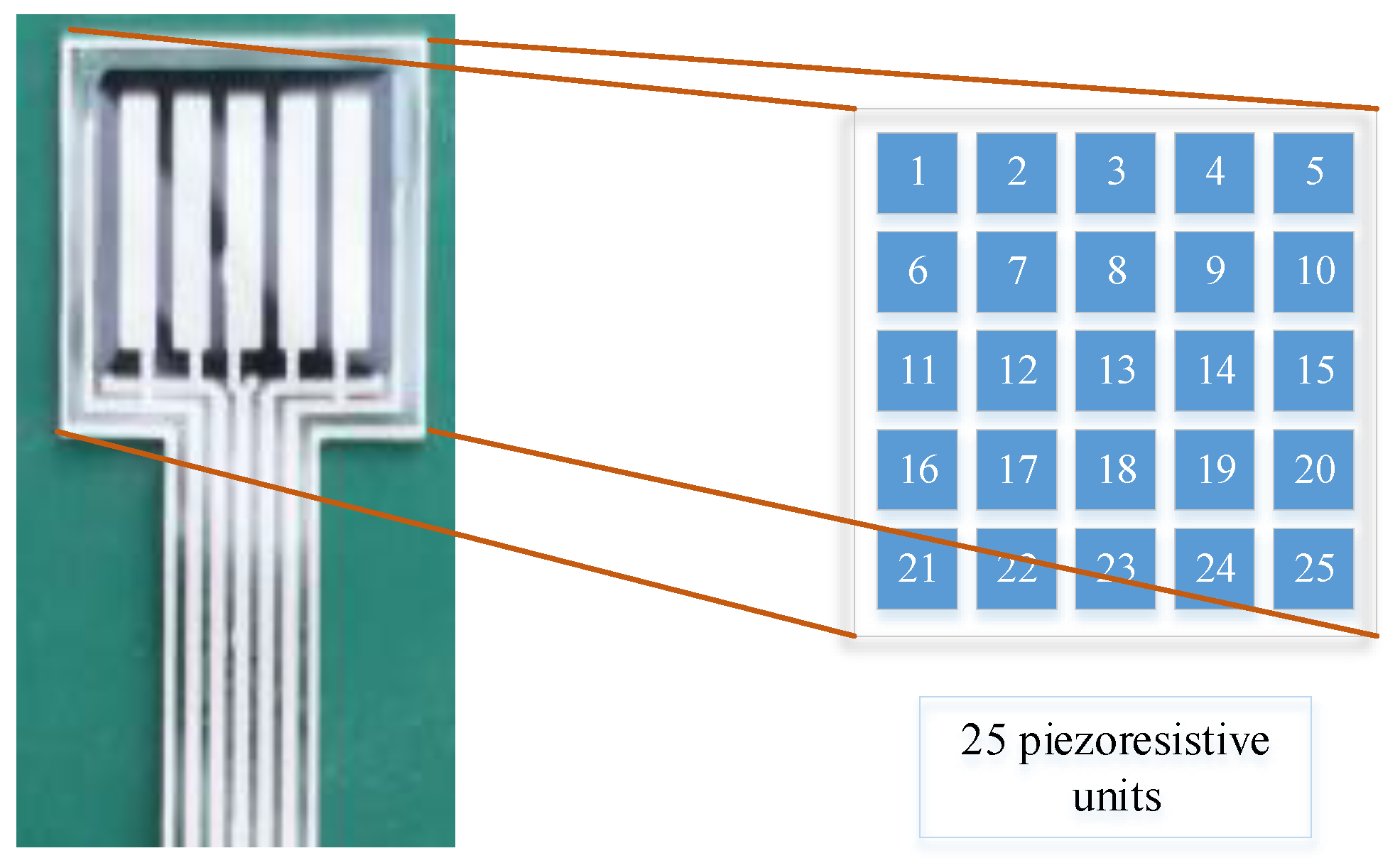

3.1. Construction of the Data Collection Platform

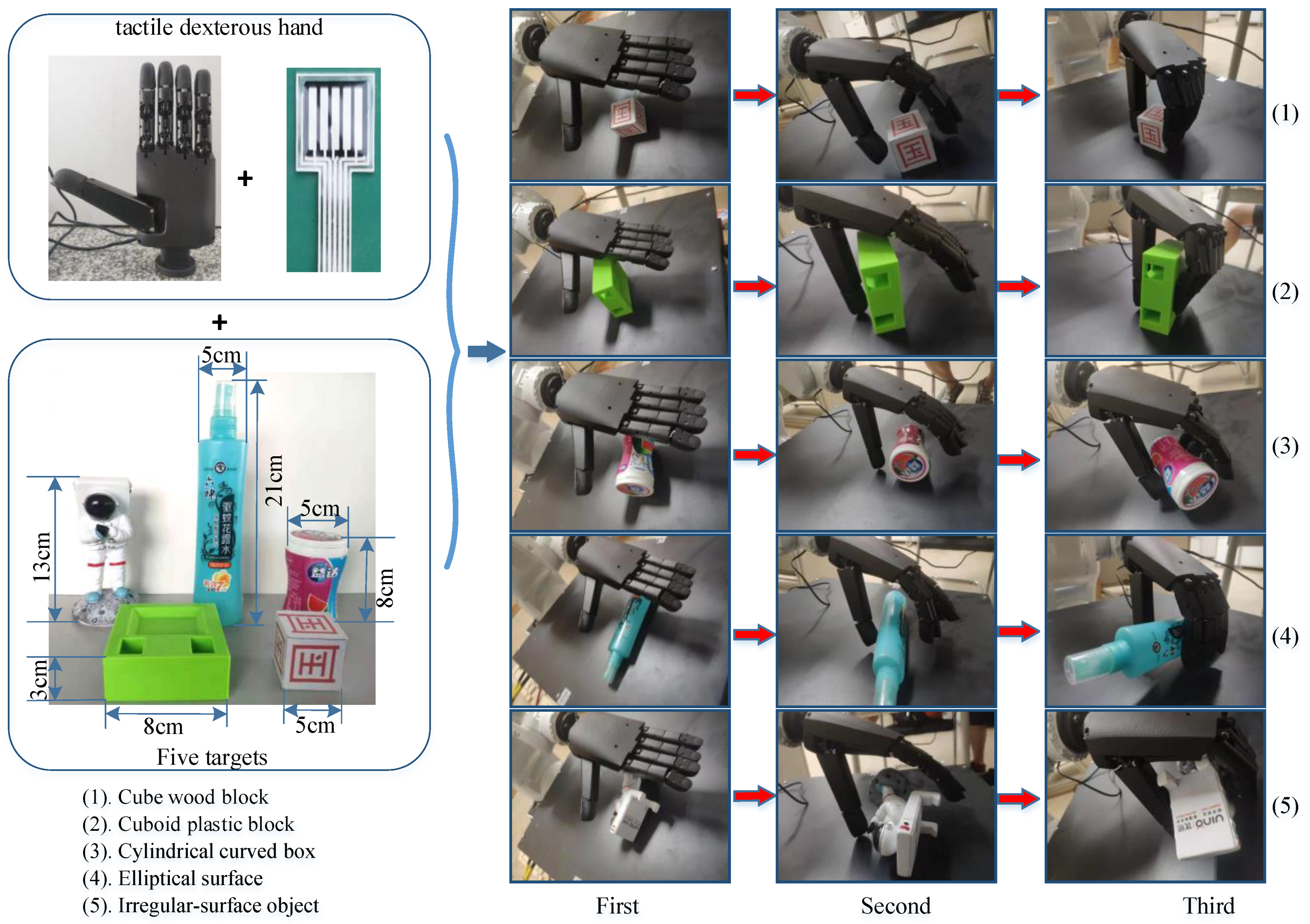

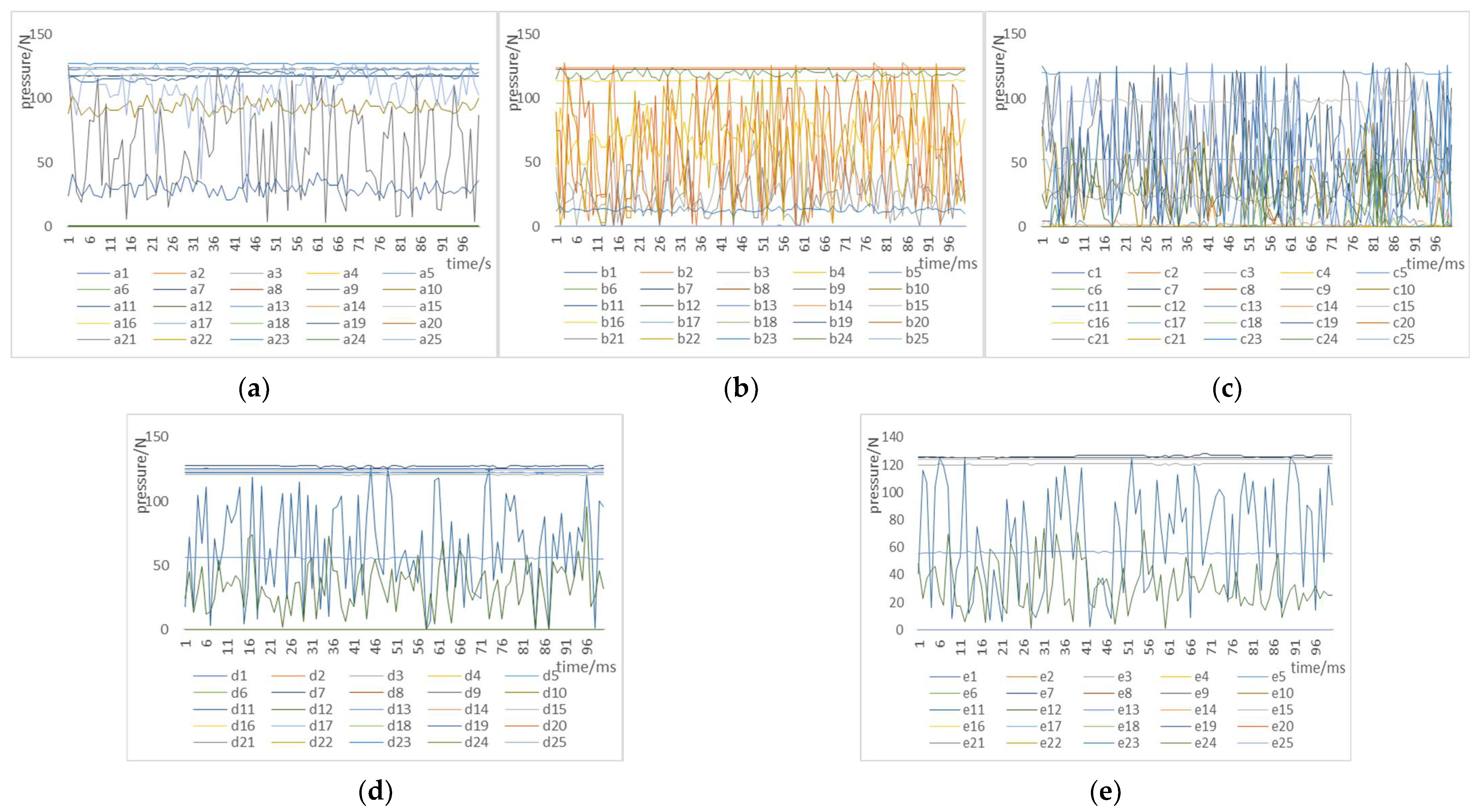

3.2. Tactile Data Collection Experiment

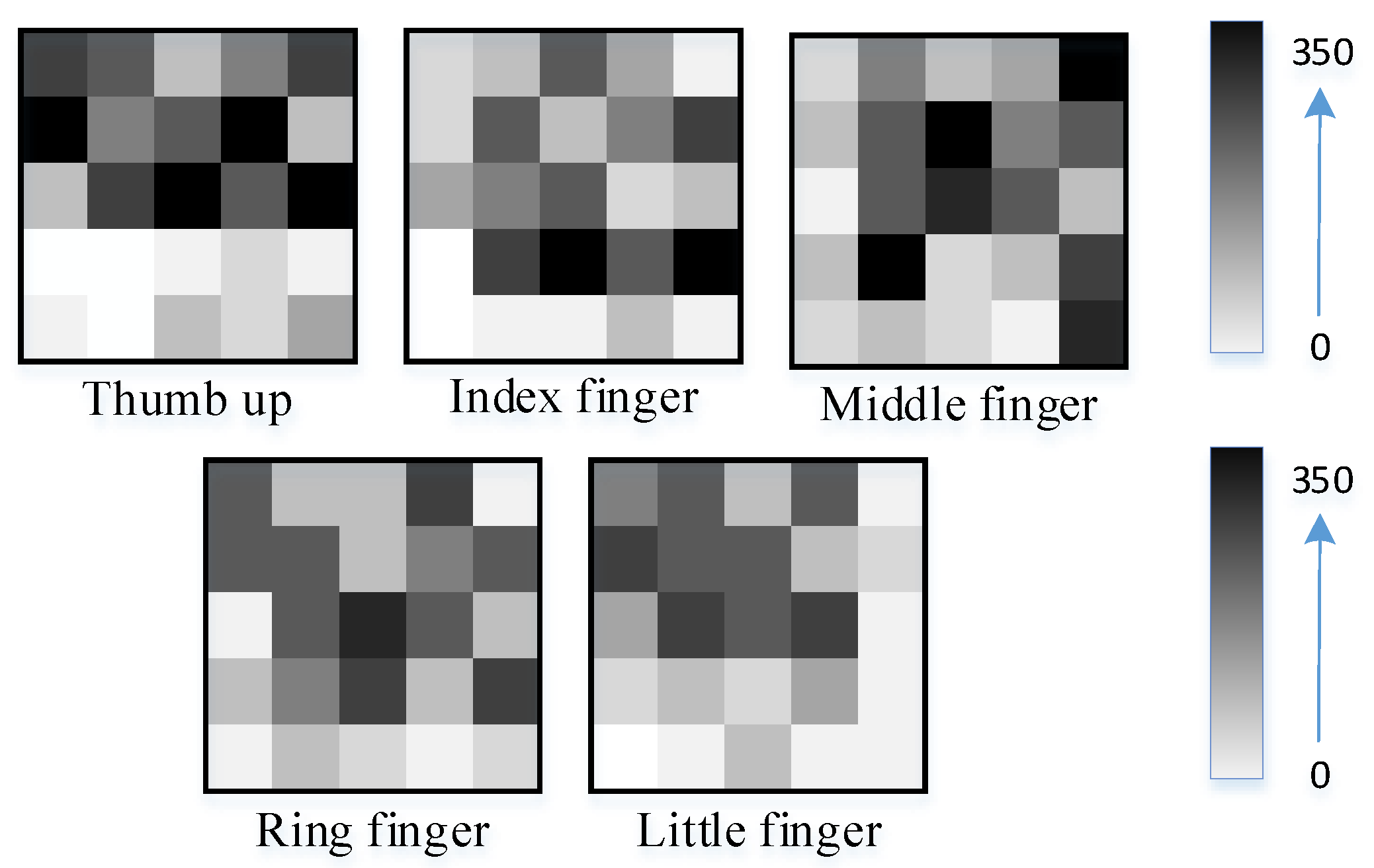

3.3. Data Preprocessing

4. Experimental Results and Analysis

4.1. Division of the Tactile Perception Dataset

4.2. Comprehensive Evaluation Method

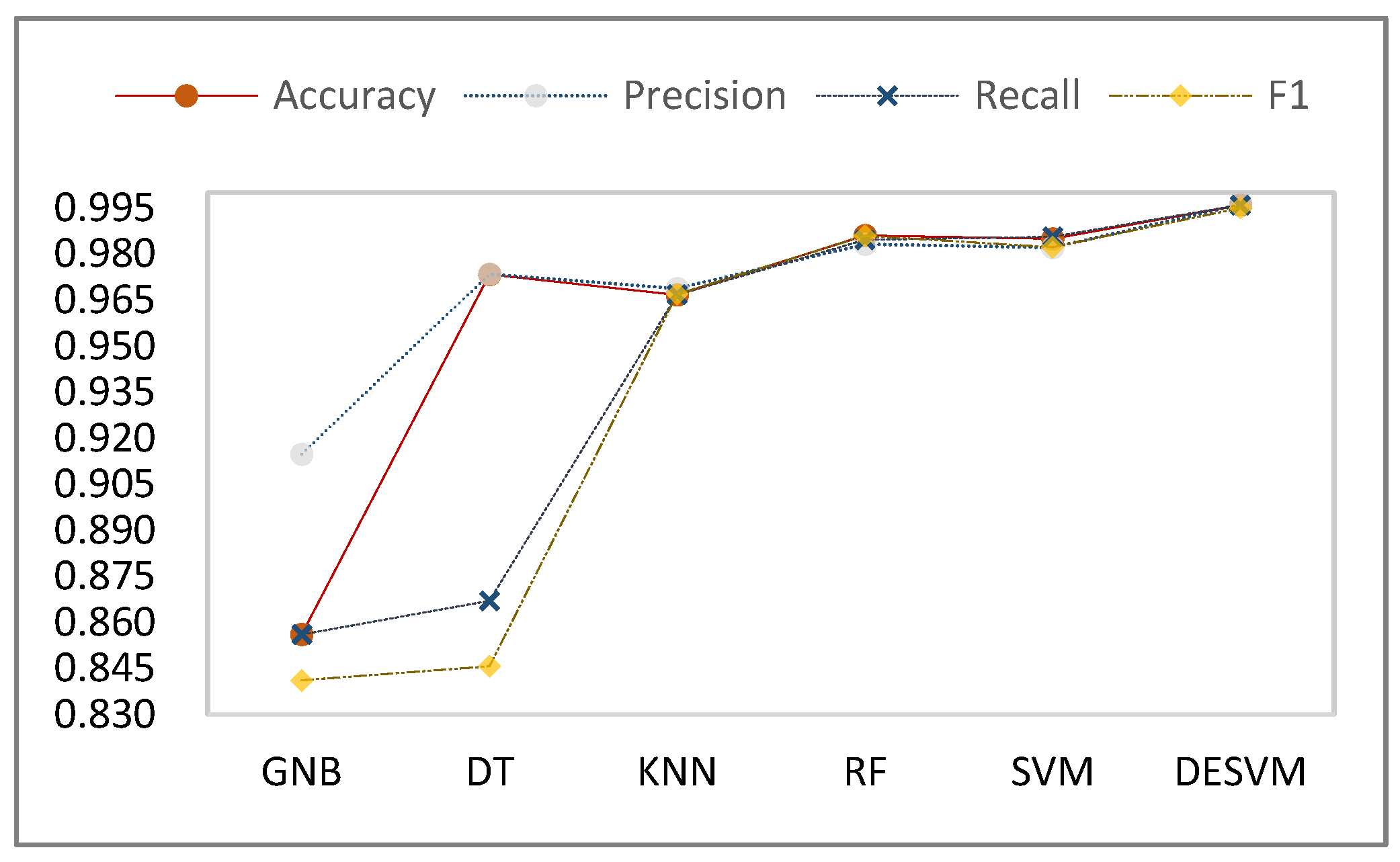

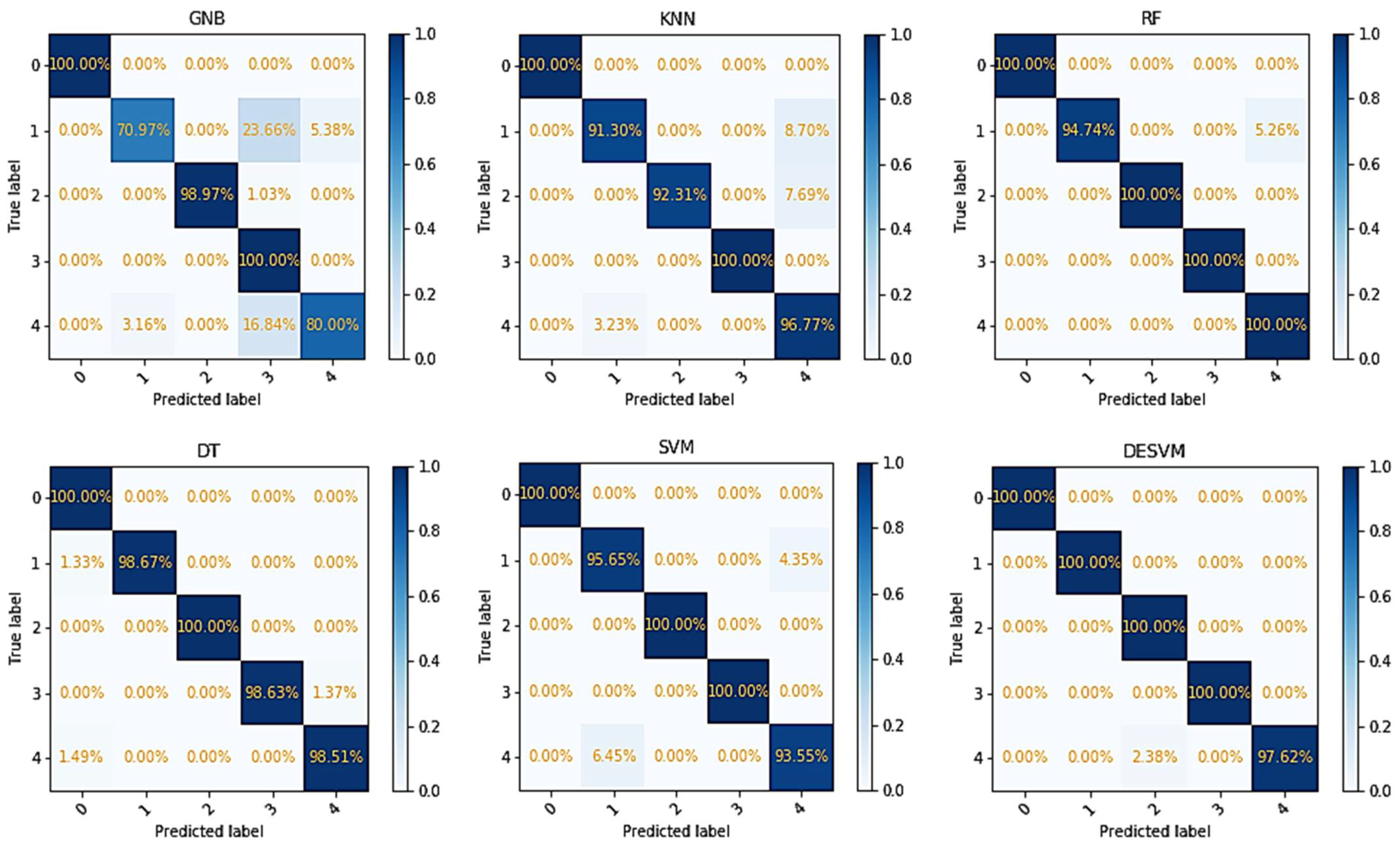

4.3. Object Recognition Result Analysis

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Objects | Cube Wood Block | Cuboid Plastic Block | Cylindrical Curved Box | Elliptical Surface | Irregular Surface Objects |
|---|---|---|---|---|---|
| Training set | 70 | 70 | 70 | 70 | 70 |
| Testing set | 30 | 30 | 30 | 30 | 30 |
| Total | Training set: 350, testing set: 150 | | | | |
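
The per-class 70/30 division in the table above corresponds to a stratified split. The sketch below shows one way to produce such a split; the helper name `stratified_split`, the integer class labels, and the fixed random seed are assumptions for illustration and do not describe how the authors partitioned their data.

```python
import random
from collections import defaultdict


def stratified_split(labels, n_train_per_class, seed=0):
    """Return (train_indices, test_indices) with a fixed train count per class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)  # randomise which samples go to training
        train_idx.extend(indices[:n_train_per_class])
        test_idx.extend(indices[n_train_per_class:])
    return sorted(train_idx), sorted(test_idx)


# Hypothetical label vector: 5 object classes with 100 samples each.
labels = [c for c in range(5) for _ in range(100)]
train_idx, test_idx = stratified_split(labels, n_train_per_class=70)
print(len(train_idx), len(test_idx))  # 350 150
```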

| Algorithm | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|
| GNB | 0.8560 | 0.9148 | 0.8560 | 0.8411 |
| KNN | 0.9667 | 0.9687 | 0.9667 | 0.9669 |
| DT | 0.9733 | 0.9735 | 0.8670 | 0.8456 |
| RF | 0.9860 | 0.9832 | 0.9846 | 0.9859 |
| SVM | 0.9850 | 0.9821 | 0.9856 | 0.9822 |
| DESVM | 0.9960 | 0.9961 | 0.9960 | 0.9950 |
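
The four columns in the table above are standard multi-class metrics. A minimal sketch of how they can be computed from true and predicted labels follows; the macro-averaging over classes, the helper name `macro_metrics`, and the toy label lists are assumptions, since the averaging scheme behind the reported values is not stated here.

```python
def macro_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1."""
    classes = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)

    precisions, recalls, f1s = [], [], []
    for c in classes:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)

    k = len(classes)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / k,
        "recall": sum(recalls) / k,
        "f1": sum(f1s) / k,
    }


# Toy labels for illustration only (not data from the experiments).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 2]
scores = macro_metrics(y_true, y_pred)
```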

| Algorithm | Avg. Accuracy | Std. Accuracy |
|---|---|---|
| GNB | 0.882 | 0.032 |
| KNN | 0.964 | 0.022 |
| DT | 0.978 | 0.025 |
| RF | 0.988 | 0.008 |
| SVM | 0.986 | 0.017 |
| DESVM | 0.996 | 0.006 |
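
Average and standard deviation of accuracy, as summarized in the table above, are typically taken over repeated runs or folds. The sketch below shows the arithmetic on a made-up list of accuracies; the values are purely illustrative, and whether the reported figures use the population or the sample standard deviation is not stated here, so both are computed.

```python
import statistics

# Hypothetical per-run accuracies (illustrative values, not from the experiments).
run_accuracies = [0.95, 0.97, 0.96, 0.98, 0.94]

avg_accuracy = statistics.mean(run_accuracies)
std_population = statistics.pstdev(run_accuracies)  # divides by n
std_sample = statistics.stdev(run_accuracies)       # divides by n - 1

print(f"avg={avg_accuracy:.3f} pstdev={std_population:.3f} stdev={std_sample:.3f}")
```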

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, X.; Li, S.; Yang, J.; Wang, Y.; Huang, Z.; Zhang, J. Tactile Perception Object Recognition Based on an Improved Support Vector Machine. Micromachines 2022, 13, 1538. https://doi.org/10.3390/mi13091538
