Infrared UAV Target Detection Based on Continuous-Coupled Neural Network

Abstract

1. Introduction

- (1) It introduces a primary visual cortex model, the continuous-coupled neural network (CCNN), into infrared image processing and analyzes the status characteristics of the CCNN when processing infrared images.

- (2) It proposes a new framework for the automatic detection of UAVs in infrared images. The framework automatically configures its parameters from the input image, groups image pixel values through the iterative process of the CCNN, reconstructs the image from the output values, and uses the entropy of the image to control the number of iterations.

- (3) The proposed detection framework was tested in complex environments, using infrared images that contain complex buildings and cloud cover, to verify its effectiveness. On these UAV infrared images, the framework achieved an average IoU of 74.79% (up to 97.01%), indicating that detection tasks can be carried out automatically without human intervention.
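Item (2) describes an iterate-and-stop loop. The pure-Python sketch below shows one way such a loop could look. It assumes the commonly cited CCNN form: PCNN-style feeding (F), linking (L), internal activity U = F(1 + βL) and a dynamic threshold E, with the PCNN's hard step output replaced by a sigmoid so that the output Y is continuous. The 3×3 coupling kernel, every parameter value, and the stopping rule (keep the output at which its histogram entropy stops increasing) are hypothetical choices for illustration; the paper's automatic, image-dependent parameter configuration is not reproduced here.

```python
import math

# Hypothetical 3x3 coupling weights (centre excluded); not the paper's values.
KERNEL = ((0.5, 1.0, 0.5),
          (1.0, 0.0, 1.0),
          (0.5, 1.0, 0.5))


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def couple(Y):
    """Kernel-weighted sum of each pixel's neighbouring outputs (zero padding)."""
    h, w = len(Y), len(Y[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ni, nj = i + di, j + dj
                    if 0 <= ni < h and 0 <= nj < w:
                        s += KERNEL[di + 1][dj + 1] * Y[ni][nj]
            out[i][j] = s
    return out


def entropy(Y, bins=16):
    """Shannon entropy (bits) of the histogram of an image with values in [0, 1]."""
    counts = [0] * bins
    for row in Y:
        for v in row:
            counts[min(int(v * bins), bins - 1)] += 1
    n = sum(counts)
    return -sum(c / n * math.log2(c / n) for c in counts if c)


def ccnn(S, max_iter=20, aF=0.1, aL=1.0, aE=0.7,
         VF=0.5, VL=0.2, VE=2.0, beta=0.5, E0=1.0):
    """Iterate an assumed CCNN form on stimulus S (values in [0, 1]).

    All parameters are hypothetical. Returns the output kept under the
    assumed entropy stopping rule and the number of iterations kept.
    """
    h, w = len(S), len(S[0])
    F = [[0.0] * w for _ in range(h)]
    L = [[0.0] * w for _ in range(h)]
    E = [[E0] * w for _ in range(h)]
    Y = [[0.0] * w for _ in range(h)]
    best, best_h, kept = Y, -1.0, 0
    for n in range(1, max_iter + 1):
        K = couple(Y)  # neighbour coupling from the previous output Y[n-1]
        Y_new = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                F[i][j] = math.exp(-aF) * F[i][j] + VF * K[i][j] + S[i][j]
                L[i][j] = math.exp(-aL) * L[i][j] + VL * K[i][j]
                U = F[i][j] * (1.0 + beta * L[i][j])              # internal activity
                E[i][j] = math.exp(-aE) * E[i][j] + VE * Y[i][j]  # threshold driven by Y[n-1]
                Y_new[i][j] = sigmoid(U - E[i][j])                # continuous output
        Y = Y_new
        H = entropy(Y)
        if H <= best_h:  # entropy stopped increasing: keep the previous output
            break
        best, best_h, kept = Y, H, n
    return best, kept
```

With `max_iter=1` the kept output is simply `sigmoid(S - exp(-aE) * E0)`, so brighter pixels respond more strongly; later iterations let neighbouring responses and the rising threshold reshape that map until the entropy criterion halts the loop.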

2. Related Works

2.1. UAV Detection Method Based on Machine Learning

2.2. UAV Detection Method Based on Deep Learning

2.3. Brain-Inspired Computing

3. Method

3.1. Continuous-Coupled Neural Network

3.2. Image-Processing Framework

3.2.1. Erosion–Dilation Algorithm

3.2.2. Minimum Bounding Rectangle

4. Experiment

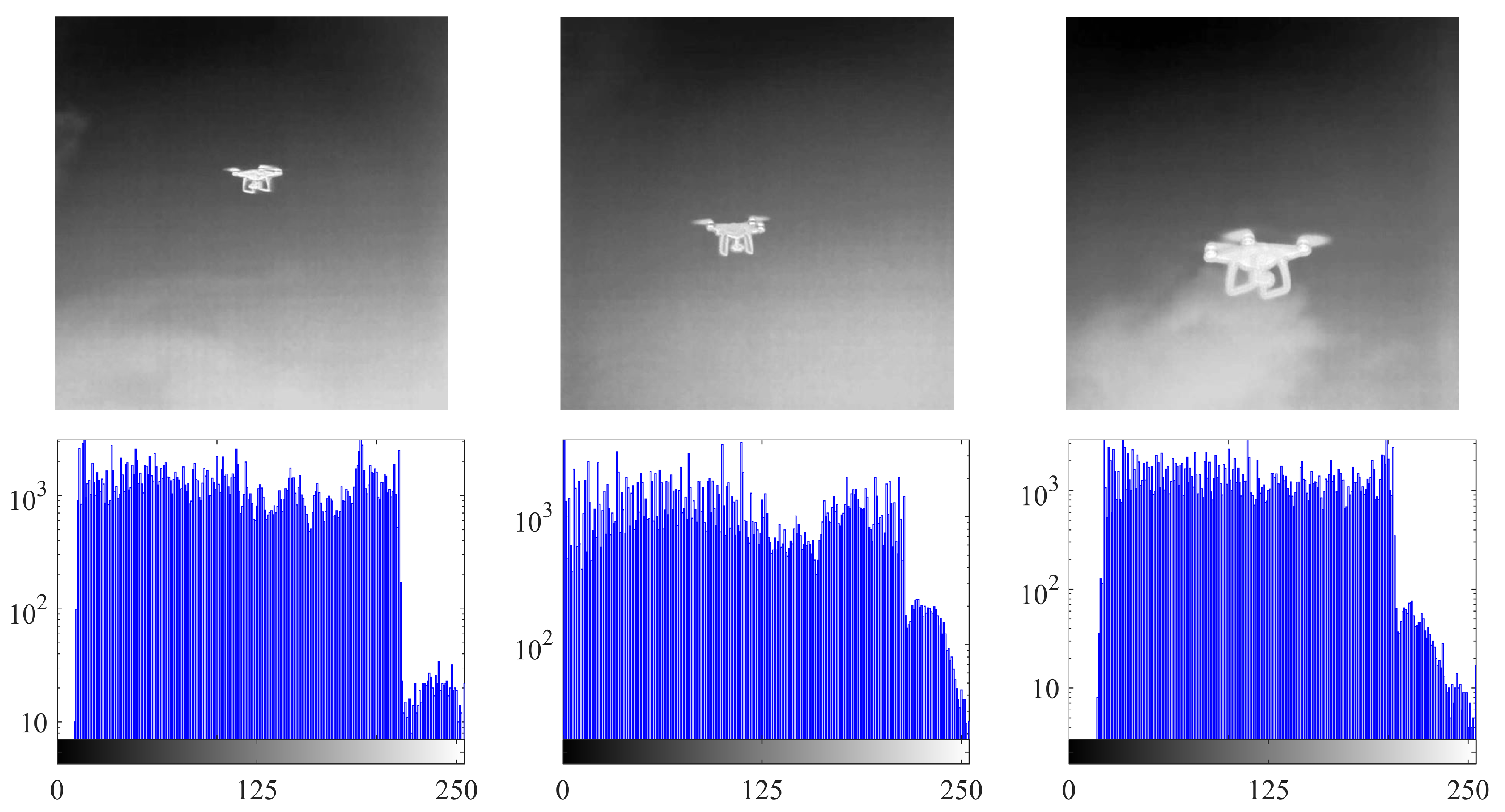

4.1. Dataset

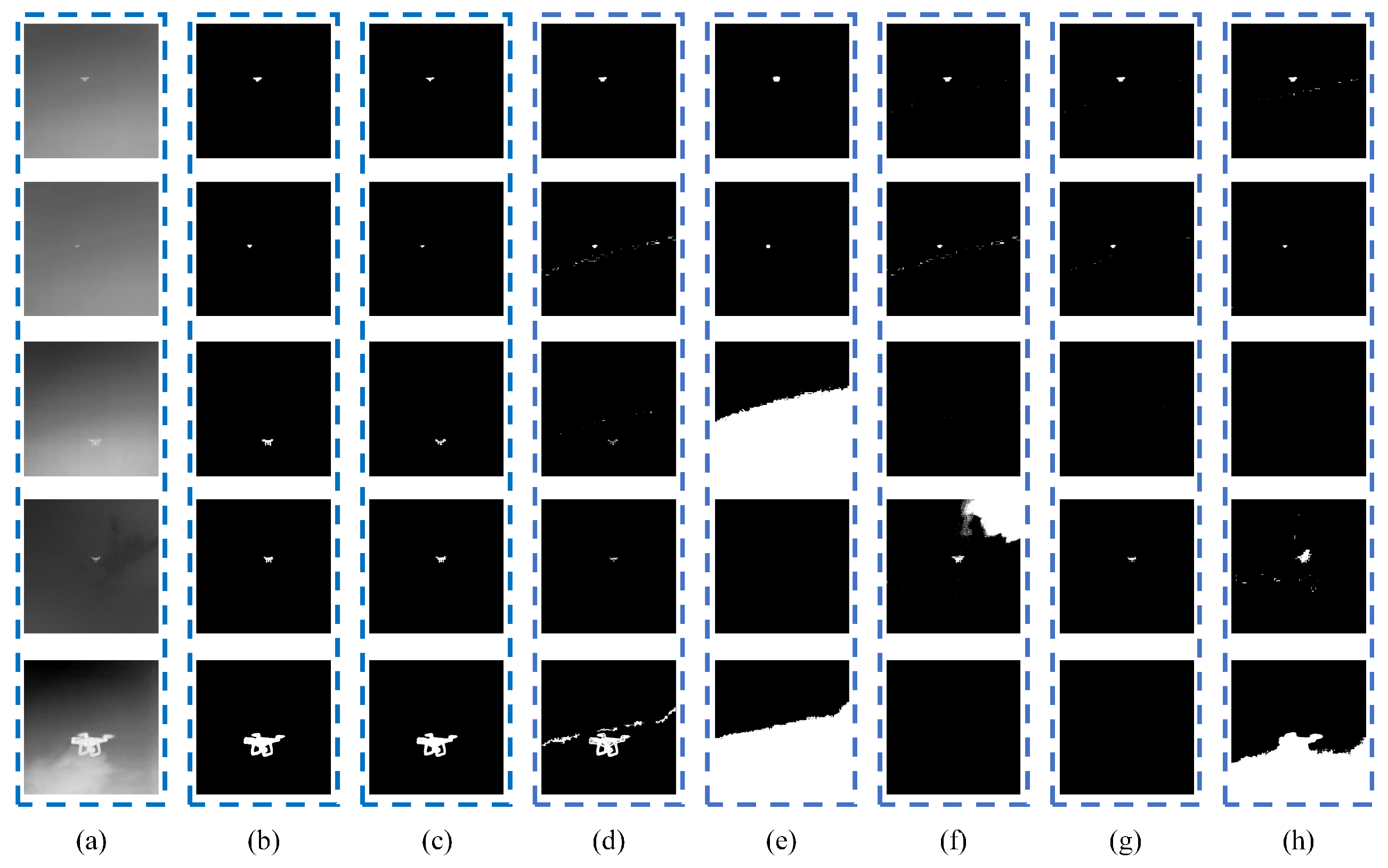

4.2. Experimental Results

4.3. Performance Measure

4.4. Detection Results

4.5. Status Characteristics

5. Limitations and Future Work

- (1) The results show that the model still produces missed detections. In future work, we hope to introduce structural information about the UAV so that all parts of the UAV are detected more reliably.

- (2) Another important task for future work is to design an algorithm for video that exploits the characteristics of the CCNN model to achieve real-time detection.

- (3) In this work, we used the CCNN to process infrared images and detect UAVs. In the future, we will work on hardware implementations of the CCNN based on memristive synaptic devices.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


IoU of each method on each test image (best value in each row in bold).

| Image | CCNN | PCNN | FCM | Otsu | Iteration | Bimodal |
|---|---|---|---|---|---|---|
| 1 | 0.7479 | **0.8333** | 0.6598 | 0.7882 | 0.7995 | 0.5961 |
| 2 | 0.6218 | 0.1870 | 0.6768 | 0.1978 | 0.5975 | **0.6964** |
| 3 | **0.5983** | 0.2755 | 0.0039 | 0.0000 | 0.0000 | 0.0000 |
| 4 | **0.7936** | 0.3722 | 0.0022 | 0.0163 | 0.6322 | 0.2717 |
| 5 | **0.9701** | 0.6494 | 0.0489 | 0.0000 | 0.0000 | 0.0000 |
| 6 | **0.5576** | 0.5330 | 0.3459 | 0.4425 | 0.4485 | 0.3125 |
| 7 | **0.7865** | 0.2043 | 0.0135 | 0.1218 | 0.1727 | 0.0000 |
| 8 | **0.8654** | 0.0002 | 0.3955 | 0.0000 | 0.0000 | 0.0000 |
| 9 | 0.7384 | 0.6638 | 0.2980 | 0.9353 | **0.9782** | 0.8000 |
| 10 | **0.5843** | 0.1384 | 0.0063 | 0.1568 | 0.1719 | 0.0000 |
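The table reports intersection over union (IoU): the area shared by the predicted and ground-truth regions divided by the area of their union. The following is a minimal sketch, assuming both regions are axis-aligned rectangles in pixel coordinates and that the predicted rectangle is the tightest axis-aligned box around a binary detection mask. A minimum bounding rectangle can also be rotated, which this simplification ignores. The names `Box`, `iou`, and `bounding_box` are hypothetical.

```python
from typing import List, NamedTuple, Optional


class Box(NamedTuple):
    """Axis-aligned rectangle; (x0, y0) inclusive, (x1, y1) exclusive, in pixels."""
    x0: float
    y0: float
    x1: float
    y1: float

    def area(self) -> float:
        # Clamp negative extents to zero so disjoint boxes give an empty intersection.
        return max(0.0, self.x1 - self.x0) * max(0.0, self.y1 - self.y0)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter = Box(max(a.x0, b.x0), max(a.y0, b.y0),
                min(a.x1, b.x1), min(a.y1, b.y1)).area()
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def bounding_box(mask: List[List[int]]) -> Optional[Box]:
    """Tightest axis-aligned box around the nonzero pixels of a binary mask."""
    rows = [i for i, row in enumerate(mask) if any(row)]
    if not rows:
        return None  # nothing detected
    cols = [j for j in range(len(mask[0])) if any(row[j] for row in mask)]
    return Box(min(cols), min(rows), max(cols) + 1, max(rows) + 1)


if __name__ == "__main__":
    print(iou(Box(10, 10, 30, 30), Box(20, 20, 40, 40)))  # 0.142857...
```

For example, boxes (10, 10, 30, 30) and (20, 20, 40, 40) overlap in a 10 × 10 square, so the IoU is 100 / (400 + 400 - 100) = 1/7 ≈ 0.143.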

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, Z.; Lian, J.; Liu, J. Infrared UAV Target Detection Based on Continuous-Coupled Neural Network. Micromachines 2023, 14, 2113. https://doi.org/10.3390/mi14112113


Chicago/Turabian Style: Yang, Zhuoran, Jing Lian, and Jizhao Liu. 2023. "Infrared UAV Target Detection Based on Continuous-Coupled Neural Network" Micromachines 14, no. 11: 2113. https://doi.org/10.3390/mi14112113
