Diagnostic and Prognostic Deep Learning Applications for Histological Assessment of Cutaneous Melanoma

Abstract

Simple Summary


1. Introduction

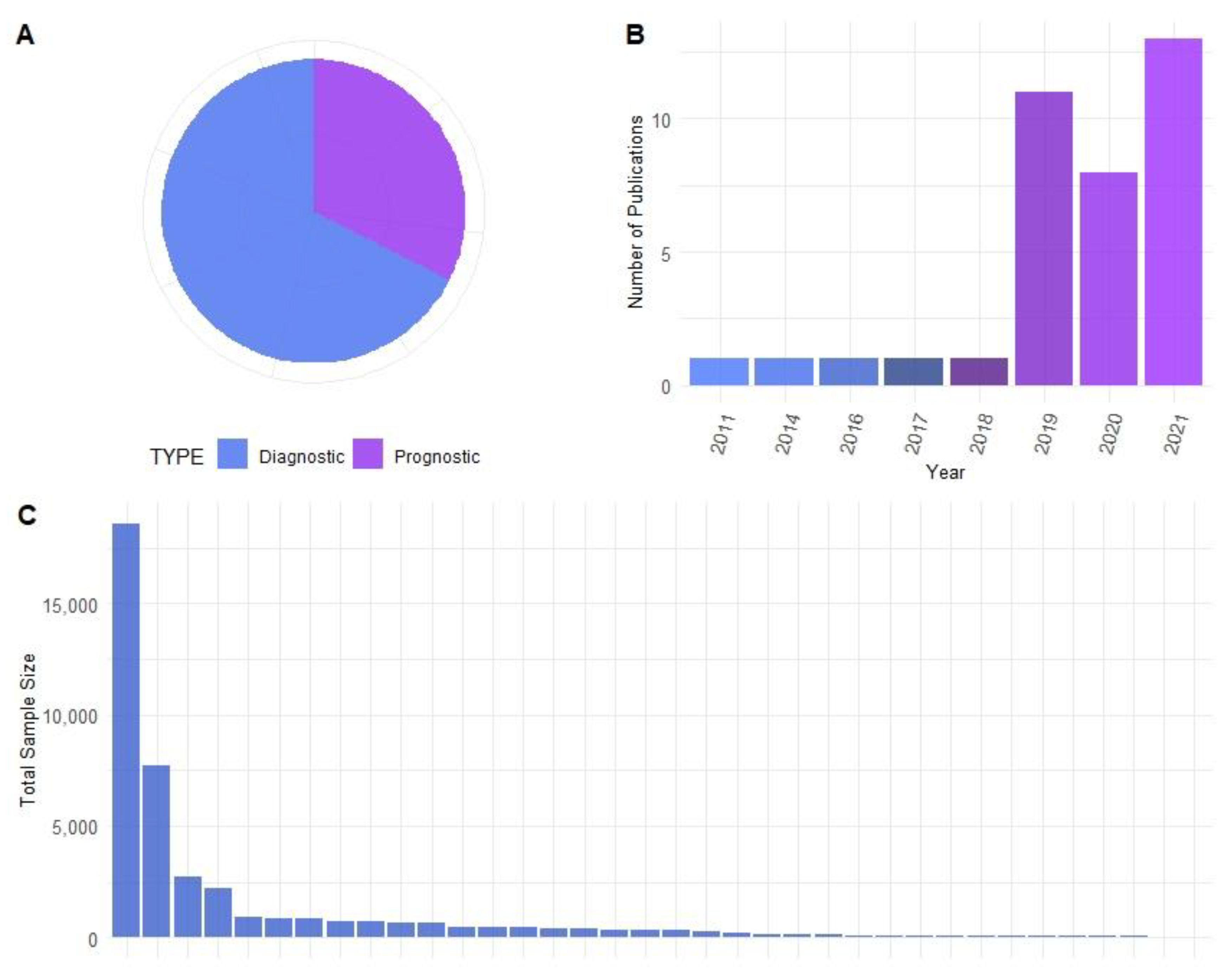

2. Materials and Methods

2.1. Identification of Research Articles

2.2. Data Extraction of Research Articles

2.3. Creation of Figures

3. Results

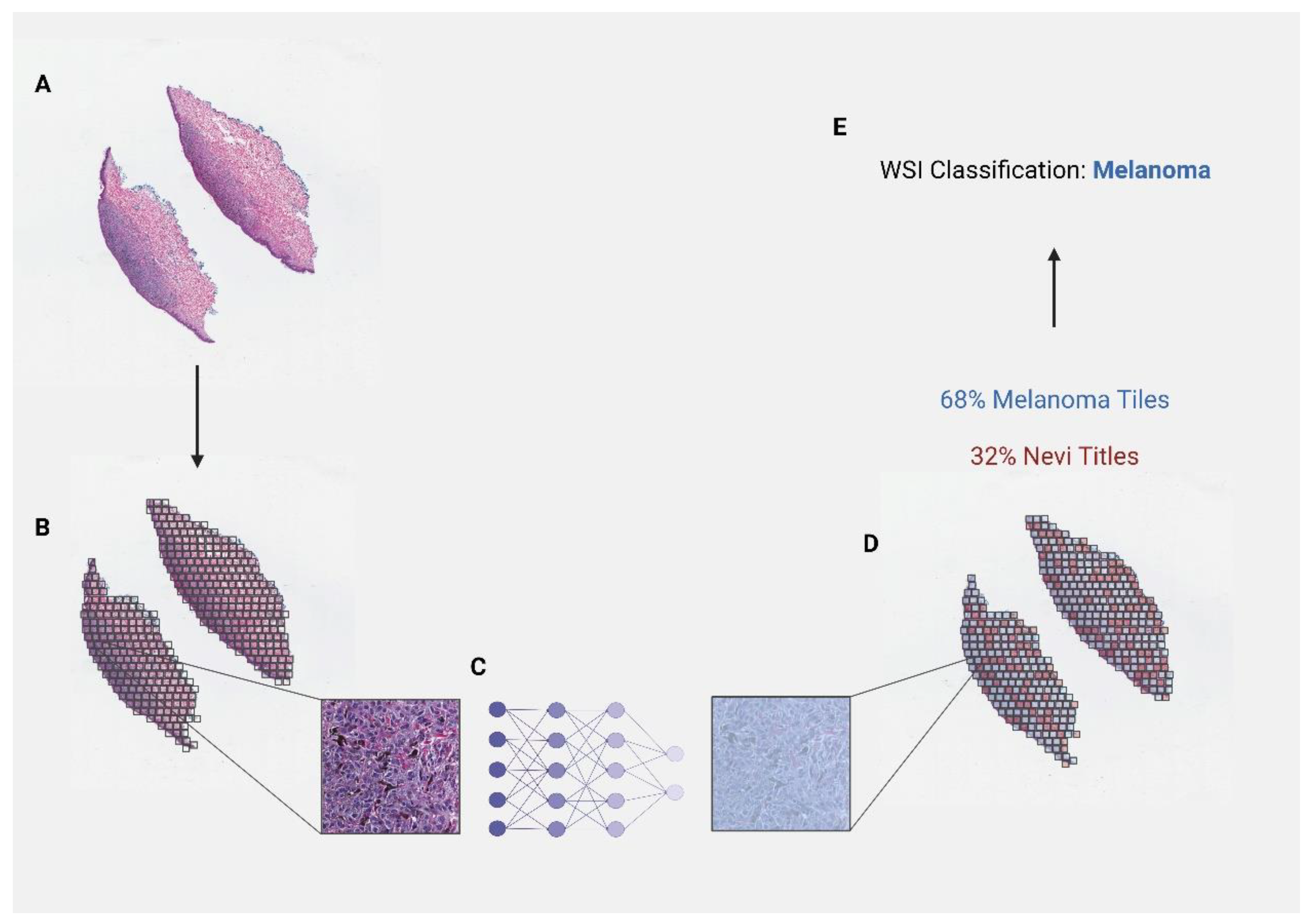

3.1. Diagnostic Applications

| Author | Dataset Size (# of Images) | Dataset Type | Model Type | Model Application | Reported Model Performance | Main Findings |
|---|---|---|---|---|---|---|
| Hekler et al. [25] (2019) | Train = 595; Test = 100 | H&E-stained images | CNN | Melanoma vs. nevi | Sensitivity = 76%; Specificity = 60%; Accuracy = 68% | A classification algorithm differentiated between H&E slides of melanoma and nevi. Compared with 11 histopathologists, the algorithm performed significantly better on the test dataset (p = 0.016). |
| Hohn et al. [26] (2021) | Train = 232; Test = 132; Validation = 67 | H&E-stained images; clinical information | CNN | Melanoma vs. nevi | Mean AUROC = 92.30%; Mean balanced accuracy = 83.17% | Classification model for diagnosing melanoma and nevi on whole-slide images. The group compared a CNN alone with the same CNN combined with commonly available patient data in several fusion-model settings and found that the image-only CNN achieved the highest performance. |
| Brinker et al. [27] (2021) | 100 | H&E-stained images | CNN | Melanoma vs. nevi | Sensitivity = 94%; Specificity = 90%; Accuracy = 92%; AUC = 0.97 (annotated) | A pre-trained CNN was adapted by transfer learning to classify melanoma and nevi whole-slide images with and without annotation of the lesion region. Its performance was compared with image classification by 18 expert pathologists; when tested on annotated images, the model exceeded the overall accuracy of the pathologists. |
| Ianni et al. [36] (2020) | Train = 5070; Test = 13,537 | H&E-stained images | CNN | Basaloid vs. squamous vs. melanocytic vs. other | Accuracy = 90–98% | Images were classified as basaloid, squamous, melanocytic, or "other" (no visible pathology or no conclusive diagnosis). Multiple CNN models, each with a distinct role, made up the diagnostic pipeline, and its accuracy was tested on images from three different labs. |
| Del Amor et al. [28] (2021) | 54 | H&E-stained images | CNN | Spitzoid melanoma vs. nevi | Accuracy = 0.9231, 0.8 | The computational pipeline consisted of ROI extraction to identify tiles of tumor regions, followed by a classification model to diagnose WSIs. |
| Xu et al. [34] (2018) | 66 | H&E-stained images | mSVM | Melanoma vs. nevi vs. normal skin | Classification accuracy = 95% | The model classified skin WSI samples as melanoma, nevi, or normal skin. It first segments the epidermis and dermis, analyzes epidermal and dermal features in parallel, and then assigns the image to one of the three classes. |
| Lu et al. [35] (2015) | 66 | H&E-stained images | mSVM | Melanoma vs. nevi vs. normal skin | Classification accuracy = 90% | This pipeline first segments keratinocytes and melanocytes in the epidermis, then constructs spatial-distribution and morphological features for WSI diagnosis of melanoma, nevi, and normal skin. |
| Wang et al. [29] (2019) | 155 | H&E-stained images | CNN, RF | Melanoma vs. nevi | AUC = 0.998; Accuracy = 98.2% | Pre-trained CNN models were used together with a random-forest algorithm to classify WSIs as melanoma or nevi. Final model predictions on the validation datasets were compared with those of seven pathologists, and the model outperformed the pathologists. |
| Xie et al. [30] (2021) | 841 | H&E-stained images | CNN | Melanoma vs. nevi | AUROC = 0.962; Accuracy = 0.933 | A CNN was trained on melanoma and nevi WSIs. Grad-CAM was then used to visualize which image regions drove the CNN's predictions, and the resulting feature heatmaps showed that the regions emphasized by the model corresponded to accepted pathological features. |
| Hekler et al. [31] (2019) | 695 | H&E-stained images | CNN | Melanoma vs. nevi | - | A pre-trained model was adapted to a training dataset of melanoma and nevi histology images, then tested on additional data and compared with the diagnoses of a histopathologist. The misclassification rates of the trained model were 18% for melanomas and 20% for nevi. |
| Sankarapandian et al. [38] (2021) | 7685 | H&E-stained images | Deep learning system: hierarchical classification | Basaloid vs. squamous vs. melanoma (high, intermediate, or low risk) vs. other | 0.93 (training); 0.95 (validation #1); 0.82 (validation #2) | A hierarchical classification approach first performed binary classification of nonmelanoma vs. melanocytic images, then further classified melanocytic images as "high risk" (melanoma), "intermediate risk" (melanoma in situ or severe dysplasia), or "rest" (nonmelanoma skin cancers, nevi, or mild-to-moderate dysplasia). |
| Oskal et al. [40] (2019) | Train = 36; Test = 33 | H&E-stained images | CNN | Epidermal segmentation | Mean positive predictive value = 0.89 ± 0.16; Sensitivity = 0.92 ± 0.1 | Epidermal regions were first annotated by an expert pathologist in Aperio ImageScope. WSIs were then split into small tiles, and a binary classifier labeled each tile as epidermis or non-epidermis. |
| Li et al. [33] (2021) | 701 | H&E-stained images | CNN | Melanoma vs. nevi | AUROC = 0.971 (CI: 0.952–0.990) | WSIs were divided into tiles, and individual tile predictions were used to assess the overall diagnostic probability of melanoma or nevus. Images diagnosed as melanoma were then analyzed further to localize the lesion using a probability-heatmap method. |
| Wang et al. [29] (2019) | 155 | H&E-stained images | CNN, RF | Melanoma vs. nevi | AUC = 0.998; Accuracy = 98.2%; Sensitivity = 100%; Specificity = 96.5% | Using whole-slide images of melanoma and nevi, tissue regions on each slide were segmented and split into tiles. The model was then used to create a tumor probability heatmap and to identify key features for melanoma diagnosis. Final binary classification of each WSI was performed by a random-forest model based on the extracted feature vectors. Compared with seven human pathologists, the model showed improved accuracy. |
| Sturm et al. [44] (2022) | 99 | H&E-stained images | CNN | Mitosis detection | Accuracy = 75% | A previously developed algorithm was tested, together with multiple expert pathologists, for the detection of mitoses in melanocytic lesions. Diagnostic accuracy improved only slightly with the aid of the algorithm (75% with vs. 68% without). |
| Ba et al. [32] (2021) | Train/Test = 781; Validation = 104 | H&E-stained images | CNN, RF | Melanoma vs. nevi | Sensitivity = 100%; Specificity = 94.7%; AUROC = 0.99 | The pipeline first extracts regions of interest and splits them into tiles, and a CNN predicts whether each tile shows nevus or melanoma. Additional image features are then fed into a random-forest classifier, which makes the final diagnosis for the WSI. The model's overall performance matched that of the average dermatopathologist in differentiating between MPATH-Dx categories. |
| Andres et al. [43] (2016) | 59 | H&E-stained images; Ki-67-stained slides | RF | Mitosis detection | 83% | Mitoses were detected by estimating, for each pixel, the probability that it belongs to a mitotic cell. The number of mitoses detected by the program correlated significantly with the number of Ki-67-positive cells detected in Ki-67-stained tissue slides. |
| Xie et al. [30] (2021) | 2241 | H&E-stained images | CNN | Melanoma vs. nevi | Sensitivity = 0.92; Specificity = 0.97; AUC = 0.99 | The efficacy of two different CNNs in differentiating melanoma from nevi was tested at four magnification levels (4×–40×). Accuracy was highest at 40× magnification. |
| Liu et al. [46] (2021) | 227 | H&E-stained images | CNN | Melanocytic proliferation segmentation | Accuracy = 0.927 | A framework was developed to help pathologists identify and segment melanocytic proliferations. Starting from sparse annotations generated by a pathologist, the pipeline refines the segmented regions using a CNN applied to WSI tiles. |
| Osborne et al. [49] (2011) | 126 | H&E-stained images | SVM | Melanoma vs. nevi | Sensitivity = 100%; Specificity = 75%; Accuracy = 90% | To differentiate melanoma from nevi WSIs, this pipeline first removes irrelevant regions of the slide and then distinguishes nuclear and cytoplasmic areas in individual cells. Multiple nuclear features are extracted, including the number of nuclei per cell, nucleus-to-cytoplasm area ratio, perimeter, and shape. |
| Alheejawi et al. [45] (2020) | 9 | H&E-stained images | CNN, SVM | Melanocyte detection | Accuracy = 90% | A CNN segments individual nuclei within the image, and multiple morphological and textural features are extracted. An SVM then classifies the nuclei as normal or abnormal based on these features. |
| Zhang et al. [42] (2022) | 30 | H&E-stained images | CNN | Melanoma segmentation | Precision = 0.9740; Recall = 0.9861; Accuracy = 0.9553 | WSIs of malignant melanoma were split into numerous patches, and the CNN classified each patch as benign or malignant tissue. The final model outputs a probability heatmap of tumor regions and outperformed several existing models in melanoma segmentation accuracy. |
| De Logu et al. [39] (2020) | 100 | H&E-stained images | CNN | Melanoma vs. healthy tissue patches | Accuracy = 96.5%; Sensitivity = 95.7%; Specificity = 97.7% | WSIs of melanoma were split into numerous tiles, and a pre-trained network classified each tile as either melanoma or surrounding normal tissue. |
| Kucharski et al. [47] (2020) | 70 | H&E-stained images | DNN | Melanocytic nest detection | Dice similarity coefficient = 0.81; Sensitivity = 0.76; Specificity = 0.94 | A framework based on a convolutional autoencoder architecture was implemented for melanocytic nest detection. Slides were split into tiles, and each tile was classified as part or not part of a nest, enabling segmentation of whole nests. |
| Kuiava et al. [37] (2020) | 2732 | H&E-stained images | CNN | Melanoma vs. basal cell carcinoma vs. squamous cell carcinoma vs. normal skin | Sensitivity = 92%, 91.6%, 98.3%; Specificity = 97%, 95.4%, 98.8% | The efficacy of three different models in differentiating these disease classes was tested on a large dataset of melanoma, basal cell carcinoma, squamous cell carcinoma, and normal skin. All three models differentiated between the cancer types with high sensitivity and specificity. |
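Most diagnostic studies in the table above report sensitivity, specificity, accuracy, or balanced accuracy. Several of them (for example, Li et al. [33], Ba et al. [32], and De Logu et al. [39]) first score individual tiles and then reach a whole-slide decision. The Python sketch below illustrates both ideas under stated assumptions. Melanoma is treated as the positive class, and the two aggregation rules (`mean` tile probability and tile `majority` vote, each with a 0.5 threshold) are generic, hypothetical choices, not the method of any study listed. Ba et al. [32], for instance, instead passed tile-derived features to a random-forest classifier. The toy slide probabilities are invented for illustration.

```python
from statistics import mean


def _safe_ratio(numerator, denominator):
    # Return NaN instead of raising when a class is absent from the labels.
    return numerator / denominator if denominator else float("nan")


def binary_metrics(y_true, y_pred):
    """Slide-level metrics with melanoma as the positive class (1) and
    nevus as the negative class (0)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    sensitivity = _safe_ratio(tp, tp + fn)  # share of melanomas detected
    specificity = _safe_ratio(tn, tn + fp)  # share of nevi correctly cleared
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": _safe_ratio(tp + tn, len(pairs)),
        # Balanced accuracy averages the per-class recalls, so it is not
        # inflated when one class dominates the test set.
        "balanced_accuracy": (sensitivity + specificity) / 2,
    }


def slide_label(tile_probs, rule="mean", threshold=0.5):
    """Collapse per-tile melanoma probabilities into one slide label.

    rule="mean":     average tile probability compared with the threshold.
    rule="majority": label 1 if more than half of the tiles reach the threshold.
    Both rules and the 0.5 threshold are illustrative assumptions.
    """
    if not tile_probs:
        raise ValueError("a slide needs at least one tile")
    if rule == "mean":
        return int(mean(tile_probs) >= threshold)
    if rule == "majority":
        votes = sum(1 for p in tile_probs if p >= threshold)
        return int(votes > len(tile_probs) / 2)
    raise ValueError(f"unknown aggregation rule: {rule!r}")


# Hypothetical toy data: per-tile melanoma probabilities for five slides.
slides = [
    [0.9, 0.8, 0.7],  # melanoma
    [0.1, 0.2, 0.3],  # nevus
    [0.2, 0.3, 0.9],  # melanoma with only one suspicious tile
    [0.1, 0.1, 0.2],  # nevus
    [0.9, 0.8, 0.1],  # nevus with two suspicious tiles
]
truth = [1, 0, 1, 0, 0]

if __name__ == "__main__":
    predictions = [slide_label(tiles) for tiles in slides]
    print(predictions)
    print(binary_metrics(truth, predictions))
```

With these toy values, both rules label the slides [1, 0, 0, 0, 1]. This gives a sensitivity of 0.5, a specificity of about 0.67, an accuracy of 0.6, and a balanced accuracy of about 0.58. Balanced accuracy is worth reporting alongside plain accuracy. When a test set contains many more nevi than melanomas, plain accuracy can look better than the model's melanoma detection warrants.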

3.2. Prognostic Applications

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. National Cancer Institute: Surveillance, Epidemiology, and End Results Program. Cancer Stat Facts: Melanoma of the Skin. Available online: https://seer.cancer.gov/statfacts/html/melan.html (accessed on 19 May 2022).

2. Arnold, M.; Singh, D.; Laversanne, M.; Vignat, J.; Vaccarella, S.; Meheus, F.; Cust, A.E.; de Vries, E.; Whiteman, D.C.; Bray, F. Global Burden of Cutaneous Melanoma in 2020 and Projections to 2040. JAMA Dermatol. 2022, 158, 495–503.

3. Rastrelli, M.; Tropea, S.; Rossi, C.R.; Alaibac, M. Melanoma: Epidemiology, risk factors, pathogenesis, diagnosis and classification. In Vivo 2014, 28, 1005–1011.

4. Emri, G.; Paragh, G.; Tosaki, A.; Janka, E.; Kollar, S.; Hegedus, C.; Gellen, E.; Horkay, I.; Koncz, G.; Remenyik, E. Ultraviolet radiation-mediated development of cutaneous melanoma: An update. J. Photochem. Photobiol. B 2018, 185, 169–175.

5. Hartman, R.I.; Lin, J.Y. Cutaneous Melanoma-A Review in Detection, Staging, and Management. Hematol. Oncol. Clin. N. Am. 2019, 33, 25–38.

6. Wilson, M.L. Histopathologic and Molecular Diagnosis of Melanoma. Clin. Plast. Surg. 2021, 48, 587–598.

7. Ding, L.; Gosh, A.; Lee, D.J.; Emri, G.; Huss, W.J.; Bogner, P.N.; Paragh, G. Prognostic biomarkers of cutaneous melanoma. Photodermatol. Photoimmunol. Photomed. 2022, 38, 418–434.

8. Almashali, M.; Ellis, R.; Paragh, G. Melanoma Epidemiology, Staging and Prognostic Factors. In Practical Manual for Dermatologic and Surgical Melanoma Management; Springer: Cham, Switzerland, 2020; pp. 61–81.

9. Graber, M.L. The incidence of diagnostic error in medicine. BMJ Qual. Saf. 2013, 22 (Suppl. S2), ii21–ii27.

10. Raab, S.S. Variability of practice in anatomic pathology and its effect on patient outcomes. Semin. Diagn. Pathol. 2005, 22, 177–185.

11. Tizhoosh, H.R.; Diamandis, P.; Campbell, C.J.V.; Safarpoor, A.; Kalra, S.; Maleki, D.; Riasatian, A.; Babaie, M. Searching Images for Consensus: Can AI Remove Observer Variability in Pathology? Am. J. Pathol. 2021, 191, 1702–1708.

12. DeJohn, C.R.; Grant, S.R.; Seshadri, M. Application of Machine Learning Methods to Improve the Performance of Ultrasound in Head and Neck Oncology: A Literature Review. Cancers 2022, 14, 665.

13. Rowe, M. An Introduction to Machine Learning for Clinicians. Acad. Med. 2019, 94, 1433–1436.

14. Greener, J.G.; Kandathil, S.M.; Moffat, L.; Jones, D.T. A guide to machine learning for biologists. Nat. Rev. Mol. Cell Biol. 2022, 23, 40–55.

15. Garside, N.; Zaribafzadeh, H.; Henao, R.; Chung, R.; Buckland, D. CPT to RVU conversion improves model performance in the prediction of surgical case length. Sci. Rep. 2021, 11, 14169.

16. Adlung, L.; Cohen, Y.; Mor, U.; Elinav, E. Machine learning in clinical decision making. Med 2021, 2, 642–665.

17. van der Laak, J.; Litjens, G.; Ciompi, F. Deep learning in histopathology: The path to the clinic. Nat. Med. 2021, 27, 775–784.

18. Echle, A.; Rindtorff, N.T.; Brinker, T.J.; Luedde, T.; Pearson, A.T.; Kather, J.N. Deep learning in cancer pathology: A new generation of clinical biomarkers. Br. J. Cancer 2021, 124, 686–696.

19. Jiang, Y.; Yang, M.; Wang, S.; Li, X.; Sun, Y. Emerging role of deep learning-based artificial intelligence in tumor pathology. Cancer Commun. 2020, 40, 154–166.

20. R Core Team. R: A Language and Environment for Statistical Computing, R version 4.1.1; R Foundation for Statistical Computing: Vienna, Austria, 2021.

21. Wickham, H. ggplot2: Elegant Graphics for Data Analysis; Springer-Verlag: New York, NY, USA, 2016.

22. Kassambara, A. ggpubr: ‘ggplot2’ Based Publication Ready Plots. R package version 0.4.0. 2020.

23. Choi, J.N.; Hanlon, A.; Leffell, D. Melanoma and nevi: Detection and diagnosis. Curr. Probl. Cancer 2011, 35, 138–161.

24. Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

25. Hekler, A.; Utikal, J.S.; Enk, A.H.; Solass, W.; Schmitt, M.; Klode, J.; Schadendorf, D.; Sondermann, W.; Franklin, C.; Bestvater, F.; et al. Deep learning outperformed 11 pathologists in the classification of histopathological melanoma images. Eur. J. Cancer 2019, 118, 91–96.

26. Hohn, J.; Krieghoff-Henning, E.; Jutzi, T.B.; von Kalle, C.; Utikal, J.S.; Meier, F.; Gellrich, F.F.; Hobelsberger, S.; Hauschild, A.; Schlager, J.G.; et al. Combining CNN-based histologic whole slide image analysis and patient data to improve skin cancer classification. Eur. J. Cancer 2021, 149, 94–101.

27. Brinker, T.J.; Schmitt, M.; Krieghoff-Henning, E.I.; Barnhill, R.; Beltraminelli, H.; Braun, S.A.; Carr, R.; Fernandez-Figueras, M.T.; Ferrara, G.; Fraitag, S.; et al. Diagnostic performance of artificial intelligence for histologic melanoma recognition compared to 18 international expert pathologists. J. Am. Acad. Dermatol. 2022, 86, 640–642.

28. Del Amor, R.; Launet, L.; Colomer, A.; Moscardo, A.; Mosquera-Zamudio, A.; Monteagudo, C.; Naranjo, V. An attention-based weakly supervised framework for spitzoid melanocytic lesion diagnosis in whole slide images. Artif. Intell. Med. 2021, 121, 102197.

29. Wang, L.; Ding, L.; Liu, Z.; Sun, L.; Chen, L.; Jia, R.; Dai, X.; Cao, J.; Ye, J. Automated identification of malignancy in whole-slide pathological images: Identification of eyelid malignant melanoma in gigapixel pathological slides using deep learning. Br. J. Ophthalmol. 2020, 104, 318–323.

30. Xie, P.; Zuo, K.; Liu, J.; Chen, M.; Zhao, S.; Kang, W.; Li, F. Interpretable Diagnosis for Whole-Slide Melanoma Histology Images Using Convolutional Neural Network. J. Healthc. Eng. 2021, 2021, 8396438.

31. Hekler, A.; Utikal, J.S.; Enk, A.H.; Berking, C.; Klode, J.; Schadendorf, D.; Jansen, P.; Franklin, C.; Holland-Letz, T.; Krahl, D.; et al. Pathologist-level classification of histopathological melanoma images with deep neural networks. Eur. J. Cancer 2019, 115, 79–83.

32. Ba, W.; Wang, R.; Yin, G.; Song, Z.; Zou, J.; Zhong, C.; Yang, J.; Yu, G.; Yang, H.; Zhang, L.; et al. Diagnostic assessment of deep learning for melanocytic lesions using whole-slide pathological images. Transl. Oncol. 2021, 14, 101161.

33. Li, T.; Xie, P.; Liu, J.; Chen, M.; Zhao, S.; Kang, W.; Zuo, K.; Li, F. Automated Diagnosis and Localization of Melanoma from Skin Histopathology Slides Using Deep Learning: A Multicenter Study. J. Healthc. Eng. 2021, 2021, 5972962.

34. Xu, H.; Lu, C.; Berendt, R.; Jha, N.; Mandal, M. Automated analysis and classification of melanocytic tumor on skin whole slide images. Comput. Med. Imaging Graph. 2018, 66, 124–134.

35. Lu, C.; Mandal, M. Automated Analysis and diagnosis of skin melanoma on whole slide histopathological images. Pattern Recognit. 2015, 48, 2738–2750.

36. Ianni, J.D.; Soans, R.E.; Sankarapandian, S.; Chamarthi, R.V.; Ayyagari, D.; Olsen, T.G.; Bonham, M.J.; Stavish, C.C.; Motaparthi, K.; Cockerell, C.J.; et al. Tailored for Real-World: A Whole Slide Image Classification System Validated on Uncurated Multi-Site Data Emulating the Prospective Pathology Workload. Sci. Rep. 2020, 10, 3217.

37. Kuiava, V.A.; Kuiava, E.L.; Chielle, E.O.; De Bittencourt, F.M. Artificial Intelligence Algorithm for the Histopathological Diagnosis of Skin Cancer. Clin. Biomed. Res. 2020, 40.

38. Sankarapandian, S.; Kohn, S.; Spurrier, V.; Grullon, S.; Soans, R.E.; Ayyagari, K.D.; Chamarthi, R.V.; Motaparthi, K.; Lee, J.B.; Shon, W.; et al. A Pathology Deep Learning System Capable of Triage of Melanoma Specimens Utilizing Dermatopathologist Consensus as Ground Truth. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, QC, Canada, 11–17 October 2021; IEEE Computer Society: Los Alamitos, CA, USA, 2021; pp. 629–638.

39. De Logu, F.; Ugolini, F.; Maio, V.; Simi, S.; Cossu, A.; Massi, D.; Italian Association for Cancer Research (AIRC) Study Group; Nassini, R.; Laurino, M. Recognition of Cutaneous Melanoma on Digitized Histopathological Slides via Artificial Intelligence Algorithm. Front. Oncol. 2020, 10, 1559.

40. Oskal, K.R.J.; Risdal, M.; Janssen, E.A.M.; Undersrud, E.S.; Gulsrud, T.O. A U-net based approach to epidermal tissue segmentation in whole slide histopathological images. SN Appl. Sci. 2019, 1, 672.

41. Phillips, A.; Teo, I.Y.H.; Lang, J. Fully Convolutional Network for Melanoma Diagnostics. arXiv 2018, arXiv:1806.04765.

42. Zhang, D.; Han, H.; Du, S.; Zhu, L.; Yang, J.; Wang, X.; Wang, L.; Xu, M. MPMR: Multi-Scale Feature and Probability Map for Melanoma Recognition. Front. Med. 2021, 8, 775587.

43. Andres, C.; Andres-Belloni, B.; Hein, R.; Biedermann, T.; Schape, A.; Brieu, N.; Schonmeyer, R.; Yigitsoy, M.; Ring, J.; Schmidt, G.; et al. iDermatoPath: A novel software tool for mitosis detection in H&E-stained tissue sections of malignant melanoma. J. Eur. Acad. Dermatol. Venereol. 2017, 31, 1137–1147.

44. Sturm, B.; Creytens, D.; Smits, J.; Ooms, A.; Eijken, E.; Kurpershoek, E.; Kusters-Vandevelde, H.V.N.; Wauters, C.; Blokx, W.A.M.; van der Laak, J. Computer-Aided Assessment of Melanocytic Lesions by Means of a Mitosis Algorithm. Diagnostics 2022, 12, 436.

45. Alheejawi, S.; Berendt, R.; Jha, N.; Mandal, M. Melanoma Cell Detection in Lymph Nodes Histopathological Images Using Deep Learning. Signal Image Process. Int. J. 2020, 11, 1–11.

46. Liu, K.; Mokhtari, M.; Li, B.; Nofallah, S.; May, C.; Chang, O.; Knezevich, S.; Elmore, J.; Shapiro, L. Learning Melanocytic Proliferation Segmentation in Histopathology Images from Imperfect Annotations. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Nashville, TN, USA, 19–25 June 2021; pp. 3761–3770.

47. Kucharski, D.; Kleczek, P.; Jaworek-Korjakowska, J.; Dyduch, G.; Gorgon, M. Semi-Supervised Nests of Melanocytes Segmentation Method Using Convolutional Autoencoders. Sensors 2020, 20, 1546.

48. Loescher, L.J.; Janda, M.; Soyer, H.P.; Shea, K.; Curiel-Lewandrowski, C. Advances in skin cancer early detection and diagnosis. Semin. Oncol. Nurs. 2013, 29, 170–181.

49. Osborne, J.D.; Gao, S.; Chen, W.-B.; Andea, A.; Zhang, C. Machine Classification of Melanoma and Nevi from Skin Lesions. Assoc. Comput. Mach. 2011.

50. Bobos, M. Histopathologic classification and prognostic factors of melanoma: A 2021 update. Ital. J. Dermatol. Venerol. 2021, 156, 300–321.

51. Paragh, G. Epidermal melanoma prognostic factors: A link to paracrine transforming growth factor-beta signalling. Br. J. Dermatol. 2022, 186, 606–607.

52. Cosgarea, I.; McConnell, A.T.; Ewen, T.; Tang, D.; Hill, D.S.; Anagnostou, M.; Elias, M.; Ellis, R.A.; Murray, A.; Spender, L.C.; et al. Melanoma secretion of transforming growth factor-beta2 leads to loss of epidermal AMBRA1 threatening epidermal integrity and facilitating tumour ulceration. Br. J. Dermatol. 2022, 186, 694–704.

53. Kulkarni, P.M.; Robinson, E.J.; Sarin Pradhan, J.; Gartrell-Corrado, R.D.; Rohr, B.R.; Trager, M.H.; Geskin, L.J.; Kluger, H.M.; Wong, P.F.; Acs, B.; et al. Deep Learning Based on Standard H&E Images of Primary Melanoma Tumors Identifies Patients at Risk for Visceral Recurrence and Death. Clin. Cancer Res. 2020, 26, 1126–1134.

54. Faries, M.B.; Thompson, J.F.; Cochran, A.J.; Andtbacka, R.H.; Mozzillo, N.; Zager, J.S.; Jahkola, T.; Bowles, T.L.; Testori, A.; Beitsch, P.D.; et al. Completion Dissection or Observation for Sentinel-Node Metastasis in Melanoma. N. Engl. J. Med. 2017, 376, 2211–2222.

55. Leiter, U.; Stadler, R.; Mauch, C.; Hohenberger, W.; Brockmeyer, N.H.; Berking, C.; Sunderkotter, C.; Kaatz, M.; Schatton, K.; Lehmann, P.; et al. Final Analysis of DeCOG-SLT Trial: No Survival Benefit for Complete Lymph Node Dissection in Patients with Melanoma with Positive Sentinel Node. J. Clin. Oncol. 2019, 37, 3000–3008.

56. Brinker, T.J.; Kiehl, L.; Schmitt, M.; Jutzi, T.B.; Krieghoff-Henning, E.I.; Krahl, D.; Kutzner, H.; Gholam, P.; Haferkamp, S.; Klode, J.; et al. Deep learning approach to predict sentinel lymph node status directly from routine histology of primary melanoma tumours. Eur. J. Cancer 2021, 154, 227–234.

57. Rodriguez-Cerdeira, C.; Carnero Gregorio, M.; Lopez-Barcenas, A.; Sanchez-Blanco, E.; Sanchez-Blanco, B.; Fabbrocini, G.; Bardhi, B.; Sinani, A.; Guzman, R.A. Advances in Immunotherapy for Melanoma: A Comprehensive Review. Mediat. Inflamm. 2017, 2017, 3264217.

58. Ernstoff, M.S.; Gandhi, S.; Pandey, M.; Puzanov, I.; Grivas, P.; Montero, A.; Velcheti, V.; Turk, M.J.; Diaz-Montero, C.M.; Lewis, L.D.; et al. Challenges faced when identifying patients for combination immunotherapy. Future Oncol. 2017, 13, 1607–1618.

59. Hu, J.; Cui, C.; Yang, W.; Huang, L.; Yu, R.; Liu, S.; Kong, Y. Using deep learning to predict anti-PD-1 response in melanoma and lung cancer patients from histopathology images. Transl. Oncol. 2021, 14, 100921.

60. Johannet, P.; Coudray, N.; Donnelly, D.M.; Jour, G.; Illa-Bochaca, I.; Xia, Y.; Johnson, D.B.; Wheless, L.; Patrinely, J.R.; Nomikou, S.; et al. Using Machine Learning Algorithms to Predict Immunotherapy Response in Patients with Advanced Melanoma. Clin. Cancer Res. 2021, 27, 131–140.

61. Maibach, F.; Sadozai, H.; Seyed Jafari, S.M.; Hunger, R.E.; Schenk, M. Tumor-Infiltrating Lymphocytes and Their Prognostic Value in Cutaneous Melanoma. Front. Immunol. 2020, 11, 2105.

62. Acs, B.; Ahmed, F.S.; Gupta, S.; Wong, P.F.; Gartrell, R.D.; Sarin Pradhan, J.; Rizk, E.M.; Gould Rothberg, B.; Saenger, Y.M.; Rimm, D.L. An open source automated tumor infiltrating lymphocyte algorithm for prognosis in melanoma. Nat. Commun. 2019, 10, 5440.

63. Moore, M.R.; Friesner, I.D.; Rizk, E.M.; Fullerton, B.T.; Mondal, M.; Trager, M.H.; Mendelson, K.; Chikeka, I.; Kurc, T.; Gupta, R.; et al. Automated digital TIL analysis (ADTA) adds prognostic value to standard assessment of depth and ulceration in primary melanoma. Sci. Rep. 2021, 11, 2809.

64. Chou, M.; Illa-Bochaca, I.; Minxi, B.; Darvishian, F.; Johannet, P.; Moran, U.; Shapiro, R.L.; Berman, R.S.; Osman, I.; Jour, G.; et al. Optimization of an automated tumor-infiltrating lymphocyte algorithm for improved prognostication in primary melanoma. Mod. Pathol. 2021, 34, 562–571.

65. Davies, H.; Bignell, G.R.; Cox, C.; Stephens, P.; Edkins, S.; Clegg, S.; Teague, J.; Woffendin, H.; Garnett, M.J.; Bottomley, W.; et al. Mutations of the BRAF gene in human cancer. Nature 2002, 417, 949–954.

66. Cheng, L.; Lopez-Beltran, A.; Massari, F.; MacLennan, G.T.; Montironi, R. Molecular testing for BRAF mutations to inform melanoma treatment decisions: A move toward precision medicine. Mod. Pathol. 2018, 31, 24–38.

67. Kim, R.H.; Nomikou, S.; Dawood, Z.; Jour, G.; Donnelly, D.; Moran, U.; Weber, J.S.; Razavian, N.; Snuderl, M.; Shapiro, R.; et al. A Deep Learning Approach for Rapid Mutational Screening in Melanoma. bioRxiv 2019.

68. Kim, R.H.; Nomikou, S.; Coudray, N.; Jour, G.; Dawood, Z.; Hong, R.; Esteva, E.; Sakellaropoulos, T.; Donnelly, D.; Moran, U.; et al. Deep Learning and Pathomics Analyses Reveal Cell Nuclei as Important Features for Mutation Prediction of BRAF-Mutated Melanomas. J. Investig. Dermatol. 2022, 142, 1650–1658.

69. Forchhammer, S.; Abu-Ghazaleh, A.; Metzler, G.; Garbe, C.; Eigentler, T. Development of an Image Analysis-Based Prognosis Score Using Google’s Teachable Machine in Melanoma. Cancers 2022, 14, 2243.

| Author | Dataset Size (# of Images) | Dataset Type | Model Type | Model Application | Reported Model Performance | Main Findings |
|---|---|---|---|---|---|---|
| Hu et al. [59] (2020) | 476 | H&E-stained images; clinical information | CNN | Responder vs. non-responder | AUC = 0.778 | The model predicted progression-free survival in melanoma patients who received anti-PD-1 monoclonal antibody monotherapy, classifying patients as responders or non-responders to therapy. |
| Kulkarni et al. [53] (2020) | Train = 108; Test #1 = 104; Test #2 = 51 | H&E-stained images; clinical information | DNN | Prediction of disease-specific survival | AUC = 0.905 (Test #1); AUC = 0.88 (Test #2) | A deep neural network predicted whether a patient would develop distant metastatic recurrence. The model used a CNN to extract features, followed by an RNN to identify patterns, and output a prediction of distant metastatic recurrence. |
| Brinker et al. [56] (2021) | 415 | H&E-stained images; sentinel node status; clinical information | ANN | Prediction of sentinel lymph node status | AUROC = 61.8% | The model predicted sentinel lymph node status from WSIs of primary melanoma tumors. Cells were detected and classified as tumor, immune, or other, followed by extraction of further cell features. Clinical features, including tumor thickness, ulceration, and patient age, were also incorporated. |
| Johannet et al. [60] (2021) | Train = 302; Validation = 39 | H&E-stained images; clinical information | CNN | Prediction of ICI response (PFS) | AUC = 0.8 | A multivariable classifier stratified patients into high or low risk of cancer progression on ICI treatment. The pipeline first used a segmentation classifier to distinguish between cell types, then applied a response classifier to predict the probability of response for each tile; whole-slide classification was based on the tile majority. |
| Acs et al. [62] (2019) | 641 | H&E-stained images; clinical information | NN | TIL scoring | - | An algorithm recognized and segmented TILs within WSIs and then calculated the frequency of these cells in each image. Automated TIL scoring was consistent with TIL scoring by a pathologist and correlated with patient prognostic factors. |
| Moore et al. [63] (2021) | Train = 80; Validation = 145 | H&E-stained images; clinical information | NN | TIL scoring | - | When the ability of automated TIL scores to predict patient outcomes was tested, the TIL score correlated significantly with disease-specific survival. |
| Chou et al. [64] (2021) | 453 | H&E-stained images; clinical information | NN | TIL scoring | - | A TIL percentage score was used to predict overall survival outcomes. Patients stratified into defined low and high TIL-score groups differed significantly in recurrence-free and overall survival probability. |
| Kim et al. [67] (2020) | Train = 256; Validation = 68 | H&E-stained images; BRAF-mutant status | CNN | Prediction of BRAF genotype | AUC = 0.72 (test); 0.75 (validation) | A multi-step deep learning model and pathomics analysis were used to extract features of BRAF-mutated cells within melanoma lesions; cells with BRAF mutations showed larger and rounder nuclei. |
| Kim et al. [68] (2022) | Train = 256; Validation #1 = 21; Validation #2 = 28 | H&E-stained images; BRAF-mutant status; clinical information | CNN | Prediction of BRAF genotype | AUC = 0.89 | After features of BRAF-mutated cell nuclei were extracted, a final prediction model combined clinical information, deep learning, and the extracted nuclear features to predict mutation status in melanoma WSIs. |
| Forchhammer et al. [69] (2022) | 831 | H&E-stained images; clinical information | CNN | Overall survival | AUC = 0.694 | The model classified individuals as low or high risk based on survival status during 2-year follow-up. There was a statistically significant difference in recurrence-free survival (p < 0.001) and in AJCC Stage IV vs. AJCC Stage I-III (p < 0.05) between patients categorized in the low- and high-risk groups. |
| Phillips et al. [41] (2018) | 50 | H&E-stained images | CNN | Dermis vs. epidermis vs. tumor | - | The model's segmentation of dermal, epidermal, and tumor regions was compared with a pathologist's segmentations. The multi-stride fully convolutional network proved diagnostically equivalent to pathologists, suggesting a new automated resource for measuring Breslow depth. |
| Chou et al. [64] (2020) | 457 | H&E-stained images; clinical information; pathologist-generated TIL scores | NN | Prediction of recurrence-free survival and overall survival | - | A previously developed neural network classifier generated an automated TIL score, which was used alongside pathologist-based analysis; automated TIL scoring correlated with AJCC staging. The percentage of TILs in the slide significantly improved the prediction of survival outcomes compared with Clark's grading. At a threshold TIL score of 16.6%, significant differences in RFS (p = 0.00059) and OS (p = 0.0022) were found between "high" and "low" TIL-scoring patients. |
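Several prognostic studies in the table above stratify patients by an automated TIL score. Chou et al. [64] used a 16.6% threshold and compared recurrence-free and overall survival between the resulting groups. The Python sketch below shows that general workflow: threshold-based stratification, followed by a Kaplan-Meier survival estimate for each group. It is a minimal illustration, not the cited authors' pipeline. Several elements are hypothetical:

- the `Patient` record,
- the TIL-percentage definition (TIL count divided by all detected cells),
- treating the cut-off as "≥ 16.6%", and
- the toy cohort.

No between-group significance test (which p-values such as those reported would require) is implemented.

```python
from dataclasses import dataclass

# Cut-off reported by Chou et al. [64]; treating it as "TIL % >= 16.6"
# (rather than ">") is an assumption of this sketch.
TIL_THRESHOLD_PERCENT = 16.6


@dataclass
class Patient:
    til_cells: int    # hypothetical: cells classified as TILs by an automated detector
    total_cells: int  # hypothetical denominator: all detected cells in the scored region
    months: float     # follow-up time
    event: bool       # True = recurrence/death observed, False = censored


def til_percentage(patient):
    return 100.0 * patient.til_cells / patient.total_cells


def stratify(patients, threshold=TIL_THRESHOLD_PERCENT):
    """Split a cohort into high- and low-TIL groups."""
    high = [p for p in patients if til_percentage(p) >= threshold]
    low = [p for p in patients if til_percentage(p) < threshold]
    return high, low


def kaplan_meier(patients):
    """Kaplan-Meier estimate as (time, S(t)) pairs, one per distinct event
    time, where S(t) is the running product of (1 - events / at_risk)."""
    survival = 1.0
    curve = []
    for t in sorted({p.months for p in patients if p.event}):
        # Patients censored at time t are still counted as at risk at t.
        at_risk = sum(1 for p in patients if p.months >= t)
        events = sum(1 for p in patients if p.months == t and p.event)
        survival *= 1 - events / at_risk
        curve.append((t, survival))
    return curve


# Hypothetical toy cohort (not data from any cited study).
cohort = [
    Patient(300, 1000, 60, False),
    Patient(250, 1000, 48, True),
    Patient(200, 1000, 55, False),
    Patient(50, 1000, 12, True),
    Patient(100, 1000, 24, True),
    Patient(120, 1000, 36, False),
]

if __name__ == "__main__":
    high, low = stratify(cohort)
    print("high TIL:", kaplan_meier(high))
    print("low TIL:", kaplan_meier(low))
```

In this toy cohort, the high-TIL group's estimated survival falls to about 0.67 at 48 months. The low-TIL group's estimate falls to about 0.67 at 12 months and about 0.33 at 24 months. Counting censored patients as at risk up to and including their last follow-up time is the usual Kaplan-Meier convention.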

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Grant, S.R.; Andrew, T.W.; Alvarez, E.V.; Huss, W.J.; Paragh, G. Diagnostic and Prognostic Deep Learning Applications for Histological Assessment of Cutaneous Melanoma. Cancers 2022, 14, 6231. https://doi.org/10.3390/cancers14246231