RadWise: A Rank-Based Hybrid Feature Weighting and Selection Method for Proteomic Categorization of Chemoirradiation in Patients with Glioblastoma

Simple Summary

Abstract

1. Introduction

- To the best of our knowledge, this is the first study to use a proteomic dataset acquired before CRT and after its completion to categorize CRT-associated proteomic alteration, based on thousands of proteomic features measured before and after the intervention.

- To our knowledge, this is also the first study to apply filter and embedded FS algorithms to GBM proteomic data acquired during SOC treatment.

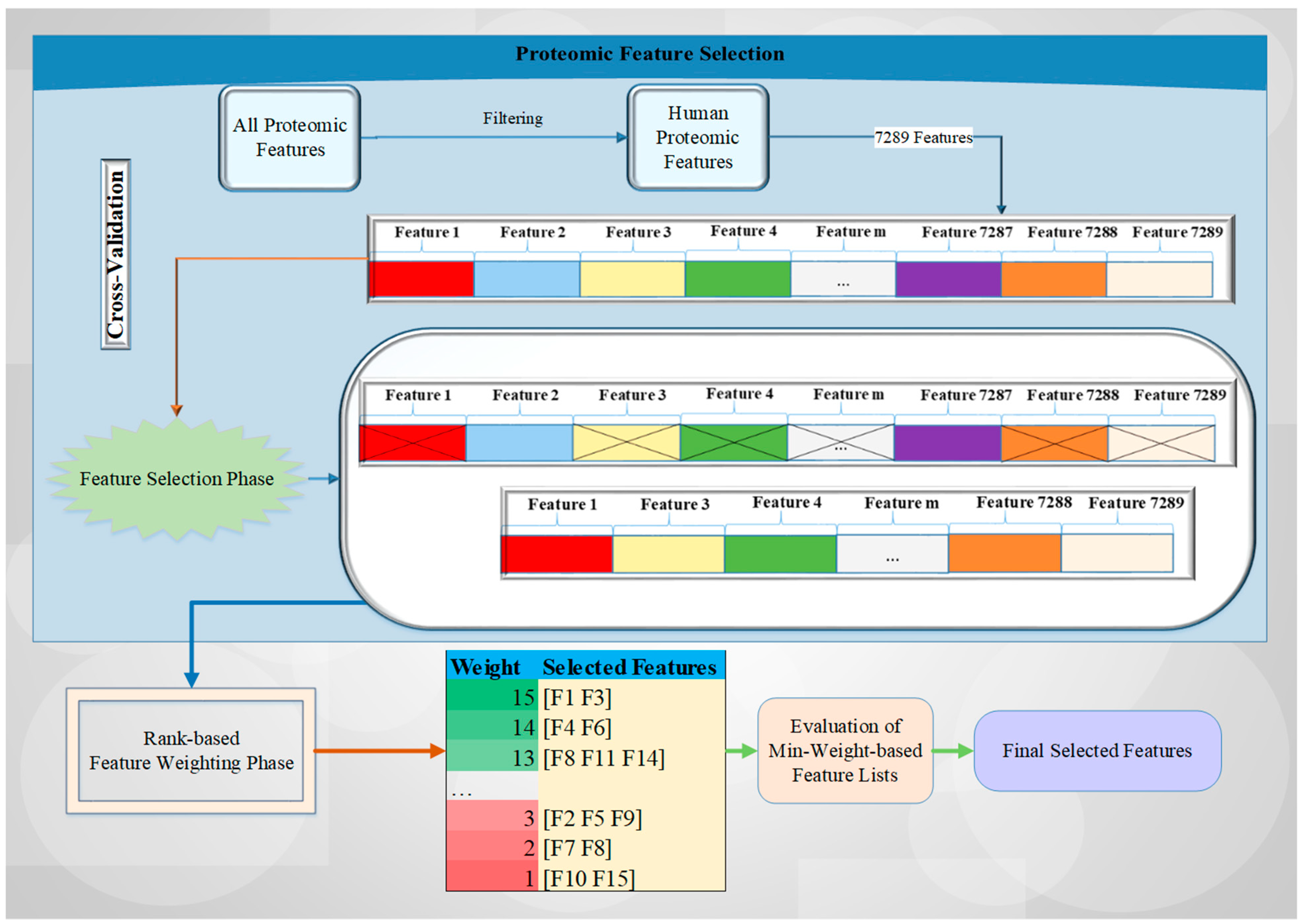

- We present RadWise, a novel rank-based feature weighting and selection mechanism that uses cross-validation to identify relevant feature subsets for machine learning problems.

- We combine the advantages of two efficient and popular FS methods, LASSO and mRMR, with rank-based feature weighting for pattern classification.

- We comprehensively investigate the effects of the FS and feature weighting methods, separately, for five different learning models on the proteomic dataset.

- We also present and discuss in detail the results of conventional statistical approaches without feature engineering and compare them with the FS method.

- We further compare our proposed methodology with our statistical threshold-based heuristic method (OSTH).

- We achieve high performance with nearly a thousand times fewer features than the original proteomic dataset (7289 protein signals reduced to 8).

- We examine the identified features in Ingenuity Pathway Analysis (IPA) to link them to disease (brain cancer, malignancy), pathways, and upstream proteins.

- Our results are promising for GBM proteomic biomarker research.
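The two base selectors that RadWise combines, LASSO (embedded FS) and mRMR (multivariate filter FS), can be illustrated with a short sketch. This is not the authors' code: they cite scikit-learn and the `mrmr` package without giving settings here, so the following choices are assumptions. The mRMR variant uses the difference criterion with absolute Pearson correlation standing in for mutual information (the original formulation uses mutual information); LASSO is scikit-learn's `Lasso` regressing the 0/1 label on standardized features; the `alpha` value and the helper names are hypothetical.

```python
import numpy as np
from sklearn.linear_model import Lasso
from sklearn.preprocessing import StandardScaler


def abs_corr_with(X, v):
    """|Pearson r| between each column of X and the vector v."""
    Xc = X - X.mean(axis=0)
    vc = v - v.mean()
    return np.abs(Xc.T @ vc) / np.sqrt((Xc ** 2).sum(axis=0) * (vc ** 2).sum())


def mrmr_select(X, y, k):
    """Greedy mRMR (difference form): relevance minus mean redundancy."""
    relevance = abs_corr_with(X, y.astype(float))
    corr = np.abs(np.corrcoef(X, rowvar=False))
    selected = [int(np.argmax(relevance))]           # most relevant feature first
    while len(selected) < k:
        redundancy = corr[:, selected].mean(axis=1)  # mean |r| to chosen features
        score = relevance - redundancy
        score[selected] = -np.inf                    # never pick a feature twice
        selected.append(int(np.argmax(score)))
    return selected


def lasso_select(X, y, alpha=0.05):
    """Embedded FS: features with non-zero LASSO coefficients, largest |coef| first."""
    Xs = StandardScaler().fit_transform(X)
    coef = Lasso(alpha=alpha, max_iter=10_000).fit(Xs, y).coef_
    nonzero = np.flatnonzero(coef)
    return nonzero[np.argsort(-np.abs(coef[nonzero]))].tolist()


# Toy data (not study data): features 0 and 1 drive the label,
# and feature 2 is a near-copy of feature 0, i.e. a redundant feature.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 30))
X[:, 2] = X[:, 0] + 0.1 * rng.normal(size=200)
y = (X[:, 0] + X[:, 1] > 0).astype(int)

print("mRMR top-3:", mrmr_select(X, y, 3))
print("LASSO:", lasso_select(X, y))
```

On this toy data, mRMR's first two picks contain feature 1 and only one of the redundant pair {0, 2}, which is the redundancy penalty at work; LASSO keeps any feature with a non-zero coefficient, so it can retain more features than mRMR's fixed `k`.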

2. Methods

2.1. The Proposed Architecture for Feature Selection of Radiation Therapy Categorization of Glioblastoma Patients

2.2. Feature Selection Methods

2.2.1. mRMR

2.2.2. LASSO

2.3. Feature Weighting

2.4. Classification Methods

2.4.1. Support Vector Machine

2.4.2. Logistic Regression

2.4.3. K Nearest Neighbors

2.4.4. Random Forest

2.4.5. AdaBoost

3. Experimental Work

3.1. Experimental Process

3.2. Dataset

3.3. Performance Metrics

3.4. Computational Results

3.4.1. The Effects of Using Feature Selection Methods

3.4.2. The Effects of Using Only LASSO Feature Selection and Weighting Methods

3.4.3. The Effects of Using Only mRMR Feature Selection and Feature Weighting Methods

3.4.4. The Effects of Using LASSO and mRMR Feature Selection and Feature Weighting Methods

3.4.5. Performance Results Based on Feature Selection and Weighting Process

3.5. Comparison with the Related Methods for Proteomic Biomarker Identification

3.5.1. Comparison with Statistical Threshold-Based Heuristic Method (OSTH)

3.5.2. Comparison with the Related Statistical Test-Based Method

- Our feature selection approach employs two strategies: multivariate filter FS (i.e., mRMR) and embedded FS (i.e., LASSO).

- Our hybrid FS method uses cross-validation to obtain more robust results and a final feature set for our dataset.

- Our rank-based weighting approach assigns more importance to mRMR than to LASSO, which yields the reported performance (i.e., accuracy). Because of this weighting criterion, the most prominent features identified in the statistical analyses are also identified by ML. However, signals such as cystatin M, which rank lower in the purely statistical test-based approach, can be elevated because of their interactions with other targets. This is important because novel large proteomic panels may identify molecules with known, unknown, or unassigned biological annotations, and eventually with novel, context-specific functions or roles.

- We evaluated every minimum weight value to find the smallest selected feature set with the highest accuracy. This process reduced 7289 protein signals to 8 while achieving higher accuracy.

- Without this feature selection process, neither the number nor the identity of the relevant features could be determined precisely.
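The cross-validated, mRMR-favoring weighting described in this list can be sketched as a vote aggregation, under assumptions the text does not spell out: over five CV folds, each method votes for the features it selects; an mRMR vote counts 2 and a LASSO vote counts 1 (hypothetical values chosen only to favor mRMR), so a feature's weight ranges from 0 to 15; features are then ranked by weight and kept if their weight is at least a minimum weight `k`. This reading would be consistent with the `k` ranges in the result tables (1 to 5 for a single method, 1 to 15 for the hybrid), but it may differ from RadWise's exact rank-based rule. Function names and the toy fold selections are hypothetical.

```python
from collections import Counter

# Hypothetical vote values: the text says mRMR gets more importance than
# LASSO but does not give the numbers, so 2 and 1 are assumptions.
W_MRMR, W_LASSO = 2, 1


def aggregate_weights(fold_selections, w_mrmr=W_MRMR, w_lasso=W_LASSO):
    """Sum votes over CV folds.

    fold_selections: one (mrmr_features, lasso_features) pair per fold.
    Each fold adds w_mrmr to every feature mRMR picked and w_lasso to
    every feature LASSO picked.
    """
    weights = Counter()
    for mrmr_feats, lasso_feats in fold_selections:
        for feat in set(mrmr_feats):
            weights[feat] += w_mrmr
        for feat in set(lasso_feats):
            weights[feat] += w_lasso
    return weights


def select_min_weight(weights, k):
    """Rank features by weight (ties by name) and keep those with weight >= k."""
    ranked = sorted(weights, key=lambda feat: (-weights[feat], feat))
    return [feat for feat in ranked if weights[feat] >= k]


# Toy selections from five folds (feature names are placeholders).
folds = [
    (["A", "B"], ["A", "C"]),
    (["A", "B"], ["A"]),
    (["A", "D"], ["C"]),
    (["B", "A"], ["A", "B"]),
    (["A"], ["E"]),
]
weights = aggregate_weights(folds)
for k in (13, 7, 2, 1):
    print(k, select_min_weight(weights, k))
```

Raising `k` shrinks the subset monotonically, which is why sweeping every minimum weight value traces out a curve from large feature sets down to very small ones.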

| Entrez Gene Symbol | Target Full Name | Biological Relevance to Glioma |
|---|---|---|
| K2C5 | Keratin, type II cytoskeletal 5 | Yes, evolving biomarker/target [61] |
| Keratin-1 | Keratin, type II cytoskeletal 1 | Yes, evolving biomarker/target [61] |
| STRATIFIN (SFN) | 14-3-3 protein sigma | Yes, tumor suppressor gene expression pattern correlates with glioma grade and prognosis [62] |
| MIC-1 (GDF15) | Growth/differentiation factor 15 | Yes, biomarker, novel immune checkpoint [63] |
| GFAP | Glial fibrillary acidic protein | Yes, evolving biomarker/target [64] |
| CSPG3 (NCAN) | Neurocan core protein | Yes, glycoproteomic profiles of GBM subtypes, differential expression versus control tissue [65] |
| Cystatin M (CST6) | Cystatin M | Yes, cell type-specific expression in normal brain and epigenetic silencing in glioma [66] |
| Proteinase-3 (PRTN3) | Proteinase-3 | Yes, evolving role, may relate to pyroptosis, oxidative stress and immune response [59] |

3.5.3. Connecting the Identified Markers to Disease, Pathways, and Upstream Proteins

4. Discussion

5. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

| Protocol | Most recent amendment approval date | Current protocol version date |
|---|---|---|
| 02C0064 | 14 March 2022 | 14 March 2022 |
| 04C0200 | 19 April 2022 | 25 February 2022 |
| 06C0112 | Study closure approval 30 June 2020 | NA |

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACC | Accuracy |
| AdaBoost | Adaptive Boosting |
| AUC | Area Under the ROC Curve |
| CNS | Central Nervous System |
| CRT | Chemoirradiation |
| F1 | F-Measure |
| FS | Feature Selection |
| FW | Feature Weighting |
| GBM | Glioblastoma Multiforme |
| HGG | High-Grade Glioma |
| IPA | Ingenuity Pathway Analysis |
| KNN | K Nearest Neighbors |
| LASSO | Least Absolute Shrinkage and Selection Operator |
| LGG | Low-Grade Glioma |
| LR | Logistic Regression |
| MRI | Magnetic Resonance Imaging |
| mRMR | Minimum Redundancy Maximum Relevance |
| NCI | National Cancer Institute |
| NIH | National Institutes of Health |
| NP-Hard | Non-Deterministic Polynomial Time Hard |
| OS | Overall Survival |
| PRE | Precision |
| REC | Recall |
| RF | Random Forest |
| ROC | Receiver Operating Characteristic |
| RT | Radiation Therapy |
| SOC | Standard of Care |
| SPEC | Specificity |
| SVM | Support Vector Machine |
| TCGA | The Cancer Genome Atlas |
| TMZ | Temozolomide |
| WHO | World Health Organization |

References

1. Brain Tumors. Available online: https://www.aans.org/en/Patients/Neurosurgical-Conditions-and-Treatments/Brain-Tumors (accessed on 23 January 2023).

2. Hanif, F.; Muzaffar, K.; Perveen, K.; Malhi, S.M.; Simjee, S.U. Glioblastoma multiforme: A review of its epidemiology and pathogenesis through clinical presentation and treatment. Asian Pac. J. Cancer Prev. APJCP 2017, 18, 3.

3. Rock, K.; McArdle, O.; Forde, P.; Dunne, M.; Fitzpatrick, D.; O’Neill, B.; Faul, C. A clinical review of treatment outcomes in glioblastoma multiforme—The validation in a non-trial population of the results of a randomised Phase III clinical trial: Has a more radical approach improved survival? Br. J. Radiol. 2012, 85, e729–e733.

4. Senders, J.T.; Staples, P.; Mehrtash, A.; Cote, D.J.; Taphoorn, M.J.B.; Reardon, D.A.; Gormley, W.B.; Smith, T.R.; Broekman, M.L.; Arnaout, O. An Online Calculator for the Prediction of Survival in Glioblastoma Patients Using Classical Statistics and Machine Learning. Neurosurgery 2020, 86, E184–E192.

5. Zhao, R.; Zeng, J.; DeVries, K.; Proulx, R.; Krauze, A.V. Optimizing management of the elderly patient with glioblastoma: Survival prediction online tool based on BC Cancer Registry real-world data. Neurooncol Adv. 2022, 4, vdac052.

6. Louis, D.N.; Perry, A.; Wesseling, P.; Brat, D.J.; Cree, I.A.; Figarella-Branger, D.; Hawkins, C.; Ng, H.; Pfister, S.M.; Reifenberger, G. The 2021 WHO classification of tumors of the central nervous system: A summary. Neuro-Oncol. 2021, 23, 1231–1251.

7. Kalinina, J.; Peng, J.; Ritchie, J.C.; Van Meir, E.G. Proteomics of gliomas: Initial biomarker discovery and evolution of technology. Neuro-Oncol. 2011, 13, 926–942.

8. Liu, J.; Zheng, S.; Yu, J.-K.; Zhang, J.-M.; Chen, Z. Serum protein fingerprinting coupled with artificial neural network distinguishes glioma from healthy population or brain benign tumor. J. Zhejiang Univ. Sci. B 2005, 6, 4.

9. Cervi, D.; Yip, T.-T.; Bhattacharya, N.; Podust, V.N.; Peterson, J.; Abou-Slaybi, A.; Naumov, G.N.; Bender, E.; Almog, N.; Italiano, J.E., Jr. Platelet-associated PF-4 as a biomarker of early tumor growth. Blood J. Am. Soc. Hematol. 2008, 111, 1201–1207.

10. Chen, S.; Zhao, H.; Deng, J.; Liao, P.; Xu, Z.; Cheng, Y. Comparative proteomics of glioma stem cells and differentiated tumor cells identifies S100A9 as a potential therapeutic target. J. Cell. Biochem. 2013, 114, 2795–2808.

11. Guyon, I.; Elisseeff, A. An introduction to variable and feature selection. J. Mach. Learn. Res. 2003, 3, 1157–1182.

12. Tasci, E.; Zhuge, Y.; Kaur, H.; Camphausen, K.; Krauze, A.V. Hierarchical Voting-Based Feature Selection and Ensemble Learning Model Scheme for Glioma Grading with Clinical and Molecular Characteristics. Int. J. Mol. Sci. 2022, 23, 14155.

13. Chen, R.-C.; Dewi, C.; Huang, S.-W.; Caraka, R.E. Selecting critical features for data classification based on machine learning methods. J. Big Data 2020, 7, 1–26.

14. Gokalp, O.; Tasci, E.; Ugur, A. A novel wrapper feature selection algorithm based on iterated greedy metaheuristic for sentiment classification. Expert Syst. Appl. 2020, 146, 113176.

15. Remeseiro, B.; Bolon-Canedo, V. A review of feature selection methods in medical applications. Comput. Biol. Med. 2019, 112, 103375.

16. Zhang, Y.; Li, A.; Peng, C.; Wang, M. Improve glioblastoma multiforme prognosis prediction by using feature selection and multiple kernel learning. IEEE/ACM Trans. Comput. Biol. Bioinform. 2016, 13, 825–835.

17. Baid, U.; Rane, S.U.; Talbar, S.; Gupta, S.; Thakur, M.H.; Moiyadi, A.; Mahajan, A. Overall survival prediction in glioblastoma with radiomic features using machine learning. Front. Comput. Neurosci. 2020, 14, 61.

18. Bijari, S.; Jahanbakhshi, A.; Hajishafiezahramini, P.; Abdolmaleki, P. Differentiating Glioblastoma Multiforme from Brain Metastases Using Multidimensional Radiomics Features Derived from MRI and Multiple Machine Learning Models. BioMed Res. Int. 2022, 2022, 2016006.

19. Tasci, E.; Zhuge, Y.; Camphausen, K.; Krauze, A.V. Bias and Class Imbalance in Oncologic Data—Towards Inclusive and Transferrable AI in Large Scale Oncology Data Sets. Cancers 2022, 14, 2897.

20. Hilario, M.; Kalousis, A. Approaches to dimensionality reduction in proteomic biomarker studies. Brief. Bioinform. 2008, 9, 102–118.

21. Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B (Methodol.) 1996, 58, 267–288.

22. Chandrashekar, G.; Sahin, F. A survey on feature selection methods. Comput. Electr. Eng. 2014, 40, 16–28.

23. Tang, J.; Alelyani, S.; Liu, H. Feature selection for classification: A review. Data Classif. Algorithms Appl. 2014, 37, 1–29.

24. Li, J.; Cheng, K.; Wang, S.; Morstatter, F.; Trevino, R.P.; Tang, J.; Liu, H. Feature selection: A data perspective. ACM Comput. Surv. (CSUR) 2017, 50, 1–45.

25. Zhao, Z.; Anand, R.; Wang, M. Maximum relevance and minimum redundancy feature selection methods for a marketing machine learning platform. In Proceedings of the 2019 IEEE International Conference on Data Science and Advanced Analytics (DSAA), Washington, DC, USA, 5–8 October 2019; pp. 442–452.

26. Dhal, P.; Azad, C. A comprehensive survey on feature selection in the various fields of machine learning. Appl. Intell. 2022, 52, 4543–4581.

27. Peng, H.; Long, F.; Ding, C. Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1226–1238.

28. Alshamlan, H.; Badr, G.; Alohali, Y. mRMR-ABC: A hybrid gene selection algorithm for cancer classification using microarray gene expression profiling. BioMed Res. Int. 2015, 2015, 604910.

29. Freijeiro-González, L.; Febrero-Bande, M.; González-Manteiga, W. A critical review of LASSO and its derivatives for variable selection under dependence among covariates. Int. Stat. Rev. 2022, 90, 118–145.

30. Muthukrishnan, R.; Rohini, R. LASSO: A feature selection technique in predictive modeling for machine learning. In Proceedings of the 2016 IEEE International Conference on Advances in Computer Applications (ICACA), Coimbatore, India, 4–24 October 2016; pp. 18–20.

31. Lasso. Available online: https://scikit-learn.org/stable/modules/linear_model.html#lasso (accessed on 19 August 2022).

32. Zou, H.; Hastie, T.; Tibshirani, R. On the “degrees of freedom” of the lasso. Ann. Stat. 2007, 35, 2173–2192.

33. Tahir, M.A.; Bouridane, A.; Kurugollu, F. Simultaneous feature selection and feature weighting using Hybrid Tabu Search/K-nearest neighbor classifier. Pattern Recognit. Lett. 2007, 28, 438–446.

34. Jiang, T.; Gradus, J.L.; Rosellini, A.J. Supervised machine learning: A brief primer. Behav. Ther. 2020, 51, 675–687.

35. Cunningham, P.; Cord, M.; Delany, S.J. Supervised learning. In Machine Learning Techniques for Multimedia: Case Studies on Organization and Retrieval; Springer: Berlin/Heidelberg, Germany, 2008; pp. 21–49.

36. Cristianini, N.; Ricci, E. Support vector machines. Encycl. Algorithm 2008.

37. Boser, B.E.; Guyon, I.M.; Vapnik, V.N. A training algorithm for optimal margin classifiers. In Proceedings of the Fifth Annual Workshop on Computational Learning Theory, Pittsburgh, PA, USA, 27–29 July 1992; pp. 144–152.

38. Othman, M.F.B.; Abdullah, N.B.; Kamal, N.F.B. MRI brain classification using support vector machine. In Proceedings of the 2011 Fourth International Conference on Modeling, Simulation and Applied Optimization, Kuala Lumpur, Malaysia, 19–21 April 2011; pp. 1–4.

39. Schlag, S.; Schmitt, M.; Schulz, C. Faster support vector machines. J. Exp. Algorithmics (JEA) 2021, 26, 1–21.

40. Aizerman, A. Theoretical foundations of the potential function method in pattern recognition learning. Autom. Remote Control 1964, 25, 821–837.

41. Seddik, A.F.; Shawky, D.M. Logistic regression model for breast cancer automatic diagnosis. In Proceedings of the 2015 SAI Intelligent Systems Conference (IntelliSys), London, UK, 10–11 November 2015; pp. 150–154.

42. Boateng, E.Y.; Abaye, D.A. A review of the logistic regression model with emphasis on medical research. J. Data Anal. Inf. Process. 2019, 7, 190–207.

43. Cunningham, P.; Delany, S.J. k-Nearest neighbour classifiers-A Tutorial. ACM Comput. Surv. (CSUR) 2021, 54, 1–25.

44. Jiang, L.; Cai, Z.; Wang, D.; Jiang, S. Survey of improving k-nearest-neighbor for classification. In Proceedings of the Fourth International Conference on Fuzzy Systems and Knowledge Discovery (FSKD 2007), Haikou, China, 24–27 August 2007; pp. 679–683.

45. Zhang, Z. Introduction to machine learning: K-nearest neighbors. Ann. Transl. Med. 2016, 4, 218.

46. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

47. Biau, G. Analysis of a random forests model. J. Mach. Learn. Res. 2012, 13, 1063–1095.

48. Tasci, E. A meta-ensemble classifier approach: Random rotation forest. Balk. J. Electr. Comput. Eng. 2019, 7, 182–187.

49. Oshiro, T.M.; Perez, P.S.; Baranauskas, J.A. How many trees in a random forest? In Proceedings of the Machine Learning and Data Mining in Pattern Recognition: 8th International Conference, MLDM 2012, Berlin, Germany, 13–20 July 2012; pp. 154–168.

50. Wang, F.; Li, Z.; He, F.; Wang, R.; Yu, W.; Nie, F. Feature learning viewpoint of AdaBoost and a new algorithm. IEEE Access 2019, 7, 149890–149899.

51. Sagi, O.; Rokach, L. Ensemble learning: A survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2018, 8, e1249.

52. Scikit-Learn. Available online: https://scikit-learn.org/stable/ (accessed on 25 August 2022).

53. mRMR Feature Selection. Available online: https://github.com/smazzanti/mrmr (accessed on 17 February 2023).

54. Candia, J.; Daya, G.N.; Tanaka, T.; Ferrucci, L.; Walker, K.A. Assessment of variability in the plasma 7k SomaScan proteomics assay. Sci. Rep. 2022, 12, 17147.

55. Palantir Foundry—The NIH Integrated Data Analysis Platform (NIDAP); NCI Center for Biomedical Informatics & Information Technology (CBIIT); Software Provided by Palantir Technologies Inc. Available online: https://www.palantir.com (accessed on 7 March 2023).

56. Gold, L.; Walker, J.J.; Wilcox, S.K.; Williams, S. Advances in human proteomics at high scale with the SOMAscan proteomics platform. New Biotechnol. 2012, 29, 543–549.

57. Tuerk, C.; Gold, L. Systematic evolution of ligands by exponential enrichment: RNA ligands to bacteriophage T4 DNA polymerase. Science 1990, 249, 505–510.

58. Fawcett, T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

59. Zeng, S.; Li, W.; Ouyang, H.; Xie, Y.; Feng, X.; Huang, L. A Novel Prognostic Pyroptosis-Related Gene Signature Correlates to Oxidative Stress and Immune-Related Features in Gliomas. Oxid. Med. Cell. Longev. 2023, 2023, 4256116.

60. Krauze, A.V.; Michael, S.; Trinh, N.; Chen, Q.; Yan, C.; Hu, Y.; Jiang, W.; Tasci, E.; Cooley, Z.T.; Sproull, M.T.; et al. Glioblastoma survival is associated with distinct proteomic alteration signatures post chemoirradiation in a large-scale proteomic panel. Crit. Rev. Oncol./Hematol. 2023. Submitted.

61. Zottel, A.; Jovčevska, I.; Šamec, N.; Komel, R. Cytoskeletal proteins as glioblastoma biomarkers and targets for therapy: A systematic review. Crit. Rev. Oncol./Hematol. 2021, 160, 103283.

62. Deng, J.; Gao, G.; Wang, L.; Wang, T.; Yu, J.; Zhao, Z. Stratifin expression is a novel prognostic factor in human gliomas. Pathol.-Res. Pract. 2011, 207, 674–679.

63. Wischhusen, J.; Melero, I.; Fridman, W.H. Growth/Differentiation Factor-15 (GDF-15): From Biomarker to Novel Targetable Immune Checkpoint. Front. Immunol. 2020, 11, 951.

64. Radu, R.; Petrescu, G.E.D.; Gorgan, R.M.; Brehar, F.M. GFAPδ: A Promising Biomarker and Therapeutic Target in Glioblastoma. Front. Oncol. 2022, 12, 859247.

65. Sethi, M.K.; Downs, M.; Shao, C.; Hackett, W.E.; Phillips, J.J.; Zaia, J. In-Depth Matrisome and Glycoproteomic Analysis of Human Brain Glioblastoma Versus Control Tissue. Mol. Cell Proteomics 2022, 21, 100216.

66. Qiu, J.; Ai, L.; Ramachandran, C.; Yao, B.; Gopalakrishnan, S.; Fields, C.R.; Delmas, A.L.; Dyer, L.M.; Melnick, S.J.; Yachnis, A.T.; et al. Invasion suppressor cystatin E/M (CST6): High-level cell type-specific expression in normal brain and epigenetic silencing in gliomas. Lab. Investig. 2008, 88, 910–925.

67. Krämer, A.; Green, J.; Pollard, J., Jr.; Tugendreich, S. Causal analysis approaches in Ingenuity Pathway Analysis. Bioinformatics 2014, 30, 523–530.

68. Mann, M.; Kumar, C.; Zeng, W.-F.; Strauss, M.T. Artificial intelligence for proteomics and biomarker discovery. Cell Syst. 2021, 12, 759–770.

69. Swan, A.L.; Mobasheri, A.; Allaway, D.; Liddell, S.; Bacardit, J. Application of machine learning to proteomics data: Classification and biomarker identification in postgenomics biology. Omics J. Integr. Biol. 2013, 17, 595–610.

70. Sumonja, N.; Gemovic, B.; Veljkovic, N.; Perovic, V. Automated feature engineering improves prediction of protein–protein interactions. Amino Acids 2019, 51, 1187–1200.

71. Koras, K.; Juraeva, D.; Kreis, J.; Mazur, J.; Staub, E.; Szczurek, E. Feature selection strategies for drug sensitivity prediction. Sci. Rep. 2020, 10, 9377.

72. Demirel, H.C.; Arici, M.K.; Tuncbag, N. Computational approaches leveraging integrated connections of multi-omic data toward clinical applications. Mol. Omics 2022, 18, 7–18.

73. Jiang, L.; Zhang, Z.; Guo, S.; Zhao, Y.; Zhou, P. Clinical-Radiomics Nomogram Based on Contrast-Enhanced Ultrasound for Preoperative Prediction of Cervical Lymph Node Metastasis in Papillary Thyroid Carcinoma. Cancers 2023, 15, 1613.

74. Adeoye, J.; Wan, C.C.J.; Zheng, L.-W.; Thomson, P.; Choi, S.-W.; Su, Y.-X. Machine Learning-Based Genome-Wide Salivary DNA Methylation Analysis for Identification of Noninvasive Biomarkers in Oral Cancer Diagnosis. Cancers 2022, 14, 4935.

75. D’Urso, P.; Farneti, A.; Marucci, L.; Marzi, S.; Piludu, F.; Vidiri, A.; Sanguineti, G. Predictors of outcome after (chemo) radiotherapy for node-positive oropharyngeal cancer: The role of functional MRI. Cancers 2022, 14, 2477.

76. Ghandhi, S.A.; Ming, L.; Ivanov, V.N.; Hei, T.K.; Amundson, S.A. Regulation of early signaling and gene expression in the alpha-particle and bystander response of IMR-90 human fibroblasts. BMC Med. Genomics 2010, 3, 31.

| ML model (ACC %) | Without FS | LASSO FS | mRMR FS |
|---|---|---|---|
| SVM | 57.860 | 78.674 | 91.515 |
| LR | 67.633 | 85.341 | 92.708 |
| KNN | 62.197 | 53.068 | 88.466 |
| RF | 73.826 | 88.466 | 89.659 |
| AdaBoost | 88.409 | 89.072 | 88.447 |

| k | # of Features | SVM | LR | KNN | RF | AdaBoost |
|---|---|---|---|---|---|---|
| 5 | 11 | 93.314 | 92.083 | 82.386 | 91.496 | 91.477 |
| 4 | 26 | 89.640 | 93.314 | 62.197 | 93.939 | 90.890 |
| 3 | 44 | 89.053 | 96.363 | 46.345 | 92.121 | 93.939 |
| 2 | 90 | 85.985 | 92.064 | 60.379 | 91.496 | 93.901 |
| 1 | 197 | 76.269 | 85.966 | 54.868 | 87.841 | 87.822 |

| k | # of Features | SVM | LR | KNN | RF | AdaBoost |
|---|---|---|---|---|---|---|
| 5 | 5 | 86.004 | 88.428 | 87.235 | 87.841 | 87.197 |
| 4 | 7 | 90.890 | 92.708 | 90.265 | 91.496 | 91.496 |
| 3 | 8 | 95.152 | 96.364 | 92.708 | 90.871 | 93.920 |
| 2 | 11 | 92.708 | 96.364 | 92.689 | 90.284 | 94.508 |
| 1 | 34 | 92.102 | 92.102 | 87.254 | 92.121 | 90.871 |

| k | # of Features | SVM | LR | KNN | RF | AdaBoost |
|---|---|---|---|---|---|---|
| 15 | 2 | 87.216 | 87.216 | 89.659 | 87.841 | 85.379 |
| 14 | 2 | 87.216 | 87.216 | 89.659 | 87.841 | 85.379 |
| 13 | 4 | 90.265 | 90.265 | 90.284 | 92.727 | 89.034 |
| 12 | 6 | 93.920 | 92.708 | 91.477 | 92.102 | 93.314 |
| 11 | 6 | 93.920 | 92.708 | 91.477 | 92.102 | 93.314 |
| 10 | 8 | 95.152 | 96.364 | 92.708 | 90.265 | 93.920 |
| 9 | 8 | 95.152 | 96.364 | 92.708 | 90.265 | 93.920 |
| 8 | 8 | 95.152 | 96.364 | 92.708 | 90.265 | 93.920 |
| 7 | 10 | 93.333 | 94.546 | 90.284 | 92.102 | 90.284 |
| 6 | 12 | 93.939 | 95.152 | 90.909 | 91.496 | 90.246 |
| 5 | 17 | 96.345 | 95.114 | 88.447 | 91.496 | 90.871 |
| 4 | 32 | 93.295 | 95.739 | 68.921 | 90.890 | 89.640 |
| 3 | 52 | 92.121 | 95.151 | 49.962 | 93.333 | 92.670 |
| 2 | 113 | 87.216 | 92.670 | 60.379 | 91.496 | 95.152 |
| 1 | 218 | 78.087 | 85.966 | 55.492 | 89.053 | 93.314 |
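The minimum-weight sweep described in Section 3.5.2 (trying every threshold k and keeping the smallest feature set with the highest accuracy) can be replayed on the table above. The sketch below uses its LR column, with values copied from the table; breaking accuracy ties in favor of fewer features is our reading of "minimum selected features", and the function name is hypothetical.

```python
# (k, number of selected features, LR accuracy %) copied from the table above.
LR_SWEEP = [
    (15, 2, 87.216), (14, 2, 87.216), (13, 4, 90.265), (12, 6, 92.708),
    (11, 6, 92.708), (10, 8, 96.364), (9, 8, 96.364), (8, 8, 96.364),
    (7, 10, 94.546), (6, 12, 95.152), (5, 17, 95.114), (4, 32, 95.739),
    (3, 52, 95.151), (2, 113, 92.670), (1, 218, 85.966),
]


def best_threshold(sweep):
    """Smallest feature set that reaches the best accuracy in the sweep.

    Returns (n_features, accuracy, sorted thresholds k that produce it).
    """
    best_acc = max(acc for _, _, acc in sweep)
    n_min = min(n for _, n, acc in sweep if acc == best_acc)
    ks = sorted(k for k, n, acc in sweep if acc == best_acc and n == n_min)
    return n_min, best_acc, ks


n, acc, ks = best_threshold(LR_SWEEP)
print(f"{n} features, {acc}% accuracy, k in {ks}")
print(f"reduction: 7289 -> {n} (about {7289 / n:.0f}x)")
```

For LR this recovers the 8-feature subset at 96.364% (k = 8, 9, or 10), a reduction of about 911 times from 7289 signals. Applied to the SVM column of the same table, the rule would instead pick 17 features (96.345%, k = 5), so the chosen subset depends on which classifier drives the sweep.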

| k | # of Features | SVM | LR | KNN | RF | AdaBoost |
|---|---|---|---|---|---|---|
| 15 | 2 | 5.175 | 4.408 | 4.072 | 4.232 | 2.191 |
| 14 | 2 | 5.175 | 4.408 | 4.072 | 4.232 | 2.191 |
| 13 | 4 | 4.423 | 4.821 | 4.425 | 4.924 | 2.384 |
| 12 | 6 | 5.060 | 5.269 | 3.509 | 4.088 | 3.515 |
| 11 | 6 | 5.060 | 5.269 | 3.509 | 4.088 | 3.515 |
| 10 | 8 | 3.636 | 4.454 | 5.269 | 3.496 | 4.272 |
| 9 | 8 | 3.636 | 4.454 | 5.269 | 3.496 | 4.272 |
| 8 | 8 | 3.636 | 4.454 | 5.269 | 3.496 | 4.272 |
| 7 | 10 | 4.848 | 5.555 | 4.425 | 4.088 | 4.425 |
| 6 | 12 | 4.285 | 3.636 | 6.357 | 3.995 | 3.526 |
| 5 | 17 | 2.966 | 2.444 | 3.476 | 4.827 | 5.400 |
| 4 | 32 | 6.177 | 4.105 | 6.384 | 4.259 | 1.442 |
| 3 | 52 | 4.535 | 2.424 | 7.329 | 4.020 | 2.469 |
| 2 | 113 | 4.807 | 1.557 | 4.954 | 4.430 | 4.924 |
| 1 | 218 | 4.720 | 3.133 | 5.559 | 3.031 | 3.515 |

| ML | ACC% | AUC | F1 | PRE | REC | SPEC |
|---|---|---|---|---|---|---|
| SVM | 57.860 | 0.415 | 0.518 | 0.698 | 0.515 | 0.690 |
| LR | 67.633 | 0.755 | 0.676 | 0.681 | 0.681 | 0.673 |
| KNN | 62.197 | 0.647 | 0.581 | 0.662 | 0.527 | 0.722 |
| RF | 73.826 | 0.808 | 0.744 | 0.768 | 0.746 | 0.737 |
| AdaBoost | 88.409 | 0.951 | 0.886 | 0.882 | 0.893 | 0.873 |

| ML | ACC% | AUC | F1 | PRE | REC | SPEC |
|---|---|---|---|---|---|---|
| SVM | 95.152 | 0.989 | 0.949 | 0.975 | 0.928 | 0.976 |
| LR | 96.364 | 0.987 | 0.964 | 0.963 | 0.965 | 0.965 |
| KNN | 92.708 | 0.965 | 0.930 | 0.929 | 0.932 | 0.923 |
| RF | 90.265 | 0.978 | 0.902 | 0.885 | 0.928 | 0.876 |
| AdaBoost | 93.920 | 0.979 | 0.941 | 0.941 | 0.942 | 0.935 |
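The metrics in the tables above (ACC, AUC, F1, PRE, REC, SPEC) are presumably the standard binary-classification definitions. The sketch below computes them from a single set of labels, predictions, and scores. Which class is treated as positive and how values are aggregated across CV folds are not stated in this section, so both are left to the caller. The function names, the 0.5 threshold, and the toy labels are illustrative assumptions, not study data.

```python
def binary_metrics(y_true, y_pred):
    """ACC, PRE, REC, SPEC and F1 for 0/1 labels, with 1 as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(num, den):
        return num / den if den else 0.0  # guard against an empty class

    return {
        "ACC": ratio(tp + tn, len(pairs)),
        "PRE": ratio(tp, tp + fp),
        "REC": ratio(tp, tp + fn),  # sensitivity
        "SPEC": ratio(tn, tn + fp),
        "F1": ratio(2 * tp, 2 * tp + fp + fn),
    }


def roc_auc(y_true, scores):
    """AUC as P(a random positive scores above a random negative), ties count 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Illustrative toy labels and scores (not study data); threshold 0.5.
y_true = [1, 1, 1, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3]
y_pred = [int(s >= 0.5) for s in scores]
print(binary_metrics(y_true, y_pred))
print(roc_auc(y_true, scores))
```

Unlike the other five metrics, AUC is computed from continuous scores rather than thresholded predictions, which is why a classifier can have a low AUC and a moderate accuracy at the same time.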

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tasci, E.; Jagasia, S.; Zhuge, Y.; Sproull, M.; Cooley Zgela, T.; Mackey, M.; Camphausen, K.; Krauze, A.V. RadWise: A Rank-Based Hybrid Feature Weighting and Selection Method for Proteomic Categorization of Chemoirradiation in Patients with Glioblastoma. Cancers 2023, 15, 2672. https://doi.org/10.3390/cancers15102672