MVI-TR: A Transformer-Based Deep Learning Model with Contrast-Enhanced CT for Preoperative Prediction of Microvascular Invasion in Hepatocellular Carcinoma

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Patients

- (a) Pathologically confirmed HCC after R0 resection or LT;
- (b) Single tumor without satellite nodules and a lesion diameter ≤ 5 cm;
- (c) Pathological assessment of MVI available;
- (d) Preoperative hepatic CECT scan performed less than 1 month before resection or LT;
- (e) Well-preserved clinical and imaging information available for re-evaluation.

- (a) No hepatic CECT within 1 month before resection or LT;
- (b) Recurrent HCC;
- (c) Extrahepatic metastases or macrovascular invasion;
- (d) Multiple liver tumors;
- (e) Unavailable clinical or pathological information;
- (f) Poor imaging quality that did not match the region of interest (ROI) definition;
- (g) Cancer-related preoperative treatment, including TACE, radiofrequency ablation (RFA), chemotherapy, targeted therapy, immunotherapy, or other antitumor treatments.
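The two criteria lists above can be read as a single eligibility predicate. The following is a minimal sketch of that reading, not the authors' screening code: all field names are hypothetical, and "1 month" is assumed to mean 30 days.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import Optional

# Assumptions for illustration: "1 month" is taken as 30 days, and all
# field names below are hypothetical, not taken from the study database.
MAX_CECT_INTERVAL_DAYS = 30
MAX_DIAMETER_CM = 5.0


@dataclass(frozen=True)
class Candidate:
    confirmed_hcc: bool              # pathologically confirmed after R0 resection or LT
    tumor_count: int
    satellite_nodules: bool
    diameter_cm: float
    mvi_assessed: bool
    cect_date: Optional[date]        # None if no preoperative hepatic CECT exists
    surgery_date: date
    recurrent_hcc: bool
    metastasis_or_macrovascular: bool
    records_complete: bool
    image_quality_ok: bool
    prior_antitumor_treatment: bool


def is_eligible(c: Candidate) -> bool:
    """Return True only if every inclusion criterion holds and no exclusion applies."""
    if c.cect_date is None:
        return False
    days_before_surgery = (c.surgery_date - c.cect_date).days
    if not 0 <= days_before_surgery <= MAX_CECT_INTERVAL_DAYS:
        return False
    included = (
        c.confirmed_hcc
        and c.tumor_count == 1
        and not c.satellite_nodules
        and c.diameter_cm <= MAX_DIAMETER_CM
        and c.mvi_assessed
        and c.records_complete
    )
    excluded = (
        c.recurrent_hcc
        or c.metastasis_or_macrovascular
        or not c.image_quality_ok
        or c.prior_antitumor_treatment
    )
    return included and not excluded


example = Candidate(
    confirmed_hcc=True, tumor_count=1, satellite_nodules=False, diameter_cm=2.6,
    mvi_assessed=True, cect_date=date(2020, 3, 1), surgery_date=date(2020, 3, 15),
    recurrent_hcc=False, metastasis_or_macrovascular=False, records_complete=True,
    image_quality_ok=True, prior_antitumor_treatment=False,
)
print(is_eligible(example))
print(is_eligible(replace(example, prior_antitumor_treatment=True)))
```

Keeping the inclusion and exclusion checks as separate expressions mirrors the two lists, so each clause can be traced back to one lettered criterion.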

2.2. Clinical Characteristics and Pathological Examination

2.3. CT Data Collection

2.4. Tumor Segmentation

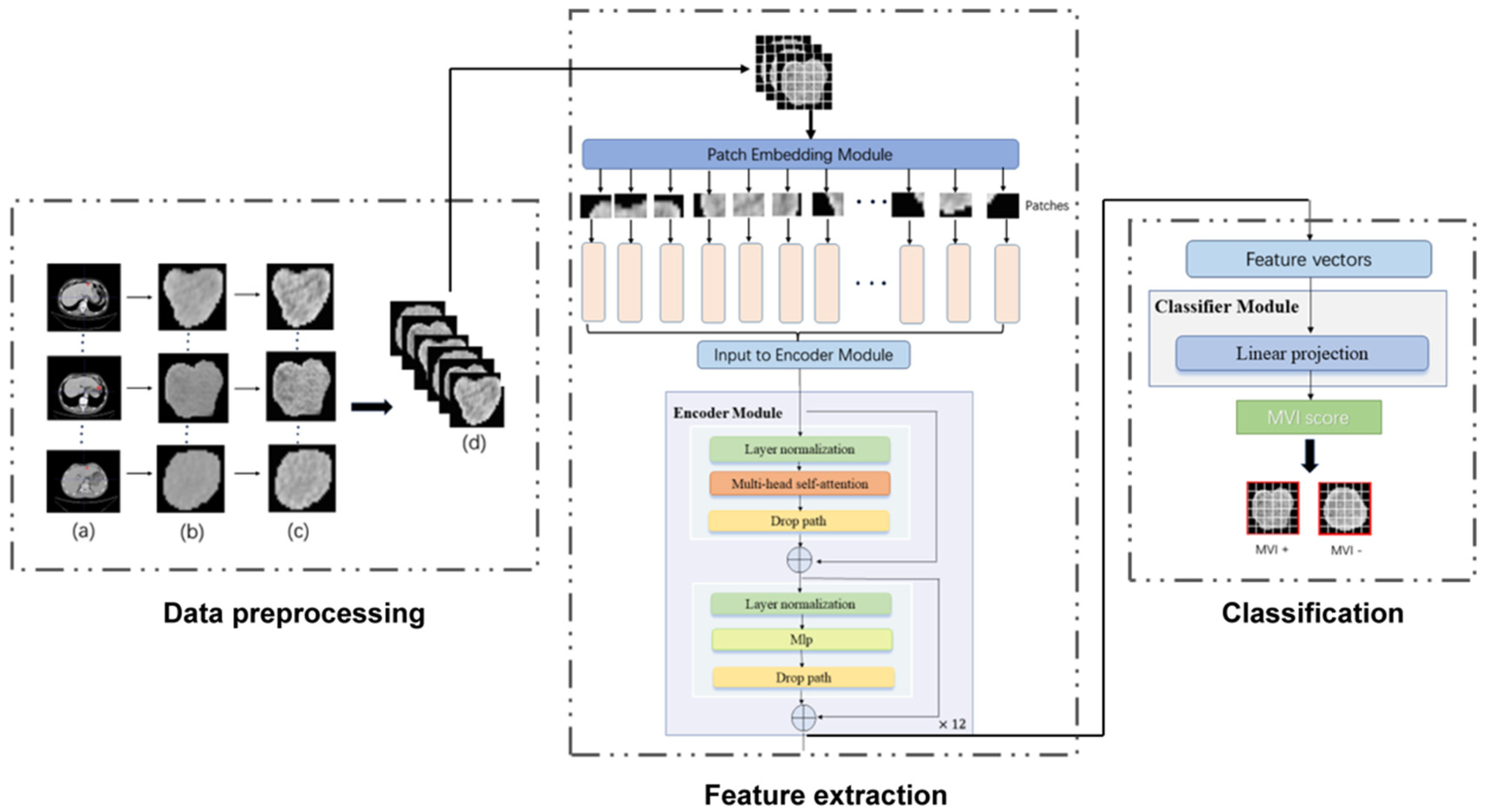

2.5. Data Preprocessing

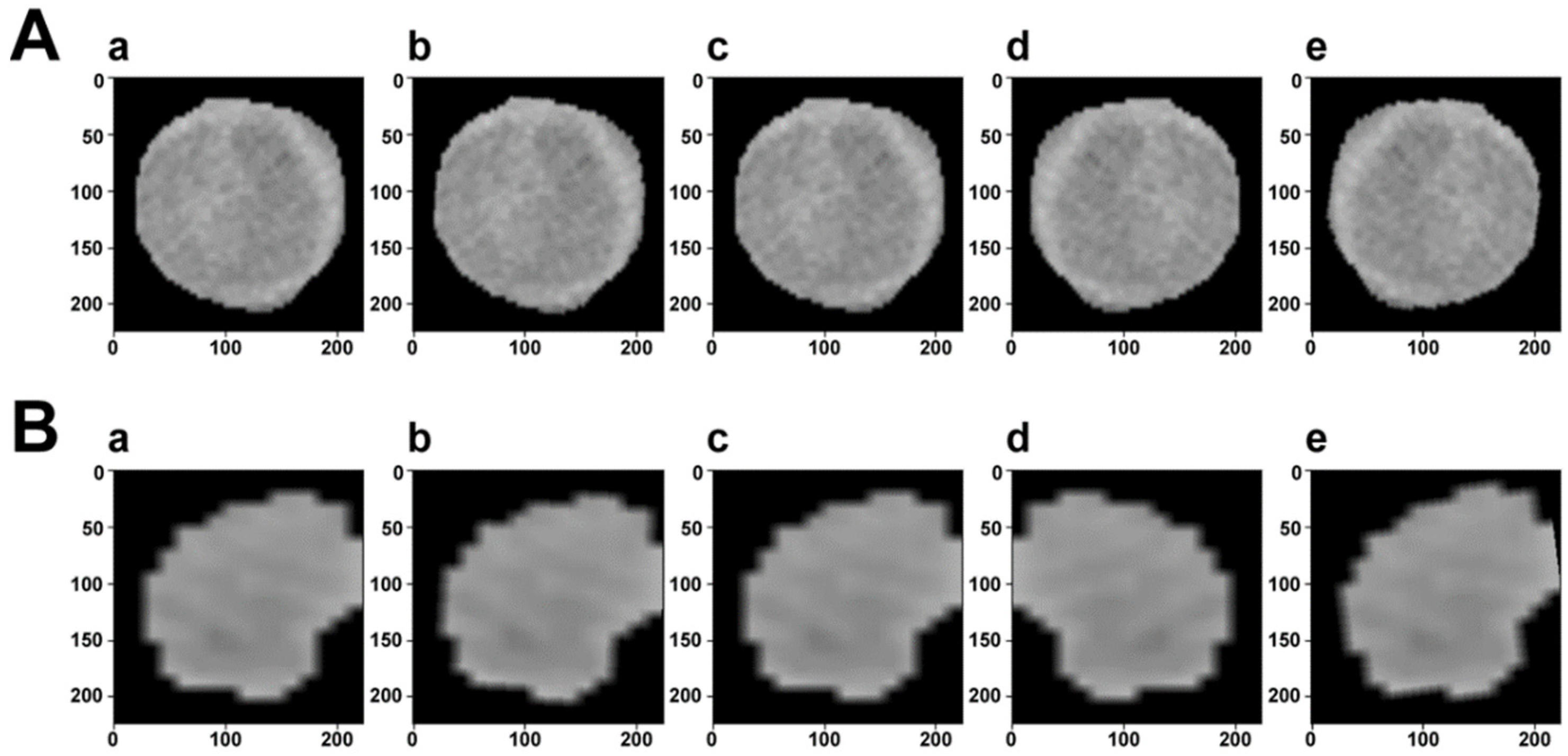

2.6. Data Augmentation

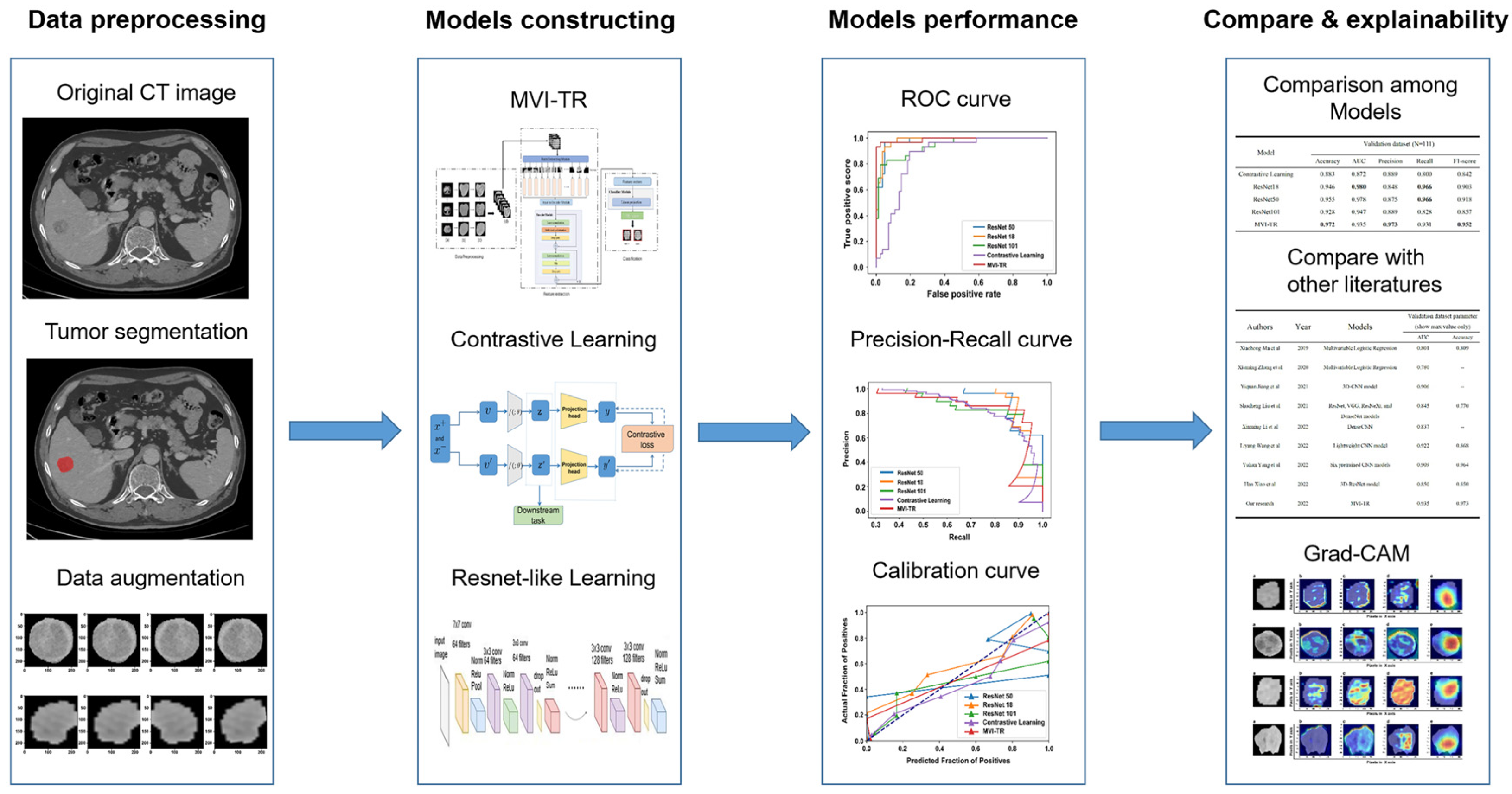

2.7. Deep Learning Models

2.7.1. Contrastive Learning

2.7.2. MVI-TR: A Transformer-Based Model

2.7.3. ResNets Family

2.8. Regularization Techniques

2.9. Implementation

- (1) Rotation by a randomly selected angle from −10 degrees to 10 degrees;
- (2) Cropping the image with a random size from 0.8 to 1.0 and a random aspect ratio from 0.95 to 1.05;
- (3) Flipping the image horizontally with a probability of 1/2.
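The three operations listed above can be sketched as a parameter sampler. This is a minimal illustration using only the Python standard library, not the authors' implementation. It assumes that the "size" in step (2) is the fraction of the image area kept by the crop, that the ratio is crop width divided by crop height, and that step (3) is a horizontal mirror; the function name `sample_augmentation` and the returned keys are hypothetical.

```python
import math
import random


def sample_augmentation(width, height, rng):
    """Draw one set of augmentation parameters for a width x height image."""
    angle = rng.uniform(-10.0, 10.0)      # (1) rotation angle in degrees
    scale = rng.uniform(0.8, 1.0)         # (2) assumed: fraction of image area kept
    ratio = rng.uniform(0.95, 1.05)       # (2) assumed: crop width / crop height
    area = scale * width * height
    # Solve crop_w * crop_h = area and crop_w / crop_h = ratio, clamped to the image.
    crop_w = min(width, round(math.sqrt(area * ratio)))
    crop_h = min(height, round(math.sqrt(area / ratio)))
    left = rng.randint(0, width - crop_w)  # random crop position inside the image
    top = rng.randint(0, height - crop_h)
    flip = rng.random() < 0.5             # (3) horizontal flip with probability 1/2
    return {
        "angle": angle,
        "crop_box": (left, top, crop_w, crop_h),
        "flip": flip,
    }


rng = random.Random(0)  # fixed seed so the draw is reproducible
params = sample_augmentation(224, 224, rng)
print(params)
```

Sampling the parameters separately from applying them keeps the sketch independent of any particular image library; the returned values could be passed to whichever rotate, crop, and flip routines are in use.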

3. Results

3.1. Demographic Characteristics

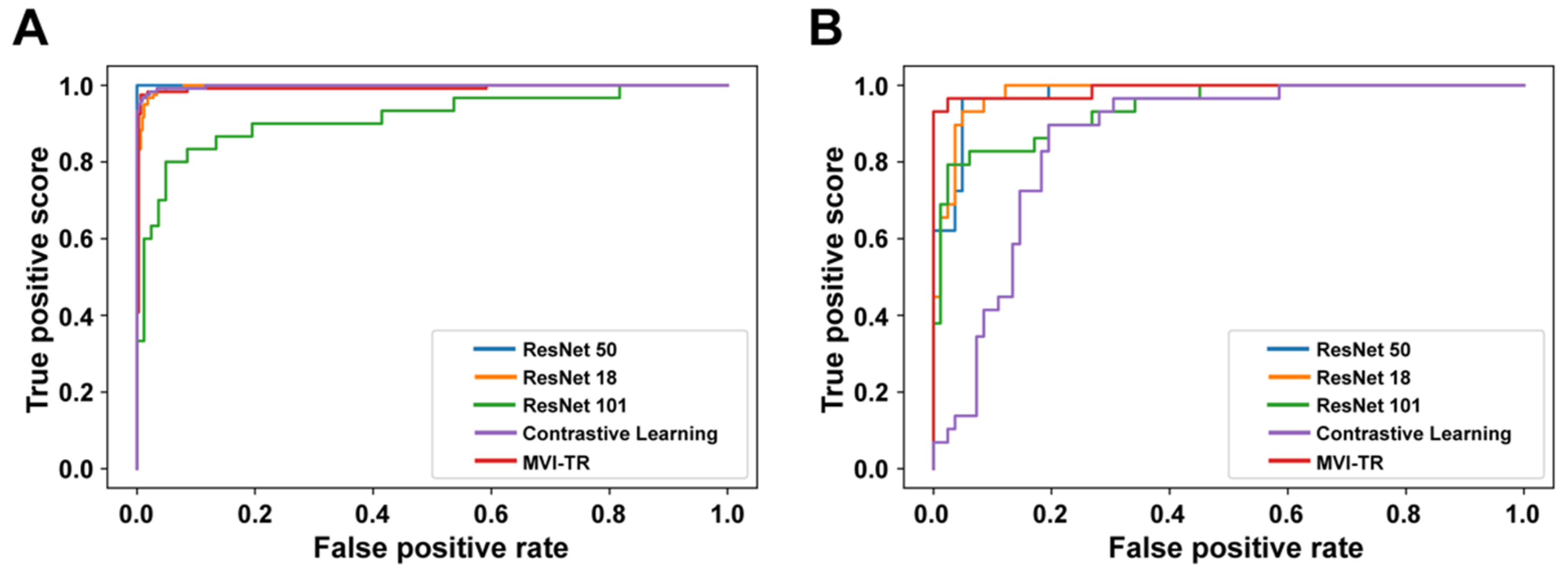

3.2. Performance of MVI Prediction Models

3.3. The Advantages of MVI-TR

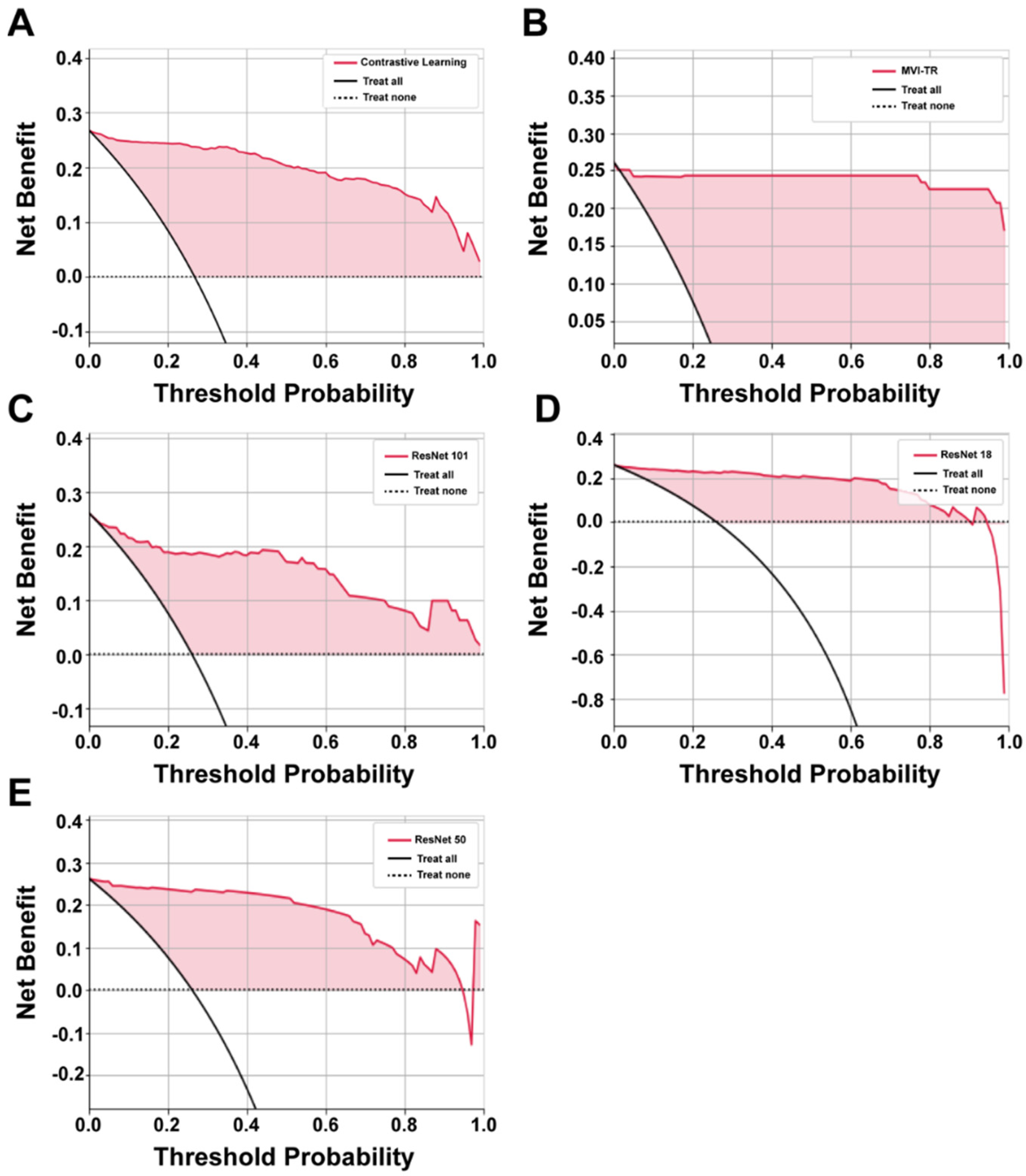

3.3.1. Decision Curve Analysis (DCA)

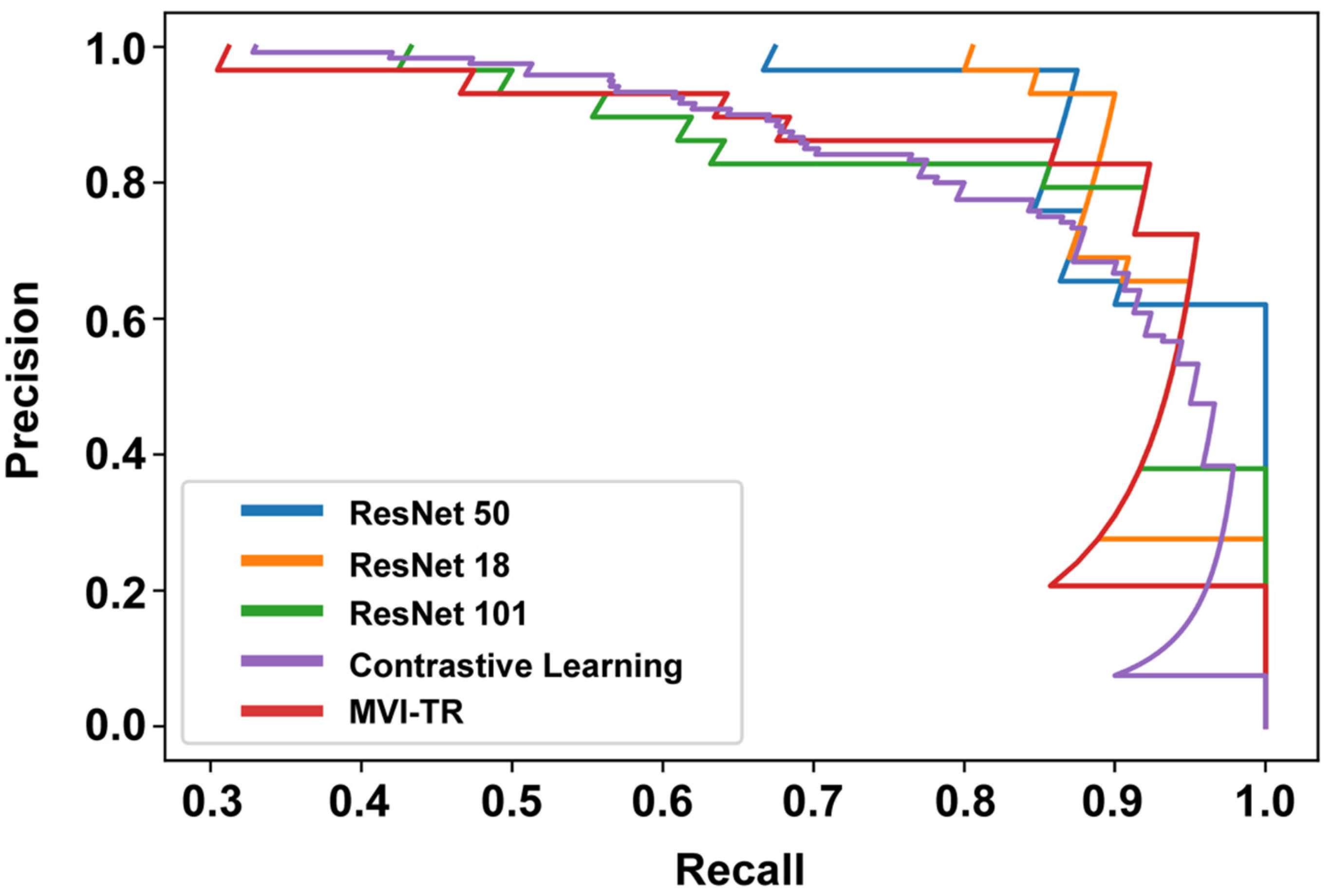

3.3.2. Precision-Recall (PR) Curve

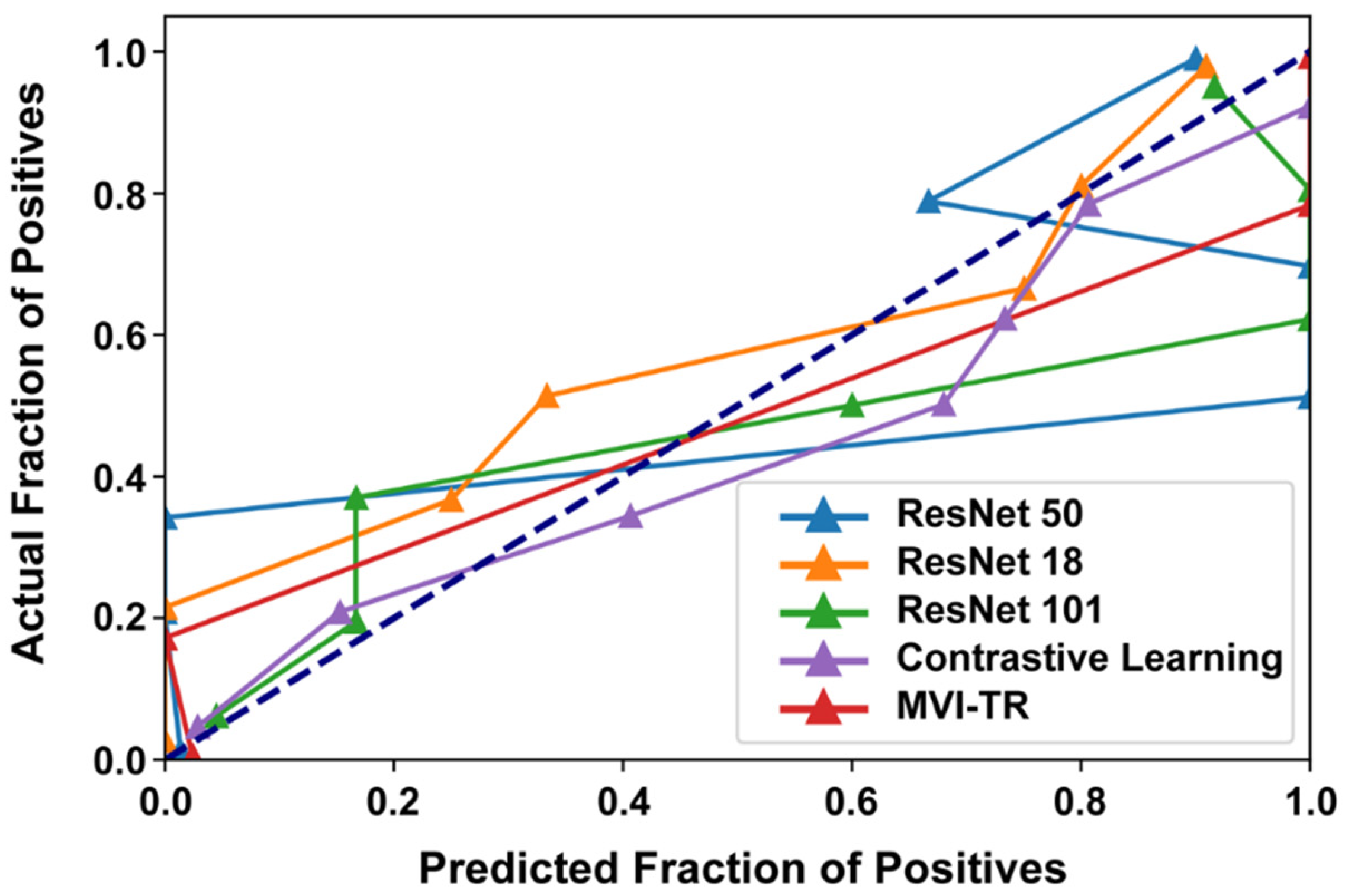

3.3.3. Calibration Curve

3.4. Model Explainability

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AFP | Alpha-fetoprotein |
| AP | Arterial phase |
| AUC | Area under the receiver operating characteristic curve |
| CA19-9 | Carbohydrate antigen 19-9 |
| CAD | Computer-aided diagnosis |
| CEA | Carcinoembryonic antigen |
| CECT | Contrast-enhanced computed tomography |
| CNN | Convolutional neural network |
| DCNN | Deep convolutional neural network |
| DL | Deep learning |
| FC | Fully connected |
| FNN | Feed-forward neural network |
| Grad-CAM | Gradient-weighted class activation mapping |
| HBsAg | Hepatitis B surface antigen |
| HCC | Hepatocellular carcinoma |
| LT | Liver transplantation |
| MLP | Multi-layer perceptron |
| MSA | Multi-head self-attention |
| MVI | Microvascular invasion |
| MVI-TR | Transformer-based end-to-end DL model |
| NC | Non-contrast |
| NLP | Natural language processing |
| PIVKA II | Protein induced by vitamin K absence/antagonist-II |
| ResNets | Residual networks |
| RFA | Radiofrequency ablation |
| ROI | Region of interest |
| TACE | Transcatheter arterial chemoembolization |
| VP | Venous phase |
| WL | Window level |
| WW | Window width |

References

1. Llovet, J.M.; Montal, R.; Sia, D.; Finn, R.S. Molecular therapies and precision medicine for hepatocellular carcinoma. Nat. Rev. Clin. Oncol. 2018, 15, 599–616.
2. Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J. Clin. 2021, 71, 209–249.
3. Nault, J.C.; Sutter, O.; Nahon, P.; Ganne-Carrié, N.; Séror, O. Percutaneous treatment of hepatocellular carcinoma: State of the art and innovations. J. Hepatol. 2018, 68, 783–797.
4. Sapisochin, G.; Bruix, J. Liver transplantation for hepatocellular carcinoma: Outcomes and novel surgical approaches. Nat. Rev. Gastroenterol. Hepatol. 2017, 14, 203–217.
5. Lim, K.C.; Chow, P.K.; Allen, J.C.; Siddiqui, F.J.; Chan, E.S.; Tan, S.B. Systematic review of outcomes of liver resection for early hepatocellular carcinoma within the Milan criteria. Br. J. Surg. 2012, 99, 1622–1629.
6. Hong, S.B.; Choi, S.H.; Kim, S.Y.; Shim, J.H.; Lee, S.S.; Byun, J.H.; Park, S.H.; Kim, K.W.; Kim, S.; Lee, N.K. MRI Features for Predicting Microvascular Invasion of Hepatocellular Carcinoma: A Systematic Review and Meta-Analysis. Liver Cancer 2021, 10, 94–106.
7. Lee, S.; Kang, T.W.; Song, K.D.; Lee, M.W.; Rhim, H.; Lim, H.K.; Kim, S.Y.; Sinn, D.H.; Kim, J.M.; Kim, K.; et al. Effect of Microvascular Invasion Risk on Early Recurrence of Hepatocellular Carcinoma After Surgery and Radiofrequency Ablation. Ann. Surg. 2021, 273, 564–571.
8. Tang, Y.; Xu, L.; Ren, Y.; Li, Y.; Yuan, F.; Cao, M.; Zhang, Y.; Deng, M.; Yao, Z. Identification and Validation of a Prognostic Model Based on Three MVI-Related Genes in Hepatocellular Carcinoma. Int. J. Biol. Sci. 2022, 18, 261–275.
9. Wang, H.; Feng, L.H.; Qian, Y.W.; Cao, Z.Y.; Wu, M.C.; Cong, W.M. Does microvascular invasion in Barcelona Clinic Liver Cancer stage A multinodular hepatocellular carcinoma indicate early-stage behavior? Ann. Transl. Med. 2019, 7, 428.
10. Degroote, H.; Piñero, F.; Costentin, C.; Notarpaolo, A.; Boin, I.F.; Boudjema, K.; Baccaro, C.; Chagas, A.L.; Bachellier, P.; Ettorre, G.M.; et al. International study on the outcome of locoregional therapy for liver transplant in hepatocellular carcinoma beyond Milan criteria. JHEP Rep. 2021, 3, 100331.
11. Lei, Z.; Li, J.; Wu, D.; Xia, Y.; Wang, Q.; Si, A.; Wang, K.; Wan, X.; Lau, W.Y.; Wu, M.; et al. Nomogram for Preoperative Estimation of Microvascular Invasion Risk in Hepatitis B Virus-Related Hepatocellular Carcinoma Within the Milan Criteria. JAMA Surg. 2016, 151, 356–363.
12. Kim, H.L.; An, J.; Park, J.A.; Park, S.H.; Lim, Y.S.; Lee, E.K. Magnetic Resonance Imaging Is Cost-Effective for Hepatocellular Carcinoma Surveillance in High-Risk Patients With Cirrhosis. Hepatology 2019, 69, 1599–1613.
13. Xu, X.; Zhang, H.L.; Liu, Q.P.; Sun, S.W.; Zhang, J.; Zhu, F.P.; Yang, G.; Yan, X.; Zhang, Y.D.; Liu, X.S. Radiomic analysis of contrast-enhanced CT predicts microvascular invasion and outcome in hepatocellular carcinoma. J. Hepatol. 2019, 70, 1133–1144.
14. Bi, W.L.; Hosny, A.; Schabath, M.B.; Giger, M.L.; Birkbak, N.J.; Mehrtash, A.; Allison, T.; Arnaout, O.; Abbosh, C.; Dunn, I.F.; et al. Artificial intelligence in cancer imaging: Clinical challenges and applications. CA Cancer J. Clin. 2019, 69, 127–157.
15. Peng, H.; Dong, D.; Fang, M.J.; Li, L.; Tang, L.L.; Chen, L.; Li, W.F.; Mao, Y.P.; Fan, W.; Liu, L.Z.; et al. Prognostic Value of Deep Learning PET/CT-Based Radiomics: Potential Role for Future Individual Induction Chemotherapy in Advanced Nasopharyngeal Carcinoma. Clin. Cancer Res. 2019, 25, 4271–4279.
16. Hosny, A.; Parmar, C.; Coroller, T.P.; Grossmann, P.; Zeleznik, R.; Kumar, A.; Bussink, J.; Gillies, R.J.; Mak, R.H.; Aerts, H. Deep learning for lung cancer prognostication: A retrospective multi-cohort radiomics study. PLoS Med. 2018, 15, e1002711.
17. Chen, W.; Hou, X.; Hu, Y.; Huang, G.; Ye, X.; Nie, S. A deep learning- and CT image-based prognostic model for the prediction of survival in non-small cell lung cancer. Med. Phys. 2021, 48, 7946–7958.
18. Zhang, W.; Fang, M.; Dong, D.; Wang, X.; Ke, X.; Zhang, L.; Hu, C.; Guo, L.; Guan, X.; Zhou, J.; et al. Development and validation of a CT-based radiomic nomogram for preoperative prediction of early recurrence in advanced gastric cancer. Radiother. Oncol. 2020, 145, 13–20.
19. Kim, H.; Goo, J.M.; Lee, K.H.; Kim, Y.T.; Park, C.M. Preoperative CT-based Deep Learning Model for Predicting Disease-Free Survival in Patients with Lung Adenocarcinomas. Radiology 2020, 296, 216–224.
20. Wang, K.; Xiang, Y.; Yan, J.; Zhu, Y.; Chen, H.; Yu, H.; Cheng, Y.; Li, X.; Dong, W.; Ji, Y.; et al. A deep learning model with incorporation of microvascular invasion area as a factor in predicting prognosis of hepatocellular carcinoma after R0 hepatectomy. Hepatol. Int. 2022, 16, 1188–1198.
21. Sun, L.; Sun, Z.; Wang, C.; Cheng, S.; Wang, K.; Huang, M. PCformer: An MVI recognition method via classification of the MVI boundary according to histopathological images of liver cancer. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 2022, 39, 1673–1681.
22. Wang, F.; Chen, Q.; Chen, Y.; Zhu, Y.; Zhang, Y.; Cao, D.; Zhou, W.; Liang, X.; Yang, Y.; Lin, L.; et al. A novel multimodal deep learning model for preoperative prediction of microvascular invasion and outcome in hepatocellular carcinoma. Eur. J. Surg. Oncol. 2023, 49, 156–164.
23. Deng, Y.; Jia, X.; Yu, G.; Hou, J.; Xu, H.; Ren, A.; Wang, Z.; Yang, D.; Yang, Z. Can a proposed double branch multimodality-contribution-aware TripNet improve the prediction performance of the microvascular invasion of hepatocellular carcinoma based on small samples? Front. Oncol. 2022, 12, 1035775.
24. Wang, L.; Wu, M.; Li, R.; Xu, X.; Zhu, C.; Feng, X. MVI-Mind: A Novel Deep-Learning Strategy Using Computed Tomography (CT)-Based Radiomics for End-to-End High Efficiency Prediction of Microvascular Invasion in Hepatocellular Carcinoma. Cancers 2022, 14, 2956.
25. Yang, Y.; Zhou, Y.; Zhou, C.; Ma, X. Deep learning radiomics based on contrast enhanced computed tomography predicts microvascular invasion and survival outcome in early stage hepatocellular carcinoma. Eur. J. Surg. Oncol. 2022, 48, 1068–1077.
26. Zhou, J.; Sun, H.C.; Wang, Z.; Cong, W.M.; Wang, J.H.; Zeng, M.S.; Yang, J.M.; Bie, P.; Liu, L.X.; Wen, T.F.; et al. Guidelines for Diagnosis and Treatment of Primary Liver Cancer in China (2017 Edition). Liver Cancer 2018, 7, 235–260.
27. Gao, R.; Zhao, S.; Aishanjiang, K.; Cai, H.; Wei, T.; Zhang, Y.; Liu, Z.; Zhou, J.; Han, B.; Wang, J.; et al. Deep learning for differential diagnosis of malignant hepatic tumors based on multi-phase contrast-enhanced CT and clinical data. J. Hematol. Oncol. 2021, 14, 154.
28. Yushkevich, P.A.; Piven, J.; Hazlett, H.C.; Smith, R.G.; Ho, S.; Gee, J.C.; Gerig, G. User-guided 3D active contour segmentation of anatomical structures: Significantly improved efficiency and reliability. Neuroimage 2006, 31, 1116–1128.
29. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A Simple Framework for Contrastive Learning of Visual Representations. In Proceedings of the International Conference on Machine Learning (ICML), Electronic Network, 13–18 July 2020.
30. Dangovski, R.; Jing, L.; Loh, C.; Han, S.; Srivastava, A.; Cheung, B.; Agrawal, P.; Soljačić, M. Equivariant Contrastive Learning. arXiv 2021, arXiv:2111.00899.
31. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.F. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the IEEE-Computer-Society Conference on Computer Vision and Pattern Recognition Workshops, Miami Beach, FL, USA, 20–25 June 2009; pp. 248–255.
32. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.
33. Larsson, G.; Maire, M.; Shakhnarovich, G. FractalNet: Ultra-Deep Neural Networks without Residuals. arXiv 2016, arXiv:1605.07648.
34. Ma, X.; Wei, J.; Gu, D.; Zhu, Y.; Feng, B.; Liang, M.; Wang, S.; Zhao, X.; Tian, J. Preoperative radiomics nomogram for microvascular invasion prediction in hepatocellular carcinoma using contrast-enhanced CT. Eur. Radiol. 2019, 29, 3595–3605.
35. Vickers, A.J.; Elkin, E.B. Decision curve analysis: A novel method for evaluating prediction models. Med. Decis. Mak. 2006, 26, 565–574.
36. Zhang, Z.; Rousson, V.; Lee, W.C.; Ferdynus, C.; Chen, M.; Qian, X.; Guo, Y. Decision curve analysis: A technical note. Ann. Transl. Med. 2018, 6, 308.
37. Lim, C.; Bhangui, P.; Salloum, C.; Gómez-Gavara, C.; Lahat, E.; Luciani, A.; Compagnon, P.; Calderaro, J.; Feray, C.; Azoulay, D. Impact of time to surgery in the outcome of patients with liver resection for BCLC 0-A stage hepatocellular carcinoma. J. Hepatol. 2017.
38. Gunasekaran, G.; Bekki, Y.; Lourdusamy, V.; Schwartz, M. Surgical Treatments of Hepatobiliary Cancers. Hepatology 2021, 73 (Suppl. S1), 128–136.
39. Wei, X.; Su, R.; Yang, M.; Pan, B.; Lu, J.; Lin, H.; Shu, W.; Wang, R.; Xu, X. Quantitative proteomic profiling of hepatocellular carcinoma at different serum alpha-fetoprotein level. Transl. Oncol. 2022, 20, 101422.
40. Peng, Z.; Chen, S.; Xiao, H.; Wang, Y.; Li, J.; Mei, J.; Chen, Z.; Zhou, Q.; Feng, S.; Chen, M.; et al. Microvascular Invasion as a Predictor of Response to Treatment with Sorafenib and Transarterial Chemoembolization for Recurrent Intermediate-Stage Hepatocellular Carcinoma. Radiology 2019, 292, 237–247.
41. Wang, L.; Ke, Q.; Lin, K.; Chen, J.; Wang, R.; Xiao, C.; Liu, X.; Liu, J. Not All Hepatocellular Carcinoma Patients with Microvascular Invasion After R0 Resection Could Be Benefited from Prophylactic Transarterial Chemoembolization: A Propensity Score Matching Study. Cancer Manag. Res. 2020, 12, 3815–3825.
42. Liu, S.C.; Lai, J.; Huang, J.Y.; Cho, C.F.; Lee, P.H.; Lu, M.H.; Yeh, C.C.; Yu, J.; Lin, W.C. Predicting microvascular invasion in hepatocellular carcinoma: A deep learning model validated across hospitals. Cancer Imaging 2021, 21, 56.
43. Xiao, H.; Guo, Y.; Zhou, Q.; Chen, Q.; Du, Q.; Chen, S.; Fu, S.; Lin, J.; Li, D.; Song, X.; et al. Prediction of microvascular invasion in hepatocellular carcinoma with expert-inspiration and skeleton sharing deep learning. Liver Int. 2022, 42, 1423–1431.
44. Zhang, X.; Ruan, S.; Xiao, W.; Shao, J.; Tian, W.; Liu, W.; Zhang, Z.; Wan, D.; Huang, J.; Huang, Q.; et al. Contrast-enhanced CT radiomics for preoperative evaluation of microvascular invasion in hepatocellular carcinoma: A two-center study. Clin. Transl. Med. 2020, 10, e111.
45. Jiang, Y.Q.; Cao, S.E.; Cao, S.; Chen, J.N.; Wang, G.Y.; Shi, W.Q.; Deng, Y.N.; Cheng, N.; Ma, K.; Zeng, K.N.; et al. Preoperative identification of microvascular invasion in hepatocellular carcinoma by XGBoost and deep learning. J. Cancer Res. Clin. Oncol. 2021, 147, 821–833.
46. Li, X.; Qi, Z.; Du, H.; Geng, Z.; Li, Z.; Qin, S.; Zhang, X.; Liang, J.; Zhang, X.; Liang, W.; et al. Deep convolutional neural network for preoperative prediction of microvascular invasion and clinical outcomes in patients with HCCs. Eur. Radiol. 2022, 32, 771–782.
47. Zhou, Y.; Sun, S.W.; Liu, Q.P.; Xu, X.; Zhang, Y.; Zhang, Y.D. TED: Two-stage expert-guided interpretable diagnosis framework for microvascular invasion in hepatocellular carcinoma. Med. Image Anal. 2022, 82, 102575.

| Clinical Characteristics | All Subjects (n = 559) | Training Cohort (n = 448) | Validation Cohort (n = 111) | p Value |
|---|---|---|---|---|
| MVI, no. (%) | 559 (100) | 448 (100) | 111 (100) | 0.888 |
| Absence | 410 (73.3) | 328 (73.2) | 82 (73.9) | |
| Presence | 149 (26.7) | 120 (26.8) | 29 (26.1) | |
| Age (years), median (IQR) | 58 (52–66) | 58 (51.3–66) | 59 (52–66) | 0.835 |
| Gender, no. (%) | 559 (100) | 448 (100) | 111 (100) | 0.827 |
| Male | 444 (79.4) | 355 (79.2) | 89 (80.2) | |
| Female | 115 (20.6) | 93 (20.8) | 22 (19.8) | |
| MDT (cm), median (IQR) | 2.6 (2.0–3.5) | 2.6 (2.0–3.5) | 2.7 (2.0–3.5) | 0.631 |
| HBsAg status, no. (%) | 555 (99.3) | 445 (99.3) | 110 (99.1) | 0.151 |
| Negative | 100 (17.9) | 75 (16.7) | 25 (22.5) | |
| Positive | 455 (81.4) | 370 (82.6) | 85 (76.6) | |
| HBV-DNA, no. (%) | 517 (92.5) | 413 (92.2) | 104 (93.7) | 0.498 |
| Detectable (≥10³) | 154 (27.6) | 126 (28.1) | 28 (25.2) | |
| Undetectable (<10³) | 363 (64.9) | 287 (64.1) | 76 (68.5) | |
| AFP, no. (%) | 549 (98.2) | 439 (98.0) | 110 (99.1) | 0.511 |
| Median (IQR), ng/mL | 10.6 (3.6–131.0) | 11.4 (3.6–131.1) | 8.5 (3.6–125.1) | |
| CEA, no. (%) | 546 (97.7) | 436 (97.3) | 110 (99.1) | 0.499 |
| Median (IQR), ng/mL | 2.7 (1.9–4.0) | 2.7 (1.8–4.0) | 2.8 (2.0–4.3) | |
| CA19-9, no. (%) | 492 (88.0) | 391 (87.3) | 101 (91) | 0.318 |
| Median (IQR), KU/L | 7.65 (4.7–14.1) | 7.4 (4.7–13.7) | 9.9 (5.1–15.2) | |
| PIVKA II, no. (%) | 334 (59.7) | 269 (60) | 65 (58.6) | 0.967 |
| Median (IQR), KU/L | 82 (35–400) | 83 (35–391) | 71 (38.5–448) | |
| Therapeutic method, no. (%) | 559 (100) | 448 (100) | 111 (100) | 1.000 |
| Resection | 540 (96.6) | 433 (96.7) | 107 (96.4) | |
| Transplantation | 19 (3.4) | 15 (3.3) | 4 (3.6) | |
| Child score, no. (%) | 559 (100) | 448 (100) | 111 (100) | 0.625 |
| A | 545 (97.5) | 438 (97.8) | 107 (96.4) | |
| B | 14 (2.5) | 10 (2.2) | 4 (3.6) | |

Training dataset: n = 448; validation dataset: n = 111.

| Model | Training Accuracy | Training AUC | Training Precision | Training Recall | Training F1-Score | Validation Accuracy | Validation AUC | Validation Precision | Validation Recall | Validation F1-Score |
|---|---|---|---|---|---|---|---|---|---|---|
| Contrastive Learning | 0.998 | 1.000 | 0.996 | 0.989 | 0.992 | 0.883 | 0.872 | 0.889 | 0.800 | 0.842 |
| ResNet18 | 0.975 | 0.997 | 0.936 | 0.975 | 0.955 | 0.946 | 0.980 | 0.848 | 0.966 | 0.903 |
| ResNet50 | 0.998 | 0.987 | 0.992 | 1.000 | 0.996 | 0.955 | 0.978 | 0.875 | 0.966 | 0.918 |
| ResNet101 | 0.917 | 0.939 | 0.906 | 0.774 | 0.833 | 0.928 | 0.947 | 0.889 | 0.828 | 0.857 |
| MVI-TR | 0.991 | 0.980 | 0.993 | 0.988 | 0.991 | 0.972 | 0.935 | 0.973 | 0.931 | 0.952 |
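As a quick consistency check on the table above, the F1-score can be recomputed from the reported precision and recall. The sketch below assumes the standard definition of F1 as the harmonic mean of precision and recall; the helper name `f1_score` is illustrative and the values are copied from the validation columns.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Validation-cohort (precision, recall, reported F1) copied from the table above.
validation = {
    "Contrastive Learning": (0.889, 0.800, 0.842),
    "ResNet18": (0.848, 0.966, 0.903),
    "ResNet50": (0.875, 0.966, 0.918),
    "ResNet101": (0.889, 0.828, 0.857),
    "MVI-TR": (0.973, 0.931, 0.952),
}

for model, (precision, recall, reported) in validation.items():
    computed = f1_score(precision, recall)
    print(f"{model}: computed F1 = {computed:.3f}, reported F1 = {reported:.3f}")
```

For all five models the recomputed validation F1 agrees with the reported value to within 0.001, which is the precision of the rounded inputs.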

| Model | Number of Parameters | FLOPs |
|---|---|---|
| Contrastive learning | 24.68 M | 4132.87 M |
| ResNet18 | 11.17 M | 27,335.88 M |
| ResNet50 | 35.36 M | 5366.323 M |
| ResNet101 | 44.59 M | 123,992.21 M |
| MVI-TR | 85.80 M | 16,863.63 M |

Validation dataset metrics (maximum reported value only):

| Authors | Year | Models | AUC | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| Xiaohong Ma et al. [34] | 2019 | Multivariable Logistic Regression | 0.801 | 0.809 | -- | -- | -- |
| Xiuming Zhang et al. [44] | 2020 | Multivariable Logistic Regression | 0.780 | -- | -- | -- | -- |
| Yiquan Jiang et al. [45] | 2021 | 3D-CNN model | 0.906 | -- | -- | -- | 0.8 |
| Shucheng Liu et al. [42] | 2021 | ResNet, VGG, ResNeXt, and DenseNet models | 0.845 | 0.770 | -- | -- | -- |
| Xinming Li et al. [46] | 2022 | DenseCNN | 0.837 | -- | -- | -- | -- |
| Liyang Wang et al. [24] | 2022 | Lightweight CNN model | 0.922 | 0.868 | 0.875 | 0.827 | 0.8488 |
| Yuhan Yang et al. [25] | 2022 | Six pretrained CNN models | 0.909 | 0.964 | -- | -- | -- |
| Han Xiao et al. [43] | 2022 | 3D-ResNet model | 0.850 | 0.850 | -- | -- | -- |
| Yuhang Zhou et al. [47] | 2022 | Two-stage Expert-guided Diagnosis | 0.766 | 0.572 | 0.676 | 0.517 | 0.807 |
| Our research | 2023 | MVI-TR | 0.935 | 0.973 | 0.973 | 0.931 | 0.952 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cao, L.; Wang, Q.; Hong, J.; Han, Y.; Zhang, W.; Zhong, X.; Che, Y.; Ma, Y.; Du, K.; Wu, D.; et al. MVI-TR: A Transformer-Based Deep Learning Model with Contrast-Enhanced CT for Preoperative Prediction of Microvascular Invasion in Hepatocellular Carcinoma. Cancers 2023, 15, 1538. https://doi.org/10.3390/cancers15051538
