Simple Summary

Skin diseases can be serious, and early detection is key to effective treatment. Unfortunately, the quality of images used to diagnose these diseases often suffers from interference by hair, which makes accurate diagnosis challenging. This research introduces DM–AHR, a self-supervised conditional diffusion model designed specifically to generate clear, hairless images for better skin disease diagnosis. Our work not only presents a new model that identifies and removes hair from dermoscopic images but also introduces a specialized dataset, DERMAHAIR, to support further research and improve diagnostic processes. The improvements in image quality provided by DM–AHR significantly increase the accuracy of skin disease diagnosis, making it a promising tool for medical imaging.

Abstract

Accurate skin diagnosis through end-user applications is important for the early detection and treatment of severe skin diseases. However, the low quality of dermoscopic images hampers this goal, especially when hair is present in the images. This paper introduces DM–AHR, a novel self-supervised conditional diffusion model designed specifically for the automatic generation of hairless dermoscopic images to improve the quality of skin diagnosis applications. The current research makes three main contributions to the field of dermatologic imaging. First, we develop a customized diffusion model that differentiates between hair and skin features. Second, we propose a novel self-supervised learning strategy tailored specifically to hair removal. Third, we introduce a new dataset, named DERMAHAIR (DERMatologic Automatic HAIR Removal Dataset), designed to advance and benchmark research in this specialized domain. These contributions significantly enhance the clarity of dermoscopic images, improving the accuracy of skin diagnosis procedures. We elaborate on the architecture of DM–AHR and demonstrate its effectiveness in removing hair while preserving critical details of skin lesions. Our results show improved accuracy of skin lesion analysis compared with existing techniques. Given its robust performance, DM–AHR holds considerable promise for broader application in medical image enhancement.

1. Introduction

Global skin cancer rates are escalating, highlighting the urgent need for accurate and efficient diagnostic methods. The World Health Organization (WHO) reports that skin cancers comprise a significant proportion of all cancer cases worldwide, with melanoma recognized as the most lethal form. The incidence of melanoma has been steadily increasing over the past few decades, marking it as a critical public health concern (WHO, 2021) [1]. Early detection is paramount in the fight against skin cancer, as it significantly improves prognosis. As part of this fight, a growing number of end-user applications have been designed to diagnose skin conditions. However, these applications are still hampered by factors such as poor image quality that decrease detection accuracy.

The integration of artificial intelligence (AI) [2,3,4,5,6] in medical imaging, particularly in dermatology, has greatly benefited the early detection and diagnosis of skin cancers, including melanoma [7,8,9]. Dermoscopy, a noninvasive skin imaging technique, is crucial in this process. However, the presence of hair in dermoscopic images often poses significant challenges [10], as it can obscure critical details of skin lesions and lead to diagnostic errors. Physically removing the hair is one solution, but it can be uncomfortable for patients and is not always feasible. To address this issue, we introduce DM–AHR, a novel conditional diffusion model specifically designed to generate hairless dermoscopic images that are more suitable for diagnosis and detection. Diffusion models have emerged as powerful tools in machine learning, particularly for image generation. They produce high-quality, detailed images by starting from random noise and iteratively denoising it through a learned reverse stochastic process, which allows them to generate images with remarkable fidelity and diversity. Our research focuses on improving the quality of dermoscopic images, which is vital for early skin cancer detection and diagnosis, and we believe DM–AHR can contribute significantly to this field.

The flexibility and robustness of diffusion models make them especially effective for tasks requiring nuanced understanding and manipulation of visual data. Our study repurposes diffusion models to enhance the clarity of medical skin images. The DM–AHR model is designed to discriminate between hair and underlying skin features, gradually generating a hair-free image from the original hairy image. This approach not only augments the visibility of critical dermatological details but also streamlines the pre-diagnostic process by eliminating the need for physical hair removal. The main contributions of this paper are as follows:

- We introduce DM–AHR, a customized self-supervised diffusion model for automatic hair removal in dermoscopic images. This model enhances the clarity and diagnostic quality of skin images by effectively differentiating hair from skin features.

- We demonstrate significant improvement in skin lesion detection accuracy after applying DM–AHR as a preprocessing step before the classification model.

- We introduce the novel DERMAHAIR dataset, advancing research in the task of automatic hair removal from skin images.

- We introduce a new self-supervised technique specifically tailored for the task of automatic hair removal from skin images.

- We demonstrate DM–AHR’s potential in broader skin diagnosis applications and automated dermatological systems.

This study details the architecture and optimization of DM–AHR. The structure of the paper is as follows: Section 2 discusses related works in the field, providing background and context for the current research. Section 3 describes the proposed methodology, detailing the design, implementation, and theoretical foundation of the DM–AHR model. Section 4 presents the experimental results, offering a thorough analysis of the model’s performance, comparisons with existing methods, and insights gained from the experiments. Finally, Section 5 concludes the paper, summarizing the key findings, discussing implications, and suggesting directions for future research.

2. Related Works

The domain of medical image analysis has seen substantial advancements due to the integration of AI and machine learning techniques. Particularly, in dermatology, the automatic analysis of dermoscopic images plays a crucial role in the early detection of skin diseases, including melanoma. This section reviews the existing literature in three primary areas: diffusion models in image processing, automatic hair removal techniques in dermoscopic images, and their implications for skin disease diagnosis.

First, diffusion models have gained prominence in image processing due to their exceptional ability to generate and reconstruct high-quality images. Recent studies, such as [11,12], have demonstrated the potential of these models in tasks like image denoising, super-resolution, and inpainting. Their application in medical image analysis, however, remains relatively unexplored. The adaptability of diffusion models to different contexts, as shown by [13], provides a foundation for applying them to enhance the quality of medical images, including dermoscopic images. Another notable development in dermatoscopic image enhancement is the Derm-T2IM approach of Farooq et al. [14]. Their work uses stable diffusion models to generate large-scale, high-quality synthetic skin lesion datasets. When integrated into training pipelines, this rich variety of realistic dermatoscopic images improves the robustness and accuracy of skin disease classification models.

Hair removal in dermoscopic images is a critical preprocessing step in skin lesion analysis. Traditional methods, as reviewed by [15,16], have relied on techniques such as morphological operations and edge detection. However, these methods often struggle to maintain lesion integrity. The advent of deep learning has introduced new possibilities [17,18,19]. More recent studies have explored convolutional neural networks (CNNs) [20,21,22] and Generative Adversarial Networks (GANs) [23,24,25] for this purpose, achieving better accuracy while preserving important dermatological features.

The accuracy of skin disease diagnosis, particularly when diagnosing melanoma, is heavily dependent on the quality of dermoscopic images. As highlighted by [26], combining AI with high-quality images can outperform traditional diagnostic methods. The removal of occluding elements like hair is therefore not just a technical challenge but a clinical necessity, as emphasized by [27,28]. Integrating recent advanced image processing techniques, such as diffusion models, into this domain holds significant promise for improving diagnostic accuracy and patient outcomes. Moreover, leveraging self-supervised techniques and constructing new datasets could advance the state of the art in this domain. This study addresses these gaps by introducing the DM–AHR model, described in the next section.

3. Proposed Method

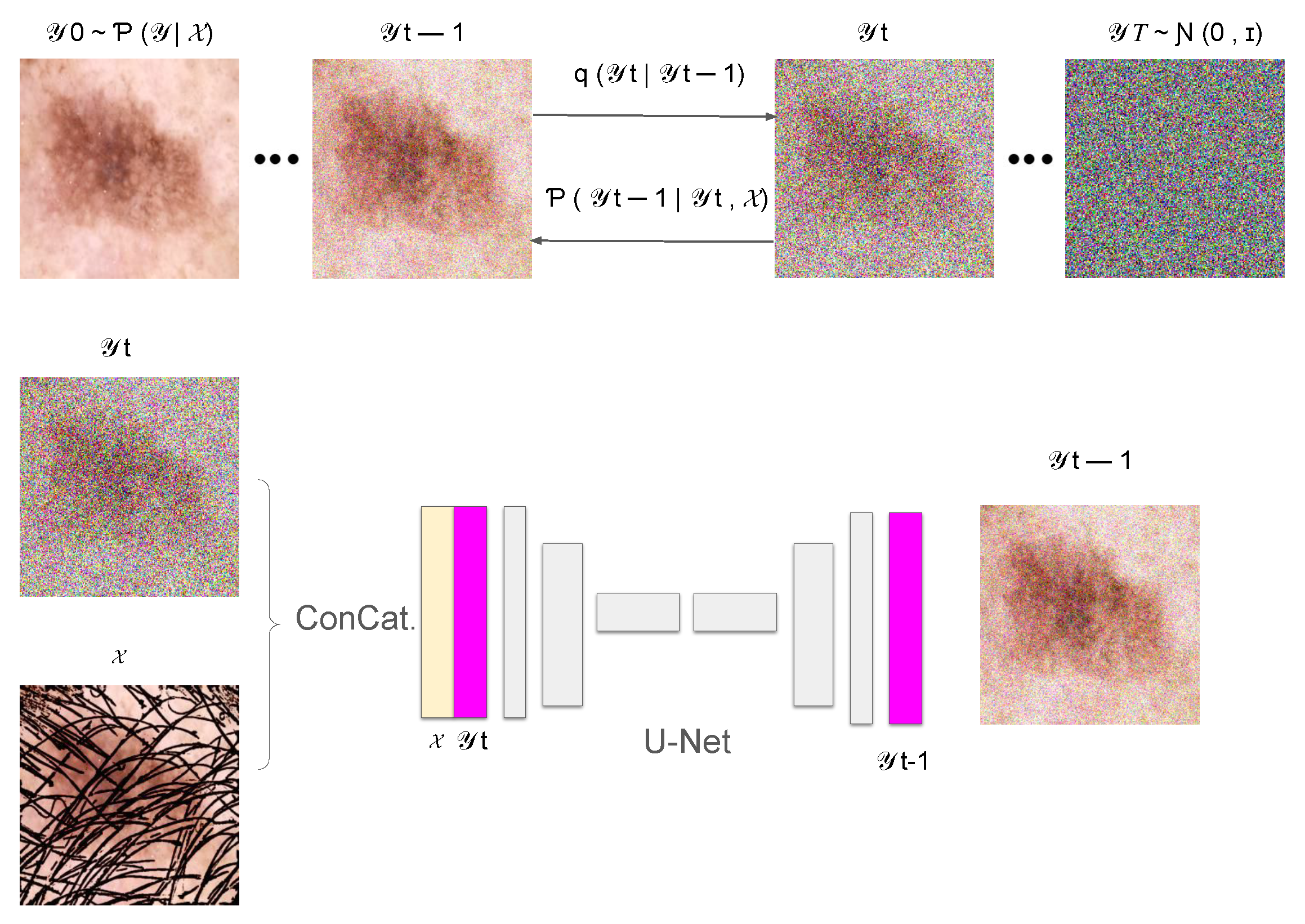

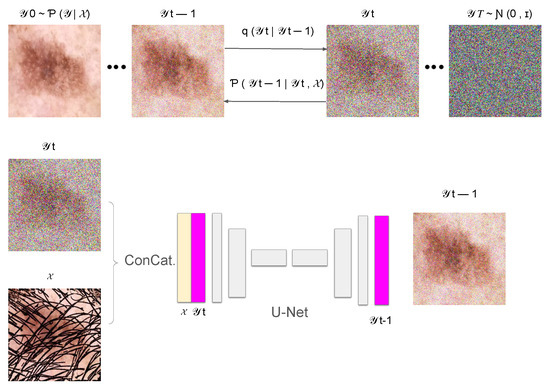

In this section, we introduce DM–AHR (Diffusion Model for Automatic Hair Removal), based on modifying the standard architecture of Denoising Diffusion Probabilistic Models (DDPM) [11] for the purpose of automatic hair removal in dermoscopic images. The global architecture of DM–AHR is illustrated in Figure 1.

Figure 1.

Illustration of the DM–AHR Diffusion process.

3.1. Dataset and Problem Formulation

We start from a dataset $D = \{(x_i, y_i)\}_i$, where each $x_i$ is a dermoscopic image exhibiting hair artifacts and $y_i$ is the corresponding hair-free image. This dataset is crucial for training the DM–AHR model to learn the complex patterns associated with hair in such medical images. Formally, we define our task as learning a mapping function $F$ such that $F(x_i) \approx y_i$, where $F$ represents the DM–AHR model’s transformation. Because real pairs of hairy and hair-free images are difficult to obtain, hair is added artificially to real hair-free skin images, as explained in the Experimental Section.

3.2. Conditional Denoising Diffusion Process

The DM–AHR model incorporates a sophisticated conditional denoising diffusion process inspired by prominent diffusion model advancements [11,13,29]. The architecture central to this model is a U-Net [30], which is pivotal for denoising and feature differentiation, specifically between hair and skin features in images. This U-Net employs a multi-scale strategy with channels varying in dimensionality corresponding to the resolution of input images, ensuring a tailored approach for different scales, as described in Figure 1.

Although DM–AHR builds on the U-Net structure, it is modified to include a conditioning branch at every denoising step. The conditioning input is the skin lesion image with hair, which guides the model to stay aligned with the content of that image. This conditioning mechanism significantly enhances the model’s ability to adhere to the relevant features in the images, as visually represented in Figure 1.

The U-Net comprises several layers with depth multipliers at each resolution stage to increase its capacity and adaptability to the input’s complexity. It uses a series of ResNet blocks [31] at each stage; these blocks help it learn residual mappings between noisy and denoised states, improving the model’s efficiency and accuracy. The activation functions within these blocks are primarily ReLU, which introduces non-linearity into the denoising process.

For the diffusion process, the model starts with a forward diffusion that generates noisy versions of the original image, which are subsequently refined by the conditional U-Net. This U-Net takes both the noisy image and a low-resolution, interpolated, and up-sampled version of the original image as inputs. It then progressively enhances the image resolution through its depth stages, adapting its parameters to the complexity of different image features. The loss function is designed to minimize the difference between the clean and generated images, ensuring the preservation and accurate reconstruction of critical features such as lesion and skin textures.

3.2.1. Forward Diffusion Process

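The forward diffusion process is modeled as a Markov chain that incrementally adds Gaussian noise to an image. Formally, the process is defined as follows:

$$q(y_t \mid y_{t-1}) = \mathcal{N}\left(y_t;\ \sqrt{\alpha_t}\, y_{t-1},\ (1 - \alpha_t) I\right), \qquad q(y_{1:T} \mid y_0) = \prod_{t=1}^{T} q(y_t \mid y_{t-1}),$$

where $y_0$ is the original hair-free image, $y_1, \dots, y_T$ represent the sequence of images with incrementally added noise, and $\alpha_1, \dots, \alpha_T \in (0, 1)$ define the noise level at each step. Writing $\gamma_t = \prod_{i=1}^{t} \alpha_i$, any intermediate state can be sampled directly as $q(y_t \mid y_0) = \mathcal{N}\left(y_t;\ \sqrt{\gamma_t}\, y_0,\ (1 - \gamma_t) I\right)$. This part is illustrated in the upper part of Figure 1.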
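The forward process can be illustrated with a short NumPy sketch. It assumes the SR3-style parameterization, with per-step values $\alpha_t$ and cumulative products $\gamma_t = \prod_{i=1}^{t}\alpha_i$; the linear schedule endpoints and the helper names (`make_schedule`, `forward_step`, `q_sample`) are hypothetical and not taken from the DM–AHR code. It shows that iterating the Markov step and sampling $y_t$ directly from $y_0$ share the same signal coefficient $\sqrt{\gamma_t}$.

```python
import numpy as np

def make_schedule(T, beta_start=1e-6, beta_end=1e-2):
    """Hypothetical linear schedule: returns alpha_t and gamma_t = prod_{i<=t} alpha_i."""
    betas = np.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    gammas = np.cumprod(alphas)
    return alphas, gammas

def forward_step(y_prev, alpha_t, rng=None, eps=None):
    """One Markov step: y_t ~ N(sqrt(alpha_t) * y_{t-1}, (1 - alpha_t) I)."""
    if eps is None:
        eps = rng.standard_normal(y_prev.shape)
    return np.sqrt(alpha_t) * y_prev + np.sqrt(1.0 - alpha_t) * eps

def q_sample(y0, gamma_t, rng=None, eps=None):
    """Closed form: y_t ~ N(sqrt(gamma_t) * y0, (1 - gamma_t) I)."""
    if eps is None:
        eps = rng.standard_normal(y0.shape)
    return np.sqrt(gamma_t) * y0 + np.sqrt(1.0 - gamma_t) * eps

# Usage: noise a hypothetical 64x64 RGB hair-free image at step t = 1000 of T = 2000.
rng = np.random.default_rng(0)
alphas, gammas = make_schedule(T=2000)
y0 = rng.uniform(0.0, 1.0, size=(64, 64, 3))
y_t = q_sample(y0, gammas[999], rng=rng)
```

Sampling directly from $y_0$ is what makes training efficient: any time step can be drawn without simulating the whole chain.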

3.2.2. Reverse Diffusion Process

In the reverse diffusion process, the aim is to recover the original hair-free image from the noisy image. This is achieved by a learned neural network $f_\theta$, which performs denoising at each step. Here, we add conditioning on the original image with hair, denoted $x$, to guide the reconstruction so that hair and noise are removed jointly. This is shown in the bottom part of Figure 1.

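The reverse process is defined as follows:

$$p_\theta(y_{0:T} \mid x) = p(y_T) \prod_{t=1}^{T} p_\theta(y_{t-1} \mid y_t, x), \qquad p_\theta(y_{t-1} \mid y_t, x) = \mathcal{N}\left(y_{t-1};\ \mu_\theta(y_t, x, t),\ \Sigma_\theta(y_t, x, t)\right),$$

where $\mu_\theta$ and $\Sigma_\theta$ are the mean and covariance computed by the model with learned parameters $\theta$, $p(y_T) = \mathcal{N}(y_T; 0, I)$, and $x$ is the input hair-containing image. The reverse diffusion process is iterative: it starts from a purely noisy image $y_T$ and progressively recovers the hair-free image $y_0$, guided by the characteristics of the input image.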

3.3. Training of the Denoising Model

The denoising model $f_\theta$, a pivotal component of the DM–AHR architecture, estimates the noise introduced at each step of the forward diffusion process so that it can be removed. This is crucial for reconstructing the hair-free image from its noisy counterpart. $f_\theta$ is trained on pairs of noisy and original hair-free images, conditioned on the corresponding hairy image, with the aim of tuning the model parameters to accurately estimate the noise vector.

3.3.1. Loss Function

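The training objective is a loss function that measures the difference between the noise estimated by the model and the actual noise applied during the forward process. Formally, the loss function is as follows:

$$\mathcal{L}(\theta) = \mathbb{E}_{(x, y_0),\, \epsilon,\, \gamma} \left\lVert f_\theta(x, \tilde{y}, \gamma) - \epsilon \right\rVert_2^2, \qquad \tilde{y} = \sqrt{\gamma}\, y_0 + \sqrt{1 - \gamma}\, \epsilon,$$

where $f_\theta$ is the denoising model conditioned on the hairy image $x$, $\tilde{y}$ represents the noisy image at time step $t$ (with noise level $\gamma$), and $\epsilon \sim \mathcal{N}(0, I)$ denotes the noise vector. This function minimizes the mean squared error between the noise predicted by $f_\theta$ and the actual noise.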
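A minimal NumPy version of this loss for a single noise level is sketched below. `f_theta` stands in for the conditional U-Net and is a hypothetical callable taking the hairy image, the noisy image, and the noise level. The "oracle" predictor cheats by knowing $y_0$ and is used only to show that a perfect noise estimate drives the loss to zero, while predicting zeros yields roughly the noise variance.

```python
import numpy as np

def noise_prediction_loss(f_theta, x, y0, gamma, eps):
    """MSE between predicted and true noise for one noise level gamma.

    f_theta(x, y_noisy, gamma): hypothetical conditional denoiser,
    x: hairy conditioning image, y0: hair-free target, eps: Gaussian noise.
    """
    y_noisy = np.sqrt(gamma) * y0 + np.sqrt(1.0 - gamma) * eps
    eps_hat = f_theta(x, y_noisy, gamma)
    return float(np.mean((eps_hat - eps) ** 2))

rng = np.random.default_rng(0)
y0 = rng.uniform(size=(32, 32, 3))
x = y0.copy()                       # stand-in for the hairy input
eps = rng.standard_normal(y0.shape)
gamma = 0.5

# The oracle inverts the noising exactly (it cheats by knowing y0).
oracle = lambda x, y, g: (y - np.sqrt(g) * y0) / np.sqrt(1.0 - g)
zero_model = lambda x, y, g: np.zeros_like(y)

loss_oracle = noise_prediction_loss(oracle, x, y0, gamma, eps)    # essentially zero
loss_zero = noise_prediction_loss(zero_model, x, y0, gamma, eps)  # equals mean(eps**2), close to 1
```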

3.3.2. Training Algorithm

The training algorithm for $f_\theta$ iteratively refines the model parameters, optimizing its ability to estimate and remove noise from images. Its key steps are listed in Algorithm 1:

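| Algorithm 1: Training algorithm for the DM–AHR Model |
|---|
| 1: while not converged do |
| 2: for each batch in the training dataset do |
| 3: Sample a pair $(x, y_0)$ from the batch |
| 4: for $t = 1$ to $T$ do |
| 5: Sample noise level $\gamma \sim p(\gamma)$ from a predefined noise schedule |
| 6: Generate noise $\epsilon \sim \mathcal{N}(0, I)$ |
| 7: Create a noisy image using the formula: $\tilde{y} = \sqrt{\gamma}\, y_0 + \sqrt{1 - \gamma}\, \epsilon$ |
| 8: Compute the loss function for backpropagation: $\mathcal{L} = \lVert f_\theta(x, \tilde{y}, \gamma) - \epsilon \rVert_2^2$ |
| 9: Update $\theta$ by applying the gradient descent step: $\theta \leftarrow \theta - \eta \nabla_\theta \mathcal{L}$ |
| 10: end for |
| 11: end for |
| 12: end while |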

In the training algorithm for the DM–AHR model, each iteration begins by sampling a pair of images $(x, y_0)$, where $x$ is the original image with hair and $y_0$ is its hair-free counterpart. At each time step $t$, noise $\epsilon$ is added to $y_0$ according to a sampled noise level $\gamma$, creating a noisy version $\tilde{y}$. The loss function, which guides learning, measures the difference between the model’s estimated noise $f_\theta(x, \tilde{y}, \gamma)$ and the actual noise $\epsilon$. The model parameters are updated via gradient descent, governed by the learning rate ($\eta$) and the gradient of the loss function ($\nabla_\theta \mathcal{L}$). This procedure trains the model to estimate noise robustly across a range of noise levels, enabling it to map noisy images to their hair-free versions.
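The loop structure of Algorithm 1 can be illustrated with a toy example. This is a sketch only: the real $f_\theta$ is the conditional U-Net trained with Adam, whereas here a hypothetical one-parameter linear "denoiser" (`toy_predict`) is trained with plain gradient descent using a hand-derived gradient, and a single noise level is sampled per update instead of looping over all $T$ steps. The noise-level range [0.05, 0.95], image sizes, and random "hair" pixels are illustrative assumptions.

```python
import numpy as np

def toy_predict(theta, x, y_noisy, gamma):
    # Hypothetical one-parameter denoiser: uses the hairy image x as a proxy
    # for the clean image and rescales the residual into noise units.
    u = (y_noisy - np.sqrt(gamma) * x) / np.sqrt(1.0 - gamma)
    return theta * u, u

def toy_loss(theta, pairs, rng, n=50):
    """Average noise-prediction MSE over n random (pair, gamma, eps) draws."""
    total = 0.0
    for _ in range(n):
        x, y0 = pairs[rng.integers(len(pairs))]
        gamma = rng.uniform(0.05, 0.95)
        eps = rng.standard_normal(y0.shape)
        y_noisy = np.sqrt(gamma) * y0 + np.sqrt(1.0 - gamma) * eps
        eps_hat, _ = toy_predict(theta, x, y_noisy, gamma)
        total += np.mean((eps_hat - eps) ** 2)
    return total / n

def train_toy(pairs, steps=300, lr=0.01, seed=0):
    rng = np.random.default_rng(seed)
    theta = 0.0
    for _ in range(steps):
        x, y0 = pairs[rng.integers(len(pairs))]                     # sample a (hairy, hair-free) pair
        gamma = rng.uniform(0.05, 0.95)                             # sample a noise level
        eps = rng.standard_normal(y0.shape)                         # generate noise
        y_noisy = np.sqrt(gamma) * y0 + np.sqrt(1.0 - gamma) * eps  # noisy image
        eps_hat, u = toy_predict(theta, x, y_noisy, gamma)
        grad = 2.0 * np.mean((eps_hat - eps) * u)                   # d(MSE)/d(theta)
        theta -= lr * grad                                          # gradient-descent step
    return theta

# Synthetic pairs: hair-free images y0 and "hairy" copies with dark pixels.
rng = np.random.default_rng(1)
pairs = []
for _ in range(8):
    y0 = rng.uniform(size=(16, 16))
    hair = rng.random(y0.shape) < 0.05
    pairs.append((np.where(hair, 0.0, y0), y0))

theta = train_toy(pairs)
loss_before = toy_loss(0.0, pairs, np.random.default_rng(2))
loss_after = toy_loss(theta, pairs, np.random.default_rng(2))  # well below loss_before
```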

3.4. Inference Process of the DM–AHR Model

During inference, DM–AHR adapts the Iterative Refinement Algorithm [29] to the current problem. Inference runs for multiple iterations, is guided by the input image containing hair, and uses the denoising model $f_\theta$ (the U-Net shown in Figure 1) at each iteration.

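The algorithm initializes with a noisy image $y_T \sim \mathcal{N}(0, I)$ and progressively applies denoising to reach the final hair-free image $y_0$. The refinement at each step is governed by the following equation:

$$y_{t-1} = \frac{1}{\sqrt{\alpha_t}} \left( y_t - \frac{1 - \alpha_t}{\sqrt{1 - \gamma_t}}\, f_\theta(x, y_t, \gamma_t) \right) + \sqrt{1 - \alpha_t}\, z, \qquad z \sim \mathcal{N}(0, I),$$

where $y_t$ is the noisy image at step $t$, $x$ is the original hair-containing image, and $\alpha_t$ and $\gamma_t = \prod_{i=1}^{t} \alpha_i$ are given by the noise schedule. The function $f_\theta$ estimates the noise separating $y_t$ from the hair-free image, effectively removing noise and hair artifacts.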

The effectiveness of this algorithm in hair removal can be attributed to its iterative nature, allowing gradual refinement of the image. Each iteration removes a portion of the noise and hair, refining the image closer to its hair-free version. The process is described in Algorithm 2, where T is the number of iterations fixed by the user to regulate the inference.

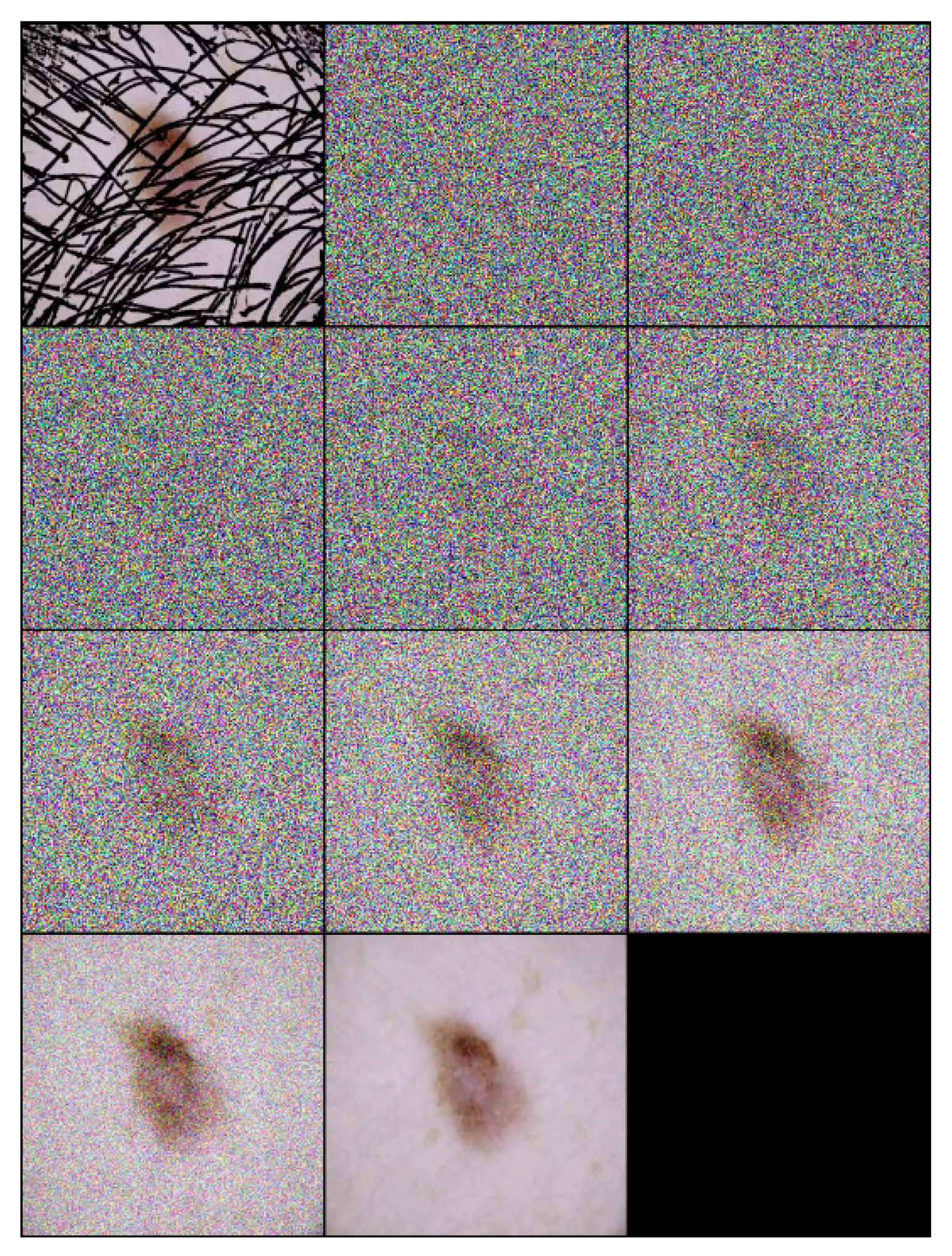

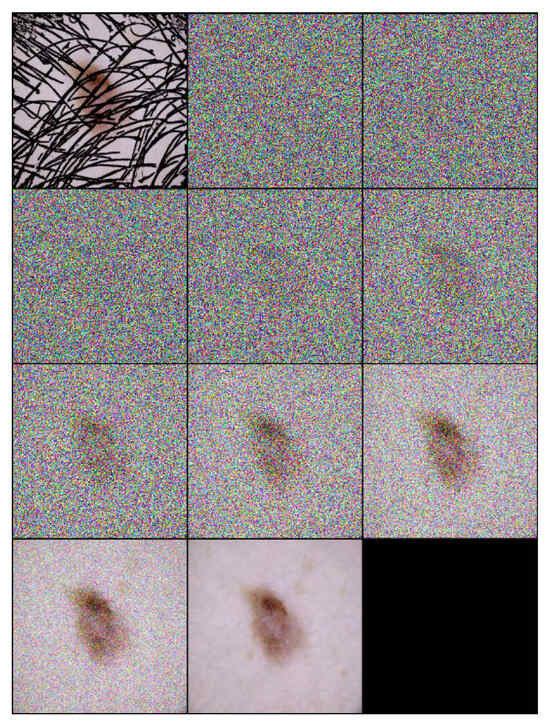

Each step of the algorithm incrementally removes noise and hair, leveraging the learned model to guide the transformation towards a clear, hair-free image. Figure 2 illustrates the inference process applied to a single sample image with $T = 10$ iterations.

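| Algorithm 2: Inference process for hair removal in DM–AHR |
|---|
| 1: Initialize $y_T \sim \mathcal{N}(0, I)$ |
| 2: for $t = T$ down to 1 do |
| 3: Sample noise $z \sim \mathcal{N}(0, I)$ |
| 4: Apply the denoising model: $y_{t-1} = \frac{1}{\sqrt{\alpha_t}} \left( y_t - \frac{1 - \alpha_t}{\sqrt{1 - \gamma_t}} f_\theta(x, y_t, \gamma_t) \right) + \sqrt{1 - \alpha_t}\, z$ |
| 5: end for |
| 6: return $y_0$, the final hair-free image |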

Figure 2.

Inference steps of DM–AHR applied to one melanoma case, performed in 10 iterations ($T = 10$).
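The inference loop of Algorithm 2 can be sketched in NumPy as follows, assuming the SR3-style update written in the docstring, with $\gamma_t = \prod_{i \le t}\alpha_i$. To check the update rule in isolation, the trained U-Net is replaced by a hypothetical oracle that returns the exact noise implied by the clean target; the 10-step linear schedule is illustrative, and setting $z = 0$ at the last step follows the DDPM/SR3 samplers rather than anything stated in the text. With the oracle, the loop recovers the clean image, which is a useful sanity check before plugging in a trained model.

```python
import numpy as np

def refine(x, f_theta, alphas, rng):
    """Iterative refinement: start from pure noise y_T and denoise down to y_0.

    x is the hairy conditioning image; f_theta(x, y_t, gamma_t) predicts the noise.
    Update: y_{t-1} = (y_t - (1 - a_t) / sqrt(1 - g_t) * f_theta) / sqrt(a_t) + sqrt(1 - a_t) * z
    """
    gammas = np.cumprod(alphas)
    y = rng.standard_normal(x.shape)                 # y_T ~ N(0, I)
    for t in range(len(alphas), 0, -1):              # t = T, ..., 1
        a_t, g_t = alphas[t - 1], gammas[t - 1]
        z = rng.standard_normal(x.shape) if t > 1 else np.zeros_like(x)
        eps_hat = f_theta(x, y, g_t)
        y = (y - (1.0 - a_t) / np.sqrt(1.0 - g_t) * eps_hat) / np.sqrt(a_t) + np.sqrt(1.0 - a_t) * z
    return y                                         # y_0, the hair-free estimate

rng = np.random.default_rng(0)
alphas = 1.0 - np.linspace(1e-4, 0.2, 10)            # hypothetical T = 10 schedule
y0_true = rng.uniform(size=(32, 32, 3))              # clean image, known only to the oracle
x = y0_true.copy()                                   # stand-in conditioning image

# Oracle predictor: the exact noise implied by y_t and the clean target.
oracle = lambda x, y_t, g: (y_t - np.sqrt(g) * y0_true) / np.sqrt(1.0 - g)
y0_hat = refine(x, oracle, alphas, rng)              # matches y0_true up to rounding
```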

The noise schedule of the DM–AHR model is defined by a piecewise uniform distribution, which controls the noise variance at each diffusion step:

$$p(\gamma) = \sum_{t=1}^{T} \frac{1}{T}\, U(\gamma_{t-1}, \gamma_t),$$

where $U(\gamma_{t-1}, \gamma_t)$ is a uniform distribution between noise levels $\gamma_{t-1}$ and $\gamma_t$, and $T$ is the total number of diffusion steps. This noise schedule is vital for the model’s ability to handle various noise levels, enhancing its robustness and adaptability for effective hair removal in medical imaging applications.
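The piecewise uniform schedule can be sampled by first picking one of the $T$ intervals uniformly and then drawing $\gamma$ uniformly inside it. This is a sketch: the linear $\beta$ schedule from $10^{-6}$ to $10^{-2}$ is an assumption, $T = 2000$ is chosen to match the training time steps reported in Section 4.1, and `piecewise_uniform_gamma` is a hypothetical name.

```python
import numpy as np

def piecewise_uniform_gamma(gammas, n, rng):
    """Sample n noise levels from p(gamma) = sum_t (1/T) U(gamma_{t-1}, gamma_t).

    gammas[t-1] holds gamma_t for t = 1..T (decreasing); gamma_0 is taken as 1.
    """
    T = len(gammas)
    gammas_prev = np.concatenate(([1.0], gammas[:-1]))    # gamma_{t-1}
    t = rng.integers(1, T + 1, size=n)                     # pick an interval uniformly
    return rng.uniform(gammas[t - 1], gammas_prev[t - 1])  # uniform inside that interval

T = 2000
alphas = 1.0 - np.linspace(1e-6, 1e-2, T)   # assumed linear schedule
gammas = np.cumprod(alphas)
rng = np.random.default_rng(0)
samples = piecewise_uniform_gamma(gammas, n=10_000, rng=rng)
```

Because $\gamma$ is continuous rather than tied to integer steps, the network can be conditioned directly on the noise level; in SR3 this is the usual motivation for allowing a different number of refinement steps at inference than during training.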

3.5. Self-Supervised Technique

The training of the DM–AHR model employs a novel self-supervised technique specifically tailored to the automatic removal of hair from dermoscopic skin images; the procedure is given in Algorithm 3. The key idea is to artificially synthesize hair-like patterns on hair-free dermoscopic images, creating a modified dataset that simulates real-world scenarios in which hair obscures skin lesions.

The process begins with an original hair-free dermoscopic image, denoted $I$. A preset number of lines $N$ (for example, 1000) are drawn on this image to imitate hair. These lines have randomized starting points, lengths, and directions, producing a diverse and realistic representation of hair patterns across the dataset.

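| Algorithm 3: Self-supervised technique customized for training skin hair removal models |
|---|
| Input: $I$ {the original hair-free dermoscopic image} |
| Output: $I$ {image with small artificial lines simulating hair-like patterns} |
| 1: $N$ {fix the number of lines to draw, e.g., 1000} |
| 2: $(H, W) \leftarrow$ size of $I$ {get the input image dimensions} |
| 3: for $i = 1$ to $N$ do |
| 4: $u_1 \leftarrow$ random integer in $[0, W - 1]$ {start x-coordinate} |
| 5: $v_1 \leftarrow$ random integer in $[0, H - 1]$ {start y-coordinate} |
| 6: $\ell \leftarrow$ random integer in the preset length range {line length} |
| 7: $d \leftarrow$ random direction {diagonal orientation} |
| 8: $u_2 \leftarrow$ end x-coordinate computed from $u_1$, $\ell$, $d$ |
| 9: $v_2 \leftarrow$ end y-coordinate computed from $v_1$, $\ell$ |
| 10: Draw a line on $I$ from $(u_1, v_1)$ to $(u_2, v_2)$ |
| 11: end for |
| 12: return $I$ |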
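Algorithm 3 can be sketched in NumPy as follows. The 45-degree line geometry, the maximum length `max_len`, the dark `color`, and the point-sampling rasterizer (used instead of a drawing library) are illustrative assumptions, since the text does not specify them; `add_synthetic_hair` is a hypothetical name. The input is copied, so the original hair-free image remains available as the training target.

```python
import numpy as np

def add_synthetic_hair(image, n_lines=1000, max_len=40, color=0.0, seed=None):
    """Draw n_lines random diagonal segments on a copy of `image`.

    image: float array of shape (H, W) or (H, W, C).
    max_len, color and the 45-degree geometry are illustrative choices.
    """
    rng = np.random.default_rng(seed)
    out = image.copy()
    H, W = image.shape[:2]
    for _ in range(n_lines):
        u1 = int(rng.integers(0, W))                # random start x
        v1 = int(rng.integers(0, H))                # random start y
        length = int(rng.integers(1, max_len + 1))  # random length
        d = int(rng.choice([-1, 1]))                # diagonal orientation
        u2, v2 = u1 + d * length, v1 + length       # end coordinates
        # Rasterize by sampling points along the segment; drop out-of-bounds points.
        n = length + 1
        us = np.round(np.linspace(u1, u2, n)).astype(int)
        vs = np.round(np.linspace(v1, v2, n)).astype(int)
        inside = (us >= 0) & (us < W) & (vs >= 0) & (vs < H)
        out[vs[inside], us[inside]] = color
    return out

clean = np.full((128, 128, 3), 0.8)
hairy = add_synthetic_hair(clean, n_lines=200, seed=0)
```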

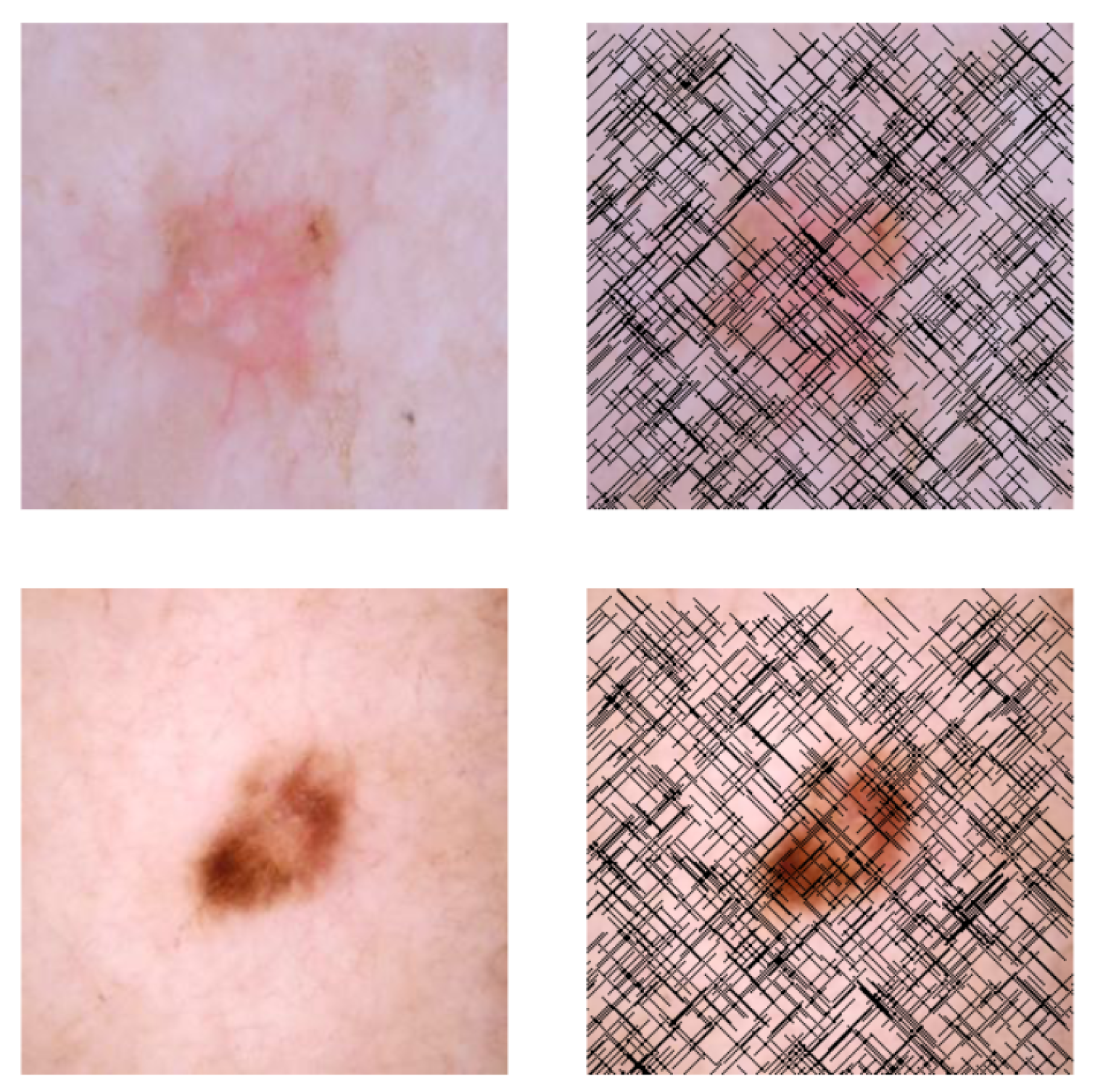

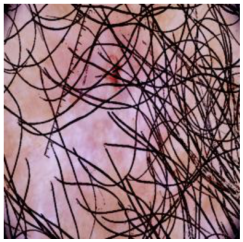

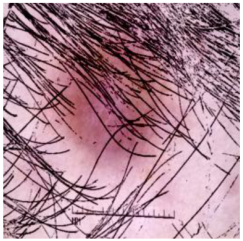

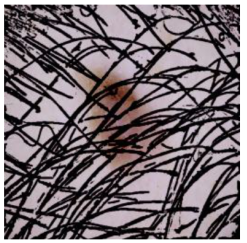

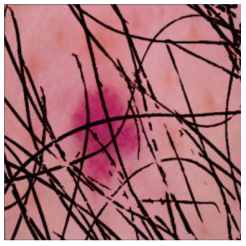

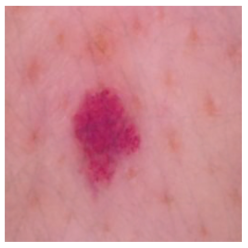

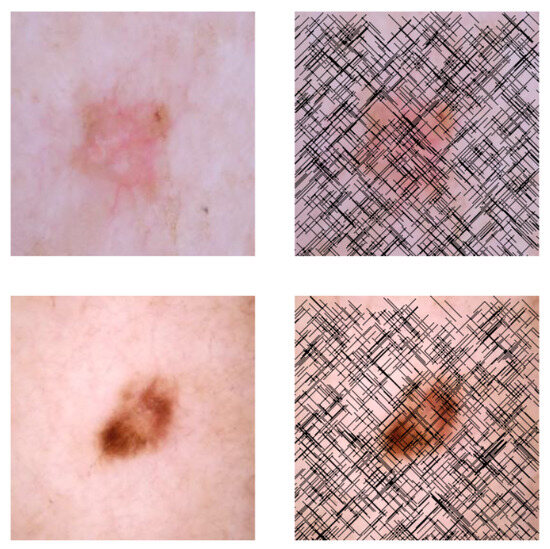

This self-supervised approach significantly augments the model’s proficiency in processing real-world dermatoscopic images while obviating the necessity for a large corpus of manually annotated images obscured by hair. Figure 3 presents two exemplar images produced by this technique, which effectively mimic natural hair patterns. This visual mimicry is instrumental in enabling the DM–AHR model to autonomously discern and segregate hair from skin features. Notably, the implementation of this technique has led to a substantial enhancement in the performance of the DM–AHR model, which complements its prior training on the meticulously constructed DERMAHAIR dataset, as detailed in the subsequent section.

Figure 3.

Generated samples using the customized self-supervised technique.

4. Experiments

4.1. Experimental Settings

The DM–AHR model was trained and evaluated on an NVIDIA Quadro RTX 8000 GPU with 48 GB of VRAM. Training used 2000 diffusion time steps and 20,000 iterations, which allowed iterative improvement in image quality and helped ensure the model’s robustness across diverse imaging conditions. The Adam optimizer was used with a fixed learning rate of 0.0001, providing stable and consistent training. The model and resources are made available to the community at: https://github.com/AnasHXH/DM-AHR (accessed on 7 August 2024).
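For reproduction attempts, the reported settings can be collected in a small configuration object. Only the values stated above are filled in; the field names are hypothetical, and unreported details (batch size, image resolution, noise schedule bounds) are deliberately left out rather than guessed.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DMAHRTrainingConfig:
    """Training settings reported in Section 4.1 (field names are hypothetical)."""
    diffusion_steps: int = 2000      # T, number of diffusion time steps
    train_iterations: int = 20_000   # optimization iterations
    optimizer: str = "adam"
    learning_rate: float = 1e-4      # fixed learning rate
    gpu: str = "NVIDIA Quadro RTX 8000 (48 GB)"

cfg = DMAHRTrainingConfig()
```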

4.2. Dataset Description

This study introduces the DERMAHAIR (DERMatologic Automatic HAIR Removal) dataset, created to benchmark automatic skin hair removal for enhanced skin diagnosis and analysis. DERMAHAIR was created by sampling 100 images from each of the seven lesion categories in the HAM10000 dataset [32], resulting in 700 unique images. Then, for every image, an artificial hair pattern was picked randomly from the Digital Hair Dataset [33] and added to the image. The following paragraphs describe the HAM10000 dataset and explain the artificial hair addition process. The DERMAHAIR dataset is open sourced at the following link: https://www.kaggle.com/datasets/riotulab/skin-cancer-hair-removal (accessed on 7 August 2024).

4.2.1. Description of the HAM10000 Dataset

The HAM10000 dataset [32] is a cornerstone of dermatological research, comprising 10,015 dermatoscopic images that represent a broad spectrum of skin lesions, including melanomas and nevi. This dataset is distinguished by its diversity in lesion characteristics and patient demographics. Each image is annotated with essential metadata such as diagnosis, lesion location, and patient details, providing a robust foundation for developing machine learning models for skin lesion classification and melanoma detection. The HAM10000 dataset includes seven lesion categories: Melanocytic nevi (benign melanocyte tumors, nv), Melanoma (the deadliest form of skin cancer, mel), Benign keratosis (comprising seborrheic keratoses and lentigines, non-malignant growths from keratinocytes, bkl), Basal cell carcinoma (the most common but least aggressive skin cancer, bcc), Actinic keratoses (sun-related precancerous lesions, akiec), Vascular lesions (examples include angiomas, benign vascular proliferations, vasc), and Dermatofibroma (non-malignant fibrous skin tumors, df). This diverse and detailed classification of skin conditions makes the dataset invaluable for training advanced AI models in dermatological diagnostics.

We reserved 100 images from each category to form a test set of 700 images, ensuring each class is equally represented in the evaluation process. This stratified sampling minimizes bias and robustly measures the model’s performance across varied conditions. The remaining images were augmented with synthetic hair, forming a diverse training set. Given the specific challenge of hair removal in skin cancer images, the size of the dataset has proven sufficient for achieving high accuracy and reliability in this niche application, as evidenced by our experimental results and the specific adaptations we have made to mitigate the limitations of a smaller sample size.
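The stratified hold-out described above can be sketched as follows. `stratified_holdout` is a hypothetical helper; drawing the 100 test images per class uniformly at random with a fixed seed is an assumption, since the text does not say how they were selected, and the toy label list does not reflect real HAM10000 class counts.

```python
import numpy as np

def stratified_holdout(labels, per_class=100, seed=0):
    """Return (test_idx, train_idx): `per_class` random indices per class for test, rest for training."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    test_idx = []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        test_idx.extend(rng.choice(idx, size=per_class, replace=False))
    test_idx = np.sort(np.array(test_idx))
    train_idx = np.setdiff1d(np.arange(len(labels)), test_idx)
    return test_idx, train_idx

# Toy label list using the seven HAM10000 class codes (counts are made up).
classes = ["akiec", "bcc", "bkl", "df", "mel", "nv", "vasc"]
labels = [c for c in classes for _ in range(150)]
test_idx, train_idx = stratified_holdout(labels)
```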

4.2.2. Description of the Artificial Hair Addition Process

Let us consider a simplified 2D matrix representation of a single color channel from an RGB image:

These matrices symbolize pixel intensity values within a specific color channel, normalized between 0 and 1.

Hair Mask Images: To simulate hair artifacts, we employed binary masks sourced from a digital hair dataset [33]. In these masks, hair is represented by white pixels (value = 1), contrasting with the black background pixels (value = 0). Let us consider a simplified representation:

Image Processing and Synthesis: The process of artificial hair addition encompassed several key steps:

Normalization of Hair Mask: First, the hair mask $H$ was normalized by inverting its pixel values, $\hat{H} = 1 - H$, which converts white hair pixels to black and thus mirrors the dark appearance of hair in authentic dermatoscopic images:

Merging Images: Subsequently, the normalized hair mask was merged with the dermatoscopic image. This crucial step superimposed the artificial hair structure onto the skin lesion image:

Post-Processing: The final stage was a post-processing routine. Subtracting one from the merged image’s pixel values restored the pixels outside the hair mask to their original skin color values, while the hair regions were pushed to the bottom of the intensity range, producing dark hair strands:
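The three steps can be sketched as below. The text does not give the merge formula; this sketch assumes pixel-wise addition, which is what makes the later "subtract one" step meaningful, and it clips the result to [0, 1]. Under that assumption, pixels outside the mask return to their original values and pixels under the mask are driven to zero, so hair appears as dark strands. `add_hair_from_mask` is a hypothetical name.

```python
import numpy as np

def add_hair_from_mask(image, hair_mask):
    """Overlay a binary hair mask (1 = hair) on an image with values in [0, 1].

    Assumes the merge step is pixel-wise addition; the clipping is also an assumption.
    """
    inverted = 1.0 - hair_mask                 # normalization: hair -> 0, background -> 1
    if image.ndim == 3:
        inverted = inverted[..., None]         # broadcast over color channels
    merged = image + inverted                  # merge
    return np.clip(merged - 1.0, 0.0, 1.0)     # post-process: subtract one, clip to [0, 1]

rng = np.random.default_rng(0)
image = rng.uniform(0.2, 1.0, size=(8, 8, 3))
mask = np.zeros((8, 8))
mask[3, :] = 1.0                               # one horizontal "hair" strand
hairy = add_hair_from_mask(image, mask)
```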

The DERMAHAIR dataset is constructed by picking 100 images from every class in HAM10000 [32] and creating a hairy version of each with the hair addition process described above. The resulting pairs can be used to train any hair removal model, including the DM–AHR model described in this study.

In terms of its organization, the dataset is methodically divided into two principal directories: Data_Skin_with_Hair and Data_Skin_without_Hair. Each directory encompasses seven subdirectories corresponding to the seven diagnostic categories, namely akiec, bcc, bkl, df, mel, nv, and vasc. This structure ensures streamlined access and efficient categorization of the dataset.
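Given this layout, hairy and hair-free images can be paired by class and file name. The sketch below assumes that a hairy image and its hair-free counterpart share the same file name, which the text does not state explicitly; `dermahair_pairs` is a hypothetical helper, and the mock directory exists only to show usage.

```python
import tempfile
from pathlib import Path

CLASSES = ["akiec", "bcc", "bkl", "df", "mel", "nv", "vasc"]

def dermahair_pairs(root):
    """Yield (class, hairy_path, clean_path) for images present in both folders."""
    root = Path(root)
    for cls in CLASSES:
        hairy_dir = root / "Data_Skin_with_Hair" / cls
        clean_dir = root / "Data_Skin_without_Hair" / cls
        if not hairy_dir.is_dir():
            continue                                  # class folder missing: skip it
        for hairy_path in sorted(hairy_dir.iterdir()):
            clean_path = clean_dir / hairy_path.name  # assumed: identical file names
            if clean_path.is_file():
                yield cls, hairy_path, clean_path

# Usage on a tiny mock layout (file names are made up).
with tempfile.TemporaryDirectory() as tmp:
    for top in ("Data_Skin_with_Hair", "Data_Skin_without_Hair"):
        (Path(tmp) / top / "mel").mkdir(parents=True)
        (Path(tmp) / top / "mel" / "img_0001.jpg").write_bytes(b"")
    (Path(tmp) / "Data_Skin_with_Hair" / "mel" / "orphan.jpg").write_bytes(b"")
    found = [(cls, h.name, c.name) for cls, h, c in dermahair_pairs(tmp)]
```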

4.3. Evaluation Metrics

The evaluation metrics are divided into two groups. First, there are the metrics used to measure the performance of the DM–AHR model itself. Second, there are the metrics used to measure the performance of the skin lesion classification before and after using the DM–AHR model to improve the quality of the skin images.

4.3.1. Evaluation Metrics of the DM–AHR Performance

In evaluating the DM–AHR model for skin image dehairing, three key metrics are employed: Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index Measure (SSIM), and Learned Perceptual Image Patch Similarity (LPIPS).

Peak Signal-to-Noise Ratio (PSNR): PSNR is widely used in image processing to assess the quality of reconstructed images. It is defined as follows:

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{\mathrm{MAX}_I^2}{\mathrm{MSE}}\right),$$

where $\mathrm{MAX}_I$ is the maximum possible pixel value of the image and MSE is the Mean Squared Error between the original and processed images. Higher PSNR values indicate better quality, with values above 30 dB generally considered good in medical imaging.
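A direct NumPy translation of the PSNR formula is sketched below (`psnr` is a hypothetical name; scikit-image provides the equivalent `skimage.metrics.peak_signal_noise_ratio`). `max_val` must match the image range: 1.0 for floats in [0, 1], 255 for 8-bit images.

```python
import numpy as np

def psnr(reference, test, max_val=1.0):
    """PSNR in dB; max_val is MAX_I (1.0 for [0, 1] floats, 255 for 8-bit images)."""
    mse = np.mean((np.asarray(reference, float) - np.asarray(test, float)) ** 2)
    if mse == 0:
        return float("inf")                    # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))

ref = np.full((4, 4), 0.5)
value = psnr(ref, ref + 0.1)                   # MSE = 0.01, so about 20 dB
```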

Structural Similarity Index Measure (SSIM): SSIM measures the perceived quality of an image by comparing its structural information with that of the original image. It considers changes in luminance, contrast, and structure, and is calculated as follows:

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + c_1)(2\sigma_{xy} + c_2)}{(\mu_x^2 + \mu_y^2 + c_1)(\sigma_x^2 + \sigma_y^2 + c_2)},$$

where $x$ and $y$ are the original and processed images, $\mu_x, \mu_y$ and $\sigma_x^2, \sigma_y^2$ are their means and variances, $\sigma_{xy}$ is their covariance, and $c_1, c_2$ are constants that stabilize the division. SSIM values range from −1 to 1, with higher values indicating greater similarity.
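The SSIM formula can be evaluated in NumPy as below. This sketch computes it once over the whole image; the standard index (e.g., `skimage.metrics.structural_similarity`) averages it over local sliding windows, so values will differ. The constants $c_1 = (0.01L)^2$ and $c_2 = (0.03L)^2$, with $L$ the data range, are the usual defaults and an assumption here; `ssim_global` is a hypothetical name.

```python
import numpy as np

def ssim_global(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM computed over the whole image (illustrates the formula only)."""
    x = np.asarray(x, float)
    y = np.asarray(y, float)
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)

rng = np.random.default_rng(0)
img = rng.uniform(size=(64, 64))
noisy = np.clip(img + 0.1 * rng.standard_normal(img.shape), 0, 1)
same = ssim_global(img, img)          # 1.0 up to rounding
degraded = ssim_global(img, noisy)    # below 1
```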

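Learned Perceptual Image Patch Similarity (LPIPS): LPIPS [34] evaluates the perceptual similarity between two images using deep network features that approximate the response of the human visual system. It is defined as follows:

$$d(x, x_0) = \sum_{l} \frac{1}{H_l W_l} \sum_{h, w} \left\lVert w_l \odot \left(\hat{y}^{l}_{hw} - \hat{y}^{l}_{0,hw}\right) \right\rVert_2^2,$$

where $x$ and $x_0$ are the images being compared, $\hat{y}^{l}$ and $\hat{y}^{l}_{0}$ denote their feature maps at layer $l$, $H_l$ and $W_l$ are the spatial dimensions of the feature map, and $w_l$ are layer-wise weights. LPIPS quantifies perceptual differences in closer agreement with human perception; lower values indicate greater similarity.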
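The LPIPS formula can be sketched given feature maps. In the real metric (e.g., the `lpips` Python package), the features come from a pretrained network such as AlexNet or VGG and the weights $w_l$ are learned; here both are supplied by the caller and the random "features" are placeholders, so only the normalize, weight, and average structure of the formula is illustrated. `lpips_from_features` is a hypothetical name.

```python
import numpy as np

def lpips_from_features(feats_a, feats_b, weights, eps=1e-10):
    """LPIPS-style distance from per-layer feature maps.

    feats_*: lists of arrays shaped (C_l, H_l, W_l); weights: list of (C_l,) arrays.
    """
    total = 0.0
    for a, b, w in zip(feats_a, feats_b, weights):
        # Unit-normalize each spatial feature vector along the channel axis.
        a = a / (np.linalg.norm(a, axis=0, keepdims=True) + eps)
        b = b / (np.linalg.norm(b, axis=0, keepdims=True) + eps)
        diff = w[:, None, None] * (a - b)            # channel-wise weighting w_l
        total += np.mean(np.sum(diff ** 2, axis=0))  # squared norm, averaged over H_l x W_l
    return float(total)

rng = np.random.default_rng(0)
shapes = [(8, 16, 16), (16, 8, 8)]                   # two hypothetical layers
feats1 = [rng.standard_normal(s) for s in shapes]
feats2 = [f + 0.5 * rng.standard_normal(f.shape) for f in feats1]
weights = [np.ones(s[0]) for s in shapes]
d_same = lpips_from_features(feats1, feats1, weights)   # 0.0
d_diff = lpips_from_features(feats1, feats2, weights)   # positive; lower means more similar
```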

These metrics collectively provide a multi-faceted evaluation of image quality, which is crucial for accurate diagnosis and analysis in medical imaging.

4.3.2. Evaluation Metrics of the Skin Lesion Classification

After assessing the performance of DM–AHR itself, we evaluate the skin diagnosis task before and after applying DM–AHR. Skin lesion classification is evaluated with six key performance metrics: accuracy, loss, precision, recall, specificity, and F1-score. Each metric provides a different perspective on the classifier’s performance before and after DM–AHR is used to enhance skin image quality for diagnosis.

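Accuracy is the most intuitive performance measure; it is simply the ratio of correctly predicted observations to the total observations. It is defined as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN},$$

where $TP$, $TN$, $FP$, and $FN$ denote true positives, true negatives, false positives, and false negatives, respectively. Accuracy is particularly useful when the classes in the dataset are nearly balanced.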

Loss, in the context of neural networks, measures how well the model’s predictions match the actual labels during training. In this study, the cross-entropy loss is used in all experiments:

$$\mathcal{L}_{CE} = -\sum_{c=1}^{C} y_c \log(p_c),$$

where $y_c$ is the actual (one-hot) label for class $c$, $p_c$ is the model’s predicted probability for that class, and $C$ is the number of classes.

Precision is the ratio of correctly predicted positive observations to the total predicted positive observations. High precision means that few of the predicted positives are false positives. It is defined as:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

Recall (sensitivity) measures the proportion of actual positives that were correctly identified. It is defined as follows:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

Specificity measures the proportion of actual negatives that were correctly identified. It complements recall and is defined as follows:

$$\mathrm{Specificity} = \frac{TN}{TN + FP}$$

F1-score is the harmonic mean of precision and recall. It therefore takes both false positives and false negatives into account and is high only when both precision and recall are high. The F1-score is defined as follows:

$$F_1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

Understanding these metrics in tandem allows for a more nuanced interpretation of a model’s performance, particularly in applications like medical image analysis, where the cost of false negatives or false positives can be high.
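These definitions can be sketched in NumPy for the multi-class setting. The text does not state how per-class values are combined; this sketch assumes one-vs-rest counts per class followed by an unweighted (macro) average, and uses the natural logarithm for cross-entropy. Function names are hypothetical; scikit-learn’s `precision_recall_fscore_support` offers the same quantities with other averaging options.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)                  # rows: true class, cols: predicted
    return cm

def classification_metrics(y_true, y_pred, n_classes):
    """Accuracy plus macro-averaged one-vs-rest precision, recall, specificity and F1."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    cm = confusion_matrix(y_true, y_pred, n_classes)
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp
    tn = cm.sum() - tp - fp - fn
    safe = lambda a, b: np.divide(a, b, out=np.zeros_like(a), where=b > 0)
    precision = safe(tp, tp + fp)
    recall = safe(tp, tp + fn)
    specificity = safe(tn, tn + fp)
    f1 = safe(2 * precision * recall, precision + recall)
    return {"accuracy": tp.sum() / cm.sum(), "precision": precision.mean(),
            "recall": recall.mean(), "specificity": specificity.mean(), "f1": f1.mean()}

def cross_entropy(y_true, probs, eps=1e-12):
    """Mean categorical cross-entropy; probs has shape (N, n_classes)."""
    probs = np.clip(np.asarray(probs, float), eps, 1.0)
    return float(-np.mean(np.log(probs[np.arange(len(y_true)), y_true])))

m = classification_metrics([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)  # accuracy 4/6
ce = cross_entropy([0], [[0.5, 0.25, 0.25]])   # -ln(0.5), about 0.693
```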

4.4. Performance of the Skin Image Enhancement Using the DM–AHR Model

The DM–AHR model exhibits high efficacy in enhancing and reconstructing images across various skin lesion types, as evidenced by its PSNR, SSIM, and LPIPS scores in Table 1 (PSNR), Table 2 (SSIM), and Table 3 (LPIPS). The DM–AHR model’s performance is compared with that of the GAN architecture [35], the SwinIR architecture [36], the standard Stable Diffusion Model (SDM) [37], the DM–AHR model without self-supervision (DM–AHR), and the full DM–AHR(SS) model with self-supervision. The best results are highlighted in bold.

Table 1.

Comparison of the PSNR performance of DM–AHR with other models.

Table 2.

Comparison of the SSIM performance of DM–AHR with other models.

Table 3.

Comparison of the LPIPS performance of DM–AHR with other models.

The PSNR values in Table 1 show that DM–AHR(SS), the self-supervised version of DM–AHR, consistently outperforms the other models. These high PSNR values indicate that DM–AHR(SS) reconstructs the original image with the highest fidelity among the compared models, which is crucial in medical imaging, where detail preservation is paramount.

Table 2 presents the SSIM scores, underscoring DM–AHR(SS)’s ability to maintain structural integrity. The model performs exceptionally well in preserving textural and contrast details, achieving the highest SSIM in every class.

The LPIPS results in Table 3 further confirm the superiority of DM–AHR(SS). As a perception-based measure, LPIPS reflects perceptual image quality, an aspect that conventional pixel-wise metrics often overlook. DM–AHR(SS) consistently achieves the lowest (best) LPIPS scores across all lesion classes, confirming its high perceptual fidelity.
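
For reference, LPIPS [34] compares deep features rather than raw pixels. Following Zhang et al., the distance between a reference image $x$ and a distorted image $x_0$ is

$$d(x, x_0) = \sum_{l} \frac{1}{H_l W_l} \sum_{h,w} \left\lVert w_l \odot \left( \hat{y}^{l}_{hw} - \hat{y}^{l}_{0,hw} \right) \right\rVert_2^2$$

where $\hat{y}^{l}, \hat{y}^{l}_{0} \in \mathbb{R}^{H_l \times W_l \times C_l}$ are the activations of layer $l$ of a pretrained network (e.g., AlexNet or VGG), unit-normalized along the channel dimension, and $w_l \in \mathbb{R}^{C_l}$ are learned channel weights. Because $d$ is a distance, lower values indicate greater perceptual similarity, which is why the lowest LPIPS score is the best.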

Compared with the GAN baseline and SwinIR, DM–AHR(SS) is superior not only in the numerical metrics but also in qualitative aspects such as noise reduction, detail enhancement, and overall visual quality. Its advantage over DM–AHR without self-supervision indicates that the self-supervised learning approach contributes to the model's handling of complex dermoscopic images.

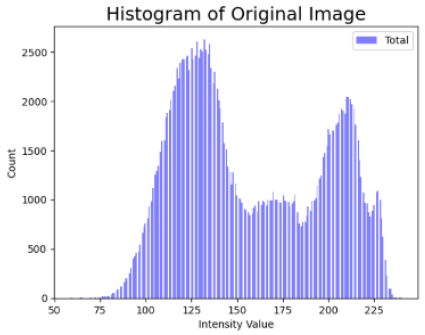

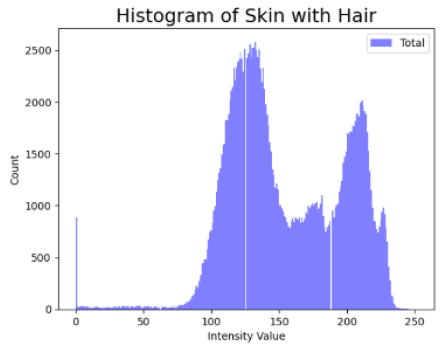

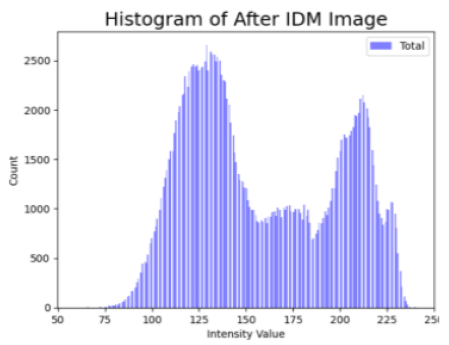

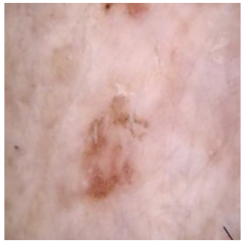

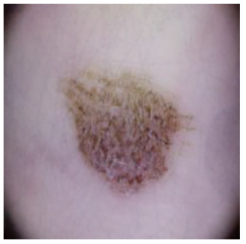

Table 4 shows an example of a hair-free skin image, the same image with artificially added hair, and the image reconstructed by the DM–AHR model, each shown alongside its histogram. Visually, the image is reconstructed with high fidelity.
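
Table 4 compares the histograms visually. If one wished to quantify how closely the histogram of a reconstruction matches that of the original hair-free image, one option, assumed here and not part of the reported evaluation, is the histogram intersection averaged over the RGB channels. The helper names below are hypothetical.

```python
import numpy as np


def channel_histograms(image, bins=256):
    """Normalized per-channel intensity histograms of an HxWxC uint8 image."""
    image = np.asarray(image)
    hists = []
    for c in range(image.shape[-1]):
        counts, _ = np.histogram(image[..., c], bins=bins, range=(0, 256))
        hists.append(counts / counts.sum())
    return np.stack(hists)  # shape: C x bins


def histogram_intersection(img_a, img_b, bins=256):
    """Mean over channels of sum(min(h_a, h_b)); 1.0 means identical histograms."""
    ha = channel_histograms(img_a, bins)
    hb = channel_histograms(img_b, bins)
    return float(np.minimum(ha, hb).sum(axis=1).mean())
```

A value close to 1.0 between the original and the reconstruction would indicate closely matching intensity distributions; note that histograms ignore spatial layout, so this complements rather than replaces PSNR or SSIM.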

Table 4.

Visual results of DM–AHR applied on one set of skin images with the corresponding histograms.

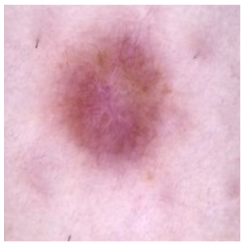

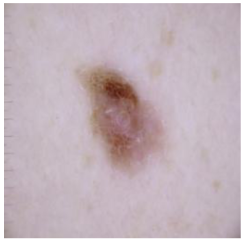

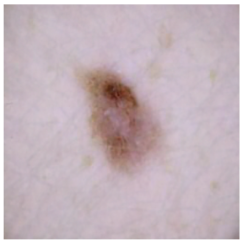

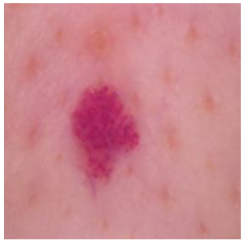

Table 5 shows samples from different skin lesion classes together with the images reconstructed by the DM–AHR model. These examples again show the high reconstruction quality of the model and its ability to distinguish skin features from hair degradation patterns.

Table 5.

Comparison of skin images before and after application of DM–AHR dehairing.

4.5. Improvement of the Medical Skin Diagnosis after Using DM–AHR

In medical skin diagnosis, the robustness and accuracy of skin cancer classification models are paramount. This study evaluates three state-of-the-art deep learning architectures: resnext101_32x8d [38] (Table 6), maxvit_t [39] (Table 7), and Swin Transformer [40] (Table 8). Each model is assessed on the original images, on the hairy versions of the images, and on the versions dehaired by the DM–AHR model. The tables report accuracy, loss, precision, recall, specificity, and F1-score for each model under each condition.
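
The hairy versions are obtained by artificially adding hair to clean images. This section does not describe the compositing procedure, so the sketch below shows one generic possibility, assumed purely for illustration: alpha-blending a dark hair color into a clean RGB image wherever a hair mask is set. The function `overlay_hair`, the default hair color, and the mask format are all hypothetical.

```python
import numpy as np


def overlay_hair(clean, hair_mask, hair_color=(40, 30, 25), opacity=1.0):
    """Alpha-composite a synthetic hair layer onto a clean RGB image.

    clean:     HxWx3 uint8 NumPy array without hair
    hair_mask: HxW NumPy array in [0, 1]; 1 where hair is drawn
    """
    clean = clean.astype(np.float64)
    # Per-pixel blending weight, broadcast over the color channels.
    alpha = np.clip(hair_mask, 0.0, 1.0)[..., None] * opacity
    color = np.asarray(hair_color, dtype=np.float64).reshape(1, 1, 3)
    hairy = (1.0 - alpha) * clean + alpha * color
    return np.clip(np.rint(hairy), 0, 255).astype(np.uint8)


clean = np.full((4, 4, 3), 200, dtype=np.uint8)
mask = np.zeros((4, 4))
mask[1, :] = 1.0  # one horizontal "hair" across row 1
hairy = overlay_hair(clean, mask)
```

Pairing each clean image with such a hairy copy yields aligned (hairy, clean) examples, which is what makes the original, hairy, and dehaired evaluation conditions directly comparable.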

Table 6.

Performance metrics for the resnext101_32x8d model.

Table 7.

Performance metrics for the maxvit_t model.

Table 8.

Performance metrics for the Swin Transformer model.

For the resnext101_32x8d model, adding hair to the images caused a substantial drop in accuracy, from 98.5% on the original images to 87.9% on the hairy images. This decline highlights the model's sensitivity to visual perturbations. Applying DM–AHR hair removal effectively mitigated this impact, raising the accuracy to 97.3%. Precision and recall followed the same trend, suggesting that DM–AHR preprocessing can largely restore the model's discriminatory power.

Similarly, the maxvit_t model, which had a high original accuracy of 98.9%, also declined in performance after hair was added, though to a lesser extent than resnext101_32x8d (95.1% accuracy). After DM–AHR was applied, the model not only recovered but slightly exceeded its original performance, reaching an accuracy of 99.3%. This result suggests that DM–AHR may not only compensate for the added hair but also improve the model's overall interpretive accuracy, a notable property that is worth investigating in future research.

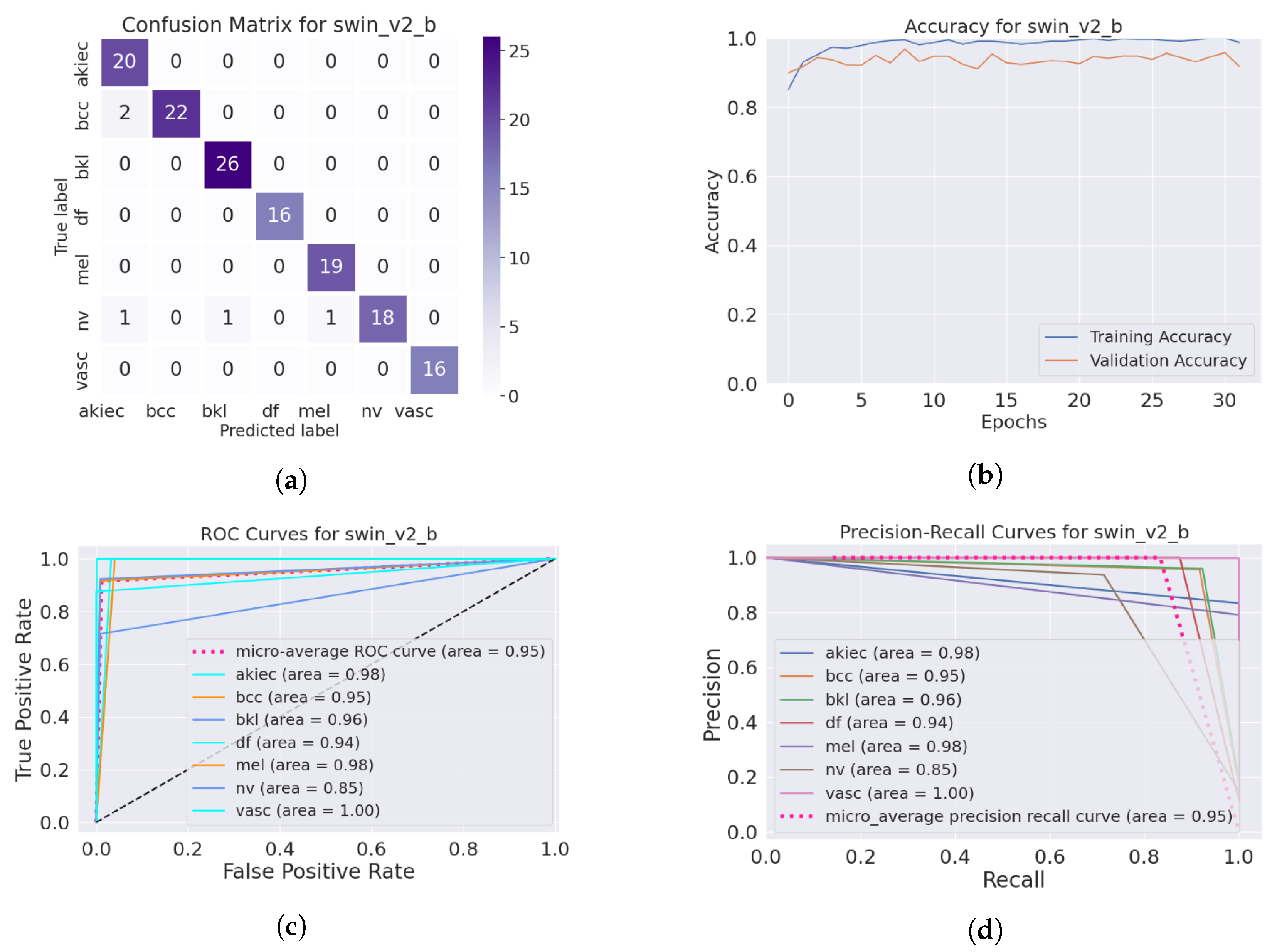

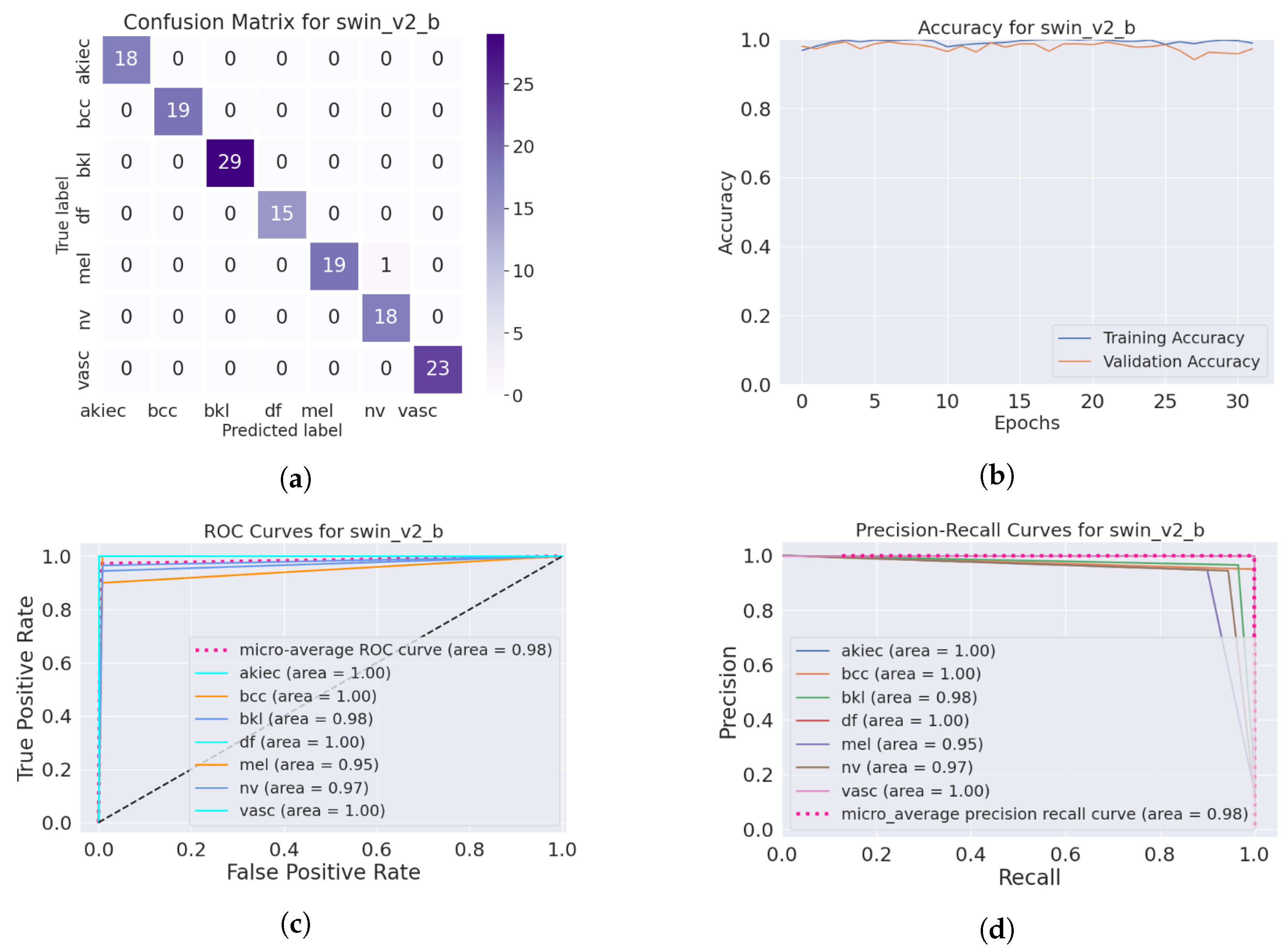

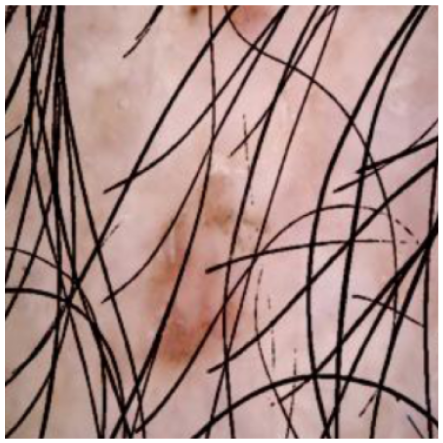

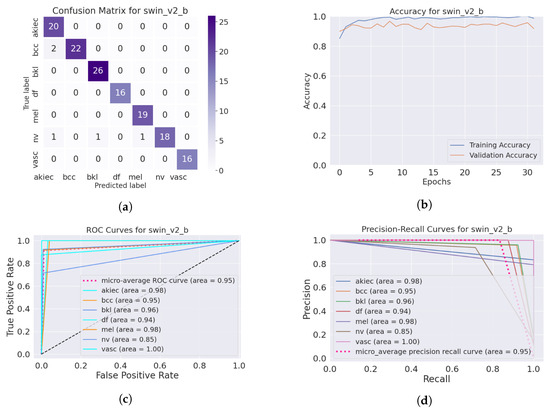

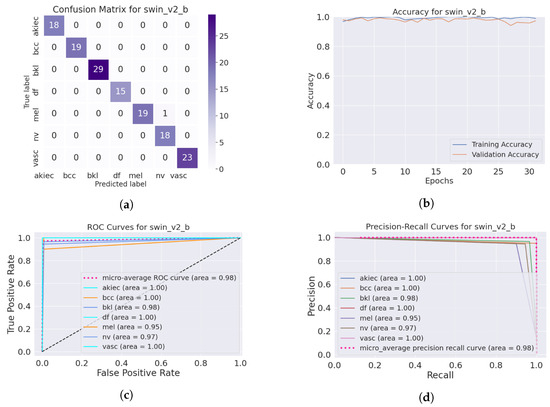

The Swin Transformer model showed the same pattern: its performance decreased when hair was added and then recovered markedly after DM–AHR processing. Its accuracy fell from 98.3% on the original images to 96.8% on the hairy images and then rose to 99.3% after DM–AHR, paralleling the trends seen in the other models. This consistency across different architectures suggests that DM–AHR preprocessing is broadly applicable and effective at improving model robustness. Figure 4 and Figure 5 illustrate the performance of skin classification using the Swin Transformer before and after the DM–AHR enhancement step. The confusion matrices, accuracy curves, ROC curves, and precision–recall curves all show a clear improvement in skin analysis after DM–AHR preprocessing.
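
One way to compare the three classifiers on a common scale is to ask what fraction of the hair-induced accuracy drop DM–AHR recovers. The short calculation below uses only the accuracies reported in the text; the "recovery ratio" itself is an illustrative summary introduced here, not a metric used in the study.

```python
# Accuracies (%) reported in the text: (original, hairy, dehaired).
accuracies = {
    "resnext101_32x8d": (98.5, 87.9, 97.3),
    "maxvit_t": (98.9, 95.1, 99.3),
    "Swin Transformer": (98.3, 96.8, 99.3),
}


def recovery_ratio(original, hairy, dehaired):
    """Fraction of the hair-induced accuracy drop regained after dehairing.

    1.0 means full recovery; values above 1.0 mean the dehaired accuracy
    exceeds the accuracy on the original images.
    """
    drop = original - hairy
    gain = dehaired - hairy
    return gain / drop


for name, (orig, hairy, dehaired) in accuracies.items():
    print(f"{name}: drop {orig - hairy:.1f} pts, "
          f"recovered {recovery_ratio(orig, hairy, dehaired):.0%} of it")
```

This gives roughly 89% for resnext101_32x8d, 111% for maxvit_t, and 167% for the Swin Transformer. Because the drops for maxvit_t and the Swin Transformer are small (3.8 and 1.5 points), their ratios are sensitive to differences of a few tenths of a percentage point.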

Figure 4.

Performance of the skin classification using Swin Transformer before using DM–AHR (hairy occluded images): (a) Confusion matrix, (b) accuracy curve during training, (c) ROC curve, and (d) precision–recall curve.

Figure 5.

Performance of the skin classification using Swin Transformer after using DM–AHR (dehaired images): (a) Confusion matrix, (b) accuracy curve during training, (c) ROC curve, and (d) precision–recall curve.
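
The ROC curves in Figures 4 and 5 summarize how well the classifier ranks each class against the others. As a minimal sketch, the area under a one-vs-rest ROC curve can be computed as the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counting one half (the Mann–Whitney formulation). Whether the figures show per-class, micro-averaged, or macro-averaged curves is not stated here, and the helper names are hypothetical.

```python
import numpy as np


def binary_auc(scores, labels):
    """ROC AUC as P(random positive outranks random negative); ties count 0.5."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one positive and one negative sample")
    diff = pos[:, None] - neg[None, :]  # all positive/negative pairs
    return float((diff > 0).mean() + 0.5 * (diff == 0).mean())


def macro_ovr_auc(probs, y_true):
    """Macro-averaged one-vs-rest AUC for an NxK matrix of class probabilities."""
    probs = np.asarray(probs, dtype=float)
    y_true = np.asarray(y_true)
    aucs = [binary_auc(probs[:, k], y_true == k) for k in range(probs.shape[1])]
    return float(np.mean(aucs)), aucs
```

The pairwise comparison is quadratic in the number of samples, which is acceptable for a test set of modest size; sorting-based implementations scale better.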

Based on this comparative study, each of the three classification models appears suited to different applications. The resnext101_32x8d model performs well on clean, structured data, where it achieves high precision and accuracy, but it is the most sensitive of the three to hair occlusion. The maxvit_t model, which demonstrates strong robustness to occlusions, is recommended for more challenging clinical settings where data variability and robustness are critical. The Swin Transformer, with its consistent performance across conditions, is a versatile option for diverse medical imaging tasks. When robust preprocessing is applied, both maxvit_t and the Swin Transformer show substantial improvements, making them suitable choices. In environments with diverse and unpredictable image quality, combining these models, for example through ensemble techniques, could leverage the individual strengths of each. Table 9 reports the training time and model size of each model.

Table 9.

Model size and training time for different models.
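
The comparative discussion above suggests that combining the three classifiers could help when image quality varies. One simple option, assumed here and not evaluated in this study, is soft voting: averaging the class-probability outputs of the models, optionally with weights, and predicting the class with the highest mean probability. `soft_vote` is a hypothetical helper.

```python
import numpy as np


def soft_vote(prob_list, weights=None):
    """Weighted average of class-probability matrices (each N x K) from M models.

    Returns the averaged N x K probabilities and the predicted class per sample.
    """
    probs = np.stack([np.asarray(p, dtype=float) for p in prob_list])  # M x N x K
    if weights is None:
        weights = np.ones(len(prob_list))
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()  # normalize so averages stay probabilities
    avg = np.tensordot(weights, probs, axes=1)  # contract over models -> N x K
    return avg, avg.argmax(axis=1)
```

Weights could, for instance, favor the model that is most robust under the expected image conditions; choosing them on a held-out validation set would avoid overfitting to the test data.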

In deployment, the primary function of the DM–AHR model is to preprocess dermoscopic images by removing hair before the type of skin cancer is classified. Although the model is highly accurate, it occasionally misidentifies image content, albeit at a very low rate; these rare errors typically involve confusing hair artifacts with certain types of skin lesions. In a clinical setting, however, the final diagnosis is confirmed by expert dermatologists. DM–AHR supports this process by improving image clarity, which makes skin anomalies easier and faster to detect. This can help dermatologists make better-informed decisions and shorten the diagnostic process, potentially allowing appropriate treatment to begin sooner and improving patient outcomes. Integrated into clinical workflows, DM–AHR could therefore serve not only as a technological enhancement but also as a practical aid in the fight against skin cancer, combining precision with faster diagnostic review.

Taken together, these findings highlight the critical role of preprocessing in medical image analysis. The DM–AHR model introduced here is notably effective at mitigating a common form of medical image degradation, which underscores its potential as a preprocessing tool not only for dermatological assessment but also for other medical imaging applications. However, the computational demands of the DM–AHR model are considerable. Specifically, processing an image of size pixels through 2000 steps requires approximately 2 min on a single NVIDIA RTX 8000 GPU with 48 GB of VRAM. This substantial resource requirement is justified by the model's ability to remove hair from dermoscopic images effectively, a crucial step in improving the visibility and detectability of skin cancer lesions. Because skin cancer is potentially lethal, diagnostic imaging must be as accurate as possible.

The DM–AHR model, therefore, presents a trade-off between computational expense and clinical value. The increased processing time and resource use are offset by the model’s ability to deliver clearer, more interpretable images that are essential for accurate diagnosis and subsequent treatment planning. This balance underscores the model’s practical relevance, particularly in settings where diagnostic precision is paramount.
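
The cost figure follows from the structure of diffusion sampling: every reverse step requires one forward pass of the denoising network, so runtime grows linearly with the number of steps, and about 2 min for 2000 steps corresponds to roughly 120 s / 2000 = 60 ms per step. The sketch below is a generic conditional DDPM ancestral sampler in the style of Ho et al. [11] and SR3 [29], not the authors' DM–AHR code; the linear noise schedule, the toy image size, the `CountingDenoiser` stand-in, and all function names are assumptions for illustration.

```python
import numpy as np


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule as in Ho et al. (2020); assumed, not DM-AHR's."""
    return np.linspace(beta_start, beta_end, num_steps)


def sample_conditional_ddpm(denoiser, condition, num_steps, rng):
    """Ancestral DDPM sampling conditioned on an input (e.g., hairy) image.

    denoiser(x_t, condition, t) must return the predicted noise eps_hat;
    each reverse step costs exactly one denoiser call.
    """
    betas = linear_beta_schedule(num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(condition.shape)  # x_T ~ N(0, I)
    for t in reversed(range(num_steps)):
        eps_hat = denoiser(x, condition, t)
        # Mean of p(x_{t-1} | x_t) under the epsilon parameterization.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(x.shape)
        else:
            x = mean  # no noise is added at the final step
    return x


class CountingDenoiser:
    """Hypothetical stand-in for the trained network; predicts zero noise."""

    def __init__(self):
        self.calls = 0

    def __call__(self, x, condition, t):
        self.calls += 1
        return np.zeros_like(x)


rng = np.random.default_rng(0)
hairy = rng.uniform(-1.0, 1.0, size=(3, 8, 8))  # toy "image" scaled to [-1, 1]
net = CountingDenoiser()
out = sample_conditional_ddpm(net, hairy, num_steps=2000, rng=rng)
print(net.calls, out.shape)  # prints: 2000 (3, 8, 8)
```

Since each step is one network evaluation, reducing the step count (for example, with faster samplers) is the most direct lever on the processing cost discussed above.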

5. Conclusions

In conclusion, the DM–AHR model is a significant contribution to dermatological image processing. With its self-supervised learning approach, the model has demonstrated remarkable proficiency in enhancing skin lesion images, thereby facilitating more accurate and efficient skin diagnostics. As skin cancer rates increase globally, tools like DM–AHR can play a major role in early detection and treatment, possibly saving lives. By effectively removing hair from dermoscopic images, the model addresses a significant challenge in the field and paves the way for clearer and more reliable lesion analysis. However, its high processing cost remains its main limitation and should be addressed in future work.

Future research should focus on integrating the DM–AHR model into clinical workflows to harness its diagnostic capabilities in real-world settings, and on extending it to other medical imaging modalities such as ultrasound, CT, and MRI. Adapting diffusion-based models to other imaging artifacts could lead to significant advances in medical image analysis by improving diagnostic accuracy, expediting disease detection, and enhancing patient care. Such work could yield comprehensive preprocessing tools that support a wide range of diagnostic procedures and ultimately improve patient outcomes through earlier detection and treatment.

Author Contributions

Conceptualization, B.B. and A.M.A.; formal analysis, A.M.A. and B.B.; investigation, A.M.A. and B.B.; methodology, A.M.A. and B.B.; project administration, B.B. and A.K.; software, A.M.A.; supervision, B.B. and A.K.; validation, A.M.A.; visualization, A.M.A.; writing—original draft, A.M.A. and B.B.; writing—review and editing, B.B., A.K., W.B. and A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Prince Sultan University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available at https://www.kaggle.com/datasets/riotulab/skin-cancer-hair-removal (accessed on 7 August 2024).

Acknowledgments

The authors thank Prince Sultan University for the support and funding in conducting this research.

Conflicts of Interest

The authors declare no conflicts of interest associated with this publication.

References

1. World Health Organization. Ultraviolet (UV) Radiation and Skin Cancer. 2023. Available online: https://www.who.int/news-room/questions-and-answers/item/radiation-ultraviolet-(uv)-radiation-and-skin-cancer (accessed on 25 December 2023).

2. Mahmood, T.; Saba, T.; Rehman, A.; Alamri, F.S. Harnessing the power of radiomics and deep learning for improved breast cancer diagnosis with multiparametric breast mammography. Expert Syst. Appl. 2024, 249, 123747.

3. Omar Bappi, J.; Rony, M.A.T.; Shariful Islam, M.; Alshathri, S.; El-Shafai, W. A Novel Deep Learning Approach for Accurate Cancer Type and Subtype Identification. IEEE Access 2024, 12, 94116–94134.

4. Abu Tareq Rony, M.; Shariful Islam, M.; Sultan, T.; Alshathri, S.; El-Shafai, W. MediGPT: Exploring Potentials of Conventional and Large Language Models on Medical Data. IEEE Access 2024, 12, 103473–103487.

5. Soleimani, M.; Harooni, A.; Erfani, N.; Khan, A.R.; Saba, T.; Bahaj, S.A. Classification of cancer types based on microRNA expression using a hybrid radial basis function and particle swarm optimization algorithm. Microsc. Res. Tech. 2024, 87, 1052–1062.

6. Emara, H.M.; El-Shafai, W.; Algarni, A.D.; Soliman, N.F.; El-Samie, F.E.A. A Hybrid Compressive Sensing and Classification Approach for Dynamic Storage Management of Vital Biomedical Signals. IEEE Access 2023, 11, 108126–108151.

7. Vestergaard, M.; Macaskill, P.; Holt, P.; Menzies, S. Dermoscopy compared with naked eye examination for the diagnosis of primary melanoma: A meta-analysis of studies performed in a clinical setting. Br. J. Dermatol. 2008, 159, 669–676.

8. Hammad, M.; Pławiak, P.; ElAffendi, M.; El-Latif, A.A.A.; Latif, A.A.A. Enhanced deep learning approach for accurate eczema and psoriasis skin detection. Sensors 2023, 23, 7295.

9. Alyami, J.; Rehman, A.; Sadad, T.; Alruwaythi, M.; Saba, T.; Bahaj, S.A. Automatic skin lesions detection from images through microscopic hybrid features set and machine learning classifiers. Microsc. Res. Tech. 2022, 85, 3600–3607.

10. Nawaz, M.; Mehmood, Z.; Nazir, T.; Naqvi, R.A.; Rehman, A.; Iqbal, M.; Saba, T. Skin cancer detection from dermoscopic images using deep learning and fuzzy k-means clustering. Microsc. Res. Tech. 2022, 85, 339–351.

11. Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

12. Sohl-Dickstein, J.; Weiss, E.; Maheswaranathan, N.; Ganguli, S. Deep unsupervised learning using nonequilibrium thermodynamics. In Proceedings of the International Conference on Machine Learning, Lille, France, 7–9 July 2015; pp. 2256–2265.

13. Song, Y.; Sohl-Dickstein, J.; Kingma, D.P.; Kumar, A.; Ermon, S.; Poole, B. Score-based generative modeling through stochastic differential equations. arXiv 2020, arXiv:2011.13456.

14. Farooq, M.A.; Yao, W.; Schukat, M.; Little, M.A.; Corcoran, P. Derm-T2IM: Harnessing synthetic skin lesion data via stable diffusion models for enhanced skin disease classification using ViT and CNN. arXiv 2024, arXiv:2401.05159.

15. Abuzaghleh, O.; Barkana, B.D.; Faezipour, M. Automated skin lesion analysis based on color and shape geometry feature set for melanoma early detection and prevention. In Proceedings of the IEEE Long Island Systems, Applications and Technology (LISAT) Conference 2014, Farmingdale, NY, USA, 2 May 2014; pp. 1–6.

16. Maglogiannis, I.; Doukas, C.N. Overview of advanced computer vision systems for skin lesions characterization. IEEE Trans. Inf. Technol. Biomed. 2009, 13, 721–733.

17. Jing, W.; Wang, S.; Zhang, W.; Li, C. Reconstruction of Neural Radiance Fields With Vivid Scenes in the Metaverse. IEEE Trans. Consum. Electron. 2023, 70, 3222–3231.

18. Bao, Q.; Liu, Y.; Gang, B.; Yang, W.; Liao, Q. S2Net: Shadow Mask-Based Semantic-Aware Network for Single-Image Shadow Removal. IEEE Trans. Consum. Electron. 2022, 68, 209–220.

19. Ji, Z.; Zheng, H.; Zhang, Z.; Ye, Q.; Zhao, Y.; Xu, M. Multi-Scale Interaction Network for Low-Light Stereo Image Enhancement. IEEE Trans. Consum. Electron. 2023, 70, 3626–3634.

20. Anand, V.; Gupta, S.; Nayak, S.R.; Koundal, D.; Prakash, D.; Verma, K. An automated deep learning models for classification of skin disease using Dermoscopy images: A comprehensive study. Multimed. Tools Appl. 2022, 81, 37379–37401.

21. Li, W.; Raj, A.N.J.; Tjahjadi, T.; Zhuang, Z. Digital hair removal by deep learning for skin lesion segmentation. Pattern Recognit. 2021, 117, 107994.

22. Guo, Z.; Chen, J.; He, T.; Wang, W.; Abbas, H.; Lv, Z. DS-CNN: Dual-Stream Convolutional Neural Networks-Based Heart Sound Classification for Wearable Devices. IEEE Trans. Consum. Electron. 2023, 69, 1186–1194.

23. Kim, D.; Hong, B.W. Unsupervised feature elimination via generative adversarial networks: Application to hair removal in melanoma classification. IEEE Access 2021, 9, 42610–42620.

24. Dong, L.; Zhou, Y.; Jiang, J. Dual-Clustered Conditioning towards GAN-based Diverse Image Generation. IEEE Trans. Consum. Electron. 2024, 70, 2817–2825.

25. He, Y.; Jin, X.; Jiang, Q.; Cheng, Z.; Wang, P.; Zhou, W. LKAT-GAN: A GAN for Thermal Infrared Image Colorization Based on Large Kernel and AttentionUNet-Transformer. IEEE Trans. Consum. Electron. 2023, 69, 478–489.

26. Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

27. Delibasis, K.; Moutselos, K.; Vorgiazidou, E.; Maglogiannis, I. Automated hair removal in dermoscopy images using shallow and deep learning neural architectures. Comput. Methods Programs Biomed. Update 2023, 4, 100109.

28. Abbas, Q.; Celebi, M.E.; García, I.F. Hair removal methods: A comparative study for dermoscopy images. Biomed. Signal Process. Control 2011, 6, 395–404.

29. Saharia, C.; Ho, J.; Chan, W.; Salimans, T.; Fleet, D.J.; Norouzi, M. Image super-resolution via iterative refinement. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 4713–4726.

30. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015, Proceedings, Part III 18; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

31. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

32. Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 2018, 5, 180161.

33. Digital Hair Dataset. Available online: https://www.kaggle.com/datasets/weilizai/digital-hair-dataset (accessed on 17 December 2023).

34. Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595.

35. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

36. Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image restoration using Swin transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 1833–1844.

37. Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

38. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

39. Tu, Z.; Talebi, H.; Zhang, H.; Yang, F.; Milanfar, P.; Bovik, A.; Li, Y. MaxViT: Multi-axis vision transformer. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2022; pp. 459–479.

40. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).