Artificial Intelligence in Ultrasound Diagnoses of Ovarian Cancer: A Systematic Review and Meta-Analysis

Abstract

Simple Summary


1. Introduction and Background

2. Review

2.1. Methods

2.2. Inclusion and Exclusion Criteria

2.3. Initial Screening

2.4. Statistical Analysis

3. Results

3.1. PRISMA Flow Chart

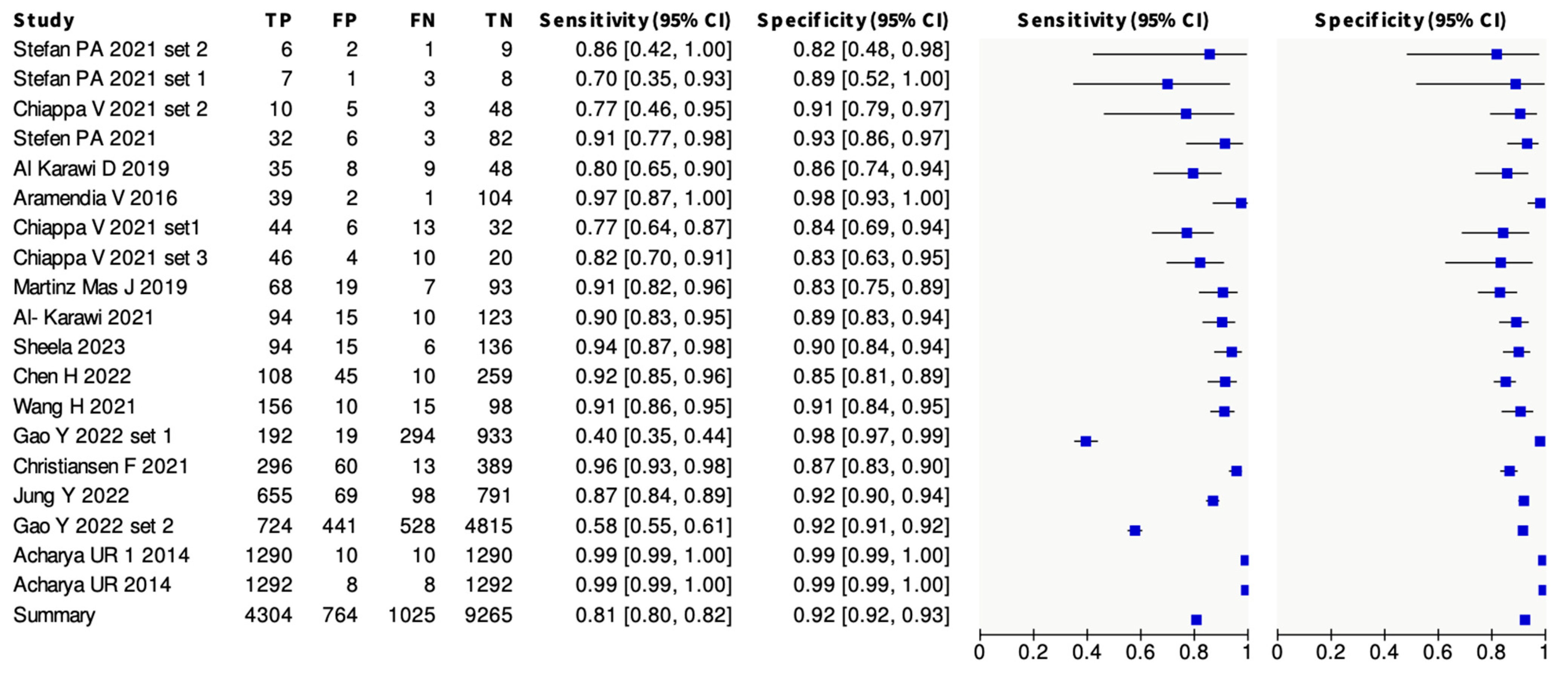

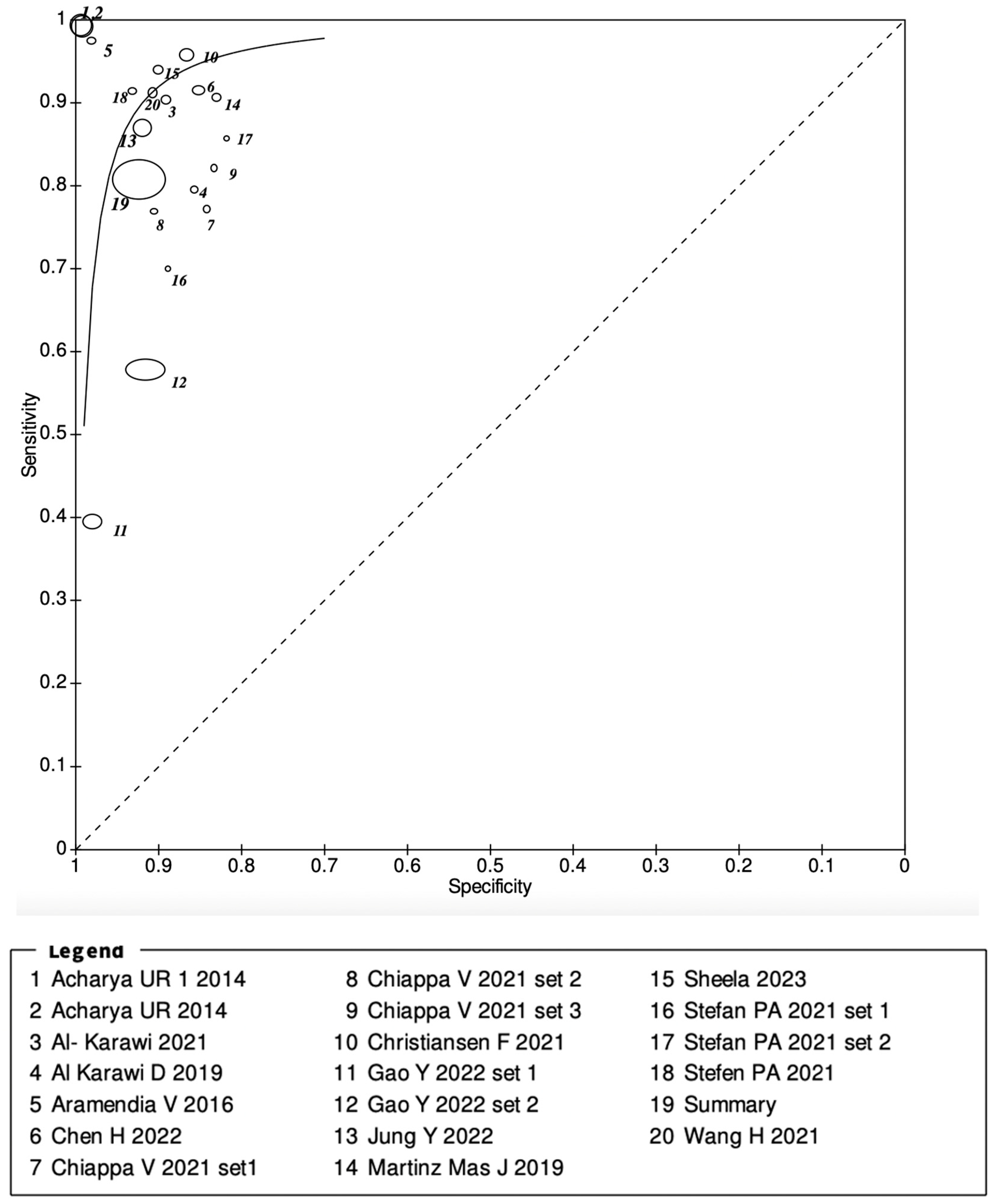

3.2. Meta-Analysis Results

4. Discussion

5. Future Directions

6. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Sayasneh, A.; Ekechi, C.; Ferrara, L.; Kaijser, J.; Stalder, C.; Sur, S.; Timmerman, D.; Bourne, T. The characteristic ultrasound features of specific types of ovarian pathology (Review). Int. J. Oncol. 2015, 46, 445–458.

2. Cancer Research UK. Ovarian Cancer Statistics. Available online: https://www.cancerresearchuk.org/health-professional/cancer-statistics/statistics-by-cancer-type/ovarian-cancer#heading-Zero (accessed on 6 May 2022).

3. Reid, B.M.; Permuth, J.B.; Sellers, T.A. Epidemiology of ovarian cancer: A review. Cancer Biol. Med. 2017, 14, 9.

4. Koshiyama, M.; Matsumura, N.; Konishi, I. Subtypes of Ovarian Cancer and Ovarian Cancer Screening. Diagnostics 2017, 7, 12.

5. Nash, Z.; Menon, U. Ovarian cancer screening: Current status and future directions. Best Pract. Res. Clin. Obstet. Gynaecol. 2020, 65, 32–45.

6. RCOG. Ovarian Masses in Premenopausal Women, Management of Suspected (Green-Top Guideline No. 62). Available online: https://www.rcog.org.uk/guidance/browse-all-guidance/green-top-guidelines/ovarian-masses-in-premenopausal-women-management-of-suspected-green-top-guideline-no-62/ (accessed on 25 July 2023).

7. Asp, M.; Peber, E.; Kannisto, P.; Måsbäck, A.; Malander, S. Ovarian tumor frozen section, a multidisciplinary affair. Acta Oncol. 2022, 61, 785–792.

8. Gaughran, J.; O’Sullivan, H.R.; Lyne, T.; Abdelbar, A.; Abdalla, M.; Sayasneh, A. Fertility Preserving Surgery Outcomes for Ovarian Malignancy: Data from a Tertiary Cancer Centre in Central London. J. Clin. Med. 2022, 11, 3195.

9. Valentin, L.; Ameye, L.; Franchi, D.; Guerriero, S.; Jurkovic, D.; Savelli, L.; Fischerova, D.; Lissoni, A.; Van Holsbeke, C.; Fruscio, R.; et al. Risk of malignancy in unilocular cysts: A study of 1148 adnexal masses classified as unilocular cysts at transvaginal ultrasound and review of the literature. Ultrasound Obstet. Gynecol. 2013, 41, 80–89.

10. Forstner, R.; Thomassin-Naggara, I.; Cunha, T.M.; Kinkel, K.; Masselli, G.; Kubik-Huch, R.; Spencer, J.A.; Rockall, A. ESUR recommendations for MR imaging of the sonographically indeterminate adnexal mass: An update. Eur. Radiol. 2017, 27, 2248–2257.

11. Vernooij, F.; Heintz, P.; Witteveen, E.; van der Graaf, Y. The outcomes of ovarian cancer treatment are better when provided by gynecologic oncologists and in specialized hospitals: A systematic review. Gynecol. Oncol. 2007, 105, 801–812.

12. Meys, E.M.J.; Kaijser, J.; Kruitwagen, R.F.P.M.; Slangen, B.F.M.; Van Calster, B.; Aertgeerts, B.; Verbakel, J.Y.; Timmerman, D.; Van Gorp, T. Subjective assessment versus ultrasound models to diagnose ovarian cancer: A systematic review and meta-analysis. Eur. J. Cancer 2016, 58, 17–29.

13. Fischerova, D.; Burgetova, A. Imaging techniques for the evaluation of ovarian cancer. Best Pract. Res. Clin. Obstet. Gynaecol. 2014, 28, 697–720.

14. Coccia, M.E.; Rizzello, F.; Romanelli, C.; Capezzuoli, T. Adnexal masses: What is the role of ultrasonographic imaging? Arch. Gynecol. Obstet. 2014, 290, 843–854.

15. Piovano, E.; Cavallero, C.; Fuso, L.; Viora, E.; Ferrero, A.; Gregori, G.; Grillo, C.; Macchi, C.; Mengozzi, G.; Mitidieri, M.; et al. Diagnostic accuracy and cost-effectiveness of different strategies to triage women with adnexal masses: A prospective study. Ultrasound Obstet. Gynecol. 2017, 50, 395–403.

16. Jacobs, I.; Oram, D.; Fairbanks, J.; Turner, J.; Frost, C.; Grudzinskas, J.G. A risk of malignancy index incorporating CA 125, ultrasound and menopausal status for the accurate preoperative diagnosis of ovarian cancer. BJOG Int. J. Obstet. Gynaecol. 1990, 97, 922–929.

17. Phillips, C.H.; Guo, Y.; Strachowski, L.M.; Jha, P.; Reinhold, C.; Andreotti, R.F. The Ovarian/Adnexal Reporting and Data System for Ultrasound: From Standardized Terminology to Optimal Risk Assessment and Management. Can. Assoc. Radiol. J. 2023, 74, 44–57.

18. Timmerman, D.; Bourne, T.H.; Tailor, A.; Collins, W.P.; Verrelst, H.; Vandenberghe, K.; Vergote, I. A comparison of methods for preoperative discrimination between malignant and benign adnexal masses: The development of a new logistic regression model. Am. J. Obstet. Gynecol. 1999, 181, 57–65.

19. Timmerman, D.; Testa, A.C.; Bourne, T.; Ferrazzi, E.; Ameye, L.; Konstantinovic, M.L.; Van Calster, B.; Collins, W.P.; Vergote, I.; Van Huffel, S.; et al. Logistic Regression Model to Distinguish Between the Benign and Malignant Adnexal Mass Before Surgery: A Multicenter Study by the International Ovarian Tumor Analysis Group. J. Clin. Oncol. 2005, 23, 8794–8801.

20. Al-Karawi, D.; Al-Assam, H.; Du, H.; Sayasneh, A.; Landolfo, C.; Timmerman, D.; Bourne, T.; Jassim, S. An Evaluation of the Effectiveness of Image-based Texture Features Extracted from Static B-mode Ultrasound Images in Distinguishing between Benign and Malignant Ovarian Masses. Ultrason. Imaging 2021, 43, 124–138.

21. Arezzo, F.; Loizzi, V.; La Forgia, D.; Moschetta, M.; Tagliafico, A.S.; Cataldo, V.; Kawosha, A.A.; Venerito, V.; Cazzato, G.; Ingravallo, G.; et al. Radiomics Analysis in Ovarian Cancer: A Narrative Review. Appl. Sci. 2021, 11, 7833.

22. Acharya, U.R.; Sree, S.V.; Kulshreshtha, S.; Molinari, F.; Koh, J.E.W.; Saba, L.; Suri, J.S. GyneScan: An improved online paradigm for screening of ovarian cancer via tissue characterization. Technol. Cancer Res. Treat. 2014, 13, 529–540.

23. Aramendía-Vidaurreta, V.; Cabeza, R.; Villanueva, A.; Navallas, J.; Alcázar, J.L. Ultrasound Image Discrimination between Benign and Malignant Adnexal Masses Based on a Neural Network Approach. Ultrasound Med. Biol. 2016, 42, 742–752.

24. Chiappa, V.; Bogani, G.; Interlenghi, M.; Salvatore, C.; Bertolina, F.; Sarpietro, G.; Signorelli, M.; Castiglioni, I.; Raspagliesi, F. The Adoption of Radiomics and machine learning improves the diagnostic processes of women with Ovarian MAsses (the AROMA pilot study). J. Ultrasound 2021, 24, 429–437.

25. Wang, H.; Liu, C.; Zhao, Z.; Zhang, C.; Wang, X.; Li, H.; Wu, H.; Liu, X.; Li, C.; Qi, L.; et al. Application of Deep Convolutional Neural Networks for Discriminating Benign, Borderline, and Malignant Serous Ovarian Tumors From Ultrasound Images. Front. Oncol. 2021, 11, 770683.

26. Cochrane Training. RevMan. Available online: https://training.cochrane.org/online-learning/core-software/revman (accessed on 15 August 2023).

27. Ștefan, P.-A.; Lupean, R.-A.; Mihu, C.M.; Lebovici, A.; Oancea, M.D.; Hîțu, L.; Duma, D.; Csutak, C. Ultrasonography in the Diagnosis of Adnexal Lesions: The Role of Texture Analysis. Diagnostics 2021, 11, 812.

28. Al-Karawi, D.; Landolfo, C.; Du, H.; Al-Assam, H.; Sayasneh, A.; Timmerman, D.; Bourne, T.; Jassim, S. Prospective clinical evaluation of texture-based features analysis of ultrasound ovarian scans for distinguishing benign and malignant adnexal tumors. Australas. J. Ultrasound Med. 2019, 22, 144.

29. Martínez-Más, J.; Bueno-Crespo, A.; Khazendar, S.; Remezal-Solano, M.; Martínez-Cendán, J.-P.; Jassim, S.; Du, H.; Al Assam, H.; Bourne, T.; Timmerman, D. Evaluation of machine learning methods with Fourier Transform features for classifying ovarian tumors based on ultrasound images. PLoS ONE 2019, 14, e0219388.

30. Sheela, S.; Sumathi, M. An Evaluation of Effectiveness of a Texture Feature Based Computerized Diagnostic Model in Classifying the Ovarian Cyst as Benign and Malignant from Static 2D B-Mode Ultrasound Images. Curr. Med. Imaging 2022, 19, 292–305.

31. Chen, H.; Yang, B.-W.; Qian, L.; Meng, Y.-S.; Bai, X.-H.; Hong, X.-W.; He, X.; Jiang, M.-J.; Yuan, F.; Du, Q.-W.; et al. Deep Learning Prediction of Ovarian Malignancy at US Compared with O-RADS and Expert Assessment. Radiology 2022, 304, 106–113.

32. Gao, Y.; Zeng, S.; Xu, X.; Li, H.; Yao, S.; Song, K.; Li, X.; Chen, L.; Tang, J.; Xing, H.; et al. Deep learning-enabled pelvic ultrasound images for accurate diagnosis of ovarian cancer in China: A retrospective, multicentre, diagnostic study. Lancet Digit. Health 2022, 4, e179–e187.

33. Christiansen, F.; Epstein, E.L.; Smedberg, E.; Åkerlund, M.; Smith, K. Ultrasound image analysis using deep neural networks for discriminating between benign and malignant ovarian tumors: Comparison with expert subjective assessment. Ultrasound Obstet. Gynecol. 2021, 57, 155–163.

34. Jung, Y.; Kim, T.; Han, M.-R.; Kim, S.; Kim, G.; Lee, S.; Choi, Y.J. Ovarian tumor diagnosis using deep convolutional neural networks and a denoising convolutional autoencoder. Sci. Rep. 2022, 12, 17024.

35. Acharya, U.R.; Mookiah, M.R.K.; Sree, S.V.; Yanti, R.; Martis, R.J.; Saba, L.; Molinari, F.; Guerriero, S.; Suri, J.S. Evolutionary algorithm-based classifier parameter tuning for automatic ovarian cancer tissue characterization and classification. Ultraschall Med.-Eur. J. Ultrasound 2014, 35, 237–245.

36. Gupta, K.K.; Gupta, V.K.; Naumann, R.W. Ovarian cancer: Screening and future directions. Int. J. Gynecol. Cancer 2019, 29, 195–200.

37. Kim, Y.H. Artificial intelligence in medical ultrasonography: Driving on an unpaved road. Ultrasonography 2021, 40, 313–317.

38. Xu, H.-L.; Gong, T.-T.; Liu, F.-H.; Chen, H.-Y.; Xiao, Q.; Hou, Y.; Huang, Y.; Sun, H.-Z.; Shi, Y.; Gao, S.; et al. Artificial intelligence performance in image-based ovarian cancer identification: A systematic review and meta-analysis. EClinicalMedicine 2022, 53, 101662.

39. Zhang, L.; Huang, J.; Liu, L. RETRACTED ARTICLE: Improved Deep Learning Network Based in combination with Cost-sensitive Learning for Early Detection of Ovarian Cancer in Color Ultrasound Detecting System. J. Med. Syst. 2019, 43, 251.

40. Mendelson, E.B.; Bohm-Velez, M.; Joseph, N.; Neiman, H.L. Gynecologic imaging: Comparison of transabdominal and transvaginal sonography. Radiology 1988, 166, 321–324.

41. Tessler, F.N.; Schiller, V.L.; Perrella, R.R.; Sutherland, M.L.; Grant, E.G. Transabdominal versus endovaginal pelvic sonography: Prospective study. Radiology 1989, 170, 553–556.

42. Andolf, E.; Jörgensen, C. A prospective comparison of transabdominal and transvaginal ultrasound with surgical findings in gynecologic disease. J. Ultrasound Med. 1990, 9, 71–75.

43. Timmerman, D.; Ameye, L.; Fischerova, D.; Epstein, E.; Melis, G.B.; Guerriero, S.; Van Holsbeke, C.; Savelli, L.; Fruscio, R.; Lissoni, A.A.; et al. Simple ultrasound rules to distinguish between benign and malignant adnexal masses before surgery: Prospective validation by IOTA group. BMJ 2010, 341, c6839.

44. Valentin, L.; Hagen, B.; Tingulstad, S.; Eik-Nes, S. Comparison of ‘pattern recognition’ and logistic regression models for discrimination between benign and malignant pelvic masses: A prospective cross validation. Ultrasound Obstet. Gynecol. 2001, 18, 357–365.

45. Sayasneh, A.; Ferrara, L.; De Cock, B.; Saso, S.; Al-Memar, M.; Johnson, S.; Kaijser, J.; Carvalho, J.; Husicka, R.; Smith, A.; et al. Evaluating the risk of ovarian cancer before surgery using the ADNEX model: A multicentre external validation study. Br. J. Cancer 2016, 115, 542–548.

46. Yazbek, J.; Ameye, L.; Timmerman, D.; Testa, A.C.; Valentin, L.; Holland, T.K.; Van Holsbeke, C.; Jurkovic, D. Use of ultrasound pattern recognition by expert operators to identify borderline ovarian tumors: A study of diagnostic performance and interobserver agreement. Ultrasound Obstet. Gynecol. 2010, 35, 84–88.

47. Yazbek, J.; Raju, K.S.; Ben-Nagi, J.; Holland, T.; Hillaby, K.; Jurkovic, D. Accuracy of ultrasound subjective ‘pattern recognition’ for the diagnosis of borderline ovarian tumors. Ultrasound Obstet. Gynecol. 2007, 29, 489–495.

48. Coburn, S.B.; Bray, F.; Sherman, M.E.; Trabert, B. International patterns and trends in ovarian cancer incidence, overall and by histologic subtype. Int. J. Cancer 2017, 140, 2451–2460.

49. Fischerova, D.; Zikan, M.; Semeradova, I.; Slama, J.; Kocian, R.; Dundr, P.; Nemejcova, K.; Burgetova, A.; Dusek, L.; Cibula, D. Ultrasound in preoperative assessment of pelvic and abdominal spread in patients with ovarian cancer: A prospective study. Ultrasound Obstet. Gynecol. 2017, 49, 263–274.

50. Cathcart, A.M.; Nezhat, F.R.; Emerson, J.; Pejovic, T.; Nezhat, C.H.; Nezhat, C.R. Adnexal masses during pregnancy: Diagnosis, treatment, and prognosis. Am. J. Obstet. Gynecol. 2023, 228, 601–612.

| Study | Design | Type of Ultrasound |
|---|---|---|
| Ștefan et al., 2021 [27] | Retrospective | Transvaginal |
| Chiappa et al., 2021 [24] | Retrospective | Transvaginal |
| Al-Karawi et al., 2019 [28] | Prospective | Transabdominal and transvaginal |
| Aramendía-Vidaurreta et al., 2016 [23] | Prospective | Transvaginal |
| Martínez-Más et al., 2019 [29] | Retrospective | Transabdominal and transvaginal |
| Al-Karawi et al., 2021 [20] | Retrospective | Transabdominal and transvaginal |
| Sheela et al., 2022 [30] | Retrospective | Transvaginal |
| Chen et al., 2022 [31] | Retrospective | Transabdominal and transvaginal |
| Wang et al., 2021 [25] | Retrospective | Transabdominal |
| Gao et al., 2022 [32] | Retrospective | Transvaginal |
| Christiansen et al., 2021 [33] | Retrospective | Transvaginal |
| Jung et al., 2022 [34] | Retrospective | Transabdominal and transvaginal |
| Acharya et al., 2014 [22] | Retrospective | Transvaginal |
| Acharya et al., 2014 [35] | Retrospective | Transvaginal |

| Study | AI Model | Type of Learning (Machine or Deep) |
|---|---|---|
| Ștefan et al., 2021 [27] | K-nearest neighbours classifier (KNN) | Machine learning |
| Chiappa et al., 2021 [24] | Support vector machine (SVM) | Machine learning |
| Al-Karawi et al., 2019 [28] | Support vector machine (SVM) | Machine learning |
| Aramendía-Vidaurreta et al., 2016 [23] | Multilayer perceptron (MLP) neural network | Deep learning |
| Martínez-Más et al., 2019 [29] | K-nearest neighbours (KNN)/Linear discriminant (LD)/Support vector machine (SVM)/Extreme learning machine (ELM) | Machine learning |
| Al-Karawi et al., 2021 [20] | Support vector machine (SVM) | Machine learning |
| Sheela et al., 2022 [30] | Support vector machine (SVM) | Machine learning |
| Chen et al., 2022 [31] | Residual network with two fusion strategies (feature and decision fusion) | Deep learning |
| Wang et al., 2021 [25] | Deep convolutional neural network (DCNN) | Deep learning |
| Gao et al., 2022 [32] | Deep convolutional neural network (DCNN) | Deep learning |
| Christiansen et al., 2021 [33] | Deep neural network (DNN) | Deep learning |
| Jung et al., 2022 [34] | Deep convolutional neural network (DCNN) | Deep learning |
| Acharya et al., 2014 [22] | Probabilistic neural network (PNN), support vector machine (SVM), decision tree (DT), K-nearest neighbours (KNN), Naïve Bayes (NB) | Machine learning |
| Acharya et al., 2014 [35] | Probabilistic neural network (PNN) | Machine learning |
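Several of the machine learning studies listed above (Ștefan et al., Martínez-Más et al., Acharya et al.) used a K-nearest neighbours (KNN) classifier. As a purely illustrative sketch of how KNN assigns a label, the Python below classifies a mass as benign or malignant by majority vote among its closest labelled examples. It is not the implementation used in any of the included studies: the two-dimensional feature vectors, the `knn_predict` and `euclidean` helpers, the Euclidean distance and the default k = 3 are all hypothetical choices standing in for the image-derived features (such as texture descriptors) that real studies extract.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

# A labelled training example: (feature vector, class label).
Sample = Tuple[Sequence[float], str]

# Hypothetical toy data: two made-up image features per mass, NOT values from any study.
TRAIN: List[Sample] = [
    ((0.20, 0.10), "benign"),
    ((0.25, 0.15), "benign"),
    ((0.30, 0.12), "benign"),
    ((0.70, 0.80), "malignant"),
    ((0.75, 0.85), "malignant"),
    ((0.80, 0.70), "malignant"),
]


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Straight-line distance between two feature vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train: List[Sample], query: Sequence[float], k: int = 3) -> str:
    """Label `query` by majority vote among its k nearest training samples."""
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and the number of training samples")
    # Rank every training sample by distance to the query and keep the k closest.
    nearest = sorted(train, key=lambda sample: euclidean(sample[0], query))[:k]
    # Count labels among the neighbours; most_common(1) returns the winning label.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    print(knn_predict(TRAIN, (0.22, 0.13)))  # benign
    print(knn_predict(TRAIN, (0.72, 0.78)))  # malignant
```

An odd k avoids tied votes in a two-class problem such as benign versus malignant. Because Euclidean distance is sensitive to the numeric range of each feature, features are usually scaled to comparable ranges before a KNN classifier is applied.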

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mitchell, S.; Nikolopoulos, M.; El-Zarka, A.; Al-Karawi, D.; Al-Zaidi, S.; Ghai, A.; Gaughran, J.E.; Sayasneh, A. Artificial Intelligence in Ultrasound Diagnoses of Ovarian Cancer: A Systematic Review and Meta-Analysis. Cancers 2024, 16, 422. https://doi.org/10.3390/cancers16020422

Mitchell S, Nikolopoulos M, El-Zarka A, Al-Karawi D, Al-Zaidi S, Ghai A, Gaughran JE, Sayasneh A. Artificial Intelligence in Ultrasound Diagnoses of Ovarian Cancer: A Systematic Review and Meta-Analysis. Cancers. 2024; 16(2):422. https://doi.org/10.3390/cancers16020422

Chicago/Turabian Style: Mitchell, Sian, Manolis Nikolopoulos, Alaa El-Zarka, Dhurgham Al-Karawi, Shakir Al-Zaidi, Avi Ghai, Jonathan E. Gaughran, and Ahmad Sayasneh. 2024. "Artificial Intelligence in Ultrasound Diagnoses of Ovarian Cancer: A Systematic Review and Meta-Analysis" Cancers 16, no. 2: 422. https://doi.org/10.3390/cancers16020422

APA Style: Mitchell, S., Nikolopoulos, M., El-Zarka, A., Al-Karawi, D., Al-Zaidi, S., Ghai, A., Gaughran, J. E., & Sayasneh, A. (2024). Artificial Intelligence in Ultrasound Diagnoses of Ovarian Cancer: A Systematic Review and Meta-Analysis. Cancers, 16(2), 422. https://doi.org/10.3390/cancers16020422