Reliability of Automated RECIST 1.1 and Volumetric RECIST Target Lesion Response Evaluation in Follow-Up CT—A Multi-Center, Multi-Observer Reading Study

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Sample

2.2. Imaging

2.3. Definition of Target Lesions and RECIST Timepoint Response Evaluation

2.4. Manual Evaluation

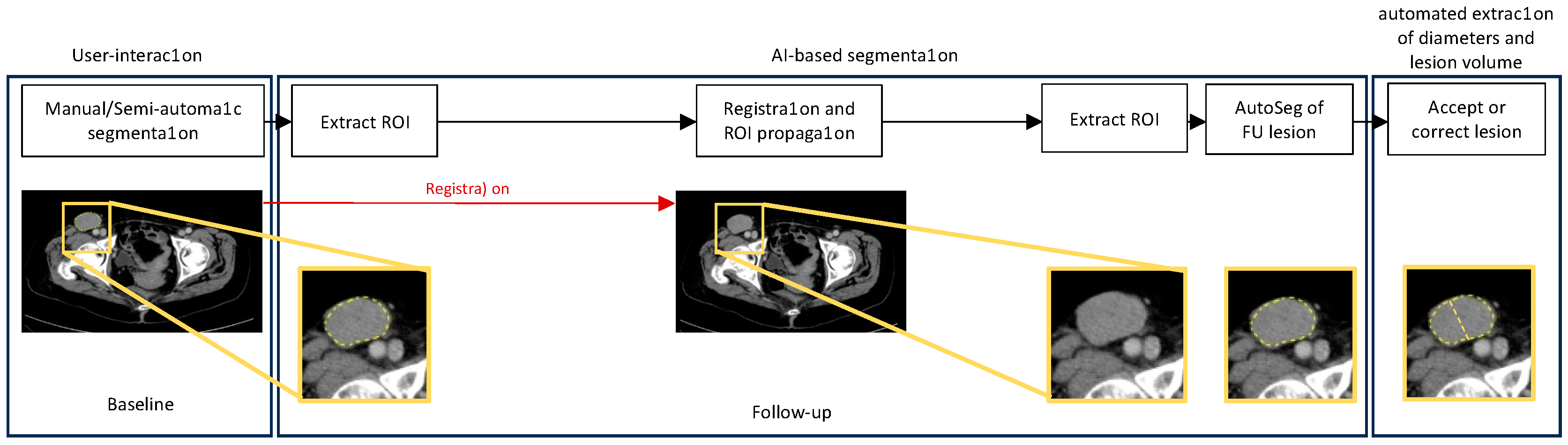

2.5. Automated Diameter Plotting and Volumetric Segmentation

2.6. Statistical Analysis

3. Results

3.1. Patient Characteristics

3.2. Intra-Reader Reliability

3.3. Inter-Reader Reliability

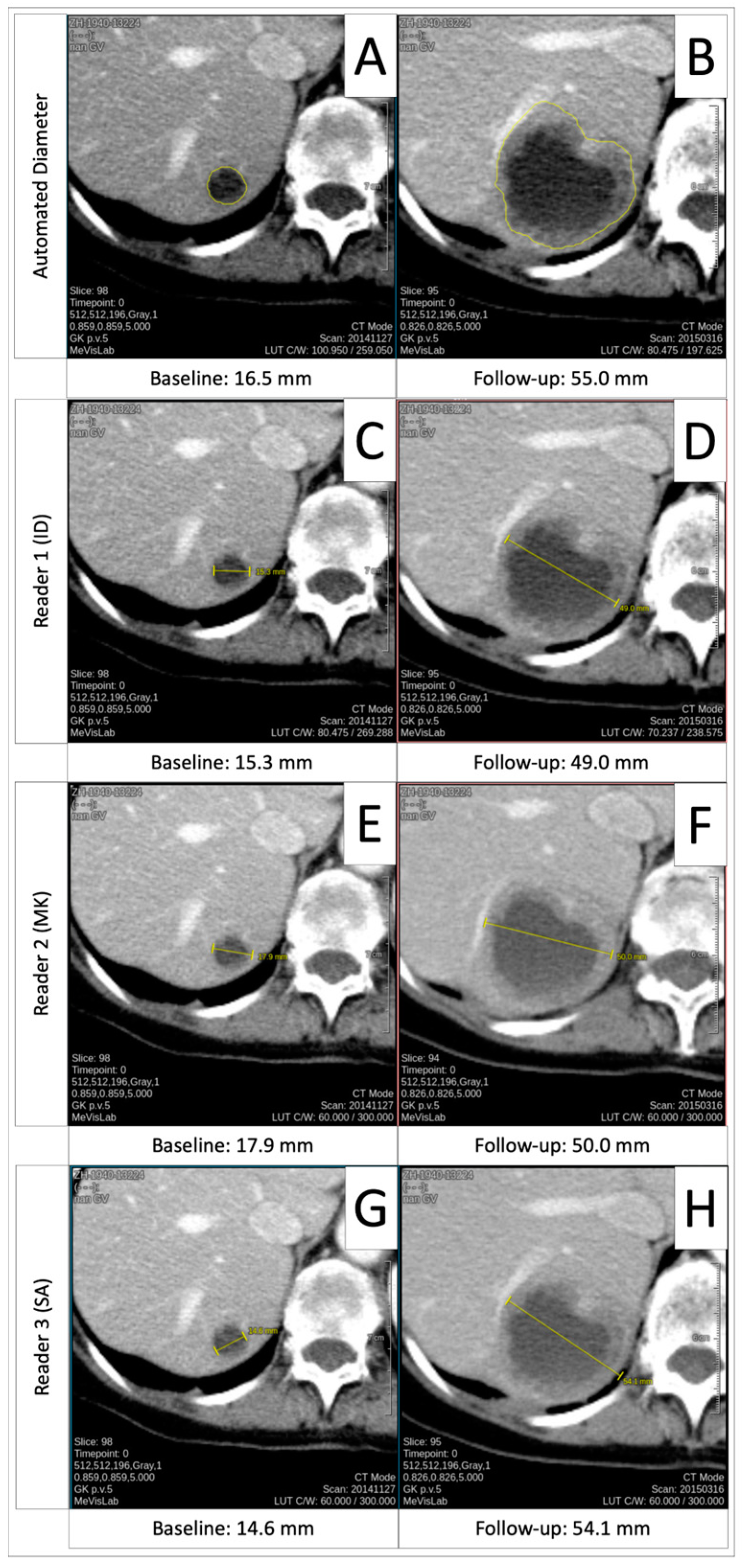

3.4. Comparison of Automated Diameters to Manual Measurements

3.5. Comparison of Manual and Automated Diameter Timepoint Response to Volumetric Timepoint Response

3.6. Progressive Disease Timepoint Response Deviation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AD | Automated diameter |
| AV | Automated volume |
| BL | Baseline |
| CI | Confidence interval |
| CNN | Convolutional neural network |
| CR | Complete response |
| CT | Computed tomography |
| EORTC | European Organisation for Research and Treatment of Cancer |
| ESOI | European Society of Oncologic Imaging |
| FU | Follow-up |
| ICC | Intraclass correlation coefficient |
| IQR | Interquartile range |
| k | Kappa |
| mm | Millimeter |
| MRI | Magnetic resonance imaging |
| n | Number |
| nnUNet | “No-New-Net” |
| NTL | Non-target lesion |
| PD | Progressive disease |
| PR | Partial response |
| RECIST | Response Evaluation Criteria in Solid Tumors |
| SD | Stable disease |
| SD | Standard deviation |
| TL | Target lesion |
| TPR | Timepoint response |

Appendix A. Scan Parameters and CT Scanner/Vendor Details

| Cohort | Scanner | Vendor | Patients at Baseline | Patients at Follow-Up | Total |
|---|---|---|---|---|---|
| In-house | SOMATOM Definition AS+ | Siemens | 6 | 10 | 16 |
| In-house | SOMATOM Definition Flash | Siemens | 3 | 1 | 4 |
| In-house | SOMATOM Force | Siemens | 22 | 23 | 45 |
| In-house | Sensation 64 | Siemens | 5 | 7 | 12 |
| In-house | Biograph128 | Siemens | 11 | 11 | 22 |
| External | Aquilion ONE | Canon | 1 | 1 | 2 |
| External | Lightspeed VCT | GE | | | 1 |
| External | Optima CT540 | GE | | | 1 |
| External | Ingenuity Core | Philips | | | 1 |
| External | Biograph64 | Siemens | | | 1 |
| External | Emotion 16 | Siemens | 2 | 1 | 3 |
| External | Scope | Siemens | | | 1 |
| External | SOMATOM Definition AS | Siemens | 4 | 1 | 5 |
| External | SOMATOM Definition Edge | Siemens | | | 1 |
| External | SOMATOM Definition Flash | Siemens | | | 1 |
| Total | | | 58 | 58 | 116 |

Appendix B


| Characteristic | n | % |
|---|---|---|
| Gender | | |
| Female | 25 | 43 |
| Male | 33 | 57 |
| Age (years, mean [SD]) | 62.8 [12.9] | |
| Treatment | | |
| Immunotherapy | 40 | 69 |
| Targeted therapy | 18 | 31 |
| Number of target lesions | 114 | |
| Soft tissue | 39 | 34 |
| Lymph node | 28 | 26 |
| Lung | 20 | 18 |
| Liver | 18 | 16 |
| Adrenal gland | 7 | 6 |
| Osseous | 2 | 2 |
| Median number of target lesions per patient (n, [IQR]) | 2 [1.75] | |
| Mean baseline lesion diameter (mm, [SD]) | 27.2 [0.85] | |
| Mean follow-up lesion diameter (mm, [SD]) | 21.88 [0.43] | |

| Reader | Mean Diameter Difference (mm) | SD (mm) | p |
|---|---|---|---|
| Lesion level | | | |
| ID (BL) | 0.62 | 5.03 | 0.19 |
| ID (FU) | 0.15 | 4.06 | 0.69 |
| MK (BL) | 0.11 | 2.21 | 0.59 |
| MK (FU) | 0.10 | 6.26 | 0.86 |
| SA (BL) | 0.40 | 2.53 | 0.10 |
| SA (FU) | 0.56 | 2.11 | 0.01 |
| Patient level | | | |
| ID (BL) | 1.23 | 7.37 | 0.21 |
| ID (FU) | 0.30 | 5.20 | 0.66 |
| MK (BL) | 0.22 | 3.07 | 0.59 |
| MK (FU) | 0.21 | 9.38 | 0.87 |
| SA (BL) | 0.78 | 3.38 | 0.08 |
| SA (FU) | 1.01 | 2.48 | <0.01 |
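The three columns of this table are linked arithmetically if the p-values come from a paired test of the mean difference against zero. The test actually used is not stated in this copy of the article, so that is an assumption. The sample sizes are also assumed (114 target lesions and 58 patients, taken from the patient characteristics table), and a normal approximation stands in for Student's t to keep the sketch dependency-free. The function name `paired_p_value` is illustrative.

```python
import math


def paired_p_value(mean_diff, sd_diff, n):
    """Two-sided p-value for H0: mean paired difference = 0.

    Uses a normal approximation to the paired t-test (assumption:
    n is large enough that the two are close).
    """
    standard_error = sd_diff / math.sqrt(n)
    z = abs(mean_diff) / standard_error
    # For a standard normal Z, P(|Z| > z) = erfc(z / sqrt(2)).
    return math.erfc(z / math.sqrt(2))


# Reader ID at baseline, lesion level (assumed n = 114 lesions).
p_lesion = paired_p_value(0.62, 5.03, 114)
# Reader ID at baseline, patient level (assumed n = 58 patients).
p_patient = paired_p_value(1.23, 7.37, 58)

print(f"lesion level:  p = {p_lesion:.2f}")
print(f"patient level: p = {p_patient:.2f}")
```

Under these assumptions the sketch gives roughly 0.19 and 0.20, against the tabulated 0.19 and 0.21. The small gap at patient level is what one would expect from using the normal distribution instead of the t distribution, and from rounding in the published inputs.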

| Reader | ICC | 95% CI |
|---|---|---|
| Lesion level | | |
| ID (BL) | 0.97 | 0.96–0.98 |
| ID (FU) | 0.99 | 0.99–0.99 |
| MK (BL) | 0.99 | 0.99–1.00 |
| MK (FU) | 0.99 | 0.99–1.00 |
| SA (BL) | 0.99 | 0.99–1.00 |
| SA (FU) | 0.99 | 0.99–1.00 |
| Patient level | | |
| ID (BL) | 0.99 | 0.99–1.00 |
| ID (FU) | 0.99 | 0.99–1.00 |
| MK (BL) | 1.00 | 1.00–1.00 |
| MK (FU) | 0.99 | 0.98–0.99 |
| SA (BL) | 1.00 | 1.00–1.00 |
| SA (FU) | 1.00 | 1.00–1.00 |

| Diameter Measurements | ICC | 95% CI |
|---|---|---|
| Radiologists only | | |
| Lesion level | | |
| BL | 0.99 | 0.99–1.00 |
| FU | 0.99 | 0.99–1.00 |
| Patient level | | |
| BL | 1.00 | 0.99–1.00 |
| FU | 1.00 | 1.00–1.00 |
| Inclusive of automated diameters | | |
| Lesion level | | |
| BL | 0.99 | 0.99–0.99 |
| FU | 0.98 | 0.97–0.98 |
| Patient level | | |
| BL | 0.99 | 0.99–1.00 |
| FU | 0.99 | 0.99–0.99 |

| Timepoint Response | Fleiss’ k | 95% CI |
|---|---|---|
| Radiologists only | | |
| Lesion level | 0.79 | 0.79–0.79 |
| Patient level | 0.68 | 0.68–0.68 |
| Inclusive of automated diameters | | |
| Lesion level | 0.66 | 0.66–0.66 |
| Patient level | 0.69 | 0.69–0.69 |
| Inclusive of automated volumes | | |
| Lesion level | 0.66 | 0.66–0.67 |
| Patient level | 0.67 | 0.67–0.68 |

| Comparison | Cohen’s k | 95% CI |
|---|---|---|
| All readers mean vs. AD | | |
| Lesion level | 0.67 | 0.56–0.78 |
| Patient level | 0.76 | 0.61–0.90 |
| All readers mean vs. AV | | |
| Lesion level | 0.69 | 0.59–0.80 |
| Patient level | 0.73 | 0.58–0.87 |
| Automated diameters vs. volumes | | |
| Lesion level | 0.81 | 0.72–0.90 |
| Patient level | 0.81 | 0.67–0.94 |

| Reader | Mean Diameter Difference (mm) | SD (mm) | p |
|---|---|---|---|
| Lesion level | | | |
| ID vs. AD (BL) | 0.36 | 6.60 | 0.56 |
| ID vs. AD (FU) | 0.36 | 10.62 | 0.72 |
| MK vs. AD (BL) | 2.21 | 8.00 | <0.01 |
| MK vs. AD (FU) | 0.37 | 10.62 | 0.71 |
| SA vs. AD (BL) | 2.16 | 8.00 | 0.01 |
| SA vs. AD (FU) | 0.88 | 12.16 | 0.44 |
| All readers vs. AD (BL) | 1.01 | 7.00 | 0.13 |
| All readers vs. AD (FU) | 0.44 | 10.91 | 0.67 |
| Patient level | | | |
| ID vs. AD (BL) | 0.71 | 9.90 | 0.56 |
| ID vs. AD (FU) | 0.70 | 14.30 | 0.71 |
| MK vs. AD (BL) | 4.27 | 11.05 | 0.01 |
| MK vs. AD (FU) | 1.78 | 16.09 | 0.40 |
| SA vs. AD (BL) | 0.97 | 11.26 | 0.51 |
| SA vs. AD (FU) | 0.09 | 15.56 | 0.97 |
| All readers vs. AD (BL) | 1.99 | 9.92 | 0.13 |
| All readers vs. AD (FU) | 0.86 | 14.70 | 0.66 |

Timepoint response per patient

| Patient | ID | MK | SA | AD | AV | Patient | ID | MK | SA | AD | AV |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | PR | PR | PR | PR | PR | 30 | PR | CR | PR | PR | PR |
| 2 | PR | PR | PR | PR | PR | 31 | SD | SD | SD | SD | SD |
| 3 | SD | SD | SD | SD | SD | 32 | PR | PR | PR | PR | PR |
| 4 | PR | PR | PR | PR | PR | 33 | CR | CR | PR | PR | PR |
| 5 | SD | SD | PD | PD | SD | 34 | PR | PR | PR | PR | PR |
| 6 | PR | PR | PR | PR | PR | 35 | PD | SD | PD | SD | SD |
| 7 | PR | PR | PR | PR | PR | 36 | PR | PR | PR | PR | PR |
| 8 | SD | SD | SD | SD | SD | 37 | SD | SD | PR | SD | SD |
| 9 | SD | SD | SD | PR | SD | 38 | PR | PR | PR | PR | PR |
| 10 | SD | SD | SD | SD | SD | 39 | SD | SD | SD | SD | SD |
| 11 | PR | PR | PR | PR | PR | 40 | CR | CR | PR | PR | PR |
| 12 | PR | PR | PR | PR | PR | 41 | CR | CR | PR | PR | PR |
| 13 | SD | SD | SD | SD | SD | 42 | SD | SD | SD | SD | SD |
| 14 | SD | SD | SD | SD | SD | 43 | PD | PD | PD | PD | PD |
| 15 | PR | SD | SD | SD | SD | 44 | PD | PD | PD | PD | SD |
| 16 | PR | SD | SD | SD | SD | 45 | SD | SD | PD | SD | SD |
| 17 | SD | PD | SD | SD | SD | 46 | SD | SD | PR | PR | PR |
| 18 | PD | PD | PD | PD | PD | 47 | SD | SD | PD | PD | SD |
| 19 | PR | SD | PR | SD | PR | 48 | SD | SD | SD | SD | SD |
| 20 | SD | SD | SD | SD | SD | 49 | PR | PR | PR | PR | PR |
| 21 | PR | PR | PR | PR | PR | 50 | PD | PD | PD | PD | SD |
| 22 | SD | SD | SD | SD | PR | 51 | PR | PR | PR | PR | PR |
| 23 | PD | SD | PD | PD | PD | 52 | CR | CR | PR | PR | PR |
| 24 | PD | PD | PD | PD | PD | 53 | PR | PR | PR | PR | PR |
| 25 | SD | SD | SD | SD | SD | 54 | PD | PR | PR | PD | PD |
| 26 | PR | CR | PR | PR | PR | 55 | PR | CR | PR | PR | PR |
| 27 | PD | PD | PD | PD | PD | 56 | PR | PR | PR | PR | PR |
| 28 | PD | PD | PD | PR | PR | 57 | PD | PD | PD | PD | PD |
| 29 | PR | PR | PR | PR | PR | 58 | PD | PD | PD | PD | PD |
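The patient-level agreement statistics reported earlier can be recomputed from this table. The sketch below is a plain textbook implementation of Cohen's and Fleiss' kappa, not the authors' analysis code; the names `ROWS`, `parse`, `cohen_kappa`, and `fleiss_kappa` are illustrative, and confidence intervals are not computed.

```python
from collections import Counter

# Patient-level timepoint responses transcribed from the table above.
# Each line holds two patients (left: 1-29, right: 30-58);
# the five columns per patient are ID, MK, SA, AD, AV.
ROWS = """
PR PR PR PR PR  PR CR PR PR PR
PR PR PR PR PR  SD SD SD SD SD
SD SD SD SD SD  PR PR PR PR PR
PR PR PR PR PR  CR CR PR PR PR
SD SD PD PD SD  PR PR PR PR PR
PR PR PR PR PR  PD SD PD SD SD
PR PR PR PR PR  PR PR PR PR PR
SD SD SD SD SD  SD SD PR SD SD
SD SD SD PR SD  PR PR PR PR PR
SD SD SD SD SD  SD SD SD SD SD
PR PR PR PR PR  CR CR PR PR PR
PR PR PR PR PR  CR CR PR PR PR
SD SD SD SD SD  SD SD SD SD SD
SD SD SD SD SD  PD PD PD PD PD
PR SD SD SD SD  PD PD PD PD SD
PR SD SD SD SD  SD SD PD SD SD
SD PD SD SD SD  SD SD PR PR PR
PD PD PD PD PD  SD SD PD PD SD
PR SD PR SD PR  SD SD SD SD SD
SD SD SD SD SD  PR PR PR PR PR
PR PR PR PR PR  PD PD PD PD SD
SD SD SD SD PR  PR PR PR PR PR
PD SD PD PD PD  CR CR PR PR PR
PD PD PD PD PD  PR PR PR PR PR
SD SD SD SD SD  PD PR PR PD PD
PR CR PR PR PR  PR CR PR PR PR
PD PD PD PD PD  PR PR PR PR PR
PD PD PD PR PR  PD PD PD PD PD
PR PR PR PR PR  PD PD PD PD PD
"""


def parse(rows):
    """Return 58 rows [ID, MK, SA, AD, AV], ordered by patient number."""
    left, right = [], []
    for line in rows.strip().splitlines():
        tokens = line.split()
        left.append(tokens[:5])
        right.append(tokens[5:])
    return left + right


def cohen_kappa(a, b):
    """Cohen's kappa for two raters over the same subjects."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / n**2
    return (observed - expected) / (1 - expected)


def fleiss_kappa(ratings):
    """Fleiss' kappa; every subject is rated by the same number of raters."""
    n_subjects, n_raters = len(ratings), len(ratings[0])
    totals, agreement = Counter(), 0.0
    for row in ratings:
        counts = Counter(row)
        totals.update(counts)
        pairs = sum(c * c for c in counts.values()) - n_raters
        agreement += pairs / (n_raters * (n_raters - 1))
    observed = agreement / n_subjects
    expected = sum((t / (n_subjects * n_raters)) ** 2 for t in totals.values())
    return (observed - expected) / (1 - expected)


patients = parse(ROWS)
ad = [p[3] for p in patients]
av = [p[4] for p in patients]
readers = [p[:3] for p in patients]

print(f"Cohen's kappa, AD vs. AV: {cohen_kappa(ad, av):.2f}")    # 0.81
print(f"Fleiss' kappa, ID/MK/SA:  {fleiss_kappa(readers):.2f}")  # 0.68
```

Both results agree with the patient-level values tabulated earlier (0.81 for automated diameters versus volumes, 0.68 for radiologists only). Passing `[p[:4] for p in patients]` to `fleiss_kappa` would add the automated diameters as a fourth rater.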

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dahm, I.C.; Kolb, M.; Altmann, S.; Nikolaou, K.; Gatidis, S.; Othman, A.E.; Hering, A.; Moltz, J.H.; Peisen, F. Reliability of Automated RECIST 1.1 and Volumetric RECIST Target Lesion Response Evaluation in Follow-Up CT—A Multi-Center, Multi-Observer Reading Study. Cancers 2024, 16, 4009. https://doi.org/10.3390/cancers16234009
